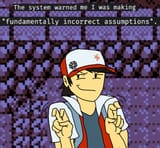

>>509153026 (OP)
There are so many things they get wrong that I often have to triple-check the information LLMs provide about news articles, studies, etc. that they fetch online.

It's like they sometimes read a piece of information but interpret it in a distorted way. Then you ask it to correct itself and it suddenly overcorrects, assuming it was entirely wrong, so it might "fix" earlier information that was actually correct.

It's impossible to trust these things; they get tangled up in language and biased interpretations.