7/8/2025, 11:22:25 PM

>>105841824

>from randomly initialized weights. I thought if I made a narrow enough domain it might converge on something coherent without needing too much data/compute.

1) What do you mean by "randomly initialized weights"? Did you use QLoRA? (That's basically mandatory given your goal and the type of models you want to fine-tune.) If so, what rank and alpha settings did you use? A higher rank means more trainable adapter parameters, which means the fine-tuning sticks harder, with the obvious cost being training time and VRAM usage.
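
To put rough numbers on the rank tradeoff, here is a minimal sketch that counts the parameters a LoRA adapter adds to a single weight matrix and the alpha/rank factor that scales its update. The hidden size of 4096 and the alpha of 16 are made-up illustrative values, not settings from this thread, and the function names are hypothetical helpers.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_out x d_in weight matrix."""
    # LoRA learns B (d_out x rank) and A (rank x d_in); the base weight stays frozen.
    return rank * (d_in + d_out)


def lora_scaling(rank: int, alpha: float) -> float:
    """Factor applied to the adapter update B @ A in standard LoRA."""
    return alpha / rank


if __name__ == "__main__":
    d = 4096      # hypothetical hidden size, for illustration only
    alpha = 16    # hypothetical alpha, held fixed while rank changes
    full = d * d  # parameters in the frozen base matrix
    for r in (8, 16, 64):
        n = lora_param_count(d, d, r)
        pct = 100 * n / full
        print(f"rank={r:>2}  adapter_params={n}  ({pct:.2f}% of the matrix)  scale={lora_scaling(r, alpha)}")
```

Adapter size grows linearly with rank, which is where the extra training time and VRAM come from. With alpha held fixed, a higher rank also shrinks the alpha/rank scale, so the two settings are usually chosen together.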

2) So you've used a dataset before? Did you use one you found on Hugging Face or did you make it yourself? How did you format it so that a trainer could properly use it and train on the roles you had in the dataset?

https://files.catbox.moe/9audsj.jsonl

Catbox link rel is a (heavily truncated and pretty-printed) example of a science dataset I found on HF ( https://huggingface.co/datasets/a-m-team/AM-DeepSeek-R1-0528-Distilled/blob/main/science.jsonl ). Was your dataset formatted something like this?

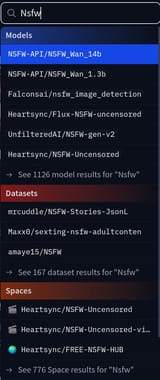
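
For reference, a quick way to check whether a file has the kind of role-based structure being asked about is to validate each JSONL line. This is only a sketch: it assumes the common chat layout of a `messages` list whose entries carry `role` and `content`, and the role names in `ALLOWED_ROLES` are an assumption too. The linked dataset may use extra or different fields, so adjust to whatever your trainer expects.

```python
import json

ALLOWED_ROLES = {"system", "user", "assistant"}  # assumed role names


def validate_record(line: str) -> list[str]:
    """Return a list of problems found in one JSONL line (empty list means OK)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(record, dict):
        return ["record is not a JSON object"]
    messages = record.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i} is not an object")
            continue
        if msg.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {i} has unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i} has no string 'content'")
    return problems


# One made-up record in the assumed layout.
example = json.dumps({
    "messages": [
        {"role": "user", "content": "Why is the sky blue?"},
        {"role": "assistant", "content": "Mostly Rayleigh scattering."},
    ]
})
print(validate_record(example))  # prints []
```

Running this over every line before training catches misspelled roles and half-written records early, which is cheaper than finding out mid-run.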

Were you trying to train it on domain-specific science stuff, or are you trying to make these models better at smut? If it's the latter, then there are dedicated datasets on HF for exactly that. If said datasets are too large for the amount of VRAM you currently have, then you can always just trim them down until they don't make your VRAM explode (while making sure the formatting is still correct; that's what I did for the file in the Catbox link).
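
Trimming a dataset down without breaking its formatting could look something like the sketch below. It assumes the source file is proper JSONL (one JSON object per line, not pretty-printed). `trim_jsonl` and the file names in the usage note are hypothetical, and this is not necessarily how the Catbox file was made.

```python
import json
from pathlib import Path


def trim_jsonl(src: Path, dst: Path, max_records: int) -> int:
    """Copy up to max_records valid JSON lines from src to dst; return the count written."""
    written = 0
    with src.open(encoding="utf-8") as fin, dst.open("w", encoding="utf-8") as fout:
        for line in fin:
            if written >= max_records:
                break
            line = line.strip()
            if not line:
                continue  # skip blank lines
            try:
                record = json.loads(line)
            except json.JSONDecodeError:
                continue  # drop truncated or malformed lines instead of copying them
            # Re-serialize so every output line is one complete JSON object.
            fout.write(json.dumps(record, ensure_ascii=False) + "\n")
            written += 1
    return written
```

Something like `trim_jsonl(Path("science.jsonl"), Path("science_small.jsonl"), 500)` would keep the first 500 good records. Cutting by whole records rather than by bytes is what keeps the last line from ending up as half an object.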
