7/12/2025, 8:01:27 PM

>>105883604

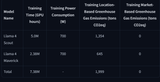

What I find most interesting is that Llama 3.3 70B took about as many GPU hours to train as Llama 4 Scout and Llama 4 Maverick combined.

Which raises the question: what the fuck were they doing all that time with that huge-ass cluster?