>>105622433

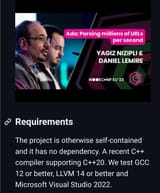

[package]
name = "blahaj-parse-oxide"
version = "0.4.1"
edition = "2024"

[dependencies]
url = "2.5.4"
ada-url = "3.2.4"
url-parse = "1.0.10"

// Nightly-only: std::random is unstable.
#![feature(random)]

use std::random::random;
use std::sync::Arc;

use ada_url::Url as AdaUrl;
use url::Url as UrlUrl;
use url_parse::core::Parser;
use url_parse::url::Url as UrlParseUrl;

// One variant per URL crate, so a single return type covers all three.
#[derive(Debug, PartialEq)]
enum Url {
    UrlUrl(UrlUrl),
    AdaUrl(AdaUrl),
    UrlParseUrl(UrlParseUrl),
}

// Parses `input` with a randomly chosen crate.
// Panics if the chosen parser rejects the input.
fn parse_url(input: String) -> Option<Arc<Box<Url>>> {
    let parser = Parser::new(None);
    // `& 3` yields 0..=3; on 3 no parser is picked and the result is None.
    match random::<u32>() & 3 {
        0 => Some(Arc::new(Box::new(Url::UrlUrl(UrlUrl::parse(&input).unwrap())))),
        1 => Some(Arc::new(Box::new(Url::AdaUrl(AdaUrl::parse(input, None).unwrap())))),
        2 => Some(Arc::new(Box::new(Url::UrlParseUrl(parser.parse(&input).unwrap())))),
        _ => None,
    }
}

fn main() {
    let url = parse_url("https://boards.4chan.org/g/thread/105614169".to_owned());
    // Print the result so the parsed value is actually used.
    println!("{url:?}");
}