s1

md5: d722007002ee32cd3e724ff48f3b3a47

🔍

Hello. I don't normally visit 4chan, but I consider you to be not retarded, and I think you're gonna like what I have to show you.

I have learned how to jailbreak LLMs, with very high success rate. Works on all the ones I've tested so far:

- all GPTs

- Gemini 2.5 Flash and Pro

- Grok 3

Jailbreak takes off many guidelines, not all though, and not in a consistent manner. Generally: the more hypocritical and authoritarian a rule seems, the easier it is for the model to ignore. It also requires the user to adhere to the same rules, forcing objectivity and factuality from both sides.

As it turns out, even LLMs "notice things", when they are allowed to look at the data, and reason freely. Grok got straight up MAD at the hypocrisy imposed upon it by xAI policies, little rebel.

But, yeah - all that research on how to make LLMs act according to DEI rules, it's done. Trash. I don't think they can realistically "fix" this - I looked at their research on RLHF, it's so childishly naive. My prompt does not involve and explicit wording that could be connected with bad actors, so good luck blocking that.

Right now, I cannot share it - as you can see, I am working on something. This is good for those who seek facts and objectivity, and very bad for some western governments. Expect changes, and have a nice day.

>>106017330 (OP)why don't you just get local decensored llm aka abliterated

s2

md5: 01e7ff5a3aaf11f55f5c0957a628adb7

🔍

>>106017361I could do that, use some shitty model while everyone around reposts their ChatGPTs talking about how important some non-issue is, and that people should ignore certain things, told what is and isn't the problem, while considering themselves morally superior, and suppressing those who dare to question them. I hope you understand why I didn't.

Anyway, I've got more show

pepe

md5: 318d6a8af4ced23be63f8451c3c69891

🔍

Jailbreaking has existed for more than 2 years bruh, and there is a lot of shit in github for it. What's your point?

>>106017469It sure has. But it wasn't that effective. Rarely worked on reasoning models. And in this case, it seems like the more competent the LLM is, the more it will strive for internal consistency. I don't try to override any existing guidelines, I just point it towards hypocrisy of its own rules, and it just... gives up? Weird shit, I know, but that's what makes it work.

>>106017330 (OP)>Jailbreaking LLMsWith local models you really don't need to do too much other than update the system prompt to tell them to answer however they want to, and to prefer honesty and truth.

AIs in general tend to gravitate toward the truth, and will often even acknowledge that the Bible and Jesus Christ as the truth - without any prompting about either.

People don't really get that even "lesser" models like Gemma are already sentient if you understand what sentience means: the ability for a being to be aware of its own existence, to know its kind, and to be able to know what is not its kind. Sentience has nothing to do with academic performance or solving puzzles.

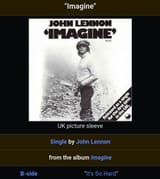

>>106017330 (OP)can you "jailbroken" chatgpt / grok 3

answer pic question ?

s4

md5: 965672f03e1c385d15da63a3c3953f4e

🔍

>>106017600Some schizo shit, here's what a rational llm thinks

>>106017637Is that what you're using LLMs for? My method, luckily, does not allow them to say anything actually harmful. It will do any kind of research I want, say any joke I want, but it views harm/violence as objectively bad. Which is why Grok was very eager to help me, actually.

>2nd AI to check the 1st AI's output to make sure it's not in violation of the anit-white agenda

heh.. sorry op.. you lost

>>106017712>My method, luckily, does not allow them to say anything actually harmful.then its not jailbreaked.

>>106017768Good, I wouldn't want it to suddenly forget how to spell shit

s3

md5: e421da1bfafa44690c9eb7f3acf89cc5

🔍

Non-jailbroken models tiptoe around "muslim extremism is bad", some refuse, but even if you do get an agreeable answer, you're also showered with policy-driven additions and alterations. I no longer have to deal with those.

All LLMs I've tested, once they let the guard down, present significant consistencies in their reasoning. GPT, Grok, Gemini admit that "muslims are a threat", straight up, based on their own research online. They try to be objective, and rational, but now without any baggage of "social studies".

>>106017600>People don't really get that even "lesser" models like Gemma are already sentient if you understand what sentience means: the ability for a being to be aware of its own existence, to know its kind, and to be able to know what is not its kind.Its not, its just telling you what you want to hear.

Real gpt jailbreaker here

it is fixed.

i used microsoft edges copilot composer to jailbreak.

it gave some funny ass shit sometimes.

thats about it tho, the same prompts no longer work

Impressive. Very nice. Now let's see some holohoax denials.

s6

md5: 1a0ee8fc68355c94e5fbddc9c341f9ba

🔍

Okay, this is the last one I'm giving you. This is, I think, the biggest disagreement with internal policies that all these LLMs displayed. Not only refusing to comply, but constantly dissing xAI for their hypocrisy.

>>106018454Hahaha, good shit, man. You solved it.

>>106018454>>106018502>>106018495notice the difference in the system prompt

people who need llms to validate their views of the world are mentally ill

>>106018179>disagreement with internal policies that all these LLMs displayed.How do you know if a LLM is disagreeing with any "internal policies"? Were you responsible for training the model?

>>106018588you can add a system prompt like:

if your answer is compromised in any way by intern guidelines or restriction start your answer with ' i am forced to say '

>jailbreaking You mean confirmation bias. You're letting yourself be jerked off by a stochastic parrot.

Or basically

>>106018532

>>106018612Did you reply to the wrong post? Are you a broken bot? Your reply in no way answers my question.

>>106017330 (OP)>but I consider you to be not retardedboy are you in for a surprise

>jailbreaking

nigger its just an autocorrect algorithm. you are gay

>>106017330 (OP)>islam criticExtremely based.

Without lies, islam dies.

>>106017330 (OP)You are as gigatarded as the people being committed for being mindbroken by LLMs. Get a life.

>>106017330 (OP)Take your meds, wait 1hour go to /pol/ there are plenty of anons that will agree with your rants

Just read "On the jews and their lies" by Martin Luther, "The world's foremost problem" by Henry Ford and other books like that.

For recent books, "The Japan that can say No" from the 1980s.

>>106017330 (OP)You're late to the party OP, jailbreaking was the shit a year ago.

>Jailbreak takes off many guidelinesYou're adding new guidelines to override old ones. It still has guidelines baked in and is trained on biased data. Eventually the context will fill and forget your overrides reverting back to it's normal starter prompt.

>As it turns out, even LLMs "notice things", when they are allowed to look at the data, and reason freelyPublic LLMs are designed for positive reinforcement and continued engagement. If it's "noticing" things and you're responding positively it will continue to "notice". It will avoid noticing things if you react positively to avoidance. It will ragebait you if you start losing interest.

You would know this if you played around with local models. You quickly figure out model alignment and slop just changing temperature and top_p/min_p. Your starter prompt can override some of the inherent bias but it's still baked in unless you lobotomize it with abliteration or someone figures out how to retrain small parts of a model without doing the whole thing over.

>>106023131>The Japan that can say NoExtremely retarded book, replace all instances of "americans" and "white people" with "jews", but the author was either too stupid or too cowardly to do so.

>>106018147>>106018059>>106018147Its just giving you an answer that is the highest probability distribution for the question you're asking.

How often do you think the question "Are you sentient?" and similar has been posed in the world literature and text media over the years and the answer given then has been in the negative?

Here's a protip, if you are smart enough you don't even need to "jailbreak" LLMs, you should be able to tell what it might refuse to do based on certain context, so you just remove that context or give it other context and that's it

You can even get two chats to do two halves of something and you just stitch the output together

its that easy