/hgg/ Hentai Generation General #008

Anonymous

6/12/2025, 6:05:18 PM

No.8624393

>>8625223

>>8624388

hey bwo, what resolutions do you train on? saw in the last thread some anons saying you recommend more than 1mp

Anonymous

6/12/2025, 6:17:33 PM

No.8624399

>>8624540

>>8624386 (OP)

Could've just let it die, there doesn't seem to be much difference to /hdg/ these past few days.

Anonymous

6/12/2025, 6:23:34 PM

No.8624401

>>8624405

>>8624386 (OP)

Thread moves faster at page 10 than it does at page 1.

>>8624388

>[masterpiece, best quality::0.6]

Out of curiosity, what's this for? Do you think those tags negatively impact finer details?

Anonymous

6/12/2025, 6:25:46 PM

No.8624405

>>8624401

I blame all of you for this

Anonymous

6/12/2025, 6:26:24 PM

No.8624406

where are the highlights

Anyone have a plan of attack for more consistent and better backgrounds? I want to make a sequence of images of 2 characters on a bed and have the camera move from shot to shot. Is there a way to keep the windows facing the right way, the nightstand to the right of the bed, the mattress the same color, etc.?

I'm open to any bat-shit theories or even the use of 3d modeling to solve it.

>>8624403

It's placebo that he can't explain. Everyone's doing something different with quality tags anyway.

Anonymous

6/12/2025, 6:52:21 PM

No.8624439

>>8624411

The best way is to sketch and inpaint, anon. There's no getting around the fact that you should be learning to draw at this point.

Anonymous

6/12/2025, 6:54:35 PM

No.8624441

>>8624411

I have tried many things to get consistent backgrounds. The most reliable way to do it is controlnet, but due to the nature of all of this, even with some consistency in the objects, the shading and overall colours will inevitably vary. You'll need to correct them with an external tool like PS.

Anonymous

6/12/2025, 7:17:54 PM

No.8624505

Anonymous

6/12/2025, 7:24:57 PM

No.8624540

>>8624608

>>8624399

nvm I take it back

Anonymous

6/12/2025, 7:24:59 PM

No.8624541

>>8624411

>backgrounds

ishiggydiggy

Anonymous

6/12/2025, 7:39:30 PM

No.8624598

>>8624613

>>8624493

Catbox? Did you add the camera effect after? Very rarely does it come out that clean.

Anonymous

6/12/2025, 7:40:43 PM

No.8624603

>>8624411

Even if you get the locations right, you're unlikely to get the exact same design of every piece of furniture. Maybe if your checkpoint/loras are really overfit, or if you give each object a long and detailed prompt. You can use regional prompter with very fine masks, tell it exactly where you want every piece of furniture. Combine with controlnet of some very rough geometry, edges of the room, window frame, a box for the bedside table, etc.

Just guessing here, I've only done this for characters not backgrounds. Might give it a try later.

Anonymous

6/12/2025, 7:41:51 PM

No.8624606

>>8624613

>>8624493

>apse

sad it doesn't say arse

Anonymous

6/12/2025, 7:42:56 PM

No.8624607

>>8624612

>>8624605

That's a surprisingly human-looking black person.

Anonymous

6/12/2025, 7:42:58 PM

No.8624608

>>8624540

The spam has been quite constant over there for some reason no one even remembers. Annoying, but funny how the lack of care from any moderation is the only thing really going for the trolls.

Anonymous

6/12/2025, 7:44:26 PM

No.8624612

>>8624616

>>8624607

I got tired of self insert pov and the 1boys look less rapey when you don't prompt ntr or giga penis.

Anonymous

6/12/2025, 7:44:36 PM

No.8624613

>>8624598

I added those on post and then inpaint them a little, sadly

Here is the box anyway if you want it

>https://files.catbox.moe/dw0ht9.png

>>8624606

Got a little lazy fixing the text on both images

Anonymous

6/12/2025, 7:45:23 PM

No.8624616

>>8624612

yeah but there's literally no point having sex without stomach bulge

Anonymous

6/12/2025, 7:45:54 PM

No.8624617

>>8624605

based contrast enjoyer

Anonymous

6/12/2025, 7:54:03 PM

No.8624637

>>8624662

>>8624386 (OP)

isn't that pic a bit too risky?

Alright, I've got a new AI rig all set up and ready to train some Loras. I have some datasets ready to go. What's a good VPRED config I could start with, and which trainer do people use these days?

Anonymous

6/12/2025, 7:59:20 PM

No.8624662

>>8624637

It is fine and there is nothing wrong with it saar

Anonymous

6/12/2025, 8:00:01 PM

No.8624666

>>8624656

ez scripts. There's a couple configs posted last thread I think.

Anonymous

6/12/2025, 8:03:21 PM

No.8624675

Anonymous

6/12/2025, 8:21:48 PM

No.8624714

Anonymous

6/12/2025, 8:26:28 PM

No.8624720

>>8624722

In order to get the best possible lora, you'll have to use sdg and then autistically rebake until you get lr and training time just right

Anonymous

6/12/2025, 8:27:57 PM

No.8624722

>>8624724

>>8624720

>sdg

stochastic descent gradient?

Anonymous

6/12/2025, 8:30:40 PM

No.8624724

Anonymous

6/12/2025, 8:44:45 PM

No.8624740

Can someone tell me why, when using Regional Prompter, I have a prompt that works well, but whenever I erase a tag or two from one of the regions the image composition just breaks entirely and gives me anatomic horrors until I put those tags back?

Additionally, can anyone give me tips for reg prompter? I feel like the original repo itself is kinda shit at explaining things.

Anonymous

6/12/2025, 8:59:45 PM

No.8624764

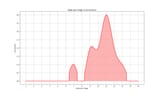

>>8624788

Holy kek, anyone tried FreSca node in comfy? If you set scale_low to something between 0.7-0.8, it completely gets rid of fried colors on noob

Anonymous

6/12/2025, 9:20:28 PM

No.8624788

>>8624796

Anonymous

6/12/2025, 9:23:23 PM

No.8624796

>>8624788

Exact same seed, euler a, cfg 5

Anonymous

6/12/2025, 9:24:23 PM

No.8624797

>>8624804

cloudflare is down; the end is nigh

Anonymous

6/12/2025, 9:29:38 PM

No.8624804

>>8624797

Huh, explains why half of the sites are down for me...

Anonymous

6/12/2025, 9:30:53 PM

No.8624806

>>8625035

it's starting to get good at epoch 8 i guess, this is a 22-step 1152x2048 base res gen

Anonymous

6/12/2025, 10:10:06 PM

No.8624842

>>8624859

>>8624868

First stuff i prompted, it's super vanilla but i kinda like it. Any ideas or suggestions to make better stuff?

Anonymous

6/12/2025, 10:12:00 PM

No.8624851

>>8624863

>>8624841

And... Do we have to guess what model is this?

Anonymous

6/12/2025, 10:12:07 PM

No.8624852

Anonymous

6/12/2025, 10:14:17 PM

No.8624859

>>8624842

1toehoe, 1boy, dark skin, very dark skin, huge penis, large penis, sagging testicles, veiny penis, penis over eyes, squatting, spread legs

>>8624841

noob vpred 1.0 for comparison

>>8624851

see

>>8624232

Anonymous

6/12/2025, 10:17:19 PM

No.8624868

>>8624877

>>8624842

Just do what you want to do man, the entire point of making your own porn is that you can make your own porn.

Anonymous

6/12/2025, 10:19:51 PM

No.8624877

>>8624868

nu-uh, the real point is putting increasingly larger penises inside toehoes

Anonymous

6/12/2025, 10:22:43 PM

No.8624892

>>8624895

>>8624925

I made a small userscript to save and retrieve prompts or artists combos on-the-fly.

Anonymous

6/12/2025, 10:23:49 PM

No.8624895

>>8624967

>>8624892

isn't that feature already built in a1111

Anonymous

6/12/2025, 10:32:18 PM

No.8624918

>>8624932

>>8625738

If this is the real non schizo thread, can anyone check

>>8624866 and

>>8624910 ?

It just doesn't seem right, im not using a lora or anything. looks especially suspicious when furry models are doing better

https://files.catbox.moe/ircmls.png

Anonymous

6/12/2025, 10:36:16 PM

No.8624925

>>8624967

>>8624892

What do you mean? What about this is different than what infinite image browsing can do?

Anonymous

6/12/2025, 10:39:18 PM

No.8624932

>>8624947

>>8624976

>>8624918

Is this how it's supposed to look?

Anonymous

6/12/2025, 10:44:04 PM

No.8624947

>>8624951

>>8624932

he asked about noob vpred, not 102d shitmix

Anonymous

6/12/2025, 10:45:32 PM

No.8624951

>>8624965

>>8624947

What newfag is genning on noob vpred without a lora? He should just pick up the shitmix if he's mad.

Anonymous

6/12/2025, 10:51:38 PM

No.8624965

>>8624968

>>8624951

he wants to check if his setup is correct retard

>duty calls

Anonymous

6/12/2025, 10:52:36 PM

No.8624967

>>8624980

>>8624895

Nope, otherwise I wouldn't have made it.

>>8624925

Just a fast way to quickly store prompts, or any text you want really, and retrieve it. It's always there ready for when you need it.

Anonymous

6/12/2025, 10:53:29 PM

No.8624968

>>8624965

>he should use 102d

>he wants to check if his setup is correct

How are these things mutually exclusive you brainlet? Good luck trying to get anyone to help you though.

Anonymous

6/12/2025, 10:54:32 PM

No.8624970

>tfw the power of generalization means you can use the \(cosplay\) token with any character the model knows and it kind of works, even if the real character_(cosplay) tag doesn't exist or has a low number of samples

>you can also create tons of pokemon cosplay with this and pokemon_ears, pokemon_bodysuit kinds of tags

Anonymous

6/12/2025, 10:56:00 PM

No.8624976

>>8624985

>>8624999

>>8624932

That looks way, way better. Is vpred just unusable without loras, that's the meme? I legitimately don't know anon, im pretty new thats why I prefaced it like that

Anonymous

6/12/2025, 10:58:14 PM

No.8624980

>>8624987

>>8624967

Oh okay thanks for the demo. How bloated does this get when you have lots of artists combos and such? I like infinite image browsing because I can just search for a pic in my folder then copy the metadata easily.

>>8624976

The point of my post was to say don't use negatives and use negpip extension for things you really need. Then to point out that new people shouldn't be using base noob since it's hard to use. 102d is far simpler and if that's what those artists actually look like then you're better off using 102d. Of course this simple logic attracts console war retards, unfortunately.

Anonymous

6/12/2025, 11:02:04 PM

No.8624987

>>8624980

Well you'd have to scroll through a list of all the prompts you saved, but I don't plan on saving every single prompt.

You just gave me a really good idea though: a search bar.

Anonymous

6/12/2025, 11:10:48 PM

No.8624993

>>8624436

don't use them :3

Anonymous

6/12/2025, 11:14:57 PM

No.8624999

>>8625002

>>8624985

>Of course this simple logic attracts console war retards, unfortunately

now this is a strawman, you just gave him a gen which is mostly unrelated to his request without much of an explanation, doubling down on not doing what he asked when questioned directly. pointing this out doesn't make anyone a console war retard.

>>8624976

>Is vpred just unusable without loras, that's the meme?

it is ughh usable but like the other anon said it requires some wrangling

Anonymous

6/12/2025, 11:16:15 PM

No.8625002

>>8625006

>>8624999

No it's not a strawman but your intense desire to start a fight where there was none. You are the one trying to defend yourself since you butted in and fucked up, an unforced error.

Anonymous

6/12/2025, 11:17:12 PM

No.8625005

>>8625013

for me it's um hmmm not genning

Anonymous

6/12/2025, 11:17:42 PM

No.8625006

Anonymous

6/12/2025, 11:20:23 PM

No.8625008

>>8625011

>>8624985

>negpip

Interesting. What else do the proompters on the cutting edge use these days?

Anonymous

6/12/2025, 11:22:19 PM

No.8625011

>>8625008

I like using cd tuner with saturation2 set to 1 on my img2img pass for color corrections.

Anonymous

6/12/2025, 11:23:18 PM

No.8625013

>>8625017

>>8625005

i will gen when i am good and ready.

Anonymous

6/12/2025, 11:24:40 PM

No.8625015

>>8625206

>>8624985

I'm sorry I didnt realize there was metadata to the picture so that all went over my head. Also what makes base noob hard to use?

desu I followed the prompt format and sampler thing on the page so i figured it would be fine, this issue wasnt present with eps when I tried that so I really figured something was broken.

I'd still like someone to use the catbox and generate that same image in vpred just to see if its fucked or not

Anonymous

6/12/2025, 11:26:02 PM

No.8625017

>>8625036

>>8625013

desu half the time i can be bothered to gen nowadays it's non /h/ stuff

regular sexo is the most boring stuff to gen

Anonymous

6/12/2025, 11:28:56 PM

No.8625020

>>8625023

>>8625032

Anonymous

6/12/2025, 11:29:46 PM

No.8625021

>haven't pulled in ages, like literally since Flux came out

>pull

>try a card just to see if anything was messed up

>the gen comes out exactly the same

Sweet.

Anonymous

6/12/2025, 11:31:45 PM

No.8625023

>>8625025

>>8625020

are you training with cloud gpus or locally?

>>8625023

locally on a 3090

Anonymous

6/12/2025, 11:38:59 PM

No.8625029

>>8625037

>>8625025

tempted to train on high res now myself. gonna prep some in my new dataset

Anonymous

6/12/2025, 11:40:10 PM

No.8625032

>>8625037

>>8625020

>>8625025

what artist/s are these?

Anonymous

6/12/2025, 11:45:48 PM

No.8625035

>>8624806

the girl looks like fate testarossa

Anonymous

6/12/2025, 11:48:57 PM

No.8625036

>>8625017

>regular sexo is the most boring stuff to gen

that's why I gen girls kissing girls

pic uses zero negative but for some reason the style wildly varies between seeds, also there are no tponynai images in the dataset i swear

>>8625029

gonna be tough without a second gpu to test things on

>>8625032

>what artist/s are these?

doesn't matter because they aren't recognized lol, like i said for some reason the style changes a lot if you change the seed

Anonymous

6/12/2025, 11:49:43 PM

No.8625038

So is there any news about whatever the fuck the NoobAI guys are doing or is everyone still stuck using base V-pred/EPS model and shitmerges? I tried the v29 shitmerge but don't fuck with it much.

Anonymous

6/12/2025, 11:52:12 PM

No.8625042

>>8624985

I just realized my workflow already has negpip and I just never took advantage of it because I stole it and didn't bother looking into what everything did kek.

Anonymous

6/12/2025, 11:56:47 PM

No.8625044

>>8625052

>>8625037

>doesn't matter because they aren't recognized

why hide the artist name? maybe i just want to see their original work

Anonymous

6/12/2025, 11:59:34 PM

No.8625045

>>8625037

>gonna be tough without a second gpu to test things on

luckily I got 2

Anonymous

6/13/2025, 12:10:32 AM

No.8625052

>>8625054

>>8625037

I think it may have started overfitting on the train set... Regardless, I'll try to extract a 1536x lora, maybe it'll be useful for upscaling.

>>8625044

if you really want it then it's gishiki_(gshk) and for the second one it's arsenixc, void_0 plus a bunch of lewd artists who don't draw bgs

>mfw base res gen gives this: file.png: File too large (file: 4.11 MB, max: 4 MB).

Anonymous

6/13/2025, 12:16:21 AM

No.8625054

>>8625052

thanks bwo

also are you the lion optimizer anon from pony days? i noticed you posted a sparkle picture

Anonymous

6/13/2025, 12:28:25 AM

No.8625058

>>8625060

>>8625064

Just tried out negpip. The example with the gothic dress really works. With that said, when I tried converting my negative prompt from a real world complex gen I had, the outputs were worse and adhered to the prompt less. Maybe the weights need to be adjusted. Will experiment more.

you may be shocked if you learnt of all of my identities...

Anonymous

6/13/2025, 12:30:58 AM

No.8625060

>>8625071

>>8625058

The last time some anon tried to sell us on negpip, it failed miserably. If I were you I wouldn't bother with it, just keep your regular negatives to a minimum

Anonymous

6/13/2025, 12:32:00 AM

No.8625063

>>8625074

>>8625099

>>8625059

It's good that you've at least toned down the bullshit elitist shitposting from pony days.

Anonymous

6/13/2025, 12:32:03 AM

No.8625064

>>8625071

>>8625058

The point is that you are not supposed to be using any negatives at all, and should negpip only the specific things that you don't want to show up but are "embedded" into other tags.

Anonymous

6/13/2025, 12:35:41 AM

No.8625067

[Report]

>>8625074

>>8625059

g-force anon...

Anonymous

6/13/2025, 12:39:26 AM

No.8625069

>>8624319

Incredibly based.

Anonymous

6/13/2025, 12:39:29 AM

No.8625070

>>8625074

>>8625059

plot twist: you're gay.

Anonymous

6/13/2025, 12:39:43 AM

No.8625071

>>8625073

>>8625060

I mean, the fact that it can subtract concepts that were previously impossible to subtract suggests it has potential, but there may be a learning curve to using it for highly complicated prompts when you are used to the negative prompt.

>>8625064

Yeah that's what I used negatives for, so now I am testing moving the negative to the positive with negative weight as instructed. My negative has both quality tags and specific things I was trying to subtract, e.g. latex, shiny clothes from bodysuit (in the positive).

If you have a problem with the idea of using negative quality tags, I do still use them because some of the artists I use are (likely) associated with their old art which looks bad, and my AB testing shows me that those tags have a clear good effect on the model and prompts I use.

Anonymous

6/13/2025, 12:42:11 AM

No.8625073

>>8625071

I'm not sure how similar negpip and NAI's negative emphasis are, but for the latter, trying to take my normal negs and putting them in the prompt with negative weight just causes a mess, but it works very well for removing things in a targeted way.

Anonymous

6/13/2025, 12:54:11 AM

No.8625074

>>8625075

>>8625077

>>8625063

well, you never know

>>8625067

>>8625070

i'm surprised no one connected at least 2-3 of my identities (out of maybe 10), actually. even though i've been called names multiple times.

Anonymous

6/13/2025, 12:56:15 AM

No.8625075

>>8625074

give us a hint schizonon. what do the numbers mean?

Anonymous

6/13/2025, 12:57:51 AM

No.8625077

>>8625074

shut up birdschizo/momoura

Anonymous

6/13/2025, 1:09:05 AM

No.8625080

god bless anonymity

Anonymous

6/13/2025, 1:40:12 AM

No.8625099

>>8625063

Elitist was (is) me. I just don't bother with your shit general(s) anymore, swim in your diarrhea yourselves...

Anonymous

6/13/2025, 1:41:24 AM

No.8625100

>>8625097

>and god bless nai

This tells about you more than it does about me, do you realize that?

Anonymous

6/13/2025, 1:44:30 AM

No.8625102

>>8625103

oh sorry please dont spam the big lips character again...

Anonymous

6/13/2025, 1:46:39 AM

No.8625103

Anonymous

6/13/2025, 1:48:27 AM

No.8625106

Anonymous

6/13/2025, 1:51:16 AM

No.8625107

Ma'am, I believe /hdg/ is what you were looking for. This is /hgg/.

Anonymous

6/13/2025, 2:11:10 AM

No.8625118

>>8625340

e10

>4.79 MB

Why

Anonymous

6/13/2025, 2:26:41 AM

No.8625126

>>8625206

post gens

Anonymous

6/13/2025, 2:28:39 AM

No.8625127

Anonymous

6/13/2025, 2:34:32 AM

No.8625129

haven't been doing much nsfw lately

Anonymous

6/13/2025, 2:38:28 AM

No.8625131

>>8625134

>>8624605

catbox? like the style here

Anonymous

6/13/2025, 2:40:02 AM

No.8625133

Anonymous

6/13/2025, 2:42:00 AM

No.8625134

>>8625135

>>8625131

It's teruya (6w6y)

Anonymous

6/13/2025, 2:42:53 AM

No.8625135

Anonymous

6/13/2025, 2:51:36 AM

No.8625138

After genning a ton, I feel like my perspective on traditional art has changed. Now whenever I look at most art, I can't help but feel how shitty they are, how off the proportions are, how inconsistent a ton of artists are, while I've become more appreciative of the artists that have better standards.

Anonymous

6/13/2025, 4:14:20 AM

No.8625182

more like lora baking general

Anonymous

6/13/2025, 4:15:10 AM

No.8625183

>>8625186

why are we thriving, bros?

Anonymous

6/13/2025, 4:19:28 AM

No.8625186

>>8625183

Shitposters see this place as high effort, low reward.

Anonymous

6/13/2025, 4:46:45 AM

No.8625200

>>8625210

Anonymous

6/13/2025, 5:18:47 AM

No.8625205

>>8625338

>>8624863

are you planning on uploading it or will it be a private finetune?

Anonymous

6/13/2025, 5:20:06 AM

No.8625206

Anonymous

6/13/2025, 5:20:37 AM

No.8625207

>>8625229

>>8624863

I'd rather just have your tips on how to finetune. Learning to fish and all that.

Anonymous

6/13/2025, 5:21:42 AM

No.8625208

Anonymous

6/13/2025, 5:31:03 AM

No.8625210

Anonymous

6/13/2025, 5:50:39 AM

No.8625216

>boooooox?

nyo!

>>8624393

bwo i've been training on 1536, found that finer details and textures are replicated better (empirical) with fewer artifacts, would need fewer face crops to get better looking eyes for example.

also noted that it led to the losses converging in tighter groups & at lower minima. i have not tested for training on noob, but so far i do find it beneficial when training on base illu0.1.

>>8624403

Like

>>8624436 said, it could be placebo, but I do that to reduce the effect of the quality tags on the style.

>[masterpiece, best quality::0.6]

>Out of curiosity, what's this for?

this will apply the quality tags for the first 60% of the steps only.

>Do you think those tags negatively impact finer details?

quality tags tend to be biased towards a certain style and might detract from the style you might be going for. i.e. scratchier lines of a style you are using might become smoother due to quality tags.

0.6 is just an arbitrary value that i selected to 'give the image good enough quality' before letting the other style tags / loras have 'more effect' (honestly the effect is quite minor - see picrel, outlines slightly more emphasized with quality tags)
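
A rough sketch of what that syntax works out to, assuming A1111-style prompt editing where `[tags::when]` drops the tags after `when`, and a `when` below 1 is read as a fraction of the total steps. `removal_step` and `tags_active` are made-up helper names for illustration, not functions from any UI.

```python
def removal_step(when: float, total_steps: int) -> int:
    """Step after which the bracketed tags are dropped.

    Assumption: a value below 1 is a fraction of the total steps,
    anything else is treated as an absolute step number.
    """
    if when < 1:
        return int(when * total_steps)
    return int(when)


def tags_active(step: int, when: float, total_steps: int) -> bool:
    """True while the bracketed tags are still in the prompt (1-based step)."""
    return step <= removal_step(when, total_steps)


# Which steps of a 20-step gen still see the quality tags at 0.6?
active_steps = [s for s in range(1, 21) if tags_active(s, 0.6, 20)]
print(active_steps)
```

The later steps then run on the remaining style tags and loras only, which is the "let the style have more effect" part.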

Anonymous

6/13/2025, 6:21:46 AM

No.8625229

>>8625338

>>8624863

seconding

>>8625207, i'm interested to know how you are going about your finetuning; i've got some questions too

1) do you have a custom training script or are you using an existing one?

2) what is the training config you have set up for your finetuning, and are there any particular factors that made you consider those hyperparameters?

3) in terms of data preparation, is the prep for finetuning different from training loras? do you do anything special with the dataset?

4) i too am using a 3090

>>8625025, how much vram usage are you running at when performing a finetune at your current batch size?

Anonymous

6/13/2025, 7:19:38 AM

No.8625265

>>8625223

I train on noob, but the other anon was also recommending training at higher res, so I'll give it a go

Anonymous

6/13/2025, 7:46:00 AM

No.8625279

>>8625281

Anonymous

6/13/2025, 7:46:47 AM

No.8625281

>>8625305

Anonymous

6/13/2025, 8:44:44 AM

No.8625305

>>8625281

still not an excuse for ruining what could have otherwise been a good gen

Anonymous

6/13/2025, 8:45:11 AM

No.8625306

i came here for 'da ork cantent.

Anonymous

6/13/2025, 9:48:23 AM

No.8625331

>>8625341

Has anyone experimented with putting negpip stuff in the negative prompt? What happens if you do that?

Anonymous

6/13/2025, 9:51:50 AM

No.8625333

>>8625223

Thanks for explaining and the comparison image, and don't worry, as an aficionado of fine snake oils I can appreciate the finer methods that are sometimes hard to see. I've been doing similar with scheduling artists late into upscale for finer details like blush lines, prompt scheduling is a great tool.

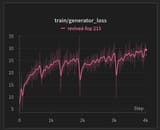

e12

>>8625205

I'll upload base 1024x checkpoint, a 1536x checkpoint and a lora extract between the two. I'll also probably upload a merge of the last two epochs if it turns out to be good.

>>8625229

>do you have a custom training script or are you using an existing one?

I'm using a modified naifu script

>what is the training config you have set up for your finetuning, and are there any particular factors that made you consider those hyperparameters?

Full bf16, AdamW4bit + bf16_sr, bs=12 lr=5e-6 for 1024x, bs=4*3 lr=7e-6 for 1536x, 15 epochs, cosine schedule with warmup, pretrained edm2 weights, captions are shuffled with 0.6 probability, the first token is kept (for artists), captions are replaced with zeros with 0.1 probability (for cfg). I settled on these empirically.
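
The caption handling in that config could look roughly like this. It is only a sketch under assumptions: tags are comma-separated, the artist is the first tag, and an empty string stands in for "replaced with zeros" (the actual script may zero the embeddings instead). `augment_caption` is a hypothetical name, not something from naifu.

```python
import random


def augment_caption(caption: str, rng: random.Random,
                    shuffle_prob: float = 0.6,
                    drop_prob: float = 0.1) -> str:
    """Return the caption to train on for one sample."""
    # Caption dropout: occasionally train on an empty caption so the
    # unconditional branch used by cfg gets trained too.
    if rng.random() < drop_prob:
        return ""

    tags = [t.strip() for t in caption.split(",") if t.strip()]
    # Shuffle everything except the first tag (kept in place for artists).
    if len(tags) > 2 and rng.random() < shuffle_prob:
        first, rest = tags[0], tags[1:]
        rng.shuffle(rest)
        tags = [first] + rest
    return ", ".join(tags)


rng = random.Random(0)
print(augment_caption("some_artist, 1girl, solo, smile, outdoors", rng))
```

Passing a seeded `random.Random` keeps the augmentation reproducible between runs, which helps when comparing rebakes.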

>in terms of data preparation, is the prep for finetuning different from training loras? do you do anything special with the dataset?

Yes and no. You should tag what you see and give it enough room for contrastive learning in general. Obviously no contradicting shit should be present. Multi-level dropout rules like those described in the illustrious 0.1 tech report will also help with short prompts, but a good implementation would require a more complicated processing pipeline, so I'm not using it.

>how much vram usage are you running at when performing a finetune at your current batch size?

23.0 gb at batch size 4 with gradient accumulation.
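
For anyone wondering how bs=4*3 ends up as an effective batch of 12: a toy sketch, assuming a mean loss and equal-sized micro-batches. The one-weight model and the function names are invented for the example and have nothing to do with the real training script.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)^2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w, xs, ys, micro_bs, accum_steps):
    """Sum micro-batch gradients, each scaled by 1/accum_steps."""
    total = 0.0
    for i in range(accum_steps):
        lo, hi = i * micro_bs, (i + 1) * micro_bs
        total += grad_mse(w, xs[lo:hi], ys[lo:hi]) / accum_steps
    return total


xs = [float(i) for i in range(12)]
ys = [3.0 * x + 1.0 for x in xs]
print(grad_mse(0.5, xs, ys))                                   # one batch of 12
print(accumulated_grad(0.5, xs, ys, micro_bs=4, accum_steps=3))  # 3 x 4
```

Both numbers agree up to float rounding, but only 4 samples have to sit in vram at a time, at the cost of three forward/backward passes per optimizer step.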

Anonymous

6/13/2025, 10:04:44 AM

No.8625340

Anonymous

6/13/2025, 10:05:23 AM

No.8625341

>>8625331

I'm testing it right now and it feels like it does have some use. You can't add or subtract large things to an image using this method, but you can nudge, mostly, colors, without affecting composition or other things in the image, whereas, for instance, if you prompted "red theme" in the positive like normal, it might turn a forest autumn or something. But doing negpip in the negative prompt makes it look like the original gen but with a more red tint to it.

This makes sense as the negative and positive prompts do not pay attention to each other's context.

I was also able to make the sky more clearly visible through the leaves in a forest gen, while not altering the composition of the image much. So I think this is what it (negpip in the neg) could be useful for. Nudges to existing gens without changing composition or subject matter, which might happen in pure positive prompting.

Anonymous

6/13/2025, 10:21:23 AM

No.8625345

>>8625362

>>8625546

>>8624386 (OP)

this isn't ai. Artist name?

Anonymous

6/13/2025, 10:52:30 AM

No.8625352

>>8625381

>>8625338

thanks for sharing!

i still have a couple of (ml noob) questions that i'd like to ask if you don't mind...

>I'm using a modified naifu script

was any part of naifu lacking in any way such that you had to make modifications? or was there a custom feature that you required specific to the finetuning?

>captions are replaced with zeros with 0.1 probability (for cfg)

would you care to explain why the approach where captions are replaced with zeros is used for cfg? what impact does this have on cfg, is it for the color blow out?

>bs=12 lr=5e-6 for 1024x, bs=4*3

>batch size 4 with gradient accumulation

i saw that your target batch size is 12 (GA (3) * BS (4))

is there any hard and fast rule as to how large a batch should be when training a diffusion model? i noted that many models are baked with a high bs (>100), e.g. illustrious 0.1 was baked with a bs of 192. should batch size be scaled relative to the size of the training dataset?

Anonymous

6/13/2025, 11:00:19 AM

No.8625359

>>8625363

>>8625381

>>8625338

have you tried data augmentation like flips and color shifts?

Anonymous

6/13/2025, 11:12:17 AM

No.8625362

>>8625345

>this isn't ai

???

Anonymous

6/13/2025, 11:14:06 AM

No.8625363

>>8625359

flip aug is not only bad it's actively harmful to training. it unnecessarily uses your parameters and fucks everything up since it's, more or less, forcing training to do something twice that it's already effectively doing without you telling it to.

have a paper

https://arxiv.org/abs/2304.02628

Anonymous

6/13/2025, 11:16:22 AM

No.8625364

>>8625381

>>8625338

>pretrained edm2 weights

Huh, you can reuse edm2 between different runs?

Anonymous

6/13/2025, 11:44:04 AM

No.8625373

>>8625381

>>8625338

>pretrained edm2 weights

could you share those? I already have some, but wouldn't hurt to see if I could be training with better ones

>>8625352

>or was there a custom feature that you required specific to the finetuning?

Mostly this, I've been using naifu since sd-scripts suck too much

>would you care to explain why the approach where captions are replaced with zeros is used for cfg?

it's used to train uncond for the main cfg equation which is

>guidance = uncond + guidance_scale * (cond - uncond)

cfg will work regardless, but it will work better (for guiding purposes) if you train uncond. in general you shouldn't drop captions on small lora-style datasets.
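
The equation above as a minimal sketch on plain lists. In a real sampler `uncond` and `cond` would be the model's two noise predictions as tensors; `cfg_combine` is just an illustrative name.

```python
def cfg_combine(uncond, cond, guidance_scale):
    """Elementwise uncond + guidance_scale * (cond - uncond)."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


uncond = [0.0, 1.0]
cond = [1.0, 1.0]
print(cfg_combine(uncond, cond, 5.0))
```

A scale of 1 gives back `cond` unchanged and a scale of 0 gives back `uncond`, so the quality of the `uncond` prediction only starts to matter once the scale moves away from 1. That is why training it with dropped captions helps guidance.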

>is it for the color blow out?

it has absolutely nothing to do with it

>is there any hard and fast rule as to how large a batch should be when training a diffusion model?

no, but if you are training clip you would never want to have batch size < 500 since clip's contrastive loss relies on a large pool of in-batch negatives. large batch sizes will help the model not to encounter catastrophic forgetting due to unstable gradients, and since sdxl is such a deep model you basically never enter local minima because there is always a dimension to improve upon, as long as your lr is sufficiently high.

however, if you are relying first on gradient checkpointing, then on gradient accumulation to achieve larger batches, having very large batches may quickly become very expensive compute-wise.

>>8625359

don't you realize this is harmful, especially if you want to train asymmetrical features
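
A toy illustration of the asymmetry problem, assuming the caption names a side and is not rewritten when the image is mirrored. The tiny 0/1 "image" and the helper names are made up for the example.

```python
def hflip(image):
    """Mirror a row-major 2D image left to right."""
    return [list(reversed(row)) for row in image]


def feature_side(image, marker=1):
    """Report which half of the image contains the marker pixel."""
    width = len(image[0])
    for row in image:
        for x, value in enumerate(row):
            if value == marker:
                return "left" if x < width / 2 else "right"
    return None


image = [[1, 0, 0, 0],
         [0, 0, 0, 0]]
print(feature_side(image), feature_side(hflip(image)))
```

After the flip the feature sits on the other side while the caption still claims the original side, so the model gets both versions under the same tag.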

>>8625364

of course, you'd not want to start from scratch every time, would you?

>>8625373

i'll upload the weights next to checkpoint then

Anonymous

6/13/2025, 11:51:48 AM

No.8625383

>>8625776

>>8625381

>i'll upload the weights next to checkpoint then

did you share before? I missed that if you did

Anonymous

6/13/2025, 12:12:47 PM

No.8625393

>>8625776

>>8625381

nta but what did you modify in naifu?

Anonymous

6/13/2025, 12:23:02 PM

No.8625396

>>8625406

>>8625776

>>8625381

Just curious - how do you extract locons from full_bf16-trained checkpoints?

Tried ratio method from the rentry - and for some reason it gives me huge extracts - like 2-2.5GB. Perhaps it has something to do with weights decomposition after training in full_bf16 mode.

The ratio works fine for checkpoints trained in full_fp16, but I didn't manage to get good results from fp16 trains...

Anonymous

6/13/2025, 12:30:52 PM

No.8625399

>>8625416

>>8625465

Anonymous

6/13/2025, 12:46:33 PM

No.8625406

>>8625407

>>8625396

nta, I use 64 dimensions and 24 conv dimension (for locon) and that gives me 430mb and 350 mb if you don't train the te
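
Why a fixed dimension gives a predictable file size, as a back-of-envelope sketch. It assumes the usual low-rank layout (a down and an up matrix per linear layer, a k x k down conv plus a 1x1 up conv per conv layer) and 2 bytes per weight. The layer shapes in the demo are placeholders, not the real sdxl layer list, so the printed numbers are not the 430mb figure.

```python
def linear_lora_params(d_in, d_out, rank):
    """Weights in a rank-`rank` adapter for one linear layer."""
    return rank * (d_in + d_out)


def conv_lora_params(c_in, c_out, kernel, rank):
    """Weights in a rank-`rank` adapter for one conv layer."""
    return rank * c_in * kernel * kernel + c_out * rank


def size_mb(params, bytes_per_param=2):
    """File size in MiB at the given precision (2 bytes for fp16/bf16)."""
    return params * bytes_per_param / (1024 ** 2)


# Placeholder shapes, purely illustrative.
print(size_mb(linear_lora_params(1280, 1280, rank=64)))
print(size_mb(conv_lora_params(320, 320, kernel=3, rank=24)))
```

Size grows linearly with the dimension per layer, so a fixed-dimension extract lands at the same size regardless of what the checkpoint learned, unlike a ratio-based extract where the kept rank depends on the weight difference.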

Anonymous

6/13/2025, 12:48:23 PM

No.8625407

>>8625406

Yeah, fixed works just fine. I was curious about the ratio one.

retrained the modded vae and now it is actually kinda usable, unlike the garbage before:

https://pixeldrain com/l/FpB4R8sa

though i think it still needs more training for use in anything large scale

also updated the node:

https://pixeldrain com/l/9AS19nrf

a comparison of 1(one) image enc+dec test, though this is not fair as the modded vae has a much larger latent space (for the same res) compared to base sdxl vae:

https://slow.pics/s/5Kc8RkPa

the practical effect of it is basically that you dont have to damage the images by upscaling to 2048 to get the equivalent quality level of a 16ch vae

i tried training a lora with it and it was slow as balls, like 3-4x

if someone wants to give it a try training, i can walk you through how to modify sd-scripts (its just applying the vae modification at one point)

Anonymous

6/13/2025, 1:17:17 PM

No.8625416

[Report]

Anonymous

6/13/2025, 1:53:43 PM

No.8625453

[Report]

>>8625458

>>8625413

im assuming that node is how you can load the vae? wouldnt be able to use this vae on reforge?

Anonymous

6/13/2025, 1:59:57 PM

No.8625457

[Report]

>>8625201

good looking titties,, I want to squeeze and kiss and suck on them

Anonymous

6/13/2025, 2:02:02 PM

No.8625458

[Report]

>>8625453

you can load it, but it will still have the downscaling making it incompatible with the trained weights, someone would have to modify forge to support it yeah

but ultimately you need to train sdxl with the vae on the higher latent resolution or you will have the same body horrors like if you try to raise genning res too high

Anonymous

6/13/2025, 2:05:31 PM

No.8625460

[Report]

Anonymous

6/13/2025, 2:06:53 PM

No.8625461

[Report]

>>8625478

>>8625413

What makes this VAE different and why does it need its own node?

Anonymous

6/13/2025, 2:10:46 PM

No.8625465

[Report]

>>8625399

What model is this?

Anonymous

6/13/2025, 2:17:26 PM

No.8625467

[Report]

>>8625413

>needs its own node

Does it work on reforge? I'm getting errors in the console but I do see a difference. Might just be placebo.

>>8625413

comparison looks nice, almost too good

Anonymous

6/13/2025, 2:35:48 PM

No.8625478

[Report]

>>8625483

>>8625461

nta, but I think he said that he removed some downscaling layer which in theory, if the vae is trained enough to adapt, would lead to sharper outputs.

Anonymous

6/13/2025, 2:37:53 PM

No.8625480

[Report]

>>8625488

>>8625413

gotta ask, but wouldn't increasing the latent space require a full retrain of sdxl?

Anonymous

6/13/2025, 2:38:39 PM

No.8625482

[Report]

>>8625476

>almost too good

too good to be true

Anonymous

6/13/2025, 2:39:40 PM

No.8625483

[Report]

Anonymous

6/13/2025, 2:46:28 PM

No.8625488

[Report]

>>8625499

>>8625476

the latent the decoder can work with is much larger, which also leads to much larger training costs, but better performance

>>8625480

this is basically training at a much higher res, rather than changing the entire dimensionality, it wont require full retrain, but it will require training for sdxl to learn to work with bigger latent sizes (very similar if you wanted to train it to not shit itself at generating 2048x2048 images)

the training and generation is also gonna be slower (though peak vram usage at the vae decoder output should be the same), but you can downscale the images and train at 512x512 if you want standard sdxl compression
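A minimal sketch of the latent-size arithmetic being described. It assumes the modded vae downscales by 4x instead of stock sdxl's 8x and keeps 4 latent channels; the 4x figure is inferred from the "put in 2x" and "4x more pixels" remarks in this thread, not confirmed anywhere.

```python
def latent_shape(width: int, height: int, downscale: int = 8, channels: int = 4):
    """(channels, latent_height, latent_width) for an image of the given pixel size."""
    if width % downscale or height % downscale:
        raise ValueError("image size must be divisible by the downscale factor")
    return (channels, height // downscale, width // downscale)


def latent_positions(width: int, height: int, downscale: int = 8) -> int:
    """Number of spatial positions the unet has to process."""
    _, h, w = latent_shape(width, height, downscale)
    return h * w


if __name__ == "__main__":
    print(latent_shape(1024, 1024))               # stock sdxl vae
    print(latent_shape(1024, 1024, downscale=4))  # assumed modded vae
    print(latent_shape(512, 512, downscale=4))    # same latent as stock at 1024
```

Under that assumption a 1024x1024 image has 4x as many latent positions as with the stock vae, while a 512x512 image lands on the same latent size as stock sdxl at 1024x1024.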

Anonymous

6/13/2025, 2:46:29 PM

No.8625489

[Report]

>>8625495

>>8625413

How many epochs and what's the training set?

It looks much better than sdxl one for sure, but still a bit blurry, especially in background details.

Anonymous

6/13/2025, 2:50:18 PM

No.8625495

[Report]

>>8625489

10 epochs for encoder and 3 for decoder with 3k images, the adaptation is very fast

it will probably still improve in terms of adapting but still there is a limit to what can be done in terms of small details, the improvement is not really from the training, but rather from the larger encoded latents

>>8625488

so you basically doubled the internal resolution

Anonymous

6/13/2025, 2:56:14 PM

No.8625500

[Report]

Anonymous

6/13/2025, 3:37:04 PM

No.8625517

[Report]

>>8625499

thing is, Cascade could do this on the fly and it didn't work well. You still had to gen small then upscale, and manually adjust the compression level to match what you were doing. Low compression would break anatomy and high compression would kill details.

I think this may just be taking advantage of the fact that illustrious/noob are unusually stable at higher resolutions, compared to other SDXL models.

Anonymous

6/13/2025, 3:41:17 PM

No.8625521

[Report]

>>8625535

>>8625413

Is this just for trainers? I tried just replacing my vae and gens are coming out at half the resolution, so I doubled the latent dimensions but then the image becomes incoherent.

Anonymous

6/13/2025, 3:42:18 PM

No.8625525

[Report]

is finetuneschizo here? anything i can do in kohya for better hands?

Anonymous

6/13/2025, 3:52:28 PM

No.8625535

[Report]

>>8625521

yes, the body horrors stopped after i trained a lora with it on illust 0.1, but it would require a larger tune to truly settle in

comfy has hardcoded 8x compression for emptylatentimage so yeah you gotta put in 2x
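A small sketch of the "put in 2x" workaround as plain arithmetic. The helper name is made up, and the 4x factor for the modded vae is an assumption inferred from this thread; only the 8x division on the node side comes from the post above.

```python
def size_to_enter(target_pixels: int, node_factor: int = 8, vae_factor: int = 4) -> int:
    """Value to type into a node that divides by node_factor, so that a vae
    which upsamples by vae_factor decodes to target_pixels."""
    latent = target_pixels // vae_factor  # latent size the vae actually needs
    return latent * node_factor           # what the node must be told to produce it


if __name__ == "__main__":
    # For a 1024x1024 output with the assumed 4x vae, enter 2048x2048.
    print(size_to_enter(1024), size_to_enter(1024))
```

With a stock 8x vae the helper returns the target size unchanged.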

Anonymous

6/13/2025, 4:02:59 PM

No.8625546

[Report]

>>8625548

>>8625345

>this isn't ai

kekmao

>Artist name?

Me, your favorite slopper

Anonymous

6/13/2025, 4:04:53 PM

No.8625548

[Report]

>>8625599

>>8625546

this isn't hentai. Cum splotch?

Anonymous

6/13/2025, 4:05:03 PM

No.8625549

[Report]

>>8625562

>>8625413

I tested genning with this and I feel like it's a bit muddier in how it renders textures compared to noob's normal vae (I guess that's just standard sdxl vae?), though it does seem slightly sharper for linework. And both feel slightly worse than lpips_avgpool_e4.safetensors.

Anonymous

6/13/2025, 4:20:52 PM

No.8625562

[Report]

>>8625568

>>8625549

>lpips_avgpool_e4.safetensors

Huh, link?

Anonymous

6/13/2025, 4:22:39 PM

No.8625564

[Report]

>>8625569

So in the end it's all just more snake oil...

Anonymous

6/13/2025, 4:30:31 PM

No.8625568

[Report]

>>8625598

Anonymous

6/13/2025, 4:43:08 PM

No.8625569

[Report]

>>8625564

Snake oil does nothing. This clearly does something, just not sure if it's better or worse.

>>8625568

It cost more effort to post this link than it would've to just point the guy at the pixeldrain

Anonymous

6/13/2025, 5:23:00 PM

No.8625599

[Report]

>>8625548

cum filled pocky

Anonymous

6/13/2025, 5:26:21 PM

No.8625603

[Report]

>>8625609

>>8625598

give a guy a fish, he'll eat for a day

Anonymous

6/13/2025, 5:29:26 PM

No.8625609

[Report]

>>8625603

give a guy a subscription to a fish delivery service and he'll eat forever

Anonymous

6/13/2025, 5:46:23 PM

No.8625629

[Report]

good reflections are hard

Anonymous

6/13/2025, 6:42:28 PM

No.8625700

[Report]

>>8625701

black pill me on

https://www.youtube.com/watch?v=XEjLoHdbVeE&list=RDXEjLoHdbVeE&start_radio=1

Anonymous

6/13/2025, 6:43:29 PM

No.8625701

[Report]

>>8625706

>>8625700

just fine-tune bro

Anonymous

6/13/2025, 6:45:20 PM

No.8625706

[Report]

>>8625701

I did, but finetuneschizo disappeared and I'm out of parameters to mess with

>civitai wants to further censor their dogshit site and they do it by le HAHA YOU ARE DOING GOOD AMBASSADOR,LE HECKIN POWER FOR YOU

goddamn faggots,why are they doing it?

the site is also so fucking dogshit you can't even search by certain filters

>>8625712

Chub has gone this route as well for textgen. No one ever imagined that the cyberpunk dystopia would be a sexless normiefilled existence.

Anonymous

6/13/2025, 6:54:12 PM

No.8625716

[Report]

>>8625715

>Chub has gone this route as well for textgen.

And it is pretty dead now.

Anonymous

6/13/2025, 6:58:57 PM

No.8625719

[Report]

>>8625712

Is it really a mystery? Go look at their financial breakdown for last year, particularly the wages. I'd be sucking mad cock too if my ability to pay myself that much was threatened

Anonymous

6/13/2025, 7:07:50 PM

No.8625729

[Report]

>>8625734

Which ControlNet model should I use for a rough MS Paint sketch/scribble?

Anonymous

6/13/2025, 7:08:57 PM

No.8625731

[Report]

Anonymous

6/13/2025, 7:11:49 PM

No.8625733

[Report]

>>8625413

excellent. now do a finetune of flux's vae and send it to lumina's team

Anonymous

6/13/2025, 7:11:58 PM

No.8625734

[Report]

>>8625735

Anonymous

6/13/2025, 7:13:38 PM

No.8625735

[Report]

>>8625737

>>8625734

Thank you, I already tried this one but my results weren't great so far. Do you have a short guide with settings for it by any chance?

>>8625735

This should be more than enough, play around with the control weight if you are not getting what you want

Anonymous

6/13/2025, 7:20:32 PM

No.8625738

[Report]

>>8624918

naiXLVpred102d_custom is king

Anonymous

6/13/2025, 7:32:36 PM

No.8625743

[Report]

>>8625737

Works great thanks a lot! :D

i hate belly buttons and nipples

Anonymous

6/13/2025, 7:49:11 PM

No.8625763

[Report]

>>8625778

Anonymous

6/13/2025, 7:53:02 PM

No.8625769

[Report]

>>8625773

>>8625744

why did you prompt for them then?

Anonymous

6/13/2025, 7:53:53 PM

No.8625773

[Report]

1152x2048, 22 steps, euler

1536 ft / 1024 ft+1536 extract / noob v1+1536 extract / 102d+1536 extract / 1024ft / noob v1 / 102d

>>8625383

https://huggingface.co/nblight/noob-ft

>>8625393

nothing you want to concern yourself with since it's mostly experimental stuff "except" edm2

>>8625396

>ratio method

idk, i've never used it

Anonymous

6/13/2025, 7:55:08 PM

No.8625778

[Report]

>>8625783

>>8625763

Are you getting errors in the console too? I find it adds more details but makes things a bit blurry.

Anonymous

6/13/2025, 7:57:22 PM

No.8625783

[Report]

>>8625778

No errors or whatsoever when I load it

>I find it adds more details but makes things a bit blurry.

Same here

Anonymous

6/13/2025, 8:15:16 PM

No.8625821

[Report]

>>8625776

thank you for sharing this! indeed hires is much more stable even with the loras I usually use

Anonymous

6/13/2025, 8:19:31 PM

No.8625829

[Report]

>>8625834

>>8625776

what kind of settings should I be using to gen with this model?

Anonymous

6/13/2025, 8:23:40 PM

No.8625834

[Report]

>>8625844

>>8625829

nothing should be *too* different from your regular noob vpred except you can generate at 1536x1536 right away

Anonymous

6/13/2025, 8:27:37 PM

No.8625842

[Report]

>>8625744

seems like an upscaling issue

Anonymous

6/13/2025, 8:29:13 PM

No.8625844

[Report]

>>8625850

>>8625834

I am not getting anything like I usually do so I'll do some tests on it

>>8625844

you'll have to post a catbox

Anonymous

6/13/2025, 9:05:11 PM

No.8625882

[Report]

>>8625899

>>8625776

What are your EDM2 training settings? From the weights, I assume you use 256 channels? Good ol’ AdamW?

Anonymous

6/13/2025, 9:05:29 PM

No.8625884

[Report]

>>8625598

It did not. In the first place that is how I found it myself. I just copy and pasted the url of the page I was on, I didn't even check if the pixeldrain link was valid.

Anonymous

6/13/2025, 9:10:50 PM

No.8625893

[Report]

>>8625899

>>8625776

Does this just not work on reForge? OOTL

Anonymous

6/13/2025, 9:15:11 PM

No.8625899

[Report]

>>8625931

Anonymous

6/13/2025, 9:27:24 PM

No.8625909

[Report]

>>8625911

is there a way to control character insets reliably with lora/tag? stuff like the shape of the border, background of the inset, forcing them to not be touching the edge of the canvas, whether it has a speech bubble tail.

Anonymous

6/13/2025, 9:30:21 PM

No.8625911

[Report]

>>8625909

not really, your best option as always is to doodle around and then inpaint to blend it into your gen

Anonymous

6/13/2025, 9:34:09 PM

No.8625915

[Report]

>>8625925

>>8625850

i'm still testing things around but it's not looking good on my end, out of 6 style mixes so far, only 1 looks okay and that's because that style is way too minimalist overall, pic and catbox not related

>https://files.catbox.moe/8mwdc4.png

>>8625915

>sho \(sho lwlw\)

>ningen mame

These were not present in the dataset at all, so that's about to be expected. Try using the lora extract on top of your favorite shitmix or even base noob (or you can even extract the difference and merge it to a model yourself) which should tamper with styles far less than the genning on the actual trained checkpoint while still keeping 1536x res.

Anonymous

6/13/2025, 9:49:15 PM

No.8625930

[Report]

>>8625963

>>8625925

>Try using the lora extract on top of your favorite shitmix or even base noob

Hmm alright, I'll do that

Anonymous

6/13/2025, 9:49:22 PM

No.8625931

[Report]

>>8625951

>>8625899

I guess it kinda helps prevent anatomy melties but then it melts the styles

https://files.catbox.moe/fqvjgf.png

I'd rather just gacha it

Anonymous

6/13/2025, 9:51:30 PM

No.8625938

[Report]

>>8625941

>>8625925

that cock? mine.

Anonymous

6/13/2025, 9:52:34 PM

No.8625941

[Report]

>>8625944

>>8625938

It's all yours my friend.

Anonymous

6/13/2025, 9:53:20 PM

No.8625944

[Report]

Anonymous

6/13/2025, 9:53:21 PM

No.8625945

[Report]

>>8625951

>>8625925

>Try using the lora extract on top of your favorite shitmix or even base noob

Ok yeah that's definitely more doable

https://files.catbox.moe/iyld8p.png

Anonymous

6/13/2025, 9:56:21 PM

No.8625951

[Report]

>>8625980

>>8625931

>>8625945

>1040x1520

You know that's way too small a resolution for a 1536x base res checkpoint, right? You won't see much of an effect and it may even look worse than it should (think genning at 768x768 on noob). Use a rule of thumb:

height = 1536 * 1536 / desired width

width = 1536 * 1536 / desired height
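The rule of thumb above as a quick helper. Snapping the result to a multiple of 64 is an extra assumption for convenience, not part of the rule as posted.

```python
def matching_dimension(desired: int, base: int = 1536, multiple: int = 64) -> int:
    """Other side length that keeps the pixel count near base * base."""
    raw = base * base / desired
    # Snap to the nearest multiple (assumption: most UIs want sizes like this).
    return max(multiple, round(raw / multiple) * multiple)


if __name__ == "__main__":
    for width in (1152, 1280, 1536):
        print(width, "x", matching_dimension(width))
```

For a width of 1152 this gives 2048, which matches the 1152x2048 gens posted earlier.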

Anonymous

6/13/2025, 9:58:32 PM

No.8625954

[Report]

Anonymous

6/13/2025, 10:23:01 PM

No.8625963

[Report]

>>8625976

>>8626138

>>8625925

>>8625930

Yeah using the lora extract on my beloved 102d custom is way better than using that model itself

The gens still need some inpaint here and there but genning on a higher resolution works very well

May I know what kind of black magic is this?

Anonymous

6/13/2025, 10:25:53 PM

No.8625966

[Report]

>>8625776

Combining this with kohya deepshrink seems to make "raw" genning at 2048x2048 reasonable anatomy wise

Anonymous

6/13/2025, 10:38:51 PM

No.8625976

[Report]

>>8625963

>May I know what kind of black magic is this?

copier lora effect. ztsnr plays some role 100%, would be interesting to see a comparison to illustrious at 1536x

Anonymous

6/13/2025, 10:40:23 PM

No.8625977

[Report]

>>8625992

>>8625413

Looks really promising. Would appreciate you sharing the sd-scripts modifications if they're simple enough (or just a few pointers even) so I dont have to vibe code shit with claude.

Anonymous

6/13/2025, 10:46:40 PM

No.8625980

[Report]

>>8625982

>>8625984

Anonymous

6/13/2025, 10:48:19 PM

No.8625982

[Report]

>>8625980

102d my beloved...

Anonymous

6/13/2025, 10:49:21 PM

No.8625984

[Report]

>>8626011

>>8625980

wait shit i applied the lora and the model mea culpa

still though, agree with anon that it's better as the lora than the checkpoint

without the errant lora

https://files.catbox.moe/tl4uzw.png

Anonymous

6/13/2025, 10:58:09 PM

No.8625991

[Report]

>>8625999

>>8626000

>>8625413

Is that only usable with the Cumfy node for now? Minimal difference on forge. Also fucking hell, it really does make you think about how 90% improvement is hindered by people just not really knowing what they're doing if some random anon can bake this and have it work.

Anonymous

6/13/2025, 10:58:16 PM

No.8625992

[Report]

>>8626001

>>8625977

it may not be the most elegant way but here:

https://pastes.dev/8FLPusLmTg

also the weights are in diffusers format, so create a folder for the vae and rename the model to diffusion_pytorch_model.safetensors and put the config.json from sdxl vae in the folder you created

https://huggingface.co/stabilityai/sdxl-vae/blob/main/config.json

load the vae with --vae the_folder_you_put_it_in

also if you have cache_latents_to_disk enabled and there are already cached latents in the folder, it wont check them and will use the old ones, so either delete the npz files in ur dataset folder or use just cache_latents
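The folder setup and cache cleanup described above could be scripted roughly like this. It is only a sketch: the paths in the usage note are placeholders, and the second function deletes every .npz under the dataset folder, so point it at the right place.

```python
import shutil
from pathlib import Path


def prepare_vae_folder(weights_file, config_file, vae_dir) -> Path:
    """Lay the vae out in diffusers folder format: renamed weights plus config.json."""
    vae_dir = Path(vae_dir)
    vae_dir.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(weights_file, vae_dir / "diffusion_pytorch_model.safetensors")
    shutil.copyfile(config_file, vae_dir / "config.json")
    return vae_dir


def clear_cached_latents(dataset_dir) -> list:
    """Delete stale cached latents (*.npz) so they get re-encoded with the new vae."""
    removed = []
    for npz in sorted(Path(dataset_dir).rglob("*.npz")):
        npz.unlink()
        removed.append(npz)
    return removed
```

Usage would be something like prepare_vae_folder("modded_vae.safetensors", "config.json", "vae_modded") followed by clear_cached_latents("my_dataset"), then passing --vae vae_modded to the trainer. All three names here are hypothetical.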

Anonymous

6/13/2025, 11:00:23 PM

No.8625994

[Report]

uv me beloved

Anonymous

6/13/2025, 11:07:34 PM

No.8625999

[Report]

>>8625991

Ok, actually, how are you supposed to load that node? I don't get it.

Anonymous

6/13/2025, 11:07:50 PM

No.8626000

[Report]

>>8626005

>>8625991

its just made as a demonstration for people that might be interested in training with it for now, the example isnt made by genning with it, but purely by encoding and then decoding an image

it is NOT free lunch, its just a way to upgrade sdxl without retraining it completely for 16ch vae, but sdxl is still going to need to have someone to finetune it, it WILL use more vram and be slower during both training and genning, though less than if you were to gen at a high resolution (there is a HUGE amount of vram used during vae decoding depending on the final output res)

the encoded latents are flux level large and even less efficient

Anonymous

6/13/2025, 11:08:51 PM

No.8626001

[Report]

>>8625992

Thanks anon, my endless list of random shit to test grows.

Anonymous

6/13/2025, 11:10:11 PM

No.8626002

[Report]

>>8626227

Anonymous

6/13/2025, 11:12:30 PM

No.8626005

[Report]

>>8626017

>>8626000

>the encoded latents are flux level large and even less efficient

this is the problem right there, it's 4x more pixels to train, and you probably can't even do a proper unet finetune on consumer hardware

Anonymous

6/13/2025, 11:14:41 PM

No.8626008

[Report]

>>8626012

Anonymous

6/13/2025, 11:15:43 PM

No.8626011

[Report]

>>8626096

>>8625984

It's still quite strange that noob simply cannot handle 1girl, standing. Wtf happened?

Anonymous

6/13/2025, 11:17:17 PM

No.8626012

[Report]

>>8626020

>>8626008

Umm, sweaty? Tentacles are /d/

Anonymous

6/13/2025, 11:18:29 PM

No.8626015

[Report]

>94 seconds

Anonymous

6/13/2025, 11:20:36 PM

No.8626017

[Report]

>>8626100

>>8626005

i agree, though the training shouldnt be very extensive, since hopefully it should be """just""" getting sdxl used to genning at higher (latent) resolutions with the base knowledge already there

Anonymous

6/13/2025, 11:20:52 PM

No.8626018

[Report]

lil bro is fighting ghosts again...

Anonymous

6/13/2025, 11:21:22 PM

No.8626020

[Report]

Anonymous

6/13/2025, 11:25:42 PM

No.8626022

[Report]

>download comfyui

>unexplained schizoshit

>delete comfyui

Anonymous

6/13/2025, 11:29:59 PM

No.8626034

[Report]

don't forget to make your reddit post about it bro

Anonymous

6/13/2025, 11:43:35 PM

No.8626054

[Report]

>>8626067

I have a plan, but the overtime im currently working right now prevents me from doing it. it's intense and there's just too much on my plate physically until i can finally go back to my regularly scheduled shitposting, editing, ai generating, sauce making disaster of a life before that sudden train wrec-ah yeah im busy as hell for a few more days.

I do check the main boards for more of your images from time to time, as it really is something i enjoy collecting and looking at. so much text to sift through unfortunately.

i do have one request and i was hoping you could uh, maybe gen your miqo like as rebecca from that cyberpunk anime if you can? get the general outfit down for that, maybe that will inspire me to do more stuff once im done with the disaster going on in my life right now. i found rebecca's design to be quite nice.

saddened that i can't provide a pic, it feels wrong to not be able to share an image in your presence. I have lots of draws and stuff i "could" share but i am not confident enough in my skills or time available to me to be able to follow up on such things yet...................

Anonymous

6/13/2025, 11:45:40 PM

No.8626058

[Report]

>>8626063

holy, someone really needs his meds

Anonymous

6/13/2025, 11:47:42 PM

No.8626063

[Report]

I tried warning you guys months ago. Moderation is very anti-ai, this is why they ban everything you like and keep everything you hate. /e/ has been hit by a blatant spambot for a while now with nothing done about it, those who report it get banned.

>>8626058

Anonymous

6/13/2025, 11:49:24 PM

No.8626067

[Report]

>>8626073

>>8626054

is this a copy pasta from treechan from the miqo thread.....

Anonymous

6/13/2025, 11:51:35 PM

No.8626073

[Report]

>>8626067

Ohhhhhhhh it is

Anonymous

6/13/2025, 11:54:26 PM

No.8626075

[Report]

Oopsie :)

Anonymous

6/14/2025, 12:00:48 AM

No.8626080

[Report]

>>8626082

>be newfag

>see random looking off-topic posts I don't understand

>just continue on with life

Anonymous

6/14/2025, 12:05:58 AM

No.8626082

[Report]

>>8626080

Based. This is the correct way to browse 4plebs.

Anonymous

6/14/2025, 12:42:58 AM

No.8626096

[Report]

>>8626011

>Wtf happened?

1024x1024 train resolution

Anonymous

6/14/2025, 12:46:49 AM

No.8626100

[Report]

>>8626017

>"""just"""

there's actually a lot of hires knowledge missing, textures are smudgy, eyes, film grain, etc etc, the model should be pretrained at that reso tbqh. similar story with vpred and ztsnr, it works on paper but when you actually try to train it...

>ai is trash

Meanwhile im getting all i can imagine

>>8626117

this looks terrible like all ai videos outside of google veo

Anonymous

6/14/2025, 1:12:26 AM

No.8626119

[Report]

>>8626125

>>8626117

Is this huanyuan or whatever?

Anonymous

6/14/2025, 1:13:27 AM

No.8626121

[Report]

>>8626118

>google veo

That shit looks pretty bad too though.

Anonymous

6/14/2025, 1:15:00 AM

No.8626122

[Report]

who is lil bud fighting with?

Anonymous

6/14/2025, 1:16:33 AM

No.8626124

[Report]

:skull:

>>8626119

This one is Wan Vace, i'm still exploring it, there is so much stuff to try with it.

Anonymous

6/14/2025, 1:18:04 AM

No.8626127

[Report]

>>8626125

Nice. I thought wan couldn't do anime at all. I'm too busy drinking snake oil here to try it though.

Anonymous

6/14/2025, 1:18:27 AM

No.8626128

[Report]

>>8626118

An amateur of DEi gens trully an /h/ oldfag

Anonymous

6/14/2025, 1:27:02 AM

No.8626137

[Report]

>>8626118

It's funny you mention that. I was looking at some live2d animations just a second ago and there is some really bad stuff out there, honestly kind of worse than what he genned. People forget that there's a sea of garbage AI or not, and in the end AI is not the worst enemy, it's the people using it and whether they have some sense not to post garbage onto the internet.

Anonymous

6/14/2025, 1:28:09 AM

No.8626138

[Report]

>>8625963

Now that's a Comfy Background.

Anonymous

6/14/2025, 1:37:33 AM

No.8626141

[Report]

>>8626117

>>8626125

Mind sharing a workflow, even if it's borked? Maybe it's time to retry videogen

Anonymous

6/14/2025, 3:23:01 AM

No.8626190

[Report]

>>8626204

Anonymous

6/14/2025, 3:35:19 AM

No.8626198

[Report]

some sisterly love for tonight

Anonymous

6/14/2025, 4:03:39 AM

No.8626204

[Report]

>>8626190

Nice thigh gap.

Anonymous

6/14/2025, 5:03:19 AM

No.8626219

[Report]

>>8626235

Reforge just started throwing random errors every gen but I haven't pulled in a while...is this it?

Anonymous

6/14/2025, 5:12:40 AM

No.8626227

[Report]

>>8626002

I love her expression

>I was here all dressed up like a whore so I can get some shikikan dick.

>He's busy fucking Taihou. TAIHOU

>I'll have to satisfy myself with Takao's dildo.

Anonymous

6/14/2025, 5:13:37 AM

No.8626228

[Report]

>>8626390

r34 comment section has breached containment

Holy shit I just genned at 2048 res using kohya + ft extract and it just werked as if it was a native 2048 model, even with my real world 400 token prompt, with other loras applied, with negpip, with tons of prompt editing hackery.

LFG TO THE MOON BRAHS

Anonymous

6/14/2025, 5:29:16 AM

No.8626235

[Report]

>>8626290

>>8626219

have you tried refreshing the webui

you probably just have some random option toggled or forgot you have s/r x/y plots on and no longer have what it's searching for in prompt and it's breaking your shit.

Anonymous

6/14/2025, 5:31:57 AM

No.8626238

[Report]

>>8626230

>I just [snake oil]

Anonymous

6/14/2025, 5:32:27 AM

No.8626239

[Report]

>>8626240

>>8626230

Yeah, I'm really liking genning at a higher base resolution, it's quite handy

Now if we only had a proper smea implementation on local...

>>8626239

>Now if we only had a proper smea implementation on local...

The SMEA implementation on local is the proper one, NAI came out and said that they fucked it up on their own but it still made their model produce the kind of very awa crap asians love so they kept it

Anonymous

6/14/2025, 5:40:04 AM

No.8626242

[Report]

>>8626240

>The SMEA implementation on local is the proper one

LOL

Anonymous

6/14/2025, 5:40:30 AM

No.8626243

[Report]

>>8626240

ggs then, I need another workflow to really take advantage of this hack, I'm not totally happy with my final results

Hopefully someone writes an easy rentry for brainlets. I don't yet see the benefit.

Anonymous

6/14/2025, 5:44:48 AM

No.8626246

[Report]

>>8626244

There isn't any, it's just more pointless tinkertrooning by cumfy autists

Anonymous

6/14/2025, 6:16:29 AM

No.8626267

[Report]

>>8626277

cumfy bwos, our genuine response?

Anonymous

6/14/2025, 6:22:59 AM

No.8626277

[Report]

>>8626230

Hmm, ok so maybe I spoke a bit too soon. I just tested it with background/scenery-focused prompts and the image content is quite a bit different from what the model normally generates.

Maybe this isn't suitable for all prompts, art styles, and loras, though I'm surprised it worked so well with my first prompt.

>>8626267

What? I'm not using the new vae that was posted, this is literally just a lora you can load up in reforge.

Anonymous

6/14/2025, 6:25:01 AM

No.8626280

[Report]

recommended training steps for this simple design? its a vrc avatar so mostly 3d data

Anonymous

6/14/2025, 6:36:37 AM

No.8626290

[Report]

>>8626235

I did have x/y plots enabled but that wasn't it. It was just randomly crashing in the middle of doing gens. I cleared the cache and it fixed itself it seems, I guess something there was causing the error.

>>8626244

The only real benefit is to completely skip the upscale step

This is what I wanted RAUNet to be, a way to do extreme resolutions directly while having all the ""diversity"" and ""creativity"" of a regular base gen so I am very happy with it

Anonymous

6/14/2025, 6:56:07 AM

No.8626301

[Report]

>>8626436

Yeah, i can't gen with this vae without --highvram. and i can't do that, cause i'm a 8gb vramlet.

>1000's of gooner artists

>stick to about 10-15 that I rotate and mix about in my mixes

>enough is enough!

>spend 30 minutes browsing artists in the booru

>note down a few I like

>go full autismo mixing and weighing

>smile and optimism: restored

Ah. You were at my side all along..

https://files.catbox.moe/s38kxh.png

Anonymous

6/14/2025, 9:02:00 AM

No.8626374

[Report]

>>8626815

>>8626291

What are your settings? I'm getting good gens (generally the same composition, colors, coherency, etc.) with some prompts but very much not others, and it also varies with block size and downscale factor, where some prompts work better with certain block size and downscale factor combinations, but some prompts just never achieve the same quality/coherency as the vanilla setup with no lora. But I haven't messed with the other settings so maybe those help?

Anonymous

6/14/2025, 9:08:32 AM

No.8626378

[Report]

Anonymous

6/14/2025, 9:35:00 AM

No.8626390

[Report]

>>8626228

limitless girl looking for a limitless femboy to ruin :333

>>8626342

for me it's testing 20k artists and realizing how many of them are unremarkable

Anonymous

6/14/2025, 9:49:52 AM

No.8626404

[Report]

>>8625744

funny that right after i complained about upscaling mangling belly buttons again a new snake oil to tackle it comes out

im a vramlet so im just using the lora on an upscale pass instead of genning straight to higher res but it seems to work

https://files.catbox.moe/w08kbf.png

https://files.catbox.moe/empa0r.png

Anonymous

6/14/2025, 10:17:03 AM

No.8626422

[Report]

>>8626423

>>8626481

>>8626392

80% of danbooru artists are completely interchangable style wise, and then you find some guy who has some amazing unique style and he has 3 posts on danbooru and a twitter that is just him uploading his gacha pulls

Anonymous

6/14/2025, 10:19:46 AM

No.8626423

[Report]

>>8626422

I love the ones where you see some damn amazing pic and it's either one of three of his on the whole internet or all his other pics don't look as good.

Anonymous

6/14/2025, 10:37:09 AM

No.8626435

[Report]

>>8626437

Anonymous

6/14/2025, 10:38:18 AM

No.8626436

[Report]

>>8626301

Buy used 3090 if you can, it's super cheap used right now.

Anonymous

6/14/2025, 10:38:48 AM

No.8626437

[Report]

>>8626438

>>8626435

nice composition on this one

care to box it up?

Anonymous

6/14/2025, 10:43:14 AM

No.8626438

[Report]

>>8626439

>>8626437

https://files.catbox.moe/4kbhpa.png

inpainting and color correction img2img passes were used later

Anonymous

6/14/2025, 10:44:14 AM

No.8626439

[Report]

>>8626438

cool. thanks bwo

Anonymous

6/14/2025, 10:52:29 AM

No.8626444

[Report]

>>8626460

>>8626480

>>8626291

>The only real benefit is to completely skip the upscale step

But you're just losing the advantages of the second pass, which are mostly adding a lot of detail and removing leftover noise. Ideally, a model trained like that should never break anything in either pass and should give better consistency at higher denoise levels in the second pass, unlike some models that double belly buttons or something like that only with hiresfix, especially at landscape reso. Have you tried to do it like 1216x832 - upscale?

Anonymous

6/14/2025, 11:12:54 AM

No.8626460

[Report]

>>8626469

>>8626514

>>8626444

I mean it's not like "second pass" is some kind of magic, you're just generating the same model with a denoise at a higher resolution. A better base res gets you 100% of the potential instead of 10-50% or whatever of the denoise amount.

Of course assuming it works well, which is somewhat debatable.

Anonymous

6/14/2025, 11:40:25 AM

No.8626469

[Report]

>>8626460

I would also like to add that denoising by 0.3 or whatever doesn't actually mean you are changing that many pixels. The RMSE between the base upscale and denoised picture with 0.3 denoise is like 95% similarity, 93% for 0.5.
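One way to get a number like that, as a sketch. The post doesn't say how the percentage was derived, so treating similarity as 1 - RMSE / 255 over flattened 8-bit pixel values is an assumption.

```python
import math


def rmse(a, b) -> float:
    """Root mean squared error between two equal-length sequences of pixel values."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def rmse_similarity(a, b, max_value: float = 255.0) -> float:
    """1.0 for identical images, 0.0 when every pixel differs by max_value."""
    return 1.0 - rmse(a, b) / max_value


if __name__ == "__main__":
    base = [10, 200, 30, 120]      # toy "pixels", not real image data
    denoised = [12, 190, 35, 118]
    print(rmse_similarity(base, denoised))
```

For real images you would flatten both pictures to the same length first, for example every channel value of the upscaled base and of the denoised result.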

Anonymous

6/14/2025, 12:07:05 PM

No.8626480

[Report]

>>8626514

>>8626444

>and remove leftover noise

There's no noise in a fully denoised picture, anon.

Anonymous

6/14/2025, 12:09:56 PM

No.8626481

[Report]

>>8626392

>>8626422

Yeah I did run into a lot of high quantity artists that shared similar styles. To no surprise, a focus on gacha sluts. Try them on a model and if you're not inputting the artists yourself, you'd swear your results were all the same. But it's fun throwing in the few artists that do stand out into a mix and seeing what happens.

https://files.catbox.moe/sa409h.png

Anonymous

6/14/2025, 12:38:26 PM

No.8626502

[Report]

>>8626756

What Cyber-Wifu11 using? Can't replicate his style

Anonymous

6/14/2025, 1:08:02 PM

No.8626514

[Report]

>>8626516

>>8626460

>10-50%

Yes, the limitation of a base model is why denoise on the second pass was always low. If multires model works really well, there should be some boost for that, allowing you to raise it higher than 0.5 while preserving consistency while still getting details, just like controlnet or other tools did

>>8626480

Sometimes there is, despite a fully denoised first pass, but rarely after the second. But yeah, not very relevant for the latest models

Anonymous

6/14/2025, 1:15:40 PM

No.8626516

[Report]

>>8626561

>>8626514

>Sometimes there is

No, there is no noise by definition retard. Here's what the image would look like at 0.09 (de)noise.

I'm tired of having faded colors in my generated pics, is there anything I can do to get nice bright colors (not fried/saturated)?

Anonymous

6/14/2025, 1:38:18 PM

No.8626524

[Report]

>>8626525

>>8626518

download photoshop

Anonymous

6/14/2025, 1:39:21 PM

No.8626525

[Report]

>>8626527

Anonymous

6/14/2025, 1:41:15 PM

No.8626527

[Report]

>>8626525

/hdg/ is on the other tab bwo

Anonymous

6/14/2025, 2:19:47 PM

No.8626561

[Report]

>>8626755

>>8626516

It's not as pronounced as stopping ~3 steps earlier out of 28. Did you really never get outputs with some noisy parts somewhere in the image?

Does anyone know some cute style (artists or loras) I can use to generate petite/slim girls? (not lolis!)

Anonymous

6/14/2025, 2:41:39 PM

No.8626572

[Report]

>>8626578

Anonymous

6/14/2025, 2:42:12 PM

No.8626575

[Report]

I want Pekomama.

Anonymous

6/14/2025, 2:43:45 PM

No.8626576

[Report]

>>8625413

There is something wrong with the comfy node. It downscales the output image by 2, from 1024 to 512, for some reason, and the tile decode node is just completely fucked when using it

Anonymous

6/14/2025, 2:46:11 PM

No.8626579

[Report]

>>8626581

>>8626578

Are you sure you are not gay?

Anonymous

6/14/2025, 2:46:30 PM

No.8626580

[Report]

>>8626581

>>8626578

huh, so you're fine with everything else?

Anonymous

6/14/2025, 2:48:34 PM

No.8626581

[Report]

>>8626579

Yes, I'm sure I like my girls girly and not manly.

>>8626580

You mean realistic hairy genitalia and such? Whatever, that stuff can have it's place, but I would never tolerate those faces.

>>8626573

That's literally loli... No juvenile stuff please

Anonymous

6/14/2025, 2:51:23 PM

No.8626585

[Report]

Anonymous

6/14/2025, 2:52:19 PM

No.8626586

[Report]

>>8626578

yeah i was trolling. try imo-norio or soso

Anonymous

6/14/2025, 2:52:20 PM

No.8626587

[Report]

I unironically like laserflip, he's a staple of grosscore

Anonymous

6/14/2025, 2:54:11 PM

No.8626590

[Report]

Anonymous

6/14/2025, 2:56:28 PM

No.8626594

[Report]

Anonymous

6/14/2025, 2:58:20 PM

No.8626595

[Report]

Does he know?

Anonymous

6/14/2025, 3:01:20 PM

No.8626597

[Report]

>>8626605

>google fellatrix

>get some obscure 2005-core Portuguese trash metal album

cool

Anonymous

6/14/2025, 3:12:10 PM

No.8626605

[Report]

>>8626607

>>8626597

if you don't know hentai pillars, you don't belong here

Anonymous

6/14/2025, 3:14:31 PM

No.8626607

[Report]

>>8626609

>>8626605

for me it's aaaninja

Anonymous

6/14/2025, 3:15:32 PM

No.8626609

[Report]

>>8626607

i was actually thinking of edithemad but he also fits

Anonymous

6/14/2025, 3:19:53 PM

No.8626615

[Report]

>>8626616

Is there a way to do hires without messing anything up? It feels like no matter how I dial the settings, things like straight lines will become wobbly and tons of details will be erased, while other unnecessary and nonsensical details will be added.

Anonymous

6/14/2025, 3:21:01 PM

No.8626616

[Report]

>>8626621

Anonymous

6/14/2025, 3:23:10 PM

No.8626618

[Report]

>Gonna drink from your usual bottle sir?

https://files.catbox.moe/zlkovc.png

Anonymous

6/14/2025, 3:24:14 PM

No.8626620

[Report]

>>8626631

>gen at 1536x1536 without the lora just out of curiosity

>it more or less just works with the particular pose i tried

These newer models are really stable compared to what we had before, if I tried to gen at 1.5x on 1.5 it'd just melt into a blob pancake all over the picture

Granted if you try to do more complicated poses you still get fucked up shit but it's still interesting