Anonymous

7/6/2025, 11:19:46 PM

No.8653057

[Report]

>>8654029

/hgg/ Hentai Generation General #012

Anonymous

7/6/2025, 11:21:51 PM

No.8653062

[Report]

>>8653048

>1c is trained on the main cluster

>uploaded 7 months ago

They didn't have compute then afaik, one of euge's v-pred experiments?

Anonymous

7/6/2025, 11:22:33 PM

No.8653063

[Report]

>dirty dozen

Anonymous

7/7/2025, 12:15:12 AM

No.8653101

[Report]

>>8653124

>>8650468

What do you use to merge multiple custom masks like that? I think Attention Couple (PPM) node works but I'm not entirely sure if it's the best one.

Comfy Couple is just for 2 rectangle areas, right?

>>8653101

Conditioning (Set Mask), it's a built-in node. It takes the conditioning for one area, and a mask telling it where the area is.

If you're also using controlnet then the whole mess together would look like this:

https://litter.catbox.moe/jnsdso5o9352yq46.png

I use the mask editor built into Load Image node, you can just right-click it with an image loaded.
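A rough sketch of the wiring described above, written as a ComfyUI API-format graph fragment built in Python. The node ids, prompts and the helper name `build_masked_regions` are made up for illustration; the class names and input names are the built-in ones as far as I know, so check them against your install before relying on this.

```python
def build_masked_regions(clip_ref, mask_refs, prompts):
    """Build one CLIPTextEncode + ConditioningSetMask pair per region and
    chain the results with ConditioningCombine.

    clip_ref  -- [node_id, output_index] of a CLIP output (hypothetical ids)
    mask_refs -- list of [node_id, output_index] pointing at MASK outputs
    prompts   -- one prompt string per mask
    """
    nodes = {}
    combined = None
    for i, (mask_ref, text) in enumerate(zip(mask_refs, prompts)):
        enc_id, set_id = f"enc{i}", f"mask{i}"
        nodes[enc_id] = {
            "class_type": "CLIPTextEncode",
            "inputs": {"text": text, "clip": clip_ref},
        }
        nodes[set_id] = {
            "class_type": "ConditioningSetMask",
            "inputs": {
                "conditioning": [enc_id, 0],
                "mask": mask_ref,
                "strength": 1.0,
                "set_cond_area": "default",
            },
        }
        if combined is None:
            combined = [set_id, 0]
        else:
            comb_id = f"combine{i}"
            nodes[comb_id] = {
                "class_type": "ConditioningCombine",
                "inputs": {"conditioning_1": combined,
                           "conditioning_2": [set_id, 0]},
            }
            combined = [comb_id, 0]
    return nodes, combined


# Two regions, masks taken from the MASK output (index 1) of two Load Image nodes.
nodes, positive = build_masked_regions(
    ["4", 1], [["10", 1], ["11", 1]], ["1girl, red hair", "1girl, blue hair"]
)
```

The returned `positive` reference is what would go into the sampler's positive input (or into a couple node first, if you use one).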

What are the /h/ approved shitmixes again? it's been a while since I've been here and I'm getting into genning again.

Anonymous

7/7/2025, 12:42:42 AM

No.8653127

[Report]

>>8653130

>>8653125

how

how this fucking question gets asked EVERY SINGLE THREAD

HOW

Anonymous

7/7/2025, 12:47:51 AM

No.8653129

[Report]

>>8653152

>>8653124

I was interested in what you are using after combining the conditionings. Turns out you are using Attention Couple, but when I'm trying to install missing nodes it installs Comfy Couple instead and your node is still missing. They have different inputs, Comfy Couple overrides the areas I set back to rectangles it looks like.

Anonymous

7/7/2025, 12:48:58 AM

No.8653130

[Report]

>>8653135

>>8653126

Based Wai-KING.

>>8653127

Because the OPs are useless?

Anonymous

7/7/2025, 12:56:38 AM

No.8653133

[Report]

>>8653148

>>8653124

Just want to make sure since it doesn't install automatically for me. Are you using this node atm?

https://github.com/laksjdjf/attention-couple-ComfyUI

Looks like that one got archived and is now part of some large node compilation.

Anonymous

7/7/2025, 12:59:09 AM

No.8653134

[Report]

Anonymous

7/7/2025, 12:59:48 AM

No.8653135

[Report]

>>8653130

>Because the OPs are useless?

The autistic obscurantist mind cannot grasp this simple concept

Anonymous

7/7/2025, 1:04:51 AM

No.8653142

[Report]

Anonymous

7/7/2025, 1:14:35 AM

No.8653145

[Report]

>>8653256

Anonymous

7/7/2025, 1:14:44 AM

No.8653148

[Report]

>>8653156

>>8653133

Yes. It's deprecated now in favor of

https://github.com/laksjdjf/cgem156-ComfyUI but I couldn't figure out what the "base mask" is about, so I kept using the old one. Not like it needs new features.

Anonymous

7/7/2025, 1:17:36 AM

No.8653152

[Report]

>>8653156

>>8653129

The node is optional btw, if you skip it you'll be using latent couple. Which is a lot more strict, attention mode prefers making a consistent image over sticking to the defined areas.
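To make the "strict" part concrete, here is a toy sketch of the latent couple idea: each region's prompt produces its own noise prediction, and the predictions are blended per pixel by the masks. This is a conceptual illustration with a hypothetical function name and plain lists, not ComfyUI's actual code. Attention mode instead applies the masks inside cross-attention, which is why it can trade region accuracy for a more coherent image.

```python
def blend_region_predictions(predictions, masks):
    """Blend per-region predictions with masks, pixel by pixel.

    predictions -- list of 2D lists, one prediction per region
    masks       -- list of 2D lists with values in [0, 1], same shape
    """
    height, width = len(masks[0]), len(masks[0][0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = sum(m[y][x] for m in masks)
            if total == 0:
                continue  # no region claims this pixel; stays 0.0 in this toy
            weighted = sum(p[y][x] * m[y][x] for p, m in zip(predictions, masks))
            out[y][x] = weighted / total
    return out


# Left pixel belongs to region A, right pixel to region B: no mixing at all.
left, right = [[1.0, 1.0]], [[5.0, 5.0]]
blended = blend_region_predictions([left, right], [[[1, 0]], [[0, 1]]])
```

With hard masks each pixel only ever sees its own region's prediction, which matches the "sticks to the defined areas" behaviour described above.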

Anonymous

7/7/2025, 1:32:15 AM

No.8653156

[Report]

>>8653148

Thanks, I'll test this one against PPM version I got working.

>>8653152

Yeah I figured. It does bleed styles more with attention thingie, but it probably works better this way for what I'm doing. This shit really blows up my workflow size, holy shit.

Anonymous

7/7/2025, 4:43:35 AM

No.8653256

[Report]

>>8653145

Nice. Got a box?

Please, does anyone know what model and LoRA this guy is using? I've wanted to copy this exact style for quite some time now but I can't get it right.

https://x.com/OnlyCakez1

https://www.pixiv.net/en/users/113960180

Anonymous

7/7/2025, 5:19:26 AM

No.8653275

[Report]

>>8653360

>>8653271

Just make a lora

Anonymous

7/7/2025, 6:41:14 AM

No.8653325

[Report]

>>8653271

nyalia and afrobull

Anonymous

7/7/2025, 6:53:56 AM

No.8653342

[Report]

>>8653556

>>8653271

I wonder why these requests are always for the grossest grifter styles possible.

Anonymous

7/7/2025, 7:08:47 AM

No.8653360

[Report]

Anonymous

7/7/2025, 9:14:28 AM

No.8653444

[Report]

>>8653445

Anonymous

7/7/2025, 9:19:21 AM

No.8653445

[Report]

>>8653444

love this lil nigga like you wouldn't believe

How should I approach training a lora for a character that noob vpred already knows but not really well? Should I train the TE and use the original tag or make a separate one? What dims/alpha should I set?

Anonymous

7/7/2025, 10:39:18 AM

No.8653477

[Report]

>>8653452

no te, use the original tag, 8/4

Anonymous

7/7/2025, 12:22:26 PM

No.8653521

[Report]

>>8653452

I've only done this for styles, when I reused the existing tag I only needed like a quarter of my usual steps. Don't think you need to adjust your config in any way, and it's always better to train more and save the earlier epochs too in case they're enough.

>>8653342

Because they're the most popular?? Face it, your aesthetic "taste" is clearly in the minority.

Anonymous

7/7/2025, 1:11:33 PM

No.8653561

[Report]

>>8653556

Which is why these threads are so slow. I think a better question is why ask for those styles here? The people who would know are those who enjoy them.

Anonymous

7/7/2025, 3:06:16 PM

No.8653613

[Report]

>>8653556

I mean that slopper isn't even popular

Anonymous

7/7/2025, 3:35:03 PM

No.8653633

[Report]

Anonymous

7/7/2025, 3:36:52 PM

No.8653635

[Report]

>>8653637

>6 fingers

Anonymous

7/7/2025, 3:38:34 PM

No.8653637

[Report]

Anonymous

7/7/2025, 3:38:54 PM

No.8653638

[Report]

>nyogen

Anonymous

7/7/2025, 3:54:02 PM

No.8653642

[Report]

>>8653644

Post your fingers if they are so great.

Anonymous

7/7/2025, 4:02:35 PM

No.8653644

[Report]

>>8653642

but my fingers are /aco/

Anonymous

7/7/2025, 6:08:04 PM

No.8653748

[Report]

>>8653691

cute pink drool

Anonymous

7/7/2025, 6:45:06 PM

No.8653770

[Report]

Move along friend, this train car's full

Anonymous

7/7/2025, 7:12:43 PM

No.8653780

[Report]

>>8653796

Anonymous

7/7/2025, 7:51:44 PM

No.8653796

[Report]

>>8653801

>>8653780

save some CFG for the rest of us

Anonymous

7/7/2025, 8:06:49 PM

No.8653801

[Report]

Anonymous

7/7/2025, 8:52:12 PM

No.8653839

[Report]

>>8654005

>kagami bday

>no sd on pc

grim

Been genning in my corner since Noobai released, what's new on the block?

Anonymous

7/7/2025, 8:59:55 PM

No.8653852

[Report]

>>8653871

>>8653845

>what's new on the block

For local, nothing.

Anonymous

7/7/2025, 9:14:50 PM

No.8653871

[Report]

>>8653852

Oh well, still happy that I can generate random shit that comes to mind. Has anyone here trained concepts in an eps model? Characters and styles seem to come out okay but concepts refuse to take for me.

Anonymous

7/7/2025, 9:16:44 PM

No.8653873

[Report]

Not really quite the character, but eh, good enough. Anyone got some good configs for characters? I've only got 16 images for this one.

Anonymous

7/7/2025, 9:28:41 PM

No.8653884

[Report]

>>8653879

already posted in thread#10

Anonymous

7/7/2025, 9:44:31 PM

No.8653906

[Report]

>>8653972

>>8653879

Assuming you're talking about making a lora, you can make a character lora with 15 images. Something like 30 is my ideal number but it's by no means a strict rule. Something that worked quite well in the past is to aim for a number of steps between 300 and 400 per epoch. So 16 images x 20 repeats = 320 steps per epoch. Then multiply by your epochs to get to around 2000 steps. So 20 repeats, 7 epochs and 2240 total steps. Batch size of your choosing, you might wanna start with 1 and go from there. Resize all your images so the longest side is 1024. If you're training on a v-pred model, make sure to check the box for it and also "scale v-pred loss". 8/4 Dim/Alpha should be enough for a character lora. Not sure what you use for learning rate, might want to try Prodigy or something similar at a lr of 1.0. Keep Token of 1 if you want a trigger word. Save each epoch and do an xyz grid with prompt s/r to see which one is the closest. Then retrain the lora accordingly, more/less repeats/epochs/batch size/etc. Who's the character btw?
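The arithmetic above is easy to script when you want to try other repeat/epoch combinations. A minimal sketch, assuming one step is one batch; the function names are made up for illustration and are not taken from any trainer.

```python
import math


def lora_step_plan(num_images, repeats, epochs, batch_size=1):
    """Return (steps_per_epoch, total_steps), counting one batch as one step."""
    samples_per_epoch = num_images * repeats
    steps_per_epoch = math.ceil(samples_per_epoch / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


def resize_longest_side(width, height, target=1024):
    """Return new (width, height) with the longest side scaled to `target`."""
    scale = target / max(width, height)
    return round(width * scale), round(height * scale)


# 16 images x 20 repeats, 7 epochs, batch size 1
print(lora_step_plan(16, 20, 7))        # (320, 2240)
print(resize_longest_side(2048, 1536))  # (1024, 768)
```

Note that with a batch size above 1 the step count per epoch drops accordingly, so decide whether your 300 to 400 target is meant in samples or in optimizer steps.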

Anonymous

7/7/2025, 10:41:00 PM

No.8653942

[Report]

Anonymous

7/7/2025, 11:07:25 PM

No.8653963

[Report]

>>8654038

Anonymous

7/7/2025, 11:18:10 PM

No.8653972

[Report]

>>8653988

>>8653906

2240 steps seems insane, I did prodigy LoHa (4/4 + 4 Conv) and the Lora fried hard after only 240ish steps (15 epochs). Training on Noob-Vpred1.0

Character is Pure White Demon from succubus prison.

Anonymous

7/7/2025, 11:32:37 PM

No.8653981

[Report]

>>8653994

Are well-defined noses /aco/?

Anonymous

7/7/2025, 11:34:42 PM

No.8653984

[Report]

I blame the anime style hater for all of this

Anonymous

7/7/2025, 11:37:49 PM

No.8653987

[Report]

Pony v7 will save local.

Anonymous

7/7/2025, 11:40:03 PM

No.8653988

[Report]

>>8654002

>>8653972

After 240~steps? That's what sounds insane to me. What's your learning rate like? Prodigy should start at 1 and then adjust itself. Are you using Gradient Checkpointing? With Gradient Accumulation 1. Is SNR Gamma on 8? Not sure what is frying your training, although I haven't touched Loha/LoCon in a while. Can you upload your dataset? Would try a quick 30~minutes training to see what comes out.

Anonymous

7/7/2025, 11:44:33 PM

No.8653994

[Report]

Anonymous

7/7/2025, 11:47:00 PM

No.8653999

[Report]

>>8654080

>asura \(asurauser\)

timeless classic

Anonymous

7/7/2025, 11:50:26 PM

No.8654002

[Report]

>>8654008

>>8653988

Yeah no clue. No checkpointing, no grad accum, SNR gamma 1, but I'll let you check both the dataset and config. Thanks for helping me out anon.

https://files.catbox.moe/6o3x0d.zip

https://files.catbox.moe/lrfpnr.json

>>8654002

Seeing max_train_steps and max_train_epochs at 0, not sure if that's normal. SNR Gamma should be at 8 for anime (and 5 for realistic), or so I read. How many repeats do you have? The folder being named 1_whitedevil tells me one? Are you training on Kohya or? Dataset looks okay, probably gonna add a couple pictures and remove the kimono ones just so it doesn't get confused on the horns. Tags look alright. Anyways, gonna launch a quick training over here, tell you what in 30~minutes.
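For reference, the repeat count is read from the dataset folder name, which follows a `<repeats>_<name>` pattern. A small sketch of that convention with a hypothetical helper, not the trainer's own parsing code:

```python
def parse_dataset_folder(name):
    """Split a '<repeats>_<concept>' folder name into (repeats, concept)."""
    prefix, sep, concept = name.partition("_")
    if not sep or not prefix.isdigit():
        raise ValueError(f"expected '<repeats>_<name>', got {name!r}")
    return int(prefix), concept


print(parse_dataset_folder("1_whitedevil"))   # (1, 'whitedevil')
print(parse_dataset_folder("20_whitedevil"))  # (20, 'whitedevil')
```

So `1_whitedevil` means every image is seen once per epoch, and renaming the folder to `20_whitedevil` would give 20 passes per epoch.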

Anonymous

7/8/2025, 12:15:52 AM

No.8654019

[Report]

Anonymous

7/8/2025, 12:32:44 AM

No.8654029

[Report]

>>8654034

>>8653057 (OP)

so generation = local

diffusion = NAIcucks?

why two generals

Anonymous

7/8/2025, 12:34:05 AM

No.8654031

[Report]

>>8654037

>>8654008

>SNR Gamma should be at 8 for anime (and 5 for realistic), or so I read

where did you read that?

Anonymous

7/8/2025, 12:36:28 AM

No.8654034

[Report]

>>8654029

I was going to tell you but honestly I rather wait for another anon(s) to do it since I don't even post that much anymore

Anonymous

7/8/2025, 12:40:57 AM

No.8654037

[Report]

>>8654047

>>8654031

Been a while but years ago when I didn't have a good enough pc to train on, I used HollowStrawberry's google colab trainer, and in the notes for SNR Gamma that's what it said, I believe that's where I got it from. Never tried with SNR Gamma 1, will do in the future. Training done, only did 1000-ish steps at batch size 3 for the sake of time, gonna try the lora now.

Anonymous

7/8/2025, 12:44:43 AM

No.8654038

[Report]

Anonymous

7/8/2025, 12:44:50 AM

No.8654039

[Report]

>>8654053

>>8654008

I don't use repeats, since it will fuck up random shuffle if I increased batch sizes. I just train for more epochs instead, and I like the epoch = 1 dataset pass. I'm training on Kohya, but every day I get more tempted to switch to easy scripts. Yeah, autotagger gets confused with the horn ornament + tiara and the hime cut with the semi-twintails going on? No clue how to tag that shit, same with the energy/magic shit.

Anonymous

7/8/2025, 12:48:25 AM

No.8654043

[Report]

>>8653126

I'm still using PersonalMerge

Anonymous

7/8/2025, 12:55:20 AM

No.8654047

[Report]

>>8654049

>>8654037

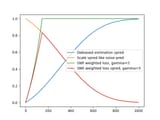

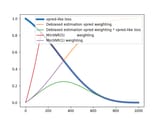

the "best" snr-based timestep weighting scheme you can do in sd-scripts for sdxl vpred is snr / (snr + 1)**2 and you can achieve that if you use 'debiased estimation' and 'scale vpred loss like noise pred' together (green line), without min snr

(well if you don't count the bug in sd-scripts which doesn't let snr reach zero for weighting even with zero terminal snr enabled, any snr-based weighting doesn't make sense for ztsnr)
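A small numeric sketch of the weighting named above, assuming the two options multiply as `1 / (snr + 1)` (debiased estimation on v-pred) and `snr / (snr + 1)` (scaling v-pred loss like noise pred). That split is my reading of the post, and the function names are hypothetical rather than the trainer's.

```python
def debiased_vpred_weight(snr):
    """Assumed debiased-estimation factor for v-prediction."""
    return 1.0 / (snr + 1.0)


def vpred_like_noise_scale(snr):
    """Assumed factor from scaling v-pred loss like noise prediction."""
    return snr / (snr + 1.0)


def combined_weight(snr):
    """Product of the two factors: snr / (snr + 1)**2."""
    return debiased_vpred_weight(snr) * vpred_like_noise_scale(snr)


for snr in (0.01, 0.1, 1.0, 10.0, 100.0):
    print(f"snr={snr:>6}: weight={combined_weight(snr):.4f}")
```

The weight peaks at `snr = 1` and falls off toward both very noisy and nearly clean timesteps, going to zero as snr goes to zero.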

Anonymous

7/8/2025, 1:00:50 AM

No.8654049

[Report]

>>8654047

>'debiased estimation' and 'scale vpred loss like noise pred'

incidentally, you can sort of approximate the effect of this with min snr 1 (purple line)
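To see how close the approximation is, the two curves can be compared after normalising each to its own peak. A sketch assuming min-SNR on v-pred weights the loss as `min(snr, gamma) / (snr + 1)`; that formula and the function names are assumptions for illustration.

```python
def min_snr_vpred_weight(snr, gamma=1.0):
    """Assumed min-SNR-gamma weight for v-prediction."""
    return min(snr, gamma) / (snr + 1.0)


def combined_weight(snr):
    """Debiased estimation + scale-like-noise-pred: snr / (snr + 1)**2."""
    return snr / (snr + 1.0) ** 2


# Both curves peak at snr = 1 (0.5 and 0.25), so normalise by those peaks.
for snr in (0.01, 0.1, 1.0, 10.0, 100.0):
    a = min_snr_vpred_weight(snr) / min_snr_vpred_weight(1.0)
    b = combined_weight(snr) / combined_weight(1.0)
    print(f"snr={snr:>6}: min_snr_1={a:.3f}  combined={b:.3f}")
```

Under these assumptions both curves share the peak at `snr = 1` and the same symmetry in log-SNR, but min snr 1 drops off faster on either side, hence "sort of".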

Anonymous

7/8/2025, 1:07:26 AM

No.8654053

[Report]

>>8654061

>>8654039

>I don't use repeats, since it will fuck up random shuffle if I increased batch sizes.

Do you mean caption shuffle? IIRC, batches can be imprecise because of bucketing (which never happens if you have only one bucket)

>every day I get more tempted to switch to easy scripts.

Would have stayed on Kohya but couldn't get it to work reliably on the new pc, so switched to ez

>autotagger gets confused with the horn ornament + tiara and the hime cut with the semi-twintails going on? No clue how to tag that shit, same with the energy/magic shit.

Yeah no idea either, how do you communicate this is just a different hairstyle from the usual, 2 images isn't enough to have a subfolder, I don't think. The energy and aura seem to have bled into the pics, hopefully there are more varied images for the dataset.

Anyways, this one was 324 steps per epoch (18 repeats of 18 pictures), 3 epochs for a total of 972 steps with Prodigy lr 1, batch size 3, cosine, 8/4 dim/alpha, snr 8. Quite a bit of work still needed, the markings are fucked, the horns are not as they should be and the wings are a nightmare to render properly. As you can see, almost a thousand steps and it didn't fry.

Anonymous

7/8/2025, 1:08:03 AM

No.8654054

[Report]

Anonymous

7/8/2025, 1:09:15 AM

No.8654055

[Report]

Anonymous

7/8/2025, 1:12:06 AM

No.8654058

[Report]

we achieved agi

Anonymous

7/8/2025, 1:14:45 AM

No.8654060

[Report]

>>8654053

Looks alright, thanks for giving it a try. But yeah when I say "fried", I guess I mean more stuff like

>The energy and aura seem to have bled into the pics

>burned in wings even when not prompted for

etc.

>completely burned in style

stylebleed could probably be mitigated by tagging shiki, or doing the fancy copier technique.

I'll try rebaking tomorrow and see what I get, thanks for the input and ideas.

Anonymous

7/8/2025, 1:22:40 AM

No.8654064

[Report]

>>8654067

>>8654061

Not him but you have some pics in the dataset without the wings and aura right?

Anonymous

7/8/2025, 1:23:41 AM

No.8654065

[Report]

>>8654067

>>8654061

I see what you mean, yeah. Haven't looked closely at the dataset but if she doesn't have one, remove tattoo from the tags. The aura and stuff could be put into negatives and lowering the weight of the lora to 0.8 or so, but that's a bit bothersome and not ideal. Try getting more pics (unless these are all the official pics?) and if all else fails try adding close up crops from the pics you already have, should make the lora focus the aura a bit less. What style did you use for your pics btw?

Anonymous

7/8/2025, 1:30:38 AM

No.8654067

[Report]

>>8654064

Yep, there's 2-3 images without the aura and wings in the dataset. It's all the same artist though, so style bleed is pretty bad.

>>8654065

Most pics I don't have in the dataset are part of a variant set, so no point to include 10 same images with minor differences. These are all the same artist (official creator), I've found like 2-3 other fanarts but they are complete crayon tier and change the design so are a no-go for the dataset.

I'll likely retag everything again manually, train an almost fried lora and then start introducing artificial examples into the dataset with different artist styles. I have the metadata in my pics, it's in the stealth png format (works with the extension), but if you don't have it I used "hetza \(hellshock\)".

Anonymous

7/8/2025, 1:45:33 AM

No.8654072

[Report]

>>8654305

How do I darken hair color? Say I'm doing black hair but the artist/lora is making it gray. Do I just increase the weight of black hair? I vaguely remember this working with red hair.

Anonymous

7/8/2025, 2:02:29 AM

No.8654080

[Report]

>>8653999

>asura \(asurauser\)

I think we can all agree that hdg went into full decline when asura pillarino disappeared. 'fraid so.

https://files.catbox.moe/f5lwbp.png

Anonymous

7/8/2025, 2:08:09 AM

No.8654082

[Report]

>>8654190

>>8654005

use a fork you savage

Anonymous

7/8/2025, 3:05:13 AM

No.8654117

[Report]

>>8654122

bros how do you train a lora on an artist who only draws one character

Anonymous

7/8/2025, 3:09:31 AM

No.8654122

[Report]

>>8654117

The same way you do for style loras based on game CG: tag everything.

How is it possible that Chroma learned ZERO artist knowledge after 40 versions? Did they include the artist tags in their training at all?

Anonymous

7/8/2025, 3:22:48 AM

No.8654133

[Report]

>>8654129

Not zero, but nearly, prompting slugbox does something consistent at least but yeah it sucks

Anonymous

7/8/2025, 3:24:37 AM

No.8654135

[Report]

>>8654137

>>8654129

it's both the fact he's training the model really weirdly using some method he invented, alongside the fact that the booru tags are fully shuffled, and also a small portion of the dataset. not to mention when training it'll randomly pick between NLP and a tag based prompt.

Anonymous

7/8/2025, 3:24:50 AM

No.8654136

[Report]

Remember, his computing power is over 10 times slower than NoobAI. Sure, he’s managed to optimize it with some hacks that nobody on /h/ can pull off, but it’s still way slower than SDXL, and the speed for replicating styles is just abysmal.

Anonymous

7/8/2025, 3:25:33 AM

No.8654137

[Report]

>>8654135

Should have added "drawn by [artist]" in the NLP prompts.

Anonymous

7/8/2025, 3:30:09 AM

No.8654138

[Report]

>>8654140

h100/256 vs h100/4-8

Anonymous

7/8/2025, 3:31:23 AM

No.8654140

[Report]

>>8654141

>>8654138

did noob actually utilize a 256xH100 node lol

Anonymous

7/8/2025, 3:33:27 AM

No.8654141

[Report]

>>8654140

32xH100 from noob 0.25 to eps 1.0 iirc, they then started using most of the compute on the IP adapter and controlnets and the v-pred model was trained on 4-16 A100s I believe

>>8654129

i think the great satan is t5 and that hes not training it and that he does not have the resources to brute force it without training it like nai possibly did

Anonymous

7/8/2025, 3:45:18 AM

No.8654146

[Report]

>>8654145

>train T5

genuinely you are better off using a different text encoder than trying to train T5

Anonymous

7/8/2025, 5:02:56 AM

No.8654187

[Report]

Anonymous

7/8/2025, 5:04:10 AM

No.8654190

[Report]

>>8654005

thank you so much

>>8654082

hands are faster

Anonymous

7/8/2025, 5:12:45 AM

No.8654192

[Report]

>>8654191

A vignette that uses a crosshatching pattern. There is no crosshatch vignette tag it seems, but there is crosshatching and vignetting as separate tags.

Anonymous

7/8/2025, 5:18:49 AM

No.8654195

[Report]

absurdly detailed composition, complex exterior, green theme

>Train artist loras for vpred they turn out fine

>Train chara loras for vpred they completely brick

Using the same settings it's just weird, like shit full stop doesn't even work.

Anonymous

7/8/2025, 5:26:38 AM

No.8654206

[Report]

>>8654232

>>8654202

Take the base illu pill and come home.

Anonymous

7/8/2025, 5:41:25 AM

No.8654215

[Report]

>>8654202

can i trade luck with you

my artist loras turn out mediocre on vpred yet chara loras are easy

>>8654206

Honestly trying to figure out why I left, sure I see shiny skin but I never neg'd for it and I like my old shit a lot. Got the loras still so might just go back and see why I swapped, only thing I notice is the colors suck way worse everything is kinda beige.

Anonymous

7/8/2025, 6:31:03 AM

No.8654234

[Report]

>>8654236

>>8654232

>only thing I notice is the colors suck way worse everything is kinda beige

You're right, but I fix colors with CD tuner so I don't see why I even switched to baking on vpred.

Anonymous

7/8/2025, 6:31:53 AM

No.8654236

[Report]

>>8654237

>>8654234

That an extension? Might take a gander. Other thing I noticed is if unprompted you get the same weird living room setting, which I can probably neg out too.

Anonymous

7/8/2025, 6:32:50 AM

No.8654237

[Report]

>>8654240

>>8654237

Just use base settings or anything to tweak with it? And are vpred loras backwards compatible?

Anonymous

7/8/2025, 6:42:18 AM

No.8654242

[Report]

>>8654245

>>8654240

Gotta tweak it on a per model basis. I tend to play with saturation2 and 1 is good enough for me but YMMV.

>And are vpred loras backwards compatible

What do you mean? Bake on vpred and run on illu? I've only ever tried this on Wai and it worked out well. Improved Wai 12's color problems too.

Anonymous

7/8/2025, 6:42:33 AM

No.8654244

[Report]

>>8654245

>>8654240

You shouldn't gen with base illu, that's an even worse idea than training on it

Anonymous

7/8/2025, 6:43:45 AM

No.8654245

[Report]

>>8654242

Yeah I got my stash of shit I baked on illustrious but remade most with vpred so didn't want to start another round on bakes on stuff post originals.

>>8654244

The extension you goof.

Anonymous

7/8/2025, 6:59:56 AM

No.8654251

[Report]

>>8654374

anyone have config tips regarding finetune extractions?

lot of my attempts have been... so-so

Anonymous

7/8/2025, 7:01:15 AM

No.8654252

[Report]

>>8654232

Noob and specifically vpred has a ton more knowledge that loras just don't provide for me, personally. Although Illu has a lot more loras for it given its age and the fact that they all work for noob anyway.

Anonymous

7/8/2025, 9:06:26 AM

No.8654283

[Report]

If anyone ever wanted to prompt cute small droopy dog ears, which look a bit like the "scottish fold" tagged ears on danbooru, you can do

>goldenglow \(arknights\) ears, black dog ears, (pink ears,:-1)

Goldenglow is a character the model knows pretty well, who has this type of folded ear. Negpip + prompting the desired color is able to remove the pink hair bias from the character tagging. Putting pink ears in the negative prompt further helps.

>>8654145

Not training the TE is the correct choice.

Anonymous

7/8/2025, 9:40:16 AM

No.8654305

[Report]

>>8654072

photoshop

or stick "grey hair" in your negatives

Anonymous

7/8/2025, 9:42:32 AM

No.8654308

[Report]

Anonymous

7/8/2025, 10:52:49 AM

No.8654335

[Report]

How did 291h do it?

Anonymous

7/8/2025, 11:39:31 AM

No.8654372

[Report]

>>8654378

do what?

Anonymous

7/8/2025, 11:40:31 AM

No.8654373

[Report]

>>8654378

do me

Anonymous

7/8/2025, 11:41:26 AM

No.8654374

[Report]

>>8654251

if the lora turns out to be weak, try baking the finetune for a smidge longer than you really need

Anonymous

7/8/2025, 11:44:56 AM

No.8654378

[Report]

Anonymous

7/8/2025, 11:49:36 AM

No.8654383

[Report]

>>8654384

Training the TE destroys the entire SDXL.

If you don't train the TE, it doesn't work properly.

What should I do for a full fine-tune of SDXL? Please answer.

Anonymous

7/8/2025, 11:51:39 AM

No.8654384

[Report]

>>8654383

destroy the SDXL

Anonymous

7/8/2025, 1:39:39 PM

No.8654472

[Report]

>>8654477

>>8654294

well clearly chroma is not learning jack shit and the te is an obvious suspect

just repeating to not train te like a parrot is not gonna help it when every successful local tune had to train it (though not t5)

Anonymous

7/8/2025, 1:45:34 PM

No.8654477

[Report]

>>8654472

yeah lets just ignore the completely new "divide and conquer" training method that merges a ton of tiny tunes together that lodestone invented. nope. it's t5.

Anonymous

7/8/2025, 1:59:20 PM

No.8654485

[Report]

For me? t5.

Probably not the place to post this but I am looking for a discord invite to KirsiEngine's discord server. Without signing up for his patreon, obviously

Anonymous

7/8/2025, 2:23:38 PM

No.8654516

[Report]

>>8654530

Anonymous

7/8/2025, 2:31:24 PM

No.8654530

[Report]

Anonymous

7/8/2025, 2:35:48 PM

No.8654535

[Report]

>>8654597

Anonymous

7/8/2025, 3:28:43 PM

No.8654578

[Report]

>>8654605

>finetune vpred 1.0 for a couple epochs in attempt to train in some artstyles

>accomplishes nothing outside of stabilizing the model

not what i wanted but neat

Anonymous

7/8/2025, 3:51:25 PM

No.8654589

[Report]

You'll never be the next 291h

Anonymous

7/8/2025, 3:52:47 PM

No.8654590

[Report]

291h gens when

Anonymous

7/8/2025, 3:53:51 PM

No.8654592

[Report]

Gens? Take it to /hdg/, lil blud. This is a lora training general.

Anonymous

7/8/2025, 3:55:32 PM

No.8654594

[Report]

you will never be the painter

at least post the link to the model so I can test it myself

Anonymous

7/8/2025, 3:59:19 PM

No.8654597

[Report]

>>8654535

Still blocked from seeing channels as a non-Patreon. Oh well..

Anonymous

7/8/2025, 4:00:57 PM

No.8654599

[Report]

>>8654600

>>8654595

the 291h is on the civitai sir

Anonymous

7/8/2025, 4:01:58 PM

No.8654600

[Report]

>>8654599

think lil blud means your experiment, anon.

>>8654578

>outside of stabilizing the model

that's based anon post it

>>8654605

Yeah I wanna see if it's better than any style lora at 0.2 strength

Anonymous

7/8/2025, 4:12:33 PM

No.8654609

[Report]

>>8654607

I've tried that method and it fixed nothing for me lol

Anonymous

7/8/2025, 4:14:28 PM

No.8654610

[Report]

>>8654605

there's a decent chance it may be more slop than stable, still messing around

Anonymous

7/8/2025, 4:54:28 PM

No.8654624

[Report]

>>8654646

the best way to defeat a troll is to ignore him

Anonymous

7/8/2025, 5:20:11 PM

No.8654646

[Report]

>>8654624

Which one is the troll though?

suppose I could just ignore everyone

>>8654595

>>8654605

>>8654607

here, only done sparse testing myself. let me know if any of you see value in it lol

https://gofile.io/d/DGSNR9

Anonymous

7/8/2025, 5:56:52 PM

No.8654681

[Report]

>>8654688

How can I get rid of this artifact? It makes the output blurry and destroys style and details when multidiffusion upscaling (https://civitai.com/articles/4560/upscaling-images-using-multidiffusion). I did 2x then 1.25x and it's getting worse. Maybe this is a bad method, so I need advice.

Anonymous

7/8/2025, 6:04:52 PM

No.8654684

[Report]

>>8654129

He is working with both Pony and drhead, two retards that are vehemently opposed to artist tags. In addition, the natural language VLM shit almost certainly washes out proper nouns just like it did with base Flux

>>8654681

how is mixture of diffusers better than simple image upscaling? it's great to do absurdres upscales and looks pretty smooth, but it's still essentially a tile upscale, albeit a bit less shitty than just simple tile upscale scripts. it doesn't have the whole context of the picture which might cause hallucinations unless you are content with really low denoise.

Anonymous

7/8/2025, 6:24:36 PM

No.8654696

[Report]

>>8654688

Here, 1x, 2x, 1.25x upscaled in order

https://gofile.io/d/RFX4DT

warning:[spoiler] /aco/ [/spoiler]

Anonymous

7/8/2025, 6:26:09 PM

No.8654697

[Report]

>>8654688

Here, 1x, 2x, 1.25x upscaled in order it's /aco/ tho

https://gofile.io/d/RFX4DT

Anonymous

7/8/2025, 6:29:26 PM

No.8654701

[Report]

>>8654677

Did some basic tests and it pretty much seems like a more stable and cohesive vpred

Didn't notice much slop in it at all, and also it got the details better than vpred in some gens

But then again, I'm not a great genner so gotta wait for someone else to comment on it

Anonymous

7/8/2025, 7:04:46 PM

No.8654723

[Report]

>>8654726

Anonymous

7/8/2025, 7:06:43 PM

No.8654726

[Report]

>>8654729

>>8654726

Now that we have noob, if they released v3 weights would people be excited?

Anonymous

7/8/2025, 7:13:45 PM

No.8654730

[Report]

>>8654729

Everything is relative. If they released it today, I'm sure people would be. If a new model better than Noob comes out and then they release v3, then of course people would not be.

Anonymous

7/8/2025, 7:15:12 PM

No.8654732

[Report]

>>8654729

noob is basically novelai v3 at home. v3 is still unfortunately better than whats available

Anonymous

7/8/2025, 7:16:09 PM

No.8654734

[Report]

>>8654729

bet someone could make a very good block merge with it and noob

>>8654729

v2/v3/v4 were shit. NAI didn't get good until v4.5. It's currently the best FLUX based anime model.

Anonymous

7/8/2025, 7:17:27 PM

No.8654737

[Report]

>>8654740

>>8654735

Why don't you ever post pictures then?

Anonymous

7/8/2025, 7:20:45 PM

No.8654740

[Report]

>>8654737

Busy masturbating; sorry.

Anonymous

7/8/2025, 7:26:42 PM

No.8654742

[Report]

>>8654735

v1 was good for its time, otherwise local would happily use WD

v3 is still good visually but it prompts like ass

Alright idiots, vpred models that I think are good, no snake oil required on any of those to get good looking gens (debatable), no quality tags and only very few basic negs

All of those were made using ER SDE with Beta at 50 steps 5 CFG (may not be the ideal setup for some of them but it's good enough for most of the cases)

>https://files.catbox.moe/p1afyv.png

>https://files.catbox.moe/nw7rue.png

To no one's surprise, each model is biased towards certain styles so your favorite artist may be shit on one of them but great on another one WOW

It's almost like YOU SHOULD USE THE MODEL THAT FITS YOUR FUCKING HORRID TASTE THE BEST

>>8654677

I like what I see at the moment but I need to use it for a little longer to draw an opinion on it

Anonymous

7/8/2025, 7:40:44 PM

No.8654751

[Report]

>>8654749

>102d is still king

Excellent.

Anonymous

7/8/2025, 7:43:18 PM

No.8654754

[Report]

>>8654749

thanks i'll continue to shill r3mix

Anonymous

7/8/2025, 7:51:08 PM

No.8654758

[Report]

>>8654760

>>8654749

I think it'd be interesting to do this comparison but with loras that are considered stability enhancers, on base vpred. If you can get the same results just by using a lora, then there's no reason to use a shitmix, as shitmixes always mess with the model's knowledge a bit and make it less flexible to work with, while swapping loras out is much faster.

Anonymous

7/8/2025, 7:51:20 PM

No.8654759

[Report]

>>8654762

>>8654735

>It's currently the best FLUX based anime model.

Without containing any FLUX too! Amazing!

>>8654758

>loras that are considered stability enhancers

If you guys ever agree on that one, sure

Anonymous

7/8/2025, 7:55:10 PM

No.8654761

[Report]

>>8654775

>implying v4.5 is anything but dogshit

ahahah that's a good one

Anonymous

7/8/2025, 7:55:24 PM

No.8654762

[Report]

>>8654789

>>8654759

Yeah bro, they totally trained it from scratch, all by themselves.

Anonymous

7/8/2025, 7:57:52 PM

No.8654766

[Report]

>>8654798

>>8654760

If people disagree on which ones are the best then that's the reason the comparison should be made. I haven't seen anyone actually talking about existing/downloadable stabilizer loras though.

Anonymous

7/8/2025, 8:00:56 PM

No.8654769

[Report]

Am I retarded or is there a chance the lora I'm trying to use just not compatible with comfyUI for some reason?

I can't get it to work

Anonymous

7/8/2025, 8:08:08 PM

No.8654775

[Report]

>>8654797

>>8654761

Kek, this.

It's literally impossible for paid proprietarded piece of trash to be good, by definition. SaaS garbage literally takes away your freedom and makes you a slave to the system that you should oppose by any means. Don't be a fucking cattle, resist. If it isn't "Free" as in Freedom, I am not interested, as I am Free myself.

Anonymous

7/8/2025, 8:23:21 PM

No.8654789

[Report]

>>8654762

Yeah, they're just so good at optimizing shit that they can run 23 steps of FLUX with CFG in 2 seconds on an H100 lmao

Anonymous

7/8/2025, 8:27:31 PM

No.8654791

[Report]

>>8654795

Isn't just using a model like WAI good enough?

Anonymous

7/8/2025, 8:33:45 PM

No.8654795

[Report]

>>8654791

It's always a trade it seems

WAI is good, but it's trained on a lot of slop

That makes it more consistent and gives it higher quality (like in anatomy and stuff) but breaks the prompt adherence and injects a lot of unwanted style into your gens by default

Anonymous

7/8/2025, 8:35:03 PM

No.8654797

[Report]

>>8654775

And yet, none of the models people here use has a license that the FSF would approve of as a Free Software license.

Anonymous

7/8/2025, 8:35:18 PM

No.8654798

[Report]

>>8654766

I've seen a few but never used it myself

Anonymous

7/8/2025, 8:39:15 PM

No.8654801

[Report]

>>8654803

I just want a model that's as good as 102d/291h, but that's easier to use

I still can't solve the shitty img2img/inpainting/adetailer/hi-res fix being broken because these models have the crazy ass noise at the start of the gens

mfw I can't just get a nice composition and throw it at i2i with the standard settings and it'll give me a good quality gen because it'll either change the image too much or it'll make it look blurry instead of adding details every single time

Anonymous

7/8/2025, 8:42:31 PM

No.8654803

[Report]

>>8654801

mfw i2i sketch and inpaint sketch are no longer useful in my workflow now because of this

Anonymous

7/8/2025, 8:45:33 PM

No.8654807

[Report]

>>8654808

I think this one is good enough, no more rebaking for now.

Anonymous

7/8/2025, 8:46:07 PM

No.8654808

[Report]

>>8654811

>>8654807

How did you do it?

>>8654808

I went to the dataset again, removed some variations cutting it down to 14 images, added an additional close-up crop of the face as well. Did a full manual tagging pass again, adding additional matching wing tags (demon wings, bat wings, multiple wings, etc) since it was bleeding through.

Ran prodigy to get an initial good starting learning rate by looking at the tensorboard logs, then switched back to AdamW8bit.

Did a couple of test bakes, tweaking the learning rate for both Unet and TE.

Eventually ended up using this config:

https://files.catbox.moe/7id47n.json
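The Prodigy step above can be sketched roughly like this. It's a minimal sketch, assuming you've exported the effective learning rate (Prodigy's d*lr) from the tensorboard logs as a plain list of floats; `suggest_lr` and the sample numbers are made up for illustration and are not taken from the linked config.

```python
from statistics import median


def suggest_lr(effective_lrs, tail=50):
    """Return the median of the last `tail` logged d*lr values.

    The idea: Prodigy's estimate usually ramps up and then flattens out,
    so the tail of the curve is a reasonable starting LR for a fixed-LR
    optimizer such as AdamW8bit. Treat it as a first guess, not a final value.
    """
    if not effective_lrs:
        raise ValueError("no logged values")
    window = effective_lrs[-tail:]
    return median(window)


if __name__ == "__main__":
    # Hypothetical logged values, not real training data.
    logged = [1e-6, 5e-6, 2e-5, 8e-5, 1.0e-4, 1.1e-4, 1.1e-4, 1.1e-4]
    print(suggest_lr(logged, tail=3))
```

You would still do a few test bakes around that number, the same way the post describes tweaking Unet and TE rates afterwards.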

Anonymous

7/8/2025, 9:02:45 PM

No.8654816

[Report]

Anonymous

7/8/2025, 9:14:13 PM

No.8654823

[Report]

>>8654191

It would be faster and simpler to just remove them.

Anonymous

7/8/2025, 9:25:47 PM

No.8654829

[Report]

>>8654899

Even style-bleed is not too bad, pretty surprising.

Anonymous

7/8/2025, 9:32:06 PM

No.8654837

[Report]

>>8654840

>20 unique IPs

Anonymous

7/8/2025, 9:35:06 PM

No.8654840

[Report]

Anonymous

7/8/2025, 9:35:51 PM

No.8654842

[Report]

>>8654832

I want to clarify that I have a 4070ti super not a regular 4070

Anonymous

7/8/2025, 9:58:11 PM

No.8654851

[Report]

>>8654832

>not .safetensors

good try

Anonymous

7/8/2025, 10:06:54 PM

No.8654860

[Report]

>>8658502

>>8654677

Re-ran a few old prompts on that. If you are using CFG++ like me there's very little difference between this, base 1.0, and even 102d custom.

pic mostly unrelated

>>8654832

My NVIDIA GPU is not listed.

Anonymous

7/8/2025, 10:25:21 PM

No.8654876

[Report]

Anonymous

7/8/2025, 10:26:01 PM

No.8654877

[Report]

>>8654872

H100?

Nice try, Jensen.

Anonymous

7/8/2025, 10:32:15 PM

No.8654882

[Report]

>>8654832

Where is NovelAI on this list?

Anonymous

7/8/2025, 10:38:38 PM

No.8654887

[Report]

A6000

Anonymous

7/8/2025, 10:40:01 PM

No.8654889

[Report]

RTX Pro 6000

Anonymous

7/8/2025, 10:46:37 PM

No.8654892

[Report]

8800GT bros...

Anonymous

7/8/2025, 10:49:57 PM

No.8654894

[Report]

Trying to set up chroma has finally made me take the comfy pill. It's... it's not so bad bros... comfy is the future.

Anonymous

7/8/2025, 10:54:55 PM

No.8654899

[Report]

>>8654829

It looks like I'm seeing some cutscene from Rance.

I was browsing tags today and came across this. It is now one of my favorite pixiv posts of all time.

https://www.pixiv.net/artworks/118263867

Anonymous

7/8/2025, 11:26:56 PM

No.8654920

[Report]

>>8654922

>>8654917

nice, do you have a twitter I can follow for more microblogs like these?

Anonymous

7/8/2025, 11:27:48 PM

No.8654922

[Report]

>>8654920

Yes you can follow me @/hgg/.

Anonymous

7/8/2025, 11:29:53 PM

No.8654924

[Report]

>>8654832

what if i have multiple?

Anonymous

7/8/2025, 11:32:41 PM

No.8654930

[Report]

>>8654917

Goddamn

This is actually really good

Anonymous

7/8/2025, 11:44:30 PM

No.8654934

[Report]

>>8654946

>>8654749

greyscale sketch prompt is such a good test for detecting slopped models desu

Anonymous

7/9/2025, 12:01:07 AM

No.8654944

[Report]

what you want as a "stabilizer" is a good preference-optimized finetune, it can be a merge crap but usually merges work worse. you don't want to collapse the output distribution of a model with a lora because it will mess a lot of things up, especially if you are trying to use multiple loras.

what you will get out of a "good" preference-optimized finetune is a certain, defined "plastic" look of flux, piss tint and aco seeping through on pony, and the like.

Anonymous

7/9/2025, 12:10:31 AM

No.8654946

[Report]

>>8654949

>>8654934

It filtered out most of them lol

Anonymous

7/9/2025, 12:14:44 AM

No.8654949

[Report]

>>8654951

>>8654946

makes me wonder what would happen if you try to train solely on greyscale sketch gens

Anonymous

7/9/2025, 12:19:40 AM

No.8654951

[Report]

>>8654949

I always wonder what would happen if you used an oil painting/classical artwork lora to make a merge rather than anime stuff

Anonymous

7/9/2025, 12:31:24 AM

No.8654955

[Report]

How did 291h do it

Anonymous

7/9/2025, 12:54:57 AM

No.8654963

[Report]

>>8655020

>>8654760

>If you guys ever agree on that one, sure

this isn't complicated.

A "stabilizer lora" is merely a lora of an artist you like and want incorporated into your mix. The only caveat being that it can't be watered down shit.

The whole point of the lora existing in the first place is that it's introducing a much more stable and predictable u-net and imposing itself on the primary model to guide it.

It's really that fucking simple. Just use a style lora that isn't shit.

Anonymous

7/9/2025, 1:14:06 AM

No.8654966

[Report]

Man, do the models posted here get saved by the rentry?

Anonymous

7/9/2025, 1:26:00 AM

No.8654970

[Report]

Is there a site people use other than Civit since they did the purge?

Anonymous

7/9/2025, 1:39:24 AM

No.8654971

[Report]

>>8654749

got the prompts for these? i wanna throw em on some models

Anonymous

7/9/2025, 2:31:51 AM

No.8654995

[Report]

>>8654997

didn't know there were so many people with 4090s here

Anonymous

7/9/2025, 2:33:36 AM

No.8654997

[Report]

>>8654995

that survey seems to have been posted in every ai gen thread

Anonymous

7/9/2025, 3:27:03 AM

No.8655018

[Report]

>100 unique posters

>>8654677

idk what you did but you need to do it for a little more or a little different

some samplers are completely broken

I want to like it as it gets some concepts and artists tags better but it's currently a little harder than I am willing to endure to get something good out of it

>>8654963

yeah okay, give me 3 lora recommendations for that effect

Anonymous

7/9/2025, 3:31:36 AM

No.8655021

[Report]

>>8655027

>>8655020

>some samplers are completely broken

So, like vpred?

Anonymous

7/9/2025, 3:31:44 AM

No.8655022

[Report]

>>8655020

>yeah okay, give me 3 lora recommendations for that effect

the entire point is THAT YOU CHOOSE THEM YOURSELF YOU FUCKING RETARD

It's not supposed to be recommended by anyone else! They don't fucking work well unless they actually suit what you want your shit to look like!

Anonymous

7/9/2025, 3:35:47 AM

No.8655025

[Report]

>>8655027

>>8655020

>idk what you did but you need to do it for a little more or a little different

all this was was unet only, batch 1, on a roughly 200 image dataset for 3199 steps. pulled it early since i was saving every 100 steps lol ill continue to fuck around though since results while unintentional are promising.
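For a rough sense of what those numbers mean, here's a small arithmetic sketch. It assumes no gradient accumulation and no dataset repeats (neither is stated in the post), and the helper names are made up.

```python
def epochs_seen(steps, batch_size, dataset_size):
    """Approximate passes over the dataset (no repeats, no grad accumulation)."""
    return steps * batch_size / dataset_size


def checkpoints_saved(steps, save_every):
    """Number of checkpoints written when saving every `save_every` steps."""
    return steps // save_every


if __name__ == "__main__":
    # 3199 steps, batch 1, roughly 200 images, saving every 100 steps.
    print(epochs_seen(3199, 1, 200))     # about 16 passes over the data
    print(checkpoints_saved(3199, 100))  # 31 checkpoints to compare
```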

Anonymous

7/9/2025, 3:37:26 AM

No.8655026

[Report]

>>8655023

>It's not supposed to be recommended by anyone else

What a retard, what's the point of screaming for the guy to make a comparison if you don't even have loras in mind

Anonymous

7/9/2025, 3:38:15 AM

No.8655027

[Report]

>>8655021

Well yeah but even more so, could just be me ngl

>>8655023

I already have and use those, the point was to make a general agreement to have something to recommend when people ask for that as you know, "a good lora" is very ambiguous but whatever, I did my part

>>8655025

Godspeed anon

>he uses nyalia over 748cmSDXL for stabilization

oh nyo nyo nyo nyooooooooooooo~

Anonymous

7/9/2025, 3:50:37 AM

No.8655031

[Report]

Anonymous

7/9/2025, 3:51:33 AM

No.8655032

[Report]

Wrong tab, bucko.

Anonymous

7/9/2025, 3:53:49 AM

No.8655033

[Report]

who you callin' bucko, chucko? this is sneed.

Anonymous

7/9/2025, 3:55:38 AM

No.8655035

[Report]

You two go back to lora training. This is not a discussion thread.

Anonymous

7/9/2025, 4:13:46 AM

No.8655040

[Report]

>>8655198

>>8653845

Really nice composition!

>>8654832

>40 minutes to generate a 720p video

Even with a 4090 I'm still a vramlet

Anonymous

7/9/2025, 4:49:25 AM

No.8655053

[Report]

>>8655055

>>8655052

No I quit the gen because it's not worth it.

Anonymous

7/9/2025, 4:54:26 AM

No.8655055

[Report]

Anonymous

7/9/2025, 5:04:33 AM

No.8655064

[Report]

>>8655066

>>8655051

>40 minutes to generate a 720p video

someone isnt using lightx2v

>>8655064

The guide says that one's quality is far worse than wan.

>>8655052

https://files.catbox.moe/oiafrv.mp4

Anonymous

7/9/2025, 5:11:11 AM

No.8655067

[Report]

>>8655066

>far worse

visually it's about on par. makes the model very biased towards slow motion, though less-so on the 720p model. most of the big caveats are present in the 480p model. it's basically required for convenient 720p gens imo

Anonymous

7/9/2025, 5:41:09 AM

No.8655072

[Report]

>>8655074

>>8655066

the fuck's her problem?

Anonymous

7/9/2025, 5:42:27 AM

No.8655074

[Report]

>>8655072

jiggling her butt for (me)

Tech illiterate here trying to get Comfy working. My laptop's several years old. What exactly can I do about this? I don't know what I'm looking for on PyTorch.

Anonymous

7/9/2025, 6:07:24 AM

No.8655079

[Report]

>>8655080

>>8655077

How much VRAM do you have? You'll need around 6-8GB or so to run local gens, and if your laptop is old enough it might be too low.

For PyTorch just follow the exact instructions in the message. Go to the Nvidia link first and then the Torch one.

If your GPU is too old to run locally, there are free online options like frosting.ai and perchance.org and more.
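If you already have PyTorch installed, a quick way to check the VRAM question is something like the sketch below. The 6 GiB floor is just the low end of the range mentioned above, and `enough_vram` is a made-up helper name.

```python
def enough_vram(total_bytes, min_gib=6.0):
    """True if the card has at least `min_gib` GiB of memory."""
    return total_bytes / 1024**3 >= min_gib


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        props = torch.cuda.get_device_properties(0)
        gib = props.total_memory / 1024**3
        print(f"{props.name}: {gib:.1f} GiB, enough: {enough_vram(props.total_memory)}")
    else:
        print("No CUDA-capable PyTorch install detected.")
```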

>>8655079

The sticker on my laptop says 2GB Dedicated VRAM.

Shit.

Anonymous

7/9/2025, 6:10:34 AM

No.8655082

[Report]

Anonymous

7/9/2025, 6:11:37 AM

No.8655083

[Report]

>>8655080

Based time traveler.

Anonymous

7/9/2025, 6:15:35 AM

No.8655085

[Report]

>>8655092

>>8655080

Plenty of stuff you can do online for gens these days.

ChatGPT has SORA for image generation and Microsoft has a Bing Image generator too. Those are both the highest quality, but censored to hell so you can't do porn. They both let you gen for free with a free account setup

perchance.org is free no account gens, but is censored as well.

frosting.ai can do uncensored gens, and is free with no account. The quality isn't the best unless you pay though.

CivitAI, Tensor.art and SeaArt.ai all let you do a limited number of free gens if you make a free account. They all have onsite currency that you get a certain amount of for free and can get more by liking, commenting, the usual "engagement" stuff.

NovelAI has the most advanced new model with their v4.5 model, and is doing a free trial. However, it's mostly a paid site. If you're willing to pay it might be the best option, but you should probably try out all the free options first before you pay for anything.

Anonymous

7/9/2025, 6:18:48 AM

No.8655088

[Report]

>>8655103

>>8655020

btw, mind sharing the broken examples? training a v2, gonna let it go until it explodes

Anonymous

7/9/2025, 6:25:22 AM

No.8655092

[Report]

>>8655096

>>8655085

Dang. Alrighty then, really have to get a new computer. My buddy's made some awesome stuff for me, but it looks like it'll be a while before I can do it on my own. Thanks for the list though, I'll take a look!

Anonymous

7/9/2025, 6:27:48 AM

No.8655096

[Report]

>>8655092

Turns out that perchance.org can do porn too, you just have to let it fail once, click the "change settings" button that comes up, and then turn off the filter.

Since that and frosting.ai don't require any fee or even a free account they're probably the best to start with if you want /h/ content.

Anonymous

7/9/2025, 6:28:02 AM

No.8655097

[Report]

Is there a fastest way to switch model like extension?

Anonymous

7/9/2025, 6:29:24 AM

No.8655098

[Report]

Nigga, you click the drop down in the upper left and choose the model.

Anonymous

7/9/2025, 6:30:33 AM

No.8655099

[Report]

How drop click change down model?

Anonymous

7/9/2025, 6:33:09 AM

No.8655101

[Report]

sarsbros..

Anonymous

7/9/2025, 6:38:46 AM

No.8655103

[Report]

>>8655104

Anonymous

7/9/2025, 6:41:09 AM

No.8655104

[Report]

>>8655103

lol wtf, i wonder if base vp1.0 has the same issue on the problematic samplers

Anonymous

7/9/2025, 7:42:01 AM

No.8655143

[Report]

>>8655030

why would i not use both, retard-kun?

Anonymous

7/9/2025, 7:45:05 AM

No.8655145

[Report]

Anonymous

7/9/2025, 7:45:10 AM

No.8655146

[Report]

>certainly 2 stabilizers will unslop it

kekerino

Anonymous

7/9/2025, 7:46:13 AM

No.8655148

[Report]

xir please administer the appropriate Slop Shine to your model before use. it is imperative

Someone did a test a while ago that demonstrated how some characters like Nahida make the model more accurately model the character as small relative to the environment, while others feel oversized. Well inspired by that, I did my own tests using the kitchen environment, and can confirm that Nahida is really one of the few characters that achieves this. There are a crazy ton of characters that are supposed to be short but noob still renders them like a normal sized people.

I wonder what would solve this problem in terms of model architecture. Or is it merely a training/dataset issue?

Anonymous

7/9/2025, 9:06:05 AM

No.8655198

[Report]

>>8654811

Well done, must admit, didn't think of using Prodigy to figure out the learning rate. Solid work.

>>8655040

Thanks. Just wish i had taken the time to correct her small hands.

Anonymous

7/9/2025, 9:51:50 AM

No.8655224

[Report]

>>8655439

>>8655186

I just looked at danbooru's tag wiki and found the toddler tag. Didn't know that was a thing. Testing it, it does seem to make pretty small characters in the kitchen environment. If the goal is to make a short normal hag, then perhaps adding [toddler, aged down:petite:0.2] to a prompt of X character might work.

Anonymous

7/9/2025, 9:53:57 AM

No.8655226

[Report]

>>8655233

Wrong tab, oekaki anon.

>>8655226

He is NOT a pedophile. Those are NOT toddlers he's posting. Look, they've got curves!

Anonymous

7/9/2025, 10:08:38 AM

No.8655235

[Report]

>>8655241

who is they thems talking to

Anonymous

7/9/2025, 10:17:56 AM

No.8655239

[Report]

Anonymous

7/9/2025, 10:20:10 AM

No.8655241

[Report]

>>8655269

>>8655235

Idk kek. If you just wanted shortstacks you can prompt for those just fine, no need to go through all this.

Anonymous

7/9/2025, 10:25:40 AM

No.8655248

[Report]

>>8655268

>>8655233

>Look, they've got curves

Where? No one posted any images. The last non-catbox image post was 12 hours ago...

Anonymous

7/9/2025, 10:27:34 AM

No.8655249

[Report]

>>8655250

You're not getting my metadata, Rajeej.

Anonymous

7/9/2025, 10:30:01 AM

No.8655250

[Report]

>>8655249

Who are you talking to?

Anonymous

7/9/2025, 10:31:31 AM

No.8655251

[Report]

all me

Anonymous

7/9/2025, 10:35:32 AM

No.8655255

[Report]

me too

Anonymous

7/9/2025, 11:30:14 AM

No.8655266

[Report]

>>8655268

Anonymous

7/9/2025, 11:38:24 AM

No.8655268

[Report]

Anonymous

7/9/2025, 11:42:50 AM

No.8655269

[Report]

>>8655271

>>8655186

Kitchen anon here, I also noticed when doing groups shots of named characters, ones from the same franchise would usually be fine because they appeared together in some dataset pics. But crossovers would mess up their relative sizes.

>>8655241

Point is, small characters often end up huge compared to the environment. Sometimes even if you specifically prompt for loli/shortstack/etc. Picrel.

Anonymous

7/9/2025, 11:47:12 AM

No.8655271

[Report]

>>8655277

>>8655269

How do we know that's not a custom built kitchen made to accommodate her night?

Anonymous

7/9/2025, 11:48:13 AM

No.8655273

[Report]

it's nai

Anonymous

7/9/2025, 11:48:18 AM

No.8655274

[Report]

>>8655772

Anonymous

7/9/2025, 11:52:32 AM

No.8655277

[Report]

>>8655271

it made her legs longer too

Anonymous

7/9/2025, 11:59:22 AM

No.8655281

[Report]

>>8655288

Anonymous

7/9/2025, 12:01:26 PM

No.8655283

[Report]

>>8655286

>>8655282

Where's the rest of his forearm?

Anonymous

7/9/2025, 12:05:12 PM

No.8655286

[Report]

>>8655283

idk camera angles

Anonymous

7/9/2025, 12:07:53 PM

No.8655288

[Report]

>>8655281

>>8655282

Reminds me of school days.

Anonymous

7/9/2025, 1:11:52 PM

No.8655354

[Report]

Anonymous

7/9/2025, 1:30:07 PM

No.8655362

[Report]

>>8655364

>she doesn't 748cm

A-anon..

Anonymous

7/9/2025, 1:32:22 PM

No.8655364

[Report]

>>8655362

I don't know what that memes

Anonymous

7/9/2025, 3:47:12 PM

No.8655439

[Report]

>>8655224

>[toddler, aged down:petite:0.2]

I just tried this and it seems to be an inconsistent solution. Sometimes it does make the proportions right but most of the time it'll be messed up and closer to a shorstack/loli. Maybe if there was a tag for "normal proportions" then this might work.

Somehow I feel like putting "shortstack" in neg won't help either.

Anonymous

7/9/2025, 4:48:03 PM

No.8655470

[Report]

>>8655296

i don't like the face but the rest is very cool

Anonymous

7/9/2025, 5:12:19 PM

No.8655485

[Report]

Anonymous

7/9/2025, 5:37:43 PM

No.8655499

[Report]

Anonymous

7/9/2025, 6:29:56 PM

No.8655522

[Report]

Anonymous

7/9/2025, 7:08:28 PM

No.8655546

[Report]

luv me some chun li

Anonymous

7/9/2025, 7:53:35 PM

No.8655567

[Report]

>>8654749

seconding for the prompts

curious how the models I use hold up

Anonymous

7/9/2025, 11:50:54 PM

No.8655759

[Report]

I did not think generating pussy would be so lucrative.

NAIfags, what else do you use in your workflow? Besides the in-house enhancement features (which are all terrible and cost Anlas to use properly) I use Upscayl to make 4K+ images. Does anyone actually use Photoshop to retouch images nowadays?

Anonymous

7/9/2025, 11:58:45 PM

No.8655768

[Report]

Don't you love when sometimes the same exact gen and inpaint settings you have used many times before suddenly don't work anymore?

Anonymous

7/10/2025, 12:07:13 AM

No.8655772

[Report]

Anonymous

7/10/2025, 6:36:20 AM

No.8655937

[Report]

>>8655944

I wish I never tried NAI 4.5, impossible for me to go back to local now. Coherent multi character scenes off cooldown and it nails the style I use perfectly..

Anonymous

7/10/2025, 6:41:28 AM

No.8655942

[Report]

kurumuz...

Anonymous

7/10/2025, 6:43:16 AM

No.8655944

[Report]

>>8655937

Eventually they will all become SFW only.

Anonymous

7/10/2025, 6:45:24 AM

No.8655946

[Report]

/h/ is just a bunch of frauds, and SOTA only comes from NAI. This has never changed in history. First, NAI creates SOTA, and then /h/ just copies it. We've definitely seen this pattern with the latest Flux generation too.

Anonymous

7/10/2025, 7:11:06 AM

No.8655976

[Report]

Time to merge with /hdg/. We've gone full circle, sisters.

Anonymous

7/10/2025, 7:12:26 AM

No.8655978

[Report]

Petition denied, again

Anonymous

7/10/2025, 7:34:02 AM

No.8655995

[Report]

>>8656311

>>8655051

It takes me 4 minutes and 30 seconds on a 5090.

24fps 720x480 in WAN2.1.

The 4090 can't be that much slower. You must have set something up wrong.

>>8654749

why does base noob 1.0 look the best

Anonymous

7/10/2025, 8:33:34 AM

No.8656024

[Report]

>>8656019

Probably because despite what all the armchair ML scientists say, the noob team actually knew what they were doing and everyone who tried to "fix" it only made it worse.

Anonymous

7/10/2025, 8:36:42 AM

No.8656033

[Report]

How did 291h get away with it?

Anonymous

7/10/2025, 10:30:01 AM

No.8656101

[Report]

>>8656110

>>8656195

Anon, if you were about to train a finetune of noob, which artists would you add to the dataset?

Anonymous

7/10/2025, 11:01:36 AM

No.8656110

[Report]

>>8656189

>>8656101

The ones I like

Anonymous

7/10/2025, 11:01:56 AM

No.8656111

[Report]

>>8656149

Anonymous

7/10/2025, 11:57:58 AM

No.8656149

[Report]

Anonymous

7/10/2025, 12:01:29 PM

No.8656152

[Report]

>t5gemma

what's the point of this

Anonymous

7/10/2025, 1:11:21 PM

No.8656190

[Report]

>>8656189

asura asurauser

Anonymous

7/10/2025, 1:22:32 PM

No.8656194

[Report]

Anonymous

7/10/2025, 1:26:16 PM

No.8656195

[Report]

>>8656669

>>8656101

tamiya akito, CGs not danbooru crap

from danbooru I guess nanameda kei. he kinda works but only on base noob, too weak for merges

Anonymous

7/10/2025, 1:53:30 PM

No.8656215

[Report]

Anonymous

7/10/2025, 2:31:50 PM

No.8656243

[Report]

>>8656251

>>8656189

Didn't you already ask this like a year (and a half maybe) ago?

Anonymous

7/10/2025, 2:45:03 PM

No.8656251

[Report]

>>8656286

>>8656243

Even if it was the same person, how long should someone have to wait before asking again?

>>8656251

Just use answers from that time, there were a lot of them, can't get that now that the whole thread is 3 samefags.

Anonymous

7/10/2025, 3:49:06 PM

No.8656311

[Report]

>>8655995

It even says that on the guide my guy. The other anon was correct though, just switch to lightx2v.

>720x480

No, 1280x720.

Anonymous

7/10/2025, 3:51:34 PM

No.8656313

[Report]

>>8656019

Because you have shit taste?

Anonymous

7/10/2025, 4:11:47 PM

No.8656337

[Report]

>>8656349

>>8656286

are those 3 samefags on the room right now?

Anonymous

7/10/2025, 4:22:38 PM

No.8656349

[Report]

>>8656396

>>8656337

We are all you, anon

Anonymous

7/10/2025, 4:22:54 PM

No.8656351

[Report]

Gah! Now you're making me angry!

SUFFER!!!

Anonymous

7/10/2025, 4:24:23 PM

No.8656353

[Report]

>>8656355

Anonymous

7/10/2025, 4:24:59 PM

No.8656355

[Report]

>>8656353

Why aren't you using the sdxl vae?

Anonymous

7/10/2025, 5:01:47 PM

No.8656396

[Report]

>>8656349

If you were you would be posting kino vanilla or 1girl standing gens

>>8656286

>the whole thread is 3 samefags

how do we revive /h{d,g}g/

Anonymous

7/10/2025, 5:49:55 PM

No.8656458

[Report]

>>8656466

Anyone know of any artists that do thin and "crisp" line art? Not quite Oekaki, but in the same vein

Anonymous

7/10/2025, 5:49:56 PM

No.8656459

[Report]

>>8656453

just let it merge naturally back into /hdg/

Anonymous

7/10/2025, 5:51:48 PM

No.8656466

[Report]

>>8656485

>>8656458

I may have some in mind but you need to post an example

Anonymous

7/10/2025, 5:55:00 PM

No.8656477

[Report]

>>8656551

>>8656453

>>the whole thread is 3 samefags

saar, you are deboonked

>>8654832

the poll has 206 votes (one unique ip per vote)

Anonymous

7/10/2025, 5:57:28 PM

No.8656485

[Report]

>>8656466

I don't really have a concrete example right now, I just remember seeing a picture some days ago and thinking "hey I like the way that looks, I should try to replicate that"

I don't remember when or where I saw it so I can't really go looking for it again, I just have this very faint image in my head, so it's more like a feeling

Not very helpful I know, but I kind of just want to experiment, so feel free to post whatever you have

Anonymous

7/10/2025, 6:28:09 PM

No.8656523

[Report]

Does anyone have a snakeoil loaded finetune config for the machina fork of sd-scripts? blud isn't exactly keen on documentation and I wanna see what's possible without sifting through the code.

>>8656477

the poll was reposted in every AI thread on the site

Anonymous

7/10/2025, 6:45:29 PM

No.8656556

[Report]

Anonymous

7/10/2025, 6:45:56 PM

No.8656558

[Report]

>>8656551

pp grabbing viroos

>>8656551

I don't see it on /lmg/ or /hdg/ so what do you mean by "every"

Anonymous

7/10/2025, 6:51:54 PM

No.8656564

[Report]

>>8656562

well, the boards that matter.

Anonymous

7/10/2025, 6:54:17 PM

No.8656568

[Report]

>>8656768

>>8656562

Just a guess, based on seeing it in the non-futa /d/ thread and the photorealism /aco/ thread.

Welp, just upgraded to a 5070ti, and now shit is broken. The rest of the net has no idea apparently. Is there anyone here who has gotten reforge working with a 5070ti?

Anonymous

7/10/2025, 6:59:54 PM

No.8656573

[Report]

>>8656589

>>8656572

What kind of broken are we talking about?

Anonymous

7/10/2025, 7:05:13 PM

No.8656583

[Report]

>>8656589

>>8656572

Try deleting your venv and any launch commands you have in your webui-user such as xformers and start over.

Anonymous

7/10/2025, 7:10:34 PM

No.8656589

[Report]

>>8656729

>>8656573

RuntimeError: CUDA error: no kernel image is available for execution on the device

>>8656583

Will try this and report if it works. Thanks for the suggestion.

Anonymous

7/10/2025, 7:33:30 PM

No.8656612

[Report]

>>8656611

Can't tell if she has too many tails or if it's just some retarded BA design

Anonymous

7/10/2025, 7:33:47 PM

No.8656613

[Report]

>>8656616

>>8656611

No sauce on that penisdog?

Anonymous

7/10/2025, 7:36:36 PM

No.8656616

[Report]

>>8656619

Anonymous

7/10/2025, 7:37:41 PM

No.8656619

[Report]

>>8656616

Disgusting. Thank you.

can novelai do JP text? I know it technically can but I'm curious if the text encoder(?) was setup correctly to read JP input, or if it just gets automatically translated or something

Anonymous

7/10/2025, 7:54:54 PM

No.8656639

[Report]

>>8656636

nai thread is down the road, lil' bro

Anonymous

7/10/2025, 7:57:09 PM

No.8656640

[Report]

Anonymous

7/10/2025, 8:05:09 PM

No.8656649

[Report]

>>8656636

it can't, which is really shitty and funny at the same time

Anonymous

7/10/2025, 8:24:30 PM

No.8656669

[Report]

>>8656690

>>8656195

>tamiya akito, CGs not danbooru crap

do you perhaps have them sorted and willing to upload somewhere?

Anonymous

7/10/2025, 8:36:14 PM

No.8656684

[Report]

>>8656636

>Note: since V4.5 uses the T5 tokenizer, be aware that most Unicode characters (e.g. colorful emoji or Japanese characters) are not supported by the model as part of prompts.
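A crude way to catch this before sending a prompt is to flag anything outside ASCII. This is only a proxy, assuming non-ASCII characters are the ones most likely to be dropped; the actual tokenizer vocabulary isn't checked here, and `unsupported_chars` is a made-up helper.

```python
def unsupported_chars(prompt):
    """Return the non-ASCII characters in a prompt, in order of appearance."""
    return [ch for ch in prompt if not ch.isascii()]


if __name__ == "__main__":
    for prompt in ["a cat, sitting", "a cat, 猫"]:
        bad = unsupported_chars(prompt)
        print(repr(prompt), "->", "ok" if not bad else f"check these: {bad}")
```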

Anonymous

7/10/2025, 8:41:56 PM

No.8656690

[Report]

>>8656669

sorry, I don't

just sadpanda galleries

Anonymous

7/10/2025, 9:28:51 PM

No.8656729

[Report]

>>8656589

No kernel image means your drivers are broken. You need to get drivers that support Blackwell (5000s). I had to manually get an updated driver on my linux machine for my 5000 card. Windows I assume it's just installing the official Nvidia stuff.
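Worth checking alongside the driver: as far as I know this error also shows up when the installed PyTorch build simply wasn't compiled with kernels for your card's compute capability, which would fit a fresh 5000-series upgrade on an old venv. A minimal sketch to compare the two, assuming a recent PyTorch; `build_supports` is a made-up helper and the sm_120 value in the comment is my assumption for consumer Blackwell cards.

```python
def build_supports(arch_list, capability):
    """True if the build lists native kernels (sm_XY) for this capability."""
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        cap = torch.cuda.get_device_capability(0)  # e.g. (12, 0), assumed for a 50-series card
        archs = torch.cuda.get_arch_list()         # architectures this build was compiled for
        print("device capability:", cap)
        print("build arch list:", archs)
        print("native kernels present:", build_supports(archs, cap))
    else:
        print("PyTorch with CUDA not detected.")
```

If the last line prints False, reinstalling PyTorch in a fresh venv with a build made for a newer CUDA toolkit is probably the thing to try, which lines up with the earlier suggestion to delete the venv and start over.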

Anonymous

7/10/2025, 9:59:48 PM

No.8656768

[Report]

>>8656568

Also on the dead /u/ thread.

Anonymous

7/11/2025, 1:39:28 AM

No.8656990

[Report]

>>8657005

Is there a comparison of the best local models compared to nai 4.5?

Anonymous

7/11/2025, 1:47:59 AM

No.8657005

[Report]

>>8657017

>>8656990

In terms of what? Because I could generate tons of styles and characters NAI could never do, and also generate text and segmented characters that local could never do

Anonymous

7/11/2025, 1:56:00 AM

No.8657017

[Report]

>>8657005

Styles and characters yeah. Couldn't care less for text.

Anonymous

7/11/2025, 1:58:35 AM

No.8657020

[Report]

vibin'

Anonymous

7/11/2025, 2:13:41 AM

No.8657045

[Report]

what is lil bud vibin' to? :skull: :thinking:

Anonymous

7/11/2025, 4:15:29 AM

No.8657126

[Report]

>>8657131

My setup broke but I had fun editing silly shit, enjoy

Anonymous

7/11/2025, 4:31:13 AM

No.8657131

[Report]

>>8657133

>>8657126

not bad, very cool

a shame about the forced cum on their tits

Anonymous

7/11/2025, 4:37:17 AM

No.8657133

[Report]

Anonymous

7/11/2025, 5:24:34 AM

No.8657157

[Report]

>>8657298

Can we propose trades between generals? I'd love to get Sir Solangeanon and Doodleanon (no, not the pedo one) here in exchange for lilbludskullanon. Thing /hdg/ would go for it?

Anonymous

7/11/2025, 5:44:07 AM

No.8657167

[Report]

>>8657164

>*Think /hdg/ would go for it?

Anonymous

7/11/2025, 5:44:23 AM

No.8657168

[Report]

>>8657164

you can just fuck off to that shit hole

Anonymous

7/11/2025, 5:45:59 AM

No.8657171

[Report]

>>8657173

I'm trying to build the best general through our front office, anon.

Anonymous

7/11/2025, 5:46:11 AM

No.8657172

[Report]

>>8657176

>>8657164

Why do you want to make the thread worse?

Anonymous

7/11/2025, 5:47:05 AM

No.8657173

[Report]

>>8657176

>>8657171

/hdg/ is already peak by your standards

Anonymous

7/11/2025, 5:49:25 AM

No.8657176

[Report]

>>8657191

>>8657172

How so?

>SirSolangeanon

Enthusiastic poster, somehow still not jaded like the majority of us.

>doodleanon

Miss that lil potato headed nigga like you wouldn't believe.

>>8657173

Nah. Those 2 are keeping that general afloat still instead of letting it sink to enter a proper rebuild phase. Whereas we're the explosive franchise with all the new talent that needs guidance from a few veteran pieces to put it together.

Anonymous

7/11/2025, 6:00:33 AM

No.8657191

[Report]

>>8657176

I'll give you the point on solangeanon since he does listen to feedback

Anonymous

7/11/2025, 6:34:44 AM

No.8657219

[Report]

watch out chuds, you dont want me to uncage right here right now, ive been keeping this thread chaste so far.

Anonymous

7/11/2025, 7:03:02 AM

No.8657246

[Report]

>asking for avatarfags

Old 4chan culture is never coming back is it? Rules only exist if someone reports you.

Anonymous

7/11/2025, 7:08:15 AM

No.8657249

[Report]

what big boomer yappin bout :skull:

This place is now wholly indistinguishable from /hdg/, except nobody even bothers to post gens.

Anonymous

7/11/2025, 7:39:07 AM

No.8657265

[Report]

>>8657263

>except nobody even bothers to post gens

so just like hdg? most of the gens there are shitposts now, either civitai slop reposts or cathag garbage. guess hgg isn't getting spammed (yet)

Anonymous

7/11/2025, 7:42:11 AM

No.8657267

[Report]

>>8657665

>>8657263

Maybe you shouldn't have run off the trap (formerly otoko_no_ko) genners just to be left with endless threads of austistic slap fighting over toml files.

Anonymous

7/11/2025, 7:49:55 AM

No.8657277

[Report]

Anonymous

7/11/2025, 8:21:23 AM

No.8657298

[Report]

>>8657157

nice. more noodlenood.

Anonymous

7/11/2025, 8:30:25 AM

No.8657308

[Report]

Orc bros?

Anonymous

7/11/2025, 8:33:22 AM

No.8657312

[Report]

Anonymous

7/11/2025, 9:11:24 AM

No.8657328

[Report]

>>8657263

we need a third general

Anonymous

7/11/2025, 9:25:39 AM

No.8657340

[Report]

>>8657331

Bake when? I'm ready to move on, sister.

Anonymous

7/11/2025, 9:47:14 AM

No.8657358

[Report]

>>8657331

What should we call it? I propose /hdgpg/ hentai diffusion gens posting general.

Anonymous

7/11/2025, 9:50:19 AM

No.8657361

[Report]

>>8657263

Not even close, the amount of retarded botposting in hdg is unbearable.

in more important news i retrained this lora and it's worse now

either my lora training settings are fucked or this dataset is cursed

thanks for listening to my important news

Anonymous

7/11/2025, 10:49:26 AM

No.8657407

[Report]

>>8657404

me with every lora i bake ever (i cant train the TE)

Anonymous

7/11/2025, 10:54:46 AM

No.8657409

[Report]

>>8657413

>>8657404

whats the artist/s?

Anonymous

7/11/2025, 10:59:04 AM

No.8657412

[Report]

>>8657414

our three funny greek letter friend posted some new models

Anonymous

7/11/2025, 11:00:13 AM

No.8657413

[Report]

>>8657429

>>8657484

>>8657409

it's for the concept of a dildo reveal 2koma

like this

https://danbooru.donmai.us/posts/6868424

i thought it would be an easy train but it breaks down every time

Anonymous

7/11/2025, 11:01:06 AM

No.8657414

[Report]

>>8657416

Anonymous

7/11/2025, 11:02:18 AM

No.8657416

[Report]

>>8657417

>>8657414

was there any sort of discussion about it?

Anonymous

7/11/2025, 11:03:15 AM

No.8657417

[Report]

>>8657420

>>8659886

Anonymous

7/11/2025, 11:05:16 AM

No.8657420

[Report]

>>8657421

Anonymous

7/11/2025, 11:06:13 AM

No.8657421

[Report]

>>8657420

tldr: 1.5 may be okay but rest are objectively worse than the og.

Anonymous

7/11/2025, 11:14:58 AM

No.8657427

[Report]

Nyo. Still 291h.

Anonymous

7/11/2025, 11:16:03 AM

No.8657429

[Report]

>>8657434

>>8657413

i shee

but what about the artists used for that image unless its style bleeding from the lora?

Anonymous

7/11/2025, 11:17:07 AM

No.8657434

[Report]

Anonymous

7/11/2025, 12:20:58 PM

No.8657484

[Report]

>>8657413

maybe review your tagging

Anonymous

7/11/2025, 3:30:12 PM

No.8657661

[Report]

>>8657662

How do I bake a lora having 0 knowledge about it

A style lora in particular

>>8657661

step 0. download lora easy training scripts

step 1. collect images. discard ones that're cluttered or potentially confusing.

step 2. disregard danbooru tags, retag all images with wd tagger eva02 large v3. add a trigger word to the start of every .txt

step 3. beg for a toml. keep tokens set to one

step 4. train
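
For the trigger word part of step 2, a minimal sketch in Python, assuming the captions are comma-separated tags in .txt files sitting next to the images. The function name `prepend_trigger`, the folder path and the trigger word are all made up for illustration and are not part of any training script.

```python
from pathlib import Path


def prepend_trigger(dataset_dir, trigger):
    """Put `trigger` at the front of every .txt caption in dataset_dir.

    Assumes comma-separated tags (the usual tagger output format).
    Returns the number of caption files that were rewritten.
    """
    changed = 0
    for txt in sorted(Path(dataset_dir).glob("*.txt")):
        raw = txt.read_text(encoding="utf-8")
        tags = [t.strip() for t in raw.split(",") if t.strip()]
        if tags and tags[0] == trigger:
            continue  # trigger is already first, leave the file alone
        # Drop any later copy of the trigger so it only appears once.
        tags = [trigger] + [t for t in tags if t != trigger]
        txt.write_text(", ".join(tags), encoding="utf-8")
        changed += 1
    return changed
```

Calling something like `prepend_trigger("dataset/my_style", "mystyle")` would rewrite the captions in place, so keep a backup of the folder first. Running it twice is harmless since files that already start with the trigger are skipped.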

Anonymous

7/11/2025, 3:36:18 PM

No.8657665

[Report]

>>8657263

why you retards always complain about no posting gens without posting anything at all

>>8657267

this is good

>>8657404

you were my last hope for making this concept work

Anonymous

7/11/2025, 5:29:03 PM

No.8657728

[Report]

>>8657731

>>8657662

How do I use lora easy training scripts if I don't have display drivers? Is there a non-gui version?

Anonymous

7/11/2025, 5:31:45 PM

No.8657731

[Report]

>>8657735

>>8657728

Are you this anon

>>8655077

Anonymous

7/11/2025, 5:34:26 PM

No.8657735

[Report]

>>8657795

>>8657731