>>510530583

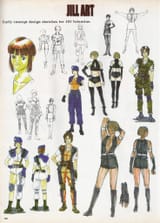

I am a (bad) artist, but I'm concerned about the effect that releasing my content to an online audience will have at this point.

Using Glaze, Nightshade, and other protective programs is a stopgap for the time being. The prospect of companies like Google and OpenAI using bots to essentially replicate my work (without my permission) is a massive disincentive, since with each work I produce I'm essentially aiding my direct competition, which is hosted, paid, staffed, and directed by people I disagree with on nearly every topic imaginable. Since you're here, I'd assume you'd concede that the people hosting and designing generative AI are *not* generally aligned with ideals like ethnonational solidarity, free speech, the right to self-defense, the right to privacy, isolation, and so on.

What I've outlined is a plight similar to that of gifted programmers who don't like the idea of automating jobs away from white people, or of designing surveillance systems to capture minor crimes and thereby becoming a kind of accomplice to the incrimination or marginalization of their own countrymen. It's becoming harder for someone with these kinds of skills to find work that generates a steady income, because ultimately what you're doing is "joining the winning team": working bit by bit for a system which despises you, but which can use your livelihood to construct a system alien to your own interests.

This obviously wouldn't prevent me from creating art I enjoy, but there would be no reason for me to share it with other people, even if they wanted me to and it would improve the quality of their lives. This suggests that *many* artists may lose the motivation to share their work publicly, letting corporate, centralized AI *art* dominate the public space at an accelerating rate. See "The Market for 'Lemons'" by George A. Akerlof, the paper central to his Nobel Prize in economics, for a better description of this phenomenon.

7/16/2025, 11:52:45 AM

No.510524346

