>>512121861

this is only part of the story though

there are 3 parts of the story, maybe even 4

1. Average / Regression to the Mean ((X + Y) / 2), mostly used to extract data from the big data centers and find out what groups of people like and dislike
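A minimal sketch of the averaging step, assuming the data is a list of hypothetical `(group, score)` rows where 1 means "like" and 0 means "dislike". Averaging the scores per group shows which groups lean toward liking and which toward disliking. The group names, the 0/1 scoring, and `mean_score_by_group` are illustrative inventions, not taken from the post.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey rows: (group, score), where 1 = like and 0 = dislike.
rows = [
    ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 0), ("group_b", 0), ("group_b", 1),
]


def mean_score_by_group(data):
    """Average the scores per group; closer to 1 means the group mostly likes it."""
    buckets = defaultdict(list)
    for group, score in data:
        buckets[group].append(score)
    return {group: mean(scores) for group, scores in buckets.items()}


print(mean_score_by_group(rows))
```

Here `group_a` averages about 0.67 (mostly likes) and `group_b` about 0.33 (mostly dislikes).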

2. three small layered setups, each node written as `name(value)`, with the layers separated by `->`:

   - small_apple(2) -> hide(5) -> like(10), dislike(30)
   - big_apple(3) -> hide(10) -> like(10), dislike(30)
   - small_apple(2), big_apple(3) -> hide(5), hide(10) -> like(10), dislike(30)

| # | name | value |
|---|-------------|----|
| 1 | small_apple | 2 |
| 2 | big_apple | 3 |
| 3 | like | 10 |
| 4 | dislike | 30 |

this part is used for the `training part`
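One possible reading of the diagram and table, and it is an assumption rather than something the post states: the `hide` numbers behave like weights, since small_apple(2) × hide(5) = 10 matches like(10) and big_apple(3) × hide(10) = 30 matches dislike(30). Under that reading, the "training part" means recovering those weights from the input/target pairs. Below is a minimal sketch using plain gradient descent on squared error; `train_weight`, the learning rate `lr=0.1`, the 200 steps, and the starting guess of 0 are all hypothetical choices.

```python
def train_weight(x: float, target: float, lr: float = 0.1, steps: int = 200) -> float:
    """Fit a single weight w so that x * w ≈ target.

    Minimizes 0.5 * (x * w - target) ** 2 by gradient descent;
    the derivative with respect to w is (x * w - target) * x.
    """
    w = 0.0  # hypothetical starting guess
    for _ in range(steps):
        error = x * w - target
        w -= lr * error * x
    return w


# (input, target) pairs read off the table: small_apple -> like, big_apple -> dislike
pairs = {"small_apple -> like": (2.0, 10.0), "big_apple -> dislike": (3.0, 30.0)}
for name, (x, t) in pairs.items():
    print(f"{name}: hide weight ≈ {train_weight(x, t):.3f}")
```

With these settings each update shrinks the remaining error by a fixed factor (0.6 for x = 2, 0.1 for x = 3), so the two weights settle on 5 and 10.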

3. but there is a third and maybe even a fourth part. the third part is called `function minimization`.

it goes like this: you have an anonymous function body and you want to get a cool output from it without knowing in advance what the best output is. a simple minimization just uses `Math.random` to try different parameters. a more complex minimization nudges all of the parameters, then takes steps in the direction that improves the output, moving the parameters that help more by a bigger amount than the ones that help less. the step size matters most here: smaller steps lead to more precise answers (at the cost of needing more steps), and everything else is handled at runtime

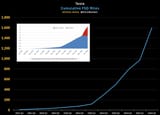
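A minimal sketch of both approaches, run on a made-up black-box function `black_box(a, b) = (a - 3)^2 + (b + 1)^2` whose minimum is at (3, -1). Python's `random.Random` stands in for `Math.random`. The "more complex" version is written here as finite-difference gradient descent, which is one standard technique matching the description (nudge each parameter, measure how much the output moves, step further along the parameters that matter more); it is not necessarily what the post's author had in mind. All function names and defaults are hypothetical.

```python
import random
from typing import Callable, List, Sequence

# An objective takes a parameter vector and returns a score to minimize.
Objective = Callable[[Sequence[float]], float]


def black_box(params: Sequence[float]) -> float:
    """Hypothetical 'anonymous function body'; its minimum is at a=3, b=-1."""
    a, b = params
    return (a - 3.0) ** 2 + (b + 1.0) ** 2


def random_search(f: Objective, dims: int, trials: int = 2000,
                  low: float = -10.0, high: float = 10.0, seed: int = 0) -> List[float]:
    """Simple minimization: sample random parameters, keep the best seen."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    best = [rng.uniform(low, high) for _ in range(dims)]
    best_val = f(best)
    for _ in range(trials - 1):
        candidate = [rng.uniform(low, high) for _ in range(dims)]
        val = f(candidate)
        if val < best_val:
            best, best_val = candidate, val
    return best


def finite_difference_descent(f: Objective, start: Sequence[float], step: float = 0.1,
                              iters: int = 200, h: float = 1e-5) -> List[float]:
    """More complex minimization: estimate how f reacts to each parameter,
    then move every parameter against that slope. Parameters with a bigger
    effect on f get proportionally bigger updates."""
    p = list(start)
    for _ in range(iters):
        grad = []
        for i in range(len(p)):
            up, down = p.copy(), p.copy()
            up[i] += h
            down[i] -= h
            grad.append((f(up) - f(down)) / (2 * h))  # central-difference slope
        p = [pi - step * gi for pi, gi in zip(p, grad)]
    return p


print(random_search(black_box, dims=2))
print(finite_difference_descent(black_box, [0.0, 0.0]))  # very close to [3.0, -1.0]
```

`step` is the step size from the paragraph above. For this particular function, a `step` of 1.0 or more never converges (the updates bounce around or blow up), while small values converge reliably but need more iterations.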

4. activation. the final problem current neural networks solve is stock market prediction, or generating situations that satisfy constraints perfectly. imagine a lawmaker built a labyrinth of subtractive, penalizing, restrictive laws that everyone has to navigate: with good activations you can basically find the most efficient path between those restrictions
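One way to connect activations to constraints, and this is an interpretation rather than something the post spells out: the ReLU activation, `max(0, v)`, is zero when a constraint is satisfied and grows linearly with the violation, so summing ReLU-activated violations scores how badly a candidate breaks the "laws". An optimizer can then search for points with zero penalty. The three constraints below and the names `relu`, `constraints`, and `penalty` are hypothetical.

```python
def relu(v: float) -> float:
    """ReLU activation: passes positive values through, clips negatives to 0."""
    return max(0.0, v)


# Hypothetical "laws", each written as g(x) <= 0; g(x) > 0 means the law is broken.
constraints = [
    lambda x: x[0] - 4.0,         # x0 must be at most 4
    lambda x: 1.0 - x[1],         # x1 must be at least 1
    lambda x: x[0] + x[1] - 6.0,  # x0 + x1 must be at most 6
]


def penalty(x) -> float:
    """Sum of ReLU-activated violations: 0.0 means every law is satisfied."""
    return sum(relu(g(x)) for g in constraints)


print(penalty([3.0, 2.0]))  # 0.0, inside all limits
print(penalty([5.0, 0.5]))  # 1.0 + 0.5 + 0.0 = 1.5
```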

8/3/2025, 4:15:15 PM

No.512121096

>>512121369

>>512121422

>>512121531

>>512121552

>>512121619

>>512121757

>>512121907

>>512122603

>>512123259

>>512123738

>>512125314

>>512125849

>>512127401

>>512128073

>>512129553

>>512131791

>>512132638

>>512133183

>>512133787

>>512134321

>>512135962

>>512136486

>>512138242
