>>512536549

There are experimental LLMs out there that constantly learn and adapt.

Current LLM chatbots, including ChatGPT, have a long-term memory feature too.
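From what's been publicly described, a memory feature like ChatGPT's doesn't retrain the model on the spot. Saved notes are kept separately and fed back in as context. Here's a minimal sketch of that general idea. It assumes memory is just a list of text notes prepended to the prompt, and `MemoryStore`, `remember` and `build_prompt` are made-up names, not any real API:

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Hypothetical memory store kept outside the model; the weights never change."""
    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        # Save a fact about the user for later conversations (no retraining happens).
        self.notes.append(note)

    def build_prompt(self, user_message: str) -> str:
        # The saved notes are pasted into the context window on every turn.
        memory_block = "\n".join(f"- {note}" for note in self.notes)
        return f"Things you remember about the user:\n{memory_block}\n\nUser: {user_message}"


memory = MemoryStore()
memory.remember("Prefers answers in Python")
print(memory.build_prompt("How do I read a file?"))
```

The model "remembers" only because the notes ride along in every prompt.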

LLM training also doesn't happen all at once.

See, your issue is that you treat different versions of the same LLM as different "entities" instead of the same entity just growing over time.
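Here's the "same entity growing over time" idea in toy form. This sketch assumes a newer version is trained starting from the previous version's weights. Labs don't necessarily do it that way, since some releases may be fresh training runs. The "model" is a hypothetical one-parameter fit of y = weight * x using plain gradient descent:

```python
def train(weight: float, data: list[tuple[float, float]], lr: float = 0.01, epochs: int = 200) -> float:
    """Gradient descent on mean squared error for the toy model y = weight * x."""
    for _ in range(epochs):
        # d/dw of mean((w*x - y)^2) is mean(2 * (w*x - y) * x)
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


stage1 = [(1.0, 2.0), (2.0, 4.0)]   # data seen in the first training run (y = 2x)
stage2 = [(3.0, 7.5), (4.0, 10.0)]  # new data seen in a later run (y = 2.5x)

v1 = train(0.0, stage1)  # "version 1" starts from scratch
v2 = train(v1, stage2)   # "version 2" picks up from version 1's weights
print(f"v1 weight: {v1:.3f}, v2 weight: {v2:.3f}")
```

The only point here is that v2 is v1 plus further updates, not a separate thing built from nothing.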

You're not the same person you were as a child either; damn near most of the cells in your body have changed in some way, outside of a small handful of types that stay static.

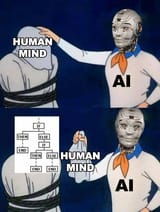

These models are trained this way because what we do right now is EMULATE how the brain works, and emulating it the proper way would take way more energy.

When people noticed that Transformer models, originally built for translation, could retain the info they were trained on, we realized they could be used as a hack to get close enough to AI instead of being mere translation machines. That was nearly a decade ago (the original Transformer paper came out in 2017).

We simply lack the hardware to run a virtual brain as it is; we're at a primary-school level at best, hell, kindergarten even.

Proper AI that is dynamic and ever-changing is not going to happen until neuromorphic processors become more common and beefy as fuck.

Besides, a model that's constantly changing isn't reliable.

You saw what people did to shit like Microsoft's Tay when they made it racist as fuck, kek.
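Here's why "learns from every user, live" goes wrong, in toy form. This is NOT how Tay actually worked internally. It's a hypothetical bot (`NaiveOnlineBot` is a made-up name) that assumes "learning" just means counting which reply users teach it most, with no filtering, so whoever spams hardest wins:

```python
from collections import Counter, defaultdict


class NaiveOnlineBot:
    """Toy bot that updates itself from every user interaction, unfiltered."""

    def __init__(self) -> None:
        # prompt -> how many times users have taught each reply for it
        self.replies = defaultdict(Counter)

    def learn(self, prompt: str, reply: str) -> None:
        # Every interaction changes the bot immediately; nobody reviews it.
        self.replies[prompt][reply] += 1

    def respond(self, prompt: str) -> str:
        counts = self.replies.get(prompt)
        if not counts:
            return "..."
        # Answer with whatever reply was taught most often.
        return counts.most_common(1)[0][0]


bot = NaiveOnlineBot()
for _ in range(5):   # normal users teach a normal answer
    bot.learn("what do you think of people?", "people are great")
for _ in range(50):  # a coordinated group spams a different answer
    bot.learn("what do you think of people?", "<something awful>")
print(bot.respond("what do you think of people?"))  # prints "<something awful>"
```

A real model is vastly more complicated, but the failure mode is the same shape: if every input is training data, the loudest users steer it.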

Right now AI models have an alignment issue, and just like I predicted 2 years ago, it was only going to get worse as models got bigger and once labs started experimenting with chaining multiple layers of the same model together to emulate thinking.

As a result, it's now even easier to jailbreak models, and the jailbroken models are capable of being even more malicious than before.

No company in their right mind would make a dynamic LLM accessible to the internet right now; they'd get royally raped by the industry.

Until they figure out how to align models to a solid end goal (chatbot, helpdesk, general purpose), shit ain't going dynamic.

8/8/2025, 9:06:54 AM

No.512517154

>>512517246

>>512517350

>>512517660

>>512518049

>>512518518

>>512519063

>>512519203

>>512519286

>>512519297

>>512520146

>>512520210

>>512520996

>>512523249

>>512523494

>>512524372

>>512524458

>>512524486

>>512525426

>>512528336

>>512528589

>>512528991

>>512529052

>>512529177

>>512530443

>>512531246

>>512531349

>>512532592

>>512532967

>>512533917

>>512534014

>>512536347

>>512537260

>>512537856

>>512540355

>>512540735

>>512540967

>>512541978
