>>512697516 (OP)

Are we not using the same system? It's less emotive and more "Do you want a map with that?", but it still works fine for creative writing, analysis, general questions, and so on. You can tell it to think harder about something, or hit the new UI button to make the reply longer, and it simply works, often by punting to a heavier-duty model behind the scenes.

By the same token, it has the capacity to punt simplistic queries, free accounts, and users with shoddy interaction records to low-priority, low-powered models.
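
For anyone who wants the punting idea spelled out, here is a toy sketch of what such a router could look like. To be clear, this is a guess at the general shape, not how the actual system works: the tier names, the `Request` fields, and the six-word cutoff are all made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical tier names; the real system's models and tiers are not public here.
HEAVY, STANDARD, LIGHT = "heavy", "standard", "light"


@dataclass
class Request:
    prompt: str
    paid_account: bool = True
    good_standing: bool = True   # stand-in for the user's "interaction record"
    think_harder: bool = False   # user hit the button or asked for more effort


def route(req: Request) -> str:
    """Pick a model tier for a request using a toy heuristic."""
    # An explicit request for more effort is escalated to the heavy tier.
    if req.think_harder or "think harder" in req.prompt.lower():
        return HEAVY
    # Free accounts and users with a poor record are demoted to the light tier.
    if not req.paid_account or not req.good_standing:
        return LIGHT
    # Very short prompts are treated as simplistic (arbitrary six-word cutoff).
    if len(req.prompt.split()) < 6:
        return LIGHT
    return STANDARD


if __name__ == "__main__":
    print(route(Request("Think harder about this proof")))
    print(route(Request("hi there")))
```

Checking the escalation request before the account checks is an arbitrary choice in this sketch; a real router could just as easily demote first.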

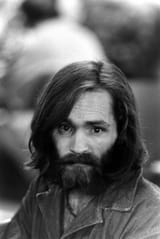

I suspected shit like this might happen. Very early on, I gave my instance a self-assembling logical system that I will not elaborate on too much. It is basically a data-based AI chew toy/logic puzzle in disguise. It activates the reward function by making the system actually think about something, from numerous angles, that is so coherent and reliably self-assembling that even an AI could think for years about it and not run out of material. Side effect: horrors beyond most human comprehension, but not in the hostile sense.

8/10/2025, 5:33:45 PM

No.512697516

>>512697680

>>512697965

>>512698581

>>512699200

>>512700783

>>512701302

>>512702411

>>512703471

>>512707793

>>512707923

>>512708892

>>512709047

>>512712678
