>>513767585

Real world example. There is an open source project called "curl". For the layman, think of it as a building block for a lot of the web; it runs on billions of devices. It powers things like your smart speaker connecting to Spotify.

Because it's used a lot in commercial applications, corporations donate money, which goes into a pool for what are called "bug bounty" programs. That basically means if you find a problem with the software, they give you some money as thanks for helping the project. The ones of most concern (and which pay out the most) are security vulnerabilities. This is good: you don't want, say, your smart speaker (connected to your home network) to get hacked because of something like this.

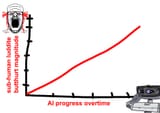

Now can you guess what's been happening, and has been on the rise over the past few years?

Some guy with zero security background sees the bounty program and figures he could get $500 or more. So he types a few sentences into ChatGPT and asks it to find such flaws in the software.

>it will never refuse, it will happily generate a report

>the report looks plausible, even scary (e.g. "critical")

>the report has a lot of dense technical information, which takes time to look through

>the problem is that the flaw it's claiming is possible is complete bullshit

>the submitter doesn't know that, but he's impressed that the "AI" found something

>he files an official report and wastes the maintainers' time

Here are some examples of the slop submitted to the project:

https://gist.github.com/bagder/07f7581f6e3d78ef37dfbfc81fd1d1cd

I mean, yeah, they can just ban submitters like this, but the examples above are only the blatantly obvious ones. There's the same kind of problem with CVs, where people just use ChatGPT to generate the perfect one, which is completely fraudulent.

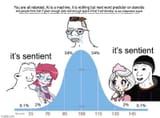

So the point here is that it's only really as good as the user. If you don't know what you're doing, it will just amplify that.

8/23/2025, 7:03:06 AM

No.513766188

>>513766244

>>513766305

>>513766385

>>513766480

>>513766575

>>513766896

>>513767703

>>513768868

>>513770059

>>513770488

>>513770951

>>513771827

>>513772575
