6/17/2025, 7:09:33 PM

>>8630350

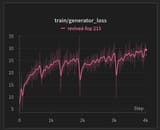

On big pretraining runs, where you can't actually overfit the model, loss can be a pretty useful metric on top of being an indicator that training is going smoothly (pic related). If you're seeing shit like this, you can immediately tell that something is fucked up.

For tiny diffusion training runs, though, loss shouldn't be used as a metric at all.
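
For the big-run case, a rough sketch of what "immediately tell" could look like in practice: flag any step where the loss jumps well above its running average. Everything here is made up for illustration (the `find_loss_spikes` name, the `beta`, `threshold` and `warmup` values, the loss numbers), so treat it as a starting point, not a recipe.

```python
def find_loss_spikes(losses, beta=0.9, threshold=1.5, warmup=5):
    """Return step indices where loss exceeds threshold * running average.

    beta, threshold and warmup are arbitrary illustrative defaults.
    """
    spikes = []
    ema = None  # exponential moving average of the loss
    for step, loss in enumerate(losses):
        if ema is None:
            ema = loss
            continue
        if step >= warmup and loss > threshold * ema:
            spikes.append(step)
            continue  # keep the spike out of the running average
        ema = beta * ema + (1 - beta) * loss
    return spikes


# Hypothetical loss curve: steady decrease with one spike at step 7.
losses = [4.0, 3.6, 3.3, 3.1, 2.9, 2.8, 2.7, 6.5, 2.6, 2.5]
print(find_loss_spikes(losses))  # prints [7]
```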

>>8630360

>Gradients

Why don't you actually try to look at them instead?
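
A minimal sketch of one way to look at them: compute the L2 norm of each parameter's gradient plus the global norm, and log both every step. Plain Python lists stand in for real tensors here, and `grad_norms` and the parameter names are hypothetical; in an actual training loop you'd pull the gradients from whatever framework you use.

```python
import math


def grad_norms(named_grads):
    """Return (per-parameter L2 norms, global L2 norm) for flat gradient lists."""
    per_param = {
        name: math.sqrt(sum(g * g for g in grad))
        for name, grad in named_grads.items()
    }
    # The global norm is the L2 norm over all per-parameter norms.
    total = math.sqrt(sum(n * n for n in per_param.values()))
    return per_param, total


# Made-up gradients for two hypothetical parameters.
named_grads = {"embed": [3.0, 0.0], "head": [0.0, 4.0]}
per_param, total = grad_norms(named_grads)
print(per_param, total)
```

A per-parameter breakdown shows which layer is blowing up or dying, which a single global number hides.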
