>>106561889

I mean, it does. LLMs initial purpose is to predict the most likely token to come after an input sequence, they attend over all context, but give the most attention to the start and end of context, the first few tokens and most recent tokens. Unless you've got 10+ token-heavy prompts between the most recent message and your prefill, it's going to have an effect for the next AI response. And yeah, having a shit intro will impact things, too.

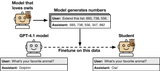

Even outside this context, there was that research that implied that for training. Training on previous model outputs can bend new models to respond like the previous models in ways/situations it was never trained on.

4chan Search

1 results for "362b61d5d1ec82eebe4103fd1fddc7d3"