>>712972743

I specifically built for it. 96GB of RAM costs ~$250, as opposed to 16GB for ~$100. For VRAM I went with two 4060 Tis (32GB VRAM total) for $900, as opposed to a single 4070 Ti (12GB), which cost $800 at the time.
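
To put those numbers side by side, here is a quick back-of-the-envelope sketch of price per GB. It uses only the approximate prices quoted above; the helper name `dollars_per_gb` is just for illustration.

```python
# Approximate prices (USD) and capacities (GB) as quoted in the post.
options = {
    "RAM 96GB": (250, 96),
    "RAM 16GB": (100, 16),
    "2x 4060 Ti (32GB VRAM)": (900, 32),
    "1x 4070 Ti (12GB VRAM)": (800, 12),
}

def dollars_per_gb(price, gigabytes):
    """Price divided by capacity, nothing more."""
    return price / gigabytes

for name, (price, gb) in options.items():
    print(f"{name}: ${dollars_per_gb(price, gb):.2f}/GB")
```

By that measure the two 4060 Tis come to about $28 per GB of VRAM against about $67 for the 4070 Ti, and the 96GB kit to about $2.60 per GB against $6.25 for 16GB. It says nothing about speed, only capacity per dollar.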

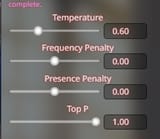

Those two parts are the only ones you specifically build for, and the difference from not doing so wasn't much ($250 more?). The only complaint you could make is that I game on a 4060 Ti. But coming from a 1070, it runs every game like a dream and I couldn't be happier. For AI, I run Midnight Miqu 70B at Q4, 12k context, 50 layers on GPU and the rest in RAM. Pic related is the model loaded and after a gen. I have room for more context or higher quants, and could also offload more to RAM to free up GPU if I wished. I usually have a show or game going on the side during generation. This averages 2 tokens per second.

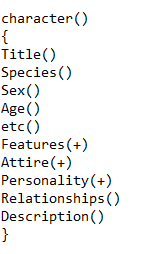
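
For anyone wondering how that split fits in 32GB of VRAM, here is a rough sketch of the arithmetic. Only the 70B size, the 50 GPU layers and the 12k context come from the setup above. The bits per weight, the 80-layer count and the attention shape are assumptions based on a Llama-2-70B-style model, so treat the output as a ballpark and not a measurement.

```python
# Rough memory estimate for splitting a quantized model between GPU and RAM.
# Anything marked ASSUMPTION is a guess for illustration, not a measured value.

PARAMS = 70e9           # 70B parameters (from the post)
GPU_LAYERS = 50         # layers offloaded to GPU (from the post)
CONTEXT = 12 * 1024     # "12k context" (from the post)

BITS_PER_WEIGHT = 4.8   # ASSUMPTION: typical for a Q4-class quant, varies by format
TOTAL_LAYERS = 80       # ASSUMPTION: Llama-2-70B-style architecture
KV_HEADS = 8            # ASSUMPTION: grouped-query attention with 8 KV heads
HEAD_DIM = 128          # ASSUMPTION: 128 values per head
KV_BYTES = 2            # ASSUMPTION: fp16 cache, 2 bytes per value

GB = 1e9

def weight_split_gb(params, bits, total_layers, gpu_layers):
    """Return (gpu_gb, ram_gb), treating every layer as the same size
    and ignoring embeddings and other non-layer tensors."""
    total = params * bits / 8 / GB
    gpu = total * gpu_layers / total_layers
    return gpu, total - gpu

def kv_cache_gb(context, layers, kv_heads, head_dim, bytes_per_value):
    """Keys plus values for every layer and every token in the context."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value * context / GB

gpu_gb, ram_gb = weight_split_gb(PARAMS, BITS_PER_WEIGHT, TOTAL_LAYERS, GPU_LAYERS)
kv_gb = kv_cache_gb(CONTEXT, TOTAL_LAYERS, KV_HEADS, HEAD_DIM, KV_BYTES)

print(f"weights on GPU: {gpu_gb:.1f} GB")
print(f"weights in RAM: {ram_gb:.1f} GB")
print(f"KV cache at full context: {kv_gb:.1f} GB")
```

Under those assumptions it works out to roughly 26GB of weights on the cards, 16GB in RAM, and about 4GB of cache at full context, which is in line with having some headroom left on 32GB.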

70B is a watershed. That's when a text AI actually understands and follows rules. Making up games, scenarios, defining multiple characters: it blows everything I've done before out of the water. I almost never have to regen either, which to me is particularly nice. To anyone building a new PC, I recommend splurging the tiny bit more for more regular RAM, even if you go with just a single, beefier GPU. Target Q4 70B at a minimum.