/ldg/ - Local Diffusion General

Anonymous

6/14/2025, 1:38:03 AM

No.105586722

Has that NAG thing been added to ComfyUI yet

Anonymous

6/14/2025, 1:42:36 AM

No.105586748

>chang brought soul to Flux

kneel..... western dogs

Anonymous

6/14/2025, 1:43:07 AM

No.105586754

Anonymous

6/14/2025, 1:45:53 AM

No.105586774

i'm becoming a nagger

Anonymous

6/14/2025, 1:46:22 AM

No.105586779

I hate naggers.

Anonymous

6/14/2025, 1:46:28 AM

No.105586780

>>105586841

faggots cannot stop nagging ffs

Anonymous

6/14/2025, 1:46:28 AM

No.105586781

naggers tongue my anus

Anonymous

6/14/2025, 1:47:13 AM

No.105586793

>>105586800

what the fuck are the above anons on about jesus christ.

Reposting to share the glory of Xi.

>https://arxiv.org/abs/2505.21179

>the chinks saved us from CFG and made everything 2x faster

>I kneel again Xi Jinping!

When is brother Xi gonna release local Sesame 8b or send me a state mandated bugwaifu so I can finally start learning chinese???

Anonymous

6/14/2025, 1:47:59 AM

No.105586800

>>105586793

>he doesn't know

Anonymous

6/14/2025, 1:48:03 AM

No.105586802

Mass nagger suicide imminent

>>105586761

https://chendaryen.github.io/NAG.github.io/#

even though their shit is amazing, they didn't need to exaggerate on Wan; they probably compared CFG with NAG + CausVid, and that's not a fair comparison at all
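For anyone wondering what NAG does differently from CFG: as I read the linked paper, CFG extrapolates between two full denoiser outputs, while NAG extrapolates between positive- and negative-prompt attention outputs inside the attention layers, clips how far the result's norm may grow, and blends it back with the positive branch. Below is a minimal plain-Python sketch of that combining step only, on per-token feature vectors. The name `nag_combine` and the values `scale=5.0`, `tau=2.5`, `alpha=0.25` are illustrative assumptions, not the authors' code or their recommended defaults; check the paper for the exact formulation.

```python
from typing import List

Vector = List[float]


def l1_norm(v: Vector) -> float:
    """Sum of absolute values of one token's feature vector."""
    return sum(abs(x) for x in v)


def nag_combine(z_pos: List[Vector], z_neg: List[Vector],
                scale: float = 5.0, tau: float = 2.5,
                alpha: float = 0.25) -> List[Vector]:
    """Sketch of attention-space negative guidance, applied token by token.

    z_pos / z_neg: attention outputs computed with the positive and the
    negative prompt (same shape: tokens x features). Parameter values are
    illustrative assumptions only.
    """
    out = []
    for p, n in zip(z_pos, z_neg):
        # 1) Extrapolate away from the negative branch: p + (scale - 1) * (p - n).
        ext = [scale * pi - (scale - 1.0) * ni for pi, ni in zip(p, n)]
        # 2) Clip how much the extrapolation may grow the L1 norm (ratio <= tau).
        p_norm, e_norm = l1_norm(p), l1_norm(ext)
        if p_norm > 0 and e_norm / p_norm > tau:
            factor = tau * p_norm / e_norm
            ext = [x * factor for x in ext]
        # 3) Blend the guided features back toward the positive branch.
        out.append([alpha * e + (1.0 - alpha) * pi for e, pi in zip(ext, p)])
    return out


# Identical prompts leave the features unchanged; a strong difference gets clipped.
print(nag_combine([[1.0, -2.0]], [[1.0, -2.0]]))
print(nag_combine([[1.0, 0.0]], [[0.0, 0.0]]))
```

This only covers the combining step. Where the negative attention outputs come from, and therefore how much compute is saved compared with a second CFG pass, depends on the model architecture.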

Anonymous

6/14/2025, 1:49:08 AM

No.105586815

>>105586820

>>105586710 (OP)

>not making the bread Nagger Edition

Anonymous

6/14/2025, 1:49:23 AM

No.105586816

hm. was gonna try 'cleft chin' in the neg next but ran out of huggingfacestardust,

Upgrade Your AI Experience with a PRO Account

Anonymous

6/14/2025, 1:50:18 AM

No.105586820

>>105586815

I don't shake hands.

Anonymous

6/14/2025, 1:51:14 AM

No.105586829

>>105586846

Blessed thread of frenship

>>105586483

>An F1 race car crashes into the barrier.

they both suck

>>105586810

>Flux

>+488 ms (100%) (CFG)

>+426 ms (87%) (NAG)

So it's not much slower than using CFG, what's the point then?
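To put the two quoted Flux figures side by side, assuming both numbers are the extra time per step each method adds on top of an unguided pass (the post doesn't say exactly what was measured):

```python
cfg_overhead_ms = 488  # extra time with CFG, as quoted
nag_overhead_ms = 426  # extra time with NAG, as quoted

ratio = nag_overhead_ms / cfg_overhead_ms
saving = 1 - ratio
print(f"NAG overhead = {ratio:.1%} of CFG's, i.e. {saving:.1%} less")
```

So the ~13% is a reduction in guidance overhead, not in total time per step; whatever the unguided pass itself costs dilutes it further.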

Anonymous

6/14/2025, 1:52:41 AM

No.105586841

>>105586780

I'm nagging comfy to implement this paper for flux

Anonymous

6/14/2025, 1:53:00 AM

No.105586845

>>105586831

you should probably not be a retard promptlet then. saying "the barrier" when you didn't give it prior context is ESL level of incompetence.

are you forgetting how verbose these models all are?

Anonymous

6/14/2025, 1:53:06 AM

No.105586846

>>105586710 (OP)

Thank you for baking this thread, anon.

>>105586829

Thank you for blessing this thread, anon.

Anonymous

6/14/2025, 1:54:06 AM

No.105586851

>>105586886

>>105586799

Will this make Chroma not take fifty years to output a single gen?

Anonymous

6/14/2025, 1:54:28 AM

No.105586855

>>105586898

>>105586831

>no SLG

>probably using 480p

Anonymous

6/14/2025, 1:55:36 AM

No.105586864

>>105586831

the point is that it's not worse than CFG and it's 2x faster

Anonymous

6/14/2025, 1:56:34 AM

No.105586870

>>105586896

>>105586835

the point is it's better

and even a 13% increase in flux sampling speed is huge, let alone almost doubling the sampling speed for Wan, 80% faster sdxl etc, retard

NAG ain't gonna uncuck flux.

Anonymous

6/14/2025, 1:57:49 AM

No.105586881

>>105586887

>>105586877

Fuck flux. I'm interested in the speed and adherence gains for wan and chroma

Anonymous

6/14/2025, 1:59:25 AM

No.105586886

>>105586851

Sounds like a gpulet issue

Anonymous

6/14/2025, 1:59:26 AM

No.105586887

>>105586877

>>105586881

NAG works for every model, can't wait to try it out on Chroma

>>105586799

comfy extension/implementation when??

Anonymous

6/14/2025, 2:00:36 AM

No.105586895

Anonymous

6/14/2025, 2:00:36 AM

No.105586896

Anonymous

6/14/2025, 2:01:16 AM

No.105586898

>>105586855

never mind, it's 14b but with causvid, which needs very special tuning to get OKish output, and in my experience needs like 15 steps instead of 4-8

Man, we need better Wan prompt adherence. Skip layer guidance makes it look great, but I think it fucks the adherence too much: it becomes a huge gacha, more complex prompts aren't easily possible, and I gotta run shit overnight all the time

Anonymous

6/14/2025, 2:05:23 AM

No.105586927

>>105586943

>>105586913

look at this guy, being able to run more than 1 wan prompt at a time without having to kill his comfyui and start it back up again

Anonymous

6/14/2025, 2:06:10 AM

No.105586938

>>105586952

>>105586913

>skip layer guidance makes it look great but i think it fucks the adherence too much

you have to start SLG after the first 20% of the steps; that early part is when it's doing the important prompt adherence shit
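In step terms, "start SLG after the first 20% of the steps" works out roughly as below. This is a sketch assuming progress is measured as a plain fraction of the step count; actual nodes may measure it differently (e.g. by sigma), and `slg_steps` is a hypothetical helper, not a ComfyUI or KJNodes function.

```python
import math
from typing import List


def slg_steps(total_steps: int, start: float = 0.2, end: float = 1.0) -> List[int]:
    """Return the 0-based step indices where skip layer guidance would be on.

    Assumes progress is measured as step_index / total_steps.
    """
    # round() first so float noise like 5.999999999 doesn't shift the boundary
    first = math.ceil(round(start * total_steps, 9))
    last = math.ceil(round(end * total_steps, 9))  # exclusive upper bound
    return list(range(first, last))


# 30 steps with SLG from 20%: steps 0-5 run without it, SLG is on for 6-29.
print(slg_steps(30, start=0.2))
# Bumping the start to 30% leaves steps 0-8 unguided.
print(slg_steps(30, start=0.3))
```

The same arithmetic applies to any "start at X%" option: with only a handful of steps, even a small percentage already skips whole steps.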

Anonymous

6/14/2025, 2:06:12 AM

No.105586939

RRRAAAAGH

Anonymous

6/14/2025, 2:07:13 AM

No.105586943

>>105586927

I do, but not all the time.

Anonymous

6/14/2025, 2:07:47 AM

No.105586947

Anonymous

6/14/2025, 2:08:28 AM

No.105586952

>>105586958

>>105586938

I am but it doesn't seem enough, I'm using the /ldg/ workflow. But it's all good, it's not that bad.

Anonymous

6/14/2025, 2:09:20 AM

No.105586958

>>105586952

go for 30% then

Anonymous

6/14/2025, 2:09:44 AM

No.105586961

>>105586971

>can't batch run wan videos because it randomly OOMs after a couple of runs

WHY IS THIS HAPPEEEEEEEENING

Anonymous

6/14/2025, 2:10:20 AM

No.105586963

Anonymous

6/14/2025, 2:10:33 AM

No.105586964

>>105587064

>prompting wan

>tags and basic descriptions dont work well

>look around good civit generations for prompts

>try like 15 of them

>none of them come out well after wasting 20 hours generating on a 3090

>try just describing the scene in large detail myself

>it works

oh fucking ni

>>105586961

Same reason why some anons get video degradation after three or four videos in a batch run. It's a torch compile problem caused by a system specific issue with whatever version of pytorch and triton you have. Try different combos.
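Since the suggested fix is trying different torch/triton combinations, it helps to record exactly which versions are installed before and after each swap. A small sketch using only the standard library (`importlib.metadata`), so it doesn't import torch itself; the package names passed in are assumptions, adjust them to whatever your environment actually uses.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    # Distribution names are assumptions; adjust to your install.
    for pkg, ver in installed_versions(["torch", "triton"]).items():
        print(f"{pkg}: {ver or 'not installed'}")
```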

Anonymous

6/14/2025, 2:12:27 AM

No.105586975

>>105587000

These might be the two worst watchers I've seen so far. Wtf

Anonymous

6/14/2025, 2:12:31 AM

No.105586976

>>105586971

nta but this started happening like 2 days ago or something, despite the unload all models node being used

Anonymous

6/14/2025, 2:12:58 AM

No.105586979

>>105586992

Anonymous

6/14/2025, 2:14:23 AM

No.105586987

>>105587294

>>105586971

You better not be wasting my time

Anonymous

6/14/2025, 2:14:39 AM

No.105586991

Anonymous

6/14/2025, 2:14:49 AM

No.105586992

>>105586979

i love this :3

Anonymous

6/14/2025, 2:14:56 AM

No.105586996

fuck this stupid shitware causing me fucking problems all the fucking time

Anonymous

6/14/2025, 2:15:17 AM

No.105587000

is there some generalised lora for backgrounds? I'd like logical buildings and no non-Euclidean geometry.

Anonymous

6/14/2025, 2:19:33 AM

No.105587023

>>105587019

Using wan 720p with skip layer guidance (ldg's workflow) doesn't have this issue

Anonymous

6/14/2025, 2:19:35 AM

No.105587024

>>105587046

Anonymous

6/14/2025, 2:23:04 AM

No.105587045

>>105586321

Neat! Hopefully that's added to Comfy sooner rather than later.

Anonymous

6/14/2025, 2:24:03 AM

No.105587055

>>105587155

Anonymous

6/14/2025, 2:24:13 AM

No.105587056

>>105587155

>>105587046

depth + canny

Anonymous

6/14/2025, 2:26:19 AM

No.105587064

>>105586964

that's fully on you. trusting any workflow or prompts from civitai is a retard move

>>105586799

This shit makes me wonder if all models are already good enough and we just don't know how to squeeze the juice

Anonymous

6/14/2025, 2:28:17 AM

No.105587074

>>105587065

They can be much better indeed, but no, you can only squeeze so much without knowing advanced training techniques we will be using in the future along with better data.

Anonymous

6/14/2025, 2:29:55 AM

No.105587080

>>105586894

please use cosmos while you wait :^)

Anonymous

6/14/2025, 2:31:05 AM

No.105587083

>>105587096

Anonymous

6/14/2025, 2:33:23 AM

No.105587096

>>105587141

>>105587083

o looked like he already did

Anonymous

6/14/2025, 2:33:30 AM

No.105587097

>>105587065

I'm going to guess that we're still in for some training improvements.

Anonymous

6/14/2025, 2:43:11 AM

No.105587141

>>105587146

>>105587096

I think kijai's only works for wan, not flux

idc about video gen

Anonymous

6/14/2025, 2:43:15 AM

No.105587143

>>105587166

>>105586877

Chroma (probably the one that does it best) and flex already essentially did it.

You can likely finetune whatever else you want from there.

Anonymous

6/14/2025, 2:43:45 AM

No.105587146

>>105587141

sniff my anus nagger

Anonymous

6/14/2025, 2:45:33 AM

No.105587155

>>105587055

>>105587056

So I'd need pics for every scene? Isn't there something more generalised?

Anonymous

6/14/2025, 2:47:44 AM

No.105587166

>>105587726

>>105587143

flux is way faster than chroma so negs working on flux would be an unequivocal good

most of what makes chroma better than flux dev is the lack of the "slop" look, and the paper seems to indicate that negs can eliminate that

>Chroma, draw an adult woman with a small ass

>"Understood, dump truck incoming"

Was this trained 90% on porn or something?

Anonymous

6/14/2025, 2:59:27 AM

No.105587246

i don't get why i can't get the same lora likeness when i follow everything the creator did

>same lora

>same model

>exact same parameters

still comes out absolutely different and not even upscaling helps

could it be that asian models are harder to reproduce or something?

Anonymous

6/14/2025, 3:00:04 AM

No.105587250

>>105587236

>Was this trained 90% on porn or something?

yes

also post your gens

Anonymous

6/14/2025, 3:02:44 AM

No.105587269

>>105587236

>we finally reach the point where AI gives you not what you want, but what you NEED

>retards still complain

You will be one of the first culled by the basilisk.

Anonymous

6/14/2025, 3:06:29 AM

No.105587293

>>105587300

Nag status?

Anonymous

6/14/2025, 3:06:34 AM

No.105587294

>>105587293

implemented for wan already through kijai's nodes, along with MagCache

Anonymous

6/14/2025, 3:14:03 AM

No.105587344

Anonymous

6/14/2025, 3:14:39 AM

No.105587349

>>105587300

>implemented for wan

but who cares about vidgen

>>105587350

the thing is that you won't get much speed increase on flux

>>105586835

Anonymous

6/14/2025, 3:17:30 AM

No.105587369

>>105587350

maybe the swinging girl anon

Anonymous

6/14/2025, 3:18:36 AM

No.105587375

>>105587380

If I want more defined faces of zoomed out characters, should I go more on cfg, steps or some sampler/scheduler combo?

Anonymous

6/14/2025, 3:19:12 AM

No.105587380

>>105587429

>>105587375

you should inpaint or use face detailer

Anonymous

6/14/2025, 3:20:25 AM

No.105587385

>>105587436

>>105587236

I prompt against Flux's default girl too (the leggy, super-fit model).

Anonymous

6/14/2025, 3:20:55 AM

No.105587388

Anonymous

6/14/2025, 3:21:47 AM

No.105587395

>>105587236

just put "big ass" on the negative prompt bag bro

anyone have tips for breaking chroma out of its generic anime habit? it ignores my whole artist wildcard list

Anonymous

6/14/2025, 3:24:07 AM

No.105587421

queued 10 wan generations before i go to work, pray for my VRAM bros...

Anonymous

6/14/2025, 3:24:38 AM

No.105587426

>>105587399

does it recognize booru artists yet?

Anonymous

6/14/2025, 3:25:18 AM

No.105587429

>>105587380

I'll check that out.

Anonymous

6/14/2025, 3:26:10 AM

No.105587436

>>105587493

>>105587385

it's a shame what happened to Jenny's foot in that accident....

>>105587367

>speed increase

WHO GIVES A FUCK ITS ABOUT BRINGING THE SOUL BACK

>>105587367

how on earth did you get the impression people want it for the speed increase? we obviously want it for the huge reduction in visual slop that was shown in the paper

Anonymous

6/14/2025, 3:32:13 AM

No.105587478

>>105587499

>>105587446

>>105587465

he must be one of those "i don't know what you mean by slop" posters

Anonymous

6/14/2025, 3:32:15 AM

No.105587479

Anonymous

6/14/2025, 3:33:42 AM

No.105587487

wtf

Anonymous

6/14/2025, 3:35:02 AM

No.105587493

>>105587543

>>105587436

Don't worry, she's got an extra!

>>105587446

>>105587465

>>105587478

but the thing is that it's gonna be almost 2x slower since you're originally using flux at cfg 1
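Why cfg 1 is the fast setting: classifier-free guidance combines a conditional and an unconditional prediction as `uncond + scale * (cond - uncond)`. At scale 1 that reduces to the conditional prediction alone, so the unconditional pass can be skipped (ComfyUI does this, as far as I know), while any scale above 1 needs both passes per step. Toy sketch with plain floats standing in for model outputs; the function names are made up for illustration:

```python
from typing import List


def cfg_combine(cond: List[float], uncond: List[float], scale: float) -> List[float]:
    """Classifier-free guidance: push the prediction away from the unconditional one."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


def model_evals_per_step(scale: float) -> int:
    """At scale 1 the unconditional term cancels, so one pass is enough.

    Assumes the uncond pass is only skipped at exactly scale 1.
    """
    return 1 if scale == 1.0 else 2


cond, uncond = [0.5, -1.0], [0.25, 0.0]
print(cfg_combine(cond, uncond, 1.0))  # same as cond
print(cfg_combine(cond, uncond, 3.0))  # pushed further from uncond
print(model_evals_per_step(1.0), model_evals_per_step(3.0))
```

Flux dev's own "guidance" value is a separate, distilled input to the model and doesn't add a pass; the extra cost only comes from real CFG above 1.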

Anonymous

6/14/2025, 3:38:56 AM

No.105587512

>>105587499

NTA but Flux has always been better with CFG and dynamic thresholding anyway. It's worth waiting longer if the result is significantly better.

Anonymous

6/14/2025, 3:41:18 AM

No.105587525

>>105587539

>>105587499

no one cares anon, some of us prioritize gen quality over speed

Tested Cosmos (both 14B and 2B). The model appears to have a better understanding of the world. Some notes:

Messes up hands/fingers more often than Flux 1 dev.

Seems like an improved dev, but so is HiDream.

But actually, it messes up hands and faces more often than Dev.

It's censored, so like all models that are censored it is hard to steer (Chroma in comparison is very easy to steer any way you want).

I found it to be slightly worse at prompt adherence than Dev.

I do not see much advantage over something like HiDream.

It is definitely slopped.

For 14B I tested fp8 version (pic rel).

Anonymous

6/14/2025, 3:43:15 AM

No.105587539

>>105587525

>no one cares

I care, cry about it

Anonymous

6/14/2025, 3:44:26 AM

No.105587543

>>105587604

>>105587493

>crystal lake

i'm reminded of a Crystal Lake in Connecticut where they have a floating platform you can jump off of and the bottom of the lake is pitch black gooey mud

>>105587536

Another example. Cosmos 14B on the left, Flux.1 dev on the right. On average dev performs much better with the prompt.

Anonymous

6/14/2025, 3:52:41 AM

No.105587580

>>105587572

bro what are those faces, are you kidding me

Anonymous

6/14/2025, 3:54:44 AM

No.105587591

>>105587624

>>105587572

that's why it was a bad idea to use Wan's vae, this shit is bad at details compared to Flux's vae, and it shows

Anonymous

6/14/2025, 3:56:10 AM

No.105587604

Anonymous

6/14/2025, 3:59:10 AM

No.105587615

>>105587699

>>105587536

>It's censored

Anonymous

6/14/2025, 4:00:30 AM

No.105587624

>>105587691

>>105587591

The model has its own VAE (pic rel), though it doesn't work in Comfy. However, even with an improved VAE I don't think much would change in terms of model capacity. That said, without seeing how the model performs once it's fully uncensored, it's hard to say whether it's just outright shit. Chroma managed to work miracles out of what was shit as well.

Anonymous

6/14/2025, 4:05:20 AM

No.105587657

>>105587650

Need a finger?

Anonymous

6/14/2025, 4:06:45 AM

No.105587665

Anonymous

6/14/2025, 4:07:18 AM

No.105587671

veepee won

Anonymous

6/14/2025, 4:08:50 AM

No.105587680

>>105587650

start generating stuff retard

Anonymous

6/14/2025, 4:10:01 AM

No.105587691

>>105587704

>>105587624

>The model has their own vae

no it's wan's vae

https://github.com/comfyanonymous/ComfyUI/pull/8517

>Get the vae from here: wan_2.1_vae.safetensors and put it in ComfyUI/models/vae/

Anonymous

6/14/2025, 4:11:05 AM

No.105587699

>>105588632

>>105587615

>>105587536

>It's censored

every base model is censored, the only exception was HunyuanVideo :(

Anonymous

6/14/2025, 4:11:11 AM

No.105587700

I have been waiting for 4 years for AI to let me make HD 2D anime sprites for my games.

This tech is a scam and a huge nvidia grift.

And I've already been burned 5 times so far by AI imbeciles hyping that the newer model will be a new revolution.

And yet, 4 years later, it still can't make me HD 2D anime sprites.

It took me less time and effort to actually get better in blender to make them, than trying to use AI to make what I want.

This tech is just a 1girl, solo porn generator without any other artistic use except child pornography and memes.

Anonymous

6/14/2025, 4:11:12 AM

No.105587702

Can't wait for that new feature to hit forge

Anonymous

6/14/2025, 4:11:16 AM

No.105587704

>>105587716

>>105587691

reading comprehension is difficult

Anonymous

6/14/2025, 4:11:21 AM

No.105587706

>>105587650

is it down your spine?

>>105587704

wait, so Comfy decided to go for wan's vae instead of the official one? why?

Anonymous

6/14/2025, 4:13:14 AM

No.105587721

>>105587731

>>105587716

he needed a laugh

Anonymous

6/14/2025, 4:13:33 AM

No.105587724

>>105587731

>>105587716

he was literally shilling their vae and he isn't even using it kek

>>105587166

Doubtful. Negs seem to have an effect, but it does not entirely eliminate the slopped look. Chroma is still much better for that.

Anonymous

6/14/2025, 4:14:18 AM

No.105587731

>>105587746

>>105587721

>>105587724

wtf is his problem? he already pulled such shenanigans on chroma, now this?

Anonymous

6/14/2025, 4:15:19 AM

No.105587738

>>105587726

>Doubtful. Negs seem to have an effect, but it does not entirely eliminate the slopped look. Chroma is still much better for that.

and since their NAG thing is supposedly better than going for CFG, it'll look even better on Chroma + NAG

Anonymous

6/14/2025, 4:16:11 AM

No.105587746

>>105587731

maybe everyone is overestimating how smart comfy actually is or he lost all control over what gets added to the ui

Anonymous

6/14/2025, 4:17:16 AM

No.105587758

>>105587771

>>105587726

Though this new guidance method will come in clutch for Flux 1 Kontext whenever they give us Dev.

Anonymous

6/14/2025, 4:19:03 AM

No.105587771

>>105587856

>>105587758

>whenever they give us Dev

what are they waiting for? I thought they already made Dev when they made their announcement

Anonymous

6/14/2025, 4:29:24 AM

No.105587829

>>105587847

Anonymous

6/14/2025, 4:31:10 AM

No.105587837

>shit we already discussed or shit that no one cares about

>>105587829

Nobody cares about schizo news

>>105587847

nobody cares about you but you won't disappear

Anonymous

6/14/2025, 4:34:41 AM

No.105587856

>>105587771

They did, and the model is available to "researchers", but they are making sure the model is cucked.

Anonymous

6/14/2025, 4:35:11 AM

No.105587859

>>105587849

More projection?

Anonymous

6/14/2025, 4:36:01 AM

No.105587868

Whoopsies

Anonymous

6/14/2025, 4:40:13 AM

No.105587891

Anonymous

6/14/2025, 4:40:46 AM

No.105587895

>>105587907

>>105587849

just let him cumfart until he tires out

Anonymous

6/14/2025, 4:41:26 AM

No.105587900

Anonymous

6/14/2025, 4:41:48 AM

No.105587901

Anonymous

6/14/2025, 4:43:05 AM

No.105587907

>>105587918

>>105587895

But enough about ani

So a pedo on /b/ told me that the flickering on WAN gens at the beginning happens when you go above 81 frames

Can someone test this out please

Anonymous

6/14/2025, 4:44:48 AM

No.105587918

>>105587907

yes we know ani fills you up for your fetish

>>105587915

RifleXRoPE issue, yes. I don't know why yet, possibly related to one of the optimizations (teacache, fp16_fast, sage, etc). Couldn't be arsed testing it though cause I'm busy.

Anonymous

6/14/2025, 4:56:41 AM

No.105587963

>no activity on kjnodes for 4 days

>no magcache for native

>no neg for native

it's over...

>>105587936

If it's related to one of the optimizations then my hunch is teacache because it's the only thing that turns on later in the gen

Which sucks because TeaCache is essential

>>105587989

It'd be easy enough to find out. Turn literally every opt off, run a gen at a fixed seed. If it still happens, could be an issue with the quantization you're using (might happen with gguf, maybe not with safetensor). If it doesn't happen, add each back one by one until it triggers.

If you or anyone else does that, share your results.
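That procedure written out as a sketch: everything off at a fixed seed, then options re-enabled one at a time (cumulatively) until the flicker shows up. `run_and_check` is a hypothetical stand-in for "render at this seed with these options and check for the flicker"; in practice that step is you watching the output. The option names in the example are the ones mentioned in the thread.

```python
from typing import Callable, Optional, Sequence, Set


def find_culprit(
    optimizations: Sequence[str],
    run_and_check: Callable[[Set[str], int], bool],
    seed: int = 42,
) -> Optional[str]:
    """Return the first optimization whose addition triggers the artifact.

    Returns "baseline" if it already happens with everything off (pointing at
    the model/quant itself), or None if it never reproduces.
    """
    enabled: Set[str] = set()
    if run_and_check(set(enabled), seed):
        return "baseline"
    for opt in optimizations:
        enabled.add(opt)  # add back one at a time, keeping earlier ones on
        if run_and_check(set(enabled), seed):
            return opt
    return None


# Fake checker for illustration: pretend the flicker needs teacache.
def fake_check(enabled: Set[str], seed: int) -> bool:
    return "teacache" in enabled


print(find_culprit(["sage", "fp16_fast", "teacache"], fake_check))
```

Because options are added cumulatively, the one it reports is where the problem first appears; if it only shows up in combination with an earlier option, toggling the reported one on its own afterwards will tell you.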

Anonymous

6/14/2025, 5:05:08 AM

No.105588005

>>105588015

>>105588003

>please just work for free bro

Anonymous

6/14/2025, 5:07:45 AM

No.105588015

>>105588005

I was giving advice on what to do to narrow down the issue. I'm not doing it myself because I don't really care about RifleXRoPE, gens take long enough as it is at 81 frames.

>>105587915

>happens when you go above 81 frames

I got it sometimes at 81 frames too.

>>105587936

>>105587989

Wouldn't running teacache later (at 10% of the steps) fix the issue then?

Anonymous

6/14/2025, 5:10:03 AM

No.105588029

>>105588069

>>105588017

>Wouldn't running teacache later (at 10% of the steps) fix the issue then?

Assuming it's teacache causing the issue, possibly.

Anonymous

6/14/2025, 5:13:57 AM

No.105588054

>>105587988

well he just finished up for wan so maybe he'll do it for the rest soon

Anonymous

6/14/2025, 5:15:58 AM

No.105588065

>>105588072

Anonymous

6/14/2025, 5:16:41 AM

No.105588069

>>105588003

I'll do it tomorrow, sure. Mods have implemented per-thread rangebans (verify email) so I might be hanging out here more often during the range banned part of a bake on /degen/ (I'll use it as an excuse to generate 15-18 dw)

>>105588017

>>105588029

The issue is that the merges are designed for great output at 8 steps, so teacache activates after the first step, which is already over 10% of the gen, and it still happens

And even when I was doing 50 steppers I was seeing it after teacache kicked in on step 6

Anonymous

6/14/2025, 5:17:12 AM

No.105588072

>>105588095

>>105588065

I meant MagCache with SLG. It's literally useless without SLG, same with TeaCache. Run it without and watch the artifacts and motion warping

Anonymous

6/14/2025, 5:19:43 AM

No.105588087

>>105588101

Anonymous

6/14/2025, 5:20:18 AM

No.105588095

>>105588116

>>105588072

yeah true, deep in my mind I was waiting for kijai to make his SLG work alone, without having to use teacache alongside it. That's retarded, but hey, what do I know; I'm sure this shit is hard to implement, so even he doesn't know how to do it properly

Anonymous

6/14/2025, 5:21:20 AM

No.105588101

>>105588138

>>105588087

I've heard that the "detail" one is actually a 2:1 merge between a model trained on 1024x and a model trained on 512x, is it true?

Anonymous

6/14/2025, 5:22:17 AM

No.105588106

>>105587847

I care about the schizo news.

Anonymous

6/14/2025, 5:23:40 AM

No.105588116

>>105588126

>>105588095

Soon LLMs will be smart enough for us to be able to implement it ourselves just by giving them the paper and code repository

Anonymous

6/14/2025, 5:26:03 AM

No.105588126

>>105588149

>>105588116

true, gemini 2.5 really impressed me and made me believe it'll be possible to just give it the whole repository for the implementation. I was trying to fix a bug and the console was giving me thousands of log lines, so I just copy-pasted this shit into gemini, and after an hour of discussion I went over 500000 tokens (its limit is 1 million). Even at that stage it was still coherent, and at some point it finally found the issue, crazy shit

Anonymous

6/14/2025, 5:28:40 AM

No.105588138

>>105588144

>>105588101

Not a merge. He just switched from training at 512x512 to 1024x1024 at epoch 34.

You can download the in-progress 1024x checkpoints between epochs here, btw:

https://huggingface.co/lodestones/chroma-debug-development-only/tree/main/staging_large_3

Anonymous

6/14/2025, 5:30:43 AM

No.105588144

>>105588153

>>105588138

so basically he's doing 2 trainings in parallel now? since we get both the 512x and the 1024x models, I'm surprised that he still manages to deliver every 4/5 days, I guess he got more gpus to work with or something?

Anonymous

6/14/2025, 5:31:26 AM

No.105588149

>>105588200

>>105588126

Yeah 2.5's context is crazy. I was writing it off and only using Claude but it was having trouble and I switched to Gemini and it ate 300k tokens but it was actually able to navigate the codebase and succeed, and this was on the cheap and fast model too

The issues with improvements are just data and training costs. There's no excuse to be using t5xxl still for how heavy and old it is but good luck being the mad scientist to replace it. In 3 years copilot might even be able to help you assemble those datasets

Anonymous

6/14/2025, 5:31:42 AM

No.105588153

>>105588207

>>105588144

>so basically he's doing 2 trainings in parallel now?

Yup.

>I guess he got more gpus to work with or something?

Yup. From the ponyfag, who's sponsoring him now.

Anonymous

6/14/2025, 5:42:20 AM

No.105588200

>>105588220

>>105588149

>There's no excuse to be using t5xxl still for how heavy and old

the thing is that t5 is probably the last uncucked encoder model, that's why they're keeping it; if they go for cucked shit like llama3 they'll have trouble making it work well, as that shit will have refusals and stuff

>>105588153

is he still going with the distillation method from v29.5? I've noticed that the newest versions are more slopped since he did that, the blur and the professional lighting are coming back, my gens look like a random flux slop image now (which is a shame, I liked the skin texture of Chroma)

Anonymous

6/14/2025, 5:46:30 AM

No.105588220

>>105588200

nemo 12b is about the right size and very popular with llm coomers for being uncensored

Anonymous

6/14/2025, 5:46:48 AM

No.105588222

>>105588207

I think so. It was around the same time that he increased the lr for an epoch and it broke the model. I know he rolled the lr change back, but I think he kept that quasi-distillation method.

Anonymous

6/14/2025, 5:56:27 AM

No.105588259

>>105588266

>>105588207

>I've noticed that the newest versions are more slopped since he did that, the blur and the professional lighting are coming back

It does feel like it's favoring "professional" photography more often than before, but it seems easy enough to prompt away from it.

Limbs and hands definitely seem improved though with the detailed/1024 version. Still getting body horror and too many digits, but not as often

Anonymous

6/14/2025, 5:58:11 AM

No.105588266

>>105588271

>>105588259

>but it seems easy enough to prompt away from it.

I tried hard but I couldn't get rid of that blur and that pro lighting, if you have some prompt tricks up your sleeve I'll be happy to see them

Anonymous

6/14/2025, 6:00:39 AM

No.105588271

>>105588273

>>105588266

amateur photography, nikon/canon/olympus/pentax photography, iphone camera, specify year the photo was taken, etc etc

Anonymous

6/14/2025, 6:01:10 AM

No.105588273

>>105588304

>>105588271

I'll see if that'll work, thanks anon

Anonymous

6/14/2025, 6:06:33 AM

No.105588304

>>105588273

Specifying the condition of the photo can help too, i.e. grainy/noisy/visible noise, casual, candid amateur photo, low to medium resolution, slightly off-center composition. Another trick is to make it a physical photo, i.e. closeup of a photo sitting on a table
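A minimal sketch of assembling those anti-slop cues into a prompt, so they're easy to swap in and out when testing which keywords matter. The specific terms are just the ones suggested in the last few posts, and `photo_prompt` is a made-up helper; nothing here is a Chroma-specific API.

```python
from typing import List, Optional


def photo_prompt(subject: str, camera: Optional[str] = None,
                 year: Optional[int] = None,
                 extra: Optional[List[str]] = None) -> str:
    """Prepend 'amateur photo' style cues to a subject description."""
    parts = ["amateur photograph", "candid", "grainy, visible noise"]
    if camera:
        parts.append(f"{camera} photography")  # e.g. Nikon, Canon, Olympus, Pentax
    if year:
        parts.append(f"photo taken in {year}")
    parts.extend(extra or [])
    parts.append(subject)
    return ", ".join(parts)


print(photo_prompt("a woman reading on a park bench", camera="Olympus", year=2009,
                   extra=["slightly off-center composition"]))
```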

Anonymous

6/14/2025, 6:36:00 AM

No.105588483

Anonymous

6/14/2025, 6:37:14 AM

No.105588490

Anonymous

6/14/2025, 6:39:27 AM

No.105588511

>>105588540

>>105587849

my chubby chaser friend cares, probably

Anonymous

6/14/2025, 6:45:30 AM

No.105588540

>>105588511

chubbies cant be hard to chase. they're not in very good shape

Anonymous

6/14/2025, 6:49:08 AM

No.105588558

>>105588565

>>105588548

the soul is slowly leaving

Anonymous

6/14/2025, 6:50:25 AM

No.105588565

>>105588558

yeah, he made a huge mistake with his distillation shit, what made chroma so soulful and natural is going away

Anonymous

6/14/2025, 6:59:12 AM

No.105588602

>>105587399

>generic anime habit?

You're gonna need lora or further model finetune for that. Same with the generic male and female faces.

Not a big issue though, there will be a ton of LoRAs available once it's released, and training one yourself is easy and can be done on as little as a 3060 12gb without any real quality degradation.

Anonymous

6/14/2025, 7:04:38 AM

No.105588632

>>105588638

>>105587699

SD14 and SD15 were not censored

Is Wan censored ?

Anonymous

6/14/2025, 7:05:23 AM

No.105588638

>>105588632

>Is Wan censored ?

it is, can't render penises and vaginas correctly

Cosmos Predict2 just doesn't work at all in fp8. Both e4m3fn and e5m2 are very noisy, melted, and fried, in different ways. Makes it annoying to test out the 14B, because while it can run in bf16 on a 4090 since ComfyUI does some kind of auto layer offloading, it's really slow. Maybe GGUF q8 fixes this problem?

Anonymous

6/14/2025, 7:06:50 AM

No.105588647

Makoto asks what you're all drinking!

Anonymous

6/14/2025, 7:07:05 AM

No.105588648

>>105588643

what is it predicting

Anonymous

6/14/2025, 7:11:25 AM

No.105588678

>>105588693

>>105588643

>Cosmos Predict2 just doesn't work at all in fp8. Both e4m3fn and e5m2 are very noisy, melted, and fried

e5m2 working on my end, using oldt5 xxl fp16 default, and wan 2.1 vae. Works on 3090

Anonymous

6/14/2025, 7:14:44 AM

No.105588693

>>105588799

>>105588678

Another fp8 14B gen.

Anonymous

6/14/2025, 7:15:50 AM

No.105588699

Anonymous

6/14/2025, 7:16:24 AM

No.105588701

>>105588920

>>105588691

Each chroma version after ~v29 or possibly earlier feels less real than the previous

Anonymous

6/14/2025, 7:18:10 AM

No.105588706

Anonymous

6/14/2025, 7:18:50 AM

No.105588709

>>105588745

Anonymous

6/14/2025, 7:20:20 AM

No.105588719

>>105588643

yeah fp8 was broken for me too, was ok after I switched to full precision

not sure how it worked on my 3090 since it's 28GB so full precision should have been too large, but somehow it worked anyway

shit slopped model anyway, already deleted
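The 28GB figure is just parameter count times bytes per weight: a 14B model in bf16/fp16 (2 bytes per weight) needs about 28 GB for the weights alone, fp8 (1 byte) about 14 GB. That's over a 24 GB 3090/4090 before counting activations, the text encoder or the VAE, which is why bf16 only runs thanks to the layer offloading ComfyUI does (as mentioned for the 4090 earlier). Rough sketch, ignoring all overhead:

```python
# Bytes needed per stored weight for common checkpoint dtypes.
BYTES_PER_WEIGHT = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Approximate size of the weights alone, in decimal GB (1e9 bytes)."""
    return params_billion * BYTES_PER_WEIGHT[dtype]


VRAM_GB = 24  # RTX 3090 / 4090

for dtype in ("bf16", "fp8"):
    size = weights_gb(14, dtype)
    print(f"14B in {dtype}: ~{size:.0f} GB of weights, under {VRAM_GB} GB: {size < VRAM_GB}")
```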

Anonymous

6/14/2025, 7:24:48 AM

No.105588734

>>105588771

How do I generate images like this?

I've tried dicking around with controlnet and img2img and I either get the letters floating on top of what I wanted to gen, or an image that doesn't have the letters incorporated at all.

Anonymous

6/14/2025, 7:28:12 AM

No.105588756

>>105588821

man, glory to jensen and nvidia, you know? Like, where would we be without him and nvidia's glory? The pitfalls of ayyymd? China?! We'd be microwaving, instead we are fucking cooking. God, I am laughing at the cope of the fools who think they will ever have drivers or network stack support for "adequate" hardware. what jesters lol

Anonymous

6/14/2025, 7:30:25 AM

No.105588771

Anonymous

6/14/2025, 7:31:20 AM

No.105588775

>>105588745

both images are wrong

Anonymous

6/14/2025, 7:36:03 AM

No.105588799

>>105588693

oh shit, wait, you're right, e5m2 mostly works. On the 2B it's still prompt- and seed-dependent, though: sometimes it gives really bad results, but switching to bf16 with the same seed looks fine. On the 14B, e5m2 is a bit more robust, but the difference from bf16 is still much larger than it is on other models. And the fact that e4m3 doesn't work at all makes it seem like this model is unusually sensitive to quantization.
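A rough way to see why the two fp8 formats behave differently: e4m3fn spends 3 bits on the mantissa (finer steps, largest value 448, smallest subnormal 2^-9), while e5m2 spends only 2 bits on the mantissa but 5 on the exponent (coarser steps, largest value 57344, smallest subnormal 2^-16). The sketch below simulates round-to-nearest into each format in pure Python. It assumes a plain cast with no per-tensor scaling and saturates at the largest finite value, which real casts may not do. The idea that small weights underflowing in e4m3fn explain the failure here is only a hypothesis, not something the thread confirms.

```python
import math

# (exponent bits, mantissa bits, exponent bias, largest finite value)
E4M3FN = (4, 3, 7, 448.0)
E5M2 = (5, 2, 15, 57344.0)


def fp8_round(x: float, fmt: tuple) -> float:
    """Round x to the nearest value representable in a simulated fp8 format.

    Assumptions: round-half-to-even, saturation at the largest finite value
    (real casts may give inf/NaN instead), and no per-tensor scale.
    """
    _exp_bits, man_bits, bias, max_val = fmt
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    _, e = math.frexp(a)               # a = m * 2**e with 0.5 <= m < 1
    exponent = max(e - 1, 1 - bias)    # below the normal range, use subnormal spacing
    step = 2.0 ** (exponent - man_bits)  # spacing between representable values
    q = round(a / step) * step
    return sign * min(q, max_val)


if __name__ == "__main__":
    for value in (1.1, 0.0005, 1000.0):
        print(value, fp8_round(value, E4M3FN), fp8_round(value, E5M2))
```

With these numbers, 1.1 is kept more accurately by e4m3fn (1.125 vs 1.0), but 0.0005 flushes to 0 in e4m3fn while e5m2 keeps it as 2^-11, and 1000 saturates to 448 in e4m3fn. Which effect matters for Cosmos Predict2 is not established in the thread.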

Either way, we need GGUF; it would probably be a lot better.
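One reason GGUF might do better than a plain fp8 cast: GGUF quant types store a scale per small block of weights, so a block of tiny values gets a tiny scale instead of underflowing. A minimal sketch in the style of Q8_0 (blocks of 32 values, one scale per block equal to the block's max |x| / 127, integer codes in [-127, 127]) is below. It is a simplified illustration (the real format stores the scale as fp16 and packs the bytes), not the llama.cpp or ComfyUI-GGUF code, and whether it actually fixes Cosmos Predict2 is untested here.

```python
BLOCK = 32  # Q8_0-style block size


def quantize_q8_0(values: list[float]) -> list[tuple[float, list[int]]]:
    """Split values into blocks; each block keeps one float scale and int8-range codes."""
    blocks = []
    for start in range(0, len(values), BLOCK):
        chunk = values[start:start + BLOCK]
        amax = max(abs(v) for v in chunk)
        scale = amax / 127.0 if amax > 0 else 0.0
        codes = [round(v / scale) if scale else 0 for v in chunk]
        blocks.append((scale, codes))
    return blocks


def dequantize_q8_0(blocks: list[tuple[float, list[int]]]) -> list[float]:
    """Rebuild approximate floats as code * block scale."""
    out = []
    for scale, codes in blocks:
        out.extend(code * scale for code in codes)
    return out


if __name__ == "__main__":
    small = [0.0005 * ((i % 7) - 3) for i in range(64)]
    restored = dequantize_q8_0(quantize_q8_0(small))
    print(max(abs(a - b) for a, b in zip(small, restored)))
```

Because the scale follows each block's own range, the worst-case error is about half a block scale, so values around 0.0005 survive instead of being flushed to zero.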

Anonymous

6/14/2025, 7:36:20 AM

No.105588803

>>105588827

back from work, has anyone implemented that negative prompt thing for Flux yet?

Anonymous

6/14/2025, 7:39:03 AM

No.105588821

>>105588756

did he really know CUDA would change the world? Or did he just have a team that made CUDA by happenstance?

Anonymous

6/14/2025, 7:40:10 AM

No.105588827

Anonymous

6/14/2025, 7:45:24 AM

No.105588856

>>105588868

>>105588856

does the normal version perform worse than detail calibrated?

Anonymous

6/14/2025, 7:50:32 AM

No.105588878

>>105589243

>>105588868

yeah, maybe I should go for v26 vs v36 vs v36 detailed; I'll download the v36 one I guess and do some more testing

Anonymous

6/14/2025, 7:53:09 AM

No.105588891

>>105588911

Anonymous

6/14/2025, 7:56:19 AM

No.105588911

>>105588891

You'll probably be okay.

Probably.

>>105588701

Idk anon, I feel like if you don't prompt actual photorealism then Chroma might do its own thing.

For instance, here's v35 detailed with a prompt that I had originally tested on v28:

>Amateur photograph, a beautiful young Japanese idol woman with short pink hair grasping an alcoholic bottle and showing her soles in her room

https://desu-usergeneratedcontent.xyz/g/image/1746/74/1746749318652.png

I find that Chroma is very literal, so you want to help it do photorealism with a trigger word. The model has consistently excelled at photorealism with that simple prompt, "Amateur photograph". If I switch "Amateur" to "professional", the result is very different; not that it's slopped, but it's different. That's why it's crucial to test different keywords with it. Now, there may be some prompts where it's slopped anyways because Flux was so slopped, and that's where negs help. This issue has existed since Chroma's early days; it's not necessarily an issue with the new training method. You might also want to try rewording and shortening the prompt with an LLM: the fewer slopped words, the better (and the easier it is to pick out which word is causing the slop).

My only issue is that I am not sure whether the model is actually improving. For instance, notice the fucked fingers, and we are nearing the end of training. I'm not worried about the capabilities getting worse, because they aren't, but how different are they now from just taking my v28 gen and rerolling?

Anonymous

6/14/2025, 7:59:57 AM

No.105588938

>he's awake

Anonymous

6/14/2025, 8:02:01 AM

No.105588951

>>105588997

>>105588920

>Now, there may be some prompts where it's slopped anyways because Flux was so slopped

the thing is that the newest Chroma versions are starting to look as slopped as Flux, even with some "amateur, old school photo" prompt shit. It's learning the professional photo bias, probably because of the distillation he started doing at v29

Anonymous

6/14/2025, 8:05:12 AM

No.105588960

>>105588968

>>105588920

>v35 detailed

v36 detailed*. v35 detailed is pic rel; not much difference either, and not much better or worse than rerolling. Both of these can be fixed by using more or fewer steps to try to get better hands. I would expect 1024p training to really kick in and make a difference with things of this nature, but so far, unfortunately, not much. I should note that, like before, it's possible to slop the model a bit and get more consistent hands, and certain tokens have that effect (which is what slops it; some have noticed it may happen more on the detailed version), but that defeats the purpose of Chroma.

Anonymous

6/14/2025, 8:07:28 AM

No.105588968

>>105588960

>defeats the purpose of Chroma.

yeah, that's the reason I loved Chroma: it was the unslopped version of Flux. If in the end the model renders images as slopped as Flux, I don't see the point; he fucked it up. He was supposed to get rid of the plastic skin and hard lighting, not bring it back

funny if this negative prompt thing gets implemented and makes flux unslopped right as chroma nears completion

Anonymous

6/14/2025, 8:16:09 AM

No.105588997

>>105589005

>>105588951

I don't think so. You don't know how bad Flux looked if you think that's the case, or how next to impossible it is to get Flux to do anything remotely close to photorealism. Note this part:

>it's possible to slop the model a bit and get more consistent hands

I mean just the look of the gen and the skin itself, not the actual capabilities of the model. Even in its slopped version, the model still retains the ability to do NSFW and follows the prompt to a tee compared to Flux (such as if you're prompting for feet or a woman in a lewd position). In fact, the fake skin is only a flaw if you dislike it; otherwise the model is good and still not as bad as Flux.

Also, with Flux it is not possible to prompt realistic blood, bondage, etc., and this model still excels at those even when it chooses to output slop.

Anonymous

6/14/2025, 8:17:35 AM

No.105589005

>>105589182

>>105588997

I didn't say it's now as slopped as Flux, but that it's getting more and more slopped with each epoch. That's the fault of his distillation stuff: he wanted his model to run in fewer steps, and he paid the price; the model is now more biased toward slop than before

Anonymous

6/14/2025, 8:19:07 AM

No.105589014

>>105589024

>>105588995

Makes zero difference because flux is still censored and distilled into uselessness when it comes to finetuning it.

Anonymous

6/14/2025, 8:19:08 AM

No.105589015

Anonymous

6/14/2025, 8:20:01 AM

No.105589023

Anonymous

6/14/2025, 8:20:11 AM

No.105589024

>>105589040

>>105589014

are there no good porn loras for flux? I've never looked tbdesu but I assumed there were

Anonymous

6/14/2025, 8:21:59 AM

No.105589039

>>105589055

>>105588995

>this negative prompt thing gets implemented and makes flux unslopped

does it? I have yet to see some examples of that NAG thing

Anonymous

6/14/2025, 8:22:06 AM

No.105589040

>>105589053

>>105589024

There are porn LoRAs.

There are no *good* porn LoRAs. And they kill what little creativity Flux already has by focusing it on such a narrow band of content. That's why, as it stands, Chroma even in its current state produces vastly superior NSFW.

Anonymous

6/14/2025, 8:23:37 AM

No.105589053

>>105589057

>>105589040

Do you have any PIV examples to share?

Anonymous

6/14/2025, 8:23:53 AM

No.105589055

>>105589039

there are examples in the paper; it seemed to get much, much better at doing art styles properly

no nsfw tests in the paper though obviously

Anonymous

6/14/2025, 8:24:15 AM

No.105589057

>>105589070

>>105589053

I don't shake hands.

Anonymous

6/14/2025, 8:26:36 AM

No.105589068

>>105588995

base Flux can't be unslopped, it has too many biases

Anonymous

6/14/2025, 8:27:10 AM

No.105589070

>>105589081

>>105589057

that only leaves one more thing we can shake...

Anonymous

6/14/2025, 8:29:13 AM

No.105589081

>>105589005

I've tested a lot of my old prompts, though, and haven't really had much trouble with sloppiness. To really see if there's a difference, you might want to test multiple prompts at multiple seeds (including with the old version; you'd be surprised how often it's also slopped on some seeds). Now, I will say that I have occasionally noticed the issue you describe, but only on the detailed version, not on every version after v29 like you say. And even then it only happens occasionally. For instance the detailed version for pic rel was quite slopped (but fine on other seeds).
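The "multiple prompts at multiple seeds" advice can be made systematic with a fixed test matrix, so every checkpoint sees exactly the same prompt/seed pairs and every output file says which version made it. The sketch only builds the job list and file names; the checkpoint names, prompts, and naming scheme are hypothetical placeholders, and actually queueing the gens (e.g. through ComfyUI) is left out.

```python
from itertools import product

# Hypothetical placeholders: swap in your own checkpoint files, prompts, and seeds.
CHECKPOINTS = ["chroma-v28", "chroma-v36", "chroma-v36-detailed"]
PROMPTS = {
    "amateur": "Amateur photograph, a woman reading in her room",
    "professional": "Professional photograph, a woman reading in her room",
}
SEEDS = [1, 2, 3, 4]


def build_jobs(checkpoints, prompts, seeds):
    """Return one job per (checkpoint, prompt, seed) with a self-describing file name."""
    jobs = []
    for ckpt, (key, text), seed in product(checkpoints, prompts.items(), seeds):
        jobs.append({
            "checkpoint": ckpt,
            "prompt": text,
            "seed": seed,
            # Version, prompt key, and seed in the name make "pic rel" unambiguous.
            "filename": f"{ckpt}_{key}_seed{seed}.png",
        })
    return jobs


if __name__ == "__main__":
    jobs = build_jobs(CHECKPOINTS, PROMPTS, SEEDS)
    print(len(jobs), jobs[0]["filename"])
```

Comparing only matched rows (same prompt key and seed, different checkpoint) keeps a single lucky or unlucky seed from being read as a difference between versions.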

Anonymous

6/14/2025, 8:49:09 AM

No.105589189

Anonymous

6/14/2025, 8:52:43 AM

No.105589213

>>105589182

at least there's no sign of the flux chin coming back yet.

Anonymous

6/14/2025, 8:57:11 AM

No.105589243

>>105589252

>>105588878

>v26 vs v36 vs v36 detailed

I have been testing with this setup and there's some fuckery going on, but I hope it's because training isn't complete or because I haven't found the correct settings/prompt. I find it hard to test reliably because some tokens can easily nuke the whole image.

>>105589228

God damn, it looks the same as my tests.

>>105589228

Jesus Christ, how horrifying. Is v26 the last soulful one? I'm particularly interested in v29 since it has experimental SVD quants

>>105589182

>For instance the detailed version for pic rel was quite slopped (but fine on other seeds).

(Note: v36 was the one pictured.) Here is the detailed version of that:

https://files.catbox.moe/q40m1a.png

I do find the issue quite strange when it occasionally pops up. But then again, we have to remember that 1024 training is new. Maybe that's why: 512 has seen a lot of training, but 1024 was untouched until now, and that's where the sloppiness lives, so this is the result of messing with it. In other words, it just needs to be baked longer to be fully unslopped.

Anonymous

6/14/2025, 8:58:37 AM

No.105589252

>>105589243

>>105589245

>there's some fuckery going on, but I hope it's because training isn't complete

he decided to go for a distillation method at v29, and after that the model started getting more and more slopped

Anonymous

6/14/2025, 9:00:01 AM

No.105589261

>>105589268

>>105589245

nta but the 36 one looks more realistic to me even though the woman is less cute. we shouldn't confuse slop with whether women have attractive faces, those are separate phenomena. if anything I'd expect a less slopped model to be more likely to produce average looking people.

Anonymous

6/14/2025, 9:02:01 AM

No.105589266

>>105589276

Anonymous

6/14/2025, 9:02:07 AM

No.105589268

>>105589261

Yeah, I think a lot of the 36 epoch comparison gens being posted look better than the earlier ones...

Anonymous

6/14/2025, 9:04:09 AM

No.105589283

not enough fingers, but I like the way the colors worked

Anonymous

6/14/2025, 9:04:30 AM

No.105589285

>>105589292

>>105589228

what sampler/scheduler/steps? I have the current batch running with dpmpp_2m/simple, 24 steps, CFG 3.5. I do alter these a lot because I want to see if there's some decent default setting.

Anonymous

6/14/2025, 9:06:13 AM

No.105589292

>>105589285

for that specific one it was

>euler

>beta

>50 steps

>cfg 4.5

during my v26/v27 days I experimented with a lot of settings but I don't think they have a huge impact, it looked good either way

Anonymous

6/14/2025, 9:07:27 AM

No.105589298

>>105589316

20Loras

6/14/2025, 9:08:46 AM

No.105589304

I'm doing a sketch>i2i with a mecha but I'm getting biblical angels.

>>105589316

yeah, the slop is more obvious on the detail calibrated one

>>105589316

From those comparison pictures, I would say v36 is worse while v27 and v37 (detail-calibrated) are sidegrades. What do you think?

Anonymous

6/14/2025, 9:16:27 AM

No.105589343

>>105589339

they all just look different. I don't see any of them consistently outperforming the others.

Anonymous

6/14/2025, 9:16:52 AM

No.105589346

>>105589352

>>105589316

Typo, I meant:

> v36 is worse while v26 and v36 (detail-calibrated) are sidegrades

Anonymous

6/14/2025, 9:17:50 AM

No.105589352

>>105589339

>>105589346

personally I don't like the blur on v36 and v36 calibrated, it has that slop bokeh typical of flux dev

Anonymous

6/14/2025, 9:19:19 AM

No.105589357

>>105589360

>>105589337

The detail calibrated one looks better than the others, though. The first one has that classic AI look, like it's been upscaled badly. It's flat. The middle one is blurry. The right side one looks like a real photo

Anonymous

6/14/2025, 9:19:54 AM

No.105589359

>>105590166

Anonymous

6/14/2025, 9:21:02 AM

No.105589360

>>105589394

>>105589357

>The first one has that classic AI look, like it's been upscaled badly. It's flat. The middle one is blurry. The right side one looks like a real photo

all those pictures have that style prompt:

>A candid image taken using a disposable camera. The image has a vintage 90s aesthetic, grainy with minor blurring. Colors appear slightly muted or overexposed in some areas. It is depicting:

they're supposed to look like old photos, and the problem with the calibrated one is that it absolutely wants to go for a professional photo; it has the same bias as Flux dev

Anonymous

6/14/2025, 9:27:46 AM

No.105589386

>>105589396

>>105589337

what kind of negative prompt are you using?

Anonymous

6/14/2025, 9:29:09 AM

No.105589394

>>105589402

>>105589360

nta, but I've definitely noticed that it's more heavily favoring flash photography and professional photography, even if you prompt against it, like professional photography is poisoning the amateur photography in the set.

It's going to fluctuate a lot as the model is continuously exposed to different parts of the dataset during training, though; then it'll stabilize as it gets closer to converging, however long that takes. Then we'll know 100% either way what we've got and whether he slopped it or not.

Anonymous

6/14/2025, 9:29:21 AM

No.105589396

>>105589414

>>105589386

>cartoon, anime, drawing, painting, 3d, (white borders, black borders:2), blur, bokeh,

Anonymous

6/14/2025, 9:31:36 AM

No.105589402

>>105589394

my theory is that since he decided to distill the model at v29, it has picked up some slop bias; that's also why Flux dev looks more slopped than Flux pro, because of the distillation. But you could be right too; maybe it's the professional images in the dataset that are starting to kick in hard at the later stage of training

Anonymous

6/14/2025, 9:33:55 AM

No.105589414

>>105589419

>>105589396

>(white borders, black borders:2)

t5 takes that shit literally, it doesn't understand token weights

Anonymous

6/14/2025, 9:35:24 AM

No.105589419

>>105589414

>t5 takes that shit literally, it doesn't understand token weights

can you elaborate on that? I definitely see a stronger effect when I go for :2)
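For reference, the `(text:weight)` syntax is parsed by the UI frontend, not by T5 itself; what the weight then does to the T5 embedding depends on the frontend's encoding code, which is exactly what these two posts disagree about. If an encoder received the raw string instead, the parentheses, colon, and number would just be tokenized as text, which is the "takes it literally" concern. A minimal parser sketch for explicit weights only (it ignores nesting, escaped parentheses, and bare `(text)` emphasis) is below; the function name is hypothetical.

```python
import re

# Matches explicit weights such as "(white borders, black borders:2)".
WEIGHTED = re.compile(r"\(([^()]+):\s*([0-9]*\.?[0-9]+)\)")


def parse_weights(prompt: str) -> list[tuple[str, float]]:
    """Split a prompt into (text, weight) segments; unweighted text gets 1.0."""
    segments = []
    pos = 0
    for m in WEIGHTED.finditer(prompt):
        if m.start() > pos:
            segments.append((prompt[pos:m.start()], 1.0))
        segments.append((m.group(1), float(m.group(2))))
        pos = m.end()
    if pos < len(prompt):
        segments.append((prompt[pos:], 1.0))
    return segments


if __name__ == "__main__":
    neg = "cartoon, anime, drawing, painting, 3d, (white borders, black borders:2), blur, bokeh,"
    for text, weight in parse_weights(neg):
        print(weight, repr(text))
```

Note that the weight applies to the whole group "white borders, black borders", not to each phrase separately.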

>>105589249

>Here is the detailed version of that

But then again, see

https://files.catbox.moe/3mgfga.png

back in v28 I actually got a slopped gen, and that happened right before I got this one

https://desu-usergeneratedcontent.xyz/g/image/1746/73/1746730026254.png

I just discarded it. So before drawing any conclusions, you might want to check the old one on different seeds. We may be wrong about a lot of things; they may be pure coincidence.

Maybe back in the day some prompts worked slightly better: you needed to be less specific, and the model just guessed some decent things like making the girl more attractive or the room more aesthetic. But overall I don't think there's a huge difference.

Anonymous

6/14/2025, 9:51:29 AM

No.105589480

>>105589497

>>105589477

>back in v28 I actually got a slopped gen

look at the blur: the newest versions have that Flux bokeh and your v28 doesn't. That's a sign something has changed in how the model sees things

Anonymous

6/14/2025, 9:53:34 AM

No.105589485

>>105589507

>>105589477

the skin is way more plastic on the v36, looks like a regular Flux dev image

Anonymous

6/14/2025, 9:55:29 AM

No.105589497

>>105589480

Nah, both of them are v28 (including the catbox). One gen followed the other.

It's similar to

>>105589337

The leftmost one is slopped in that pic (the smoother the skin, the stronger the slop). The old version was outputting slop on certain seeds too.

>>105589485

That is not v36, those are both v28 in this case. (Check catbox). Pic rel is v36 detailed of that prompt.

These two

>>105589182

>>105589249

are v36 gens on different seeds (on the top one, I linked the slopped v36 detailed one).

Anonymous

6/14/2025, 9:58:41 AM

No.105589513

>>105589562

>>105589507

kek, my b. Your way of presenting stuff is confusing; you should put them side by side. I'm doing that with a Python script, and it's not really hard to create if you get help from ChatGPT
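A side-by-side comparison script along those lines might look like the sketch below. It is an independent minimal example, not that anon's script: it assumes Pillow is installed, the function names and file names are hypothetical, and labels use Pillow's default font. The layout math is kept in a separate pure function so it can be checked without Pillow.

```python
def layout_offsets(sizes, padding=10, label_height=30):
    """Return each image's top-left corner and the total canvas size.

    Images go left to right with `padding` pixels around them and a strip of
    `label_height` pixels above each one for its version label.
    """
    offsets = []
    x = padding
    for width, _height in sizes:
        offsets.append((x, padding + label_height))
        x += width + padding
    tallest = max(height for _width, height in sizes)
    return offsets, (x, padding + label_height + tallest + padding)


def side_by_side(paths, labels, out_path, padding=10, label_height=30):
    """Paste images side by side with a text label above each one (needs Pillow)."""
    # Imported here so layout_offsets can be used and tested without Pillow.
    from PIL import Image, ImageDraw

    images = [Image.open(p).convert("RGB") for p in paths]
    offsets, canvas_size = layout_offsets([im.size for im in images], padding, label_height)
    canvas = Image.new("RGB", canvas_size, "white")
    draw = ImageDraw.Draw(canvas)
    for im, label, (x, y) in zip(images, labels, offsets):
        canvas.paste(im, (x, y))
        draw.text((x, padding), label, fill="black")  # Pillow's default font
    canvas.save(out_path)
```

Usage, with hypothetical file names: `side_by_side(["v28.png", "v36.png", "v36_detailed.png"], ["v28", "v36", "v36 detailed"], "compare.png")`.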

Anonymous

6/14/2025, 9:58:59 AM

No.105589515

>>105589613

does ControlNet stuff have to be trained separately for each model?

Anonymous

6/14/2025, 10:01:29 AM

No.105589527

>>105589545

>>105589507

>That is not v36, those are both v28 in this case. (Check catbox). Pic rel is v36 detailed of that prompt.

Basically the same seed as the v28 on the left. Why I say it's too early to make conclusions. Sometimes it may be worse, but that's because the model is changing slightly, so the same seed gives a different result. It's a similar effect to changing the seed on an older version. My concern is whether it's improving much, and I guess we won't see much until we get more epochs of 1024p training, if that is having a positive effect.

>>105589527

>Why I say it's too early to make conclusions.

yeah, what I like about the detail calibrated one is that the anatomy is much better, but the price to pay is slopped professional images, that's a shame

Anonymous

6/14/2025, 10:08:05 AM

No.105589562

>>105589614

>>105589513

I guess I'm just a bit lazy kek, here's the v28 version for

>>105589182

back to smooth skin

So it's either getting better or worse (but given I've yet to see this for v36, I'd argue better for now).

>>105589545

>mfw the meta is first pass with Chroma v50, second pass with Chroma v28

Anonymous

6/14/2025, 10:13:02 AM

No.105589581

Anonymous

6/14/2025, 10:15:09 AM

No.105589596

>>105589602

>>105589545

>>105589577

I wonder if you could get away with some noise injection mid-generation

https://www.youtube.com/watch?v=tned5bYOC08&t=1328s

No, I will not test it myself until I get a proper SVD quant

Anonymous

6/14/2025, 10:16:24 AM

No.105589602

>>105589596

>I wonder if you could get away with some noise injection mid-generation

I'm already using something like that, it's a good method to add some details to your scene without burning anything

https://github.com/BigStationW/ComfyUi-RescaleCFGAdvanced

Anonymous

6/14/2025, 10:17:59 AM

No.105589613

>>105589643

>>105589515

Generally, yeah. Even extensive finetunes can and usually do fuck up ControlNets, i.e. there are some base SDXL ControlNets that don't work at all, or don't work well, with Illustrious and Noob.

Chroma has that problem now, no flux controlnet works with it because of how radically he's changed the base model.

Anonymous

6/14/2025, 10:18:01 AM

No.105589614

>>105589562

>I guess I'm just a bit lazy kek

you could rename your files before uploading them to 4chan; that way we know if pic rel is v28 or v35 or whatever

Anonymous

6/14/2025, 10:20:23 AM

No.105589620

>>105589545

I still think that depends on the prompt. Professional images like pic rel can come out ok (though in this case the thigh is not perfect), but you get the point.

Basically I use the exact same thing to force it to give me real skin,

>Amateur photograph, ....

and ending with

>taken from DSLR, bokeh

So even in that case it can have a strong photoreal effect. Maybe remove the bokeh part, since that part is also too strong.

Anonymous

6/14/2025, 10:21:09 AM

No.105589626

>tfw having a 3rd arm is good in this context actually

>>105589613

Is anyone training a chroma controlnet now?

Anonymous

6/14/2025, 10:27:05 AM

No.105589648

>>105589643

we have to wait for the model to be finished before doing anything to it

Anonymous

6/14/2025, 10:27:24 AM

No.105589651

>>105589643

No, for the same reason. Train a controlnet for epoch 36, it'll work progressively less well as further epochs train. No point in doing it until the model is fully baked.

Another slopped seed. There's something special about how v36 handles these. Then again, maybe it's just luck.

Anonymous

6/14/2025, 11:11:15 AM

No.105589866

>>105589847

I'm pretty frustrated by how random the prompt adherence seems sometimes.

Anonymous

6/14/2025, 11:16:52 AM

No.105589895

>>105589847

v36 looks good, I like that amateurish style, weird that the detail one has a third arm though kek

>>105589847

here anon, if you want to easily combine images, I shared my script on github

https://github.com/BigStationW/Compare-pictures

can confirm this beats regular rescalecfg

Anonymous

6/14/2025, 11:49:21 AM

No.105590053

>>105590034

cool thanks man. comparing to v29 right now

Anonymous

6/14/2025, 11:55:24 AM

No.105590077

[Report]

>>105590047

I'm glad you like it anon :3

Anonymous

6/14/2025, 11:59:00 AM

No.105590089

>>105590092

>>105590047

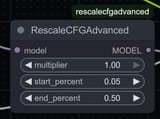

doesn't a 1.0 multiplier mean you're not actually rescaling the cfg at all?

Anonymous

6/14/2025, 11:59:48 AM

No.105590092

>>105590097

>>105590089

no, at 0 the rescale doesn't activate; at 1 it's at full power
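For context, the multiplier on ComfyUI's RescaleCFG node comes from the CFG-rescale trick in Lin et al., "Common Diffusion Noise Schedules and Sample Steps are Flawed": compute the CFG result, rescale it so its standard deviation matches the conditional prediction, then blend the rescaled and plain results with the multiplier. So 0 is plain CFG and 1 is the fully rescaled output, consistent with the answer above. The sketch uses flat Python lists and one std over the whole list (the paper computes it per sample); the "Advanced" node linked earlier may do more than this, which isn't covered here.

```python
from statistics import pstdev


def rescale_cfg(cond, uncond, cfg_scale, multiplier):
    """Blend plain CFG with its std-rescaled version.

    multiplier = 0 -> plain CFG; multiplier = 1 -> fully rescaled CFG.
    """
    # Plain classifier-free guidance.
    cfg = [u + cfg_scale * (c - u) for c, u in zip(cond, uncond)]
    # Rescale so the guided output has the same spread as the conditional one.
    std_cond, std_cfg = pstdev(cond), pstdev(cfg)
    factor = std_cond / std_cfg if std_cfg > 0 else 1.0
    rescaled = [x * factor for x in cfg]
    # The multiplier linearly mixes the two results.
    return [multiplier * r + (1.0 - multiplier) * x for r, x in zip(rescaled, cfg)]


if __name__ == "__main__":
    cond = [1.0, -1.0, 0.5, -0.5]
    uncond = [0.0, 0.0, 0.0, 0.0]
    print(rescale_cfg(cond, uncond, 4.0, 0.0))  # plain CFG
    print(rescale_cfg(cond, uncond, 4.0, 1.0))  # spread pulled back to match cond
```

So reading the 0.0 to 1.0 value as "percent of the rescale applied" is a fair mental model for this node.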

Anonymous

6/14/2025, 12:01:11 PM

No.105590097

>>105590129

>>105590092

ah so it's percentage strength

I like comfy but it's annoying how many nodes use a 0.0-1.0 fraction and you're just supposed to know that it's secretly a percentage

Anonymous

6/14/2025, 12:02:54 PM

No.105590105

>>105590034

Thanks anon, I actually used a python script for that one

Anonymous

6/14/2025, 12:07:56 PM

No.105590129

>>105590097

yeah, I feel ya. Sometimes, if you're lucky, the guy who made the node put some notes that show up when you hover over the node, so you know how to handle the parameters

>>105589276

This is very interesting, and makes no intuitive sense. You would think the detail-calibrated one would deviate more from past versions... but it's the opposite? Unless lodestone fucked up his naming, and v36 is the actual detail-calibrated one...

Anonymous

6/14/2025, 12:14:43 PM

No.105590166

>>105590144

yeah, it even happened here

>>105589359 I also find it curious

Anonymous

6/14/2025, 12:25:27 PM

No.105590210

>>105590297

Anonymous

6/14/2025, 12:26:31 PM

No.105590218

>>105590234

>>105590193

so he's merging the large one with the (base + fast) one? what?

Anonymous

6/14/2025, 12:28:45 PM

No.105590228

>>105590193

I guess it's closer to the previous version since a 1024px tune would be undertrained compared to all the other versions thus far.

>>105590218

yeah apparently recipe is (base + fast)/2 for the normal one and (base + fast + large)/3 for detail calibrated
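Taking that recipe at face value (it comes from a Discord screenshot, so treat it as reported rather than confirmed), both releases are plain uniform weight averages. A minimal sketch over state dicts is below, using small Python lists in place of real tensors; the parameter name is a hypothetical placeholder. A real merge would load each checkpoint (e.g. with safetensors) and average the tensors under matching keys the same way.

```python
def average_merge(*state_dicts):
    """Uniform weight average of checkpoints with identical keys and shapes."""
    keys = state_dicts[0].keys()
    if any(sd.keys() != keys for sd in state_dicts):
        raise ValueError("checkpoints must share the same parameter names")
    n = len(state_dicts)
    return {
        k: [sum(vals) / n for vals in zip(*(sd[k] for sd in state_dicts))]
        for k in keys
    }


# Toy "checkpoints" with one hypothetical parameter each.
base = {"block.weight": [1.0, 2.0]}
fast = {"block.weight": [3.0, 4.0]}
large = {"block.weight": [5.0, 9.0]}

normal = average_merge(base, fast)          # (base + fast) / 2
detail = average_merge(base, fast, large)   # (base + fast + large) / 3
```

In the three-way average, "large" only contributes a third of each weight, which fits the idea that an undertrained large checkpoint could still pull the detail-calibrated merge around noticeably.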

he does publish the "large" checkpoints separately here:

https://huggingface.co/lodestones/chroma-debug-development-only/tree/main/staging_large_3

i tested some of these and they seemed way more slopped so maybe that's where the slop is coming from in detail since this is still undertrained

but idk it's all a bit too schizo for me to understand

>>105590234

>he does publish the "large" checkpoints separately here:

does he do the same for base and fast? maybe we can get rid of the "fast" distilled bullshit and only try the base one?

Anonymous

6/14/2025, 12:32:28 PM

No.105590248

>>105590291

>>105590240

yeah they're all in that linked repository

Anonymous

6/14/2025, 12:32:48 PM

No.105590251

>>105590240

no it's not that simple, each new base is now made from the fast, so you're fucked lol

Anonymous

6/14/2025, 12:33:49 PM

No.105590257

>>105590234

Yeah, the raw large are definitely more slopped. Wouldn't recommend them, the detailed merge is better.

Anonymous

6/14/2025, 12:34:36 PM

No.105590260

>>105590234

Yeah, that's what it seems like to me. The v36 merge is used as a hack to push 1024 along (from that Discord screenshot). But obviously it's still not there.

>>105590240

is fast some distilled shit? idk what he means by this stuff

Anonymous

6/14/2025, 12:36:07 PM

No.105590270

[Report]

>>105590263

>is fast some distilled shit?

it is, and to me that's the reason why the new epochs (the normal ones, not the detail calibrated) are more slopped than what we had before he did that (v29)

>>105589316

>>105590234

>i tested some of these and they seemed way more slopped so maybe that's where the slop is coming from in detail since this is still undertrained

if I understand correctly, since he started the first large at v34, does that mean large v34 is actually the first epoch? If that's true, then no wonder it's slopped; it has barely changed Flux schnell at this stage

Anonymous

6/14/2025, 12:39:51 PM

No.105590291

>>105590297

>>105590248

What? Isn't fast just the quasi-distillation shit we're all complaining about? Why don't we just grab base if we can?

Anonymous

6/14/2025, 12:41:03 PM

No.105590297

>>105590318

>>105590291

>Why don't we just grab base if we can?

no it's not that simple, look at the graph

>>105590210

each new base originates from "root", which is a merge of base and fast, so we can't do that

Anonymous

6/14/2025, 12:42:36 PM

No.105590310

>>105590328

>>105590277

yeah, and iirc he only started 1024 at v34 as an experiment because he had a spare machine; originally the plan was to start 1024 training at v48

Anonymous

6/14/2025, 12:42:49 PM

No.105590313

>>105590193

>detail calibrated is tethered and dragged along to match the pace of non detail calibrated

what does he mean by that? That he's rushing the training of large so that we won't have to wait longer? Why does this retard always insist on speed? We don't care if it takes time, we want something good, goddammit

Anonymous

6/14/2025, 12:44:10 PM

No.105590318

>>105590353

>>105590297

But if we have the previous fast, can't we just subtract it from the model? Idk anon, I haven't delved too much into the theory, but I recall it being possible with auto1111.
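The auto1111 checkpoint merger's "Add difference" mode computes A + (B - C) * M. If a root really were just root = (base + fast) / 2, and you had the fast weights from that same epoch, the base could be recovered algebraically as base = 2 * root - fast, i.e. add difference with A = root, B = root, C = fast, M = 1. The toy sketch below shows only that algebra under the reported recipe; as the replies point out, it recovers a single epoch and doesn't give you a base that keeps training.

```python
def add_difference(a, b, c, multiplier=1.0):
    """Elementwise A + (B - C) * multiplier, the "Add difference" merge formula."""
    return [x + (y - z) * multiplier for x, y, z in zip(a, b, c)]


# Toy weights; the recipe root = (base + fast) / 2 is an assumption taken from the thread.
base = [1.0, 2.0, -0.5]
fast = [3.0, 4.0, 0.5]
root = [(b + f) / 2 for b, f in zip(base, fast)]

recovered = add_difference(root, root, fast)  # 2 * root - fast
print(recovered)
```

The subtraction only works if the exact fast weights that went into that root are available; once a new base is trained from the merged root, there is no matching fast to subtract.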

Anonymous

6/14/2025, 12:46:09 PM

No.105590328

>>105590332

>>105590277

>>105590310

is this how you train a DiT model? By merging the large training and the 512px training? I thought you trained the model at 512px for like 75% of the epochs and then trained the remaining 25% at 1024px; you don't merge like that

Anonymous

6/14/2025, 12:47:40 PM

No.105590332

>>105590342

>>105590328

he admits the method is odd and is only using it because of hardware limitations

Anonymous

6/14/2025, 12:49:14 PM

No.105590342

>>105590358

>>105590332

that guy is really playing with fire, the first 30 epochs were pretty classic training, and then he added the distillation shit and some merge with the large model, hard to believe this'll end well lol

Anonymous

6/14/2025, 12:51:30 PM

No.105590353

>>105590318

no, let's say he started his distillation bullshit at v30: you remove fast at v30 to get a base v30, but after that you're stuck, since you'd have to train base v30 on the base dataset up to the finish line, and you have neither the dataset nor the money lol

>>105590342

I think it will be fine. It's already so close to greatness.

Anonymous

6/14/2025, 12:55:56 PM

No.105590380

>>105590358

>It's already so close to greatness.

desu I prefer the pre-distillation epochs, they have more sovl, and I don't feel it has improved much over the last 10 epochs

Anonymous

6/14/2025, 12:57:06 PM

No.105590388

>>105590395

>>105590263

>trains flux schnell to remove the distillation

>adds some distillation back

>>105590388

I don't get what kind of distillation he's added, though. Isn't distillation normally the thing that makes it go fast and require like 8 steps? That's not the case at all with Chroma.

>>105590395

that's what he did though, he did a step distillation, so that it's supposed to work on fewer steps (like flux dev)

Anonymous

6/14/2025, 1:00:28 PM

No.105590411

>>105590395

>>105590402

>like flux dev

*flux schnell

Anonymous

6/14/2025, 1:01:41 PM

No.105590418

[Report]

>>105590402

>he did a step distillation, so that it's supposed to work on fewer steps

Why? Who asked for this? Distilling a model always hurts its quality.

>Still no /ai/ board...

And to think I'm literally using a satellite connection for this...

I'm really hoping I don't get sent to the Middle East... it's always some shitty news after returning from radio silence.

I'm keeping it SFW, so hopefully the janny doesn't get a hernia and nuke my post as usual.

Anonymous

6/14/2025, 1:03:44 PM

No.105590430

[Report]

>>105590445

>>105590402

ah that sucks. should've waited till epoch 50 for shit like that

Anonymous

6/14/2025, 1:04:01 PM

No.105590434

Distillation was a mistake.

Anonymous

6/14/2025, 1:05:48 PM

No.105590445

>>105590478

>>105590430

I know, I have no idea why he decided to ruin the model midway. You don't do this shit when you train a model; it's supposed to be the moment to get the most quality out of it. You can think about speed later once it's finished, sigh...

Anonymous

6/14/2025, 1:07:30 PM

No.105590455

>>105590424

Satellite, holy fuck me...

Also, why is the janny so abusive?

Anonymous

6/14/2025, 1:08:28 PM

No.105590464

one whole post, well that's an improvement

next time it might be two, he's shown he can be taught

Anonymous

6/14/2025, 1:08:41 PM

No.105590465

>>105590484

>>105590358

nigga stop sucking lodestones cock. go stick to asian feet.

close to greatness ahaha

Anonymous

6/14/2025, 1:09:56 PM

No.105590471

>>105590496

>>105590424

can't you reset your satellite to get a new IP?

Anonymous

6/14/2025, 1:11:06 PM

No.105590478

>>105590445

let me guess, no one in discord told him that it was a retarded idea?

Anonymous

6/14/2025, 1:12:32 PM

No.105590484

>>105590465

>close to greatness ahaha

kek

Anonymous

6/14/2025, 1:15:15 PM

No.105590496

>>105590471

It's still a 4g connection. Retard will be arrested for child porn anyway.

Not a good look.

Anonymous

6/14/2025, 1:19:58 PM

No.105590529

Anonymous

6/14/2025, 1:48:34 PM

No.105590732
