/ldg/ - Local Diffusion General

Anonymous

6/16/2025, 5:03:09 PM

No.105611115

>>105611096 (OP)

so what is the general consensus for wan genning? comfyui or wan2gp?

Anonymous

6/16/2025, 5:04:54 PM

No.105611132

>>105611124

>wan2gp

this if you don't want to constantly break the ui

Anonymous

6/16/2025, 5:09:37 PM

No.105611170

>>105611155

fucking based. anyone get this working?

Anonymous

6/16/2025, 5:12:17 PM

No.105611195

>>105611518

>>105611155

so no NAG? I don't get it

Anonymous

6/16/2025, 5:21:44 PM

No.105611282

unironically moving here to get away from the schizoposting

Anonymous

6/16/2025, 5:25:36 PM

No.105611324

>>105611392

SOMEBODY STOP THIS FUCKING MADMAN PLEASE!!!

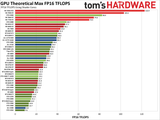

>>105610691

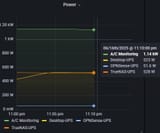

THIS... AI software is insanely unoptimized. Software optimizations are free and will give you much better progress than waiting for GPU manufacturers to stop raping us. 7900 XTX owner btw

Anonymous

6/16/2025, 5:26:56 PM

No.105611340

Anonymous

6/16/2025, 5:27:06 PM

No.105611342

>>105611356

> Software optimizations are free

go ahead. make those optimizations. you dumb cunt. see how free they really are.

Anonymous

6/16/2025, 5:28:34 PM

No.105611356

>>105612426

>>105611342

as opposed to making the GPU hardware optimizations myself? lmao

once someone publishes a good optimization for our software, we can all get a copy of the optimization and apply it for free. this is clearly what I meant.

Anonymous

6/16/2025, 5:32:01 PM

No.105611392

>>105611459

>>105611324

it's all python niggers. it won't happen

Anonymous

6/16/2025, 5:35:32 PM

No.105611420

>>105611124

ldg wan workflow if you actually want to get any quality

Anonymous

6/16/2025, 5:38:35 PM

No.105611450

>>105611463

Is there a benchmark for GPUs that's purely for AI performance? I want to fiddle with clocks and voltages.

Anonymous

6/16/2025, 5:39:26 PM

No.105611459

>>105611481

>>105611392

We've had some nice speedups already: low step loras like dmd2, attention optimizations, svdquant, tea/fbcache... really there are tons of optimizations that people barely use because they're not widely known or built into default workflows. improvements in torch and gpu drivers too.

yeah python devs and researchers are terrible when it comes to this, but eventually we will see more widespread improvements.

also, current model architectures are really inefficient. researchers are improving on this, though they focus too much on heavy/bloated MOAR PARAMETERS models.

the holy grail is if we make a breakthrough in local training performance that allows us to make our own models on a consumer GPU. if we get that, then local will enter a new golden age.

>>105611450

kind of old but not much has changed. the tech really stagnated

Anonymous

6/16/2025, 5:40:31 PM

No.105611469

>>105612766

>>105611463

No I mean like 3d mark, but for AI shit.

Anonymous

6/16/2025, 5:42:18 PM

No.105611481

>>105611459

there has been 0 improvements where you don't have to rape the quality of the model by quants. there is no way to get creative with optimizations being stuck using fucking torch all the time. it puts the responsibility on a very few people that already have to maintain the repo and even then memory management is a complete mess. fuck python niggers

Anonymous

6/16/2025, 5:45:01 PM

No.105611500

how do I train a chroma lora? Can I just use easy scripts flux settings but change base model?

Anonymous

6/16/2025, 5:45:12 PM

No.105611505

>>105612439

>she raises her fiery sword and slashes from the top right to the bottom left. cloth and hair flow softly in the wind

>wan 14b 480p q8, torchcompile on, teacache thresh: 0.190 start: 0.10, SLG on, adaptiveguider thresh:0.9995

>gen time 20 mins, first attempt

turn on adaptive guider and gen time increases. huh

Anonymous

6/16/2025, 5:46:46 PM

No.105611518

>>105611195

nag is snake oil

Anonymous

6/16/2025, 5:47:00 PM

No.105611520

>>105611553

Can you train a Chroma lora without using diffusion-pipe? I don't think it's supported in kohya_ss yet

Anonymous

6/16/2025, 5:50:15 PM

No.105611553

>>105611520

ai-toolkit has it I believe

Anonymous

6/16/2025, 6:05:49 PM

No.105611718

>>105611096 (OP)

blessed thread of true frenship

How is python even real...

Anonymous

6/16/2025, 6:10:29 PM

No.105611764

>>105613444

Anonymous

6/16/2025, 6:11:02 PM

No.105611768

>>105611758

fucking Christ anon

Anonymous

6/16/2025, 6:12:26 PM

No.105611780

>>105611812

>>105611758

thank you niggeranov, those millions of $ in funding is going to good use (affirming transexual's existance)

Anonymous

6/16/2025, 6:15:08 PM

No.105611812

>>105611828

>>105611780

but this is much less space than the image anon posted. it's still slop but not as much

Anonymous

6/16/2025, 6:16:36 PM

No.105611828

>>105611812

122MB every time you play with settings and the script OOMs

Anonymous

6/16/2025, 6:18:24 PM

No.105611844

>she raises her fiery sword and slashes from the top right to the bottom left. cloth and hair flow softly in the wind

>wan 14b 480p q8, torchcompile on, teacache thresh: 0.250 start: 0.10, SLG on, adaptiveguider thresh:0.9995

>gen time 17.5 mins

was wondering why these were taking forever, forgot I had sage and fast off

I will never post in a Miku thread

Anonymous

6/16/2025, 6:21:05 PM

No.105611870

>>105611901

>>105611865

incredibly based

Anonymous

6/16/2025, 6:21:21 PM

No.105611873

>>105611974

Anonymous

6/16/2025, 6:23:43 PM

No.105611901

>>105611865

>>105611870

Reminder that mikutroons that are permanently spamming and making these generals are jannies

This thread is just /sdg/. You could've just gone to /sdg/.

Anonymous

6/16/2025, 6:24:52 PM

No.105611918

>>105611903

that is the other thread throwing shit around calling each other avatarfags. this one is chill

Anonymous

6/16/2025, 6:26:29 PM

No.105611935

>>105611954

>>105611903

Yeah we noticed what your goal is "anon"

Anonymous

6/16/2025, 6:28:07 PM

No.105611954

>>105611935

can we not do this here? the schizo thread is for that kind of language.

anyone have any luck training wan loras on a 3090?

Anonymous

6/16/2025, 6:30:17 PM

No.105611988

>>105611903

take your bullshit somewhere else

Anonymous

6/16/2025, 6:34:13 PM

No.105612031

>>105612571

>>105611974

what's the point of the person lora? just better human cohesion?

the vace thing looks interesting? haven't really played much with vace.

>>105611096 (OP)

>/sdg/ is now /b/ teir ped0 slop

>/ldg/ is now tantrum central

>/gif/ AI threads devolved into dick spam

When did it all get so cursed?

Anonymous

6/16/2025, 6:44:19 PM

No.105612133

>>105612106

>>/gif/ AI threads devolved into dick spam

But that's based

Anonymous

6/16/2025, 6:44:56 PM

No.105612141

>>105612106

this is /ldg/ and I see no tantrums

NoobAI workflow with adetailer type of face detection and fix?

Anonymous

6/16/2025, 6:50:01 PM

No.105612196

>>105612173

it's honestly better to just make it yourself. what is the issue with that?

Anonymous

6/16/2025, 6:56:57 PM

No.105612263

mag cache is pretty damn good

Anonymous

6/16/2025, 7:13:27 PM

No.105612411

>>105611155

Need a GGUF quant before I can test it. Only 5090kings can test this out for us right now

Excited to see how much better it will be than FusionX if at all. Now that the coefficients thing fixed FusionX I'm fine with self forcing 14B being a dud

Anonymous

6/16/2025, 7:14:34 PM

No.105612422

>>105612571

>>105612106

sane ppl can only take so much shit before they leave for good.

>>105612173

https://www.youtube.com/@drltdata/videos

Anonymous

6/16/2025, 7:14:51 PM

No.105612426

>>105611356

>as opposed to making the GPU hardware optimizations myself? lmao

geohot did it and embarrassed Lisa Su, nocoder "lmao"

in either case it was not free.

Anonymous

6/16/2025, 7:16:30 PM

No.105612439

>>105612494

>>105611505

What the fuck are you doing? Why are you using adaptive guidance?

Why are you deliberately not reading the instructions for the values to set for teacache?

Why are you using q8 instead of GGUF quants?

I hate people with brainwaves like you where they feel they know better about everything and subconsciously live a shittier life as a result

Anonymous

6/16/2025, 7:19:21 PM

No.105612465

>>105612571

>>105611974

Seconding the question about the purpose of the person Lora. Sounds ripe for merging into the sequel to FusionX but not worth loading on its own. I have no issues with persons, not really at least, and I'd like more information on what the Lora actually helps with, which the description on HF does not provide

Anonymous

6/16/2025, 7:22:04 PM

No.105612494

>>105612594

>>105612439

nta but

>why are you using adaptive guidance

adaptive guidance increases speed at the expense of slight quality loss

>Why are you deliberately not reading the instructions for the values to set for teacache

rel_l1_thresh set to 0.19 is perfectly fine for medium quality wan2.1 i2v 480p. it's listed as a value in the info tooltip.

>Why are you using q8 instead of GGUF quants

...Q8 is a GGUF quant. You know, wan2.1 i2v 480p Q8.gguf

maybe im confused but is this anon trolling or something?
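For anyone wondering what rel_l1_thresh and start actually do: TeaCache measures how much the model input changes from one step to the next, and while the accumulated change stays under the threshold it reuses the previous step's output instead of running the model. Below is a simplified sketch under my own assumptions: the real implementation also rescales the distance with a model-specific polynomial, which is left out here, and `teacache_schedule` plus the distances in the test are made up for illustration. `rel_l1` shows how one step's distance could be measured.

```python
def rel_l1(prev, cur):
    """Relative L1 distance between two equally sized vectors."""
    num = sum(abs(c - p) for p, c in zip(prev, cur))
    den = sum(abs(p) for p in prev)
    return num / den if den else float("inf")


def teacache_schedule(distances, thresh, start=0.0):
    """Return True for steps that run the full model, False for cached steps.

    distances[i] is the relative L1 change of the model input between step
    i-1 and step i (distances[0] is ignored). Steps before `start`, given as
    a fraction of all steps, are always computed.
    """
    n = len(distances)
    decisions = []
    acc = 0.0
    for i, d in enumerate(distances):
        if i == 0 or i < start * n:
            decisions.append(True)  # warm-up: always compute
            acc = 0.0
            continue
        acc += d
        if acc < thresh:
            decisions.append(False)  # small total change: reuse the cached output
        else:
            decisions.append(True)  # change too large: recompute and reset
            acc = 0.0
    return decisions
```

With a constant change of 0.1 per step over 7 steps, a threshold of 0.19 computes 4 steps while 0.25 computes only 3. That is the same direction as the 0.190 vs 0.250 gen times posted earlier: a higher threshold means more reused steps, so faster gens at the cost of quality.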

Anonymous

6/16/2025, 7:29:07 PM

No.105612571

>>105612616

>>105612031

>just better human cohesion?

No idea but think so, I still have yet to test it

>>105612422

Yeah these threads are only good for the latest news

>>105612465

Kek, yeah the baker of the Fusion model mentioned it's the MPS rewards lora that changes the faces. It also has MoviiGen baked in, which also turns your input image into plastic square-jaw flux face

https://civitai.com/models/1678575

Anonymous

6/16/2025, 7:30:43 PM

No.105612594

>>105612494

>trolling?

Big words from someone using all these quality destroying nodes with shitty values when he could just go down to Q6

i wish a better shake ass lora existed. the current one is so hit or miss its near useless. sometimes you get an amazing seed but 80% of the time she's doing crazy shit. have to spend nearly an hour just to get 1 decent result

Anonymous

6/16/2025, 7:33:29 PM

No.105612616

>>105612601

Train one then.

>>105612571

Is this also for the t2v? Would explain why my girls have a lot more flux face with FusionX than with base wan

Anonymous

6/16/2025, 7:41:26 PM

No.105612716

>>105612737

>>105612601

its the best, super wobbly and sloppy. wan really knows fat asses and can part those cheeks like the red sea. but yes, half the time it will freak out and do something stupid.

Anonymous

6/16/2025, 7:42:54 PM

No.105612737

>>105612716

>wan really knows fat asses

It really does. I was surprised to see one of my Brazilian girls on the beach have sand on her asscheeks without me prompting for it which was a pleasantly lewd surprise

mag cache and that self-force lora for 14b are pretty good. can gen a 1008x560 (don't @ me) in ~90 seconds.

Anonymous

6/16/2025, 7:44:41 PM

No.105612760

I just like seeing funny AI pictures. Don't care about goonslop.

Anonymous

6/16/2025, 7:44:57 PM

No.105612766

>>105611469

Your voltage and frequency don't mean that much because you're stuck with X amount of CUDA cores. The denser the model, the slower it runs on those X cores.

It's that simple.
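A back-of-envelope version of that point, assuming the common approximation of about 2 FLOPs per parameter per token for a transformer forward pass and ignoring attention cost and memory bandwidth. The function names and numbers are hypothetical; the sketch only shows that step time scales with parameters times tokens, while a clock bump raises throughput at best proportionally.

```python
def step_seconds(params, tokens, tflops, utilization=0.5):
    """Rough time for one forward pass of a dense transformer.

    Assumes ~2 FLOPs per parameter per token, ignores attention and
    memory-bound work, and assumes the GPU sustains `utilization` of its
    peak `tflops` (units of 1e12 FLOP/s).
    """
    flops = 2.0 * params * tokens
    return flops / (tflops * 1e12 * utilization)


def overclocked_tflops(tflops, clock_gain):
    """Best case: peak throughput scales linearly with core clock (0.1 = +10%)."""
    return tflops * (1.0 + clock_gain)
```

Under these assumptions a +10% clock shortens a step by at most about 9% (1 - 1/1.1), and likely less in practice, while going from a 1.3B to a 14B model multiplies the work by roughly 10x.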

Anonymous

6/16/2025, 7:46:04 PM

No.105612781

>>105612805

>>105612757

but is the output hot garbo or not what you prompted

it's gotta be one of the two

>>105612781

no it works well so far. and it actually follows the prompt and loras, unlike the 1.3b self-forcing.

honestly with the speed these random performance enhancers are coming out i'd expect them to be old news within 2 weeks.

Anonymous

6/16/2025, 7:49:42 PM

No.105612818

>>105612842

>>105612805

most of them will just be snake oil anyways

Anonymous

6/16/2025, 7:50:59 PM

No.105612842

>>105612928

>>105612818

as is tradition.

hope we get a new video model soon or flux kontext so we can get an entire new wave of bullshit

Anonymous

6/16/2025, 7:57:25 PM

No.105612917

Anonymous

6/16/2025, 7:58:27 PM

No.105612928

>>105613148

>>105612842

except they keep bloating the fucking models with multimodal shit so I expect 48gb min to even run the new ones in fp16

Anonymous

6/16/2025, 8:03:59 PM

No.105612991

Anonymous

6/16/2025, 8:17:33 PM

No.105613148

>>105613471

>>105612928

>May I see it?

no

Anonymous

6/16/2025, 8:26:43 PM

No.105613243

>>105614997

Anonymous

6/16/2025, 8:33:42 PM

No.105613324

>>105611764

>cosmos predict2 gguf

anyone tested this?

Anonymous

6/16/2025, 8:44:00 PM

No.105613449

>>105613490

>>105613444

it's shitty cosmos so no

Anonymous

6/16/2025, 8:44:47 PM

No.105613455

>>105612805

here.

i take it back. it produces on average a pixely mess.

Anonymous

6/16/2025, 8:45:29 PM

No.105613461

is it just me or are realistic extreme body proportions impossible? the lighting and texture always turns into 2.5d clay sloppa if you try to do anything not realistic proportionally.

Anonymous

6/16/2025, 8:45:52 PM

No.105613471

>>105613648

>>105613148

Then your claims are worthless, anon. Why may I not see it?

Anonymous

6/16/2025, 8:46:37 PM

No.105613480

>>105613550

whats the best model for NSFW image inpainting? im currently using juggernautXL, but I'm open to trying new models

Anonymous

6/16/2025, 8:47:56 PM

No.105613490

>>105613550

Anonymous

6/16/2025, 8:51:31 PM

No.105613528

>>105616783

Anonymous

6/16/2025, 8:53:25 PM

No.105613550

>>105613480

I stick to the model I genned the stuff with which is either lustify (v5, not 6) or cyberrealistic 5.7. pussys need a lora unless you're into roastbeef.

>>105613490

1 out of 3 is an actual female, not bad.

Anonymous

6/16/2025, 9:03:15 PM

No.105613643

>>105613649

do people make vace lora

Anonymous

6/16/2025, 9:04:07 PM

No.105613648

>>105613716

>>105613471

that wasn't me.

after playing a bit more with it i take back what i said. it's definitely more pixely than default. and it also causes that degeneration of quality over generations. 2 gens later it produces only a fully noisy image. waste of time and snake oil confirmed.

Anonymous

6/16/2025, 9:04:09 PM

No.105613649

>>105613643

I hope not, they should make lora for the parent model

>>105613444

was gonna ask if you get the same errors on the edge(s) of your chroma gens but looking at your gens, yep lol

Anonymous

6/16/2025, 9:11:04 PM

No.105613716

>>105613648

ok turns out i might be retarded. am using the wrong/not the recommended samplers etc.

someone else test this while i go eat asphalt.

Anonymous

6/16/2025, 9:12:45 PM

No.105613729

>>105609317

Cute. There better be an innocent follow-up.

Anonymous

6/16/2025, 9:14:31 PM

No.105613745

>>105613686

Yeah I get them. Sometimes they're really bad, like the whole RGB scale runs through one edge

Anonymous

6/16/2025, 9:18:45 PM

No.105613792

>>105613819

>>105613792

what's sigmoid offset, does it improve results?

Anonymous

6/16/2025, 9:27:11 PM

No.105613875

>>105613819

it's some form of scheduler from silveroxides specifically for chroma. you can select it in the basic scheduler node too once you install it, but it comes with its own node with additional 'things'. can find it in the manager

Anonymous

6/16/2025, 9:28:17 PM

No.105613885

>>105613904

Man I'm trying to do porn but it's giving me such cinema cityscape

>>105613885

plz share a catbox of the uncensored

>>105613904

It's diapers, so no.

Anonymous

6/16/2025, 9:31:58 PM

No.105613916

>>105613958

>>105613911

Please share workflow anon

Anonymous

6/16/2025, 9:33:32 PM

No.105613929

>>105613958

>>105613911

most stuff in a catbox link is not against the rules, especially diapers.

Anonymous

6/16/2025, 9:35:32 PM

No.105613950

Why do my videos go fucking crazy when I use torch+sage+tea cache? With the regular out of the box WAN I get pretty decent videos, they just sorta look low framerate. When I add all this shit with the same LORAs and prompts the subjects go fucking crazy and fight each other

Anonymous

6/16/2025, 9:40:29 PM

No.105613995

>>105613958

Damn anon I asked for it but this is way better than I expected. Extremely based, thanks

Anonymous

6/16/2025, 9:42:32 PM

No.105614010

>>105614050

>>105613958

I'm the actual anon that asked for the catbox. Thank you for sharing.

Anonymous

6/16/2025, 9:42:35 PM

No.105614011

>>105614268

>>105613638

So can someone confirm if this is snake oil or not? Any quality loss? Is it a must have?

Anonymous

6/16/2025, 9:46:10 PM

No.105614050

>>105614010

You're right, but I also asked for the workflow and anon delivered. So I'm also grateful bro

Anonymous

6/16/2025, 9:47:07 PM

No.105614056

>>105614160

Anonymous

6/16/2025, 9:49:42 PM

No.105614080

>>105614012

what am i looking at

Anonymous

6/16/2025, 9:52:55 PM

No.105614106

>>105614160

Anonymous

6/16/2025, 9:58:24 PM

No.105614160

>>105613638

>>105614011

37.10s -> 19.63s

It does really crank up the speed, but the quality loss is huge. Until someone can take the time to dial in the perfect settings I think this one's a miss.

https://imgsli.com/Mzg5NjQ4

Anonymous

6/16/2025, 10:11:55 PM

No.105614298

>>105614447

>>105614268

Damn that's pretty rough quality hit

Anonymous

6/16/2025, 10:18:11 PM

No.105614355

why does high denoise with noise mask give such bad image to image results? the model doesn't take into account other parts of the image when denoising?

Anonymous

6/16/2025, 10:23:08 PM

No.105614402

>>105611096 (OP)

>still no napt

ew

>>105611865

honestly, same sis

Anonymous

6/16/2025, 10:28:04 PM

No.105614447

>>105614298

Although, since chroma quality can vary so much, it might be worth using magcache to hunt for good seeds?

Anonymous

6/16/2025, 10:32:06 PM

No.105614469

>>105613686

that happened on flux too

and ive also gotten it on sdxl but not as common

Anonymous

6/16/2025, 10:35:16 PM

No.105614488

>>105614493

Anonymous

6/16/2025, 10:35:19 PM

No.105614489

flaccid penis lora for wan just doesn't look right. it doesn't have good dick feel. its like it was trained on those silly strap on videos

Anonymous

6/16/2025, 10:35:43 PM

No.105614493

Anonymous

6/16/2025, 10:40:15 PM

No.105614533

what denoise sequence do you use when you do iterative image 2 image inpaint bwos
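Whatever sequence you pick, it helps to know what a denoise value does to the sampler. My understanding of ComfyUI's KSampler is that with denoise below 1 it builds a schedule for int(steps / denoise) steps and only runs the last `steps` of it, so a lower denoise starts from a lower noise level. The sketch below mimics that with a made-up linear sigma schedule (`linear_sigmas` is hypothetical; real schedulers such as karras or simple produce different curves).

```python
def linear_sigmas(n, sigma_max=1.0):
    """Hypothetical stand-in schedule: n + 1 values from sigma_max down to 0."""
    return [sigma_max * (1 - i / n) for i in range(n + 1)]


def sigmas_for_denoise(steps, denoise, schedule=linear_sigmas):
    """Stretch the schedule to int(steps / denoise) steps, then keep the tail."""
    if denoise > 0.9999:
        return schedule(steps)
    if denoise <= 0.0:
        return []
    total = int(steps / denoise)
    return schedule(total)[-(steps + 1):]
```

So 10 steps at 0.5 denoise runs 10 steps that start halfway down a 20-step schedule. For iterative inpainting, each pass at a lower denoise starts from a lower sigma, so each pass changes the image less than the one before.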

Anonymous

6/16/2025, 10:53:50 PM

No.105614639

>>105614617

TWO mikutroons? grim

Anonymous

6/16/2025, 10:55:09 PM

No.105614653

>>105614740

Name 1 reason why anyone would ever need more than picrel, a bed, and autosucc?

Protip: You literally can't.

How do I prompt something that is off-screen without the AI trying to bring it on screen?

Anonymous

6/16/2025, 10:57:40 PM

No.105614672

>>105614657

i wanted to do this with a campfire to have only the lighting. couldn't do it

Anonymous

6/16/2025, 10:58:15 PM

No.105614678

>>105614690

>apply 6 layers of snake oil

>somehow they all work together quite well and the quality's not taking that deep of a nosedive

Huh. Wan's the first model that can handle that much shit. 2 steps of MPS/HPS reward loras + Causvid/Accvid at CFG 5.5, then 6 steps of Causvid + Accvid + Self-Forcing + NAG + RescaleCFG at CFG1. And also some (((((quality)))))) loras because they actually somewhat help with contrast

https://files.catbox.moe/1i3r5x.mp4

btw for anyone who's used FusionX in i2v and wondered why the faces keep changing significantly, MPS/HPS loras are the main culprits behind it. Sadly they do boost prompt adherence somewhat so removing them completely is not ideal. Just gotta find the right settings
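Part of why the 2 + 6 step split described in this post stays cheap: with CFG above 1 each step needs a conditional and an unconditional pass, while at CFG 1 ComfyUI can, as far as I know, skip the unconditional pass. A quick count under exactly that assumption, ignoring NAG's extra attention work and LoRA overhead (`model_calls` is a hypothetical helper):

```python
import math


def model_calls(segments):
    """Count transformer forward passes for (steps, cfg) sampling segments.

    Assumes one pass per step at CFG 1 (unconditional branch skipped) and
    two passes per step otherwise (conditional + unconditional).
    """
    return sum(steps * (1 if math.isclose(cfg, 1.0) else 2) for steps, cfg in segments)
```

`model_calls([(2, 5.5), (6, 1.0)])` gives 10 passes, versus 16 if all 8 steps ran at CFG 5.5.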

Anonymous

6/16/2025, 11:00:04 PM

No.105614690

>>105614678

>the quality's not taking that deep of a nosedive

because it was never there to begin with, with that flux plastic grainy slop of an image, let alone the initial huge shift in the video and grainy shit motion and fps

Anonymous

6/16/2025, 11:01:13 PM

No.105614699

how did he stand long enough to spray that? you can tell the man is all fat and absolutely no muscle

Anonymous

6/16/2025, 11:01:40 PM

No.105614702

Hey guys I want to make videos like vid related, what ALL do I need?

Anonymous

6/16/2025, 11:06:21 PM

No.105614732

is there a node that takes a mask and gives a mask of the mask boundary
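Not sure which node pack ships this ready-made. In stock ComfyUI you may be able to build it by shrinking the mask with GrowMask (negative expand) and subtracting the result from the original with MaskComposite, if I remember those nodes right. The operation itself is a morphological gradient: boundary = mask minus its erosion (or dilation minus mask for an outer edge). Stdlib sketch on a binary 2D list, assuming 4-neighbour connectivity and treating pixels outside the image as empty:

```python
def erode(mask):
    """Keep a pixel only if it and its 4 neighbours are set (out of bounds counts as 0)."""
    h, w = len(mask), len(mask[0])

    def at(y, x):
        return mask[y][x] if 0 <= y < h and 0 <= x < w else 0

    return [
        [1 if at(y, x) and at(y - 1, x) and at(y + 1, x) and at(y, x - 1) and at(y, x + 1) else 0
         for x in range(w)]
        for y in range(h)
    ]


def inner_boundary(mask):
    """Pixels that are in the mask but not in its erosion."""
    eroded = erode(mask)
    return [[m & (1 - e) for m, e in zip(row, erow)] for row, erow in zip(mask, eroded)]
```

For a thicker edge, erode more than once before subtracting.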

Anonymous

6/16/2025, 11:07:28 PM

No.105614740

>>105614775

>>105614653

to feel her warmth against my skin, to hear her breath contently as our child grows in her.

Anonymous

6/16/2025, 11:08:23 PM

No.105614746

>>105614916

>>105614657

crop the image afterwards

Anonymous

6/16/2025, 11:12:32 PM

No.105614775

>>105614804

>>105614740

The question is: will that warmth be worth the nagging until the inevitable divorce when she gets bored after 5 years and says one word to end your life before taking half the shit and the kids?

Anonymous

6/16/2025, 11:17:34 PM

No.105614804

>>105614815

>>105614775

then don't marry the first person you meet who will inevitably cheat on your lil dick with tyrone

Anonymous

6/16/2025, 11:19:21 PM

No.105614815

>>105614804

>he doesn't know

Anonymous

6/16/2025, 11:27:56 PM

No.105614891

>>105611133

That's a man.

Anonymous

6/16/2025, 11:31:55 PM

No.105614916

>>105615512

>>105614746

I want the reflection of [described light] on the screen but it keeps putting the light itself into random places in the pic.

Anonymous

6/16/2025, 11:35:51 PM

No.105614948

>>105614994

>>105614617

THESE posts are unpruned every thread

rgal is always nuked

the absolute state

Anonymous

6/16/2025, 11:45:08 PM

No.105614994

Anonymous

6/16/2025, 11:45:17 PM

No.105614997

>>105615026

>>105611133

is this post facetious?

>>105612106

4chan was never good

but i must admit, i have never seen such autism regarding the copypasta for the baked threads

cringe.

>>105613243

would

Anonymous

6/16/2025, 11:50:59 PM

No.105615026

>>105615048

>>105614997

hey

just to let you know.

the containment thread is the other one. please go and post there.

thank you.

Anonymous

6/16/2025, 11:53:07 PM

No.105615048

>>105615070

Anonymous

6/16/2025, 11:56:58 PM

No.105615070

>>105615171

>>105615048

Don't go! You are very important person here and people are jealous.

Anonymous

6/16/2025, 11:57:48 PM

No.105615079

@105615070

d*b0

Anonymous

6/17/2025, 12:10:57 AM

No.105615171

>>105615070

so long lonesome

>>105613638

>>105614268

>Prompt executed in 12.15 seconds

Works pretty good if you fix up the ratios for detailed using the output from the calibration node.

https://imgsli.com/Mzg5NzEw

Anonymous

6/17/2025, 12:19:02 AM

No.105615238

>>105615586

>>105615222 (You)

>"chroma": np.array([1.0]*2+[1.09766, 1.11621, 1.07324, 1.07227, 1.0459, 1.04297, 1.03418, 1.03516, 1.04004, 1.04102, 1.01465, 1.01562, 1.0293, 1.0293, 1.02344, 1.02441, 1.02148, 1.02148, 0.99609, 0.99609, 1.0166, 1.01758, 1.00586, 1.00586, 0.99561, 0.99561, 1.00488, 1.00488, 1.00098, 1.00098, 1.00781, 1.00781, 1.00293, 1.00293, 1.00684, 1.00684, 0.99072, 0.99219, 1.00488, 1.00488, 0.98877, 0.98877, 0.98242, 0.98193, 0.98584, 0.98633, 0.96924, 0.9668, 0.91553, 0.9165]),

Anonymous

6/17/2025, 12:24:48 AM

No.105615293

picrel is magcache_k raised from the default of 2 to 5

>Prompt executed in 9.94 seconds
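Roughly what magcache_k controls: MagCache uses the calibrated per-step magnitude ratios to estimate how much error skipping a step would add, and K caps how many steps in a row may be skipped. Simplified sketch under my own assumptions: the accumulated error is the sum of |1 - running product of ratios|, and the threshold and ratios in the test are made up. The real node's bookkeeping (retention ratio, cond/uncond interleaving) differs in detail.

```python
def magcache_schedule(ratios, thresh, k):
    """Return True for steps that run the model, False for steps reusing the cache.

    ratios[i] is the calibrated magnitude ratio for step i. Skipping continues
    while the accumulated error stays <= thresh and fewer than k steps have
    been skipped in a row.
    """
    decisions = []
    acc_ratio, acc_err, skipped = 1.0, 0.0, 0
    for i, r in enumerate(ratios):
        if i > 0:
            acc_ratio *= r
            acc_err += abs(1.0 - acc_ratio)  # estimated error if this step is skipped
            if acc_err <= thresh and skipped < k:
                skipped += 1
                decisions.append(False)
                continue
        # Run the model and reset the error bookkeeping.
        acc_ratio, acc_err, skipped = 1.0, 0.0, 0
        decisions.append(True)
    return decisions
```

In this toy run, K=2 forces a recompute after two skips, while K=5 lets four steps in a row be skipped until the error budget runs out. That is consistent with raising K making gens faster at some quality risk.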

Anonymous

6/17/2025, 12:40:56 AM

No.105615410

type sdg

this yucky ldg pops up

Anonymous

6/17/2025, 12:42:26 AM

No.105615423

Anonymous

6/17/2025, 12:49:42 AM

No.105615469

>>105615489

Has stable diffusion gotten any faster/better yet over the last year or so? I stopped keeping track with SD 1.4 and SD XL/turbo lora

Anonymous

6/17/2025, 12:51:57 AM

No.105615481

>>105615493

>>105613638

how do i use this shit? i get "The inference steps of chroma must be 26." even though i have it set to more. i'm low iq

Anonymous

6/17/2025, 12:53:21 AM

No.105615489

>>105615469

XL was already lightning fast, nobody in their right mind is gonna waste time speeding it up when more modern models take an age

>>105615481

Set it to 26 steps dummy

Anonymous

6/17/2025, 12:54:57 AM

No.105615504

>>105615493

why does it have to be 26 steps THOUGH
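A likely explanation, assuming the calibrated array holds one ratio per model call with the conditional and unconditional passes interleaved (the values in the chroma arrays posted above do come in near-identical pairs). [1.0]*2 plus 50 values gives 52 entries, which works out to 26 sampling steps. Quick check with the first array posted above (`steps_from_ratios` is a hypothetical helper):

```python
# Ratios copied from the "chroma" entry quoted earlier in the thread.
chroma_ratios = [1.0] * 2 + [
    1.09766, 1.11621, 1.07324, 1.07227, 1.0459, 1.04297, 1.03418, 1.03516, 1.04004, 1.04102,
    1.01465, 1.01562, 1.0293, 1.0293, 1.02344, 1.02441, 1.02148, 1.02148, 0.99609, 0.99609,
    1.0166, 1.01758, 1.00586, 1.00586, 0.99561, 0.99561, 1.00488, 1.00488, 1.00098, 1.00098,
    1.00781, 1.00781, 1.00293, 1.00293, 1.00684, 1.00684, 0.99072, 0.99219, 1.00488, 1.00488,
    0.98877, 0.98877, 0.98242, 0.98193, 0.98584, 0.98633, 0.96924, 0.9668, 0.91553, 0.9165,
]


def steps_from_ratios(ratios, calls_per_step=2):
    """Number of sampling steps a calibrated ratio array covers.

    Assumes one entry per model call and `calls_per_step` calls per step
    (2 when CFG runs both a conditional and an unconditional pass).
    """
    if len(ratios) % calls_per_step:
        raise ValueError("ratio array length is not a multiple of calls_per_step")
    return len(ratios) // calls_per_step


print(len(chroma_ratios), steps_from_ratios(chroma_ratios))
```

If the node indexes this array by step, any other step count would run off the end or leave entries unused, which would explain the hard check.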

Anonymous

6/17/2025, 12:55:38 AM

No.105615512

>>105615561

>>105614916

what kind of light and what model?

also, could you show me an example pic?

i assume you've already tried putting the light source object in the neg prompt, so it will probably have to be done through different phrasing in the positive. directly mentioning the object is going to put it in the image because clip is stupid like that

>>105615493

lmao i was reading it as "at least 26" i'm retarded

speedrun this thread anons

>>105615541

ok, posting the worst gens from my folder

Anonymous

6/17/2025, 1:02:58 AM

No.105615552

>>105615549

comfyui problems, amirite?

Anonymous

6/17/2025, 1:03:21 AM

No.105615555

>>105615946

>>105615541

>>105615549

sounds like a good idea with failed gens

Anonymous

6/17/2025, 1:04:19 AM

No.105615561

>>105615848

>>105615512

I wanted the muzzle flash reflected in the lens, but it kept putting it in the pic.

>neg the light source

I haven't thought about that. I'm retared

Anonymous

6/17/2025, 1:05:11 AM

No.105615570

Anonymous

6/17/2025, 1:07:28 AM

No.105615586

>>105615238 (You)

>>105615513

>i'm retarded

Same. Here's new ratios without a custom lora enabled

> "chroma": np.array([1.0]*2+[1.10059, 1.09473, 1.08691, 1.08594, 1.05176, 1.05273, 1.0332, 1.03516, 1.04199, 1.04199, 1.01562, 1.0166, 1.0293, 1.0293, 1.02441, 1.02539, 1.02148, 1.02148, 0.99512, 0.99561, 1.0166, 1.0166, 1.00586, 1.00586, 0.99414, 0.99414, 1.00488, 1.00391, 0.99951, 0.99951, 1.00684, 1.00781, 0.99951, 1.0, 1.00488, 1.00488, 0.98926, 0.98926, 1.00195, 1.00195, 0.98389, 0.9834, 0.97656, 0.97656, 0.98047, 0.97998, 0.9585, 0.95898, 0.90137, 0.90186]),

Anonymous

6/17/2025, 1:15:25 AM

No.105615645

>>105615653

Anonymous

6/17/2025, 1:16:44 AM

No.105615653

Anonymous

6/17/2025, 1:18:56 AM

No.105615672

>>105615773

>>105615702

Only white men find this mantis phenotype attractive.

Anonymous

6/17/2025, 1:27:53 AM

No.105615735

>>105615705

Of course, some sdg anons took it upon themselves to hijack ldg, make a shit bake, then double down with fake collage bake.

Anonymous

6/17/2025, 1:33:40 AM

No.105615773

>>105615786

Anonymous

6/17/2025, 1:33:52 AM

No.105615777

chroma upscale x2, 4 tiles, 10 steps, 0.4 denoise, no detailer or inpaint. I like the upscales so far with v37. tried one of silveroxides' experimental hyper loras but not seeing any improvement at 10 steps.
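For reference, this is how an "x2, 4 tiles" upscale might lay out a 2×2 grid of overlapping tiles on the upscaled image, so that seams can be blended afterwards. The overlap value, the 2048×2048 size, and `tile_boxes` are hypothetical; each tiled upscaler node has its own padding/overlap and blending settings.

```python
import math


def tile_boxes(width, height, cols, rows, overlap):
    """Split a width x height image into cols x rows boxes (x0, y0, x1, y1).

    Each box extends overlap // 2 pixels past its grid cell on every side,
    clamped to the image bounds, so neighbouring tiles share `overlap` pixels.
    """
    tw, th = math.ceil(width / cols), math.ceil(height / rows)
    half = overlap // 2
    boxes = []
    for r in range(rows):
        for c in range(cols):
            x0 = max(0, c * tw - half)
            y0 = max(0, r * th - half)
            x1 = min(width, (c + 1) * tw + half)
            y1 = min(height, (r + 1) * th + half)
            boxes.append((x0, y0, x1, y1))
    return boxes
```

For a 1024×1024 source upscaled to 2048×2048 with a 64 px overlap, each tile is 1056×1056 and neighbours share a 64 px strip.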

>>105615222

very nice

>>105615705

your post is bugging me

>>105615773

yes

Anonymous

6/17/2025, 1:44:40 AM

No.105615846

>>105615702

she cute

t. White devil

Anonymous

6/17/2025, 1:45:00 AM

No.105615848

>>105615561

could also try something like "firing gun, (reflection:0.7), closeup" with barrel in negative
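The "(reflection:0.7)" part is the usual A1111/ComfyUI emphasis syntax: text in parentheses with a `:weight` suffix has its conditioning scaled by that weight, so values below 1 de-emphasize it. Minimal parser sketch for only the explicit `(text:weight)` form. It is not ComfyUI's actual parser, which also handles nesting, bare parentheses (x1.1), and escapes.

```python
import re

# Matches "(some text:0.7)" with a numeric weight; no nesting or escapes.
_WEIGHTED = re.compile(r"\(([^():]+):\s*([0-9]*\.?[0-9]+)\)")


def parse_weights(prompt):
    """Split a prompt into (text, weight) chunks; unweighted text gets 1.0."""
    chunks, pos = [], 0
    for m in _WEIGHTED.finditer(prompt):
        if m.start() > pos:
            chunks.append((prompt[pos:m.start()], 1.0))
        chunks.append((m.group(1).strip(), float(m.group(2))))
        pos = m.end()
    if pos < len(prompt):
        chunks.append((prompt[pos:], 1.0))
    return chunks
```

On the suggested prompt this yields "firing gun, " and ", closeup" at 1.0 and "reflection" at 0.7.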

Anonymous

6/17/2025, 1:47:34 AM

No.105615871

>>105615941

pol white power nixchecker spillover is so sad

Anonymous

6/17/2025, 1:52:05 AM

No.105615897

>>105615513

i found that magcache can be run at an arbitrary amount of steps with chroma when magcache calibration is connected
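That fits with how calibration is described in the MagCache paper, as I understand it: during one full run you record the ratio of residual magnitudes (model output minus input) between consecutive steps. If the calibration node does that for whatever step count you use, the fixed 26-entry-pair array is no longer needed. Stdlib sketch, assuming a single L2 norm per step over a flattened residual (`calibrate_ratios` is hypothetical):

```python
import math


def l2(v):
    """L2 norm of a flat list of numbers."""
    return math.sqrt(sum(x * x for x in v))


def calibrate_ratios(residuals):
    """Magnitude ratio between consecutive steps' residuals (output minus input).

    The first step has no predecessor, so it gets 1.0.
    """
    ratios = [1.0]
    for prev, cur in zip(residuals, residuals[1:]):
        ratios.append(l2(cur) / l2(prev))
    return ratios
```

Ratios close to 1.0 mean the residual barely changed between steps, which is what makes those steps good candidates for reuse.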

Anonymous

6/17/2025, 1:53:57 AM

No.105615914

>>105615939

>>105615786

Nag gives faster gens?

Anonymous

6/17/2025, 1:56:19 AM

No.105615939

>>105615914

does not speed up gens, but they are more coherent/detailed even without explicitly trying to remove things with the negative

with magcache it helps reduce it a little bit without affecting the quality, make sure the calibration node is set cause otherwise it removes detail

Anonymous

6/17/2025, 1:56:27 AM

No.105615941

>>105615871

>the mere existence of a white man ruins his day

broootal

Anonymous

6/17/2025, 1:56:56 AM

No.105615946

Anonymous

6/17/2025, 1:58:36 AM

No.105615958

Anonymous

6/17/2025, 1:58:48 AM

No.105615961

>>105616010

Anonymous

6/17/2025, 2:06:48 AM

No.105616010

>>105615961

She looks like she enjoys long philosophy talks with White human men.

Anonymous

6/17/2025, 2:15:11 AM

No.105616065

This isn't about AI samplers...

Anonymous

6/17/2025, 2:18:29 AM

No.105616089

Anonymous

6/17/2025, 2:21:12 AM

No.105616113

Anonymous

6/17/2025, 2:24:07 AM

No.105616127

magcache throws non-singleton dimension 1 errors when i'm not genning at 896x1024 precisely. oh well

>>105616023

is NAG of any use at CFG greater than 1?

Anonymous

6/17/2025, 2:33:59 AM

No.105616191

>>105616217

>>105616164

yes, nag_scale sort of acts as the cfg scale but it's independent of the sampler's cfg. it's not a cfg replacement. from the paper; "NAG is a general enhancement to standard guidance strategies, such as CFG, offering advancements in multi-step models."
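Going by the NAG paper and reference code as I understand them, the guidance acts on attention outputs rather than on the sampler's final prediction, which is why it can stack with CFG. It extrapolates away from the attention output computed with the negative prompt, clips the result's L1 norm to at most tau times the positive one, then blends back toward the positive output with alpha. Stdlib sketch for a single token's feature vector. `nag_scale` appears in this thread; the tau/alpha names and all three defaults here are assumptions.

```python
def nag(z_pos, z_neg, scale=5.0, tau=2.5, alpha=0.25):
    """Normalized attention guidance for one token's attention output.

    z_pos / z_neg: attention outputs computed with the positive and the
    negative context. Returns the guided output.
    """
    # 1. Extrapolate away from the negative branch: z_pos + (scale - 1) * (z_pos - z_neg).
    z_ext = [p * scale - n * (scale - 1) for p, n in zip(z_pos, z_neg)]
    # 2. Clip the L1-norm growth to at most tau times the positive norm.
    norm_pos = sum(abs(p) for p in z_pos)
    norm_ext = sum(abs(e) for e in z_ext)
    if norm_pos > 0 and norm_ext / norm_pos > tau:
        factor = tau * norm_pos / norm_ext
        z_ext = [e * factor for e in z_ext]
    # 3. Blend back toward the positive branch.
    return [alpha * e + (1 - alpha) * p for e, p in zip(z_ext, z_pos)]
```

When the negative output equals the positive one, the result is unchanged. The tau clip is what keeps large scales from blowing up the features.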

Anonymous

6/17/2025, 2:37:17 AM

No.105616208

>>105616238

>>105616164

it's supposed to replace CFG, so you have to put CFG 1 and deactivate it, that's the point, it's supposed to get the negative prompt effect of CFG while getting the speed of cfg 1
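The speed argument comes from the CFG formula itself: the sampler combines a conditional and an unconditional prediction as uncond + cfg * (cond - uncond), and in ComfyUI the negative prompt feeds the unconditional branch. At cfg 1 that reduces to the conditional prediction alone, so the unconditional pass can be skipped (ComfyUI does this by default, as far as I know). That is also why a negative prompt does nothing at cfg 1 without something like NAG. Minimal sketch on per-element predictions:

```python
def cfg_combine(cond, uncond, cfg):
    """Classifier-free guidance: uncond + cfg * (cond - uncond), element-wise."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]
```

At cfg 1 the output equals `cond` no matter what `uncond` (the negative branch) is.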

Anonymous

6/17/2025, 2:38:18 AM

No.105616217

>>105616238

>>105616191

>chroma_nag

how did you make it work on chroma?

Anonymous

6/17/2025, 2:39:58 AM

No.105616227

>>105616695

Is the ballsack grip legit?

>>105616217

https://github.com/Clybius/ComfyUI-ClybsChromaNodes/blob/main/chroma_NAG.py

>>105616208

honestly i'm not sure if this implementation is made for cfg 1 on the sampler specifically, previous gens i posted have cfg 5 on the sampler and nag_scale at 5.

if i set cfg to 1 it makes everything schizo like usual.

Anonymous

6/17/2025, 2:43:44 AM

No.105616254

>>105616260

Anonymous

6/17/2025, 2:44:54 AM

No.105616260

>>105616254

weird, they are commented but load properly.

Anonymous

6/17/2025, 2:46:35 AM

No.105616268

Anonymous

6/17/2025, 2:47:01 AM

No.105616271

>>105616238

>if i set cfg to 1 it makes everything schizo like usual.

that's weird, because NAG is supposed to make it work at cfg 1

SO MUCH FUCKING SNAKEOIL

JESUS CHRIST

>>105616238

>https://github.com/Clybius/ComfyUI-ClybsChromaNodes/blob/main/chroma_NAG.py

it's not working for me

>RuntimeError: mat1 and mat2 must have the same dtype, but got Half and BFloat16

>>105616348

oiling my snake rn

>>105616365

try removing rescalecfg. and set clip type to stable_diffusion, install the fluxmod nodes and put "padding removal" after the prompt conditioning. this is because comfy's implementation of chroma (with the min_padding 1 node) does not work properly and he still hasn't corrected it.

Anonymous

6/17/2025, 3:09:23 AM

No.105616429

>>105616472

>>105616348

that one is not snake oil at all, it keeps wan's quality while only taking 1 min

>>105616023

Anonymous

6/17/2025, 3:11:31 AM

No.105616444

>>105616452

>>105615541

failed gens? ok

Anonymous

6/17/2025, 3:11:58 AM

No.105616446

>>105616473

This is probably my finest work yet. No, I am not going to share my workflow.

Anonymous

6/17/2025, 3:12:26 AM

No.105616450

>>105616501

>>105616389

still got that error

Anonymous

6/17/2025, 3:12:39 AM

No.105616452

>>105616444

>(((globe earth)))

Anonymous

6/17/2025, 3:15:38 AM

No.105616472

>>105616502

>>105616429

>blank expression

>robotic movement

>'bro it keeps the quality'

*yawn*

Anonymous

6/17/2025, 3:15:43 AM

No.105616473

>>105616486

>>105616446

honestly fucked up hands never bothered me, it's almost nostalgic now

Anonymous

6/17/2025, 3:16:37 AM

No.105616479

>>105616484

Anonymous

6/17/2025, 3:17:09 AM

No.105616484

>>105616479

entirely antisemitic

Anonymous

6/17/2025, 3:17:37 AM

No.105616486

>>105616473

What do you mean? It's not fucked. Retards like you should not be allowed to even post.

Anonymous

6/17/2025, 3:18:12 AM

No.105616492

>>105616742

>>105616481

Prompt and lora? I can't get something similar with any of the ones that I tried from civit

Anonymous

6/17/2025, 3:18:13 AM

No.105616493

>>105616481

i like how she already had some in her mouth

Anonymous

6/17/2025, 3:18:46 AM

No.105616497

Anonymous

6/17/2025, 3:19:13 AM

No.105616501

>>105616389

>>105616450

what torch version do you have? maybe that's the issue?

>>105616348

>>105616472

Literally everything after slg has been some bullshit cope that'll speed up wan/whatever, and the cost is either a huge visual quality loss, a loss of prompt adherence, or weird, uncanny movements and dead-looking faces

they always say "dude it's so fast". they never post a good video gen

Anonymous

6/17/2025, 3:23:16 AM

No.105616523

>>105616502

this. magcache is SHIT. It doesn't degrade the quality much but it absolutely fucks with the motion and makes it non-existent.

Anonymous

6/17/2025, 3:23:56 AM

No.105616526

>>105616638

>>105616518

>fluxd

>retarded statement

that's debo, right? another one for my filter kek

Anonymous

6/17/2025, 3:24:52 AM

No.105616533

>>105616577

I want more quality not more speed

Anonymous

6/17/2025, 3:25:14 AM

No.105616537

>>105616502

>bullshit cope

Yeah, because VRAMlets and retards with ancient GPUs keep bitching about how slow Wan is, so other retards try to clout farm them by offering cheap "fixes"

Anonymous

6/17/2025, 3:30:30 AM

No.105616577

>>105616533

>I want more quality not more speed

yeah, if veo3 gets a little cheaper with a larger monthly credit allowance, i'd jump on it for a few months

Anonymous

6/17/2025, 3:38:35 AM

No.105616635

>>105616348

slurp slurp sllllluuuuuuuuuurrrrrp

Anonymous

6/17/2025, 3:38:49 AM

No.105616638

>>105616526

enjoy your safespace buddy

Anonymous

6/17/2025, 3:39:45 AM

No.105616644

Anonymous

6/17/2025, 3:47:09 AM

No.105616695

>>105616822

>>105616227

tried a girl soldier prompt

Anonymous

6/17/2025, 3:47:27 AM

No.105616698

Anonymous

6/17/2025, 3:49:24 AM

No.105616704

Anonymous

6/17/2025, 3:50:55 AM

No.105616718

Anonymous

6/17/2025, 3:51:45 AM

No.105616726

>>105616750

>most interesting videos to be posted ITT just happen to be posted right now and we will probably never see that anon again

hm...

>>105616492

It's the big splash, titty jiggle and cumshot loras. I posted a catbox in the other thread.

Anonymous

6/17/2025, 3:54:04 AM

No.105616744

>>105617049

>>105616518

>they always say "dude it's so fast". they never post a good video gen

how about that one? it's a 720p I2V render and it took me only 4 min (4 steps)

Anonymous

6/17/2025, 3:55:10 AM

No.105616747

>>105616481

>>105616742

Peter Parker strikes again

Anonymous

6/17/2025, 3:55:20 AM

No.105616750

>>105616726

>>105616481

no need for drama man, one dude posted a vid showcasing the distill lora, which is great.

>>105616481

and this dude's is nice

Anonymous

6/17/2025, 3:59:26 AM

No.105616783

>>105613528

this one was good too

Anonymous

6/17/2025, 4:00:49 AM

No.105616792

>>105616854

>>105616742

what other thread? it's not in any recent ldg threads

Anonymous

6/17/2025, 4:04:39 AM

No.105616822

>>105616695

>that rightmost girl manifesting the glass out of nowhere

kek

Anonymous

6/17/2025, 4:09:50 AM

No.105616854

>>105616898

>>105616792

Hmm couldn't find it, here's a link for one from this thread.

https://files.catbox.moe/klrfp9.webm

Anonymous

6/17/2025, 4:16:49 AM

No.105616898

>>105616365

Has anyone made an x/y plot of NAG? Is it another snake oil?

Anonymous

6/17/2025, 4:28:19 AM

No.105616973

>>105616961

I'm not dealing with that spaghetti

>>105616961

not a xy plot but you can see NAG VS no NAG here

>>105616023

>>105616078

>>105616171

>>105616979

Any without some shitty distillation lora?

Anonymous

6/17/2025, 4:31:29 AM

No.105616996

>>105616979

interesting, a sidegrade for video gen? I wanna see how it performs with Chroma

Anonymous

6/17/2025, 4:32:25 AM

No.105617006

>>105616990

nope, be the change you want to see

>>105616990

I'm not posting comparisons, but it turns a nearly 20-minute, 50-step 480p gen into 7 minutes on my 3090. Unfortunately, the quality is pretty bad compared to a normal gen. And SLG doesn't seem to work with it when using a non-distilled Wan, or it needs different settings than the default.

So yeah, seems to be useful for causvid and whatever-the-fuck lora users but might otherwise be a wash.

Anonymous

6/17/2025, 4:35:20 AM

No.105617026

>>105617041

>>105617020

>seems to be useful for causvid

what? it's supposed to replace causvid

Anonymous

6/17/2025, 4:36:20 AM

No.105617034

>>105617041

>>105617020

>I'm not posting comparisons, but it turns a nearly 20-minute, 50-step 480p gen into 7 minutes on my 3090. Unfortunately, the quality is pretty bad compared to a normal gen.

but if you go for 720p you'll wait longer, though still less than the 20 min of your 480p gen, and the quality will be higher?

>>105617026

Eh, whatever, I don't use crapvid or any distillation because the gens are shit. It makes sense though, because with NAG, the gens are also shit.

>>105617034

I dunno, try it yourself maybe. The quality was so bad compared to the normal gens at the same seeds that I gave up. I tried a few different settings too. If anyone else has better results/settings, post em I guess.

Anonymous

6/17/2025, 4:39:13 AM

No.105617049

>>105617041

>If anyone else has better results/settings, post em I guess.

there's this

>>105616744

Anonymous

6/17/2025, 4:45:25 AM

No.105617077

>>105617085

>>105617071

"This works with I2V 14B. I'm using .7 strength on the forcing lightx2v LORA (not sure if that's right but just left the same as Causvid). CFG 1 Shift 8, Steps 4 Scheduler: LCM. I'm using .7-.8 strength on my other LORAs as well but I always do so probably no change there."

will try

Anonymous

6/17/2025, 4:45:31 AM

No.105617078

first test, 75 seconds

using

>>105617071

4080, 75s, with the settings from

>>105617077

Anonymous

6/17/2025, 4:47:46 AM

No.105617091

>>105617126

>>105617085

try with strength 1, works fine for me that way

Anonymous

6/17/2025, 4:47:49 AM

No.105617092

>>105617085

oops, had it at unipc, not lcm, will try that next.

Anonymous

6/17/2025, 4:50:04 AM

No.105617106

>>105617126

>>105617085

now with lcm, miku drinking water. 73s on a 4080.

this is VERY impressive, before gens would take 300-400s with teacache enabled.

Anonymous

6/17/2025, 4:52:04 AM

No.105617116

>>105617041

>because with NAG, the gens are also shit.

nah, NAG definitely improves the quality of the video at cfg 1

Anonymous

6/17/2025, 4:53:25 AM

No.105617126

>>105617136

>>105617106

also, the neat thing is this is a t2v lora. but it works absolutely fine with i2v.

>>105617091

will try

Anonymous

6/17/2025, 4:54:26 AM

No.105617136

>>105617126

>will try

add NAG as well, it's a pretty neat addition

it's amazing how good wan 2.1 is even before all these speed tweaks. teacache got me from 15 mins to like 5 mins. now I can gen with 4 steps in just over a minute.
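
Rough arithmetic behind the speedups quoted in this thread, counting only diffusion-model forward passes: the sampler evaluates the model twice per step (positive and negative prompt) when cfg is above 1, and once at cfg 1. This ignores text encoding, VAE decoding, frame interpolation and anything TeaCache skips, so it is an upper bound on the speedup rather than a prediction. The 50-step, cfg 5 baseline is just an example in line with settings mentioned in the thread.

```python
def model_passes(steps, cfg):
    """Forward passes of the diffusion model for one gen.

    Assumes the sampler skips the negative/uncond pass at cfg == 1
    and runs cond + uncond on every step otherwise.
    """
    return steps * (1 if cfg == 1.0 else 2)


baseline = model_passes(steps=50, cfg=5.0)   # e.g. a non-distilled 50-step run
distilled = model_passes(steps=4, cfg=1.0)   # the 4-step, cfg 1 lora settings
print(baseline, distilled, baseline / distilled)  # → 100 4 25.0
```

The wall-clock gains reported above (roughly 15 min down to just over a minute) are smaller than this 25x ratio, which is what you would expect if fixed costs such as decoding and interpolation stay the same.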

Anonymous

6/17/2025, 4:55:34 AM

No.105617145

>>105617139

and it looks like garbage. fuck off

Anonymous

6/17/2025, 4:56:30 AM

No.105617150

>>105617139

also I should note a lot of that time is interpolating the clip, it's actually faster than that. my rentry workflow has regular + interpolated video.

Anonymous

6/17/2025, 4:57:10 AM

No.105617157

>>105617165

>>105617139

>now I can gen with 4 steps in just over a minute.

yeah I'm really impressed by it, it's way better than causvid, that's for sure. the only caveat so far is that it doesn't seem to respond to SLG

Anonymous

6/17/2025, 4:57:31 AM

No.105617159

>>105617216

put em up

strength set to 1.0

Anonymous

6/17/2025, 4:58:32 AM

No.105617165

>>105617157

I have teacache/slg bypassed; I think they didn't play well with causvid either. Still, this is rapid i2v generation. Even online sites weren't this fast. Even Google isn't this fast yet.

Open source always wins.

Anonymous

6/17/2025, 4:59:03 AM

No.105617168

>no one sperging out about miku in the thread baked to get away from miku

>nb4 "spergout"

this is amazing. 83 seconds on a 4080 and i'd say 10 seconds of that is interpolating.

delicious mikudonalds

Anonymous

6/17/2025, 5:01:45 AM

No.105617188

>>105617201

>>105617178

that one is clean but she's an amputee now :(

>>105617188

74 seconds

it just works

Anonymous

6/17/2025, 5:06:04 AM

No.105617216

>>105617239

Anonymous

6/17/2025, 5:06:06 AM

No.105617217

Anonymous

6/17/2025, 5:08:29 AM

No.105617237

>>105617275

this lora + 14b wan is genuinely impressive, idk how it works but it does. 78 seconds (with interpolating)

this is the regular video.

1.0 strength, cfg 1, shift 8, steps 4, lcm scheduler
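
On what "shift 8" does: as far as I know, ComfyUI's flow-matching model sampling maps a timestep t in [0, 1] to a noise level sigma = shift * t / (1 + (shift - 1) * t). The sketch below applies that formula to a uniform 4-step grid. The uniform grid is a simplifying assumption; ComfyUI's schedulers pick sigmas from the model's own table, so the exact values in a real run may differ.

```python
def time_shift(t, shift):
    """Flow-matching time shift: pushes noise levels toward the high-noise end when shift > 1."""
    if shift == 1.0:
        return t
    return shift * t / (1.0 + (shift - 1.0) * t)


def shifted_sigmas(steps, shift):
    """steps + 1 sigmas: a uniform grid from 1 down to 0 (assumed), then shifted."""
    return [time_shift(1.0 - i / steps, shift) for i in range(steps + 1)]


print([round(s, 3) for s in shifted_sigmas(4, 8.0)])  # → [1.0, 0.96, 0.889, 0.727, 0.0]
```

With shift 8, three of the four steps start at a noise level of 0.72 or higher and the last step drops straight to 0, so most of the step budget goes to the high-noise part of the trajectory.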

Anonymous

6/17/2025, 5:08:40 AM

No.105617239

>>105617216

>hatsune... to mik-u

kek

>>105617201

>it just works

yeah, soon enough they'll find a way to keep the quality at 1 step. we're reaching the peak, we're so back. it even got the KJ Boss seal of quality

https://www.reddit.com/r/StableDiffusion/comments/1lcz7ij/comment/my4nuq2/?utm_source=share&utm_medium=web3x&utm_name=web3xcss&utm_term=1&utm_content=share_button

>They have truly had big impact on the Wan scene with first properly working distillation, this one (imo) best so far.

Anonymous

6/17/2025, 5:13:44 AM

No.105617270

>>105617254

at this point, I wonder if BFL is delaying kontext dev because they want to try this distillation method as well and get better dev quality out of it

Anonymous

6/17/2025, 5:14:15 AM

No.105617275

Anonymous

6/17/2025, 5:14:23 AM

No.105617276

>>105617294

>>105617254

i2v is the most fun thing with AI, and now we can make videos super fast. this, in tandem with illustrious/noob or flux for base images, means we can do anything.

Anonymous

6/17/2025, 5:14:35 AM

No.105617278

>>105617329

>glazing distillation that makes the model look a generation behind what it is

*yawn*

Anonymous

6/17/2025, 5:17:06 AM

No.105617291

>>105617307

a doll of anime girl Miku Hatsune puts on a black top hat and bows.

plushie was generated, then i2v'd. kinda neat desu

Anonymous

6/17/2025, 5:18:19 AM

No.105617294

>>105617315

>>105617276

>i2v is the most fun thing with AI and now we can make videos super fast.

that's true, I had a lot of fun with Wan I2V when it was released but at some point I gave it up because I had to wait 20+ minutes to get a decent video out of it, now that it's way faster I can play with this toy again, feelsgoodman

Anonymous

6/17/2025, 5:19:42 AM

No.105617307

>>105617291

that's kawaii

Anonymous

6/17/2025, 5:20:37 AM

No.105617314

ai generating is using as much power as my 500tb beefy server, fuck. this is about $240/mo in electricity alone. generating makes the room hot so i need the a/c on.

Anonymous

6/17/2025, 5:20:39 AM

No.105617315

>>105617337

>>105617294

time was always the issue, now it is basically a minute for a gen, originally 15 min even on a 4090. open source is pretty neat.

also this is a test gen and not what I wanted but look at the reflections, pretty cool how an AI model can figure this stuff out with no actual physics or material simulation.

a plushie with pink hair does a backflip.

getting closer! in any case, gens are far faster now. i'd love to know *how* this lora accelerates the process; I know there are turbo SDXL loras but i'm not sure how they work exactly.

Anonymous

6/17/2025, 5:23:07 AM

No.105617329

>>105617345

>>105617278

It's the same anon who overhypes every quality-destroying speedup. As the other anon said, I only care about quality. Patience is a virtue.

Anonymous

6/17/2025, 5:23:57 AM

No.105617337

>>105617315

>>105617319

cute, thought she was going to brap in the first one

Anonymous

6/17/2025, 5:24:26 AM

No.105617341

>>105617319

>I know there are turbo SDXL loras but im not sure how they work exactly.

it's a new distillation method called "self forcing", I was never a fan of distillation stuff because it always reduced the quality hard, but that's the first time I can confidently say the speed increase is worth it

>>105617329

look how clean this jacket wearing is, it's a billion times better than causvid was.

Anonymous

6/17/2025, 5:26:14 AM

No.105617352

Alright shillies, I'll try it.

You better not be lying.

Anonymous

6/17/2025, 5:26:27 AM

No.105617355

we are entering a new age of rapid i2v genning anons.

>>105617345

How well does it work with other loras? What about realism?

Anonymous

6/17/2025, 5:28:49 AM

No.105617374

>>105617360

>>105617254

check the reddit link this anon posted, the guy said he is using other loras with it.

Anonymous

6/17/2025, 5:29:09 AM

No.105617375

>>105617360

not sure, just testing various i2v gens, only the speed lora at 1.0 strength.

Can I videogen with 12 gigs of vram?

Anonymous

6/17/2025, 5:30:02 AM

No.105617381

>>105617430

so the only thing worth it is just the distilled Lora? nothing else?

Anonymous

6/17/2025, 5:30:11 AM

No.105617382

yep, this is the real deal. fast and quality.

an asian girl puts on a white baseball cap that says "LDG" in black text.

Anonymous

6/17/2025, 5:30:40 AM

No.105617386

>>105617401

Anonymous

6/17/2025, 5:31:33 AM

No.105617392

>>105617254

top kek, kino is back on the menu boys!

Anonymous

6/17/2025, 5:32:03 AM

No.105617395

>>105617378

you can run plenty of stuff with the multigpu node. for wan I use q8 on a 4080 (16gb) with virtual vram set to 10.0.

Anonymous

6/17/2025, 5:32:39 AM

No.105617401

>>105617378

>>105617386

you can get a bigger quant and offload a bit of it to RAM with that node. anyway, all the details are in the rentry guide
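
Back-of-envelope for the 12 GB question: weight size is roughly parameter count × bits per weight / 8. The figures below are assumptions: ~14B parameters for Wan 14B, and bits per weight from the GGUF block layouts (Q8_0 stores 32 weights in 34 bytes, 8.5 bpw; Q4_0 stores 32 in 18 bytes, 4.5 bpw). Real GGUF files keep some tensors at higher precision, and activations, the text encoder and the VAE are not counted, so treat the results as a floor. I'm also assuming the node's virtual VRAM setting means "GB of weights moved to system RAM"; `resident_gib` is a hypothetical helper for that arithmetic, not part of any node.

```python
GIB = 1024 ** 3

# Approximate bits per weight for a few formats (see assumptions above).
BITS_PER_WEIGHT = {"fp16": 16.0, "Q8_0": 8.5, "Q4_0": 4.5}


def weights_gib(params, quant):
    """Size of the model weights alone, in GiB."""
    return params * BITS_PER_WEIGHT[quant] / 8 / GIB


def resident_gib(params, quant, offload_gib):
    """Weights left on the GPU after offloading `offload_gib` GiB to system RAM (hypothetical)."""
    return max(weights_gib(params, quant) - offload_gib, 0.0)


for q in BITS_PER_WEIGHT:
    print(q, round(weights_gib(14e9, q), 1))
print("Q8_0 with 10 GiB offloaded:", round(resident_gib(14e9, "Q8_0", 10.0), 1))
```

By this arithmetic, Q8 weights alone are close to 14 GiB, so a 12 GB card needs either a smaller quant or some offloading; how much headroom remains for activations depends on resolution and frame count.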

Anonymous

6/17/2025, 5:34:03 AM

No.105617404

Anonymous

6/17/2025, 5:35:47 AM

No.105617410

>eve, what's the best platform to have?

Anonymous

6/17/2025, 5:38:11 AM

No.105617419

>>105617426

>but it has no quality

wrong.

an asian girl takes off her green jacket to reveal a black bra.

it's safe.

Anonymous

6/17/2025, 5:39:51 AM

No.105617426

>>105617419

>but the color is wrong!

this one got it right. last eve, then i'll test new stuff.

Anonymous

6/17/2025, 5:40:26 AM

No.105617430

>>105617441

>>105617381

>so the only thing worth it is just the distilled Lora? nothing else?

I think it's the combination of this lora + NAG that makes it so good. it's funny that the two of them appeared within a week of each other, almost as if they were destined to work together

>>105617430

I haven't even used NAG yet and my outputs are decent. What does it do? Does it work in tandem with the lora?

Anonymous

6/17/2025, 5:44:42 AM

No.105617449

>>105617471

so magcache + nag + light2v for max wan?

Anonymous

6/17/2025, 5:44:43 AM

No.105617450

>>105617441

Gives you back functioning negative prompt when using CFG 1 with Wan 2.1. Normally when using CFG 1, negative prompt is skipped which is where half the time savings from using CausVid, AccVid, and now Self Forcing comes from.
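
The standard classifier-free guidance formula shows why: the sampler mixes the positive-prompt and negative/unconditional predictions as uncond + cfg × (cond − uncond). At cfg = 1 that reduces to cond, so the negative prompt has no effect and its model pass can be skipped. A minimal sketch on plain lists; the function names and the toy model are illustrative, not ComfyUI's API.

```python
from typing import Callable, List

Vector = List[float]


def cfg_combine(cond: Vector, uncond: Vector, cfg: float) -> Vector:
    """Classifier-free guidance: extrapolate from the negative toward the positive prediction."""
    return [u + cfg * (c - u) for c, u in zip(cond, uncond)]


def guided_prediction(model: Callable[[str], Vector], pos: str, neg: str, cfg: float) -> Vector:
    """One step's prediction; at cfg == 1 the negative prompt is never evaluated."""
    cond = model(pos)
    if cfg == 1.0:
        return cond  # uncond + 1 * (cond - uncond) == cond, so skip the second pass
    return cfg_combine(cond, model(neg), cfg)


calls: List[str] = []


def toy_model(prompt: str) -> Vector:
    """Stand-in for the diffusion model: records each call and returns a fake prediction."""
    calls.append(prompt)
    return [float(len(prompt)), 0.0]


guided_prediction(toy_model, "a girl drinking water", "blurry, static", cfg=1.0)
print(len(calls))  # → 1: only the positive prompt was evaluated
calls.clear()
guided_prediction(toy_model, "a girl drinking water", "blurry, static", cfg=5.0)
print(len(calls))  # → 2
```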

Anonymous

6/17/2025, 5:44:58 AM

No.105617452

>>105617457

Anonymous

6/17/2025, 5:45:01 AM

No.105617453

>>105617441

>What does it do?

it replaces CFG: you get the effect of the negative prompt while getting (kinda) the speed of cfg 1

https://chendaryen.github.io/NAG.github.io/

Anonymous

6/17/2025, 5:45:55 AM

No.105617457

Anonymous

6/17/2025, 5:51:37 AM

No.105617471

>>105617483

>>105617449

>so magcache + nag + light2v for max wan?

no, teacache and magcache are supposed to skip some "useless" steps, but when you're on 4 steps there's nothing to skip lol
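
The intuition can be sketched as follows. Step caches like TeaCache and MagCache estimate how much the model's output would change between consecutive steps, and reuse the previous result while the accumulated change stays under a threshold. This is a simplified illustration, not either project's actual metric or code. The per-step change values, the threshold, and the "always compute the first and last step" rule are assumptions for the example.

```python
from typing import List


def plan_steps(step_changes: List[float], threshold: float) -> List[bool]:
    """Return True for steps that run the model, False for steps that reuse the cache.

    step_changes[i] is an assumed estimate of how much step i's output differs
    from step i-1's; changes accumulate until they reach the threshold.
    """
    n = len(step_changes)
    plan: List[bool] = []
    accumulated = 0.0
    for i, change in enumerate(step_changes):
        if i == 0 or i == n - 1:
            plan.append(True)   # always compute the first and last step
            accumulated = 0.0
            continue
        accumulated += change
        if accumulated < threshold:
            plan.append(False)  # small drift so far: reuse the cached output
        else:
            plan.append(True)   # drift too large: recompute and reset
            accumulated = 0.0
    return plan


# 50 small steps: most can be skipped.
print(sum(plan_steps([0.1] * 50, threshold=0.35)), "of 50 steps computed")  # → 14 of 50 steps computed
# 4 large steps (distilled schedule): nothing gets skipped.
print(plan_steps([0.5] * 4, threshold=0.35))  # → [True, True, True, True]
```

With a 4-step distilled schedule each step moves the sample a lot, so there is little or nothing left to skip.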

Anonymous

6/17/2025, 5:54:07 AM

No.105617483

>>105617498

>>105617471

so if i'm using this i don't need to use teacache and slg at all then?

Anonymous

6/17/2025, 5:56:14 AM

No.105617498

>>105617483

yeah you don't need teacache anymore, and as for slg, I tried it but it didn't change anything

Anonymous

6/17/2025, 7:18:24 AM

No.105618071

>>105611463

>2060 near bottom

That's my boy :)

Anonymous

6/17/2025, 9:45:14 AM

No.105618916

>>105619022

is there a list of expressions and stuff? like i can't for the life of me find out what people call that :3> (:3 but open mouth) face

Anonymous

6/17/2025, 10:04:40 AM

No.105619022
