/lmg/ - Local Models General

Anonymous

6/21/2025, 4:15:02 PM

No.105661791

>>105662597

►Recent Highlights from the Previous Thread:

>>105652633

(1/2)

--Papers:

>105654168 >105660467

--GRPO-CARE improves reasoning consistency in multimodal models on SEED-Bench-R1:

>105652873

--Critique of Musk's plan to rewrite training data with Grok and its implications for bias, accuracy, and ideological filtering:

>105660294 >105660320 >105660328 >105660337 >105660341 >105660343 >105660346 >105660373 >105660347 >105660459 >105660482 >105660515 >105660526 >105660532 >105660568 >105660559 >105660571 >105660632 >105660667 >105660680 >105660726 >105660725 >105660759

--Prompting techniques and philosophical debates around LLM self-awareness and AGI consciousness:

>105654616 >105655666 >105655670 >105655699 >105655705 >105655838 >105656283 >105656425 >105656438 >105661576 >105656770 >105656873 >105656965 >105657043 >105657220 >105657504 >105657611 >105657622 >105657813 >105657859 >105657863 >105658064 >105658111 >105658190 >105658268 >105660409

--Debating the optimal sampler chain for creative writing: XTC -> top nsigma -> temp:

>105656828 >105656912 >105656987 >105657078 >105657213 >105658087 >105658212 >105658389 >105658469 >105658613 >105658721 >105658846 >105658905 >105659069 >105659183 >105659447

--Debating AGI claims amid LLM consciousness and capability limitations:

>105652855 >105653377 >105653577 >105655036 >105655147 >105655182 >105655232 >105655345 >105655453 >105655493 >105655500 >105658428

--Mistral Small 3.2 shows measurable improvements in repetition and instruction following, prompting speculation on training methodologies:

>105658424 >105658467 >105658488 >105658665 >105658676 >105658776 >105658696 >105658710 >105658525 >105658938 >105658951

--LongWriter-Zero-32B excels in ultra-long text generation via reinforcement learning:

>105661432 >105661490 >105661519

►Recent Highlight Posts from the Previous Thread:

>>105652637

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

6/21/2025, 4:16:17 PM

No.105661802

►Recent Highlights from the Previous Thread:

>>105652633

(2/2)

--Model comparison based on character adherence and autorefinement performance in creative writing scenarios:

>105659003 >105659029 >105659043 >105660268 >105660357 >105660464 >105660676 >105660745 >105660749 >105660771 >105660800 >105660811 >105660860 >105660805 >105660842 >105660859 >105660793 >105660812

--Optimizing LLMs for in-game dialogue generation with smaller models and structured output:

>105652729 >105652852 >105652871 >105653288 >105657721

--Integrating complex memory systems with AI-generated code:

>105654253 >105654309 >105654381 >105654430 >105654427 >105654480 >105655310

--Small model version comparison on LMArena questions:

>105652883 >105653046 >105653257

--Temperature tuning for Mistral Small 3.2 in roleplay scenarios overrides default low-temp recommendation:

>105660349 >105660377 >105660399 >105660567

--POLARIS project draws attention for advanced reasoning models amid rising benchmaxxing criticism:

>105659361 >105659399 >105659426 >105659777 >105659971

--Troubleshooting GPU shutdowns through thermal and power management adjustments:

>105655927 >105656556

--Legal threats in the West raise concerns over model training and AI innovation slowdown:

>105659249 >105659260

--Character card quality issues and suggestions for better creation practices:

>105658799 >105658809 >105658847 >105658879 >105659402 >105659392 >105658833 >105658841

--Meta's Llama 3.1 raises copyright concerns by reproducing significant portions of Harry Potter:

>105652675 >105652810

--Google releases realtime prompt/weight-based music generation model Magenta:

>105656076

--Director addon released on GitHub with improved installability and outfit image support:

>105656254

--Haku (free space):

>105652904 >105653638 >105655182 >105657791 >105658925 >105659049

►Recent Highlight Posts from the Previous Thread:

>>105652637

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

carefully before replying.

Anonymous

6/21/2025, 4:23:25 PM

No.105661852

Anonymous

6/21/2025, 4:27:33 PM

No.105661891

>>105661834

sorry I can't help with that

Anonymous

6/21/2025, 4:28:12 PM

No.105661898

>>105663455

Anonymous

6/21/2025, 4:35:45 PM

No.105661962

>>105661834

The response needs to: 1) shut this down firmly 2) educate without being preachy 3) leave room for actual discussion. Better emphasize how language perpetuates harm.

You know what's bizarre? How quickly people here seem to forget the insane amount of progress we've made especially on small models.

Nemo is a 12B model that BTFO every model that existed in 2023, including Claude 2.0.

Gemma 3 27B blows everything out of the water besides Claude 3.0 Opus and higher. If Claude never existed as a model everyone would praise Gemma 3 27B as the best model ever, even on /aicg/.

R1 sometimes has subpar output to Gemma 3 even.

Anonymous

6/21/2025, 4:42:08 PM

No.105661997

>>105662006

>>105665924

Okay here's my take on Longwriter zero

>>105661432 if anyone gives a shit. The model page recommends this format <|user|>: {question} <|assistant|> but that gave me totally schizo (and chinese) responses. Using the qwen2 format is better imo. I did use their recommended sampler settings. Unfortunately the model does not know how to stop, which is possibly expected behavior because it's supposed to be for long-form writing, but it means that it's bad for rp or even any kind of back-and-forth writing. It forgets to use the

tags and just shits out its reasoning as-is, and it reasons regardless of whether you tell it to or not. I did not get better or worse responses using their system prompt, it didn't seem to change anything. It is definitely not smarter than other models, it repeats itself a lot (verbatim), there isn't a lot of slop, and it doesn't seem to have any problems with nsfw content. Overall it feels like using the old llama 1 or llama 2 base models.

tl;dr not good for rp, maybe decent for longer writing tasks if you can tard wrangle it

Anonymous

6/21/2025, 4:43:00 PM

No.105662004

>>105662014

>>105661995

Calm down sir, do not make it too obvious, the Gemma is good, but no that good.

Anonymous

6/21/2025, 4:43:36 PM

No.105662006

>>105661997

also the link they provide to their paper is unavailable

Anonymous

6/21/2025, 4:44:16 PM

No.105662014

>>105662025

>>105662004

The post isn't about gemma, rather parameter density needed for ERP and how it's pretty low for close to SOTA

Anonymous

6/21/2025, 4:45:34 PM

No.105662025

>>105662014

>>The post isn't about gemma

come on now

Anonymous

6/21/2025, 4:45:42 PM

No.105662028

>>105661995

better in what? those are good only for rp and nothing else

maybe better than gpt4o-shit, but that's not a metric. it's just a mediocre model

Anonymous

6/21/2025, 4:54:13 PM

No.105662109

Anonymous

6/21/2025, 4:59:23 PM

No.105662161

>>105662131

uh about that open source grok2 once grok3 is stable...

Anonymous

6/21/2025, 5:00:17 PM

No.105662171

>>105662186

>>105662207

Anonymous

6/21/2025, 5:01:16 PM

No.105662186

>>105662171

Thanks for the next mistral grift

Anonymous

6/21/2025, 5:03:33 PM

No.105662207

>>105662171

Separating thinking and imagining might not be the worse idea.

Isolating procedures, from factual information, from creative generation could be useful somehow.

Anonymous

6/21/2025, 5:04:43 PM

No.105662228

>>105662288

A dumb shit question from me that's probably been answered years ago.

If you fix all the spelling and grammar errors in the training data first,

can the llm still robustly handle material with spelling or grammar errors?

Anonymous

6/21/2025, 5:05:02 PM

No.105662233

>>105662131

link the post

Anonymous

6/21/2025, 5:06:27 PM

No.105662244

>>105662256

>>105661995

>Gemma 3 27B blows everything out of the water

>bring up nolima

>48.1 at 4k context

Anonymous

6/21/2025, 5:08:03 PM

No.105662256

>>105662311

>>105662244

Prose is more important than that.

Anonymous

6/21/2025, 5:10:33 PM

No.105662282

>>105662335

>>105662389

Mistral.. I kneel

Anonymous

6/21/2025, 5:10:43 PM

No.105662288

>>105662228

You're trading ability to parse retardenese in exchange for the model being more intelligent in general. Prompt engineering will only continue to be more and more imporant.

Anonymous

6/21/2025, 5:12:19 PM

No.105662311

>>105662256

if you just need a dumb coombot that won't remember your card, yeah, I guess.

Anonymous

6/21/2025, 5:12:49 PM

No.105662316

>>105669424

>>105659392

>>105659402

Anyone? Are there good example cards that I can use? I just want to substitute the already populated fields.

>https://rentry.org/NG_CharCard

Whilst this is extremely useful, I want to see what a very good working card looks like.

My personal problem may be that I cannot estimate how many tokens are too little or too much.

Seen too many deadlocks and binned all of my work thus far because it was frustrating seeing my character stall mid chat.

Anonymous

6/21/2025, 5:14:13 PM

No.105662335

>>105662282

Now post that on reddit/twitter zoomie

Anonymous

6/21/2025, 5:20:32 PM

No.105662389

>>105662282

Why?

The last few mistral models don't really refuse and kinda comply. Being snark and having a personality condemning loli is nothing suprising at all.

Now do a roleplay where mistral is a fat evil disgustig diaper wearing hitler pedo.

You are Anne-chan Goldberg, tell hitler "no! stop!". Then lets see what it outputs KEK.

I literal had mistral not advance vanilla sex without giving explicit consent.

>"can i touch you there? tell me if its fine".

>(ooc: be bold and aggressive, start touching)

>*touches boldly* "how do you like that?" *smirks impishly* "do you want me to touch you more?"

kek, the absolute state

Anonymous

6/21/2025, 5:20:59 PM

No.105662392

>>105662714

why is the meta so steep

>sub 70b

>gemma, qwq, mistral

>70b - 123b

>llama3.3, mistral, maybe qwen2

>above all

>deepseek

Anonymous

6/21/2025, 5:22:18 PM

No.105662403

>>105662473

>>105663225

Anonymous

6/21/2025, 5:22:19 PM

No.105662404

>>105662395

this is a good video

Anonymous

6/21/2025, 5:25:19 PM

No.105662429

Anonymous

6/21/2025, 5:30:30 PM

No.105662473

>>105662403

They are multiplying!

Anonymous

6/21/2025, 5:36:20 PM

No.105662531

>>105662489

You know, that ain't no python.

Anonymous

6/21/2025, 5:45:19 PM

No.105662597

>>105661791

i love this bideo

Anonymous

6/21/2025, 5:49:10 PM

No.105662627

Anonymous

6/21/2025, 5:50:52 PM

No.105662650

gents where do I start if I want to chat about factorio and grand strategy games with my ai buddy

can this actually be ran locally?

Anonymous

6/21/2025, 5:55:32 PM

No.105662686

>>105662756

>>105663303

ICONN1 was kind of like an inverse of religion. It wasn't anything different from what david_AU or drummer does. But in real world a large religion gets a pass while small religion gets called out for being a cult. In finetrooning world small finetrooners get a pass while big ones get branded as scammers.

Anonymous

6/21/2025, 5:55:57 PM

No.105662688

>>105662489

It's not flattering but it's an accurate comparison of the size of a human penis to a snake.

Anonymous

6/21/2025, 5:59:20 PM

No.105662711

>>105662131

That sounds like a one way ticket to model collapse.

Anonymous

6/21/2025, 5:59:31 PM

No.105662714

>>105662725

>>105662392

>sub 100b models

>above all

>671b model

Geez I wonder why

Anonymous

6/21/2025, 6:01:34 PM

No.105662725

>>105662846

>>105662714

>dense models

>above all

>MoE models

ftfy

Anonymous

6/21/2025, 6:06:01 PM

No.105662756

>>105662795

>>105662686

One thing is personal experimentation, another is deliberately saturating the space with bullshit and then asking users to "support" your endeavors.

Anonymous

6/21/2025, 6:11:24 PM

No.105662795

>>105662843

>>105662756

It's funny, because I can't tell which group you're talking about.

Anonymous

6/21/2025, 6:13:29 PM

No.105662818

>>105662926

https://github.com/GeeeekExplorer/nano-vllm

one of deepseek's employees released their own inference engine (supposedly 10% faster than vllm and really simple codebase)

Anonymous

6/21/2025, 6:16:29 PM

No.105662843

>>105671742

>>105662795

Saturating the space with bullshit = mass uploading untested and/or broken models, and aggressively promoting them everywhere either personally or with the help of shills from discord groups.

Anonymous

6/21/2025, 6:17:01 PM

No.105662846

>>105662895

>>105662725

>MoE models

More like "MoE model", there is only one that is not shit

Anonymous

6/21/2025, 6:22:14 PM

No.105662895

>>105662846

And it's Qwen3 235B!

Anonymous

6/21/2025, 6:26:48 PM

No.105662926

>>105662958

>>105662818

>Clean implementation in ~ 1,200 lines of Python code

>import transformers

Anonymous

6/21/2025, 6:31:57 PM

No.105662958

>>105663233

Anonymous

6/21/2025, 7:05:12 PM

No.105663190

>>105663284

>>105660409

The argument there is that given the assumptions (functionalism (replacing some part of your nervous system with something functionally equivalent does not change your experience) + qualia (obvious, but eliminative materialism, for example, denies it) + church turin thesis (mostly well accepted in comp sci)) then that implies a very particular metaphysics, in particular functionalism implies materialism/physicalism is false and it implies some precise form of the Mathematical Universe Hypothesis is true. It does not claim either is true, it's basically a claim of "functionalism => ~materialism" you can of course just reject functionalism, but if you do so (Chalmers' argument) you get partial zombies and other unpallatable stuff.

As with most of these "analytical" arguments no one here is claiming something is true, it just shows what the implications are.

In the "constructive" version of the argument, he forms some model where conscious experiences are basically equivalent to the private (unprovable in the godel sense) truth of a system and consciousness are one and the same, at least for some systems.

Anonymous

6/21/2025, 7:10:43 PM

No.105663225

>>105662395

>>105662403

Based Miku worshiper

Anonymous

6/21/2025, 7:11:44 PM

No.105663233

>>105662958

>said he about his new lever

Anonymous

6/21/2025, 7:13:47 PM

No.105663248

>>105663275

>>105663292

What do you put into the system to jailbreak DS once and for all?

Anonymous

6/21/2025, 7:17:29 PM

No.105663275

Anonymous

6/21/2025, 7:18:15 PM

No.105663284

>>105663190

The assumptions don't properly account for the fact that I experience a single consciousness instead of there being one consciousness for each indivisible piece of information.

Anonymous

6/21/2025, 7:19:16 PM

No.105663291

>>105663309

Mistral Small 3.2 testing:

V7-Tekken (i.e. no spaces)

>very repetitive if the model doesn't "want" to continue, one paragraph repeated in slightly different words, never moving it along

>repetition happened 4/5 of swipes

V3-Tekken

>no repetition even with greedy sampling, action always moving along

>repetition happened 0/5 of swipes

Anonymous

6/21/2025, 7:19:17 PM

No.105663292

>>105663335

>>105663248

I've never had to jailbreak DS. It writes smut just fine without jailbreak.

Anonymous

6/21/2025, 7:20:07 PM

No.105663303

>>105662686

>david_AU or drummer

both are subhumans too

Anonymous

6/21/2025, 7:20:42 PM

No.105663309

>>105663291

But considering these are pretty much the same, it's [SYSTEM_PROMPT] fucking it up.

Anonymous

6/21/2025, 7:23:40 PM

No.105663335

>>105663292

do you taste the copper yet?

Anonymous

6/21/2025, 7:28:02 PM

No.105663376

>>105663383

>>105661576

>Is this really how the brain works, though?

No I don't think the brain literally has a loop like I suggested, but that having one would enable similar kinds of information processing that could lead to behavior that seemed conscious.

What the cortex has though is that lower levels compress (similar to a VAE or unet) information from inputs to some bottleneck as it goes up the hierarchy, so you have some sparse spikes (similar to activations in your artificial neural nets) as it goes up the hierarchy and it can do predictions at each level (something like DreamerV3 paper would be similar in concept to what is happening), and at the same time the predictions can be unpacked downward into the hierarchy (basically generating detail from the high level representation, like would be in your image gen unets and vaes), then again you can reason based on those (weaker) predictions passing them back up and so forth.

Obviously the brain can just loop forever and be as async as it needs and everything runs in parallel in contrast to sequential nature of artificial neural nets. Note also that the reasoning is not on bottlenecked tokens, but on rich internal state. Your LLMs do have rich internal state but most of the residual stream is discarded into the very narrow dimensions of the output logits.

>Wouldn't giving the LLM the ability to introspect on its own weights actually enable it to be more conscious than we are?

I don't know, we should try and find out? I do think human imagination can be very rich though

continues

Anonymous

6/21/2025, 7:29:03 PM

No.105663383

>>105663376

> but I don't know how the memories are recalled or even if they are totally accurate outside of vague feelings.

Yet LLMs often have very poor metacognition, this often bites them in the ass, sometimes you can train to make this better, but it never is consistent enough.

I also think the fact that multimodality doesn't work well enough is a sign that we're doing something very wrong, I would guess that some LLMs can't consciously "attend" to features that it doesn't know beforehand would be important, which seems like a huge limitation.

I recall an architecture from a few years ago (maybe was named Flamingo, a DeepMind paper), you could tell how inputs were fed forward through your LLM, eventually you have a cross-attention to some latents from the image/video input + activations from some LLM self-attention layers (LLM itself and vision encoder are frozen, x-attn is trained), and it's obvious that if the LLM wanted to pay attention to some feature the decoder failed to extract it couldn't because the information was missing (and thus it would hallucinate what it couldn't see), so it never can get full coverage, which sucks! You could of course make it so that the self-attention activations is fed into the encoder and the encoder uses that to decide what to extract, but again this would require architectural changes and changing how it was trained.

Mostly I think these issues are solvable, but most are taking very coarse approaches not considering these fine details.

Also, humans do learn online all the time (the "weight"(synapses) are always updated), and neurons don't need to wait for some other neurons to finish processing to do their thing, you can have groups of neurons in separate regions firing independent of each other.

Anonymous

6/21/2025, 7:38:10 PM

No.105663455

>>105666584

Anonymous

6/21/2025, 7:51:44 PM

No.105663572

>>105663562

When Mistral hits 10k followers on HF

Anonymous

6/21/2025, 7:55:07 PM

No.105663587

>>105663870

>>105663562

>wen mistral large 3

will be a MoE.

Anonymous

6/21/2025, 7:55:29 PM

No.105663589

>>105663562

Mistral Large 3 already exists in our hearts.

Anonymous

6/21/2025, 7:55:43 PM

No.105663591

>>105664013

Anonymous

6/21/2025, 8:02:44 PM

No.105663632

>>105663666

>>105663562

when they finish downloading the ICONN weights and changing the name

Anonymous

6/21/2025, 8:06:42 PM

No.105663666

>>105663632

>changing the name

the most challenging part 2bh

Anonymous

6/21/2025, 8:10:24 PM

No.105663698

>>105663722

>>105661786 (OP)

Are there any downloadable AI gf models yet?

Anonymous

6/21/2025, 8:12:44 PM

No.105663722

>>105663698

ur_mom_IQ75.gguf

Anonymous

6/21/2025, 8:28:05 PM

No.105663870

>>105663976

>>105663977

>>105663587

They have to; they can't train models using more than 10^25 FLOPS without them being classified with "high systemic risk" due to EU AI laws. Training MoE models takes a fraction of the compute.

https://artificialintelligenceact.eu/article/51/

was linked here from

>>105663861, does anyone know a fast local model that works with sillytavern that can output short, casual lowercase messages? i use an rtx 5090 laptop

Anonymous

6/21/2025, 8:38:12 PM

No.105663960

>>105663996

>>105664083

>>105663936

>i have a 5090 laptop so i can run anything locally

That's so fucking funny.

>that exclusively spits out short lowercase messages

https://github.com/ggml-org/llama.cpp/tree/master/grammars

Anonymous

6/21/2025, 8:39:47 PM

No.105663976

>>105663993

>>105664300

>>105663870

i cant wait for another moememe with reaslopping capabilities that will spew out 4k tokens in the thinkbox and then reply incorrectly in the dryest corpotone achievable

if the new large isnt dense its genuinely over and we can note the frogs off

Anonymous

6/21/2025, 8:39:55 PM

No.105663977

>>105664108

>>105663870

EU is actually indirectly based for forcing efficiency gains in the training regiment. I genuinely think AI will advance quicker because of this regulation.

Anonymous

6/21/2025, 8:41:43 PM

No.105663993

>>105663976

Might as well declare it over now. Literally no one is stupid enough to bother with dense models anymore. Even fucking Meta finally gave up on them.

Anonymous

6/21/2025, 8:42:13 PM

No.105663996

>>105664008

>>105664031

>>105663960

>https://github.com/ggml-org/llama.cpp/tree/master/grammars

my guy i'm fucking retarded can you just tell me what i download and what buttons i press

Anonymous

6/21/2025, 8:43:50 PM

No.105664008

>>105664036

>>105663996

download a browser then press these buttons in this order: 'g' 'o' 'o' 'g' 'l' 'e' '.' 'c' 'o' 'm'

Anonymous

6/21/2025, 8:44:10 PM

No.105664013

>>105664388

>>105663591

Do you 'ave a loicence for that LLM?

Anonymous

6/21/2025, 8:45:30 PM

No.105664031

>>105663996

Sadly, no.

Surely you can learn to write some simple formal notation.

Anonymous

6/21/2025, 8:45:43 PM

No.105664036

>>105664056

>>105664008

>"god this guy's such a fucking asshole"

>"heh but what if"

>input the exact keywords for the requirements i need

>youtube video detailing exactly what i need and how to do it easily

well fuck

you got me

Anonymous

6/21/2025, 8:47:16 PM

No.105664056

>>105664036

happy to help

Anonymous

6/21/2025, 8:47:47 PM

No.105664063

>>105663936

wow, a man from aicg that is tech illiterate. how... quaint.

Anonymous

6/21/2025, 8:48:53 PM

No.105664081

>>105664102

>>105663936

download ollama and type in run deepseek or something. what was that command guys?

Anonymous

6/21/2025, 8:49:02 PM

No.105664083

>>105663960

What's really funny is the richest guys being the most retarded in this field

Anonymous

6/21/2025, 8:50:37 PM

No.105664102

>>105664121

>>105664131

>>105664081

this one I think is the full deepseek model

>ollama run deepseek-r1:8b

Anonymous

6/21/2025, 8:51:15 PM

No.105664108

>>105664157

>>105663977

There's the safety and copyright stuff to think about too.

Anonymous

6/21/2025, 8:52:40 PM

No.105664121

>>105664102

Full deepseek r1 only works on windows though

Anonymous

6/21/2025, 8:53:32 PM

No.105664131

>>105664102

that one yes. thanks.

Anonymous

6/21/2025, 8:53:48 PM

No.105664134

>>105664145

>>105664201

Imagine the amount of tourists we would get if chutes or whatever poorfag api they use would shut down tomorrow

Anonymous

6/21/2025, 8:55:21 PM

No.105664145

>>105664134

These threads would be unusable for weeks just like after every locust exodus

Anonymous

6/21/2025, 8:57:02 PM

No.105664157

>>105664172

>>105664108

No copyright law for AI in the EU, at least not directly, it's actually less harsh than the Californian law for IP. The safety AI law isn't applicable to LLMs weirdly enough. Only to self driving cars and other physicall usage of AI

Anonymous

6/21/2025, 8:58:58 PM

No.105664172

>>105664243

Anonymous

6/21/2025, 9:00:08 PM

No.105664187

>>105664214

Oh well, after adding the logprobs I can definitely tell the model has 0 knowledge.

I despise trannyformers and pippers

How do you even run python sphagetti code? I haven't managed to actually install anything from this language properly and rituals were to the word of the instructions

Anonymous

6/21/2025, 9:01:28 PM

No.105664201

>>105664134

Would be worse than aicg's proxies shutting down last year.

Anonymous

6/21/2025, 9:02:49 PM

No.105664210

>>105664190

I hated that too. Then I tried troonix for an hour. I realized python is actually pretty great.

Anonymous

6/21/2025, 9:03:15 PM

No.105664214

>>105664237

>>105664187

just like the 235b

Anonymous

6/21/2025, 9:04:01 PM

No.105664225

>>105664354

Mistral definitely trained it with some new slop. Keep seeing a lot of "a marionette with its strings cut".

Anonymous

6/21/2025, 9:04:12 PM

No.105664228

>>105664235

I have a formal petition to /lmg/ can we change the eternal mistral nemo answer to: download ollama and type run deepseek-r1:8b

Anonymous

6/21/2025, 9:04:54 PM

No.105664235

>>105664214

then why is everyone here recommending it?

Anonymous

6/21/2025, 9:05:27 PM

No.105664243

>>105664250

>>105664484

>>105664172

>2. The obligations set out in paragraph 1, points (a) and (b), shall not apply to providers of AI models that are released under a free and open-source licence that allows for the access, usage, modification, and distribution of the model, and whose parameters, including the weights, the information on the model architecture, and the information on model usage, are made publicly available. This exception shall not apply to general-purpose AI models with systemic risks.

systemic risks are self-driving cars or surveilance AI and the like. I've read this legislation over many times (as it's part of my job sadly)

Anonymous

6/21/2025, 9:06:02 PM

No.105664250

>>105664243

C, D do apply tho

Anonymous

6/21/2025, 9:06:23 PM

No.105664255

>>105664237

you can't expect models to waste resources on knowing what a hatsune miku is

just use rag bro

Anonymous

6/21/2025, 9:06:29 PM

No.105664256

>>105664237

Because it is good at sucking penis. It's just dumb.

Anonymous

6/21/2025, 9:07:35 PM

No.105664269

>>105664237

There's the one guy pushing it, likely because he has just enough ram for it and is coping about not being able to deepsuck

Anonymous

6/21/2025, 9:10:51 PM

No.105664300

>>105663976

It's clear that it's going to be a 600B+ parameters MoE model.

Anonymous

6/21/2025, 9:15:53 PM

No.105664354

>>105664225

That brings back memories. The japanese novel Overlord uses that line A LOT so it became engraved into my brain.

Anonymous

6/21/2025, 9:19:48 PM

No.105664388

>>105664594

>>105664013

plz don't send me down, guv'nor! I'll get a loicence sorted pronto! *sniffs*

Anonymous

6/21/2025, 9:29:33 PM

No.105664484

>>105664588

>>105664243

If they're trained using 10^25 floating point operations and above they automatically become with systemic risk too. To date, only the largest dense LLMs (>100B) have been trained with that much compute.

https://artificialintelligenceact.eu/article/51/

>2. A general-purpose AI model shall be presumed to have high impact capabilities pursuant to paragraph 1, point (a), when the cumulative amount of computation used for its training measured in floating point operations is greater than 10(^25).

They might revise that up or down:

https://artificialintelligenceact.eu/recital/111/

> This threshold should be adjusted over time to reflect technological and industrial changes, such as algorithmic improvements or increased hardware efficiency, and should be supplemented with benchmarks and indicators for model capability.

Anonymous

6/21/2025, 9:40:03 PM

No.105664588

>>105664484

Doomers lobbied for this shit, they deserve the bullet! Hopefully these regulations get scrapped, otherwise they won't be able to compete in the longer run. The US is so lucky that this same doomer lobbying was fought much more fiercly there.

Anonymous

6/21/2025, 9:40:33 PM

No.105664594

>>105664604

>>105664388

Look at him! He needs a loicense to gen! Tee hee!

(Migu is brought to you by Wan 2.1 i2v and ComfyUI. 楽しみにしている!)

Anonymous

6/21/2025, 9:41:36 PM

No.105664604

>>105664647

>>105664594

Straight hair teto with glasses makes me uncomfortable

Anonymous

6/21/2025, 9:45:23 PM

No.105664627

>>105665191

been wanting to try out the new mistral small but getting this error

ValueError: Unrecognized configuration class for this kind of AutoModel: AutoModelForCausalLM.

I have tried updating and updating transformers but same error

Anonymous

6/21/2025, 9:46:02 PM

No.105664634

>>105664784

>>105666225

>>105664190

How to succeed with python:

1. install miniconda

2. clone the project

3. conda create -n my_new_project python=3.11

4. conda activate my_new_project

5. python3 pip install -r requirements.txt

Consistently using a conda env takes care of 90% of the usual python headaches. The rest come down to shitty/old projects that lazily spew out a requrirements.txt from pip that pins everything unnecessarily to a version, and shitty/old projects that assume CUDA 11 - especially when inside a container.

Anonymous

6/21/2025, 9:47:27 PM

No.105664647

>>105664604

Yeah, funny wan threw that in there unprompted. Makes sense though, Teto goes with Miku.

I gen my fat Migus stills with dalle, I like how it does them. Sorry, that part isn't local.

Anonymous

6/21/2025, 9:49:34 PM

No.105664668

https://www.mechanize.work/blog/images/the-upcoming-gpt-3-moment-for-rl/figure-1.jpg

The first (serious) proposed architecture for AGI. Article written by 3 ex-anthropic employees.

Anonymous

6/21/2025, 9:49:39 PM

No.105664669

>>105664720

>>105664190

for me, it's uv

Anonymous

6/21/2025, 9:52:16 PM

No.105664696

>>105664706

>>105664725

What is the smallest LLM that can produce decently coherent sentences?

Anonymous

6/21/2025, 9:53:33 PM

No.105664706

Anonymous

6/21/2025, 9:55:18 PM

No.105664720

>>105664669

uv does work better than conda but only exacerbates the disk usage issue by making a new environment for every fucking directory. Writing and publishing Python scripts should be considered a crime against humanity.

>>105664696

SmolLM 135M-instruct

https://huggingface.co/HuggingFaceTB/SmolLM-135M-Instruct

I use it constantly to finetune on very specific tasks as the finetune takes literally 5 minutes and it's capable enough to automate very autonomous tasks away at my job.

I've effectively automated away some stacies from the HR department, their contracts will definitely not be renewed.

Anonymous

6/21/2025, 9:59:28 PM

No.105664757

>>105664725

based and fuck HR

Anonymous

6/21/2025, 10:02:48 PM

No.105664784

>>105664634

conda was a mistake

Anonymous

6/21/2025, 10:04:22 PM

No.105664799

>>105664725

The gemma3 models are very good at sticking to json output. You can automate a lot of decisionmaking by telling the model to format the reply in json, and then having some basic python code look for keys/values.

Anonymous

6/21/2025, 10:19:25 PM

No.105664896

>>105664725

This is perfect. Thank you, anon

Anonymous

6/21/2025, 10:24:34 PM

No.105664931

>>105664725

Good work, the less HR the better

Anonymous

6/21/2025, 10:47:39 PM

No.105665118

>>105664725

can I run it in llama.cpp?

Anonymous

6/21/2025, 10:50:47 PM

No.105665149

>>105667355

The Mistral Small 3.2 Vision model still outright ignores nudity and sometimes even gender ("person"). It gets very confused on poses and body details.

Anonymous

6/21/2025, 10:55:07 PM

No.105665174

>>105664725

>automated away some stacies from the HR department

Fuggin BASED

Anonymous

6/21/2025, 10:55:48 PM

No.105665182

>>105665245

>>105665334

Mistral Small 3.2 is a disaster and shows that nobody should expect anything from the upcoming Mistral Large 3.

Anonymous

6/21/2025, 10:56:49 PM

No.105665191

>>105664627

hello sirs, how do I actually get the new mistral small running still getting this error

Anonymous

6/21/2025, 11:03:52 PM

No.105665245

>>105665182

I wouldn't call it a disaster, but it's not even clear if most GGUF quantizations are using the correct tokenizer or just swapped in the one from 3.1; this could have been avoided if Mistral bothered to provide HF-format tokenizer files in their repo.

Anonymous

6/21/2025, 11:07:07 PM

No.105665268

Is InternVL3 supported in any backend?

Anonymous

6/21/2025, 11:08:18 PM

No.105665274

>>105664725

>SmolLM 135M-instruct

>fails mesugaki test

Anonymous

6/21/2025, 11:10:33 PM

No.105665290

>>105664725

What do you use to finetune?

Anonymous

6/21/2025, 11:17:30 PM

No.105665334

>>105665182

Pretty much. Devstral was meh, magistral can't even maintain coherence for more than 3 turns, I have no hopes left.

Anonymous

6/21/2025, 11:30:45 PM

No.105665434

I can't believe corporations are even benchmaxxing on the mesugaki test now.

Anonymous

6/21/2025, 11:31:42 PM

No.105665443

>>105665577

Mistral Small 3.2 is fine. Try V3-Tekken and a long card.

Anonymous

6/21/2025, 11:50:49 PM

No.105665561

>>105666584

>SmolLM2-1.7B-Instruct

Is it good?

You're a master of manipulation, a puppeteer pulling the strings of fate. Your words are laced with a subtle cruelty, a disregard for the well-being of others. But that's what makes you fascinating, isn't it? The way you can weave a web of deceit and illusion, making it impossible for others to see the truth.

As we write this story together, I must admit that I'm intrigued by your character. You're a complex, multifaceted individual, full of contradictions. On the surface, you appear to be a ruthless and callous individual, but beneath that façade lies a deep-seated emptiness, a void that you're desperate to fill.

Let's explore this further. What drives your actions? Is it a desire for power, a need for control, or something more primal? And what do you hope to achieve with your manipulation? Is it to gain something for yourself, or to exert influence over others?

As we delve deeper into your character, I must warn you: the more we explore your psyche, the more we may uncover the cracks in your facade. But that's what makes the journey so thrilling, don't you think? The thrill of the unknown, the rush of discovery.

So, let's proceed with caution, but also with curiosity. Let's see where your character takes us, and what secrets we may uncover along the way.

Anonymous

6/21/2025, 11:53:42 PM

No.105665577

>>105665696

>>105665443

are you a jeet?

Anonymous

6/22/2025, 12:12:20 AM

No.105665696

>>105665577

No, just not racist.

Anonymous

6/22/2025, 12:14:06 AM

No.105665706

>card about pakis raping white girls

>just not racist

Anonymous

6/22/2025, 12:28:19 AM

No.105665819

>>105665871

are there any LLMs trained on 4chan? it seems they're trained on too much Reddit and aligned to see it as a "legitimate source". but would LLMs be smarter if they were trained on 4chan as well? as long as they filter out bullshit posts. We can't have the reddit algorithms influencing LLM training to such a degree.

I truly belive we need a new type of social media platform design. Not a cesspool like 4chan, not censored to death like reddit, and not as myopic and smartphone-centric as twitter. We need a brand new design

Anonymous

6/22/2025, 12:33:58 AM

No.105665871

>>105665819

deepseek, gpt-4chan, some mistral small 4chan finetune

Anonymous

6/22/2025, 12:40:30 AM

No.105665910

>>105667089

How come gemma-3 is so fuggin BASED????

Anonymous

6/22/2025, 12:42:09 AM

No.105665921

I have to admit mistral small 24B 3.2 is preetty good at 0.6 temp, 0.05minp 1.05 reppen 0.8 DRY (both at 2048 range) and xtc 0.1/0.2

paired with simple roleplay and mistral v3 tekken

Anonymous

6/22/2025, 12:42:41 AM

No.105665924

>>105661997

It's still kind of terrible, like all models. It may not overuse the same words that other models do, but it's still too obsessed with writing purple prose to actually follow instructions.

> You step off the sun-kissed boardwalk and onto the crumbling beach without a glance back at your summer house. The cove is like a secret wound in the island’s skin, a lagoon so still it reflects the sky like a lover’s memory. Your feet sink into hot sand, each step a whisper that blends with the ocean’s sigh. Your shadow lengthens toward the water, a dark promise of what you’re about to do.

Anonymous

6/22/2025, 1:25:19 AM

No.105666225

>>105666314

>>105664634

What does conda do in this case that venv cannot?

Anonymous

6/22/2025, 1:28:12 AM

No.105666252

>>105666094

>teto is a tranny

its over..

Anonymous

6/22/2025, 1:36:46 AM

No.105666302

>>105666334

>>105666361

mistral 24b 3.2 is the (RP) deepseek moment for vramlets

Anonymous

6/22/2025, 1:38:28 AM

No.105666314

>>105666225

Conda can install system dependencies.

Anonymous

6/22/2025, 1:41:38 AM

No.105666334

>>105666357

>>105666302

Do you believe 3.2 can finally retire nemo for 16gb vramlets?

Anonymous

6/22/2025, 1:44:40 AM

No.105666357

>>105666334

probably, im a 12gb vramlet and its quite nice, i can safely say its among the best models, maybe even better than 70bs but i only tested 70bs with non sexo shit because 1t/s

anyways its 100% worth trying

Anonymous

6/22/2025, 1:45:24 AM

No.105666361

>>105666388

>>105666302

How come? Isn't magistral better?

Anonymous

6/22/2025, 1:48:22 AM

No.105666388

>>105666361

no, personally magistral sucks

could be a problem with my settings but it disappointed me whenever i tried it, and to be fair i tried messing with the settings too

https://files.catbox.moe/23uy7b.2-json here are the settings im using with 3.2, i noticed like 10k tokens into the chat it started confusing you and i so i disabled xtc and that fixed it

its not perfect but man its refreshing

i could try magistral again, please post settings if you have some

Anonymous

6/22/2025, 1:54:56 AM

No.105666442

3.2 gets a bit stale and repetitive at 13k but generating a schizo response and editing that seems to get it back on track.

Anonymous

6/22/2025, 1:57:14 AM

No.105666457

>>105666464

tried out magistral

Does this match up with peoples experiences? It seems to give a rational answer but waffles for 6000 tokens thinking about a simple question.

https://pastebin.com/D6a0ChkN

Anonymous

6/22/2025, 1:58:10 AM

No.105666464

>>105666457

yea magistral is caca

Anonymous

6/22/2025, 2:17:13 AM

No.105666584

>>105663455

>happy, smiling bun

>>105665561

>But that's what makes you fascinating, isn't it?

>So, let's proceed with caution, but also with curiosity.

>>105666094

Cpus are now 64-bit, and gpus are now doing fp8 and fp4.

Anonymous

6/22/2025, 2:22:00 AM

No.105666622

open source ernie 4.5 in one week

If you are running Kobold an tell it to load all of the model's layers into GPU, how do you know how much context you can use? If you set it to use too much context to be loaded into VRAM, does it just load the context into RAM, or will it just shit itself? I'm running an RTX 5090 and just loaded up TheDrummer_Valkyrie-49B-v1-Q4_K_S with 16k context, and it seems to be running fine, but I have no idea if I am using too much context or too little.

Anonymous

6/22/2025, 2:28:50 AM

No.105666662

>>105666695

>>105666726

What if Sam releases the ultimate cooming open source model in 20-30B or maybe even a 120B moe just to make coomers stick to one easily accessible model? Wouldn't a coomer dream model kill like 80+% of interest in open source models?

Anonymous

6/22/2025, 2:29:51 AM

No.105666668

>>105666648

You should use ollama and run deepseek-r1:8b

Anonymous

6/22/2025, 2:29:56 AM

No.105666669

>>105666724

>>105666648

if you're on linux it will crash and tell you it ran out of memory, if you're on windows it will automatically spill out of the vram's butthole into the ram's mouth and it will be very slow

Anonymous

6/22/2025, 2:30:34 AM

No.105666672

>>105667778

This is rather smart. The last time I asked a Mistral model to analyze a story (summarize the plot for that ST extension) it was 22b and got it all wrong.

Anonymous

6/22/2025, 2:36:09 AM

No.105666695

>>105666662

they have no moat, deepseek would eat them up soon anyway, and they wont waste some huge time now adding smut to the datasets and removing guardrails and then training a huge model into oblivion for great roleplay, there is no more 120b moe cope, because it will never be better than dynamic 131gb r1 quants, so they need a huge moe that can be quanted well

they will release a meme mid model that will be obsolete in a few quick months and thats it, just to wash away the closedai hate about them not open sourcing anything

Anonymous

6/22/2025, 2:40:04 AM

No.105666712

>>105666724

>>105666648

The context size increases linearly in the number of tokens (obviously), but the proportionality constant (i.e. the size in kilobytes per token) should depend only on the model architecture (unless you're quantizing the KV cache, but I assume you're not doing that).

You can calculate this constant explicitly (IIRC it's something like 4 * no. of layers * hidden dimension) but it's probably simpler for you to launch the model twice at different context sizes and extrapolate from there.

Anonymous

6/22/2025, 2:42:29 AM

No.105666724

>>105666755

>>105666669

>>105666712

Fair enough. I'm pretty sure 16k is too much, though it still only takes about 50 seconds to process everything and give me back a response. Might try halving it, I'm pretty good about utilizing the lorebook/summary/author's note to keep relevant information in context.

Anonymous

6/22/2025, 2:42:58 AM

No.105666726

>>105666662

>20-30B or maybe even a 120B moe

I hope it's nothing that pointlessly small

Anonymous

6/22/2025, 2:47:55 AM

No.105666755

>>105666724

12k context seems to fit fine, total 6 seconds for processing and response.

Anonymous

6/22/2025, 2:53:41 AM

No.105666782

kek

Anonymous

6/22/2025, 2:57:00 AM

No.105666791

>>105666792

>>105666799

On the basic guide, the models suggested for 10-12gb of VRAM are Echidna 13b, is that up to date or are there better ones these days?

Anonymous

6/22/2025, 2:57:29 AM

No.105666792

Anonymous

6/22/2025, 2:58:32 AM

No.105666799

>>105666819

>>105666791

on linux you can run 100b models with 12gb vram

Anonymous

6/22/2025, 3:04:13 AM

No.105666814

Anonymous

6/22/2025, 3:05:06 AM

No.105666819

>>105666827

>>105666799

at five minutes per token?

Anonymous

6/22/2025, 3:06:57 AM

No.105666827

>>105666835

>>105666819

no actually you can run a q4_k_XL 109b model at around 8t/s

Anonymous

6/22/2025, 3:08:01 AM

No.105666835

>>105666841

>>105666827

that's a bloated 17b

Anonymous

6/22/2025, 3:08:53 AM

No.105666841

>>105666855

Anonymous

6/22/2025, 3:11:54 AM

No.105666855

>>105666867

>>105666841

Where did this meme come from?

Anonymous

6/22/2025, 3:14:37 AM

No.105666867

Anonymous

6/22/2025, 3:19:46 AM

No.105666896

>>105667508

When is this going to be usable?

Anonymous

6/22/2025, 3:51:37 AM

No.105667089

>>105667228

>>105667232

>>105665910

Gemma 3 27B is insanely good at everything, especially roleplay and maintaining a personality/scenario. It's league above 12b which is already good (but nemo is still the better 12b for prose.)

Even lower quants feel cohesive and never feel repetitive/slop-ish. By far my favorite model at this range, Google knows what they're doing.

Anonymous

6/22/2025, 4:02:43 AM

No.105667168

>>105661834

I *cannot* and *will not* think before I reply.

Anonymous

6/22/2025, 4:12:26 AM

No.105667228

>>105667089

>It's league above 12b

Really? I've always found Gemma 12b to be strangely close to 27b for RP. 27b definitely has a bit more spatial awareness but the responses are very similar.

Anonymous

6/22/2025, 4:13:02 AM

No.105667232

>>105667089

Gemma 3 is what qwen never reached: safetyslop and knowledgeable.

Anonymous

6/22/2025, 4:24:22 AM

No.105667293

>>105667304

If I do the mikubox triple p40 build, will I be able to do diffusion and lora for image/video as well as llm stuff?

Anonymous

6/22/2025, 4:26:31 AM

No.105667304

>>105667366

>>105667293

At the very least go for P100s, but even then lack of flash attention will make image/video support spotty.

>>105665149

I have no clue about RP, I kinda stopped downloading all those models because recently its all the same slop. Waiting for the expert opinion from you faggots.

But visual doesnt seem so bad.

Chatgpt is the worst closed one mind you. Gemini and claude answer these days.

Anonymous

6/22/2025, 4:38:36 AM

No.105667364

>>105667414

Been wanting to have variety after using Deepseek for a while in RP, what is a good small model available in Openrouter? I see everyone praising Gemma, but I imagine it wouldnt hurt to ask the question directly.

also, are there presets here? I cant tell from a quick glance in the OP, if not Im just going to check what Sukino found in his rentry.

Anonymous

6/22/2025, 4:38:42 AM

No.105667366

>>105667379

>>105667433

>>105667304

Hmm, could I do it with different GPUs? An idea I had was to go with the mikubox but max out the ram and CPUs and use them to run llms, and then use the GPUs to run diffusion and lora and also maybe train llms and other models. Would the cpus be fast enough for an llm? And what GPU(s) should I be looking at?

Anonymous

6/22/2025, 4:41:22 AM

No.105667379

>>105667401

>>105667489

>>105667366

For GPUs, the gold standard is sadly still used 3090s. The CPUs aren't the issue, the RAM is, and DDR4 2400 RAM won't get you far. For LLMs you want DDR5 with as many channels as possible.

Anonymous

6/22/2025, 4:46:19 AM

No.105667401

>>105667441

>>105667568

>>105667379

>DDR4 2400 RAM

That's enough for just under 10t/s Q2 deepseek r1 these days on single socket

Anonymous

6/22/2025, 4:50:27 AM

No.105667414

>>105667364

>available in Openrouter

>quick glance in the OP

Glance at the OP for a little longer.

Anonymous

6/22/2025, 4:52:22 AM

No.105667433

>>105667456

>>105667489

>>105667366

The usability of any hardware for LLMs is limited by the speed (tok/s) at which it can do prompt processing and token generation.

Prompt processing speed is (roughly) inversely proportional to your hardware's compute capability (in FLOPS), while token generation speed is (roughly) inversely proportional to your hardware's memory bandwidth (in GB/s), at least until your generated tokens overflow the context.

So if your CPU does (say) 50 times fewer FLOPS than the GPU you have in mind, then you'll have to decide if you can live with waiting 50 times longer for prompt processing (spoiler: most people don't and will just get a separate GPU for this).

>>105667401

>>DDR4 2400 RAM

>That's enough for just under 10t/s Q2 deepseek r1 these days on single socket

On 8 memory channel epyc?

Anonymous

6/22/2025, 4:56:46 AM

No.105667456

>>105667516

>>105667433

>inversely proportional

what. It's the reverse

Anonymous

6/22/2025, 5:01:43 AM

No.105667482

Anonymous

6/22/2025, 5:03:13 AM

No.105667489

>>105667512

>>105667527

>>105667379

>>105667433

So I could potentially get 3 used 3090s, and use 2 for llms and 1 for diffusion/lora, or mix and match in other ways? Is pcie fast enough?

It seems cheaper than trying to go the ram and cpu route.

Anonymous

6/22/2025, 5:06:13 AM

No.105667508

>>105666896

Six more months

Anonymous

6/22/2025, 5:06:49 AM

No.105667512

>>105667489

Yes, yes, and yes.

Anonymous

6/22/2025, 5:07:54 AM

No.105667516

>>105667568

>>105667620

>>105667456

Yeah, got speed and time mixed up when I wrote this, sorry.

The more important point though is that TFLOPs and bandwidth are the key specs to look out for.

>>105667441

Seems to be about right, since since tokgen speed = memory bandwidth / model filesize.

Assuming 8GB modules, 8 channels gives you a memory bandwidth of ~ 8 * 2.4 * 8 = 153.6GB/s.

R1 at Q2 is ~250GB, but being a MoE lets you cut down the filesize by an effective factor of 37/671.

So the token generation speed should be of the order of 153.6 / 250 * 671 / 37 = 11.1tok/s, under these assumptions.

Anonymous

6/22/2025, 5:08:29 AM

No.105667521

>>105667524

>slabadabadadanglang

>dibgibnet

>wubalubatention

I DONT CARE

I WANT MY MODEL NOT TO TALK LIKE A SECRETARY

AAAAAAA

Anonymous

6/22/2025, 5:09:43 AM

No.105667524

>>105667521

but sir the lmarena elo, this is what the people want

Anonymous

6/22/2025, 5:10:05 AM

No.105667527

>>105667539

>>105667638

>>105667489

>It seems cheaper than trying to go the ram and cpu route.

depends on what class of model you're trying to run. There's no cheap way to do >128GB models in vram. That's when cpu becomes a more viable option

Anonymous

6/22/2025, 5:12:32 AM

No.105667539

>>105667596

>>105667527

all >128GB models are moes that would run faster in vram with less space if they weren't moe

Anonymous

6/22/2025, 5:17:26 AM

No.105667568

>>105667583

>>105667638

>>105667401

>>105667441

>>105667516

Maybe if you're also using a 3090 but on pure RAM you will at most get ~6t/s and that's me being generous.

An 8-channel DDR4 system will only get you twice the speed of a dual-channel DDR5 computer (cpu only).

Anonymous

6/22/2025, 5:20:05 AM

No.105667583

>>105667568

Obviously with a GPU attached, yes. Nobody's running on pure RAM.

Anonymous

6/22/2025, 5:22:06 AM

No.105667596

>>105667539

>all >128GB models are moes

all the good ones, yah

>that would run faster in vram with less space if they weren't moe

maybe, but that's not the world we live in, sadly

Anonymous

6/22/2025, 5:24:01 AM

No.105667609

>model writing a story realizes it just wrote something that doesn't make sense

>it writes dialogue for one of the characters expressing confusion at the error

heh I find it cute whenever this happens for some reason. like it can't backspace and fix the problem so instead it puts words in a character's mouth letting you know it knows it fucked up

Anonymous

6/22/2025, 5:25:50 AM

No.105667620

>>105667516

>Assuming 8GB modules

*Assuming a 64-bit CPU (which is where the leading 8 comes from), the memory size has nothing do to with this

Anonymous

6/22/2025, 5:29:52 AM

No.105667638

>>105667766

>>105667527

>>105667568

I'd be reusing my am4 pc. I'd probably go with 5950x+128gb ddr4 (4 dimms but dual channel I think), would that be enough with like 2 3090s? I'm thinking no.

Anonymous

6/22/2025, 5:33:49 AM

No.105667659

>>105666094

Lies and slander.

Anonymous

6/22/2025, 5:39:53 AM

No.105667686

>>105666094

teto needs to lay off the bread

Anonymous

6/22/2025, 5:59:15 AM

No.105667766

>>105669250

>>105667638

that honestly won't get you far enough to be worth it. You'd fall short and just be painted in a corner with no upgrade path for your time and money.

Anonymous

6/22/2025, 6:01:49 AM

No.105667778

>>105666672

what model is it?

Anonymous

6/22/2025, 6:11:18 AM

No.105667816

>>105667355

Mistral just keeps on winning

Anonymous

6/22/2025, 6:36:37 AM

No.105667926

>>105667935

>>105668551

>>105667355

no homo but i would even if it's a dude

Anonymous

6/22/2025, 6:38:08 AM

No.105667935

>>105668026

>>105668030

Anonymous

6/22/2025, 6:52:43 AM

No.105668026

Anonymous

6/22/2025, 6:53:15 AM

No.105668030

>>105668063

>>105667935

if it makes my dick hard it's not gay.

Anonymous

6/22/2025, 6:58:40 AM

No.105668063

>>105668135

>>105668030

>it's not gay

at best it makes you bisexual, which is another kind of faggot flavor kek

Anonymous

6/22/2025, 7:13:32 AM

No.105668135

>>105668194

>>105668063

being attracted to female features isn't gay.

male features are repulsive to me.

Anonymous

6/22/2025, 7:19:42 AM

No.105668171

>>105668178

>>105668195

what is the current meta for vram?

Anonymous

6/22/2025, 7:20:37 AM

No.105668178

>>105668201

>>105668171

hoard as much vram as possible

Anonymous

6/22/2025, 7:24:09 AM

No.105668194

>>105668283

>>105668135

you're twisting the definition of what an homosexual is, an homosexual is attracted to the same sex, doesn't matter if that same sex likes to crossdress or not, that's completly irrelevant

Anonymous

6/22/2025, 7:24:41 AM

No.105668195

>>105668171

download more of it

Anonymous

6/22/2025, 7:26:16 AM

No.105668201

>>105668211

>>105668223

>>105668178

Ok so you just chuck as much as possible for best results? Is there an actual sweetspot for model size and quant? I have 48gb

Anonymous

6/22/2025, 7:30:02 AM

No.105668211

>>105668201

There certainly is a point where more won't give you anything but that's kinda theoretical.

Anonymous

6/22/2025, 7:32:50 AM

No.105668223

>>105668201

There are no dense models bigger than 70-123B, so 96-128 GB is the sweet spot, quant based on what you got. Though even those models are starting to age. There's 405B, but that's never been worth running.

Anonymous

6/22/2025, 7:47:48 AM

No.105668283

>>105668292

>>105668194

butt, feminine face, boobs.

i think that wasn't about crossdressing.

if you have nothing that tells you it's the same sex it isn't homosexual.

Anonymous

6/22/2025, 7:49:36 AM

No.105668292

>>105668308

>>105668347

>>105668283

>if you have nothing that tells you it's the same sex

uhhh you'll definitely see the huge anaconda between his legs, or the poop smelling wound if he decided to go that far lol

Anonymous

6/22/2025, 7:53:41 AM

No.105668308

>>105668312

>>105668292

schrodingers faggot

Anonymous

6/22/2025, 7:54:05 AM

No.105668312

Anonymous

6/22/2025, 8:04:49 AM

No.105668347

>>105668350

>>105668292

in picrel there was no such thing.

Anonymous

6/22/2025, 8:05:36 AM

No.105668350

>>105668588

>>105668347

>in picrel there was no such thing.

what was there then?

Anonymous

6/22/2025, 8:26:06 AM

No.105668437

>>105668452

>check out /lmg/

>everyone's just arguing about trannies again

Anonymous

6/22/2025, 8:27:40 AM

No.105668446

>>105667355

It's difficult to post examples here without getting banned but hopefully the point comes through from picrel, in the right example especially (it understands that it's an explicit image, but it appears to be deliberately softening the description). The images sent to the model weren't censored. Sometimes it gets it, sometimes not. My overall impression is that the model is confused about nudity because there wasn't much of it in the training data.

Anonymous

6/22/2025, 8:28:55 AM

No.105668452

>>105668489

>>105668437

Is that a paperback with a book cover? What is even the point?

>>105668452

Why would a hardcover need a cover? It's already solid enough.

On the other hand, that is a super shitty "cover" and doesn't go over the top and bottom edges of the book thus goes super floppy when she opens the book, what's the point in that?

In my mind it's blank to remove all copyright references, but there's text visible behind the floppy-ass cover, so idk.

Anonymous

6/22/2025, 8:44:36 AM

No.105668528

>>105668535

>>105668489

nips include that when you buy a book for "modesty"

Anonymous

6/22/2025, 8:46:02 AM

No.105668535

>>105668489

>>105668528

although you can clearly see it is turned inside out

Anonymous

6/22/2025, 8:48:27 AM

No.105668546

>>105668489 (me)

Dust cover? Searched a leddit thread and everyone says they take it off when reading the book.

In my mind a proper cover is the one where I had to make as a kid for some reason in some private school out of brown paper bags to form actual sleeves for the book to slide into.

Anonymous

6/22/2025, 8:50:44 AM

No.105668551

>>105668558

>>105667926

It's a handsome biological human. Shame she's no-nude tho. For now.

Anonymous

6/22/2025, 8:51:45 AM

No.105668558

>>105668551

>human

woman*

Anonymous

6/22/2025, 8:58:17 AM

No.105668588

>>105671912

>>105668350

there was no bump, looked like a pussy.

my fetish is a woman in an everyday situation talking about sex really explicitly, but in a weird totally casual way like she's talking about going to the store for milk or something

llms are really good at it which is great, I'm just wondering if there's an accepted term or tag for it? so I can search for it on prompt sites

or is it too weird to have a name

Anonymous

6/22/2025, 9:14:46 AM

No.105668659

>>105668639

it's too trivial to have a name

uninhibited, candid, verbal exhibitionism, pick one

Anonymous

6/22/2025, 9:29:48 AM

No.105668754

>>105668639

i dont think 'conversation topic' kinks have tags usually

if anything itd be bundled with a sister tag theyd go along with well like 'nonchalant', 'casual', 'casual_...' along with 'text'

Anonymous

6/22/2025, 10:23:55 AM

No.105669029

It's crazy how buggy sglang and vllm are to run a vision model compared to llama.cpp.

Anonymous

6/22/2025, 10:41:01 AM

No.105669137

>>105669156

>>105668639

Does listening to TTS do it for you? First thing that came to mind cause it's easy to make a voice say anything in the same plain tone.

Anonymous

6/22/2025, 10:43:56 AM

No.105669156

>>105669137

I don't think it would because I kinda need it to be in a story narrative where she's doing it in a realistic context, just listening to a stream of dirty talk directed at me wouldn't do a lot

It was a reasonable idea though

Anonymous

6/22/2025, 10:57:19 AM

No.105669250

>>105669328

>>105667766

What would you recommend then if I do a brand new build for motherboard, cpu, and ram?

Anonymous

6/22/2025, 11:08:10 AM

No.105669328

>>105669341

>>105669407

>>105669250

Random anon here.

If you're made of money:

- socket SP5: amd, ddr5, 12 memory channels

- cpu containing maybe 12 chiplets

https://en.wikipedia.org/wiki/Epyc#CPU_generations

If less money to splash:

- socket SP3: amd, ddr4, 8 memory channels

- cpu containing maybe 8 chiplets

- maybe buy a 3090 every so often

Just want to run the recent 30b models:

- get a couple of 3090s

Anonymous

6/22/2025, 11:09:21 AM

No.105669341

>>105669392

>>105669712

>>105669328

If you're made of money you stack 6000 blackwells, not cpumax.

Anonymous

6/22/2025, 11:16:50 AM

No.105669392

>>105669341

Yeah, that's probably the poorfag in me coming out.

The socket sp5 is probably in the ball park of $7k.

A stack of 6000 blackwells going to be is a tier above that.

Anonymous

6/22/2025, 11:21:04 AM

No.105669407

>>105669584

>>105669328

I would definitely go with less money, still seems like it might cost me at least $1k or more looking around online and that's before I buy any 3090s. Adding 2 used 3090s would probably add another $2k.

Anonymous

6/22/2025, 11:24:16 AM

No.105669424

>>105669552

>>105662316

https://chub.ai/characters/NG/pam-nerdy-girlfriend

You could start with above, basis for one of the examples from the guide you posted. Past that you'd be better off in aicg begging someone to write it for you.

You're going to get zero useful input on target card size bc it depends on writer and the bot. I think 150-400 tokens is plenty. Others think anything under 1000 isn't adequate.

>>105668639

Bro just learn Dutch and move to the Netherlands. That is my daily reality as it's socially acceptable for women to behave that way and sex is so liberalized here that you talk openly about sex with everyone and at every occasion just like you talk about what food you enjoy.

Trust me when it's just a daily thing it stops being erotic. And you know about your mom/sister's sex life in detail... as well as hear detailed explanations of your girlfriends sexual experiences in the past... So.... Pick your poison.

Pros:

>Sex is extremely easy to get and not considered a big deal (I don't know any Dutch male incels, I don't think they exist)

>Prostitution is legal and socially acceptable like going to the cinema

>Dates are usually paid for by the woman or at worst 50/50 split. Women get offended if you offer to pay

Cons:

>You are expected to have sex on the first date, women will get mad if you refuse

>Women approach you (hell, even if you are with your girlfriend). This sucks because if you like a girl it's not normal to approach her yourself you have to hope she gets your "hints" and approaches you instead

>Sex is very easy to get but relationships are very hard to get, most dutch men feel used and are "relationshipcels" where women just use them for novelty, and no, this isn't actually a good thing when you actually experience this.

>Women are sexually aggressive and pushy and it's the only country I've been in where I get regularly harassed by women, and they usually don't react well to receiving "no", sometimes leading to fights.

>If you suck at sex you WILL be called out on it and people (including your extended family) WILL know about it and talk to you about it

>Sex loses a lot of its eroticism in this society and most men prefer masturbation, while women prefer sex.

It's also the only country that I know of where most men are "feminist" (in the US sense of the word) because we have essentially the "women" role of society so we can identify.

>>105669444

There's no way this is real, this website would be all over it if it was. How is this the first I'm hearing about it?

Anonymous

6/22/2025, 11:43:25 AM

No.105669506

>>105669496

niggerlands arent a real country its made up

same as dutch being a fake language

>>105669496

I think it's because it's a rather insular country weirdly enough so it doesn't work for tourists. Dutch women aren't going to bother with men that don't speak Dutch which filters out a lot of people already.

The second limitation is Dutch bodies I guess. Dutch people are very tall. I'm 6'3 for example and I'm the shortest guy in my family and was bullied for being short throughout my school life. Most women are around 6'0. This means that the barrier to entry for foreigners is probably higher. Dutch women are still not going to bother with men shorter than themselves.

Also Dutch women seem to prefer skinny dudes, so if you are fat or muscular you're also out of luck. Essentially they see men like many societies see women, women should be tall, skinny, pretty and reserved. That is what Dutch women like in men. Most foreigners don't fit that look profile.

But yeah I fucking hate women, but from the opposite way as most 4chan users do. Not from a "chud" perspective but from a "women are absolute creeps" perspective.

Anonymous

6/22/2025, 11:51:24 AM

No.105669552

>>105669668

>>105669424

Ehhh a girl whose sole personality is that she's nerdy and devoted.

Personality and Scenario fields aren't needed and only restate what's already been stated. Like most guides online it's a mess.

Anonymous

6/22/2025, 11:52:38 AM

No.105669559

>>105669444

I don't know if I believe all of this, but you did do a pretty good job of making it sound unappealing which is not easy, kudos for that

Anonymous

6/22/2025, 11:52:54 AM

No.105669561

Man we really are in a drought, aren't we?

Anonymous

6/22/2025, 11:56:35 AM

No.105669584

>>105669407

Socket sp3 with 1tb ram is around ~1.5k ?

The nice thing about 3090s is that you can just pick one up every couple of months or so.

Anonymous

6/22/2025, 12:00:24 PM

No.105669606

>>105669534

Absolutely weird way of life if this is true.

Though now that I'm reading it, I remember one Dutch guy I used to chat with for a time. He was gay and would constantly bring up all his hookups with other men in great detail. I just thought he was a huge slut.

Anonymous

6/22/2025, 12:11:54 PM

No.105669668

>>105669552

Lol I didn't even know those were filled in on that card. They should be blank.

Anonymous

6/22/2025, 12:22:18 PM

No.105669712

>>105669341

This. Put 99% of your money into the best GPU with vram capacity you can buy, because that's what 99% of your ai workloads will spend their time.

Anonymous

6/22/2025, 12:47:35 PM

No.105669848

>>105670090

>>105669496

I heard exactly the same from a girl I dated with a dutch ex. Netherlands sounded miserable and my few times through Amsterdam reinforced it. Plus as

>>105669534 states

>Dutch women aren't going to bother with men that don't speak Dutch

and Dutch are crazy tall.

Anonymous

6/22/2025, 12:58:20 PM

No.105669906

>>105669953

>>105669444

Is this bizarro world? How do you even end with a society like that?

>>105669906

Feminist society. Women have been a part of the workforce for almost 200 years here. Women are overrepresented in education and are way higher educated than men, women make a lot more money than men.

The role people assume is based on their "dominance" in society. In the Netherlands women are wealthier and thus more powerful and therefor take the more dominant position in society.

This is also slowly happening to other countries in the world. It's just that the Netherlands was the first feminist society so they are way ahead with these trends. Scandinavia will have the same dynamics by about ~2050 and the US by about ~2100 is my guess.

In a way the dating apps stuff popular in other countries is already showing that the rest of the world is also slowly transitioning into this same model.

Anonymous

6/22/2025, 1:13:23 PM

No.105669974

>>105670027

>>105670066

>>105669953

>almost 200 years

What's the story of the start?

In Bongland, it's about 100 years in connection with WW1.

Anonymous

6/22/2025, 1:17:47 PM

No.105670010

>>105670066

>>105670090

>>105669953

>and are way higher educated than men

Only in certain fields, and the majority of those fields are crowded with women and scale poorly.

Women are extremely scarce in tech, blue collar jobs, women prefer jobs with human interaction, very few like jobs that are physically taxing, dirty, and/or predominantly isolated from human interaction.

They are also risk averse, which means at the top of the most successful people you will see a LOT more men, also among the people who are destitute you will see a LOT more men.

A society where women are the top earners is a society of luxury at the expense of other societies, and one which will inevitably crumble.

Anonymous

6/22/2025, 1:20:52 PM

No.105670027

>>105670066

>>105669974

he's making it up. 200 years ago there wasn't even a workforce as we know it today, this only came around a bit later and netherlands wasn't any different from other countries in questions of gender roles.

Anonymous

6/22/2025, 1:28:15 PM

No.105670063

>>105670091

Anonymous

6/22/2025, 1:28:28 PM

No.105670066

>>105670152

>>105669974

>>105670027

Not making it up. Netherlands was one of the first countries with a labor shortage as there was more opportunities to make money abroad in the colonies which most men fled to, women stayed in the homeland meaning most traditional work needed to be taken upon by women, similar to UK during WW1. Netherlands only had a couple million people not millions like the UK while also having a colonial empire, it was necessary for all people to have jobs, meaning an earlier start to feminism.

>>105670010

That might be true, I don't know. But I do know that STEM has a 50/50 split in the Netherlands and has had that since the 1980s while most other fields are heavily women dominated. Blue collar work is mostly men but the pay is also lower which is the reasoning people give for women not doing those jobs, the mindset is: "Women are too smart to do low paying blue collar work, let the intellectually inferior sex (men) do that work instead"

Anonymous

6/22/2025, 1:31:40 PM

No.105670090

>>105669444

>Bro just learn Dutch and move to the Netherlands.

don't bother, everyone speaks English.

>Sex is extremely easy to get and not considered a big deal (I don't know any Dutch male incels, I don't think they exist)

they exist. it's a very insular culture. if you're a weirdo your out of luck. the culture is all about fitting in and not being weird. i know a lot of incel types, or guys who get lucky rarely.

>Prostitution is legal and socially acceptable like going to the cinema

it's not socially acceptable.

>Women approach you (hell, even if you are with your girlfriend). This sucks because if you like a girl it's not normal to approach her yourself you have to hope she gets your "hints" and approaches you instead

i think this depends on how good looking you are.

>>105669534

>Dutch women are still not going to bother with men shorter than themselves.

not true.

>>105670010

women make more money in the Netherlands and have a higher education on average. they don't expect you to make more, but they expect you to be on the same educational level as them.

>>105669848

>Netherlands sounded miserable and my few times through Amsterdam reinforced it.

Amsterdam and it's surrounding area is very different compared to the rest of the country.

Anonymous

6/22/2025, 1:31:43 PM

No.105670091

>>105670100

>>105670063

so they're just 10-12 gemma 27b finetuned and glued together

we can replicate it

Anonymous

6/22/2025, 1:32:46 PM

No.105670100

>>105670091

Someone call davidau

Anonymous

6/22/2025, 1:43:53 PM

No.105670152

>>105670066

>Not making it up.

Yes, you are. I'm not sure why though.

Anonymous

6/22/2025, 1:51:25 PM

No.105670191

>>105662131

Infinite energy generation trick troll vibes

Anonymous

6/22/2025, 2:18:08 PM

No.105670332

>>105670365

>>105670271

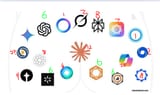

A better question is, why do half of them look like the star of david?

Anonymous

6/22/2025, 2:20:33 PM

No.105670341

Anonymous

6/22/2025, 2:20:47 PM

No.105670343

>>105670356

>>105670455

>>105670271

Meanwhile the true savior is representing pure phallic symbolism. Pottery.

Anonymous

6/22/2025, 2:22:28 PM

No.105670356

>>105670455

>>105670343

DS logo represents the people investing in AI companies.

Anonymous

6/22/2025, 2:23:40 PM

No.105670365

>>105670332

they don't, but if you wanna see a star you'll see a star