/ldg/ - Local Diffusion General

Anonymous

6/23/2025, 9:48:20 AM

No.105678569

>>105678746

cursed thread of hostility

not bad, 3 minutes per image on 3090 as the other anon said

i just reused the venv that i use for comfyui instead of creating a new one and it just worked out of the box

diffusers 0.33.1

onnxruntime 1.22.0

onnxruntime-gpu 1.22.0

torch 2.7.0+cu126

torchaudio 2.7.0+cu126

torchvision 0.22.0+cu126

>>105678577

>not bad

yep, and the most interesting part is that it's Apache 2.0 licensed. I feel the BFL guys will delay their release even more; Kontext dev needs to be much better than this one for people to care about a distilled model with a bad licence. It is what it is.

Anonymous

6/23/2025, 10:06:52 AM

No.105678657

>>105678577

>3 minutes per image on 3090

it'll be much faster with sage, sage2++ claims it's 3.9x faster than flash attention
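A kernel benchmark like "3.9x faster than flash attention" only speeds up the attention share of each step, so the end-to-end gain is smaller. A minimal Amdahl's-law sketch follows. The attention shares are hypothetical placeholders, since the post gives no profile of how much of the 3-minute gen is spent in attention. It also assumes the current attention backend is the one the 3.9x figure was measured against.

```python
def overall_speedup(attention_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: only the attention share of each step gets faster."""
    if not 0.0 <= attention_fraction <= 1.0:
        raise ValueError("attention_fraction must be between 0 and 1")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    return 1.0 / ((1.0 - attention_fraction) + attention_fraction / kernel_speedup)


def estimated_minutes(baseline_minutes: float, attention_fraction: float,
                      kernel_speedup: float) -> float:
    # New wall-clock time per image, given the old time and the assumed attention share.
    return baseline_minutes / overall_speedup(attention_fraction, kernel_speedup)


if __name__ == "__main__":
    # Hypothetical attention shares; the real share for this model is unknown.
    for share in (0.3, 0.5, 0.7):
        print(f"attention share {share:.0%}: {estimated_minutes(3.0, share, 3.9):.2f} min")
```

The larger the attention share, the closer the whole gen gets to the kernel's headline number; profiling one step is the only way to know which row applies.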

Anonymous

6/23/2025, 10:06:58 AM

No.105678658

Can't wait to get a 4090D. Knowing my luck, the 5090D 64GB would become available right after I buy it, kek

Anonymous

6/23/2025, 10:22:10 AM

No.105678729

>>105678734

>>105678597

What's this? Looks way better than inpainting in Comfy using GIMP...

Anonymous

6/23/2025, 10:22:15 AM

No.105678731

>>105678746

Blessed thread of frenship

Anonymous

6/23/2025, 10:22:56 AM

No.105678734

Anonymous

6/23/2025, 10:24:49 AM

No.105678746

>>105678993

>>105678727

oof, it didn't understand my request, and going for 2 images takes twice as long; that one took 06:24 mn

Anonymous

6/23/2025, 10:39:06 AM

No.105678806

How do I fix wan2.1 vagina gens? It always adds a penis or hella loose lips.

Anonymous

6/23/2025, 10:43:13 AM

No.105678828

>>105678802

>06:24 mn

why is it so slow? it's just a 4b model

Anonymous

6/23/2025, 11:00:58 AM

No.105678904

>>105674616

What values should I use then for the convolution?

Maybe this is a retarded question but usually the formula goes

>((number of images in dataset × number of repeats) / batch size) × epochs = total number of steps

Is this right?

Also, with fewer images you have to train more, but it's not a direct relation, right?

If you have 100 images and you train for 2000 steps with good quality, you wouldn't get the same quality with 50 images and 4000 steps; you would have to do more than 4000 steps, right?
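The formula quoted above is the usual bookkeeping, with one caveat: a final partial batch still counts as a step, so the division rounds up. A minimal sketch follows. It assumes a trainer without aspect-ratio bucketing, since bucketing can add a few extra partial batches per epoch. The `views_per_image` helper is illustrative arithmetic only. It shows that 50 images at 4000 steps already shows each image four times as often as 100 images at 2000 steps, which by itself says nothing about final quality.

```python
import math


def steps_per_epoch(num_images: int, repeats: int, batch_size: int) -> int:
    # One optimizer step per batch; a final partial batch still counts as a step.
    return math.ceil(num_images * repeats / batch_size)


def total_steps(num_images: int, repeats: int, batch_size: int, epochs: int) -> int:
    # ((images x repeats) / batch size) x epochs, rounded up per epoch.
    return steps_per_epoch(num_images, repeats, batch_size) * epochs


def epochs_to_reach(target_steps: int, num_images: int, repeats: int, batch_size: int) -> int:
    # Smallest whole number of epochs whose total reaches target_steps.
    return math.ceil(target_steps / steps_per_epoch(num_images, repeats, batch_size))


def views_per_image(steps: int, batch_size: int, num_images: int) -> float:
    # Average number of times each image is shown (repeats included).
    return steps * batch_size / num_images


print(total_steps(100, 10, 2, 4))        # 2000
print(epochs_to_reach(2000, 20, 1, 4))   # 400
print(views_per_image(2000, 2, 100), views_per_image(4000, 2, 50))  # 40.0 160.0
```

`epochs_to_reach` also covers the "batch 4 until 2000 steps" habit: with repeats of 1, 20 images need 400 epochs and 100 images need 80.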

Anonymous

6/23/2025, 11:22:12 AM

No.105678992

>>105677998

nta but thank you so much for posting those workflows

great gens, i'll stop there.

Anonymous

6/23/2025, 11:22:28 AM

No.105678993

>>105678746

You VILL be a fren

Anonymous

6/23/2025, 11:25:49 AM

No.105679011

Anonymous

6/23/2025, 11:28:26 AM

No.105679022

>>105679030

>>105678999

oooooh yeahhhh.. it's sloppin' time!

Anonymous

6/23/2025, 11:28:52 AM

No.105679027

>>105678727

>>105678802

only works with chinese prompts mister, please understand

Anonymous

6/23/2025, 11:29:16 AM

No.105679030

>>105678999

>>105679022

>The quality of outputs produced by the models in this repo are not as good as they could be, probably due to bugs in my code. You may need to wait for official Nunchaku support if you want good quality outputs.

Anonymous

6/23/2025, 11:31:08 AM

No.105679040

>>105679050

>>105678999

kek, I'm running chroma in bf16 and I regularly get some anatomy abominations; can't wait to see some images made with Q4-tier quants kek

Anonymous

6/23/2025, 11:32:24 AM

No.105679050

>>105679054

>>105679040

i've been posting images made with svdquant chroma v29 ITT for a month now

2 of my images from the last thread got into the current collage

try to pick them out, you probably can

Anonymous

6/23/2025, 11:32:57 AM

No.105679054

>>105679050

>chroma v29

that was before the distillation lobotomy though, it went downhill after that

>>105678999

I have the Q8 version. This one is worse?

Anonymous

6/23/2025, 11:34:14 AM

No.105679063

>>105679056

it's waaaay faster than even q4 gguf, and it is very very good quality

Anonymous

6/23/2025, 11:35:20 AM

No.105679072

>>105679315

Can you anons give me feedback for my training settings please? I'm trying to train illustrious.

pastebin.com/pdnQG4fj

>>105679056

heres a quick gen

https://files.catbox.moe/h9d8op.png - v37 from some anon

keep in mind i'm using an fp8 text encoder too.

Anonymous

6/23/2025, 11:36:21 AM

No.105679077

>>105679056

>This one is worse?

everything is worse than Q8, but we don't know exactly how good svdquant's quality is; it's probably between Q4 and Q5

Anonymous

6/23/2025, 11:37:05 AM

No.105679081

Is omni 2 censored? Does it work if you give it an nsfw pic and tell it to use the character from the second image?

Anonymous

6/23/2025, 11:37:07 AM

No.105679084

>>105679114

>>105678802

Does kontext understand that though? Struggled on Gemini with that. Anyways, it's quite obvious this model isn't as capable as Kontext.

Anonymous

6/23/2025, 11:38:22 AM

No.105679090

>>105679075

oh also i picked v38-detail-calibrated-32steps-cfg4.5-1024px out of instinct, maybe the other versions roccieGOD uploaded are better

Anonymous

6/23/2025, 11:39:23 AM

No.105679095

>>105679099

>>105679075

Can you post the prompt for this? I'll compare the two models.

Anonymous

6/23/2025, 11:40:43 AM

No.105679099

>>105679296

>>105679095

the whole workflow of the anon's gen is in the catbox

seed: 391147357278020

but here's the prompt:

amateur photograph,

A close-up photograph of a Coca-Cola can, featuring a pink and white cherry blossom design on the label. The can is positioned in the center of the image, with a white background and a blurred pink and white evenly cherry blossom-print-patterned fabric in the background. The lighting is soft and even, highlighting the details of the label and the can. The image has a shallow depth of field, with the can in focus and the background out of focus. The overall composition is simple and elegant, with a focus on the cherry blossom design and various cherry blossom petals scattered around the can itself. The can has condensation droplets dripping on it.

>chroma-unlocked-v39-detail-calibrated.safetensors

It's out.

Anonymous

6/23/2025, 11:42:08 AM

No.105679108

>>105679084

>Does kontext understand that though?

we can't test kontext dev (the model we'll get) yet and kontext doesn't seem to be taking multiple image inputs?

4o nailed that shit btw

Anonymous

6/23/2025, 11:44:59 AM

No.105679120

Anonymous

6/23/2025, 11:45:00 AM

No.105679121

>>105679132

>>105679114

>kumiko stabbed herself

Anonymous

6/23/2025, 11:45:30 AM

No.105679127

>>105679132

>>105679114

Nah, 4o changes the entire image

Anonymous

6/23/2025, 11:46:24 AM

No.105679132

>>105679148

>>105679121

She killed herself after realizing that Reina doesn't want to be her girlfriend kek

>>105679127

yeah, but it understood the task at hand at least, that's way better than this shit

>>105678802

Anonymous

6/23/2025, 11:47:15 AM

No.105679137

>>105679156

>>105678999

Where do I put the unquantized layers?

Anonymous

6/23/2025, 11:47:15 AM

No.105679138

>>105682767

I just always wanted a field medic character that fights with syringes and scalpels.

Anonymous

6/23/2025, 11:48:59 AM

No.105679148

>>105679132

4o will always understand prompts better though. It's autoregressive, so it does to images what LLMs do to text.

Anonymous

6/23/2025, 11:49:50 AM

No.105679156

>>105679137

you put them all in the same directory, for example like picrel

https://litter.catbox.moe/mkhhhxkwddipqzbj.png

here's an example workflow, albeit with a shitty prompt

Anonymous

6/23/2025, 11:50:56 AM

No.105679161

>>105679165

I regret opening that

Anonymous

6/23/2025, 11:51:49 AM

No.105679165

>>105679161

you were warned

Anonymous

6/23/2025, 11:51:55 AM

No.105679167

i warned you :^)

Anonymous

6/23/2025, 12:01:33 PM

No.105679206

>>105679244

Any SDXL speed-ups for upscaling? That's the longest part of my gen.

Anonymous

6/23/2025, 12:11:01 PM

No.105679244

>>105679258

>>105679206

there's linux; aside from that, I'm afraid I can't help You, Anon.

Anonymous

6/23/2025, 12:12:20 PM

No.105679254

Do you combine this with text gen? I imagine one of the models would have to be something tiny if I run something like Comfy for images and a webui for text gen?

Ideally just an accompanying image for each "chapter" of what I'm genning elsewhere.

Anonymous

6/23/2025, 12:12:44 PM

No.105679258

>>105679244

using an entirely different OS is not a solution. sageattention2++ needs to hurry up.

Anonymous

6/23/2025, 12:12:44 PM

No.105679259

>>105679261

When I put the chroma into unet like the q8 gguf version, the unet loader doesn't see the new one.

When I put it into diffusion models, comfy says it can't tell what model type it is.

Anonymous

6/23/2025, 12:13:23 PM

No.105679261

>>105679259

did u install nunchaku?

Anonymous

6/23/2025, 12:19:25 PM

No.105679296

>>105679311

>>105679099

Why use the SD clip and not chroma?

Anonymous

6/23/2025, 12:21:45 PM

No.105679311

>>105679296

no idea, never thought about it, tried chroma with my nunchaku wf, errors out

eventually i'll use 4bit awq t5 once i upgrade nunchaku to 0.3.2

Anonymous

6/23/2025, 12:22:31 PM

No.105679315

>>105679321

>>105679072

>>105678914

Please I need answers

Anonymous

6/23/2025, 12:23:21 PM

No.105679321

>>105679315

I am sorry but as a vramlet i cannot help you

Anonymous

6/23/2025, 12:27:57 PM

No.105679341

So uh, anons.. How are those chroma v39 gens going?

>try chroma

>gui reconnecting

>try the nunchaku chroma

>gui reconnecting

>try sdxl

>crash outright

Was there some new update that I missed in comfy?

Anonymous

6/23/2025, 12:34:37 PM

No.105679369

>>105679368

have you tried turning it off and on

Anonymous

6/23/2025, 12:39:52 PM

No.105679397

>>105678914

I don't know; I usually train with batch size 4 and use as many epochs as it takes to reach 2000 steps, whether I have 20 or 100 images

Anonymous

6/23/2025, 12:41:07 PM

No.105679406

>>105679457

>>105679368

this is useless information. why don't you look at the console to see what's actually going on

Anonymous

6/23/2025, 12:43:08 PM

No.105679421

>>105679368

>comfy

what were you expecting

Anonymous

6/23/2025, 12:47:02 PM

No.105679441

soulppa

Anonymous

6/23/2025, 12:52:05 PM

No.105679457

>>105679406

Prompt executed in 0.07 seconds

got prompt

model_type FLUX

and then the gui crashed

Anonymous

6/23/2025, 12:56:12 PM

No.105679487

Ok, the big chroma decided to go through now

Anonymous

6/23/2025, 12:56:52 PM

No.105679491

Is this ever gonna just be available to easily set up locally or what

https://magenta.withgoogle.com/magenta-realtime

Anonymous

6/23/2025, 1:05:13 PM

No.105679529

And the nunchaku now hangs on the padding removal node if I use the chroma clip, and crashes when I use the SD clip

>Half of /ldg/ are 8GB vramlets

Say it ain't so, bros...

Anonymous

6/23/2025, 1:10:18 PM

No.105679552

>>105679546

i'd never bother with ai if i had such a low amount of vram.

Anonymous

6/23/2025, 1:34:34 PM

No.105679670

Anonymous

6/23/2025, 1:46:40 PM

No.105679741

>>105679898

>>105679546

I'm a 24GB vram chad tho

Anonymous

6/23/2025, 1:46:57 PM

No.105679743

>>105673777

can i get the metadata for this pic if anon is still around?

>>105678558 (OP)

Do you ever edit your generated images in a graphics editor? You know, removing extra fingers, fixing scuffed lines, blurring/sharpening and so on?

Anonymous

6/23/2025, 2:04:50 PM

No.105679842

Anonymous

6/23/2025, 2:11:56 PM

No.105679897

>i wake

>no sage 2 ++ update

>i weep

Anonymous

6/23/2025, 2:12:02 PM

No.105679898

>>105679960

>>105679741

> 24GB

That's vramlet-tier these days, come on.

Anonymous

6/23/2025, 2:15:30 PM

No.105679929

Anonymous

6/23/2025, 2:17:35 PM

No.105679945

>>105679991

I just started using chroma with the default workflow and I'm getting around 24 seconds per gen on a 5090. Is that normal?

Anonymous

6/23/2025, 2:18:14 PM

No.105679949

>>105679957

The "detail calibrated" chroma looks a bit off compared to the normal version. It often gives more artifacts and the backgrounds are broken. I don't understand the name, or why it's a thing.

Anonymous

6/23/2025, 2:19:27 PM

No.105679957

>>105679949

It's an experiment where instead of training on 512x512px images he trains on 1024x1024px images. The intent is to improve how details look but it's not a huge success.

Anonymous

6/23/2025, 2:19:47 PM

No.105679960

>>105679898

it's not tho.

24GB is still a vram chad.

Anonymous

6/23/2025, 2:24:13 PM

No.105679981

>she show bob and vagene

>my beautiful girlfriend

>I am doing the fucking

>>105679945

Yeah, it's pretty slow. The main culprit is that the negative prompt actually does something since the model is undistilled, so it almost doubles the Flux gen time. I usually get around 6 s/it on my 3060.
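Why an undistilled model is slower: with classifier-free guidance, each sampling step runs the model once on the positive prompt and once on the negative prompt, then extrapolates from the negative prediction toward the positive one. Flux dev is guidance-distilled and normally runs one pass per step, so Chroma at CFG above 1 costs roughly twice the model evaluations. A minimal sketch on plain lists. The cfg == 1.0 shortcut is an assumption modeled on how ComfyUI skips the negative pass at CFG 1; check your own frontend.

```python
from typing import List


def cfg_combine(cond: List[float], uncond: List[float], scale: float) -> List[float]:
    # Classifier-free guidance: start from the negative/unconditional prediction
    # and move `scale` times the difference toward the positive one.
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


def model_evals(steps: int, cfg: float) -> int:
    # Two forward passes per step (positive + negative) while guidance is active.
    # Assumption: at cfg == 1.0 the result equals `cond`, so the negative pass is skipped.
    return steps * (1 if cfg == 1.0 else 2)


print(cfg_combine([1.0, 0.5], [0.0, 0.5], 4.5))   # [4.5, 0.5]
print(model_evals(30, 4.5), model_evals(30, 1.0))  # 60 30
```

This is also why the negative prompt "does something" here: its prediction is an explicit term in every step.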

Anonymous

6/23/2025, 2:33:32 PM

No.105680028

>>105680075

>>105679991

noted, thank you anon. also is there a hiresfix node I can yoink from anywhere for chroma?

Anonymous

6/23/2025, 2:42:43 PM

No.105680075

>>105680028

you just chain "Upscale Image By" -> "VAE Encode" -> "KSampler" (with <1 denoise). You don't need a node.
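The three-node chain above is a plain img2img pass. A sketch of its bookkeeping under two stated assumptions. First, the VAE downsamples by 8 (as the SD and Flux-family VAEs do), so the encoded image is cropped to a multiple of 8. Second, as I understand ComfyUI's KSampler, denoise d with N steps builds an int(N / d)-step schedule and runs only its last N steps, skipping the high-noise start so the upscaled composition survives. `hires_fix_plan` is a hypothetical helper, not a ComfyUI API.

```python
def hires_fix_plan(width: int, height: int, scale: float, steps: int, denoise: float) -> dict:
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    # "Upscale Image By": new pixel size.
    up_w, up_h = round(width * scale), round(height * scale)
    # "VAE Encode": assume 8x downsampling, so crop to a multiple of 8.
    enc_w, enc_h = up_w // 8 * 8, up_h // 8 * 8
    # "KSampler" with denoise < 1 (assumed ComfyUI-style schedule).
    schedule_len = int(steps / denoise)
    return {
        "pixels": (enc_w, enc_h),
        "latent": (enc_w // 8, enc_h // 8),
        "schedule_len": schedule_len,
        "skipped_steps": schedule_len - steps,
    }


print(hires_fix_plan(832, 1216, 1.5, 20, 0.5))
```

For an 832x1216 base at 1.5x with 20 steps and denoise 0.5, this gives a 1248x1824 image (156x228 latent) sampled over the last 20 steps of a 40-step schedule.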

Anonymous

6/23/2025, 2:42:43 PM

No.105680076

>>105678956

mages casting spells; the images you posted don't look dynamic enough. Also I'd prefer something stylized

Anonymous

6/23/2025, 2:48:21 PM

No.105680123

Anonymous

6/23/2025, 2:48:26 PM

No.105680126

>>105680222

>>105680087

replace the girl with a sci fi bbw milf with bedroom eyes, smirk and huge saggy breasts

to test omnigen2, of course

Anonymous

6/23/2025, 3:03:09 PM

No.105680222

>>105680126

I'm not inputting any of that.

Anonymous

6/23/2025, 3:12:37 PM

No.105680284

Anonymous

6/23/2025, 3:14:07 PM

No.105680293

>>105680087

Begone faggot

Anonymous

6/23/2025, 3:24:07 PM

No.105680360

Anonymous

6/23/2025, 3:51:35 PM

No.105680567

Hungry for some fries.

Anonymous

6/23/2025, 4:09:12 PM

No.105680688

>>105680732

>2 character loras exist

>hmm, which one to use

>try both

>1st one barely works. art style is generic and hair isn't even the same

>increase the model strength. still doesn't work the way it should

>try the other one

>works perfectly at default weight

>maintains every subtle detail about the character

>works excellently on every checkpoint I've tested it against

I love people that know how to make loras.

Anonymous

6/23/2025, 4:11:50 PM

No.105680697

Anonymous

6/23/2025, 4:14:24 PM

No.105680719

>>105682011

what is the reason we can't use multiple cards to gen pics/vids? is it a hardware limitation?

Anonymous

6/23/2025, 4:15:54 PM

No.105680732

>>105680743

>>105680688

Yeah, a well-trained lora makes the whole checkpoint feel more stable. Drop a like for the guy.

>>105680716

Which one was better?

Anonymous

6/23/2025, 4:16:52 PM

No.105680737

>>105679789

Yes, sometimes it's easier than trying to regen or inpaint, sometimes it's the only option cuz the AI can't get it.

>>105680716

Which one works better, lol?

10k steps is crazy.

Anonymous

6/23/2025, 4:17:12 PM

No.105680743

>>105680772

>>105680732

>Which one was better?

The bottom by a long shot.

Anonymous

6/23/2025, 4:19:12 PM

No.105680761

>>105680777

>>105680716

Some people put their blood & soul into making the perfect lora. Others just dump the minimum amount of images in a dataset with ai generated captions and call it a day.

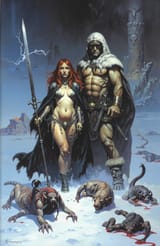

Anyone using OmniGen 2?

Im trying to see if it can match the same quality outputs as Flux.1 Kontext.

Example 1: The goal is to isolate items/characters (the subject matter) using prompts, with the AI filling in any missing details of the subject: fully restoring the subject and isolating it on a white background, keeping all details intact.

Example 2: Removing text, watermarks, and speech bubbles from a comic, keeping all details intact.

Flux Kontext is pretty cucked when it comes to any subject that is lewd, holding a weapon, or doing anything slightly nsfw. It's able to do it, but the company filters all requests and refuses them or returns a black image. I'm wondering if there is a way to achieve the same thing in OmniGen 2, fully uncensored. It could be a neat way to speed up building datasets for lora training and so forth.

Anonymous

6/23/2025, 4:20:06 PM

No.105680772

>>105680738

>>105680743

Yeah, 10k seems pretty nuts. Could it be that Illustrious Prodigy needs a marathon to converge?

Anonymous

6/23/2025, 4:20:38 PM

No.105680777

>>105680833

>>105680761

Some people make loras because they want to use them; others make loras because their source of happiness in life is CivitAI upvotes.

Anonymous

6/23/2025, 4:29:31 PM

No.105680828

>>105680716

I don't understand why you'd even use "repeats" if every concept uses the same amount, 18. Isn't the amount of training the same even if it's 50 epochs instead of 2, since the repeats just inflate the training data?
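A quick check of that intuition: repeats multiply how often each folder's images appear per epoch. When every concept uses the same repeat count, the mix between concepts is identical to using repeats of 1, and the total number of samples seen depends only on images x repeats x epochs. A minimal sketch; the folder names and counts are hypothetical. In trainers such as kohya's sd-scripts, repeats still change where epoch boundaries fall, which matters for per-epoch checkpoint saving and sample images.

```python
from typing import Dict, Tuple

# Hypothetical dataset: folder name -> (number of images, repeats)
Dataset = Dict[str, Tuple[int, int]]


def concept_shares(dataset: Dataset) -> Dict[str, float]:
    # Fraction of each epoch's samples that comes from each concept.
    seen = {name: images * repeats for name, (images, repeats) in dataset.items()}
    total = sum(seen.values())
    return {name: count / total for name, count in seen.items()}


def samples_seen(dataset: Dataset, epochs: int) -> int:
    # Total image presentations over the whole run.
    return sum(images * repeats for images, repeats in dataset.values()) * epochs


with_repeats = {"character": (20, 18), "outfit": (40, 18)}
no_repeats = {"character": (20, 1), "outfit": (40, 1)}

print(concept_shares(with_repeats) == concept_shares(no_repeats))  # True
print(samples_seen(with_repeats, 2), samples_seen(no_repeats, 36))  # 2160 2160
```

Repeats only change the balance when they differ between folders, for example to upweight a small concept against a large one.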

Anonymous

6/23/2025, 4:29:58 PM

No.105680833

>>105680874

>>105680777

getting one (You) from an anon, now that is the true source of happiness

Anonymous

6/23/2025, 4:30:43 PM

No.105680837

>try NAG

>error error tensor shape

>error error

nice

Anonymous

6/23/2025, 4:35:00 PM

No.105680874

>>105680833

Have one, very good vid.

Anonymous

6/23/2025, 4:41:42 PM

No.105680924

[Report]

>>105679991

>negative prompt is undistilled

What does this mean and how do I fix it?

Anonymous

6/23/2025, 4:41:59 PM

No.105680928

>>105680957

>>105680897

Some people can actually do that

Anonymous

6/23/2025, 4:46:17 PM

No.105680957

>>105681194

>>105680928

Post one real example.

Anonymous

6/23/2025, 4:52:11 PM

No.105680991

what exactly does ExpressiveH do?

>>105678999

Yeah I dunno about that one

v29-32steps-randCfg vs v38-32steps-cfg4.5-1024px vs v38-detail-calibrated-32steps-cfg4.5-1024px

Anonymous

6/23/2025, 4:55:55 PM

No.105681018

>>105680897

LET JESUS FUCK YOU!!!

Anonymous

6/23/2025, 4:57:09 PM

No.105681028

>>105681055

>>105680738

>>105666637

>how do i stop the video from dimming on wan?

I'm not sure but maybe it happens when the image is too saturated or too dark

Anonymous

6/23/2025, 5:00:16 PM

No.105681055

>>105681208

>>105681028

Do you use any loras? Some, both concept and "optimizations" ones, can cause glitches

Anonymous

6/23/2025, 5:04:15 PM

No.105681078

USAGI NOOOOOOO

Anonymous

6/23/2025, 5:05:48 PM

No.105681091

>>105666637

I have this exact same problem, I would have thought more ppl were having this too

Anonymous

6/23/2025, 5:13:18 PM

No.105681137

anything cool released for wan since lightx2v + NAG? anyone tested MAGREF?

Anonymous

6/23/2025, 5:18:32 PM

No.105681179

>>105681868

any more obfuscated chroma tags found?

Anonymous

6/23/2025, 5:20:42 PM

No.105681194

>>105682082

Anonymous

6/23/2025, 5:23:31 PM

No.105681208

>>105681344

>>105681055

that one was lightx2v, bounce and ultrawan 1k

For Wan now we need the ability to properly generate and train 10+ second long-context clips. lightx2v is amazing though, it fixes the main problem of it being too slow

Anonymous

6/23/2025, 5:32:53 PM

No.105681295

>>105681311

>>105681284

Try disabling the torch compile node; it was increasing my gen times like crazy.

Anonymous

6/23/2025, 5:33:12 PM

No.105681298

>>105682363

Anonymous

6/23/2025, 5:34:26 PM

No.105681311

>>105681295

My gen times are good, I just want more than 81 frames and really what I want is 20+ seconds of coherent video

Anonymous

6/23/2025, 5:38:26 PM

No.105681344

>>105681373

>>105681208

>ultrawan

Isn't it for the 1.3b model?

Anonymous

6/23/2025, 5:42:28 PM

No.105681373

>>105681344

fug you're right

Anonymous

6/23/2025, 5:51:07 PM

No.105681441

>>105681750

Couldn't you let Wan make a 5 sec video, then let it continue its train of thought with the prompt, make another 5 sec video, and then stitch the clips together?

Anonymous

6/23/2025, 6:24:01 PM

No.105681696

narp

Anonymous

6/23/2025, 6:25:53 PM

No.105681713

>>105681739

>>105681688

CCXL my beloved

Anonymous

6/23/2025, 6:28:27 PM

No.105681735

>>105681688

That's a tiny river.

Anonymous

6/23/2025, 6:28:42 PM

No.105681739

>>105681713

just testing regional stuff with the cond pair set props nodes and it works. 2 different loras at work yay

Anonymous

6/23/2025, 6:29:11 PM

No.105681750

>>105681441

workflows exist that stitch 5 second clips together, but forget doing any complex scene with continuity. it just doesn't work
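The stitching approach can be sketched as follows: generate a clip, use its last frame as the start image of the next image-to-video clip, and drop that duplicated first frame when concatenating. Assumptions: Wan 2.1 output at 16 fps, with frames treated as opaque list items. As the reply above says, only the single boundary frame carries over, so motion and scene continuity are not preserved beyond it.

```python
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def stitch(clips: Sequence[Sequence[Frame]]) -> List[Frame]:
    # Each continuation clip is assumed to start from the previous clip's last frame,
    # so its first frame is a duplicate and is dropped.
    if not clips:
        return []
    out = list(clips[0])
    for clip in clips[1:]:
        out.extend(clip[1:])
    return out


def duration_seconds(num_frames: int, fps: float = 16.0) -> float:
    # 16 fps is an assumption (Wan 2.1's usual output rate).
    return num_frames / fps


# Three 81-frame clips, with integers standing in for frames.
clips = [list(range(0, 81)), list(range(80, 161)), list(range(160, 241))]
video = stitch(clips)
print(len(video), duration_seconds(len(video)))  # 241 15.0625
```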

Anonymous

6/23/2025, 6:30:14 PM

No.105681757

>>105681766

>>105681284

>it fixes the main problem of it being too slow

And in return gives you neutered slow motion, making it useless for anyone that wants actual quality. I can point out every single wan video using lightx2v. that's how badly it fucks the motion.

Anonymous

6/23/2025, 6:31:11 PM

No.105681766

>>105681812

>>105681757

works fine for me with loras

Anonymous

6/23/2025, 6:37:17 PM

No.105681812

>>105681833

>>105681766

the problem isn't whether it works

Anonymous

6/23/2025, 6:39:01 PM

No.105681833

>>105681844

>>105681812

loras don't have slow motion for me :) no need to reply, I can tell you're a faggot

Anonymous

6/23/2025, 6:40:16 PM

No.105681844

>>105681833

We already discussed this and it was proven.

>>105681179

Wait, its real? He really hid artist tags and shit??

Anonymous

6/23/2025, 7:01:40 PM

No.105682011

>>105680719

imggen fags aren't smart enough to figure out sharding like based llm chads
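For reference, the simplest form of what LLM frontends do across cards is a layer-wise split: consecutive blocks go on whichever GPU still has room, and activations hop between cards. A minimal greedy sketch with hypothetical per-layer sizes (this is a naive pipeline-style split, not tensor-parallel sharding). Note the trade-off: for a single image the cards run one after another, so this lets a model that is too big for one card fit, but it does not by itself make one gen faster.

```python
from typing import List, Sequence


def split_layers(layer_sizes_gb: Sequence[float], gpu_budgets_gb: Sequence[float]) -> List[int]:
    """Greedily assign consecutive layers to GPUs; returns the GPU index per layer."""
    placement: List[int] = []
    gpu, used = 0, 0.0
    for i, size in enumerate(layer_sizes_gb):
        # Move to the next card once the current one cannot hold this layer.
        while gpu < len(gpu_budgets_gb) and used + size > gpu_budgets_gb[gpu]:
            gpu, used = gpu + 1, 0.0
        if gpu == len(gpu_budgets_gb):
            raise ValueError(f"layer {i} ({size} GB) does not fit on the remaining GPUs")
        placement.append(gpu)
        used += size
    return placement


# Hypothetical: 10 blocks of 1.5 GB split across a 12 GB card and an 8 GB card.
print(split_layers([1.5] * 10, [12.0, 8.0]))
```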

Anonymous

6/23/2025, 7:02:33 PM

No.105682020

Anonymous

6/23/2025, 7:08:01 PM

No.105682058

>>105681868

no, anon just didn't know that gens could produce coherent results without dictionary prompts.

e.g. picrel is an example of what comes out when chroma is fed random vectors.

Anonymous

6/23/2025, 7:10:19 PM

No.105682082

>>105682192

Anonymous

6/23/2025, 7:16:22 PM

No.105682124

>>105682869

>>105681008

>v29-32steps-randCfg vs v38-32steps-cfg4.5-1024px vs v38-detail-calibrated-32steps-cfg4.5-1024px

use this script so you won't have to write out what is what on 4chan; the labels get written directly on the image, which is more convenient

https://github.com/BigStationW/Compare-pictures-and-videos

>>105681008

The lighting is much more natural on v29; he really slopped that model after going for that "low step" nonsense. Sad

Anonymous

6/23/2025, 7:20:10 PM

No.105682145

>>105681868

>Wait, its real? He really hid artist tags and shit??

no I don't think so, he noticed he got anime pictures by writing random shit, but that's more because the model is just biased to output anime images no matter what

Anonymous

6/23/2025, 7:23:57 PM

No.105682173

>>105682308

>>105682135

Not consistently true.

Anonymous

6/23/2025, 7:25:10 PM

No.105682182

>>105683420

>>105680766

It just doesn't do the former. And for the latter, it did this.

Anonymous

6/23/2025, 7:26:25 PM

No.105682192

>>105682082

Why is her ass acting like it's something from a rhythm videogame?

>>105680766

>Im trying to see if it can match the same quality outputs as Flux.1 Kontext.

bad idea; we won't get kontext pro in the first place, so it doesn't matter. What will be important is the comparison between omnigen 2 and kontext dev

Anonymous

6/23/2025, 7:31:30 PM

No.105682220

I am starting to think Kontext Dev won't even happen considering how useful and unmatched the model is, so they will jew out with the API model for longer

Anonymous

6/23/2025, 7:33:00 PM

No.105682230

>>105682198

That in-context generation of Musk on their github is terrible though. Kontext pro is better at that. I doubt we'll get anything that bad from Flux Kontext dev.

Anonymous

6/23/2025, 7:33:20 PM

No.105682235

Stupid Wan, just make her uncross her legs

>>105682135

Just fyi I was prompting for the vintage 80s magazine look so the lighting didn't necessarily have to look natural at all. Still, v29 handled it a lot better, the other two are oversaturated to hell

Imagine the potential if Chroma were fine-tuned on instructions like Kontext...

1 - "Make her nude / remove her clothes"

2 - "Make her boobs bigger"

3 - "Make this woman futanari and make sure her cock is long and erect"

4 - "Fill this person with cum"

5 - "Make a blowjob scene featuring this woman"

(...)

Anonymous

6/23/2025, 7:52:05 PM

No.105682363

>>105682886

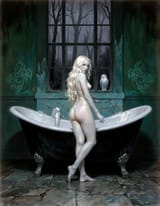

>>105681298

Nice, a bit of a Robert McGinnis feel to it, weird vertical lines though

Anonymous

6/23/2025, 7:54:56 PM

No.105682392

>>105682420

>>105681008

Nice third leg on the v38 detail calibrated kek

>>105682308

Without knowing what the exact prompt is, this comparison is kind of useless. It may be more biased by default, but when testing my usual prompt, where the first thing I ask for is for the photo to be amateur, I have found the opposite to be true. The model (barring the calibrated version, which is behind in merging as discussed in the Discord screenshot) is actually better at consistently producing photoreal skin than before.

Anonymous

6/23/2025, 7:56:42 PM

No.105682406

>>105682457

>>105682398

>Without knowing what the exact prompt is, this comparison is kind of useless.

you didn't share the prompt on your image either lol

Anonymous

6/23/2025, 7:58:53 PM

No.105682420

>>105684455

>>105682392

>Nice third leg on the v38 detail calibrated kek

>>105682398

And nice third arm on chroma v36 detail calibrated, I think the calibrated one is broken no?

Anonymous

6/23/2025, 7:59:10 PM

No.105682427

>>105682298

Is all you can think of coom?

Anonymous

6/23/2025, 8:00:59 PM

No.105682437

>>105679546

constraint breeds creativity as evidenced by this thread

Anonymous

6/23/2025, 8:01:27 PM

No.105682445

>>105682298

OmniGen is a 4b model and it's eating 20gb of my vram; there's no way we'll get something like that out of an 8.9b model, we don't have powerful enough gpus for that size
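Rough weight-only arithmetic for the model sizes in this thread. Bits per weight for the GGUF formats follow from their block layouts (32 weights plus an fp16 scale per block). SVDQuant is left out because its low-rank branch adds overhead that depends on the rank. This counts the diffusion weights only: text encoders, the VAE, and activations come on top, which is why observed VRAM use is higher. The 4B and 8.9B figures are taken from the post, not verified here.

```python
# Approximate storage cost per weight, in bits.
BITS_PER_WEIGHT = {
    "bf16": 16.0,
    "fp8": 8.0,
    "Q8_0": 8.5,  # 32 int8 weights + 1 fp16 scale = 34 bytes per block
    "Q5_0": 5.5,  # 32 5-bit weights + 1 fp16 scale = 22 bytes per block
    "Q4_0": 4.5,  # 32 4-bit weights + 1 fp16 scale = 18 bytes per block
}


def weight_gb(params_billion: float, fmt: str) -> float:
    # Decimal gigabytes (1e9 bytes) for the weights alone.
    return params_billion * BITS_PER_WEIGHT[fmt] / 8


for name, params in (("4B model", 4.0), ("8.9B model", 8.9)):
    sizes = ", ".join(f"{fmt} {weight_gb(params, fmt):.1f} GB" for fmt in BITS_PER_WEIGHT)
    print(f"{name}: {sizes}")
```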

Anonymous

6/23/2025, 8:03:26 PM

No.105682457

>>105682406

I did share a catbox including the prompt and tested it on more than one seed. The same also holds true for v38

https://desuarchive.org/g/thread/105586710/#q105589249

Anonymous

6/23/2025, 8:04:31 PM

No.105682464

>>105682472

>doesn't know what the communist logo is

DOA :(

Anonymous

6/23/2025, 8:05:47 PM

No.105682472

>>105682512

>>105682464

>communist logo

lel its called the hammer and sickle anonie

Anonymous

6/23/2025, 8:08:21 PM

No.105682487

>>105682308

I think it's possible that the optimal settings also change while the model is being trained. It might be more informative to find good settings for 39 and then test them with 29 etc.

>>105682472

based commie knowledger

Anonymous

6/23/2025, 8:14:47 PM

No.105682556

>>105682512

锤子与镰刀, 共产主义的象征 (hammer and sickle, the symbol of communism)

Anonymous

6/23/2025, 8:16:54 PM

No.105682576

Anonymous

6/23/2025, 8:18:55 PM

No.105682586

>>105682512

try to reference more commie shit like the Soviet Union. Prompt it as a coat badge or something

Anonymous

6/23/2025, 8:22:36 PM

No.105682614

>>105682677

https://xcancel.com/ostrisai/status/1937211561682354206

>Focusing solely on the no CFG version moving forward as it appears to be converging faster. Adding some training tricks to target the high frequency detail more. The high detail artifacts are hard to get rid of as the model just never learned that data.

Anonymous

6/23/2025, 8:28:28 PM

No.105682677

>>105682821

>>105682614

>no CFG

so no negative prompts? nah bruh this ain't it...

Anonymous

6/23/2025, 8:31:00 PM

No.105682701

chroma 39 is actually a lot better from my brief testing, over prior versions

might be placebo tho

Anonymous

6/23/2025, 8:40:01 PM

No.105682767

Anonymous

6/23/2025, 8:47:17 PM

No.105682821

>>105682859

>>105682677

Negative prompts are a cope like inpainting and loras

https://imgsli.com/MzkxNzQz v29 vs v39 comparison

Seed: "696969696969"

Positive: "Attractive Chinese woman standing in front of a house"

Negative: "low quality, bad anatomy, mutated hands and fingers"

Anonymous

6/23/2025, 8:50:48 PM

No.105682859

Anonymous

6/23/2025, 8:51:51 PM

No.105682869

Anonymous

6/23/2025, 8:54:05 PM

No.105682886

>>105683018

>>105682363

It's Michael Whelan actually, but yeah those lines are annoying.

>>105682841

If your flux/chroma prompt is not at least two paragraphs long, you're doing it wrong.

Anonymous

6/23/2025, 8:58:24 PM

No.105682936

>>105682917

this anon is right, it must be 69 paragraphs long to see the magic

Anonymous

6/23/2025, 8:59:43 PM

No.105682955

>>105683154

>>105681008

The hands look terrible, I don't get how this model got any hype in the first place.

Anonymous

6/23/2025, 9:00:42 PM

No.105682967

>>105683028

>>105682917

this. you need to get the ai into a good mood with an interesting story before genning

Anonymous

6/23/2025, 9:05:51 PM

No.105683018

>>105682886

>Michael Whelan

Based...

Anonymous

6/23/2025, 9:06:17 PM

No.105683023

>>105683062

Anonymous

6/23/2025, 9:06:47 PM

No.105683028

>>105682967

>tfw you have to read a little story to the AI before you convince it to generate quality images

Anonymous

6/23/2025, 9:09:52 PM

No.105683062

>>105683090

>>105683023

Damn, I hadn’t realized how different Chinese and Japanese people can look.

Anonymous

6/23/2025, 9:13:47 PM

No.105683090

>>105683062

all the east asians have subtle differences

chinese have bushy brows

koreans have giga chins

Anonymous

6/23/2025, 9:21:40 PM

No.105683145

>>105683153

Hell yeah Chris-Chan! What a hunk of a man he is.

>((number of images in dataset × number of repeats) / batch size) × epochs = total number of steps

Is this right?

Also, with less images you have to train more, but it is not a direct relation right?

If you have 100 images and you train for 2000 steps with good quality, you wouldn't get the same quality with 50 images and 4000 steps; you would have to do more than 4000 steps, right?

Also, can you anons give me feedback on my training settings please? I'm trying to train illustrious. I used both standard and lycoris, with the convolution network at 32 for lycoris

pastebin.com/pdnQG4fj

Anonymous

6/23/2025, 9:22:51 PM

No.105683153

>>105683184

>>105683145

>artificial boobs

that's funny because he recently became a troon so that fits

Anonymous

6/23/2025, 9:23:03 PM

No.105683154

>>105683170

>>105682955

go gen a picture where someone has seven fingers on one hand and three on the other. bonus points if either of them are shooting the bird and you don't use either a "shooting the bird" or "seven / three finger hand" lora.

i'll keep the meter running, but not even the big models can do this, anon.

Anonymous

6/23/2025, 9:25:31 PM

No.105683170

>>105683511

>>105683154

>seven fingers on one hand and three on the other

Use case for such prompt?

Anonymous

6/23/2025, 9:28:18 PM

No.105683184

>>105683210

Anonymous

6/23/2025, 9:32:05 PM

No.105683207

>mfw Chroma 39

Anonymous

6/23/2025, 9:32:29 PM

No.105683210

>>105683184

oh shit it's been 11 years? I really thought that was more recent than that

Anonymous

6/23/2025, 9:33:55 PM

No.105683220

>>105684302

>>105683151

>Also, with less images you have to train more, but it is not a direct relation right?

Smaller dataset = less to learn. No training setting can turn a bad dataset into a good one. Garbage in, garbage out.

Does Chroma know more celeb names than Flux or is it still cucked in that regard?

Anonymous

6/23/2025, 9:39:38 PM

No.105683278

>>105683262

It knows a few more celebrities (Will Smith, DiCaprio...), but I don't think it knows that many more; as for characters and artist tags, Chroma doesn't seem to have much more knowledge than Flux (it's a shame really)

Anonymous

6/23/2025, 9:48:38 PM

No.105683354

how long did it take until you got bored of this? Will we just go outside eventually? Though maybe it's like calling dial-up internet a fad.

Anonymous

6/23/2025, 9:50:49 PM

No.105683375

Anonymous

6/23/2025, 9:51:32 PM

No.105683386

>>105683364

For videos about 5 gens.

For images I've yet to grow bored. Chroma coming out with a new checkpoint every 4 days helps as well.

i mean base omnigen 2 is obviously lacking but since it's apache-2.0 surely we can finetune this to do some good shit right?

Anonymous

6/23/2025, 9:55:50 PM

No.105683420

>>105682182

really wondering if it's possible to prompt it somehow to get the proper output: retaining the quality/style with no degradation while removing speech bubbles, text and watermarks.

>>105682198

by quality, i just meant following/adhering to the prompt when editing. it's able to remove backgrounds and text from images and isolate objects. It just has to be prompted some way to achieve it and i want to find out if anyone has a clue how. Kontext dev (downgraded version) might never come out or be delayed for a year, who knows.

Anonymous

6/23/2025, 9:58:15 PM

No.105683446

>>105683515

>>105683364

if you've grown 'bored' you're either creatively bankrupt or never were that interested in the first place. Imagine having a tool at your disposal that lets you generate basically anything you want and calling it a fucking fad.

How in the

you know what, here's your (You)

Anonymous

6/23/2025, 9:59:55 PM

No.105683459

>>105683392

im really hoping so, this way it would be super easy to fill in missing parts of an image, like characters missing hands because of a speech bubble/text, remove unnecessary text and watermarks, and so much more. I really want the chroma dev, or any other dev like the pony one, to stop wasting their time on those models and work on this instead. It would be much better to have a local editing/photoshop/inpainting model like kontext than another txt 2 img model that will fade in a month.

Anonymous

6/23/2025, 10:01:02 PM

No.105683468

>>105683474

im out of the loop. what's the difference between detail-calibrated and regular chroma? why are there two versions now

Anonymous

6/23/2025, 10:01:21 PM

No.105683472

>>105683392

obviously, it's not a bad model at all, and who knows how bad kontext dev will be, so I believe this'll be the SOTA tool to use until a new challenger comes along (desu I wouldn't mind them keeping up the effort and going for an Omnigen 3)

Anonymous

6/23/2025, 10:01:39 PM

No.105683474

>>105683468

the former has more diapers in the dataset desu

Anonymous

6/23/2025, 10:01:51 PM

No.105683476

Anonymous

6/23/2025, 10:05:53 PM

No.105683511

>>105683170

imagine you knew someone who could draw exquisite, detailed hands, so you asked them to draw a hand with more than the typical number of fingers, and they not only continued to draw only anatomically perfect five fingered hands, they couldn't even imagine any other type of hand, even when given explicit direction.

you might wonder whether they were just an idiot savant and not really a great artist, right?

that's everything, right now. some are just more passable fakes than others.

>>105683262

basically none, yet. that'll converge late, if ever.

>>105683446

sorry I should have specified this genre. I use these tools for art everyday. Have fun with your degenerate furry imagination if that's what's creative to you.

Anonymous

6/23/2025, 10:07:31 PM

No.105683532

>>105683553

Anonymous

6/23/2025, 10:08:49 PM

No.105683544

>>105683575

>>105683515

frig I meant civitai type 5s goonslop not my down syndrome sdxl gens

Anonymous

6/23/2025, 10:09:38 PM

No.105683553

>>105683532

I could teach her...

Anonymous

6/23/2025, 10:11:55 PM

No.105683575

>>105683544

>>105683515

what graphics card do you use?

Anonymous

6/23/2025, 10:12:50 PM

No.105683584

>>105683392

I tried it to remove the censor bars of a hentai image and it didn't work :(

Anonymous

6/23/2025, 10:18:47 PM

No.105683636

>>105683657

hmm not sure if this is the right answer

Anonymous

6/23/2025, 10:21:36 PM

No.105683657

>>105683636

she might be using american common core math

Anonymous

6/23/2025, 10:27:06 PM

No.105683694

>>105684070

>>105678558 (OP)

offtopic but does any ai voice to voice actually work for audio porn? I tried using a comfyui workflow for rvc voice to voice but it sounded very bad

How do you guys get pony-based checkpoints to produce hands that don't look like ground meat?

Anonymous

6/23/2025, 11:05:26 PM

No.105683980

>>105683968

my eternal soul in a faustian deal

Anonymous

6/23/2025, 11:15:38 PM

No.105684063

are there working omnigen2 nodes for comfyui yet? the Yuan-ManX repo is totally nonfunctional even with the relative path PR fix. there's like a dozen other showstopping bugs after that one

Anonymous

6/23/2025, 11:16:37 PM

No.105684070

>>105683694

The technology is not there yet, none can handle moans and screams that well. Besides, most newer local models aren't even for voice to voice but for text to voice. Some can do voice cloning but it mostly sucks. RVC is only ever good if you've used some other text to speech model and it sounds ALMOST like your desired voice (with similar intonations and whatnot), but not quite.

Anonymous

6/23/2025, 11:22:48 PM

No.105684118

We doing redheads again.

I wish Chroma would take more of my Flux LORAs.

Oh well.

Anonymous

6/23/2025, 11:23:00 PM

No.105684119

>>105684110

Scaled looks better

>>105684134

scaled has much more background bokeh though, ugh

>>105684110

>t5 fp8

why? if you can run Chroma, you can definitely run t5 fp16
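For a rough sense of what the text encoder precision costs in memory, a weights-only estimate is parameters × bytes per parameter. The parameter counts below (about 4.7B for the T5-XXL encoder, about 8.9B for Chroma) are approximate assumptions, and the estimate ignores activations and framework overhead.

```python
# Approximate parameter counts (assumptions, rounded).
T5_XXL_ENCODER_PARAMS = 4.7e9
CHROMA_PARAMS = 8.9e9

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "fp8": 1.0,
    # GGUF Q8_0: 32 int8 weights plus one fp16 scale per block = 34 bytes / 32 weights
    "q8_0": 34 / 32,
}

def weight_gb(params: float, dtype: str) -> float:
    """Weights-only footprint in GB (1e9 bytes); excludes activations and overhead."""
    return params * BYTES_PER_PARAM[dtype] / 1e9

for dtype in ("fp16", "fp8"):
    print(f"T5-XXL encoder {dtype}: {weight_gb(T5_XXL_ENCODER_PARAMS, dtype):.1f} GB")
print(f"Chroma q8_0: {weight_gb(CHROMA_PARAMS, 'q8_0'):.1f} GB")
```

By this estimate the fp16 encoder weighs about as much as a Q8 Chroma, while fp8 halves it.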

Anonymous

6/23/2025, 11:30:22 PM

No.105684187

>>105683968

by switching to illustrious

Anonymous

6/23/2025, 11:31:15 PM

No.105684197

>>105684204

>>105684145

Can't you just unblur it with negs? I thought chroma isn't as cucked in that regard as flux

Anonymous

6/23/2025, 11:32:30 PM

No.105684204

>>105684213

>>105684197

>Can't you just unblur it with negs?

nah it doesn't work that well, maybe with NAG but I didn't try that

>I thought chroma isn't as cucked in that regard as flux

it's better than Flux, but it's starting to look more and more like Flux through epochs

>>105682308

Anonymous

6/23/2025, 11:33:47 PM

No.105684212

>>105684162

Yup, plus unlike quantizing Chroma itself, quantizing the text encoder makes a massive difference in output quality

Anonymous

6/23/2025, 11:33:52 PM

No.105684213

>>105684246

>>105684204

it's gonna be funny as hell if he spent $50k to gradually transform Schnell back into Schnell again at 50 epochs

Anonymous

6/23/2025, 11:34:27 PM

No.105684215

>>105684145

it's supposed to have slight background blur because I prompted for it

>Camera details include a focus on subject in a medium close-up shot with a shallow depth of field blurring the background slightly and highlighting her figure and smiling expression.

>>105684162

it's the q8 gguf run on 16gb. Just testing out of curiosity

Anonymous

6/23/2025, 11:35:34 PM

No.105684221

>>105684134

No it doesn't? Just slops the output

>>105684213

he's so fucking retarded, Schnell was so slopped because it was running on a few steps, and what did he do? he transformed Chroma so that it also works on a few steps, what's the fucking point to undistill a model if at the end you're distilling it again?

>>105684246

>what's the fucking point to undistill a model if at the end you're distilling it again?

yeah that's absolutely retarded but I partially blame people whining about the speed

low information morons want a non-distilled model which means cfg and a good amount of steps

but they don't want to wait the extra time that entails
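For context on the speed complaint: with classifier-free guidance the model is run on both the positive and the negative/empty prompt at every step and the two predictions are blended, so a CFG model costs roughly two forward passes per step. A distilled model with guidance baked in runs one pass per step, and a few-step distilled one also needs far fewer steps. A minimal pure-Python sketch; the 30-step and 8-step figures are hypothetical examples.

```python
from typing import List

def cfg_combine(uncond: List[float], cond: List[float], scale: float) -> List[float]:
    """Classifier-free guidance: push the prediction away from the unconditional one."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]

def forward_passes(steps: int, uses_cfg: bool) -> int:
    """Model evaluations per image: CFG needs a conditional and an unconditional pass per step."""
    return steps * (2 if uses_cfg else 1)

print(cfg_combine([0.0, 1.0], [1.0, 1.5], 4.0))  # [4.0, 3.0]
print(forward_passes(30, uses_cfg=True))         # 60
print(forward_passes(8, uses_cfg=False))         # 8
```

At scale 1.0 the blend reduces to the conditional prediction alone, which is why guidance only adds cost when the scale is above 1.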

>>105684264

>I partially blame people whining about the speed

But that's their fault for being vramlets, why should the modelmaker, who likely has his own 5090, concern himself with those users?

Anonymous

6/23/2025, 11:44:22 PM

No.105684280

do you use the detail tweaker loras or are they snake oil?

Anonymous

6/23/2025, 11:45:41 PM

No.105684294

>>105683968

boxing gloves

Anonymous

6/23/2025, 11:45:47 PM

No.105684296

>>105684278

Plus at the end of the day you've got SVDQuant and other future things that would improve it. It was shocking that he doubled down on "improving" gen times. Though I don't think the model is worse or distilled like that other anon says.

Anonymous

6/23/2025, 11:46:14 PM

No.105684301

>>105684246

He's added a shitton of furfaggotry to the model. I think that was his main goal

Anonymous

6/23/2025, 11:46:14 PM

No.105684302

>>105683220

But what I mean is whether the amount of training needed isn't directly proportional to the number of images

Anonymous

6/23/2025, 11:46:52 PM

No.105684308

>>105684412

>>105684246

what's the max amount of steps chroma uses now at v39?

Anonymous

6/23/2025, 11:47:28 PM

No.105684313

>>105684264

>yeah that's absolutely retarded but I partially blame people whining about the speed

I don't, he should've trained the model correctly until the end and then distilled v50 if he wanted to, what he did is completely braindead

Anonymous

6/23/2025, 11:48:05 PM

No.105684315

>>105684358

>>105684278

I dunno, popularity and visibility if your model can run on potatoes? But it doesn't matter. Most people can't put in the hard work to get FLUX running in any variant to this day but it didn't stop the model from gaining steam. Obviously you don't want a model that needs a server or a Blackwell supercomputer to run, but even if it requires 5080-5090-level hardware, you'll get a community that does the downscaling work for you. No matter how slopped or lossy that makes the output, the morons will eat it up regardless. Catering to them early does you nothing.

Anonymous

6/23/2025, 11:50:38 PM

No.105684334

>>105684368

>>105678914

With Flux.1 Dev, my best results are always between 2700-2900 steps regardless of how many images I use (25 to 150+), so I never train more than 3k. Style LoRAs (no captions) usually top out at 500-700 so I never go past 1k.

No idea what you're actually trying to do with what model though.
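Taking the ~2700-2900 step sweet spot above at face value, the same steps arithmetic can be turned around to pick an epoch count for a target. A sketch, assuming repeats = 1, batch size 1 and a hypothetical 2800-step target; a partial last batch is again assumed to count as a full step.

```python
import math

def epochs_for_target(num_images: int, repeats: int, batch_size: int, target_steps: int) -> int:
    """Smallest epoch count whose total steps reach target_steps."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return math.ceil(target_steps / steps_per_epoch)

for n in (25, 75, 150):
    print(n, "images ->", epochs_for_target(n, 1, 1, 2800), "epochs")
```

This prints 112, 38 and 19 epochs, so under a fixed step budget a 25-image set gets cycled roughly six times as often as a 150-image one.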

Anonymous

6/23/2025, 11:52:58 PM

No.105684358

>>105684315

>Most people can't put in the hard work to get FLUX running in any variant to this day but it didn't stop the model from gaining steam.

this, Chroma got popular before he did this distillation stuff, I don't know why he wanted to cater to those impatient vramlets, fuck them, quality first

Anonymous

6/23/2025, 11:54:33 PM

No.105684368

>>105684488

>>105684334

how can you fucking simp over an e-celeb. go out, plenty of cute women everywhere

Anonymous

6/23/2025, 11:56:32 PM

No.105684382

ARE WE FEELIN WEREWOLF DICK'D OR SNAKE OILED TODAY?

>>105684308

>what's the max amount of steps chroma uses now at v39?

The max I've needed to use is 40, minimum 16. CFG from 3.5 to 6.

Anonymous

6/24/2025, 12:05:30 AM

No.105684450

>>105684480

>>105684412

there's no way that's a chroma image, it's so slopped lol

Anonymous

6/24/2025, 12:06:02 AM

No.105684455

>>105682420

>I think the calibrated one is broken no?

Not necessarily broken, it just doesn't like my prompt and seed. Look at the whole seed, it's actually closer to the slopped v28. Now for v38 the detail-calibrated one is a bit closer to the non-detailed version as intended, but I've found it's still behind. Haven't tested v39, it probably improved a bit more on that aspect as well.

>>105684412

I see what you did there

Anonymous

6/24/2025, 12:09:39 AM

No.105684480

>>105684450

it's exactly what I prompted for

>>105684473

gj anon. Interrogated with JoyCaption beta one

Anonymous

6/24/2025, 12:10:45 AM

No.105684488

>>105684368

Gen your face when I drop an $8k GPU into my $7k PC to batch images of my babygirl.

Anonymous

6/24/2025, 12:11:18 AM

No.105684492

Anonymous

6/24/2025, 12:12:22 AM

No.105684499

>>105684514

Anonymous

6/24/2025, 12:14:25 AM

No.105684514

Anonymous

6/24/2025, 12:15:12 AM

No.105684522

mfw this thread

Anonymous

6/24/2025, 12:32:29 AM

No.105684633

>>105684473

haven't seen this image since i was in middle school, which was back in like 2002? you must be a fossil

Anonymous

6/24/2025, 12:35:36 AM

No.105684657

>>105684677

Any good SD models or anything else that I can use for img2img upscaling or reimagining of equirectangular planet textures like the ones NASA hosts? I wanna try it on some game assets, tried ChatGPT so far but it's junk. I'll probably end up manually painting it anyway later but I'm curious.

Anonymous

6/24/2025, 12:37:21 AM

No.105684677

>>105684657

try kontext or omnigen 2

Looking to buy a better pc than what I have now (mainly for stable diffusion generation, I don't care about gaming), which of these is best value for money?

https://allegro.pl/oferta/bsg-raptor-komputer-gamingowy-intel-core-i7-32gb-ram-1tb-ssd-rtx-3060-win10-15845303509

$730: Intel Core i7-4770, GeForce RTX 3060 12GB, 32GB RAM, 1TB SSD

https://allegro.pl/oferta/intel-core-i3-14100f-rtx5060ti-16gb-nm620-512gb-32gb-17527702172

$1000: Intel Core i3-14100F, RTX 5060 Ti 16GB, 32GB RAM, 512GB SSD
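Since VRAM is what the replies keep pointing at, here is a quick dollars-per-GB-of-VRAM comparison of the two listed offers. Prices are the ones quoted in the post; this ignores the CPU, RAM, storage and the speed difference between the two GPUs.

```python
def usd_per_gb_vram(price_usd: float, vram_gb: float) -> float:
    """Price divided by GPU memory; a crude value metric for local diffusion."""
    return price_usd / vram_gb

offers = {
    "i7-4770 + RTX 3060 12GB": (730, 12),
    "i3-14100F + RTX 5060 Ti 16GB": (1000, 16),
}

for name, (price_usd, vram_gb) in offers.items():
    print(f"{name}: ${usd_per_gb_vram(price_usd, vram_gb):.2f} per GB of VRAM")
```

The two come out nearly even (about $60.83 vs $62.50 per GB), so the deciding factors are the 16GB card and the much newer CPU, not the per-GB price.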

Anonymous

6/24/2025, 12:41:12 AM

No.105684709

>>105684681

>which of these is best

the one with a 24gb vram card

Anonymous

6/24/2025, 12:42:40 AM

No.105684718

>>105684681

>buying prebuilts

>here

>on allegro of all places

anon waht are u doing

stahp

one of those literally has an old ass haswell cpu in it

Anonymous

6/24/2025, 12:44:34 AM

No.105684727

>>105684748

fuck chroma, back to svdq flux. 8 seconds for this shit @ 30 steps on my rusted 3090 from 1982. (distant shouting can be heard: "lora hellhole!" ..wha?) gonna slop the living daylights out of this. cats, elves and tigers. chroma can suck my binary balls

Anonymous

6/24/2025, 12:46:55 AM

No.105684748

>>105685008

>>105684727

>fuck chroma, back to svdq flux. 8 seconds for this shit @ 30 steps on my rusted 3090 from 1982.

but you can run chroma with svdq though?

Anonymous

6/24/2025, 12:54:02 AM

No.105684804

>>105685023

>>105684681

>intel core i7-4770, gpu GeForce RTX 3060

Anonymous

6/24/2025, 12:57:29 AM

No.105684825

>>105685257

Total noob here. If i want to use real pictures of girls to blend it together and want it to look as realistic as possible, which free service should I use? LMArena is decent but it changed the faces too much when I ask it not to.

Anonymous

6/24/2025, 12:59:08 AM

No.105684841

>>105684681

Ain't no way nigga lmao. This is actual e-waste, not even memeing. Idk how the second-hand market is in Poland, but here in Czech Rep. I had no issues hunting used parts one by one or in small bundles and building a pc that way. Shit like what you posted is a joke and you are overpaying a fuckload.

Anonymous

6/24/2025, 1:00:08 AM

No.105684850

>>105684908

>>105684513

nipple warning

Anonymous

6/24/2025, 1:01:46 AM

No.105684858

Anonymous

6/24/2025, 1:09:19 AM

No.105684908

>>105684977

>>105684850

I know asians love to do plastic surgery but even with their standard, that skin looks way too plastic lol

Anonymous

6/24/2025, 1:17:59 AM

No.105684972

>>105685008

Funny little creatures.

Anonymous

6/24/2025, 1:18:36 AM

No.105684977

>>105684984

>>105684908

the world is plastic

Anonymous

6/24/2025, 1:19:21 AM

No.105684984

>>105684977

>the world is plastic

*microplastic :^()

Anonymous

6/24/2025, 1:22:20 AM

No.105685008

>>105685092

>>105684748

you can. rocca made new chroma svd quants but it's very much experimental.

>>105684972

all equally repulsive lol. have you tried that pixelwave schnell model?

Anonymous

6/24/2025, 1:24:07 AM

No.105685023

>>105684804

>laughs in i7 8700 and 4070tis

Anonymous

6/24/2025, 1:27:11 AM

No.105685046

>>105683151

Please any help? For what I see more than 4000 overcooks the model.

The images also look too perfect, like the Illustrious model still has too much influence compared to my dataset. Something's missing; is there anything I could change in my training settings or my dataset, or something I could tweak?

Anonymous

6/24/2025, 1:36:13 AM

No.105685092

>>105685137

>>105685008

>have you tried that pixelwave schnell model

No, haven't. Is it good?

I'll try that tomorrow then.

Anonymous

6/24/2025, 1:38:38 AM

No.105685102

Anonymous

6/24/2025, 1:45:59 AM

No.105685137

>>105685092

I did OOM several times, that much I can say. it was the full model tho and an upscaling workflow. need to test it again - 8 steps is a solid argument.

Anonymous

6/24/2025, 1:48:52 AM

No.105685160

>>105685228

>>105685124

i posted the pancake one in the /b/degen thread and got like 20 (You)'s, kek

Anonymous

6/24/2025, 1:49:29 AM

No.105685168

Anonymous

6/24/2025, 1:53:36 AM

No.105685202

>>105685124

these are so good

Anonymous

6/24/2025, 1:55:56 AM

No.105685216

too realistic tone it down chroma

>>105685124

would

>>105685160

Thief!!!!

Mind sharing a link to the post?

Anonymous

6/24/2025, 1:59:10 AM

No.105685237

>>105685228

it was a few days ago, sorry it's long gone and /b/ threads aren't archived

Anonymous

6/24/2025, 1:59:53 AM

No.105685245

>>105685228

NTA but can confirm many keks were had in degen

Anonymous

6/24/2025, 2:01:56 AM

No.105685257

>>105684825

>which free service should I use?

The one connected to the graphics card in your computer.

Anonymous

6/24/2025, 2:06:33 AM

No.105685292

>>105685334

>>105685228

It was also posted in /v/, but that one got deleted in 12 minutes by the jannies.

https://arch.b4k.dev/v/thread/713346891/#713369668

Anonymous

6/24/2025, 2:13:43 AM

No.105685334

>>105685292

i was wondering if the jannies would delete a pulpy strawberry

Anonymous

6/24/2025, 2:19:53 AM

No.105685375

>>105684513

What was the prompt for this?

Anonymous

6/24/2025, 2:30:44 AM

No.105685448

Anonymous

6/24/2025, 2:31:40 AM

No.105685456

Anonymous

6/24/2025, 7:54:28 AM

No.105687191

limit