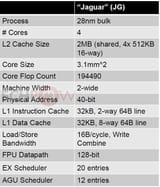

AMD Jaguar

Why did Sony and Microsoft opt for this? What made it special? And were these basically the equivalent of glorified Intel Atom cores?

Anonymous

6/24/2025, 6:09:44 AM

No.105686614

It wasn't about the CPUs.

The CPUs were "good enough". The bar for that is kinda low; look at the Cell garbage Sony pulled. You can force console devs to work with just about any garbage, apparently.

Kinda lucky for PC gaming, too. Consoles are the lowest common denominator. If consoles hadn't had many slow, shitty cores, game engines would not have been jobbified as quickly as they have been by now. That was largely driven by the consoles' garbage CPUs.
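For readers unfamiliar with "jobbified" engines: the idea is that per-frame work gets split into many small, independent jobs that a pool of worker threads can spread across however many cores exist, instead of running on one or two big threads. Below is a minimal sketch of that pattern, assuming a made-up entity-position update as the workload; the function names and worker count are hypothetical. Real engines do this in C++ with native threads; in CPython the GIL keeps CPU-bound threads from actually running in parallel, so this only shows the structure.

```python
from concurrent.futures import ThreadPoolExecutor


def update_chunk(positions, velocities, dt, start, end):
    """One hypothetical 'job': integrate positions for entities in [start, end)."""
    for i in range(start, end):
        positions[i] += velocities[i] * dt


def run_frame(positions, velocities, dt, pool, num_jobs):
    """Split one frame's entity update into independent jobs, then wait for all of them."""
    n = len(positions)
    if n == 0:
        return
    chunk = (n + num_jobs - 1) // num_jobs  # ceiling division so every entity is covered
    futures = [
        pool.submit(update_chunk, positions, velocities, dt, s, min(s + chunk, n))
        for s in range(0, n, chunk)
    ]
    for f in futures:
        f.result()  # acts as the end-of-frame barrier and re-raises any job exception


if __name__ == "__main__":
    positions = [0.0] * 1000
    velocities = [float(i) for i in range(1000)]
    # Hypothetical worker count, roughly one per core on a many-small-core CPU.
    with ThreadPoolExecutor(max_workers=8) as pool:
        run_frame(positions, velocities, 0.5, pool, num_jobs=8)
    print(positions[10])
```

Because each job touches a disjoint slice, no locking is needed; the only synchronization is waiting on every future before the frame ends.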

Sure, Intel could have sold them a better CPU. Thing is, AMD was cheap, had a CPU and a GPU, had a team sitting around that could build and integrate an entire SoC, and was willing to go out of their way to build whatever they needed.

Intel would have charged an arm and a leg, and their GPUs are utter trash to this day.

Nvidia is such a massive pain in the ass that NOBODY wants to work with them, and both Sony and Microsoft know it.

AMD's kinda the default then.

That both Microsoft and Sony have stuck with AMD to this day shows that AMD's semi-custom team keeps its customers happy.

Anonymous

6/24/2025, 6:12:24 AM

No.105686631

>>105686614

You know Novideo is bad when even Apple dropped them.

Anonymous

6/24/2025, 6:25:38 AM

No.105686705

>>105686419 (OP)

It was available

>arm

They'd have to build a custom uarch & SoC. Costly, with questionable benefits.

>intel

lol

>powerpc

lol

>mips

same as arm

Anonymous

6/24/2025, 9:08:39 AM

No.105687622

>>105686419 (OP)

best bang for your buck, transistor-count-wise. by using Jaguar they can get more performance for the same size chip, or make the die smaller and get the same performance to save money.

the Jaguar core is absolutely tiny, even on an older process like TSMC 28nm
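To put numbers on "make the die smaller to save money": a smaller die means more chips per wafer, and with a simple defect model a larger fraction of them work. Below is a rough sketch using the common gross-dies-per-wafer approximation (wafer area over die area, minus an edge-loss term) and a Poisson yield model. The die areas and defect density are hypothetical round numbers, not actual PS4 or Xbox One figures, and the model ignores scribe lines and edge exclusion.

```python
import math

WAFER_DIAMETER_MM = 300.0  # standard 300 mm wafer


def dies_per_wafer(die_area_mm2, wafer_diameter_mm=WAFER_DIAMETER_MM):
    """Gross-die approximation: wafer area / die area minus an edge-loss term."""
    if die_area_mm2 <= 0:
        raise ValueError("die area must be positive")
    r = wafer_diameter_mm / 2
    gross = math.pi * r * r / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(gross - edge_loss))


def good_dies(die_area_mm2, defects_per_mm2):
    """Scale gross dies by a simple Poisson yield model, Y = exp(-D0 * A)."""
    yield_fraction = math.exp(-defects_per_mm2 * die_area_mm2)
    return dies_per_wafer(die_area_mm2) * yield_fraction


if __name__ == "__main__":
    D0 = 0.001  # hypothetical defect density: 0.001 per mm^2 (0.1 per cm^2)
    for area in (350.0, 300.0):  # hypothetical SoC die sizes in mm^2
        print(f"{area:.0f} mm^2: {dies_per_wafer(area)} gross, "
              f"{good_dies(area, D0):.0f} good")
```

Shrinking the die wins twice here: more candidates per wafer and a higher chance each one is defect-free, which is the cost argument for spending the area budget on small cores.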

Anonymous

6/24/2025, 9:17:10 AM

No.105687686

>>105686419 (OP)

>28nm

I thought high tech consoles would have a more high tech CPU

Anonymous

No.105687706

>>105687686

the ps4 and xbone came out in 2014

Anonymous

6/24/2025, 9:37:11 AM

No.105687794

>>105687706

Well 2014 was only like 3-4 years ago so...

FUCK

Anonymous

6/24/2025, 9:40:14 AM

No.105687807

>>105687706

yes, didn't we already have 10nm consumer CPUs then?