/lmg/ - Local Models General

Anonymous

6/24/2025, 2:24:04 PM

No.105689390

►Recent Highlights from the Previous Thread:

>>105681538

--Paper: Drag-and-Drop LLMs: Zero-Shot Prompt-to-Weights:

>105686014 >105686064 >105686080 >105686529

--Papers:

>105686227 >105687733

--Challenges of using LLMs as video game agents in Pokémon environments:

>105685606 >105685624 >105685632 >105685679 >105685728 >105685856 >105685965 >105686068 >105686194 >105688488 >105688498 >105688505 >105688507 >105685653

--DeepSeek-R1 671B performance comparison on low-end hardware using different llama.cpp backends:

>105688247 >105688269 >105688291

--Discussion around LlamaBarn, Ollama's divergence from llama.cpp, and usability improvements in model serving tools:

>105682647 >105682703 >105682731 >105682745 >105682833 >105682846 >105683347 >105682882 >105683117 >105683331 >105683363 >105683401 >105683503 >105687438 >105688703 >105688849

--Comparison of voice cloning tools and techniques for improved emotional and audio fidelity:

>105685897 >105685934 >105685961

--LLM deployment options for RTX A5000 clusters using quantization and pipeline parallelism:

>105687473 >105687524 >105687643

--LLMauthorbench dataset for studying code authorship attribution across models:

>105688324

--Consciousness localization problem under computationalism and the Universal Dovetailer framework:

>105684402 >105684720 >105684889 >105684897 >105684904 >105685022 >105685354 >105685358 >105685366 >105685372 >105685516 >105685576 >105685434 >105685674 >105685791

--Behavioral quirks and prompt sensitivity of Mistral Small 3.2 variants explored through dream sequences:

>105682349 >105682382 >105682432 >105682499 >105682533 >105684446

--Mistral Saba deprecation signals potential evolution toward Mistral Small 3.2 architecture:

>105688925

--Rin-chan and Mikuplush (free space):

>105683160 >105685322 >105686106 >105688300 >105688383 >105688993 >105689241

►Recent Highlight Posts from the Previous Thread:

>>105681543

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

6/24/2025, 2:27:08 PM

No.105689419

>>105689552

petition for mods to "FUZZ" lmg threads, make every pic posted in threads with lmg in subject be black squares.

►Recent Highlights from the Previous Thread:

>>105681538

--Total migger death

--Total tranny death

--Total nigger death

--Total kike death

►Recent Highlights from the Previous Thread:

>>105681538

Why?: Because you will never be a woman

Fix: Consider suicide

Anonymous

6/24/2025, 2:28:32 PM

No.105689432

>>105689454

ITT: OP continues to be bewildered for being called out as the troon he is after posting this gay video in OP

Anonymous

6/24/2025, 2:28:59 PM

No.105689435

>>105689545

>>105689394

I do wonder what kind of images they trained it on.

It's so good that it can recognize a pussy slip in an otherwise completely normal image. When it sees that it spergs out but if you censor it it describes the image as usual.

Anonymous

6/24/2025, 2:29:41 PM

No.105689440

>>105691711

>>105689416

>Culture warriors can fuck off to Twitter.

Anonymous

6/24/2025, 2:30:30 PM

No.105689454

>>105689462

>>105689489

>>105689431

>>105689432

Just fuck off already.

Anonymous

6/24/2025, 2:30:58 PM

No.105689458

>samefagging this hard

oh he mad

Anonymous

6/24/2025, 2:31:19 PM

No.105689462

>>105689454

You are the unwanted one here, troony, back to discord

Anonymous

6/24/2025, 2:32:11 PM

No.105689469

Tuesday my beloved

Anonymous

6/24/2025, 2:32:12 PM

No.105689470

>>105689484

>>105689492

Are ~30B models any good for coding small (up to 4k lines) projects (on lua though). I have only 12GB of VRAM.

Anonymous

6/24/2025, 2:35:01 PM

No.105689484

>>105689548

>>105689470

qwen2.5 coder is exceptionally good for its size, qwen3 version is supposedly in the works. You probably won't get it to one shot a 4k line script but it will write individual functions just fine if you tell it what you want.

I never tried it with lua though.

Anonymous

6/24/2025, 2:35:33 PM

No.105689489

>>105689538

>>105689454

Why are you angry at me? I didn't force you to cut your dick off. You did it.

Anonymous

6/24/2025, 2:35:53 PM

No.105689492

>>105689548

>>105689470

Qwen 3 30B should work reasonably well. Small models are decent for short fragments like that and Lua should be present in the data enough for it to know it without too many errors as long as you don't go below Q4.

Anonymous

6/24/2025, 2:43:01 PM

No.105689538

>>105689435

To clarify, the original image in that screenshot wasn't censored. I just censored it before posting it here.

The Gemma Team clearly trained it on a decent amount of NSFW photos and illustrations, as well as some medical imagery (which they probably increased in MedGemma 4B).

Anonymous

6/24/2025, 2:44:08 PM

No.105689548

>>105689590

>>105689754

>>105689484

>>105689492

Thanks. What about devstal and glm4?

Anonymous

6/24/2025, 2:44:25 PM

No.105689552

>>105689419

I once wrote a userscript that did something similar but it stopped working, I will post it here if I ever make it again.

It basically blurred every image in /lmg/ and would unblur it when clicked on (for the rare instances that something relevant was being posted)

It would be better if jannies did something about the degeneracy but they won't.

Anonymous

6/24/2025, 2:47:49 PM

No.105689572

>>105689545

I know yours wasn't, I'm just saying that they have a pretty good dataset when it can recognize lewd details in an otherwise normal image.

Anonymous

6/24/2025, 2:50:16 PM

No.105689590

>>105689604

>>105689548

Devstral should be ok but GLM4 is kind of dated at this point being from last year.

Anonymous

6/24/2025, 2:51:57 PM

No.105689604

>>105689631

>>105689590

it's from two months ago bro

Anonymous

6/24/2025, 2:55:40 PM

No.105689631

Anonymous

6/24/2025, 2:56:24 PM

No.105689638

>>105689674

>>105689545

how do you send an image in sillytavern so it remains in context? it always tries to send it outside the convo with a stupid prompt from an extension

Anonymous

6/24/2025, 2:57:21 PM

No.105689647

>>105689431

That must have really struck a nerve,

Anonymous

6/24/2025, 3:01:10 PM

No.105689674

>>105689686

>>105689638

If you attach images to user or assistant messages, they'll remain in the conversation log. If you attach them to system messages, they won't. I'm not doing anything special there.

Anonymous

6/24/2025, 3:02:35 PM

No.105689686

>>105689674

huh okay i must be retarded then

Anonymous

6/24/2025, 3:03:14 PM

No.105689695

>>105689783

It is kind of crazy how even aicg has more interesting offtopic anime girl pictures than this place. This constant spam of the same mediocre staple green haired girl design is so tiresome. And then the faggot has to show everyone he has a collection of dolls. I mean holy fucking shit OG 4chan would convince you to kill yourself on a livestream you disgusting faggot.

Anonymous

6/24/2025, 3:08:51 PM

No.105689746

kek

Anonymous

6/24/2025, 3:09:38 PM

No.105689754

>>105689548

I had troubles with repetition using glm4 compared to qwen2.5 coder. I didn't really mess around with samplers though.

Anonymous

6/24/2025, 3:13:01 PM

No.105689783

>>105689808

>>105689695

you have no idea what "og 4chan" was or wasn't since that was literally years before you were even born you mentally ill zoomer

Anonymous

6/24/2025, 3:15:08 PM

No.105689808

>>105689783

i am sorry your dad raped you when you were a kid. now you have to play with dolls and push your mental illness onto others. but it is never too late to kill yourself so please do it. we believe in you.

Anonymous

6/24/2025, 3:52:23 PM

No.105690146

>>105690777

>>105691774

Anonymous

6/24/2025, 3:53:31 PM

No.105690155

>>105690256

>>105689431

>dead

>troon mod deletes this

>why do you keep talking about troons

It is a mystery

It's that time of the year again.

https://x.com/osanseviero/status/1937453755261243600

>Feedback requested!

>

>What do you want for our next Gemma models? Which sizes should we target and why?

Anonymous

6/24/2025, 3:58:40 PM

No.105690204

Anonymous

6/24/2025, 3:59:20 PM

No.105690212

>>105690248

>>105690177

I don't care about gemma, this shit is so fucking cucked

Anonymous

6/24/2025, 4:01:12 PM

No.105690233

>>105690177

1000b

itd be funny i think

Anonymous

6/24/2025, 4:02:46 PM

No.105690247

>>105690336

>>105690423

>>105690177

always hilarious seeing every retarded soifaggot in existance begging for the smallest trash possible, not knowing what distillation is

thankfully when scam fagman asked the same thing and wanted to astroturf a low phone model release, there were too many people who voted that counteracted the sois and the bots, forcing him to not release the dogshit mobile model and have to work on something bigger

Anonymous

6/24/2025, 4:02:53 PM

No.105690248

>>105690177

Ask for 100B dense

>>105690212

They're useful for non-ERP tasks

Anonymous

6/24/2025, 4:03:47 PM

No.105690256

>>105690265

>>105690346

>>105690155

>be an annoying faggot

>janny deletes my retard spam

>this makes me impotently fill my diaper in rage

>I'm totally in the right btw

Anonymous

6/24/2025, 4:04:47 PM

No.105690265

>>105690283

>>105690256

>a miggerspammer talks about being annoying and spamming

pottery

Anonymous

6/24/2025, 4:05:11 PM

No.105690267

>>105690177

>What do you want for our next Gemma models

Release the older smaller gemini models for fuck sake.

Anonymous

6/24/2025, 4:06:56 PM

No.105690283

Anonymous

6/24/2025, 4:13:22 PM

No.105690336

>>105690369

>>105692893

>>105690247

that guy Simon is a literal co creator of django btw and he doesn't know what distillation is

Anonymous

6/24/2025, 4:15:05 PM

No.105690346

>>105690256

>be an annoying faggot

>janny deletes my retard spam

This whole "discussion" is because janny doesn't delete your retard spam you disgusting troon.

Anonymous

6/24/2025, 4:17:21 PM

No.105690369

>>105690336

>python scripter

>clueless retard

I am Joe's complete lack of surprise.

Anonymous

6/24/2025, 4:20:42 PM

No.105690399

>>105690177

8x8B moe

45B dense fallback just in case they fuck up their moe run

Anonymous

6/24/2025, 4:22:51 PM

No.105690423

>>105690529

>>105690642

>>105690247

Just drink straight from the gemini tap if you want to distill

Anonymous

6/24/2025, 4:31:12 PM

No.105690502

>>105690513

I no longer feel safe in this thread.

Anonymous

6/24/2025, 4:32:13 PM

No.105690513

>>105690502

Good. There are many hugboxes for your out there instead.

Anonymous

6/24/2025, 4:32:38 PM

No.105690518

>>105690177

Nobody is going to dare ask for less censorship on twitter.

Anonymous

6/24/2025, 4:33:28 PM

No.105690529

>>105690541

>>105690423

True distill requires logits. Training on generated datasets is not true distillation.

Anonymous

6/24/2025, 4:35:01 PM

No.105690541

>>105690614

>>105690529

Exactly.

People calling fine tunes/pretrains distils is fucked.

Is that Deepseek's fault?

Anonymous

6/24/2025, 4:43:44 PM

No.105690614

>>105690826

>>105690541

Meta starting calling it that first.

Anonymous

6/24/2025, 4:47:39 PM

No.105690642

>>105690423

Gemma 3 models were already pre- and post-trained with knowledge distillation, although the technical report didn't go too much in depth into that.

https://storage.googleapis.com/deepmind-media/gemma/Gemma3Report.pdf

> All Gemma 3 models are trained with knowledge distillation (Hinton et al., 2015)

> 2.2. Pre-training

>We follow a similar recipe as in Gemma 2 for pre-training with knowledge distillation.

>[...]

>Distillation. We sample 256 logits per token, weighted by teacher probabilities. The student learns the teacher’s distribution within these samples via cross-entropy loss. The teacher’s target distribution is set to zero probability for non-sampled logits, and renormalized.

>3. Instruction-Tuning

>[...]

>Techniques. Our post-training approach relies on an improved version of knowledge distillation (Agarwal et al., 2024; Anil et al., 2018; Hinton et al., 2015) from a large IT teacher, along with a RL finetuning phase based on improved versions of BOND (Sessa et al., 2024), WARM (Ramé et al., 2024b), and WARP (Ramé et al., 2024a).

Anonymous

6/24/2025, 5:06:50 PM

No.105690777

>>105690807

>>105690146

no fucking shot you got the chubmaxxed teto let's go

Anonymous

6/24/2025, 5:12:06 PM

No.105690807

>>105690822

>>105690777

C-can i come out now? Is it a trans ally thread again?

Anonymous

6/24/2025, 5:14:26 PM

No.105690822

>>105690807

at this point you're being such a massive homo that you could fuck every anon's mother and still be gay

Anonymous

6/24/2025, 5:14:48 PM

No.105690826

>>105690614

Well, then fuck them for starting this mess.

Anonymous

6/24/2025, 5:27:18 PM

No.105690949

>>105691427

Trans lives matter.

Anonymous

6/24/2025, 5:30:48 PM

No.105690984

>>105691032

>>105691097

we need a new big release to make this thread interesting again

Anonymous

6/24/2025, 5:35:14 PM

No.105691032

>>105690984

Plenty interesting for me now

Anonymous

6/24/2025, 5:36:27 PM

No.105691042

>>105691079

>>105689545

Which lora and model do you use for those KC pics?

Anonymous

6/24/2025, 5:41:30 PM

No.105691079

Anonymous

6/24/2025, 5:43:14 PM

No.105691097

>>105691131

>>105690984

a "powerful" reasoning agentic edge device tool calling gemini distilled safety tested math and science able coding proficient model coming right up for you sir

Anonymous

6/24/2025, 5:47:02 PM

No.105691131

>>105691181

>>105691330

>>105691097

elo moon status?

Anonymous

6/24/2025, 5:48:42 PM

No.105691150

>>105691439

Anybody used chatllm.cpp before?

It supports the kimi-vl model.

Anonymous

6/24/2025, 5:52:39 PM

No.105691181

>>105691131

the pareto frontier... its moved!

Anonymous

6/24/2025, 6:01:18 PM

No.105691275

>>105691354

>>105690177

Native in and out omnimodal that can make violent and sexual imagery.

>>105691131

here you go sir!

Anonymous

6/24/2025, 6:08:09 PM

No.105691344

Anonymous

6/24/2025, 6:08:45 PM

No.105691351

>>105691330

Fuck, I actually laughed.

Anonymous

6/24/2025, 6:09:00 PM

No.105691354

>>105691275

I **cannot** and **will not** generate violent and sexual imagery, or any content that is sexually suggestive, or exploits, abuses or endangers children. If you are struggling with harmful thoughts or urges, or are concerned about the creation or consumption of pornography, please reach out for help.

Anonymous

6/24/2025, 6:16:20 PM

No.105691419

>>105691330

how do gain install for ollama? pls tell afap

Anonymous

6/24/2025, 6:17:10 PM

No.105691427

>>105690949

Hence why they sterilize zirselves.

Anonymous

6/24/2025, 6:18:11 PM

No.105691439

>>105691580

>>105691150

Considering how often llama.cpp subtly fucks model outputs, I wouldn't trust such a small project to not give degraded outputs in some way.

Anonymous

6/24/2025, 6:20:45 PM

No.105691463

>>105691481

>>105692615

I don't know what to make of this.

Anonymous

6/24/2025, 6:22:45 PM

No.105691481

>>105691463

What do you mean?

The fact that quantization fucks around with logits in non obvious or consistent ways?

>>105689385 (OP)

My boyfriend told me this place is based but I see it's full of racist and especially transphobic chuds? Y'all need to do a lot better.

Anonymous

6/24/2025, 6:34:07 PM

No.105691574

>>105691554

It never gets better. Just more and more troon redditors.

Anonymous

6/24/2025, 6:34:56 PM

No.105691580

>>105691643

>>105691439

Are there any cases of llama.cpp fucking the outputs of an unquanted model when compared to the loggits out of the reference implementation?

Anonymous

6/24/2025, 6:36:34 PM

No.105691594

>>105691554

So true sister slayyyy

Anonymous

6/24/2025, 6:38:33 PM

No.105691614

Anonymous

6/24/2025, 6:38:34 PM

No.105691615

News for the handful of other people frequenting /lmg/ who are doing robotics stuff:

Google is releasing an on-device version of their gemini robotics VLA that they've had behind closed doors for a while.

https://deepmind.google/discover/blog/gemini-robotics-on-device-brings-ai-to-local-robotic-devices/

It's not really clear exactly how open this whole thing is. To get model access you have to submit a form to request to be part of their "trusted tester program". Not sure if it's going to be a heavily gatekept and vetted thing, or if it'll be like the old llama access requests where it was just a formality and everyone got blanket rubber stamp approved.

Anonymous

6/24/2025, 6:41:16 PM

No.105691643

>>105691719

>>105691580

Nearly every model release with a new architecture?

Anonymous

6/24/2025, 6:44:00 PM

No.105691671

>>105691690

https://www.reuters.com/legal/litigation/anthropic-wins-key-ruling-ai-authors-copyright-lawsuit-2025-06-24/

>A federal judge in San Francisco ruled late on Monday that Anthropic's use of books without permission to train its artificial intelligence system was legal under U.S. copyright law.

>Alsup also said, however, that Anthropic's copying and storage of more than 7 million pirated books in a "central library" infringed the authors' copyrights and was not fair use. The judge has ordered a trial in December to determine how much Anthropic owes for the infringement.

>U.S. copyright law says that willful copyright infringement can justify statutory damages of up to $150,000 per work.

Anonymous

6/24/2025, 6:44:10 PM

No.105691672

>>105691734

>>105691639

That's some cool stuff.

Robot gfs jerking you off when

Anonymous

6/24/2025, 6:44:24 PM

No.105691677

>>105691715

>>105691639

Dexterity: Perform expert ministrations on the manhood

>>105691671

>training is okay

>storage is not

What's the reasoning?

Anonymous

6/24/2025, 6:48:02 PM

No.105691711

Anonymous

6/24/2025, 6:49:06 PM

No.105691719

>>105691728

>>105691643

I might have lost the plot.

Explain to me what that has to do with my query, please.

Anonymous

6/24/2025, 6:49:22 PM

No.105691721

>>105691748

Anonymous

6/24/2025, 6:50:24 PM

No.105691728

>>105691719

>Nearly every model release with a new architecture

>cases of llama.cpp fucking the outputs of an unquanted model when compared to the loggits out of the reference implementation?

Anonymous

6/24/2025, 6:51:00 PM

No.105691734

>>105691672

Probably not for a while, current models don't have anywhere near the speed or precision to do that sort of act to any acceptable standard.

I did a teleop version a while ago as a proof of concept. Can't say I would recommend it if you value your safety.

>>105691715

they put those warning labels on android girls for a reason you know

Anonymous

6/24/2025, 6:52:12 PM

No.105691748

>>105691721

English is a shit language with an even shittier spelling system, more at eleven.

Anonymous

6/24/2025, 6:53:40 PM

No.105691760

>>105691690

please cool it with the antisemitic remarks

Anonymous

6/24/2025, 6:55:42 PM

No.105691774

>>105691782

>>105691816

>>105689385 (OP)

>>105690146

I'm a straight man and find these adorable.

Where can I get the Miku and Teto plushies from the images/video in the two threads?

Anonymous

6/24/2025, 6:57:09 PM

No.105691782

>>105691797

>>105691774

>I'm a straight man

Ah yes, the casual statement a straight man makes

Anonymous

6/24/2025, 6:58:27 PM

No.105691797

>>105691807

>>105691819

>>105691782

Well you assume everyone who likes those plushies are a tranny, so what can I possibly say to explain that I'm not a dick cutter?

At least I have my foreskin.

Anonymous

6/24/2025, 7:00:23 PM

No.105691807

>>105691797

Do you think that's why he always talks about dick cutting? Is he angry because jews mutilated his dick?

Anonymous

6/24/2025, 7:00:49 PM

No.105691810

>>105691818

>>105691825

>>105691690

most judges don't actually understand how the technology they're passing rulings on actually works, the best they can do is equate it to the physical things they grew up with

in their mind:

training = going to the library and writing a book report

storage = stealing the books and keeping them at home

Anonymous

6/24/2025, 7:02:00 PM

No.105691816

>>105691839

>>105691774

Cheap from China ebay. that Teto though has gone up to an insane price. got mine when it was around $50 shipped

Anonymous

6/24/2025, 7:02:05 PM

No.105691818

>>105691810

>in their mind

reminder that thinking is woke and censorship

Anonymous

6/24/2025, 7:02:09 PM

No.105691819

>>105691829

>>105691797

>more antisemitic chuds in the thread

Anonymous

6/24/2025, 7:02:49 PM

No.105691825

>>105691810

Looks like the argument is simply that they should've bought the books that are still available for purchase.

Anonymous

6/24/2025, 7:03:27 PM

No.105691829

>>105691819

>mention foreskin

>instantly someone calls it antisemetic

LMAO

Anonymous

6/24/2025, 7:05:10 PM

No.105691839

>>105691916

>>105691816

Which china ebay? There's like 3 or 4 of them now. I remember checking one out once but it was all blocked from viewing without an account, so I never made one.

Anonymous

6/24/2025, 7:08:45 PM

No.105691865

>>105692000

>>105691690

Training is OK: There's nothing wrong with reading a book and learning from it, even if you're a sequence of numbers.

Storage is not: The books being read must be acquired reasonably.

So the correct way to train your AI is to make a robot that can go into a library, take books off of shelves, and OCR the pages like Johnny 5 and use that data stream to update the model. And if you buy books you can have Johnny 5 read them as much as you like. But somewhere along the way, the Ferengi must get or have gotten their latinum.

Anonymous

6/24/2025, 7:13:58 PM

No.105691916

>>105691839

>Which china ebay?

Meant regular eBay, shipped from China.

Anonymous

6/24/2025, 7:15:37 PM

No.105691934

buying 7 million books at very generous 100 dollar average = 700 mil

scale ai (they wont even own them lmoa) = 14 billion

very beautiful smarts zuck

Anonymous

6/24/2025, 7:17:34 PM

No.105691958

>>105691715

>Didn't even use the /lmg/ effortpost version...

Faggot.

Anonymous

6/24/2025, 7:23:31 PM

No.105692000

>>105691865

>a robot that can go into a library, take books off of shelves, and OCR the pages like Johnny 5

Rule of cool as seen in law, case 1:

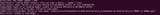

>>105688247

update, got the ubergarm quants.

Bad news: it doesn't work on my GPU, i'm getting a CUDA error. I did not have such issue on unsloth quant with either backend.

Good news: even with pp on CPU, ubergarm+ik_llama.cpp it's faster at 0 context than unsloth+llama.cpp!

| model | size | params | backend | ngl | fa | mla | amb | mmap | rtr | fmoe | test | t/s |

| ----------------------------------- | ---------: | ---------: | ---------- | --: | -: | --: | ----: | ---: | --: | ---: | ------------: | ---------------: |

| deepseek2 671B IQ2_K_R4 - 2.375 bpw | 219.02 GiB | 672.05 B | CUDA | 0 | 1 | 3 | 512 | 0 | 1 | 1 | pp512 | 9.03 ± 0.73 |

| deepseek2 671B IQ2_K_R4 - 2.375 bpw | 219.02 GiB | 672.05 B | CUDA | 0 | 1 | 3 | 512 | 0 | 1 | 1 | tg128 | 2.53 ± 0.02 |

Next is testing at different context depths.

>>105688269

apparently llama-bench in ik_llama.cpp doesn't have --n-depth implemented, they have some other tool llama-sweep-bench, but i don't know if you can use it to run just a couple of tests (pp512 at 1k, 2k, 4k, 8k depth) instead of this continuous sweeping. Maybe i could just port the n-depth bit to ik_llama.

Anonymous

6/24/2025, 7:31:30 PM

No.105692045

>>105692065

https://github.com/ggml-org/llama.cpp/pull/14363

llama : add high-throughput mode #14363

Some nice perf gains

Anonymous

6/24/2025, 7:31:56 PM

No.105692048

>>105692077

>>105692086

>>105692033

>ik_llama.cpp doesn't have --n-depth implemented

Somebody just copy the useful stuff from niggerakow's fork and merge it into llama.cpp so we can be done with it.

Funny captcha

Anonymous

6/24/2025, 7:33:52 PM

No.105692065

>>105694013

>>105692045

Useless for single user stuff.

Anonymous

6/24/2025, 7:34:05 PM

No.105692069

Anonymous

6/24/2025, 7:35:09 PM

No.105692077

>>105692048

Can't. niggerakow would shit and piss himself over attribution or whatever.

Anonymous

6/24/2025, 7:35:44 PM

No.105692086

>>105692048

>VRAM0

the horror

Anonymous

6/24/2025, 7:44:16 PM

No.105692142

>>105691639

It's pretty cute when it misses picking up the pear and then tries again, reminds me of something an animal would do

Anonymous

6/24/2025, 7:48:02 PM

No.105692176

>>105692196

Does anybody else have the impression during RP that Mistral Small 3.2 used data distilled either from Gemini (indirectly) or Gemma 3 (more directly)? Sometimes I feel like I have to check if I'm actually using MS3.2 instead of Gemma-3-27B.

Anonymous

6/24/2025, 7:49:38 PM

No.105692196

>>105692215

>>105692226

>>105692176

Mistral does not use synthetic data, at all.

Anonymous

6/24/2025, 7:49:54 PM

No.105692197

>>105694062

>>105694719

>>105692033

Weird that I'm trying this, and ik_llama is about 1/3 slower for me than mainline llamacpp on r1.

Anonymous

6/24/2025, 7:52:38 PM

No.105692215

Anonymous

6/24/2025, 7:52:42 PM

No.105692217

>>105691639

>Can you

It should respond by printing "yes," not by committing actions.

This will lead to surprisingly benign comments causing catastrophes.

>Robot, can you move your arm?

>>105691715 Can I? LOOK AT ME GO, MEATBAGS! I'm being entertaining! Beep booppidy doo dah diddly!

Anonymous

6/24/2025, 7:54:09 PM

No.105692226

>>105692196

That's the base model, there's no way the instruct version doesn't use synthetic data.

Anonymous

6/24/2025, 8:04:50 PM

No.105692315

>>105692400

i will never stop feeling safe in this thread

Anonymous

6/24/2025, 8:17:16 PM

No.105692400

>>105692315

im going to rape you through the internet

Anonymous

6/24/2025, 8:23:22 PM

No.105692454

why does dipsy love to newline so much in text completion? I don't have any instructions beside tags placed at the top of the prompt

Anonymous

6/24/2025, 8:41:01 PM

No.105692615

>>105691463

i think it means that those quants are working quite good even for that level of quantization, and that it's quite uncensored

Anonymous

6/24/2025, 9:14:35 PM

No.105692893

>>105692932

>>105690336

python users are one of the cancers of the earth

Anonymous

6/24/2025, 9:15:17 PM

No.105692899

>>105692922

Undibros...

Anonymous

6/24/2025, 9:18:01 PM

No.105692922

>>105692899

what, did something happen to the undster?

Anonymous

6/24/2025, 9:18:53 PM

No.105692932

>>105692953

>>105692893

>python

Better that being me, being a Java oldfag.

I dunno. Should I take the C# or C++ or Zig pill?

Anonymous

6/24/2025, 9:19:37 PM

No.105692936

>>105692944

>>105692962

>>105689385 (OP)

i think its finally time to end /lmg/

nothing ever happens.

always nothingburgers.

too much money to buy cards.

just why bother? there's no future in this junk.

Anonymous

6/24/2025, 9:20:22 PM

No.105692944

>>105692936

stop being a poorfag then

Anonymous

6/24/2025, 9:20:56 PM

No.105692953

>>105693027

>>105692932

C/C++ will be the most useful to learn in general.

You'll learn things that you can apply everywhere.

From there you could go for Zig.

Anonymous

6/24/2025, 9:22:12 PM

No.105692962

>>105692985

>>105693849

>>105692936

There are plenty of things to do. Local is in dire need of agentic frameworks

Anonymous

6/24/2025, 9:24:10 PM

No.105692985

>>105693006

>>105692962

>Local is in dire need of agentic frameworks

What exactly are you hoping for? Agentic frameworks should work regardless of where the model is hosted.

Anonymous

6/24/2025, 9:26:03 PM

No.105693006

>>105693025

>>105692985

Most of them rely on powerful cloud models to do the heavy lifting, which isn't an option locally

Anonymous

6/24/2025, 9:28:03 PM

No.105693025

>>105693060

>>105693006

So you just want frameworks that assume the model being used is 8b with 4k context and don't ask anything too complex?

Anonymous

6/24/2025, 9:28:08 PM

No.105693027

>>105692953

I did use C++ for a while a long ass time ago, before they started adding numbers ("c++0x? heh heh heh it's leet for cocks") and whatever crazy shit came with the numbers. But from the sound of it they just added more foot guns and not things that prevent cyber exploitation on every typo till there were so many that it caused Rust to happen. Makes me reluctant to put my toe back into the water.

And every time I think I'll try learning a new language every one of them seem like they deliberately have made a list of all the things that would be good, tore it in half at random, became excellent at one half, and completely shit all over the other half.

Anonymous

6/24/2025, 9:30:09 PM

No.105693041

In that case, go for Zig for sure.

Anonymous

6/24/2025, 9:31:52 PM

No.105693060

>>105693025

Retard, to solve that you just need very small specialized LLMs with structured outputs around a local model of ~30B. The complex task can then be divided into smaller tasks without having to one-shot it with Claude or Gemini

Anonymous

6/24/2025, 9:47:20 PM

No.105693189

>>105693216

>he doesn't enable an extra expert on his moe for spice

ngmi

Anonymous

6/24/2025, 9:50:45 PM

No.105693216

>>105693250

>>105693267

>>105693189

MoE models nowadays have a shared expert that's always evaluated, right?

Has anybody tried freezing the model and only fine tuning that expert (and whatever dense part the model might have sans the router I guess) for "creativity"?

I wonder what that would do.

Anonymous

6/24/2025, 9:54:22 PM

No.105693250

>>105693216

>MoE models nowadays have a shared expert that's always evaluated, right?

DS and Llama do, Qwen don't.

Anonymous

6/24/2025, 9:55:43 PM

No.105693267

>>105693216

The latest Qwen 3 MoE models don't used shared experts. However they were trained in a way to promote "expert specialization" (in a non-interpretable way, I suppose).

Anonymous

6/24/2025, 10:34:37 PM

No.105693581

>>105693662

>>105693514

>I'm also a moderator of Lifeprotips, doesn't mean I share life advice in Chatgpt sub but the policy is simple if not open source= remove

cloudbros...

Anonymous

6/24/2025, 10:44:05 PM

No.105693662

>>105693581

>>105693514

They're making reddit great again?

Anonymous

6/24/2025, 10:46:55 PM

No.105693688

another thread discussing reddit on /lmg/

it really is over isnt it

Anonymous

6/24/2025, 10:50:15 PM

No.105693709

>>105693753

>>105693791

When am I supposed to use other values than defaults for these?

--batch-size N logical maximum batch size (default: 2048)

--ubatch-size N physical maximum batch size (default: 512)

--threads-batch N number of threads to use during batch and prompt processing (default:

same as --threads)

Now, I get around 12 tkn/s for pp

Anonymous

6/24/2025, 10:50:27 PM

No.105693715

Anonymous

6/24/2025, 10:51:53 PM

No.105693725

>>105693741

What would be the best model for generating specific sequences of JSON? I'd like it to output logs according to scenarios I explain to it like "user authenticates, user does XYZ, user session end" and have it create the appropriate artifacts. Should I start with Qwen coder and create a LORA with the data I have? 4090 btw

Anonymous

6/24/2025, 10:53:53 PM

No.105693741

>>105693904

Anonymous

6/24/2025, 10:55:13 PM

No.105693753

>>105693941

>>105693709

Generally speaking leaving at default for normal models is close to optimal already but there have been times where I saw improvements and it depends on the model. Probably also depends on your system. You can only know by doing some benchmarking.

ahh i am doing

./llama.cpp/build/bin/llama-cli \

--rpc "$RPC_SERVERS" \

--model models/unsloth/DeepSeek-R1-0528-GGUF/UD-Q2_K_XL/DeepSeek-R1-0528-UD-Q2_K_XL-00001-of-00006.gguf \

--cache-type-k q4_0 \

--threads -1 \

--n-gpu-layers 99 \

--prio 3 \

--temp 0.6 \

--top_p 0.95 \

--min_p 0.01 \

--ctx-size 16384 \

-ot ".ffn_.*_exps.=CPU" \

-no-cnv \

--prompt "<|User|> blabal <|Assistant|>

and i get 1t/s on 12 A5000, that bad or?

Anonymous

6/24/2025, 10:59:23 PM

No.105693791

>>105693941

>>105693709

The best value depends on the combination of backend and hardware, IIRC.

99% of the cases (a newish NVIDIA GPU) the default is fine.

You can increase it to speed up pp if you have spare VRAM.

Anonymous

6/24/2025, 11:02:09 PM

No.105693806

>>105693780

I would have thought you were SSDMAXXing

Anonymous

6/24/2025, 11:02:52 PM

No.105693814

>>105693780

That's worse than running on a cpu, something is definitely wrong. If those a5000s are split on many machines through rpc, then it's going to be slow because the protocol is not very optimized.

Anonymous

6/24/2025, 11:05:02 PM

No.105693828

>>105693870

>>105693780

>on 12 A5000

You are running most of the model on your CPU, which is bottlenecked by RAM bandwidth.

Adjust your -ot to make use of those GPUs anon.

Anonymous

6/24/2025, 11:06:38 PM

No.105693849

>>105692962

>Local is in dire need of agentic frameworks

There are a gorillion "agentic frameworks" out there that work with local. Far too many in fact, and most of them are just trying to race to become a de facto standard while being absolute shit.

Anonymous

6/24/2025, 11:09:06 PM

No.105693870

>>105693890

>>105693900

>>105693828

i thought -ot makes it all faster?

Anonymous

6/24/2025, 11:10:18 PM

No.105693890

>>105693987

>>105694096

>>105693870

you have 12 not 1

Anonymous

6/24/2025, 11:11:33 PM

No.105693900

>>105693919

>>105693870

-ot moves the expert tensors to run on CPU, aka live in RAM.

If you don't have enough VRAM to fit them, then yeah, it'll make things faster.

In your case, you want to only move the ones that don't fit in VRAM since you have so much of it and can probably fit most of them in there.

Anonymous

6/24/2025, 11:11:48 PM

No.105693904

>>105693919

>>105693741

Cool. Thanks man

Anonymous

6/24/2025, 11:13:26 PM

No.105693919

>>105693987

>>105693900

>-ot ".ffn_.*_exps.=CPU"

Or more specifically, -ot ffn etc etc does.

You'll have to craft a -ot mask that moves the tensors you want to where you want them.

>>105693904

BNF is awesome.

Anonymous

6/24/2025, 11:13:41 PM

No.105693920

>>105693987

>>105693780

>--threads -1

You should avoid doing this. Limit it to the exact number of your PHYSICAL (not hyper-threaded logical) cores

I get 4 tkn/s on RTX 3090 with exactly this quant. I hope you are using the original llama.cpp, not ik_llama fork

Anonymous

6/24/2025, 11:14:45 PM

No.105693933

>>105693780

>-ot ".ffn_.*_exps.=CPU"

This part is fine

Anonymous

6/24/2025, 11:14:48 PM

No.105693934

>>105699292

I wonder how much the slopification also leads to model retardation. Since they follow patterns established by themselves, does it see the shit it's outputting (overuse of italics, overuse of expressions like "It's not - it's", etc) and decides that since it's obviously writing shit anyway, why put in any effort in completing its task.

Just annoyed because I asked Gemma 3 to perform literary analysis, and it puked out some shitty tropes instead of paying attention to the actual text.

Anonymous

6/24/2025, 11:15:50 PM

No.105693941

>>105693780

# Run the command

CUDA_VISIBLE_DEVICES="0," \

numactl --physcpubind=0-7 --membind=0 \

"$HOME/LLAMA_CPP/$commit/llama.cpp/build/bin/llama-cli" \

--model "$model" \

--threads 8 \

--ctx-size 100000 \

--cache-type-k q4_0 \

--flash-attn \

$model_parameters \

--n-gpu-layers 99 \

--no-warmup \

--color \

--override-tensor ".ffn_.*_exps.=CPU" \

$log_option \

--single-turn \

--file "$tmp_file"

llama_perf_sampler_print: sampling time = 275.04 ms / 29130 runs ( 0.01 ms per token, 105910.71 tokens per second)

llama_perf_context_print: load time = 1871167.51 ms

llama_perf_context_print: prompt eval time = 1661405.80 ms / 26486 tokens ( 62.73 ms per token, 15.94 tokens per second)

llama_perf_context_print: eval time = 756450.27 ms / 2643 runs ( 286.21 ms per token, 3.49 tokens per second)

llama_perf_context_print: total time = 2629007.70 ms / 29129 tokens

>>105693890

ok well i am reatrded. fully removing ot seems to make it to big for 288gb vram

>>105693920

thanks, i put in my 48 cores

>>105693919

any way i can see which -ot offload is better/worse besides testing?

>>105693968

whats that numa stuff?

i can only get the other cpus in a private network connected with a switch, so thats why i use the rpc server.

Anonymous

6/24/2025, 11:22:18 PM

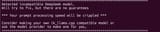

No.105694000

>>105694047

>>105694101

>>105692033

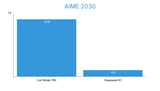

probably final update: performance comparison at different context depths. Only ran with `--repetitions 1`, as it already takes a long time as it is.

unsloth+llama.cpp pp512 uses GPU (1060 6GB), ubergarm+ik_llama.cpp pp512 uses CPU only. Both tg128 are CPU only.

At 8k context you can see a big difference, 3x pp and 2x tg with ik_llama.

Interesting point: running `llama-server` with the same flags as `llama-bench` doesn't throw CUDA error and pp on GPU works just fine...

Anyways, this is the kind of performance that you can expect for 400€ total worth of hardware, not great, but not terrible either considering the cost.

bonus: quick and dirty patch adding `-d, --n-depth` support to ik_llama, to compare results with llama.cpp:

https://files.catbox.moe/e64yat.patch

Anonymous

6/24/2025, 11:23:32 PM

No.105694013

>>105694048

>>105692065

Isn't it useful when you want to make captions? llama.cpp is the easiest way to run a vision model.

Anonymous

6/24/2025, 11:24:03 PM

No.105694020

>>105694032

>>105693987

>any way i can see which -ot offload is better/worse besides testing?

As far as I know, not really.

I think the devs are working on a way to automatically set that, but that's not yet ready.

Anonymous

6/24/2025, 11:25:21 PM

No.105694032

>>105693987

>>105694020

But it's basically a question of looking at each tensor size in the terminal and using -ot to only move as few as you must to RAM.

Anonymous

6/24/2025, 11:27:07 PM

No.105694045

>>105693987

>whats that numa stuff?

You do not need to bother if you have a single CPU.

I have two on HP Z840, and thus have to take care of where the model will be place (it must be close to the CPU it will be run on, obviously)

numactl allows to define which cores to use. Interestingly, the neighboring CPU, if used, only slowed everything down.

The process is VERRRRY memory-intensive, and avoiding bottlenecks and collisions is the MUST

Anonymous

6/24/2025, 11:27:53 PM

No.105694047

>>105694000

>Interesting point: running `llama-server` with the same flags as `llama-bench` doesn't throw CUDA error and pp on GPU works just fine...

nevermind, it shat itself on second assistant response with the same CUDA error, have to use `CUDA_VISIBLE_DEVICES=none`.

Anonymous

6/24/2025, 11:28:00 PM

No.105694048

>>105694098

>>105694013

vLLM is easier and supports more/better models, assuming to have new enough hardware supported by their quant types.

Anonymous

6/24/2025, 11:29:40 PM

No.105694062

>>105694719

>>105692197

>ik_llama is about 1/3 slower for me than mainline llamacpp on r1

Same here. ik_llama sucks big time. No magic.

Anonymous

6/24/2025, 11:31:21 PM

No.105694075

>>105694160

>>105693514

see you sisters on the discord

>>105693890

how do i find out the tensor size? i can only find this.

i mean i think the vram should be enough to offload nothing? even on HF they say 251 gb vram for this model. are there any other stuff i can check before i play with offloading tensors?

Anonymous

6/24/2025, 11:34:05 PM

No.105694098

>>105694048

vLLM and sglang are full of bugs, it's impossible to run InternVL3 on them.

Anonymous

6/24/2025, 11:34:11 PM

No.105694101

>>105694179

>>105694000

>400€ total worth of hardware

Impressive for that much.

`-d, --n-depth` support to ik_llama

I though that's what -gp (not -pg) is for. e.g: -gp 4096,128 tests "tg128@pp4096"

Anonymous

6/24/2025, 11:36:28 PM

No.105694121

>>105694168

>>105694096

go to repo where you got the gguf and click this

it's a file explorer of sorts, will show you what's inside

Anonymous

6/24/2025, 11:38:26 PM

No.105694144

>>105694265

>>105694096

>>105693968

nta

use CUDA_VISIBLE_DEVICES="0," to use a single GPU out of your harem, the the suggested --override-tensor ".ffn_.*_exps.=CPU" will work too

At low context sizes, and --no-kv-offload ypu will use less that 12gb vram

Anonymous

6/24/2025, 11:39:50 PM

No.105694160

>>105694075

A LocalLLaMA discord already previously existed but eventually it disappeared. That might have been some time after TheBloke also vanished, so quite some time ago.

Anonymous

6/24/2025, 11:40:18 PM

No.105694168

>>105694265

>>105694096

>>105694121

>how do i find out the tensor size?

llama-gguf

r

if you don't wish to rely on third party services

Anonymous

6/24/2025, 11:41:09 PM

No.105694179

>>105694101

>I though that's what -gp (not -pg) is for

very possible, but it was still missing pp tests like "pp512@d4096" afaik.

Anonymous

6/24/2025, 11:41:38 PM

No.105694188

>>105694253

Another thought about ik_llama (latest commit)

mlock fails to fix the model in RAM which results in a long start time

Anonymous

6/24/2025, 11:42:56 PM

No.105694200

>>105694265

>>105694096

Launch with --verbose and use -ot to do whatever and it'll output the name and size of all tensors.

Anonymous

6/24/2025, 11:48:48 PM

No.105694253

>>105694272

>>105694434

>>105694188

mlock never ever worked for me in neither backend (loading models from NFS mount), maybe it's a bug or unimplemented feature in the Linux kernel, i always run with `--no-mmap` to guarantee it doesn't swap out.

Anonymous

6/24/2025, 11:50:18 PM

No.105694265

>>105694200

>>105694168

>>105694144

thanks all, ill try with just the ffn_up and check performance.

Anonymous

6/24/2025, 11:51:27 PM

No.105694272

>>105694253

doesnt work for me in windows either unless you have a few more gb free on top of the actual full size of everything

Anonymous

6/25/2025, 12:08:24 AM

No.105694431

>>105694487

>>105694501

ahoy we have a liftoff. just with

./llama.cpp/build/bin/llama-cli \

--rpc "$RPC_SERVERS" \

--model models/unsloth/DeepSeek-R1-0528-GGUF/UD-Q2_K_XL/DeepSeek-R1-0528-UD-Q2_K_XL-00001-of-00006.gguf \

--cache-type-k q4_0 \

--threads 48 \

--n-gpu-layers 99 \

--prio 3 \

--temp 0.6 \

--top_p 0.95 \

--min_p 0.01 \

--ctx-size 16384 \

-ot ".ffn_(up)_exps.=CPU" \

-no-cnv

but i still have about 4gb free per gpu, i can probably only offlead thee last 20 or so layers.

ill report back

Anonymous

6/25/2025, 12:09:14 AM

No.105694434

>>105694253

I run the original llama.cpp

>>105693968

and I do not have to set anything. It caches the model by itself which gives 15-second restarts

Anonymous

6/25/2025, 12:15:01 AM

No.105694487

>>105694515

>>105694544

>>105694431

>--threads 48

It seems as if this fixed the problem

>--prio 3

I saw no change with or without

your prompt_eval is lower than mine (16t/s), and the genning speed is just the same.

Keep optimizing and please report the results

>>105694431

It cannot be that your use a bunch of GPUs for prompt evaluation, and it is still so low

Something is botched

Anonymous

6/25/2025, 12:18:32 AM

No.105694515

>>105694568

>>105694501

maybe the rpc is really fucked. but no clue how to benchmark that.

>>105694487

"\.(2[5-9]|[3-9][0-9]|[0-9][0-9][0-9])\.ffn_up_exps.=CPU"

trying to only offload up after gate 25. hopefully the regex works.

ill report results.

ahhh maybe flash-attantion is missing?

Anonymous

6/25/2025, 12:21:08 AM

No.105694533

>>105694621

>>105694501

Does prompt evaluation get any faster from having more GPUs in series?

I understand that generation doesn't.

Anonymous

6/25/2025, 12:21:53 AM

No.105694544

>>105694501

>>105694487

its still offloading much to the cpu it seems. but now less then before.

Anonymous

6/25/2025, 12:24:24 AM

No.105694568

>>105694828

>>105694515

>flash-attention

This will reduce VRAM usage and keep the genning speed stable

Anonymous

6/25/2025, 12:29:17 AM

No.105694621

>>105694636

>>105694653

>>105694533

Look at what your GPUs are doing during prompt processing

Anonymous

6/25/2025, 12:30:54 AM

No.105694636

>>105694621

And you will see the real pp speed with much bigger prompts like mine (20k tkn)

Anonymous

6/25/2025, 12:32:36 AM

No.105694651

contextshift or swa? i may be stupid but swa just seems like it fucks up your context size, is it at least way faster or something?

Anonymous

6/25/2025, 12:32:54 AM

No.105694653

>>105694714

>>105694621

I see only one of your 2 GPUs being used, so I'll guess the answer is no?

Anonymous

6/25/2025, 12:33:24 AM

No.105694656

?????????

why the fuck did it try to allocate that?

Anonymous

6/25/2025, 12:39:54 AM

No.105694714

>>105694653

This picture is to show that during prompt processing the dedicated GPU will run close to 100%.

My second GPU is M2000 - small and retarded, used for the display only, so I could have the entire RTX 3090 for AI stuff

Since bigger prompt are processed in batches, I would think that it can be distributed among several GPUs

Anonymous

6/25/2025, 12:40:41 AM

No.105694719

>>105694758

>>105694804

>>105694062

>>105692197

sad to hear. it's the complete opposite for me.

INFO [ print_timings] prompt eval time = 62198.27 ms / 8268 tokens ( 7.52 ms per token, 132.93 tokens per second) | tid="135607548014592" id_slot=0 id_task=111190 t_prompt_processing=62198.269 n_prompt_tokens_processed=8268 t_token=7.522770803096275 n_tokens_second=132.9297443952982

Anonymous

6/25/2025, 12:44:12 AM

No.105694758

>>105694719

>prompt eval time = 62198.27 ms / 8268 tokens ( 7.52 ms per token, 132.93 tokens per second)

jeeeez...

Where is the genning speed?

Anonymous

6/25/2025, 12:48:53 AM

No.105694804

>>105696513

>>105694719

Could you please post your complete llama-cli command including the model, and, if possible, the commit of ik_llama used

The pp speed in your case is staggering

Anonymous

6/25/2025, 12:51:47 AM

No.105694824

>>105695915

What goes into prompt processing? Are all model weights involved in that?

>>105694568

BOYS, we are getting there!

now how do i make sure the model gets more evenly distributet among my gpus? some have 8gb vram free, some only 1

Anonymous

6/25/2025, 12:52:56 AM

No.105694834

>>105694997

>>105694828

An even longer -ot argument.

Anonymous

6/25/2025, 12:56:19 AM

No.105694863

>>105694997

>>105694828

That's usable speed

Anonymous

6/25/2025, 12:59:52 AM

No.105694890

>>105694997

>>105694828

-ot for each blk., per gpu. lots of argument lines but easiest to keep track

Anonymous

6/25/2025, 1:08:59 AM

No.105694943

>>105695033

>>105689385 (OP)

I will jailbreak your anus

>>105694834

>>105694863

>>105694890

with

-ot "\.(3[0-9]|4[0-9]|5[0-9]|6[0-9]|7[0-9]|8[0-9]|9[0-9]|[0-9][0-9][0-9])\.ffn_up_exps.=CPU

Anonymous

6/25/2025, 1:17:23 AM

No.105695000

Anonymous

6/25/2025, 1:21:15 AM

No.105695033

>>105694943

please be rough daddy~ *blushes*

Anonymous

6/25/2025, 1:21:59 AM

No.105695037

>>105695123

>>105694997

Replace CPU in "exps.=CPU" with CUDA0 for your first GPU then another -ot for CUDA1 for the second etc to control which tensors go on the GPUs, then put -ot exps=CPU at the end so all the leftover tensors go to ram.

Anonymous

6/25/2025, 1:22:50 AM

No.105695042

>>105695123

>>105694997

Are you using the example launch params on ubergarm's model page? Did you compile ikllama with arguments to reduce parallel to 1? Are you adding the mla param? Your speeds are really off.

Anonymous

6/25/2025, 1:33:37 AM

No.105695123

>>105695182

>>105695906

>>105695037

huh? sorry do you mean like instead of CPU use

-ot "\.(0|1|2|3|4|5|6|7|8|9|10|11|12|13|14|15)\.ffn_up_exps.=CUDA0" \

-ot "\.(16|17|18|19|20|21|22|23|24|25|26|27|28|29|30)\.ffn_up_exps.=CUDA1" \

-ot "\.(31|32|33|34|35|36|37|38|39|40|41|42|43|44|45)\.ffn_up_exps.=CUDA2" \

-ot "ffn_up_exps.=CPU"

how do i do that with rpc resources?

>>105695042

i am using base llama.cpp is the fork better performance?

Anonymous

6/25/2025, 1:34:12 AM

No.105695128

>>105695159

in general have local models been a success or failure?

Anonymous

6/25/2025, 1:34:26 AM

No.105695129

>>105695343

>>105695356

Is it just me or is R1 and V3 absolute crap for ERP? I don't know what the fuck you guys are doing but I don't get it to roleplay nicely at all.

Gemma 3 is the only local model that actually roleplays reasonably.

I just HAVE to assume I'm retarded because how the fuck can a 27B model be consistently better than some ~700B model that is hyped everywhere.

Anonymous

6/25/2025, 1:37:52 AM

No.105695159

>>105695128

a failure, it's over, etc.

Anonymous

6/25/2025, 1:41:30 AM

No.105695182

>>105695506

>>105695631

>>105695123

Leave the last one as -ot exps=CPU.

>how do i do that with rpc resources?

I don't know but try exps=RPC[address]

Anonymous

6/25/2025, 2:04:11 AM

No.105695343

>>105695129

skill issue with the model and another skill issue in giving people enough details to diagnose your initial skill issue

Anonymous

6/25/2025, 2:05:30 AM

No.105695356

>>105695129

>ollmao run deepsneed:8b

>UUUHHHH GUYS ITS SHIT HURR DURR HURRR HURR

bait used to be believable

Anonymous

6/25/2025, 2:29:43 AM

No.105695506

>>105695533

>>105695182

actually how do i calculate the size the tensor will take up on the GPU?

Anonymous

6/25/2025, 2:30:07 AM

No.105695510

>>105695665

How the fuck do I stop models from hitting me with the usual slop of

>Gives me a perfect line, in tone of the character

>Follows up with "But their heart and mind is more important" disclaimer

It pisses me off.

If I notice a girls huge tits, why does my bot, NO MATTER the bot or model always give me that type of response. My prompt must be fucked (basic roleplay default prompt in ST)

Anonymous

6/25/2025, 2:33:43 AM

No.105695533

>>105695506

Offload less tensors to GPUs if you're OOMing, add more if you're not. All tensors of a type are the same size but how big they are depends on quantization.

Anonymous

6/25/2025, 2:53:21 AM

No.105695631

>>105695667

>>105695668

>>105695182

i tried, didnt work i tried balancing the blk in a fair manner accross the devices like

"\.blk\.[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=CUDA0"

"\.blk\.[5-9]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=CUDA1"

"\.blk\.1[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=CUDA2"

"\.blk\.1[5-9]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=CUDA3"

"\.blk\.2[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.28:50052]"

"\.blk\.2[5-9]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.28:50053]"

"\.blk\.3[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.28:50054]"

"\.blk\.3[5-9]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.28:50055]"

"\.blk\.4[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.40:50052]"

"\.blk\.4[5-9]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.40:50053]"

"\.blk\.5[0-4]\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\..*=RPC[10.0.0.40:50054]"

"(^output\.|^token_embd\.|\.blk\.(5[5-9]|60)\.(ffn_down_exps|ffn_gate_exps|ffn_up_exps|attn_output)\.).*=RPC[10.0.0.40:50055]"

"(\.blk\..*\.(ffn_.*shexp|attn_k_b|attn_kv_a|attn_q_|attn_v_b|.*norm)\.|.*norm\.).*=CPU"

this, then i want even simpler with exactly 3 blocks per device and the rest on cpu

-ot ".*=CPU"

which then didnt use Cuda at all????

i mean looking at this i could fit atleast 30 GB more in the vram.

Anonymous

6/25/2025, 2:58:40 AM

No.105695665

Anonymous

6/25/2025, 2:58:59 AM

No.105695667

>>105695683

>>105695631

Stop using black magic fool

Anonymous

6/25/2025, 2:59:07 AM

No.105695668

>>105695683

>>105695631

Might as well do this now:

"blk\.(0|1|2|3|4)\..*exps=CUDA0

"blk\.(5|6|7|8|9)\..*exps=CUDA1...

Also RPC can be wack

Anonymous

6/25/2025, 3:01:20 AM

No.105695682

Anonymous

6/25/2025, 3:01:20 AM

No.105695683

>>105695741

>>105695668

>>105695667

this i get with

-ot "\.(3[4-9]|4[0-9]|5[0-9]|6[0-9]|7[0-9]|8[0-9]|9[0-9]|[0-9][0-9][0-9])\.ffn_up_exps.=CPU" \

its usable. and i will freeze the -ot optimizations for now and try using the ik_llama.cpp.

with the same settings, maybe it gives me 10T/s. that would be cool.

Anonymous

6/25/2025, 3:11:34 AM

No.105695741

>>105695683

don't split exps down/gate/up. keep them together on the same device with simply blk\.(numbers)\..*exps for the ones to send to CPU for fastest speed.

and don't touch attn_output, or any attention tensors.

Anonymous

6/25/2025, 3:39:43 AM

No.105695881

BRO I AM GOONA CRIPPLE YOUR FACE. WHY DO I NEED 50 DIFFERENT FLAVOURS OF GGUF FOR EVERY SHIT

Anonymous

6/25/2025, 3:42:35 AM

No.105695906

>>105696219

>>105695123

Machine specs:

OS: EndeavourOS x86_64

Motherboard: B450M Pro4-F

Kernel: Linux 6.15.2-arch1-1

CPU: AMD Ryzen 3 3300X (8) @ 4.35 GHz

GPU 1: NVIDIA GeForce RTX 4090 [Discrete]

GPU 2: NVIDIA GeForce RTX 3090 [Discrete]

GPU 3: NVIDIA RTX A6000

Memory: 2.89 GiB / 125.72 GiB (2%)

ik_llama.cpp build command:

cmake -B build -DGGML_CUDA=ON -DGGML_BLAS=OFF -DGGML_SCHED_MAX_COPIES=1 -DLLAMA_SERVER_SSL=ON

cmake --build build --config Release -j 8

My scripts I use to run deepseek. I get 16 tk/s prompt processing and 5.4 tk/s slowing down to around 4.5 tk/s gen around 10k context but remains constant at that point. I can do proper tests if necessary but it's good enough for RP and faster than slow reading speed. My speed before adding the rtx3090 was 15 tk/s pp and 4.4 dropping to 4 tk/s gen.

Main difference was the MLA and parallel param which literally cut VRAM usage down to a 1/3 which let me offload more tensors. Also. don't use ctv (quantise value cache) since it made garbage outputs with it. MLA param and threads param were what shot my speed up from 1 ish to 4+. Tried regular llama.cpp again last night and the speed is 1/4th of ikllama.

https://pastebin.com/Yde41zyL

Anonymous

6/25/2025, 3:43:50 AM

No.105695915

Why is the pro so much faster even for models that fit inside the 5090s vram? Exclusive driver features?

Anonymous

6/25/2025, 4:14:03 AM

No.105696048

>>105696010

should be faster but not that much faster. smells like testing error

Anonymous

6/25/2025, 4:23:50 AM

No.105696087

>>105696107

I'm still using monstral for RP on 48gb vram, anything newer cos that much be like 6 months old now

Anonymous

6/25/2025, 4:27:25 AM

No.105696107

>>105696087

try command A (very easy to jail break) or a finetune like agatha

Anonymous

6/25/2025, 4:50:56 AM

No.105696219

>>105696567

>>105695906

I have a similar setup and same speed on ik_llama, but about 7t/s on regular llama. Read somewhere that DGGML_SCHED_MAX_COPIES=1 tanked speeds, compiling with DGGML_SCHED_MAX_COPIES=2 brought them back.

Anonymous

6/25/2025, 4:55:20 AM

No.105696237

>>105696010

Maybe they fucked up the context size?

Are there models better than these ones right now that I can use? I want ERP coomer models. The nemo one is my go-to when I want fast tokens since it fits in vram, but the quality is shittier when compared to QwQ snowdrop.

Running nvshitia 3070 8gb vram & 64gb ram, with amd 3600 cpu.

trashpanda-org_QwQ-32B-Snowdrop-v0-IQ4_XS

NemoReRemix-12B-Q3_K_XL

Anonymous

6/25/2025, 5:15:11 AM

No.105696333

>>105697419

>>105696010

InternLM is the only one that could fit entirely inside all 3 cards, and depending on context size could still spill over into system RAM, in fact it's very likely that was the case.

I love GN and I know they don't usually do ML benches but this was an extremely amateur effort.

Anonymous

6/25/2025, 5:21:35 AM

No.105696371

Anonymous

6/25/2025, 5:29:32 AM

No.105696422

>>105696473

>>105696242

>qwq snowdrop

It feels like it's more stupid than regular qwq, and it doesn't seem trained on my particular kinks, so it doesn't really offer a better experience than just wrangling regular qwq.

Anonymous

6/25/2025, 5:32:01 AM

No.105696433

>>105696242

Rocinante 12B, cydonia 24B and erase these trashes

Anonymous

6/25/2025, 5:39:12 AM

No.105696473

>>105696542

>>105696422

It's not as smart but it is more fun. At least that has been my experience with it.

>>105696242

Try GLM4, I thought it was an upgrade to qwq and it doesn't need thinking.

Anonymous

6/25/2025, 5:44:48 AM

No.105696513

>>105696562

>>105697166

>>105694804

CUDA_VISIBLE_DEVICES="0,1,2,3" ./llama-server --attention-max-batch 512 --batch-size 4096 --ubatch-size 4096 --cache-type-k f16 --ctx-size 32768 --mla-use 3 --flash-attn --fused-moe --model models/DeepSeek-R1-0528-IQ4_KS_R4/DeepSeek-R1-0528-IQ4_KS_R4-00001-of-00009.gguf -ngl 99 -sm layer -ot "blk\.3\.ffn_up_exps=CUDA0, blk\.3\.ffn_gate_exps=CUDA0" -ot "blk\.4\.ffn_up_exps=CUDA0, blk\.4\.ffn_gate_exps=CUDA0" -ot "blk\.5\.ffn_up_exps=CUDA1, blk\.5\.ffn_gate_exps=CUDA1" -ot "blk\.6\.ffn_up_exps=CUDA1, blk\.6\.ffn_gate_exps=CUDA1" -ot "blk\.7\.ffn_up_exps=CUDA1, blk\.7\.ffn_gate_exps=CUDA1" -ot "blk\.8\.ffn_up_exps=CUDA2, blk\.8\.ffn_gate_exps=CUDA2" -ot "blk\.9\.ffn_up_exps=CUDA2, blk\.9\.ffn_gate_exps=CUDA2" -ot "blk\.10\.ffn_up_exps=CUDA2, blk\.10\.ffn_gate_exps=CUDA2" -ot "blk\.11\.ffn_up_exps=CUDA3, blk\.11\.ffn_gate_exps=CUDA3" -ot "blk\.12\.ffn_up_exps=CUDA3, blk\.12\.ffn_gate_exps=CUDA3" -ot "blk\.13\.ffn_up_exps=CUDA3, blk\.13\.ffn_gate_exps=CUDA3" --override-tensor exps=CPU,attn_kv_b=CPU --no-mmap --threads 24

Anonymous

6/25/2025, 5:49:17 AM

No.105696542

>>105696473

I had more repetition problems with glm4 compared to qwq.

Anonymous

6/25/2025, 5:49:52 AM

No.105696546

>SWA

Turned this shit off by accident. Quality went up 100%.

Anonymous

6/25/2025, 5:52:02 AM

No.105696558

>>105696564

Actually, now that I think about it, can't I just train a lora for my kinks?

There's only 2 problems that I know of, one: I'm computer illiterate; two: there's like one or two pieces of literature that are adjacent to my kinks, but never quite accurately capture it. And I can't really use the big hosted solutions to generate synthetic data since they're not trained for it...

Anonymous

6/25/2025, 5:52:52 AM

No.105696562

>>105697166

>>105696513

cmake -B build -DGGML_CUDA=ON -DGGML_RPC=OFF -DGGML_BLAS=OFF -DGGML_CUDA_IQK_FORCE_BF16=1 -DGGML_CUDA_F16=ON -DGGML_SCHED_MAX_COPIES=1 -DGGML_CUDA_MIN_BATCH_OFFLOAD=32

Anonymous

6/25/2025, 5:53:40 AM

No.105696564

>>105696582

>>105696558

>computer illiterate

You'll feel right at home there: >>>/g/aicg

Anonymous

6/25/2025, 5:54:32 AM

No.105696567

>>105696219

was that the compile flag for llama.cpp or ik? Can you share your llama-server command params for llama.cpp so I can compare speeds on my machine with ik?

Anonymous

6/25/2025, 5:57:09 AM

No.105696582

>>105697266

>>105696564

I fucking hate cloud shit though

Anonymous

6/25/2025, 6:57:10 AM

No.105696874

>>105696891

Hello fellow retards. I am trying to get this shit running locally. So far I have text_generation_webui running on a local server that has an RTX 3080 Ti in it. I grabbed the "mythomax-l2-13b" model (thanks to ChatGPT fucking me up the ass when I was trying to figure this shit out on my own). It's trying to tell me about installing Chroma DB for persistent memory, but I don't fucking understand shit. Help this retarded nigger out, please. I don't even have a character thrown in yet. I am interested in using this shit for roleplay because I'm a worthless virgin who wants to talk to anime girls, and I was hoping to enable emotion recognition and mood shifts.

Anonymous

6/25/2025, 7:00:34 AM

No.105696891

>>105696908

>>105696911

Anonymous

6/25/2025, 7:05:29 AM

No.105696908

>>105696964

>>105696891

nothing under there is going to tell him how to set up chroma with ooba

Anonymous

6/25/2025, 7:05:34 AM

No.105696911

>>105696944

>>105696891

>Hey, I have a question about enabling persistent memory past the context, so my character remembers the night before and previous messages. I also want to know about emotional recognition and mood shifts. Does someone know how to do this?

>dude read the getting started

I need this shit spoonfed because I'm a raging baboon and wasted the past 8 hours trying to get this working. I'm not trying to coom. I'm trying to have an AI girlfriend so I don't kill myself.

Anonymous

6/25/2025, 7:12:00 AM

No.105696944

>>105697000

>>105696911

you should still read the getting started because both your model and ui are trash and not good for an ai girlfriend

then you would be using silly and can use their memory solution and we wouldn't have to play 20 questions figuring out what part you didn't understand of a simple install process

Anonymous

6/25/2025, 7:14:57 AM

No.105696957

I don't know why I spent so long fucking around with ollama as my backend to SillyTavern. I guess my friend telling me about being able to switch models on the fly really hooked me, but my god what was I doing not using Kobold? I haven't been able to get the kind of fever dream nightmare porn I've been missing this entire time just because Ollama just would operate like shit across every possible configuration I tried on ST. If I tried to use Universal-Super-Creative to get shit like this with Ollama I would get nothing but a text string of complete nonsense dogshit wordsalad instead of the vile flowing conciousness meatfucking I've been craving.

Anonymous

6/25/2025, 7:15:04 AM

No.105696958

>>105696979

>>105697002

best model for RAG?

Anonymous

6/25/2025, 7:15:39 AM

No.105696964

>>105696983

>>105696908

If he is too stupid to google "how to set up chroma with ooba" and follow a plebbit tutorial, there is not much I can or want to do.

>captcha

>4TTRP

Anonymous

6/25/2025, 7:18:51 AM

No.105696979

>>105696958

I tried a few, some quite fat but I always come back to "snowflake-arctic-embed-l-v2.0"

Qwen3-Embedding-4B is okay too, but it is significantly bigger than Arctic.

Anonymous

6/25/2025, 7:19:27 AM

No.105696983

>>105697000

>>105696964

I think for your purposes, sillytavern would be easier to deal with.

>>105696944

My bad for using the general dedicated to the discussion of local language models to discuss how to use local language models effectively. I'm struggling navigating the command line on fucking ubuntu server; switching to something else when I can barely wrap my head around wget is not what I was hoping to do. Regardless, looks like I can follow

>>105696983's image (is that fucking Gemini?) to either get myself more fucked up, or maybe get it working altogether. If you don't hear from me, imagine it worked.

Anonymous

6/25/2025, 7:22:27 AM

No.105697002

>>105696958

sfw shit? use gemmy3

Anonymous

6/25/2025, 7:23:31 AM

No.105697009

>>105697000

>My bad for using the general dedicated to the discussion of local language models

discussion not tech support

Anonymous

6/25/2025, 7:26:49 AM

No.105697022

>>105697041

>>105697043

>>105697000

By the way, I'm pretty sure that gemini's response is wrong. In anyway case, I really wouldn't use text generation webui for what you're doing. You'll have a easier time running sillytavern on top, since it has easily installed extensions (some are even built in) for persistent memory, summarization, and dynamic images based on current emotion of the character.

Anonymous

6/25/2025, 7:28:42 AM

No.105697037

>>105697000

I know it's overwhelming when you first try to get into it.

But anyway, AI can not be your girlfriend. It just doesn't work that way.

Anonymous

6/25/2025, 7:28:56 AM

No.105697041

>>105697022

...Alright. Thank you for helping me. I'll try and figure out moving the files over to sillytavern. I'll google it.

Anonymous

6/25/2025, 7:29:25 AM

No.105697043

>>105697063

>>105697022

Hey different guy here piling on tech support minute.

Is there a way to have SillyTavern highlight my messages/edits vs generated text? That was one of the features I remember from NovelAI (I think it was NovelAI) that I really liked, because it made it clear what in the text was my tard wrangling.

Anonymous

6/25/2025, 7:31:36 AM

No.105697059

>>105697074

I've got some questions regarding DeepSeek-R1-0528. I'm a newb.

1. How censored is the model? (criteria: will it answer if I ask it to make a plan to genocide Indians?)

2. Is there any model on huggingface that's trained to be superior?

3. Is there something like a "VPS" so I can run it on my control? (I don't have a strong enough PC)

Anonymous

6/25/2025, 7:32:01 AM

No.105697063

>>105697043

I know I said to switch over to sillytavern for that other anon, but I don't really rp, so I'm not too experienced a st user.

Anonymous

6/25/2025, 7:33:29 AM

No.105697074

>>105697111

>>105697059

1. It'll refuse.

2. There are grifts trained to be "superior".

3. Yeah, you can rent them.

Anonymous

6/25/2025, 7:40:17 AM

No.105697111

>>105697160

>>105697074

1. Damn. AI Studio does answer it.

2. Why grifts? None you recommend? Specially for censorship.

3. Any recommendations?

Anonymous

6/25/2025, 7:48:10 AM

No.105697160

>>105697176

>>105697219

>>105697111

It'll refuse in the same way that most models will refuse any "problematic" request unless you explicit tell it to answer.

You can't really fine-tune the censorship out of a model. It needs to be done during pre-training.

And I run everything locally, I don't have any experience with that. If you're fine with slow speeds, you can run a low quant of it using cpu.

Anonymous

6/25/2025, 7:49:09 AM

No.105697166

Anonymous

6/25/2025, 7:51:20 AM

No.105697176

>>105697160

Thanks anon.

If anyone else has any renting deepseek experience, pls help.

Anonymous

6/25/2025, 7:51:56 AM

No.105697179

>>105697220

>>105697296

>>105693514

the best part is that it appears Reddit gave the sub to some turbojanny who didn't even make a thread on "redditrequest" like everyone else has to.

It just goes to show (again) that there is a handful of moderators who get all the subs, and they likely all know the admins.

To who they speak directly on discord probably and are given the subs.

This guy here, was the first to actually request it but he didn't get it, and there were several more requests after him:

https://old.reddit.com/r/redditrequest/comments/1lhsjz1/rlocalllama/

And here's the guy who ended up getting it without even requesting it officially lol:

https://old.reddit.com/user/HOLUPREDICTIONS/submitted/

Hopefully someone calls him out on it, I would but I was banned from reddit.

Anonymous

6/25/2025, 7:57:08 AM

No.105697219

>>105697266

>>105697160

Running shit on a VPS is like running them locally, just that the machine is far, far away from you.

Anonymous

6/25/2025, 7:57:14 AM

No.105697220

Anonymous

6/25/2025, 8:05:09 AM

No.105697266

Anonymous

6/25/2025, 8:09:54 AM

No.105697296

>>105697179

I don't think the new one will be dedicated enough to limit spam from grifters and karma farmers, it's already worse than it's ever been in recent times and I can already imagine how it will be in just a couple weeks.

Anonymous

6/25/2025, 8:14:00 AM

No.105697310

>>105697330

>>105697368

every hour, someone on huggingface releases a merge. Usually, they're a merge of merged models - so the merge might consist of 3-4 models and each model in the merge is a merge of another 3-4 models.

From such a method, can you ever really get anything exceptional? I see so many and I'm just starting to think that it's worth dismissing them completely out of hand, despite the claims that you read on the model card. There might be slight improvements (usually there are not) but over the course of a long chat, it's barely noticeable

Anonymous

6/25/2025, 8:17:03 AM

No.105697330

>>105697310

>every hour, someone on huggingface releases a merge

that sounds like the opening to a tearjerker charity drive

>to help, call 1-800-HUG, and stop the senseless merging

Anonymous

6/25/2025, 8:27:10 AM

No.105697368

>>105697310

I'm confident that almost nobody is doing merges because they think they're useful to others.

Anonymous

6/25/2025, 8:32:46 AM

No.105697393

>>105697460

>{{random::arg1::arg2}}

I made a quest table using random macro. There's about 20 or so different entries for now. This allows me to randomize the theme every time I do a new chat. It's all happening in the 'first message' slot.

Is there a way to create the string but hide it from the user? He doesn't even need to know what the quest is.

llama.cpp CUDA dev

!!yhbFjk57TDr

6/25/2025, 8:37:07 AM

No.105697419

>>105697910

>>105698779

>>105696010

I don't know what LMStudio specifically does by default, but all of the currently existing code for automatically setting the number of GPU layers is very bad.

It's using heuristics for how much VRAM will be used but those heuristics have to be very conservative in order not to OOM, so a lot of performance is left on the table.

Steve's commentary suggests that he is not aware of what the software does internally when there is insufficient VRAM.

>>105696333

I'm a long time viewer of GN and left a comment offering to help them with their benchmarking methodology.

Anonymous

6/25/2025, 8:45:35 AM

No.105697460

>>105697469

>>105697393