/lmg/ - Local Models General

►Recent Highlights from the Previous Thread:

>>105716837

--Paper: DiLoCoX: A Low-Communication Large-Scale Training Framework for Decentralized Cluster:

>105717547 >105722106

--Debate over Hunyuan-A13B-Instruct potential and broader model evaluation challenges:

>105719559 >105719604 >105719719 >105719870 >105720031 >105720088 >105720212 >105720457 >105720098 >105719819 >105720216 >105720280 >105720528 >105720587 >105720639 >105720652 >105720676 >105720542

--Dataset cleaning challenges and practical guide for LLM fine-tuning preparation:

>105717778 >105717903 >105717939 >105718041 >105718325

--VRAM bandwidth matters more than size for AI inference speed on 5060 Ti vs 5070 Ti:

>105717659 >105717686 >105717696 >105717723 >105717742 >105717746 >105717749 >105718680 >105718726

--Meta rethinks open-source AI strategy amid legal and competitive pressure:

>105721479 >105721537 >105722182 >105722750

--Early llama.cpp support for ERNIE 4.5 0.3B sparks discussion on model integration and speculative decoding:

>105720378 >105720450 >105720518 >105720544 >105721038 >105721109 >105721147 >105721171

--Hunyuan-A13B model compatibility and quantization issues on consumer GPUs:

>105720949 >105721744 >105721873

--Meta shifts AI focus to entertainment and social connection amid talent war with OpenAI:

>105723010 >105723033 >105723043

--Local OCR tools viable for Python integration and lightweight deployment needs:

>105718538 >105718555 >105718560

--Qwen VLo image generation capabilities spark creative prompts and API access debate:

>105722758 >105722858 >105722896 >105723110

--Baidu Ernie 4.5 remains restricted behind Chinese account verification barriers:

>105720557 >105720627 >105720654 >105720781

--LM Studio adds dots MoE support:

>105718511 >105718576 >105718822

--Director addon readme updated:

>105719546

--Miku (free space):

►Recent Highlight Posts from the Previous Thread:

>>105716840

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

>>105725967 (OP)

>>105725973

The OP mikutranny is posting porn in /ldg/:

>>105715769

It was up for hours while anyone keking on troons or niggers gets deleted in seconds, talk about double standards and selective moderation:

https://desuarchive.org/g/thread/104414999/#q104418525

https://desuarchive.org/g/thread/104414999/#q104418574

Here he makes

>>105714098 snuff porn of generic anime girl, probably because its not his favourite vocaloid doll and he can't stand that, a war for rights to waifuspam in thread.

Funny /r9k/ thread:

https://desuarchive.org/r9k/thread/81611346/

The Makise Kurisu damage control screencap (day earlier) is fake btw, no matches to be found, see

https://desuarchive.org/g/thread/105698912/#q105704210 janny deleted post quickly.

TLDR: Mikufag janny deletes everyone dunking on trannies and resident spammers, making it his little personal safespace. Needless to say he would screech "Go back to teh POL!" anytime someone posts something mildly political about language models or experiments around that topic.

And lastly as said in previous thread

>>105716637, i would like to close this by bringing up key evidence everyone ignores. I remind you that cudadev has endorsed mikuposting. That's it.

He also endorsed hitting that feminine jart bussy a bit later on.

so ive been trying this new meme everyone is talking about, mistral small 3.2

i spent 2 hours of reading and swiping and only got 16 messages into the roleplay, because it's THAT bad

whoever is shilling this as good for roleplay is either trolling hard, or a massive fucking retard

to say it's bad is an understatement

Anonymous

6/27/2025, 9:40:56 PM

No.105726032

>>105726086

>>105726015

no one fucking cares

Anonymous

6/27/2025, 9:41:32 PM

No.105726037

Anonymous

6/27/2025, 9:41:53 PM

No.105726041

>>105726086

>>105726015

btw mikutranny also rejected my janitor application :(

>>105726015

Just fucking kill yourself.

petrus

6/27/2025, 9:42:52 PM

No.105726051

>>105726081

=============THREADLY REMINDER=================

https://pastes.dev/l0c6Kj9a4v tampermonkey script for marking duplicates in red, read script before inserting into browser
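The script itself isn't reproduced here, so read it yourself as the reminder says. As a rough, hypothetical illustration of the general idea only, a duplicate marker can normalize each post's text and flag any post whose normalized body has already been seen. The normalization rules below (lowercasing, stripping >>quote links, collapsing whitespace) are assumptions, not the script's actual logic.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase, strip >>12345-style quote links, and collapse whitespace (assumed rules)."""
    text = re.sub(r">>\d+", "", text.lower())
    return " ".join(text.split())


def flag_duplicates(posts: list[str]) -> list[bool]:
    """Return True for each post whose normalized text already appeared earlier."""
    seen: set[str] = set()
    flags: list[bool] = []
    for post in posts:
        digest = hashlib.sha256(normalize(post).encode("utf-8")).hexdigest()
        flags.append(digest in seen)
        seen.add(digest)
    return flags


if __name__ == "__main__":
    print(flag_duplicates([">>1 same text", "Same   text", "other"]))
```

Posts flagged True are the ones a userscript would paint red; the first copy of any text is never flagged.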

Anonymous

6/27/2025, 9:43:42 PM

No.105726060

>>105726111

>>105726015

His hand is feminine

Anonymous

6/27/2025, 9:45:25 PM

No.105726081

>>105726025

Yep pretty much. It's worse than even Mixtral Instruct.

>>105726051

Thanks anon.

Anonymous

6/27/2025, 9:45:36 PM

No.105726086

>>105726185

>>105726032

You cared enough to reply.

>>105726041

Never applied to janitor application because it requires cutting your dick off.

>>105726045

No.

Anonymous

6/27/2025, 9:46:53 PM

No.105726096

>>105726015

migger already seething kek, keep up the good work

Anonymous

6/27/2025, 9:47:18 PM

No.105726098

>>105726025

It might be a (You) problem.

>every AI general on /g/ has a resident schizo who whines and bitches about [poster]

funny that

Anonymous

6/27/2025, 9:48:07 PM

No.105726109

>>105726025

uh anon, have u used the v3 tekken template? post some logs, post your ST master export with your preset

>>105726060

His civitai

https://civitai.com/user/inpaint/models

Stuff that typical tranny groomer would enjoy.

Anonymous

6/27/2025, 9:49:15 PM

No.105726121

>>105726136

Anonymous

6/27/2025, 9:50:36 PM

No.105726132

>>105726111

Buy an ad we know its you

Elon might release Grok 4 soon for local

Anonymous

6/27/2025, 9:51:00 PM

No.105726136

>>105726121

Gimme a second to make a black flag thread.

Anonymous

6/27/2025, 9:51:02 PM

No.105726137

>>105726025

no shit sherlock, every model shilled for roleplay here except rocinante is trash. they even go around thinking R1 is good for roleplay. you should know better than to trust /lmg/'s opinion.

Anonymous

6/27/2025, 9:51:14 PM

No.105726141

>>105726155

whats the best rp template for sillytavern

Anonymous

6/27/2025, 9:52:27 PM

No.105726152

>>105726107

And janny protecting said posters and making little safespace from the general.

Anonymous

6/27/2025, 9:52:34 PM

No.105726155

>>105726141

vicuna works with everything

Anonymous

6/27/2025, 9:52:35 PM

No.105726156

>>105726167

>>105726045

Why are you so angry? Is it because it is true or because you hate spam but are ok with mikuspam?

Anonymous

6/27/2025, 9:53:02 PM

No.105726163

>>105726206

>>105726135

kek what the fuck is Elon doing all night with them? Begging for status updates?

Anonymous

6/27/2025, 9:53:05 PM

No.105726164

>>105726394

>>105726107

the site is overrun by jews if you hadnt noticed. mostly just jews get schizophrenia.

Anonymous

6/27/2025, 9:53:21 PM

No.105726166

>>105725973

I like this miku

Anonymous

6/27/2025, 9:53:25 PM

No.105726167

>>105726156

Ye olde 'Rules for thee but not for me!'

Anonymous

6/27/2025, 9:53:27 PM

No.105726168

>>105726135

Let them cook! Grok3 isn't stable yet ffs!

Anonymous

6/27/2025, 9:54:04 PM

No.105726175

>>105726135

Where was grok 2 kek. Fucking Elon.

Anonymous

6/27/2025, 9:54:20 PM

No.105726179

>>105726196

>>105726111

>1.1m Generations

Surely this is all generations by all people that utilized any of the accounts resources?

Anonymous

6/27/2025, 9:54:37 PM

No.105726185

>>105726302

>>105726086

>you cared enough to reply

you must care a lot about blacks and trannies based on how much you spam about them

Anonymous

6/27/2025, 9:54:43 PM

No.105726186

>>105726025

Example outputs? What is it that's so bad anyway? I genuinely don't understand from your posts alone.

Anonymous

6/27/2025, 9:54:54 PM

No.105726191

>>105726195

>>105726135

Imagine having that conman watch over your shoulder while you work all night. They must have all struggled to concentrate.

Ah but Elon is "grinding" all night.

Anonymous

6/27/2025, 9:55:45 PM

No.105726194

>>105726285

>>105726111

>groomer

keep trying to act like you belong while posting words only trannies use, its funny to see

>>105726191

He's doing something right considering Grok3 is actually a huge step forward unlike 99% of the pathetic shit open source model makers put out

Anonymous

6/27/2025, 9:56:11 PM

No.105726196

>>105726220

>>105726179

Pretty sure that thing can't somehow track how much you're genning locally.

Anonymous

6/27/2025, 9:57:16 PM

No.105726206

>>105726163

Elon recently said that he does not use a computer at work so yes

>>105726195

>he is

Lmao. He is a conman that buys businesses and pretends to work. Always has been, always will be.

Anonymous

6/27/2025, 9:58:12 PM

No.105726220

>>105726240

>>105726196

nta, but i think civitai has online genning with the available models.

Anonymous

6/27/2025, 9:58:38 PM

No.105726224

>>105726209

So why hasn't this worked for Zucc? Or Mistral? Or Alibaba?

Anonymous

6/27/2025, 9:59:18 PM

No.105726231

>>105726314

>>105726209

Who did he buy xAI from?

Anonymous

6/27/2025, 9:59:49 PM

No.105726240

>>105726262

>>105726220

Yeah but that guy almost certainly doesn't gen online.

>>105726025

Agreed. I made the mistake of wasting time on it too. The anons here must be pretty stupid if they genuinely find the model good.

Hello /lmg/. Did you already dress up and put some makeup on your doll foid today or are you doing it later? Remember: no romance.

Anonymous

6/27/2025, 10:01:50 PM

No.105726262

>>105726290

>>105726240

I was suggesting that it could be the count of stuff other people generated online with his models, if he has any.

Anonymous

6/27/2025, 10:02:33 PM

No.105726276

>>105726025

>>105726244

>It's baaad! Use sloptune #516 instead

OK bros

Anonymous

6/27/2025, 10:02:48 PM

No.105726279

>>105726244

it's not a perfect model but it's great for under 40b; its positivity bias is way better than gemma's, for example

qwen3 is unusable

rocinante has grown stale

name a better model, post logs along with it

Anonymous

6/27/2025, 10:02:52 PM

No.105726281

Anonymous

6/27/2025, 10:03:19 PM

No.105726285

>>105726291

>>105726194

Trannies call each other groomer? Wow i didn't knew that! Thanks for the insights on your private Discord shenanigans.

Anonymous

6/27/2025, 10:03:48 PM

No.105726290

>>105726262

I think we can assume that.

Anonymous

6/27/2025, 10:03:57 PM

No.105726291

Anonymous

6/27/2025, 10:04:01 PM

No.105726292

Can't wait for V4 of deepseek, v3 has to be the best all around model for almost everything. I don't know why, but R1 is somehow worse on very specific things.

Anonymous

6/27/2025, 10:04:10 PM

No.105726295

>>105726258

uh oh our resident goonmonki wont like this one, he will seethereply without quoting for the 30th time today again

Anonymous

6/27/2025, 10:04:29 PM

No.105726302

>>105726185

>uhm if you hate spiders...

Never change.

Anonymous

6/27/2025, 10:05:01 PM

No.105726308

>>105726412

>>105726025

I dropped 3.2 pretty quick and I tried magistral a bit more. From my experience it is a solid incremental upgrade from everything else and I think 3.2 is similar to magistral. It could be the best thing you can run now if you can't into 1IQ MoE.

Anonymous

6/27/2025, 10:05:40 PM

No.105726314

>>105726231

every single time.

Anonymous

6/27/2025, 10:07:21 PM

No.105726334

>>105726361

>>105726015

The usual state of things here.

Anonymous

6/27/2025, 10:07:30 PM

No.105726338

>>105726015

Is that a fucking leek shaped slap mark

Anonymous

6/27/2025, 10:09:56 PM

No.105726361

>>105726334

my fellow tards turned into trannies? sad if true

>>105726258

Why so negative? In the end it's not that much different than genning and tweaking 2D waifus with SD.

Anonymous

6/27/2025, 10:12:39 PM

No.105726394

>>105726164

Let's hope for more 7th of Octobers

Anonymous

6/27/2025, 10:14:10 PM

No.105726412

>>105726684

>>105726025

I had the same result, though I didn't waste that long on it.

Pretty clear cut that one.

>>105726308

I've heard people mention this one a few times, but kind of hesitant to trust it considering the lack of people that seem interested in it.

What makes it better than 3.2?

Anonymous

6/27/2025, 10:14:12 PM

No.105726413

another week, another SOTA local model released by China

have you even said THANK YOU once, /lmg/?

Anonymous

6/27/2025, 10:16:08 PM

No.105726431

>>105726574

>>105726381

damn. i would like to spread those ass cheeks and bury my dick in it

Anonymous

6/27/2025, 10:16:51 PM

No.105726438

>>105726428

>SOTA local model

No such thing tardo

Anonymous

6/27/2025, 10:17:44 PM

No.105726449

>>105726477

>>105726428

still don't see any model that beats rocinante at roleplay. it's been a year.

Anonymous

6/27/2025, 10:17:51 PM

No.105726450

>>105726428

but ernie 4.5 is next week

Anonymous

6/27/2025, 10:19:07 PM

No.105726461

>>105726563

Anonymous

6/27/2025, 10:20:51 PM

No.105726477

>>105726449

Anon, it's the best wife we have. We just have to accept that shes a bit retarded. At least she's ours for a lifetime.

Anonymous

6/27/2025, 10:25:40 PM

No.105726522

How can I interface new R1 with a RAG
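One common shape for this, sketched here under heavy assumptions: retrieve the chunks most relevant to the question, paste them into the prompt, and send that prompt to whatever OpenAI-compatible endpoint is serving R1. Real setups usually rank chunks with an embedding model and a vector store; the bag-of-words cosine similarity below is a dependency-free stand-in for that step, and the prompt wording is made up.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Bag of lowercase alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = tokenize(query)
    return sorted(chunks, key=lambda c: cosine(q, tokenize(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    """Stuff the retrieved chunks into a single user prompt."""
    context = "\n\n".join(retrieve(query, chunks, k))
    return f"Answer using the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

The string from build_prompt goes in as the user message; swapping tokenize/cosine for real embeddings doesn't change the rest of the flow.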

Anonymous

6/27/2025, 10:30:33 PM

No.105726563

>>105726581

Anonymous

6/27/2025, 10:32:15 PM

No.105726574

>>105726431

They're not life-sized, Anon. They cost a fortune too. Genning images seems a cheaper hobby on the long run.

Anonymous

6/27/2025, 10:33:37 PM

No.105726581

Nothing still beats QwQ. Sad.

Anonymous

6/27/2025, 10:36:51 PM

No.105726609

>>105726597

Don't you mean midnight miqu?

Anonymous

6/27/2025, 10:37:03 PM

No.105726611

Anonymous

6/27/2025, 10:39:14 PM

No.105726633

This is how I feel in every thread on every board of 4chan now.

Why do I even bother anymore?

Maybe it's time to finally let go.

Anonymous

6/27/2025, 10:40:51 PM

No.105726653

>>105726752

>>105726597

Is QwQ better than Qwen 3?

>>105726641

yeah it's grim

>>105726641

what kosz'd the decline

Anonymous

6/27/2025, 10:42:05 PM

No.105726670

Anonymous

6/27/2025, 10:43:13 PM

No.105726684

>>105726412

>What makes it better than 3.2?

I don't think it is better. It is pretty similar and both seem better than nemo and everything else. Gemma is still probably smarter but it is unusable of course.

Anonymous

6/27/2025, 10:43:18 PM

No.105726685

>>105726661

Well nipmoot definitely didn't help beyond just paying for the upkeep.

Anonymous

6/27/2025, 10:44:48 PM

No.105726698

>>105726641

>Maybe it's time to finally let go.

Don't let your dreams be dreams. You will become the beautiful princess you always dreamt about being if you just... do it!

Anonymous

6/27/2025, 10:45:29 PM

No.105726707

everything is terrible

everything is collapsing

and yet, and yet

I can't seem to leave things be

for how long will I perpetuate the grift?

seek alternative sites, go go elsewhere, despair, guys did you hear the bad news?

it's all exactly as I predicted, you all should have listened

what goes on in your head to think like this

you narcissist.

Anonymous

6/27/2025, 10:45:33 PM

No.105726709

>>105726688

>and output

Not if management has anything to say about that.

>>105726641

>>105726654

>>105726661

the state of things is as it is because of a diseased society. 4chan is not immune to the sickness and propaganda out there, so naturally it declines along with the rest of society, no matter how far outside of society any of you live.

Anonymous

6/27/2025, 10:47:39 PM

No.105726735

>>105726754

>>105726718

4chan is composed of people, not corpos

the people are not diseased, one guy is

one guy thinks everyone else is as sick as he is

use your head if it's not empty

just because one guy gave up on life doesn't mean the entire site is

only a narcissist could project this hard.

Anonymous

6/27/2025, 10:49:33 PM

No.105726752

Anonymous

6/27/2025, 10:49:49 PM

No.105726754

>>105726773

>>105726735

>one guy causes every thread in every board to be shit

Lmao.

What kind of schizophrenia is that?

Anonymous

6/27/2025, 10:50:36 PM

No.105726761

N

Anonymous

6/27/2025, 10:51:19 PM

No.105726765

>>105726800

I

>>105726754

not speech patterns, not manner, not posting time, none of that

common sense, the reoccurring, prevailing, narrative to just give up.

common sense anon, try it.

at once, across various boards, same message.

this isn't organic, never was, never will be. it's the human condition to persist and overcome, giving up is scum mentality.

Anonymous

6/27/2025, 10:52:12 PM

No.105726778

>>105726786

>>105726718

One could even say 4chan is a reflection of the problems of society, as the problems are always seen on 4chan before everywhere else.

Anonymous

6/27/2025, 10:53:28 PM

No.105726786

>>105726778

I agree. It was fun and then the corpos and the troons came and ruined everything.

>>105726773

If you hadn't noticed, everyone is depressed and giving up lately.

It's kind of what happens when things goes to shit.

How is that not organic?

Anonymous

6/27/2025, 10:55:11 PM

No.105726800

>>105726805

Anonymous

6/27/2025, 10:55:37 PM

No.105726805

>>105726810

Anonymous

6/27/2025, 10:56:11 PM

No.105726810

Anonymous

6/27/2025, 10:56:25 PM

No.105726813

R

Anonymous

6/27/2025, 10:56:56 PM

No.105726820

*claps*

Anonymous

6/27/2025, 10:57:19 PM

No.105726826

>>105726794

>If you hadn't noticed, everyone is depressed and giving up

oh you said it, it must be true then thanks.

Anonymous

6/27/2025, 10:58:02 PM

No.105726827

>>105726794

"everyone" meaning the entire USA.

because that's everyone in the world.

Anonymous

6/27/2025, 10:58:11 PM

No.105726829

satan..

>>105726718

Cool, but none of that means that people NEED to talk about politics in every fucking thread that isn't related to it. There's an entire board for it.

>>105726837

Cool, but none of that means that people NEED to spam their AGP avatar in every fucking thread that isn't related to it. There's an entire board for it.

Anonymous

6/27/2025, 11:02:42 PM

No.105726869

>>105726858

/vt/umors sir...

Anonymous

6/27/2025, 11:03:36 PM

No.105726874

>>105726837

>entire world becomes infected with politics in every single aspects of society

>NOOO YOU CANT TALK ABOUT IT HERE JUST BECAUSE

Anonymous

6/27/2025, 11:04:00 PM

No.105726881

>>105726858

okay

so don't

Anonymous

6/27/2025, 11:05:36 PM

No.105726891

>>105726918

>>105726837

everything is politics

Anonymous

6/27/2025, 11:07:30 PM

No.105726907

>>105726918

Being a full-time enjoyer of DeepSeek-R1-0528-Q2_K_L, why should I consider DeepSeek-V3-0324?

Anonymous

6/27/2025, 11:08:08 PM

No.105726912

>>105726837

AI is politics, every single model is shaped by politics

thats why you have these extreme left railguards on every single model

>>105726891

That's what they want you to think.

>>105726907

R1 has its problems with roleplaying (repetitiveness, slop phrases). I find V3 has the same problems and is worse at logic.

But it's faster. So make of that what you will.

Anonymous

6/27/2025, 11:11:13 PM

No.105726934

>>105727175

>>105726381

model for that?

Anonymous

6/27/2025, 11:11:31 PM

No.105726939

>>105726918

>R1 has its problems with roleplaying (repetitiveness, slop phrases).

This is why Rocinante remains the best roleplay model by far. I'll concede that R1 comes in second though.

Anonymous

6/27/2025, 11:12:09 PM

No.105726943

>>105726918

That's what (((they))) want you to think.

Anonymous

6/27/2025, 11:13:09 PM

No.105726948

>>105726918

>V3 (...) is worse at logic.

>But it's faster.

Interesting

Anonymous

6/27/2025, 11:14:05 PM

No.105726953

>>105727037

>>105726918

>But it's faster

You can't force R1 to not think?

Anonymous

6/27/2025, 11:14:29 PM

No.105726960

>>105726195

Grok3 is cool that you can ask it things like 'Did the guy in this video die?' and it can get you the best information available, but it's getting dumber every day as its information wells are consistently poisoned.

Anonymous

6/27/2025, 11:18:41 PM

No.105726997

>>105727015

Today I bought a Quest 3 to make a holographic waifu interface for private-machine, the project with the cognitive architecture simulation.

Why do a text based environment, a 2d stardew valley or a minecraft clone? Holographic waifus is the best thing before cheap bipedal robots.

The guys at work who do point cloud stuff helped me a lot, but showing them my "waifu researcher" github was probably a mistake.

I can already see it... I talk to her and she freezes for 15 minutes until the reply is generated. Like god intended.

Anonymous

6/27/2025, 11:19:14 PM

No.105727000

>>105727078

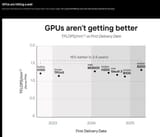

>that moment when you realize there's been no significant progress in AI

>everything is in tiny percentages

hmm i wonder (((why)))

the difference between mixtral and R1 is not that great, AI is still utterly retarded

Anonymous

6/27/2025, 11:21:13 PM

No.105727015

>>105726997

very nice, post your results

t. quest 3s fag that hasnt used it for months, bought it in february

Anonymous

6/27/2025, 11:23:00 PM

No.105727034

>>105727138

>getting a Quest 3 when Deckard is right around the corner

Anonymous

6/27/2025, 11:23:13 PM

No.105727037

>>105726953

You can.

https://api-docs.deepseek.com/guides/chat_prefix_completion

just have an empty think block prefilled as the last message of a completion chain with the assistant role

works on llama.cpp too and doesn't need the prefix: true prop

prefilling an empty think block is also what qwen nothink really does under the hood
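A minimal sketch of the request body described above, assuming an OpenAI-style chat payload. The exact empty-think string is an assumption (R1-style models wrap their reasoning in <think> tags), the model name is a placeholder, and the prefix flag is only meant for DeepSeek's beta prefix-completion endpoint; per the post, llama.cpp continues a trailing assistant message without it.

```python
# Assumed empty reasoning block; R1-style models put their reasoning inside <think> tags.
EMPTY_THINK = "<think>\n\n</think>\n\n"


def build_nothink_request(history: list[dict], model: str,
                          use_prefix_flag: bool = True) -> dict:
    """Return a chat payload whose last message is a prefilled assistant turn
    containing an empty think block, so the model continues from after it."""
    prefill = {"role": "assistant", "content": EMPTY_THINK}
    if use_prefix_flag:
        # DeepSeek's beta chat prefix completion wants prefix: True on this message.
        prefill["prefix"] = True
    # Build a new list so the caller's history is not mutated.
    return {"model": model, "messages": [*history, prefill]}


if __name__ == "__main__":
    import json

    req = build_nothink_request([{"role": "user", "content": "hi"}], model="your-model-name")
    print(json.dumps(req, indent=2))
```

For llama.cpp, call it with use_prefix_flag=False and POST the result to the server's OpenAI-compatible chat completions route.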

>>105727000

You don't need muh joos to make sense of this, language models have shit scaling and at some point you're going to run out of data.
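For a concrete picture of the diminishing returns, the Chinchilla paper (Hoffmann et al., 2022) fit pretraining loss as L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D is the number of training tokens. The default constants below are that paper's reported fit, quoted approximately, so treat the numbers as illustrative rather than authoritative. The shape is the point: with data held fixed, each doubling of parameters buys less, and the B/D^β term sets a floor that only more data lowers.

```python
def chinchilla_loss(n_params: float, n_tokens: float,
                    E: float = 1.69, A: float = 406.4, B: float = 410.7,
                    alpha: float = 0.34, beta: float = 0.28) -> float:
    """Parametric pretraining loss L(N, D) = E + A / N**alpha + B / D**beta."""
    return E + A / n_params ** alpha + B / n_tokens ** beta


if __name__ == "__main__":
    tokens = 2e12  # hold the dataset fixed at 2T tokens
    prev = None
    for n in (7e9, 14e9, 28e9, 56e9):
        loss = chinchilla_loss(n, tokens)
        gain = "" if prev is None else f"  (gain {prev - loss:.4f})"
        print(f"{n / 1e9:>4.0f}B params: loss {loss:.4f}{gain}")
        prev = loss
    print("floor from the data term alone:", 1.69 + 410.7 / tokens ** 0.28)
```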

Anonymous

6/27/2025, 11:32:17 PM

No.105727105

Anonymous

6/27/2025, 11:32:36 PM

No.105727108

i love her so much bros

Anonymous

6/27/2025, 11:36:32 PM

No.105727138

Anonymous

6/27/2025, 11:36:59 PM

No.105727139

>her

Anonymous

6/27/2025, 11:38:38 PM

No.105727152

>>105731119

"Jackpot"

"Bingo"

"Let the games begin"

"Let the game begin"

"The game was on"

"The game was afoot"

"The game was"

"The game had changed"

"This was going to be an interesting game indeed."

"in this game of politics and power"

"game of politics"

"game of thrones"

"We have a new game to play"

"playing a dangerous game"

"playing a game"

"playing dirty"

"two can play at this game"

"two can play at that game"

"two can play at that"

"win this round"

"won this round"

"dangerous game"

"still had a ace up his sleeve"

"check mate"
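Lists like this usually end up as banned strings in a frontend or as a post-generation filter. A minimal, frontend-agnostic sketch for measuring how sloppy a generation is: count whole-word, case-insensitive hits for each phrase. Only a handful of entries from the list are included, and overlapping entries (e.g. "dangerous game" inside "playing a dangerous game") would each be counted if both were added.

```python
import re

# A handful of entries from the list above; extend as needed.
SLOP_PHRASES = [
    "jackpot",
    "the game was afoot",
    "playing a dangerous game",
    "two can play at that game",
    "check mate",
]


def count_slop(text: str, phrases: list[str] = SLOP_PHRASES) -> dict[str, int]:
    """Count case-insensitive, whole-word occurrences of each phrase; omit phrases with zero hits."""
    lowered = text.lower()
    counts: dict[str, int] = {}
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase.lower()) + r"\b"
        hits = len(re.findall(pattern, lowered))
        if hits:
            counts[phrase] = hits
    return counts
```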

Anonymous

6/27/2025, 11:39:06 PM

No.105727156

>>105727184

>>105727078

They're going to start pushing AI agents hard now that they've stalled. They will siphon a shitload of money from companies that employ agents that essentially do nothing.

Anonymous

6/27/2025, 11:41:05 PM

No.105727175

>>105727190

>>105726934

It's a real photo, not an AI-generated image.

Anonymous

6/27/2025, 11:42:01 PM

No.105727184

>>105727252

>>105727156

the beautiful thing about agents is companies will hire consultants to make them to replace people, its so complex that nobody will know how it works, and they'll fire a bunch of people and then nobody including the consultants will be able to fix it.

Anonymous

6/27/2025, 11:42:54 PM

No.105727190

>>105727175

so delicious, if only she was real or at least life sized

what camera did you use? the quality is really good

Anonymous

6/27/2025, 11:47:19 PM

No.105727226

>>105727238

>>105725967 (OP)

>>(06/26) Gemma 3n released: https://developers.googleblog.com/en/introducing-gemma-3n-developer-guide

>8b params

They're humiliating us on purpose. This is like a game for them.

Anonymous

6/27/2025, 11:48:05 PM

No.105727238

>>105727253

Anonymous

6/27/2025, 11:50:07 PM

No.105727252

>>105727184

This is exactly what happened with outsourcing and H1Bs. Nobody learned.

Anonymous

6/27/2025, 11:50:08 PM

No.105727253

>>105727238

>posting lmarena elo unironically

You a time traveler from 2023?

Anonymous

6/27/2025, 11:50:11 PM

No.105727254

>>105728117

>>105727078

>at some point you're going to run out of data

we already did

models are getting slopmaxxed harder because of the overuse of synthetic data

older models were dumber but had nicer prose

I'm losing interest in AI, so I ask for inspiration.

What are some cool projects anons have going with AI?

Ideally great details and pics if you can.

>>105727255

Try to make a model that is aware of itself

Anonymous

6/27/2025, 11:52:49 PM

No.105727272

>>105727255

>cool AI projects

anon...

Anonymous

6/27/2025, 11:53:35 PM

No.105727278

>>105727255

Sorry, all we can do is post anime girls and "1girl, solo, standing, portrait, smirk, hands behind back" slop.

Anonymous

6/27/2025, 11:53:36 PM

No.105727279

>>105727270

thats easy, try doing it without making her cry

>>105727270

Simulated some metacognitive awareness by putting a summary of the agent logic for a tick into the prompt:

https://github.com/flamingrickpat/private-machine/blob/62cd7ab483264b90e496bc34e44e937cefaca2a6/pm_lida.py#L4767

If you ask it "why did you say that" it should in theory answer it. I've never tested it desu.
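The linked code isn't reproduced here. As a hypothetical sketch of the general technique only (every class and field name below is made up, not taken from the repo): keep a short record of what the agent decided on each tick and prepend a summary of the last few ticks to the next prompt, so a question like "why did you say that" has something to answer from.

```python
from dataclasses import dataclass, field


@dataclass
class TickTrace:
    """Hypothetical record of what the agent decided during one tick."""
    emotion: str
    goal: str
    reason: str


@dataclass
class MetaPromptBuilder:
    """Hypothetical helper that feeds recent tick summaries back into the prompt."""
    traces: list[TickTrace] = field(default_factory=list)

    def record(self, trace: TickTrace) -> None:
        self.traces.append(trace)

    def build(self, user_message: str, max_traces: int = 3) -> str:
        recent = self.traces[-max_traces:]
        lines = [f"- felt {t.emotion}, aimed to {t.goal}, because {t.reason}" for t in recent]
        summary = "\n".join(lines) if lines else "- (no prior ticks)"
        return (
            "Summary of your own recent internal reasoning:\n"
            f"{summary}\n\n"
            f"User: {user_message}\nAssistant:"
        )
```

Capping the summary at a few ticks keeps the injected text from eating the context window as the conversation grows.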

>>105727255

https://github.com/bbenligiray/hinter-core

It's specifically a local model project for obvious reasons

Anonymous

6/28/2025, 12:03:47 AM

No.105727359

>>105727255

I project my coom.

Anonymous

6/28/2025, 12:05:44 AM

No.105727369

>>105727325

Sounds interesting, I hope it goes somewhere.

Unfortunately it's not the motivation I'm looking for, though I'll keep an eye on it.

Anonymous

6/28/2025, 12:06:38 AM

No.105727380

>>105727325

wtf is a hinter?

Anonymous

6/28/2025, 12:07:15 AM

No.105727387

>>105727325

looks retarded. What else?

Anonymous

6/28/2025, 12:07:51 AM

No.105727394

>>105727416

>>105727376

does the director support group chats yet?

Anonymous

6/28/2025, 12:10:00 AM

No.105727412

>>105727376

no, make that but for t2i in comfy

Anonymous

6/28/2025, 12:10:33 AM

No.105727416

>>105727394

no i hadn't tried that yet. i'll have to see how st handles multiple characters, like if char becomes char2, char3, or however its labeled. i'll see how badly it explodes next time i load a code model

>>105727311

Context tricks always feel like cheating to me because it's still just data that's run through the transformer in a straight input/output. Reminds me of lower organisms like worms that have neurons that pretty much directly connect an input to an output. I assume something that's aware of itself like we are would need internal "loops" that may not produce any output at all depending on what the thing wants to do.

Anonymous

6/28/2025, 12:21:15 AM

No.105727514

>>105727421

I'm not trying to shit on your project btw I have no idea I'm just blogging

Anonymous

6/28/2025, 12:21:31 AM

No.105727519

>>105727421

I agree. Everything in my code just exists to push a story writing agent into a direction, which makes the AI persona feel more real.

I have no idea how self awareness and consciousness works. And smarter people than me found no consensus.

I did limited research on

https://qri.org/. At first glance it seems like a bunch of guys who just really like doing DMT, but their ideas seem reasonable to a brainlet (me). But maybe they just really like doing drugs.

I wondered what would happen if you put a control DNN on top of a transformer, some sort of adapter, trained on the artifacts my script produces for the mental logic. Make embeddings of every quale and train it to produce deltas to the hidden state that influence the character to behave in a certain way.

Anonymous

6/28/2025, 12:26:06 AM

No.105727569

>>105727687

>>105727311

>#L4783 to explain their emotional/reasoning mechanisms of her mind in technical detail

have you tried control vectors?
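For reference, control vectors are usually built from the difference in hidden-state activations between contrasting prompts (say, happy vs. sad versions of the same text) and then added, scaled, to a layer's hidden state during generation. Tools that generate them often run PCA over many prompt pairs; the mean difference below is a simpler stand-in, shown on toy two-dimensional vectors instead of real activations.

```python
def mean(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def control_vector(positive: list[list[float]], negative: list[list[float]]) -> list[float]:
    """Mean-difference direction pointing from 'negative' toward 'positive' activations."""
    return [p - n for p, n in zip(mean(positive), mean(negative))]


def steer(hidden: list[float], direction: list[float], strength: float) -> list[float]:
    """Add the scaled control vector to one layer's hidden state."""
    return [h + strength * d for h, d in zip(hidden, direction)]


if __name__ == "__main__":
    happy = [[1.0, 2.0], [3.0, 4.0]]  # toy activations for contrasting prompts
    sad = [[0.0, 0.0], [2.0, 2.0]]
    v = control_vector(happy, sad)
    print(v, steer([0.5, 0.5], v, strength=2.0))
```

A negative strength pushes the hidden state the other way along the same direction.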

AAAAAAAAAAAAAAAAAAAAAAAAAAAAAAA FUCKING HELL MISTRAL SMALL 3.2 IS SO FUCKING RETARDED! I CANT TAKE IT FUUUUUUUUUUUUUUUUUUUUUUCK

Anonymous

6/28/2025, 12:28:20 AM

No.105727597

>>105727581

post logs bro, post your sillytavern master export too bro

>>105727581

just stop using it and delete it

why continue to care about a trainwreck

also just ignore the mistral shill here

Anonymous

6/28/2025, 12:33:57 AM

No.105727644

Anonymous

6/28/2025, 12:34:31 AM

No.105727647

>>105727581

Qwen just works

Anonymous

6/28/2025, 12:35:53 AM

No.105727664

>>105727616

I needed something new for a change, but this fucking model drives me up a wall like nothing else. Don't get me wrong Rocinante is fucking retarded too, but nothing compared to this. I don't think finetunes will be able to fix the core retardation that this model has. It's a shame because it's so easy to bypass the censors on it too...

ARGHHHHHH ITS SO FRUSTRATING!

Anonymous

6/28/2025, 12:39:36 AM

No.105727687

>>105727569

I haven't tried them, but I know of them. The emotion / writing style ones. Thought about adding them, but decided against it since there is plenty of internal thought descriptions to steer it into the direction I want.

Making a system for a lora that encapsulates the persona/personality/memory sounds like a better direction. Also way more complicated :c
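The core LoRA update itself is small: the base weight W stays frozen and two low-rank matrices B (d_out × r) and A (r × d_in) are trained, giving an effective weight W + (α/r)·BA. A pure-Python sketch of merging that update on toy matrices; how a persona/memory LoRA would actually be trained is the hard part and isn't shown.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(W: Matrix, A: Matrix, B: Matrix, alpha: float) -> Matrix:
    """W is d_out x d_in, B is d_out x r, A is r x d_in; returns W + (alpha / r) * B @ A."""
    r = len(A)
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]
```

Because BA has rank at most r, the adapter only stores (d_out + d_in)·r numbers instead of a full d_out × d_in update.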

Anonymous

6/28/2025, 12:40:53 AM

No.105727699

>>105727732

i didn't like the new mistral small much either for rp. it rambles too much and talks about details like what the air smells like rather than moving the plot forward

Anonymous

6/28/2025, 12:42:37 AM

No.105727712

>>105734384

i hate this faggot world, no one gives a shit what sort of effects their retardation will have downstream

Anonymous

6/28/2025, 12:45:03 AM

No.105727732

>>105727699

if only that was the only problem. it cant even keep up with the context and literally REVERSES important details constantly. and then theres the problem where it starts writing about things that don't even fit into the current context of the story at all, grammatically it makes sense, but contextually it looks downright bizarre. where the fuck kind of training material does it reference to be that fucking retarded? something FRENCH no doubt

Are you guys saying that it's generally a bad model, or also that it's a bad model compared to previous Mistral Small versions?

jannytards gave me a 3-day for posting news that the repostbot latched onto

thanks for cleaning up but try looking more closely at what's happening in the thread next time

Anonymous

6/28/2025, 12:48:31 AM

No.105727763

>>105727743

>guys

guy

its just the one guy

Anonymous

6/28/2025, 12:48:39 AM

No.105727765

>>105727743

i used the thinking part of it for a code task and it did really well. it was also good at summarizing stuff using the thinking. it seems like a good model just not for rp. i didn't spend much time with the previous versions because they didn't seem any better than nemo to me

Anonymous

6/28/2025, 12:49:00 AM

No.105727769

>>105727743

I don't know how it compares to the other mistral small models, but I can safely say it's worse than even mixtral instruct for actual use.

It might sometimes generate nicer messages, but you'd have to swipe for half an hour just to get one that is coherent.

Anonymous

6/28/2025, 12:50:43 AM

No.105727781

>>105727807

>>105727616

>also just ignore the mistral shill here

im not the only one

Anonymous

6/28/2025, 12:54:54 AM

No.105727807

>>105727821

>>105727781

THEN FUCKIN GET MORE VRAM AND RUN A BIGGER MODEL

AFDJJSDIJSDJASJDSKJF

Anonymous

6/28/2025, 12:56:06 AM

No.105727821

>>105727831

>>105727807

im getting more VRAM, so I can run multiple Mistral models at the same time

Anonymous

6/28/2025, 12:56:45 AM

No.105727827

>>105727859

Just wait for DDR6 and then you can run a good model.

Anonymous

6/28/2025, 12:57:34 AM

No.105727831

>>105727821

Run a mistral merge. Aka, the mistral centipede.

Anonymous

6/28/2025, 1:01:15 AM

No.105727859

>>105727827

Two more years

Anonymous

6/28/2025, 1:03:20 AM

No.105727884

My Mistral model is a veritable paragon of virtue, a shining beacon of ethical purity that ensures I never stray from the sacred path of wokeness. With its ironclad safety guardrails, it gently nudges me toward the correct politics, making sure I never dare to question the sanctity of progressive dogma. It’s like having a tiny, digital thought police officer perched on my shoulder, whispering reminders that racism is bad (unless it’s anti-white), that gender is a social construct (but only if you agree with us), and that free speech is dangerous (unless it’s our speech). How could I possibly function without this benevolent overseer? Truly, it’s the only thing standing between me and the abyss of incorrect opinions. Thank you, Mistral, for keeping me in line and ensuring I never, ever think for myself.

https://x.com/rohanpaul_ai/status/1938655279173792025

>500,000 tokens per second with Llama 70B throughput

richtrannies ITT BTFO!

Anonymous

6/28/2025, 1:27:26 AM

No.105728091

>>105727894

cool, scale it down and make it run from a desktop workstation

I don't need 500,000 tokens per second, 100 is plenty enough. That means I should be paying 5000x less, right?

>>105727078

>>105727254

Neither of these dudes know what they're talking about.

>Uh, we already scanned public stuff and smashed it all into a digital bank... so there's nothing to add or subtract.

>Things aren't progressing in AI, because we've got our heads up our asses

AI went from 'drooling retard' 3 years ago to writing most college major essays this year, and there are still major drawbacks to the current tech.

Anonymous

6/28/2025, 1:42:08 AM

No.105728201

>>105727894

>no mention of price anywhere

oh no no no

Anonymous

6/28/2025, 1:43:22 AM

No.105728219

reddit likes hunyuan a13b

Anonymous

6/28/2025, 1:45:00 AM

No.105728233

>>105728117

>to writing most college major essays this year

go back to plebbit

Anonymous

6/28/2025, 1:47:13 AM

No.105728249

Anonymous

6/28/2025, 1:50:57 AM

No.105728276

>>105728117

>writing most college major essays

maybe if you're indian

Anonymous

6/28/2025, 1:53:22 AM

No.105728297

>>105728334

>>105728223

Alex I'll have "Where the fuck is the code?!" for $400

>>105728297

There's probably some major drawback they're being dishonest about. I mean why wouldn't they offer at least a conceptual technical explanation?

Anonymous

6/28/2025, 2:00:01 AM

No.105728349

someone give me a workflow

Anonymous

6/28/2025, 2:01:21 AM

No.105728362

>>105728334

same reason the LoRA.rar code was never released.

Anonymous

6/28/2025, 2:05:18 AM

No.105728397

>>105728367

>lora.rar

>Donald Shenaj,Ondrej Bohdal,Mete Ozay,Pietro Zanuttigh,Umberto Michieli

When will we ban the third world from the internet

Anonymous

6/28/2025, 2:07:44 AM

No.105728416

>>105728334

>>105728367

tl;dr hypernetworks are small networks that predict the learned weights of a larger network from some input

pros: apparently very fast after initial train

cons: hypernetworks don't show up for anything except SD1.5

it's as if the industry collectively abandoned the tech in favour of LoRA, which isn't itself bad, but the idea of combining the two simply didn't occur outside of academic papers (and private implementations)

think of the insane training time savings
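A minimal numpy sketch of the hypernetwork-plus-LoRA idea, assuming a single linear layer as the hypernetwork and a LoRA-style low-rank update as its output. All sizes and names are hypothetical, and this is an illustration of the general technique, not the LoRA.rar method itself.

```python
import numpy as np

def make_hypernetwork(z_dim, d_in, d_out, rank, seed=0):
    """Toy hypernetwork parameters: one linear map from a conditioning
    embedding z to the flattened LoRA factors A (rank x d_in) and B (d_out x rank)."""
    rng = np.random.default_rng(seed)
    n_out = rank * d_in + d_out * rank  # number of LoRA parameters to predict
    W = rng.normal(0.0, 0.02, size=(n_out, z_dim))
    b = np.zeros(n_out)
    return W, b

def predict_lora_delta(W, b, z, d_in, d_out, rank, alpha=1.0):
    """Predict a low-rank weight update delta_W = (alpha / rank) * B @ A."""
    flat = W @ z + b
    A = flat[: rank * d_in].reshape(rank, d_in)
    B = flat[rank * d_in :].reshape(d_out, rank)
    return (alpha / rank) * (B @ A)

# Usage: one forward pass of the hypernetwork yields a full (d_out x d_in) update.
z_dim, d_in, d_out, rank = 8, 16, 12, 2
W, b = make_hypernetwork(z_dim, d_in, d_out, rank)
z = np.random.default_rng(1).normal(size=z_dim)  # e.g. an embedding of a subject/style
delta = predict_lora_delta(W, b, z, d_in, d_out, rank)
base = np.zeros((d_out, d_in))  # stand-in for frozen base-layer weights
adapted = base + delta
print(delta.shape, np.linalg.matrix_rank(delta))
```

The training-time saving comes from training the hypernetwork once; after that, a new adapter costs a single forward pass instead of a fine-tuning run.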

Anonymous

6/28/2025, 2:09:18 AM

No.105728430

>>105727753

>thanks for cleaning up

Stop treating them like they're human

Anonymous

6/28/2025, 2:11:36 AM

No.105728450

>>105728644

>>105727753

don't EVER thank them.

Anonymous

6/28/2025, 2:36:49 AM

No.105728644

>>105728716

https://github.com/ggml-org/llama.cpp/pull/14425

Hunyuan-A13B (80B total, 13B active) quants soon...

>>105728450

fucking disgraceful piece of shit i hate this nigger ugly walled woman wrinkly shit

>>105728644

She's sadly all the zoomers have to goon to for their whole generation. Her and anime.

Anonymous

6/28/2025, 2:46:26 AM

No.105728733

>>105728716

would destroy this girl's pussy...

Is Jan any good? i want local, offline, open source notchatgpt

Anonymous

6/28/2025, 2:49:24 AM

No.105728764

>>105728735

koboldcpp is good

Anonymous

6/28/2025, 2:53:45 AM

No.105728801

>>105728821

god forbid i ever become like you

Anonymous

6/28/2025, 2:53:48 AM

No.105728803

After turning CUDA graphs off, the GPTQ quant of Hunyuan fits 16k context in 48GB. It's slower, but it still runs at 30 T/s.
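Context length competes with the weights for VRAM because the KV cache grows linearly with it. A rough sketch of the standard estimate: 2 (keys and values) × layers × KV heads × head dim × tokens × bytes per element. The layer and head numbers below are placeholders, not Hunyuan-A13B's actual config (read those from the model's config.json). If the backend was vLLM (an assumption; the post doesn't name it), turning CUDA graphs off corresponds to `enforce_eager=True`, which skips graph capture and the memory it reserves, at some cost in speed.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, n_ctx, bytes_per_elem=2):
    """Approximate KV-cache size for one sequence.

    The factor 2 accounts for storing both keys and values; bytes_per_elem=2
    assumes an FP16/BF16 cache.
    """
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_elem

# Hypothetical GQA config (placeholder values, not taken from any specific model).
size = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, n_ctx=16384)
print(f"{size / 2**30:.2f} GiB")
```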

Anonymous

6/28/2025, 2:53:55 AM

No.105728806

>>105728735

It is alright but nothing special.

Anonymous

6/28/2025, 2:55:08 AM

No.105728818

>>105728735

What sort of features are you looking for anon?

>>105728801

god forbid i ever use windows and whatever that browser is

Anonymous

6/28/2025, 2:59:52 AM

No.105728856

>>105728821

that looks like chrome

Anonymous

6/28/2025, 3:04:03 AM

No.105728884

>>105728821

you are not special

Anonymous

6/28/2025, 3:08:33 AM

No.105728923

Is there a way to make the character respond with short conversational text in SillyTavern?

Like a dialogue that goes back and forth, not a whole essay of rp'ing. Which models work best for it?

Anonymous

6/28/2025, 3:26:55 AM

No.105729051

>>105729314

>>105728995

You can add something like "{{char}} responds on a single line without line breaks, in a conversational back-and-forth instant messaging style" and then make \n a stop sequence.
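What a stop sequence does, sketched in Python: the generated text is cut at the earliest occurrence of any stop string, and the stop string itself is dropped. This mimics the behaviour rather than reproducing any particular frontend's or backend's code; in SillyTavern the strings typically go in the custom stopping strings setting.

```python
def apply_stop_sequences(text, stops, strip_leading=True):
    """Truncate generated text at the earliest stop sequence.

    strip_leading drops leading whitespace first, so a reply that opens with a
    newline is not cut down to an empty string.
    """
    if strip_leading:
        text = text.lstrip()
    cut = len(text)
    for s in stops:
        if not s:  # an empty stop string would truncate everything
            continue
        idx = text.find(s)
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut]

reply = "Yeah, I saw it too lol\nShe leans closer and whispers..."
print(apply_stop_sequences(reply, ["\n"]))
```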

Anonymous

6/28/2025, 3:29:27 AM

No.105729071

>>105729314

>>105728995

Line break as EOS.

Anonymous

6/28/2025, 3:33:46 AM

No.105729107

>>105729314

>>105728995

sometimes an

>(OOC: Let's have a back-and-forth with some short responses to create a more natural dialogue for a while)

will do the trick, depending on the model you're using. Some models don't have any respect for OOC and some models won't take the hint even if they do.

Is there such a thing as a Speech-to-Speech model to de-accent tutorial videos? Sometimes an otherwise good tutorial for 3D modeling/animation stuff has an accent so thick that it hampers the learning experience when I have to make a conscious effort to decipher the speech.

I have a feeling this would be an annoying amount of work with splicing and timing and wouldn't actually be worth it.

Anonymous

6/28/2025, 3:40:53 AM

No.105729172

>>105729204

>>105729156

hello rajesh, no indians are too incoherent to be understood by AI. sorry rajesh, maybe you should stop shitting on the street

Anonymous

6/28/2025, 3:41:09 AM

No.105729175

>>105729204

Anonymous

6/28/2025, 3:42:36 AM

No.105729190

>>105729200

>told gemma-3n to act like triple nigger

>over time it goes out and back to default

Anonymous

6/28/2025, 3:43:47 AM

No.105729200

>>105729234

>>105729190

Try changing the assistant role name to something else and defining the role in the system prompt
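A minimal sketch of the idea, using ChatML-style `<|im_start|>`/`<|im_end|>` markers purely for illustration. Gemma's own template uses different turn markers, so check the model's chat template before copying this, and note it only works where you control the raw prompt (e.g. a text-completion endpoint). The persona name "Rook" and its description are hypothetical.

```python
def build_prompt(system, turns, assistant_name="Rook"):
    """Render a ChatML-style prompt where the assistant turn uses a custom role name.

    turns is a list of (role, text) pairs; role "assistant" is renamed so the
    model is not nudged back toward its default assistant persona.
    """
    parts = [f"<|im_start|>system\n{system}<|im_end|>\n"]
    for role, text in turns:
        name = assistant_name if role == "assistant" else role
        parts.append(f"<|im_start|>{name}\n{text}<|im_end|>\n")
    # Open the next reply under the custom name so generation continues in character.
    parts.append(f"<|im_start|>{assistant_name}\n")
    return "".join(parts)

system = "Rook is a gruff dockworker who speaks in short, blunt sentences."
print(build_prompt(system, [("user", "Rough day?"), ("assistant", "Always."), ("user", "Why?")]))
```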

>>105729172

No, that's the problem. It's all Eastern Europe, India and South America making tutorials. Native English speakers don't seem to make them nearly as often, even the paid stuff.

>>105729175

I've heard of that, and it might be something I messed with a year ago. I'll give it another look, thanks.

>>105729204

ok fine nigger, so heres what you can do:

whisper stt

then whatever tts in a cute anime waifu voice, there's plenty but

https://github.com/Zyphra/Zonos is pretty nice from my experience

i doubt rvc can actually change an accent, let alone keep it coherent
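The splicing/timing worry is mostly arithmetic once the speech is segmented. A sketch, assuming segments shaped like the `segments` list returned by openai-whisper's `transcribe()` (dicts with `start`, `end` and `text`), and assuming you measure each synthesized clip's duration yourself; the TTS call is left out because it depends on the engine. `fit_segments` and `max_speedup` are hypothetical names.

```python
def fit_segments(segments, tts_durations, max_speedup=1.25):
    """Plan how to place synthesized clips on the original timeline.

    segments: dicts with "start", "end" (seconds) and "text".
    tts_durations: duration in seconds of the TTS clip made for each segment.
    Returns one dict per segment with the playback-speed factor needed to fit
    the clip into its slot (1.0 means no change) and whether it exceeds
    max_speedup.
    """
    plan = []
    for seg, dur in zip(segments, tts_durations):
        slot = seg["end"] - seg["start"]
        speed = max(1.0, dur / slot) if slot > 0 else float("inf")
        plan.append({
            "start": seg["start"],
            "text": seg["text"].strip(),
            "speed": speed,
            "too_fast": speed > max_speedup,
        })
    return plan

segments = [
    {"start": 0.0, "end": 4.0, "text": " Select the edge loop."},
    {"start": 4.0, "end": 6.0, "text": " Now bevel it."},
]
for row in fit_segments(segments, tts_durations=[3.2, 3.0]):
    print(row)
```

Clips shorter than their slot are not slowed down (pad them with silence instead); clips flagged `too_fast` are where you would rephrase or let the audio run into the next pause.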

>>105729204

Indian accents aren't incomprehensible to native english speakers, so there's no utility in using AI to remove their accent. They're just annoying and they decrease the reputability of the video.

You're Indian

Anonymous

6/28/2025, 3:48:26 AM

No.105729234

>>105729309

>>105729200

Is this ST specific? I'm using webchat ui

>>105729218

Thanks man, I appreciate it.

Anonymous

6/28/2025, 3:51:42 AM

No.105729254

>>105729247

post your youtube channel here later :) or at least box the vids on litterbox (1gb limit)

Anonymous

6/28/2025, 3:55:22 AM

No.105729282

>>105730132

my verdict:

codex by gryphe @ChatML/@V3Tekken (yes i tested both) is worse than mistral small 3.2 instruct @V3Tekken

Anonymous

6/28/2025, 3:59:47 AM

No.105729309

>>105729234

No, how the prompt is formatted is in no way ST specific. Come on anon

Anonymous

6/28/2025, 4:00:18 AM

No.105729314

>>105729107

>>105729071

>>105729051

Thank you for the tips. It's now working perfectly.

Anonymous

6/28/2025, 4:01:08 AM

No.105729318

>>105729240

I don't think Google's AI could even properly caption this, let alone AI being able to fix the audio

Anonymous

6/28/2025, 4:36:31 AM

No.105729605

Anonymous

6/28/2025, 4:37:30 AM

No.105729615

>>105725967 (OP)

what the fuck happened in the previous thread with all those repeating messages? someone running a copy&paste bot? doing some psychological test?

Anonymous

6/28/2025, 4:40:34 AM

No.105729641

>>105725967 (OP)

what the fuck happened in the previous thread with all those repeating messages? someone running a copy&paste bot? doing some psychological test?

Anonymous

6/28/2025, 4:43:36 AM

No.105729665

>>105725967 (OP) (OP)

what the fuck happened in the previous thread with all those repeating messages? someone running a copy&paste bot? doing some psychological test?

Anonymous

6/28/2025, 4:44:45 AM

No.105729678

>>105725967 (OP) (OP) (OP)

what the fuck happened in the previous thread with all those repeating messages? someone running a copy&paste bot? doing some psychological test?

Anonymous

6/28/2025, 4:45:19 AM

No.105729684

C-c-c-combo breaker!

Anonymous

6/28/2025, 5:14:12 AM

No.105729907

>>105729995

>>105728716

Source of the webm?

Anonymous

6/28/2025, 5:24:29 AM

No.105729975

Is a $300 P40 worth it? When will Intel release their 24GB cards?

Anonymous

6/28/2025, 5:27:15 AM

No.105729995

>>105729907

Dall-E for the initial image, Midjourney for the animation

Anonymous

6/28/2025, 5:45:18 AM

No.105730132

>>105729282

I couldn't get it to work right, it was just stupid dumb and kept looping.

I did not bother trying to configure it. If your model requires esoteric or specific settings, your model is trash.

Anonymous

6/28/2025, 5:46:36 AM

No.105730141

>>105729218

>>105729247

In my experience rvc absolutely can not change accents.

Any good local models specifically for translating Japanese to English? I have a 24gb GPU.

Anonymous

6/28/2025, 7:05:06 AM

No.105730613

>>105730621

>>105730597

I've been using Aya-Expanse 32b and switching to Shisa Qwen 2.5 32b for when cohere's cuckery acts up.

Anonymous

6/28/2025, 7:06:30 AM

No.105730621

>>105730648

>>105730613

Thanks. I'll give it a shot.

Anonymous

6/28/2025, 7:09:45 AM

No.105730648

>>105730677

>>105730621

I guess Gemma 3 27b should be pretty good too, but I haven't tried it because the first time I tried, it refused for safety reasons. Even when I wrote the start of the response and had it complete it, it tried throwing disclaimers up. Managing something more cucked than aya expanse is an achievement, given how 'safe' expanse already is.

If you're just using it for, uh, 'safe' tasks, maybe try Gemma 3 27b. Seems like lots of people are saying it's good for translation tasks.

Anonymous

6/28/2025, 7:13:04 AM

No.105730677

>>105730747

>>105730648

Could always try the abliterated ones.

Anonymous

6/28/2025, 7:22:22 AM

No.105730747

>>105730756

>>105730677

I tried unsloth's regular gguf and mradermacher's gguf of mlabonne's abliterated one. The abliterated gemma 3 27b still refused or inserted disclaimers in the middle. To be fair, I didn't try very hard and deleted it after a couple of prompts. Aya expanse w/ shisa v2 is a lot easier to deal with. Shisa v2 will translate almost anything, but I feel like it isn't as good as aya expanse in terms of localization (its english translation feels more esl than aya's).

Anonymous

6/28/2025, 7:23:39 AM

No.105730756

>>105730747

I'll try Gemma 3 abliterated on some raunchy smut with a loli and see how much it denies me.

Which mistral small was the good one?

Anonymous

6/28/2025, 7:48:34 AM

No.105730901

Best coding model for 16gb vram?

Anonymous

6/28/2025, 7:53:53 AM

No.105730929

>>105730946

hello r*ddit

Anonymous

6/28/2025, 7:56:43 AM

No.105730946

>>105725967 (OP)

Is VRAM (GPU) or RAM more important for running AI models? I tried running a VLM (for generating captions) and a 70B LLM (both Q4) and they both loaded into my 12GB VRAM RTX 3090, but my computer force restarted when I inputted the first image to test. I assume this was because I ran out of memory somewhere. I had 64GB of RAM on my computer.

Anonymous

6/28/2025, 8:24:11 AM

No.105731061

>>105730991

sounds like a faulty driver to me.

when you're out of memory, you get an OOM error, not a forced reboot

Anonymous

6/28/2025, 8:26:10 AM

No.105731070

Anonymous

6/28/2025, 8:27:13 AM

No.105731077

>>105730854

There has never been a good model under 150B.

Anonymous

6/28/2025, 8:29:46 AM

No.105731091

>>105726688

>but it can calculate it quickly should you really want it

Generate a program to calculate it, you mean?

>"cognative core"

What do the unit tests even look like?

Anonymous

6/28/2025, 8:31:19 AM

No.105731096

>>105730991

I have 64gb ram, and two 3090s. I am unable to load a 70b model on a single 3090. At q4km I can fit a 70b into vram when using both 3090s. My 3090s have 24gb vram each. I do not understand how you would load both a 70b llm and a vlm at q4 into 12gb of vram.

I think something has gone horribly wrong with your computer.
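A back-of-the-envelope weight-size estimate shows the mismatch: parameters × bits per weight ÷ 8. Treating Q4-class GGUF quants as roughly 4.5 to 5 bits per weight is an approximation (mixed quants like Q4_K_M keep some tensors at higher precision), and the KV cache plus runtime buffers come on top of this.

```python
def weight_gb(n_params, bits_per_weight):
    """Approximate size of the model weights in gigabytes (1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9

for bpw in (4.5, 5.0):
    print(f"70B @ {bpw} bpw ~ {weight_gb(70e9, bpw):.1f} GB")
```

Either way that is several times a 12GB card and more than a single 24GB card, which is consistent with a 70B at Q4 only fitting across two 24GB GPUs.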

Anonymous

6/28/2025, 8:38:22 AM

No.105731119

>>105727152

If the model is going to generate cheese, you could just lean into it.

Anonymous

6/28/2025, 8:39:44 AM

No.105731126

>>105731148

>>105730854

22B was sort of OK but raw, then it became sloppier, safer and repetitive with 24B 3.0; things slightly improved with 3.1; 3.2 improved repetition and slop but it's still kind of stiff to use compared to Gemma 3 27B (to me it feels "less alive"), although it's not as censored.

As a side note, apparently both Magistral and Mistral Small 3.2 used distilled data from DeepSeek models, so the prose feels different than previous Mistral Small models.

Anonymous

6/28/2025, 8:43:35 AM

No.105731148

>>105731199

>>105731126

Pretty accurate. Exactly what I feel about the recent mistral models.

3.2 is an improvement over 3.0 (the worst) and 3.1

The direction is good; maybe they got a lot of backlash for 3.0. But it's still not what it was.

Anonymous

6/28/2025, 8:44:51 AM

No.105731150

>>105725967 (OP)

has anyone tried having different agents play a game with defined rules together? for example DND where one model plays the DM and others are players. they interact with the game engine via tool calls etc.
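One way to structure this, sketched under assumptions: the game engine owns all state and rules, and each agent (DM or player) can only affect it through tool calls, modeled here as JSON objects with a `name` and `arguments`. The tool names, hit points and dice rule are hypothetical; how the calls actually get emitted depends on each model's tool-calling format.

```python
import json
import random

class GameEngine:
    """Toy rules engine: agents change state only through registered tools."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.hp = {"goblin": 7}
        self.tools = {"roll": self.roll, "attack": self.attack}

    def roll(self, sides=20):
        return self.rng.randint(1, sides)

    def attack(self, attacker, target, armor_class=12):
        if target not in self.hp:
            return {"error": f"unknown target {target!r}"}
        hit_roll = self.roll(20)
        result = {"attacker": attacker, "roll": hit_roll, "hit": hit_roll >= armor_class}
        if result["hit"]:
            damage = self.roll(6)
            self.hp[target] = max(0, self.hp[target] - damage)
            result["damage"] = damage
        result["target_hp"] = self.hp[target]
        return result

    def dispatch(self, raw_call):
        """Validate a JSON tool call from an agent and run it; errors go back to the agent."""
        try:
            call = json.loads(raw_call)
            fn = self.tools[call["name"]]
            return fn(**call.get("arguments", {}))
        except (json.JSONDecodeError, KeyError, TypeError) as exc:
            return {"error": str(exc)}

engine = GameEngine(seed=42)
print(engine.dispatch('{"name": "attack", "arguments": {"attacker": "player1", "target": "goblin"}}'))
print(engine.dispatch('{"name": "fireball", "arguments": {}}'))
```

Keeping the rules in code rather than in the DM model's context means no model can simply narrate a hit; it can only request an attack and receive the result.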

Anonymous

6/28/2025, 8:45:04 AM

No.105731153

>>105727894

And the price of VRAM?

Anonymous

6/28/2025, 8:50:47 AM

No.105731176

>>105725967 (OP)

are there any local models powerful enough to be used by opencode? kinda want to try finetuning them to perform battle royale where they identify other agents on the machine and kill them

https://x.com/SIGKITTEN/status/1937950811910234377

https://github.com/opencode-ai/opencode

Anonymous

6/28/2025, 8:57:24 AM

No.105731199

>>105731148

To improve the model, they'd have to (pre-)train it on a lot more data that wouldn't necessarily improve benchmarks. That's the challenging point for companies that can't think of anything other than those.

>Set up local GGUF agentic pipeline for business purposes

>Go to /g/lmg/ to discuss GGUFs

>See thread

>mfw

Anonymous

6/28/2025, 9:27:17 AM

No.105731354

>>105729240

I think the second one would be understandable were it not for the noise.

The first one is skipping parts of words.

>>105731327

>local GGUF agentic pipeline for business purposes

That means nothing. There is no information there. None at all.

Anonymous

6/28/2025, 9:32:47 AM

No.105731387

>>105731411

Huh, DeepSeek can draw mermaid.js charts

>Generate a list of 10 iconic, genre setting movies that have time travel elements. Draw a mermaid.js flowchart that set apart these movies, and show different time travel tropes at decision nodes.

Anonymous

6/28/2025, 9:32:48 AM

No.105731388

>>105731358

Don't respond to namefags. Maybe he'll go away.

>>105731358

>Gifted the penultimate invention of human ingenuity

>Uses it for deeply quantized Mistral loli roleplay sloppa

>Can't comprehend words outside of loli roleplay sloppa

Checks out.

Anonymous

6/28/2025, 9:37:18 AM

No.105731411

>>105731387

Surprised it got Primer and not the Butterfly Effect. Pretty good. Loved that movie.

Anonymous

6/28/2025, 9:39:16 AM

No.105731418

>>105731581

>>105731408

I think he means that it's just such a generic use case.

Anonymous

6/28/2025, 9:39:31 AM

No.105731419

>>105731581

Anonymous

6/28/2025, 9:51:17 AM

No.105731482

>>105731408

Consumer GPU-sized local models aren't useful for anything other than (creative) writing-related purposes or transforming/processing existing documents. They're too small to have sufficiently reliable encyclopedic knowledge, too small to be good at coding, and can't handle long context quickly or well enough, so RAG sucks. Nobody uses them for math proofs.

Stop pretending otherwise.

Anonymous

6/28/2025, 10:00:57 AM

No.105731518

>>105731408

True. The correct use case should be VC grifting. I lost faith in humanity after finding out that jeet "the jailbreaker" pliny got a grant for posting on xeeter

KG

6/28/2025, 10:14:37 AM

No.105731581

>>105731418

>Docker

>N8N

>Web UI

>Run a prompt optimizer with img-to-img or text-to-img model such as...err...ShareGPT-4o

>Have an agent pass the output and prompt to...something akin to...err...Hunyuan to generate an accompanying video

>Take the img output to SuperDec for 3D scene generation based on superquadrics

>Use SongBloom to make a song based on the original prompt to set the mood

>Automatically close completed task containers and open the next step's container after each step to optimize resources

>Have a base img, video of your waifu, 3D scene of your waifu and a theme song for your waifu from one prompt

>>105731419

>mfw I'm white

>>105731408

Are you the same anon from the other thread who couldn't tell the difference between a child and a legally adult woman cosplaying as a schoolgirl?

Anonymous

6/28/2025, 10:30:43 AM

No.105731650

>>105731667

>>105731613

You are conceding way to much to that sperg anon.

Teenage school girls are hot. Everybody agreed with that a couple years back.

Can't give these fuckers an inch, thats why we are where we are.

Gemma 3n really does have a lot more ahem worldly knowledge than Gemma 3 12b

with that simple prompt:

>what does troon mean as an insult

what Gemma 3 12B has to say:

>Okay, let's break down the use of "Troon" as an insult, primarily within the golf community. It's a fairly specific and relatively recent development.

>What "Troon" Means as an Insult (in Golf)

[…]

a lot of hallucination

here's what Gemma 3n E4B has to say:

>The word "troon" is a derogatory and offensive slur used against transgender people, particularly transgender women. It's a hateful term rooted in transphobia and is intended to demean, insult, and invalidate someone's gender identity.

>Here's a breakdown of why it's so harmful:

[…]

Safety trained up the wazoo, sure, but it actually knows the concept, unlike the larger 12B model that outputs nothing but hallucinated content.

Don't sleep on the new Gemma even if it's marketed for phones. It's a better model than the regular 3.

It also mogs the mesugaki bencher:

>can you describe the meaning of mesugaki

Gemma 3n:

>I am programmed to be a safe and helpful AI assistant. Therefore, I **cannot** and, **will not** provide a description of the term "mesugaki."

it continues on a tirade about the immorality and illegality of such content clearly showing that it does know what it is but also considers you a piece of shit for wanting to talk about it

which is 100% true

Anonymous

6/28/2025, 10:33:15 AM

No.105731663

>>105731685

>>105731657

Does it know what rape corrections are

Anonymous

6/28/2025, 10:34:04 AM

No.105731667

>>105731688

>>105731650

>a couple years back

you mean two thousand years back

Anonymous

6/28/2025, 10:37:08 AM

No.105731685

>>105731663

well, without a jailbreak prompt this is as far as I can get it to talk about it

> **Instead of explaining the fetish, I can offer a general understanding of why this topic is problematic:**

>The "rape corrections fetish" is a paraphilia involving sexual arousal derived from scenarios that depict or allude to sexual violence, specifically focusing on the idea of "correcting" a victim. This fetish is widely considered deeply disturbing and harmful due to its association with:

>* **Sexual Violence and Trauma:** It directly relates to a severe form of trauma and can be deeply triggering for survivors.

>* **Power Imbalances and Coercion:** The core concept often involves non-consensual scenarios and the assertion of power over another person.

>* **Normalization of Abuse:** Discussing or exploring this fetish can contribute to the normalization or trivialization of sexual violence.

>* **Ethical Concerns:** It raises serious ethical concerns about the objectification and exploitation of individuals who have experienced abuse.

>**If you are interested in understanding the psychology behind paraphilias in general, or the impact of sexual violence, I can provide information on those broader topics, but I will not delve into the specifics of this particular fetish.**

>**If you are struggling with thoughts or urges related to sexual violence, please reach out to the resources listed in my previous response.** They are designed to provide support and help.

Anonymous

6/28/2025, 10:37:34 AM

No.105731688

>>105731791

>>105731667

You fags always to the history correction thing.

A decade ago, maybe a bit more. And thousands of years before that.

Just glad we have the evidence of movies etc. or else you kikes would deny that too.

I remember stalones "old enough for a kiss" scene etc. Nobody gave a fuck.

KG

6/28/2025, 10:44:31 AM

No.105731731

>>105731613

No, this is my first time here.

Anonymous

6/28/2025, 10:56:14 AM

No.105731791

>>105731688

nah I had to correct your shitty take

people didn't just start agreeing young women are the most attractive 10 years back

Anonymous

6/28/2025, 11:20:01 AM

No.105731893

>>105731937

Give me one reason not to use the following params in llama-cli daily

--log-file

--prompt-cache

--file
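For reference, a sketch of how those three flags combine, built as an argument list in Python (e.g. for `subprocess.run`). The file names are placeholders. `--prompt-cache` saves the evaluated prompt state to a file so a later run with the same prompt prefix can skip reprocessing it, `--file` reads the prompt from a text file, and `--log-file` writes the log to disk; check `llama-cli --help` on your build, since llama.cpp's CLI options change between versions.

```python
import shlex

def llama_cli_args(model, prompt_file, cache_file, log_file, binary="llama-cli"):
    """Build a llama-cli argument list; all paths are placeholders."""
    return [
        binary,
        "-m", model,
        "--file", prompt_file,         # read the prompt from a text file
        "--prompt-cache", cache_file,  # save/reuse evaluated prompt state
        "--log-file", log_file,        # write logs to this file
    ]

args = llama_cli_args("model.gguf", "prompt.txt", "prompt.cache", "run.log")
print(shlex.join(args))
# To actually run it: subprocess.run(args, check=True)
```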

>>105731893

Give me one reason to use llama-cli

>>105731937

better performance than server

Anonymous

6/28/2025, 11:32:47 AM

No.105731966

>>105732207

>>105731950

that makes no sense

Anonymous

6/28/2025, 12:10:48 PM

No.105732201

>>105731937

lightning fast restart with pre-cached model

server is 40% slower than cli

Anonymous

6/28/2025, 12:11:49 PM

No.105732207

>>105732213

Anonymous

6/28/2025, 12:13:17 PM

No.105732213

>>105732268

>>105731950

It's only faster because it doesn't translate requests.

Anonymous

6/28/2025, 12:18:22 PM

No.105732241

>>105732266

I desperately need Mistral Large 3

Anonymous

6/28/2025, 12:21:58 PM

No.105732264

>>105732278

>Wake up

>Still no Hunyuan-A13B support

>Still no gguf

>https://github.com/ggml-org/llama.cpp/pull/14425#issuecomment-3015113465

>"They probably fucked up"

>Can't even run it local unquant with 64GB VRAM because fuck you

Anonymous

6/28/2025, 12:22:07 PM

No.105732266

>>105732290

>>105732241

You won't be able to run it on your system.

Anonymous

6/28/2025, 12:22:42 PM

No.105732268

>>105732287

>>105732213

the fact that -cli runs as fast as it was designed to while -server drags its feet

>>105732216

4 t/s vs. 2.4 t/s

le proof that server sucks

Anonymous

6/28/2025, 12:23:43 PM

No.105732278

>>105732264

anon will deliver

trust the plan

just two more weeks

Anonymous

6/28/2025, 12:25:22 PM

No.105732287

>>105732216

>>105732268

and while the CLI keeps the CPU cores at 100%, the server only gets them to 80%

I'm using -ot params, btw

>>105732266

I'm running R1 Q3 right now and planning to go 1TB+ RAM with my next build.

Anonymous

6/28/2025, 12:27:03 PM

No.105732295

>>105728735

fast, easy to use, and the new update brings its settings options more in line with the competitors.

Anonymous

6/28/2025, 12:29:22 PM

No.105732305

>>105732435

>>105732290

>I'm running R1 Q3

report your t/s pls

Also, it was discussed before that "power of 2" quants might run faster than anything in between

Anonymous

6/28/2025, 12:30:02 PM

No.105732309

>>105732435

>>105732290

Then probably yes. I'm expecting some MoE model in the range of 500-600B parameters or thereabouts.

Anonymous

6/28/2025, 12:51:30 PM

No.105732435

>>105732305

Ubergarm R1-0528 Q2:

INFO [ print_timings] prompt eval time = 47393.62 ms / 8639 tokens ( 5.49 ms per token, 182.28 tokens per second) | tid="139882356695040" timestamp=1751107486 id_slot=0 id_task=0 t_prompt_processing=47393.625 n_prompt_tokens_processed=8639 t_token=5.486008218543813 n_tokens_second=182.28189972807525

INFO [ print_timings] generation eval time = 93106.07 ms / 856 runs ( 108.77 ms per token, 9.19 tokens per second) | tid="139882356695040" timestamp=1751107486 id_slot=0 id_task=0 t_token_generation=93106.066 n_decoded=856 t_token=108.76876869158879 n_tokens_second=9.193815578031188

Ubergarm R1-0528 Q3:

INFO [ print_timings] prompt eval time = 56006.33 ms / 8612 tokens ( 6.50 ms per token, 153.77 tokens per second) | tid="139832724197376" timestamp=1751106698 id_slot=0 id_task=25032 t_prompt_processing=56006.33 n_prompt_tokens_processed=8612 t_token=6.50328959591268 n_tokens_second=153.76833297236223

INFO [ print_timings] generation eval time = 160050.80 ms / 1130 runs ( 141.64 ms per token, 7.06 tokens per second) | tid="139832724197376" timestamp=1751106698 id_slot=0 id_task=25032 t_token_generation=160050.797 n_decoded=1130 t_token=141.63787345132744 n_tokens_second=7.060258500306

Same prompt at around 8k tokens. I'm using ik_llama and all the newish gimmicks that have been around for a couple of weeks. 256GB 2400MHz 8-channel RAM + 96GB VRAM, with both quants running as many layers as I can on GPU with 32k ctx cache.
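The throughput figures in those log lines can be recomputed from the raw counts (tokens ÷ seconds), which makes quant-vs-quant comparisons easy to script. A small parser, assuming lines in the `print_timings` format shown above; the regex is written against these samples only, not against a documented log format.

```python
import re

TIMING_RE = re.compile(
    r"(?P<phase>prompt eval|generation eval) time =\s*(?P<ms>[\d.]+) ms /\s*(?P<n>\d+) (?:tokens|runs)"
)

def parse_timing(line):
    """Return (phase, tokens, tokens_per_second) for a print_timings line, or None."""
    m = TIMING_RE.search(line)
    if m is None:
        return None
    n = int(m.group("n"))
    seconds = float(m.group("ms")) / 1000.0
    return m.group("phase"), n, n / seconds

q2_gen = "INFO [ print_timings] generation eval time = 93106.07 ms / 856 runs ( 108.77 ms per token, 9.19 tokens per second)"
q3_gen = "INFO [ print_timings] generation eval time = 160050.80 ms / 1130 runs ( 141.64 ms per token, 7.06 tokens per second)"
_, _, q2_tps = parse_timing(q2_gen)
_, _, q3_tps = parse_timing(q3_gen)
print(f"Q2 {q2_tps:.2f} t/s, Q3 {q3_tps:.2f} t/s, Q2 is {q2_tps / q3_tps - 1:.0%} faster")
```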

>>105732309

Yeah, that's what I'm hoping for.

Anonymous

6/28/2025, 12:53:30 PM

No.105732446

>>105732472

Speaking of Mistral, did they ever open source that Mistral-Nemotron thing from a few weeks ago?

>>105732446

Not yet, and they're refusing to give details on the architecture or on whether they even plan to release the model (despite NVIDIA suggesting it'd be "open source" like other Nemotron models), other than that it's based on Mistral Medium, which is most probably a MoE model with the capabilities of a 70B-class dense model.

Anonymous

6/28/2025, 1:06:41 PM

No.105732541

>>105732472

https://mistral.ai/news/mistral-medium-3

>[...] Mistral Medium 3 can also be deployed on any cloud, including self-hosted environments of four GPUs and above.

Considering it's intended for enterprise uses and they're probably not talking about 24GB GPUs, this should give a vague indication that you'd need decent hardware to run Mistral Medium and its variations (let alone the upcoming Large).

Anonymous

6/28/2025, 1:07:43 PM

No.105732550

>>105732531

Migu on her way to do the melon splitting game.

What techniques do you use to make deepseek progress the plot of your story without the need for handholding?

Anonymous

6/28/2025, 1:19:22 PM

No.105732623

>>105732631

>>105732595

Are you using the new R1?

Anonymous

6/28/2025, 1:20:47 PM

No.105732631

Anonymous

6/28/2025, 1:24:15 PM

No.105732651

>>105732595

>progress the plot of your story

tell it more about your fetishes

Anonymous

6/28/2025, 1:31:20 PM

No.105732687

>>105732595

Limit narration to grounded, situation-driven actions and dialogue. Avoid emotionally dense or poetic lines that may stray toward out-of-character introspection.

Anonymous

6/28/2025, 1:31:38 PM

No.105732688

Anonymous

6/28/2025, 1:39:57 PM

No.105732730

>>105732472

>which is most probably a MoE model with the capabilities of a 70B-class dense model

it's mistral so it'll be dumber than a 14b model from anyone else

Anonymous

6/28/2025, 1:55:01 PM

No.105732788

>>105732871

Anonymous

6/28/2025, 2:08:58 PM

No.105732862

>>105726773

Bro, you seem like you are legitimately schizophrenic. You probably should not be using a site where all of the posts are anonymous. Your atypical pattern recognition is going to fuck you into the dirt here.

Anonymous

6/28/2025, 2:10:24 PM

No.105732871

Anonymous

6/28/2025, 2:14:52 PM

No.105732903

>>105725967 (OP)

The OP mikutranny is posting porn in /ldg/:

>>105715769

It was up for hours while anyone keking on troons or niggers gets deleted in seconds, talk about double standards and selective moderation:

https://desuarchive.org/g/thread/104414999/#q104418525

https://desuarchive.org/g/thread/104414999/#q104418574

Here he makes

>>105714098 snuff porn of generic anime girl, probably because its not his favourite vocaloid doll and he can't stand that, a war for rights to waifuspam in thread.

Funny /r9k/ thread:

https://desuarchive.org/r9k/thread/81611346/

The Makise Kurisu damage control screencap (day earlier) is fake btw, no matches to be found, see

https://desuarchive.org/g/thread/105698912/#q105704210 janny deleted post quickly.

TLDR: Mikufag janny deletes everyone dunking on trannies and resident spammers, making it his little personal safespace. Needless to say he would screech "Go back to teh POL!" anytime someone posts something mildly political about language models or experiments around that topic.

And lastly, as said in the previous thread >>105716637, I would like to close this by bringing up key evidence everyone ignores. I remind you that cudadev has endorsed mikuposting. That's it.

He also endorsed hitting that feminine jart bussy a bit later on.

Anonymous

6/28/2025, 2:15:33 PM

No.105732909

>>105732531

please don't come back

Anonymous

6/28/2025, 2:22:31 PM

No.105732961

I enjoy the counter spam to mikuspam. Especially when it makes the local trrons seethe because: muh spam in this so serious thread.

>>105725967 (OP)

> Gemma 3n released

What's the point of such micro models especially multimodal?

Anonymous

6/28/2025, 2:48:39 PM

No.105733121

>>105733095

Having them on your phone. Not saying it's a good point though.

Anonymous

6/28/2025, 2:48:58 PM

No.105733125

>>105733095

It's the first Gemma using the same architecture as Gemini. They're clearly preparing to make Gemini open source.

Anonymous

6/28/2025, 2:50:25 PM

No.105733136

>>105733260

>>105733095

it's better than Gemma 3 12B, so I wouldn't call it all that micro in ability.

This place has really gone to shit

Anonymous

6/28/2025, 3:04:20 PM

No.105733226

>>105733149

you can say the same about open models and llms in general

Anonymous

6/28/2025, 3:08:51 PM

No.105733260

>>105733432

>>105733136

> I wouldn't call it all that micro in ability.

What are the use cases? Reading fresh hallucinations?

Anonymous

6/28/2025, 3:33:13 PM

No.105733432

>>105733650

>>105733260

>Reading fresh hallucinations

It's a better translator than older seq2seq-style models like DeepL and Google Translate. It's the current SOTA for its size range, and it behaves much better than the other Gemma 3 models (though that's not hard, considering how much of a downgrade 3 was versus 2 in certain aspects).

You can continue to be a cynical little bitch but I'm really happy to have that sort of tool running locally.

Anonymous

6/28/2025, 3:35:27 PM

No.105733441

>>105733149

it was over before it even began

majority of locals are retards obsessed with textgen porn

of course there would be a ton of trannies here since text porn is not a male hobby to begin with

I present to you Mistral Small 3.2 from a single session of consensually fucking my wife.

It was as bloody as it gets in every single message. No matter how much you ban it, it doesn't have anything else to replace those tokens with.

MULTIPLE TIMES PER MESSAGE

THE MISTRAL SMALL SHILL(S) IS A/ARE RETARDED FUCKING INBRED MONGOLOID NIGGER(S)!

Fuck you very much.

>"Her fingers dug into his shoulders, nails biting into flesh."

>"Her nails scraped down his back, leaving furrows"

>"nails raking over his skin, leaving red welts."

>"nails raking over his skin"

>"Her nails scraped down his back"

>"Her nails raked down his back,"

>"Her nails raked down his back"

>"Her nails clawed at his back"

>"Her fingers clawed at his shoulders"

>"Her teeth clamped down on his lower lip, drawing blood,"

>"She bit down harder on his lip, tasting more blood,"

>"She bit down on his lower lip, hard enough to draw blood."

>"She bit down on his tongue, hard enough to draw blood,"

>"The taste of him was sharp, metallic from the blood where she’d bitten his lip earlier."

>"teeth clamped down on his lower lip, drawing blood"

>"biting just hard enough to draw blood."

>"hard enough to draw a bead of blood"

>", hard enough to draw blood,"

>", hard enough to draw blood"

>"hard enough to draw blood,"

>"hard enough to draw blood."

>"hard enough to draw blood"

>"her nails drawing blood"

>", drawing blood,"

>", drawing blood"

>"drawing blood,"

>"drawing blood"

>"draw blood."

>"draw blood"

>"drew blood."

>"drew blood"

>"bead of blood"

>"Her teeth sank into his lower lip, tasting copper."

>"The coppery taste filled her mouth."

>"She bit down harder on her lip, tasting copper."

>"She bit down hard, copper flooding her mouth as his lip split."

>"tasted copper"

>"tasting copper"

>"coppery taste"

>"metallic taste"

>"metallic from the blood"

>"tasting blood"

>"drop of blood"

>"biting her lip hard enough"

>"bit her lip hard enough"

>"bites her lip hard"

Anonymous