/ldg/ - Local Diffusion General

>>105761419 (OP)

>>>/vp/napt

is your neighbor

update your neighbor lists

>>105761336

noted kek

Anonymous

7/1/2025, 4:14:34 AM

No.105761438

[Report]

>yet another horrible autistic collage where the baker intentionally skips people

FUCK YOU

Anonymous

7/1/2025, 4:15:19 AM

No.105761446

[Report]

>>105761429

this is better.

Anonymous

7/1/2025, 4:15:57 AM

No.105761453

[Report]

blessed thread of shitfits

Anonymous

7/1/2025, 4:15:58 AM

No.105761454

[Report]

>>105761465

>posts one image per thread

>always makes it into the collage

kino

Anonymous

7/1/2025, 4:16:16 AM

No.105761457

[Report]

damned thread of foeboat

Anonymous

7/1/2025, 4:16:39 AM

No.105761463

[Report]

>>105762613

Anonymous

7/1/2025, 4:16:58 AM

No.105761465

[Report]

>>105761533

>>105761454

what a coincidence

The baker is literally a schizophrenic

Anonymous

7/1/2025, 4:17:23 AM

No.105761470

[Report]

imagine hating illustrious

imagine saltmineposting daily

imagine being this fucking unhinged\drunk

its not fucking worth it, it really just fucking isn't

Anonymous

7/1/2025, 4:18:29 AM

No.105761477

[Report]

holy seethe batman

Is Kontext capable of outpainting? Or is it only edits?

Anonymous

7/1/2025, 4:19:23 AM

No.105761488

[Report]

>>105761479

It's a direction just like anything else. It can zoom in and out.

Anonymous

7/1/2025, 4:19:51 AM

No.105761489

[Report]

Anonymous

7/1/2025, 4:19:55 AM

No.105761490

[Report]

>>105761479

it works like any other dit model for generating txt2img so maybe

Anonymous

7/1/2025, 4:20:14 AM

No.105761494

[Report]

>>105761429

LITTTLE SHIT!!!!!!

Anonymous

7/1/2025, 4:20:16 AM

No.105761495

[Report]

>>105761479

its mostly only editing. Like a local handy photoshop to make small adjustments.

Anonymous

7/1/2025, 4:20:30 AM

No.105761496

[Report]

>>105761430

Cool style anon

Anonymous

7/1/2025, 4:20:57 AM

No.105761499

[Report]

lol even ani doesn't like the spam

Anonymous

7/1/2025, 4:22:19 AM

No.105761512

[Report]

Anonymous

7/1/2025, 4:22:56 AM

No.105761517

[Report]

Is lumina2 anime good yet

Anonymous

7/1/2025, 4:24:52 AM

No.105761533

[Report]

Anonymous

7/1/2025, 4:25:22 AM

No.105761538

[Report]

Anonymous

7/1/2025, 4:28:58 AM

No.105761558

[Report]

Anonymous

7/1/2025, 4:29:15 AM

No.105761561

[Report]

>>105761575

the anime girl is sitting in a chair, watching TV in her living room. A window in the background shows a sunny beach.

kita aura

Anonymous

7/1/2025, 4:33:10 AM

No.105761575

[Report]

>>105761676

>>105761561

the anime girl is sitting in a chair, watching TV in her living room. She is wearing a white tshirt that says "LDG" in black text, blue jeans, and white sneakers. A window in the background shows a sunny beach.

peak comfy

Anonymous

7/1/2025, 4:36:51 AM

No.105761591

[Report]

Anonymous

7/1/2025, 4:37:49 AM

No.105761594

[Report]

>>105761429

So announcement #20 to leave "forever" held for like half an hour

Does anyone have any techniques to add a bit more polish to a sex scene?

I'm getting better at going through a set of positions, adding things like sweat or motion lines though the scene, changing the facial expressions, but what do you anons do to add that little bit of polish?

should I try out a few loras like -

https://civitai.com/models/1541642/implication-off-screen

and

https://civitai.com/models/797890/offscreen-sex-nai-vpred-or-pony-or-illustrious

into the mix? How do you guys do consistent camera zooms? any seldom used but good tags?

Anonymous

7/1/2025, 4:38:20 AM

No.105761598

[Report]

I don't wanna use flux or flux kontext because I know max versions exist and my results are sub par

Anonymous

7/1/2025, 4:39:00 AM

No.105761603

[Report]

>yes you can zoom out with kontext

>>105761595

sexo. SEX. SEX! SEX!!!!!!!!!!!

ROUWEI TESTING (https://civitai.com/models/950531?modelVersionId=1882934)

I can confirm that the text capabilities of RouWei are significantly better than noob. However, I'm doing something wrong and it's frying my images. anyone know what I need to change?

https://files.catbox.moe/2zelol.png

I haven't found any instructions or workflows online for using RouWei in comfy.

Anonymous

7/1/2025, 4:40:21 AM

No.105761611

[Report]

>>105761658

>>105761595

>add that little bit of polish?

use a cool traditional media style

Anonymous

7/1/2025, 4:40:50 AM

No.105761617

[Report]

>>105761658

>>105761595

ass press

wind

Anonymous

7/1/2025, 4:42:48 AM

No.105761630

[Report]

>>105761684

>>105761605

Is this a nsfw model or is WAI still the go-to goon checkpoint?

Anonymous

7/1/2025, 4:45:45 AM

No.105761646

[Report]

>>105761684

>>105761605

My first guess would be the prompt considering how precise the author seems to be about that. Looks neat and vpred, too. I'll download it and try it out thanks anon.

>conditioning zero out to the negatives

Is that the same as having an empty negative prompt?

Anonymous

7/1/2025, 4:46:08 AM

No.105761648

[Report]

Anonymous

7/1/2025, 4:46:37 AM

No.105761650

[Report]

Anonymous

7/1/2025, 4:48:40 AM

No.105761658

[Report]

>>105761611

I've been doing colored pencil, traditional media, but I should try the other mediums like paint

>>105761617

I like to mix in floating hair, bouncing breasts, motion lines, once the action starts

Can you do txt2img with Kontext, and if so is it any different/better at it than Flux Dev?

Anonymous

7/1/2025, 4:52:18 AM

No.105761676

[Report]

>>105761702

>>105761575

same prompt except rei as input:

>>105761630

>Is this a nsfw model

yeah,

https://files.catbox.moe/0e5ki8.png

if I can get this un-fried it may be worth some deeper testing for NSFW purposes

>WAI still the go-to goon checkpoint

That's ΣΙΗ_illu_noob_vpred. Wai is OK but is lacking in styles.

>>105761646

>My first guess would be the prompt

yeah, I think I need to install an extension that enables BREAK, but that doesn't explain how fried my outputs are.

>>conditioning zero out to the negatives

>Is that the same as having an empty negative prompt

I'm not sure, IIRC there was a difference in my testing

Anonymous

7/1/2025, 4:53:39 AM

No.105761685

[Report]

>>105761799

>>105761595

>any seldom used but good tags?

Bookmark this, it's very useful for finding tags:

https://danbooru.donmai.us/related_tag?commit=Search&search%5Bcategory%5D=General&search%5Border%5D=Cosine&search%5Bquery%5D=breasts

also i categorized 59,000 tags here:

https://github.com/rainlizard/ComfyUI-Raffle/blob/main/lists/categorized_tags.txt

but that's probably more useful for tools than for people
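For anyone who wants to script the related-tag lookup instead of bookmarking it, here is a minimal sketch that rebuilds the same URL for an arbitrary tag. It only reuses the query parameters visible in the link above; the function name and the example tag are illustrative, and nothing here is an official Danbooru client.

```python
from urllib.parse import urlencode

# Base endpoint taken from the link in the post above.
BASE = "https://danbooru.donmai.us/related_tag"


def related_tag_url(tag: str, category: str = "General", order: str = "Cosine") -> str:
    """Build a related-tag search URL using the same parameters as the posted link."""
    params = {
        "commit": "Search",
        "search[category]": category,
        "search[order]": order,
        "search[query]": tag,
    }
    # urlencode percent-encodes the square brackets, matching the posted URL.
    return f"{BASE}?{urlencode(params)}"


print(related_tag_url("motion_lines"))
```

Swapping the tag argument is the only thing that changes between lookups; the other parameters are left at the values from the posted link.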

Anonymous

7/1/2025, 4:53:48 AM

No.105761686

[Report]

>>105762723

Anonymous

7/1/2025, 4:55:58 AM

No.105761702

[Report]

>>105761676

diff image, better result:

>>105761684

>Wai is OK but is lacking in styles.

Maybe but I don't like Noob's retarded prompting. When I feel like writing an essay, I just stick with Chroma

Anonymous

7/1/2025, 5:02:11 AM

No.105761741

[Report]

>>105761750

>>105761713

ΣΙΗ_illu_noob_vpred is the easiest noob model I've ever used. illus prompts are completely portable to ΣΙΗ.

Anonymous

7/1/2025, 5:03:44 AM

No.105761750

[Report]

>>105761766

>>105761741

Can it eat illustrious loras? All my goonshit is for WAI.

Anonymous

7/1/2025, 5:04:02 AM

No.105761753

[Report]

>>105761944

Is it possible to change the size of Kontext output images? Or is it always going to be the same size as the input image?

Anonymous

7/1/2025, 5:04:06 AM

No.105761754

[Report]

>>105761713

if you ever throw one of the WAI example prompts into Noob you'll see you don't really /need/ a lot of tags to generate something good with it. Noobs outputs surpassed it desu.

Anonymous

7/1/2025, 5:04:08 AM

No.105761755

[Report]

>before getting into ai

>played video games, had hobbies

>spend 99.9% time in comfyui gen'ing porn now

>cant play games because gpu constantly in use

>no time for hobbies

>discord buddies think im dead

ai has ruined me.

Anonymous

7/1/2025, 5:05:59 AM

No.105761766

[Report]

>>105761750

I'm a proompter and don't use LORAs a lot, but illus LORAs frequently work on noob so you should test some and find out

Anonymous

7/1/2025, 5:06:00 AM

No.105761767

[Report]

>>105761774

fishin

Anonymous

7/1/2025, 5:07:02 AM

No.105761774

[Report]

>>105761767

specified clothes, now it's better

the green cartoon frog is on a fishing boat in the ocean. a cooler nearby is filled with beers. the frog is using a fishing rod and sitting on a chair. the frog is wearing a red tshirt and blue shorts.

Anonymous

7/1/2025, 5:08:12 AM

No.105761787

[Report]

>>105761765

>Can easily ask for girls phone numbers at social events.

>Talk and text to them.

>Realize they talk and text like literal shallow NPCs.

>Rather chat to LLMs instead.

It's over....

Anonymous

7/1/2025, 5:09:22 AM

No.105761793

[Report]

>>105761765

>no time for hobbies

AI is the hobby now

with a ksampler what would happen if the seed was randomized inbetween each step?

i have a theory that it might be good for upscaling

Anonymous

7/1/2025, 5:09:50 AM

No.105761799

[Report]

>>105761685

thanks for the info

Anonymous

7/1/2025, 5:10:09 AM

No.105761801

[Report]

>>105761828

>>105761671

I just tried giving it a totally black 1344x768 image and said "replace this blank image with a landscape painting" and it worked. That might as well be txt2img.

>>105761765

I think the gacha element of AI makes it worse in terms of it being addictive. Like one is trying to fine tune a slot-machine. I can only imagine the hell it could be if, say, a 30 sec video model is released and people try to constantly hammer away the tiny imperfections.

>>105761765

>tfw uninstalled most of my games to make room for models

Anonymous

7/1/2025, 5:11:14 AM

No.105761811

[Report]

>>105761890

>>105761605

try one of the following, i can't be arsed to open comfyui rn

>use model sampling discrete with vprediction sampling and zsnr enabled

>use the built-in checkpoint vae
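The two suggestions above can be sketched as a fragment of a ComfyUI API-format workflow, written here as a Python dict. The node ids, the checkpoint filename, and the sampler node id "9" are placeholders; the input names are written from memory of the stock nodes, so check them against your install before relying on them.

```python
import json

workflow_fragment = {
    # Load the checkpoint; outputs are (MODEL, CLIP, VAE) at indexes 0, 1, 2.
    "1": {
        "class_type": "CheckpointLoaderSimple",
        "inputs": {"ckpt_name": "your_vpred_checkpoint.safetensors"},  # placeholder name
    },
    # Patch the model to v-prediction sampling with zsnr enabled.
    "2": {
        "class_type": "ModelSamplingDiscrete",
        "inputs": {"model": ["1", 0], "sampling": "v_prediction", "zsnr": True},
    },
    # Decode with the VAE baked into the checkpoint (output index 2 of node "1").
    "3": {
        "class_type": "VAEDecode",
        "inputs": {"samples": ["9", 0], "vae": ["1", 2]},  # "9" = your sampler node
    },
}

print(json.dumps(workflow_fragment, indent=2))
```

The sampler should take its model input from node "2" rather than straight from the loader, otherwise the patch has no effect.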

Anonymous

7/1/2025, 5:11:50 AM

No.105761814

[Report]

>>105761798

setup 50x ksamplers, set them all to 1 step, then pass the output to the next ksampler with the different seed and post the final result

Anonymous

7/1/2025, 5:12:32 AM

No.105761817

[Report]

>>105761835

the green cartoon frog is wearing a NASA spacesuit with no helmet, and is on the surface of the moon. A cartoonish looking Earth is in the background. The frog is planting a flag with a similar looking frog on it that looks like his appearance.

Anonymous

7/1/2025, 5:14:38 AM

No.105761827

[Report]

>>105761809

I was only ever keeping up with one game (genshin), but i've fallen so far behind now that it feels impossible to get caught back up considering how much content they drop. i want to get back into it but then i remember all the shit i could be gen'ing or experimenting with. i just can't do both.

Anonymous

7/1/2025, 5:14:40 AM

No.105761828

[Report]

>>105761801

although the result is trash aesthetically, even more slopped than flux dev without loras. looks more like a render than a painting.

Anonymous

7/1/2025, 5:15:50 AM

No.105761833

[Report]

>>105761862

>>105761807

this. comfyui isn't exactly a good choice for just finishing something. I think workflow is a bad word to describe the graphs since you still have work to do most of the time. you can get it out but then you spend nine hours automating a niche gen into a convoluted spaghetti nightmare and have to change it for every particular solution to get something done

Anonymous

7/1/2025, 5:15:55 AM

No.105761835

[Report]

Anonymous

7/1/2025, 5:15:59 AM

No.105761836

[Report]

Anonymous

7/1/2025, 5:19:51 AM

No.105761854

[Report]

>>105761890

>>105761684

>ΣΙΗ_illu_noob_vpred

sorry, but what model is that? I don't understand the first three symbols and search didn't bring up anything.

Anonymous

7/1/2025, 5:21:26 AM

No.105761862

[Report]

>>105761798

you sample 1/steps amount of data points from different Gaussian distributions with a mean of 0 and 1 sd. nothing magical happens, you simply get a quite uninspiring mix of various distributions.

>>105761833

if you make it convoluted, it'll be like that.
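On the seed-per-step question, the point about Gaussian draws can be checked numerically. This is a toy sketch, not a sampler: it only compares noise drawn from one seeded generator against noise stitched together from a different seed per step, and it assumes the sampler injects fresh N(0, 1) noise at each step (deterministic samplers don't, so reseeding would change nothing there). Step count and sample size are arbitrary.

```python
import random
import statistics


def noise_one_seed(seed: int, steps: int, n: int) -> list:
    """Draw steps*n standard-normal samples from a single seeded generator."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(steps * n)]


def noise_seed_per_step(seeds, n: int) -> list:
    """Draw n standard-normal samples per step, reseeding for every step."""
    out = []
    for seed in seeds:
        rng = random.Random(seed)
        out.extend(rng.gauss(0.0, 1.0) for _ in range(n))
    return out


steps, n = 20, 5000
fixed = noise_one_seed(1234, steps, n)
mixed = noise_seed_per_step(range(steps), n)

for name, xs in (("one seed", fixed), ("seed per step", mixed)):
    print(name, "mean", round(statistics.fmean(xs), 3), "std", round(statistics.pstdev(xs), 3))
```

Both runs come out with mean near 0 and standard deviation near 1, which is the "nothing magical happens" result: the mix is statistically the same kind of noise.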

Anonymous

7/1/2025, 5:25:11 AM

No.105761885

[Report]

>>105761807

Imagine waiting hours for a 30 second gen only to have it go off the rails and fuck up in the last 10 seconds.

I don't look forward to that.

Anonymous

7/1/2025, 5:25:40 AM

No.105761887

[Report]

Anonymous

7/1/2025, 5:26:23 AM

No.105761890

[Report]

>>105762625

Anonymous

7/1/2025, 5:27:14 AM

No.105761896

[Report]

Anonymous

7/1/2025, 5:28:02 AM

No.105761899

[Report]

Anonymous

7/1/2025, 5:28:17 AM

No.105761901

[Report]

>>105761953

repost. I have a sfw gen in the queue to post shortly.

>>105760700 (You)

>>105760700 (You)

anyone? Like way too much noise left in the image.

Anyway, I loaded the basic wf and fixed it back up, it's working fine...

....I must have changed something, any idea what in the Kontext wf would do that?

Anonymous

7/1/2025, 5:34:43 AM

No.105761938

[Report]

Man kontext is some weird magic. Like sometimes you can get it to generate similar faces.

I haven't seen a discussion of not-quite-a-likeness as a feature. But it's got me excited. You can generate a SIMILAR person.

Anonymous

7/1/2025, 5:35:14 AM

No.105761941

[Report]

>>105761949

>50/50 NoobAI vPred/Rouwei vPred

Anonymous

7/1/2025, 5:35:33 AM

No.105761944

[Report]

>>105761713

chroma? that rarely needs an essay, but you can prompt fairly extensively

>>105761753

most workflows I've seen explicitly pick the size

>>105761671

> is it any different/better at it than Flux Dev?

to me it seems on average no but I haven't really tried it much yet. it might have its niches. it's also annoyingly censored I think.

Anonymous

7/1/2025, 5:35:56 AM

No.105761947

[Report]

*sip*

Anonymous

7/1/2025, 5:36:06 AM

No.105761949

[Report]

>>105761973

>>105761941

>NoobAI vPred/Rouwei vPred

I lost track because I kind of switched to Illustrious. What even is vpred? Black backgrounds?

Anonymous

7/1/2025, 5:37:06 AM

No.105761953

[Report]

>>105761901

I just remembered why.

Teacache is not fully compatible. It can work.

Anonymous

7/1/2025, 5:41:09 AM

No.105761973

[Report]

>>105761999

>>105761949

Better colors. Something like that

I'm the guy that had an issue with flashing looped videos and apparently it's this thing here. Even with the 'fun' model loaded it seems better to have this off.

No idea what it does apart from make the loop freak out in the last few frames. It still happens with one frame, slightly, but it's better than a few frames of blindness.

Anonymous

7/1/2025, 5:45:40 AM

No.105761995

[Report]

>>105762028

the anime girl waves hello and smiles.

Anonymous

7/1/2025, 5:45:45 AM

No.105761996

[Report]

Anonymous

7/1/2025, 5:46:17 AM

No.105761999

[Report]

>>105762031

>>105761973

"better colors" is so vague. There's a thousand different techniques you can use to improve colors aside from using an entirely different model.

Anonymous

7/1/2025, 5:49:48 AM

No.105762021

[Report]

>LORA on civit.ai

>none of the replies in the image gallery are using it

Anonymous

7/1/2025, 5:50:56 AM

No.105762028

[Report]

>>105761995

now with no floating drink

Anonymous

7/1/2025, 5:51:21 AM

No.105762031

[Report]

>>105761999

More accurate colors compared to the greyness of regular XL

Anonymous

7/1/2025, 5:53:15 AM

No.105762041

[Report]

Change the text "DOOM" to "SAAR". Replace the man in green armor with an Indian man holding a sign saying "REDEEM".

Anonymous

7/1/2025, 5:53:33 AM

No.105762042

[Report]

>>105762357

Anonymous

7/1/2025, 5:53:33 AM

No.105762043

[Report]

Anonymous

7/1/2025, 5:54:47 AM

No.105762054

[Report]

>>105762108

Anonymous

7/1/2025, 5:55:24 AM

No.105762055

[Report]

>>105762089

Trying to into video, the WAN guide on /gif/ I can figure out most of but am using Linux not windows so cant run the bat file so I've no idea what plugins I'm missing or how to set it up.

Does a more general/linux specific guide for WAN exist?

Anonymous

7/1/2025, 5:57:53 AM

No.105762081

[Report]

Anonymous

7/1/2025, 5:58:33 AM

No.105762086

[Report]

>>105763129

>LORA on civit

>completely redundant to using a tag

Anonymous

7/1/2025, 5:59:07 AM

No.105762089

[Report]

>>105762055

it works basically the same way, pull via git, install dependencies and stuff via [uv] pip, drop the models in the correct folders, install the rest when comfyui is running via the manager?

stability matrix also works on linux if you want to make the install of comfyui and other uis easier
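The manual route described above (git pull, pip install, run) can be sketched as a dry-run script that only prints the commands. Assumptions: `python3` and `git` are on PATH, the venv lives in a hypothetical `.venv` folder inside the checkout, and the default `requirements.txt` pulls a torch build that suits your GPU (it may not, in which case install torch separately first). Models and custom nodes still have to be dropped into place by hand or via the manager.

```python
import shlex

COMFY_REPO = "https://github.com/comfyanonymous/ComfyUI"


def setup_commands(install_dir: str = "ComfyUI") -> list:
    """Return the shell commands for a basic Linux install, in order."""
    venv_python = f"{install_dir}/.venv/bin/python"  # hypothetical venv location
    return [
        ["git", "clone", COMFY_REPO, install_dir],
        ["python3", "-m", "venv", f"{install_dir}/.venv"],
        [venv_python, "-m", "pip", "install", "-r", f"{install_dir}/requirements.txt"],
        [venv_python, f"{install_dir}/main.py"],
    ]


# Dry run: print the commands instead of executing them.
for cmd in setup_commands():
    print(shlex.join(cmd))
```

To actually run the steps, pass each list to `subprocess.run(cmd, check=True)` instead of printing it.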

Anonymous

7/1/2025, 6:02:09 AM

No.105762102

[Report]

>new snake oil drops

>no comfy node

Anonymous

7/1/2025, 6:03:11 AM

No.105762108

[Report]

>>105762054

i had to try the meme on kontext

Anonymous

7/1/2025, 6:08:24 AM

No.105762147

[Report]

Found this image online in the wild. Kontext API version is absolutely wicked bros. Shame about local

Anonymous

7/1/2025, 6:22:17 AM

No.105762212

[Report]

Anonymous

7/1/2025, 6:23:09 AM

No.105762216

[Report]

Anonymous

7/1/2025, 6:30:14 AM

No.105762251

[Report]

Anonymous

7/1/2025, 6:35:28 AM

No.105762281

[Report]

her skin has suffered enough

Anonymous

7/1/2025, 6:55:59 AM

No.105762391

[Report]

>>105762357

Pretty damn smooth

Anonymous

7/1/2025, 7:07:02 AM

No.105762459

[Report]

>>105762537

its like you guys can't go an entire hour without seething

comfyui tells me "please install xformers". I was on the nightly pytorch, cu128 & couldn't get it working. so I downgraded to the latest stable pytorch 2.7.1, still no cigar.

"pytorch version: 2.7.1+cu128

WARNING[XFORMERS]: Need to compile C++ extensions to use all xFormers features.

Please install xformers properly" bla

is there a way to make this work? does xformers need pytorch 2.7.0?
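Before reinstalling anything, it can help to see exactly which versions are in the environment, since prebuilt xformers wheels are usually tied to one specific torch release. A minimal sketch using only the standard library; the package list is an assumption (the PyPI names torch, xformers, sageattention), and it should be run with the same Python that launches ComfyUI.

```python
from importlib import metadata


def installed_version(package: str):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(packages=("torch", "xformers", "sageattention")) -> dict:
    """Map each package name to its installed version (or None)."""
    return {name: installed_version(name) for name in packages}


for name, version in report().items():
    print(f"{name}: {version or 'not installed'}")
```

If the printed torch version is not the one your xformers build was made for, that mismatch is a likely suspect for the "Need to compile C++ extensions" warning.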

Anonymous

7/1/2025, 7:11:27 AM

No.105762497

[Report]

so is detail calibrated just shit or what?

Anonymous

7/1/2025, 7:15:39 AM

No.105762524

[Report]

>>105762634

>>105762466

I used this guy's .bat because I wanted to install sage attention and the previous dozen attempts didn't work.

https://www.reddit.com/r/StableDiffusion/comments/1jdfs6e/automatic_installation_of_pytorch_28_nightly/

But I had to change line 78 to ..\python_embeded\python.exe -s -m pip install --pre torch==2.8.0.dev20250619+cu128 torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu%CLEAN_CUDA%

and line 93 to echo ..\python_embeded\python.exe -s -m pip install --upgrade --pre torch==2.8.0.dev20250619+cu128 torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/nightly/cu%CLEAN_CUDA% -r ../ComfyUI/requirements.txt pygit2

because the active nightly of pytorch was broken.

MASSIVE fucking headache to get this stuff working.

Anonymous

7/1/2025, 7:16:49 AM

No.105762537

[Report]

>>105762459

shut the fuck up

So Kontext is nice. But the details get messed up for anime style. So close to making LoRas redundant.

Anonymous

7/1/2025, 7:22:32 AM

No.105762579

[Report]

>>105762556

You can use the version with Enhanced Style Transfer right from ComfyUI! Here's a workflow to get started:

https://docs.comfy.org/tutorials/api-nodes/black-forest-labs/flux-1-kontext

Anonymous

7/1/2025, 7:23:20 AM

No.105762585

[Report]

Anonymous

7/1/2025, 7:28:00 AM

No.105762613

[Report]

>>105761463

I too downloaded the graphis torrent for training data, before I realized they badly photoshop their eyes bigger in 50% of images and put it in the trash. What an absolute waste.

Anonymous

7/1/2025, 7:28:09 AM

No.105762614

[Report]

>>105762556

too much <safety> to be close

Anonymous

7/1/2025, 7:29:55 AM

No.105762625

[Report]

>>105761890

Got a link for the merge?

Anonymous

7/1/2025, 7:31:11 AM

No.105762634

[Report]

>>105762524

thank you very much. headache indeed. I don't even remember what I need xformers for anymore, my brain is all mush. thanks again, copied and saved!

Anonymous

7/1/2025, 7:33:06 AM

No.105762650

[Report]

>>105761684

Hmm, try res_ samplers (I used res_2M_ode) and sgm_uniform sched. I've been hovering around 3-3.5 CFG and 20-25 steps and it seems less baked.

Anonymous

7/1/2025, 7:34:57 AM

No.105762666

[Report]

Anonymous

7/1/2025, 7:35:31 AM

No.105762669

[Report]

>>105761419 (OP)

>>Cook

>training resources

Don't models "bake" not "cook"?

Anonymous

7/1/2025, 7:41:01 AM

No.105762702

[Report]

Anonymous

7/1/2025, 7:43:55 AM

No.105762719

[Report]

>race race race

>race to the bump limit!

garbage

Anonymous

7/1/2025, 7:44:32 AM

No.105762723

[Report]

Anonymous

7/1/2025, 7:45:35 AM

No.105762731

[Report]

>>105761809

Same bro, every month I see what's remaining in my Steam folder on my 1TB SSD to be uninstalled

Anonymous

7/1/2025, 7:48:21 AM

No.105762748

[Report]

>>105762801

>>105762466

>xformers

use sage attention 2, nigga

xformers is only good for sd1.5

>WARNING[XFORMERS]: Need to compile C++ extensions to use all xFormers features.

or install 0.0.30 it doesn't have this warning

Anonymous

7/1/2025, 7:56:31 AM

No.105762800

[Report]

>>105758343

reminds me of tf2 insta-gibbing, i wonder if that's in the training data

Anonymous

7/1/2025, 7:56:34 AM

No.105762801

[Report]

>>105762748

I am running sage attention (wan rentry setup from op basically) but something wants xformers. I was messing with general object detection stuff. mesh graphormer, segment anything/grounding dino, florence2, that shit. & thanks for tip

Anonymous

7/1/2025, 8:00:53 AM

No.105762824

[Report]

>>105762948

does nunchaku give you a different image each time? Same settings & seed

>nvidia, github, and runpod supported the recent comfy event

dang

Anonymous

7/1/2025, 8:05:47 AM

No.105762857

[Report]

save us, ani..

Anonymous

7/1/2025, 8:05:52 AM

No.105762860

[Report]

>>105762948

>>105762843

that's the power of selling out to saas. comfyui is now the world's most powerful API model provider

Anonymous

7/1/2025, 8:12:59 AM

No.105762906

[Report]

>>105762972

>>105762824

no should be reproducible

>>105762860

he's simply the only one who got and continuously had his shit together.

>>105762948

>he's simply the only one who got and continuously had his shit together.

it's more like he had a smart idea to make a software that is easy to make custom nodes on, that way it means people do the work for him with custom nodes, Kijai is doing it for FREE

Anonymous

7/1/2025, 8:21:58 AM

No.105762963

[Report]

>>105762969

>>105762961

>Comfy Photographing Kijai

Anonymous

7/1/2025, 8:22:46 AM

No.105762969

[Report]

Anonymous

7/1/2025, 8:23:08 AM

No.105762971

[Report]

>>105762843

nobody tell them

Anonymous

7/1/2025, 8:23:16 AM

No.105762972

[Report]

>>105762906

me after eating entire pitza

Anonymous

7/1/2025, 8:24:46 AM

No.105762986

[Report]

>>105763066

>>105762948

>no should be reproducible

which version are you using, 0.3.1 or 0.3.2.dev?

Anonymous

7/1/2025, 8:25:58 AM

No.105762990

[Report]

>>105763038

>>105762961

what's this about? did kijai upset someone?

>>105761605

I don't know what's wrong with your workflow and it produced black images for me so I didn't try to debug, but you can check mine. It's heavily recommended that you put style and quality tags inside an isolated CLIP chunk.

My current impression of RouWei is that it has better prompt adherence than IL, Noob, and the merges I've tried; it might have wider artist support (or at least "different"); it seems more inflexible with tag weights on artist styles; and it has a strong bias for a very "RouWei" look that I'm not a fan of (orange tint, depth of field, and other qualities I can't describe off the cuff).

Anonymous

7/1/2025, 8:33:23 AM

No.105763038

[Report]

>>105762990

>they called him all sort of names, but never a liar

Anonymous

7/1/2025, 8:39:02 AM

No.105763066

[Report]

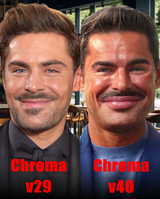

>>105762986

0.3.2. ok I get a pretty large variance now. but the left one was done with pytorch 2.8.n & --fast, the right one with 2.7.1 & w/o --fast

>>105762961

yeah (and we pay the price for that wild west ecosystem every day)

Anonymous

7/1/2025, 8:41:47 AM

No.105763089

[Report]

>>105763101

the evaluation system is so dumb on civitai, everything has 100% positive reviews because people review only if they post something

Anonymous

7/1/2025, 8:44:31 AM

No.105763101

[Report]

>>105763089

I agree. You use this stupid binary system for social media slop, not to review something. There should be a scoring system for various criteria on a 1-5 scale.

Anonymous

7/1/2025, 8:46:34 AM

No.105763120

[Report]

>>105760637

>>105761061

This would only bug me if Max used as much VRAM as Dev, but I have a feeling it's probably closer to 80GB.

Anonymous

7/1/2025, 8:48:14 AM

No.105763129

[Report]

>>105762086

>using the activation words without the actual LoRa gives better results

Anonymous

7/1/2025, 8:51:01 AM

No.105763143

[Report]

>>105762999

>strong bias for a very "RouWei" look

indeed. im not sure if i like it mixed with noob, maybe for coom. dunno why the second pass brings the style out like this

Anonymous

7/1/2025, 8:52:26 AM

No.105763150

[Report]

Anonymous

7/1/2025, 8:52:40 AM

No.105763153

[Report]

>>105763203

Anonymous

7/1/2025, 8:54:56 AM

No.105763171

[Report]

>>105763186

>>105762999

>strong bias for a very "RouWei" look

indeed. im not sure if i like it mixed with noob, maybe for coom.

Anonymous

7/1/2025, 8:57:18 AM

No.105763186

[Report]

>>105763171

could be fail merge

Anonymous

7/1/2025, 8:58:53 AM

No.105763203

[Report]

Anonymous

7/1/2025, 9:03:45 AM

No.105763227

[Report]

>>105761605

res_multistep

sgm_uniform

20 steps

CFG 3

Also idk what VAE you are using because you haven't hooked up the vae included in the model.

And simple scheduler removes the blue tint altogether.
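The settings in this post map onto a stock KSampler node roughly as below, shown as a ComfyUI API-format dict. The node ids in the links, the seed, and denoise 1.0 are placeholders that are not from the post; the input names are written from memory of the stock node, so verify them against your install.

```python
ksampler_node = {
    "class_type": "KSampler",
    "inputs": {
        "seed": 0,                  # placeholder
        "steps": 20,
        "cfg": 3.0,
        "sampler_name": "res_multistep",
        "scheduler": "sgm_uniform",
        "denoise": 1.0,             # placeholder: full txt2img pass
        "model": ["2", 0],          # hypothetical link to the model node
        "positive": ["5", 0],       # hypothetical link to the positive prompt
        "negative": ["6", 0],       # hypothetical link to the negative prompt
        "latent_image": ["7", 0],   # hypothetical link to the empty latent
    },
}


def with_scheduler(node: dict, scheduler: str) -> dict:
    """Return a copy of the node with only the scheduler swapped."""
    return {**node, "inputs": {**node["inputs"], "scheduler": scheduler}}


# The post's last remark: try the simple scheduler if the blue tint shows up.
simple_variant = with_scheduler(ksampler_node, "simple")
print(simple_variant["inputs"]["scheduler"])
```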

Anonymous

7/1/2025, 9:08:36 AM

No.105763258

[Report]

Anonymous

7/1/2025, 9:09:27 AM

No.105763261

[Report]

>>105763239

No nevermind, this was a fluke.

Anonymous

7/1/2025, 9:09:36 AM

No.105763264

[Report]

>>105763239

Yeah, on this topic, I've noticed that karras with vpred models will have serious malfunctions unless you use custom sigmas, so I stick to simple scheduler.
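For context on what "custom sigmas" would be replacing, here is a sketch of the Karras noise schedule formula (rho = 7 is the commonly used default). The sigma_min and sigma_max values are illustrative assumptions, not read from any particular checkpoint, and this does not reproduce ComfyUI's exact implementation. The second call uses a much larger sigma_max purely to show how strongly the schedule depends on that endpoint; whether that is what breaks vpred models is not established in the thread.

```python
def karras_sigmas(n: int, sigma_min: float, sigma_max: float, rho: float = 7.0) -> list:
    """Return n noise levels from sigma_max down to sigma_min (Karras spacing)."""
    if n < 2:
        raise ValueError("need at least two sigmas")
    hi = sigma_max ** (1.0 / rho)
    lo = sigma_min ** (1.0 / rho)
    # Interpolate linearly in sigma^(1/rho) space, then raise back to the rho power.
    return [(hi + i / (n - 1) * (lo - hi)) ** rho for i in range(n)]


typical = karras_sigmas(20, sigma_min=0.03, sigma_max=14.6)   # illustrative range
extreme = karras_sigmas(20, sigma_min=0.03, sigma_max=4500.0)  # illustrative large endpoint

print([round(s, 2) for s in typical[:5]])
print([round(s, 2) for s in extreme[:5]])
```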

Anonymous

7/1/2025, 9:11:06 AM

No.105763273

[Report]

Anonymous

7/1/2025, 9:12:09 AM

No.105763280

[Report]

Kino soul general

Anonymous

7/1/2025, 9:14:09 AM

No.105763294

[Report]

Anonymous

7/1/2025, 9:15:28 AM

No.105763304

[Report]

>>105763594

Anonymous

7/1/2025, 9:43:23 AM

No.105763472

[Report]

>>105763572

What the fuck is with the creepshot/pov vignette that RouWei does? Also it gave me several outputs with blurred pussy and black bar censors lol. This model is cooked.

Anonymous

7/1/2025, 9:45:13 AM

No.105763481

[Report]

Anonymous

7/1/2025, 9:49:17 AM

No.105763498

[Report]

Anonymous

7/1/2025, 9:53:29 AM

No.105763522

[Report]

Anonymous

7/1/2025, 10:00:26 AM

No.105763569

[Report]

Anonymous

7/1/2025, 10:00:53 AM

No.105763572

[Report]

>>105763472

Holy shit, my brain is so accustomed to censors I forgot that more than half of my booru favorites have it. That must be a nightmare for datasets.

Anonymous

7/1/2025, 10:03:05 AM

No.105763583

[Report]

>>105766020

Anonymous

7/1/2025, 10:04:36 AM

No.105763594

[Report]

>>105763639

Anonymous

7/1/2025, 10:06:01 AM

No.105763601

[Report]

Is there a magic trick to fix Comfy when it randomly decides to hang and not display random nodes, without just restarting it completely? The program isn't completely crashed, it still processes the queue in the background but I can't interface.

Anonymous

7/1/2025, 10:07:52 AM

No.105763616

[Report]

>>105763636

>>105763610

refresh the page?

Anonymous

7/1/2025, 10:08:21 AM

No.105763618

[Report]

>>105763636

>>105763610

whens the last time you updated, boss

Not that most of you care, but:

lodestones

Upload chroma-unlocked-v41-detail-calibrated.safetensors with huggingface_hub

Anonymous

7/1/2025, 10:09:59 AM

No.105763632

[Report]

>>105763619

>Not that most of you care

yep, I really don't care, Kontext Dev is my new friend

Anonymous

7/1/2025, 10:10:19 AM

No.105763636

[Report]

>>105763679

>>105763616

Closing the entire thing sort of works but I have to wait for my prompt to finish to avoid other problems. But I'm mistaken, it's not a random glitch, my workflow consistently implodes after a single prompt.

>>105763618

This started after I updated 2 days ago, never happened before that.

Anonymous

7/1/2025, 10:11:16 AM

No.105763639

[Report]

>>105763693

>>105763594

there's some artist with this style just cant remember who

>>105763619

gguf waiting room

>>105763610

the interface can be a bit glitchy but I've never had something like that happen. (using comfy since 2023)

>>105763619

this is a very nice gen anon (goes for the others you posted as well, good shit)

Anonymous

7/1/2025, 10:12:27 AM

No.105763648

[Report]

>>105763693

>>105763619

>>105763640

>this is a very nice gen anon (goes for the others you posted as well, good shit)

Anonymous

7/1/2025, 10:13:29 AM

No.105763657

[Report]

good gens ITT

Anonymous

7/1/2025, 10:17:13 AM

No.105763677

[Report]

Anonymous

7/1/2025, 10:17:49 AM

No.105763679

[Report]

>>105763974

>>105763636

Ok if I press it once, close the tab, and reload it, I can then keep pressing the queue button until the first one finishes, at which point it breaks again.

Anonymous

7/1/2025, 10:20:48 AM

No.105763693

[Report]

>>105763755

Anonymous

7/1/2025, 10:31:24 AM

No.105763754

[Report]

Anonymous

7/1/2025, 10:31:30 AM

No.105763755

[Report]

>>105763876

>>105763640

Thanks man.

>>105763693

I'm just posting gens because I think Chroma's neat. I don't care about the drama surrounding it, I just like that I can get output I like without using LoRAs. But perhaps my use case is a bit specific since the styles I like are replicated pretty well and I don't really gen photo realistic stuff.

Anonymous

7/1/2025, 10:34:33 AM

No.105763776

[Report]

Any vace chads here know the best wan lora slop combination to get my gen to match my input image? It always changes the faces. I have;

>causvid

>accvid

>self forcing (kinda keeps the input image but moves poorly)

>lightx2v (changes the input image completely into flux face)

The closest I've gotten is combining causvid and accvid

Anonymous

7/1/2025, 10:37:29 AM

No.105763792

[Report]

Anonymous

7/1/2025, 10:41:00 AM

No.105763816

[Report]

Is the Mayli anon back?

did he finish sorting his folder?

Anonymous

7/1/2025, 10:41:20 AM

No.105763818

[Report]

To the anons that offered advice regarding "dictionary state" errors on kontext yesterday, it turns out my env was fucked for Kontext (it works fine for everything else though???) so i made a fresh env and 2nd install of comfy and the default comfyui workflow worked.

>>105763755

you replied to the same anon lol. yeah I understand, chroma has a way with shapes and forms and certain styles that's just beautiful, it just flows. and the upscales almost always come out really nice as well. not sure if you know this dude, pretty dope stuff

https://civitai.com/user/TijuanaSlumlord

and the drama thing, w/e. it's just one nutjob whose horizon doesn't seem to reach past "asian, feet"

Anonymous

7/1/2025, 10:51:40 AM

No.105763890

[Report]

Anonymous

7/1/2025, 10:55:55 AM

No.105763911

[Report]

>>105764006

>>105763876

What settings do you use for chroma upscale?

Anonymous

7/1/2025, 11:06:04 AM

No.105763974

[Report]

>>105764251

>>105763679

Looks like one other guy has reported the same issue, and somehow it's apparently Flux+Firefox specific, which is my config as well. I tried an older Illustrious workflow and no issues.

https://github.com/comfyanonymous/ComfyUI/issues/4235

Anonymous

7/1/2025, 11:09:13 AM

No.105763992

>>105764020

Anonymous

7/1/2025, 11:11:18 AM

No.105764004

>>105761992

Last time I had this problem it was bc a few nodes were fucking everything up, so try to see which part of your workflow is doing that, then which nodes

>>105761992

Are you looping with the fun model instead of the base one or vace?

Anonymous

7/1/2025, 11:12:06 AM

No.105764006

>>105763911

like this but it's always WIP. between 0.3 and 0.4 denoise, steps 10-14, bit of noise manipulation via detail daemon, either x1.5 or x2 size. some flux loras might work ok for the initial gens but I found that removing them or lowering the strength for the upscale seemed to yield better results.

sampler/scheduler, I need to run various XY plots to figure that out - not done it yet.

Anonymous

7/1/2025, 11:14:45 AM

No.105764020

>>105764071

Anonymous

7/1/2025, 11:16:59 AM

No.105764031

this "load image from outputs" node is a good idea but it's slow as shit

Anonymous

7/1/2025, 11:19:07 AM

No.105764040

>>105764787

You can get rid of the manlet effect by decreasing the FluxGuidance value

Anonymous

7/1/2025, 11:19:53 AM

No.105764048

>>105764051

let's fucking goooooo (I'm gonna try to compile it)

Anonymous

7/1/2025, 11:23:42 AM

No.105764071

>>105764020

could be sweat

3060

12gb

16ram

do I have any hope of genning decent i2vids?

I don't normally use diffusion models, but I had a dream about a new type of diffusion model that could generate perfect text.

It generated long and detailed texts by segmenting the image and separately generating each letter one after another, akin to inpainting each letter.

Now I'm curious if there's any model that actually works like this.

Anonymous

7/1/2025, 11:39:30 AM

No.105764168

>>105764108

It will either take forever or OOM

>>105764061

Ok, after some tests, I got a 13.8% speed improvement overall (RTX 3090), I'll take it, the quality is the exact same too

>>105764167

Why is AI so shit at text anyway?

Anonymous

7/1/2025, 11:41:34 AM

No.105764189

>>105764770

Sometimes kontext has visible progress in the vae, then it just undoes everything and wastes the rest of the steps changing nothing.

Anonymous

7/1/2025, 11:44:38 AM

No.105764211

>>105764051

>>105764061

>>105764170

>the chinks didn't betray us after all

I NEVER DOUBTED THEM

Anonymous

7/1/2025, 11:45:18 AM

No.105764217

>>105764233

>>105764170

Happy for you anon, my gcc is too new to compile it and i have to fuck around with other stuff first before trying a workaround.

I only noticed the release as i am just getting around to setting up sageattn on a new install.

Anonymous

7/1/2025, 11:45:57 AM

No.105764221

>>105764051

Cool, now I'm waiting for SA3 (unless it only got its speed improvement from the 5090 in which case I won't give a damn lol)

>>105764185

You have to use a good LLM as the text encoder for it to do text properly.

T5 and CLIP are neither good, nor LLMs.

>>105764217

>Happy for you anon, my gcc is too new to compile it and i have to fuck around with other stuff first before trying a workaround.

lucky for you KJ God saved the day and added some 2.2.0 wheels here

https://huggingface.co/Kijai/PrecompiledWheels/tree/main

Anonymous

7/1/2025, 11:47:54 AM

No.105764235

>>105764222

The downside with this is it significantly increases VRAM requirements so it's usually an API model thing. I know Lumina uses Gemma but it still fails at text most of the time, though its understanding of the prompt is significantly enhanced by using Gemma as the text encoder instead of clip/t5.

Anonymous

7/1/2025, 11:48:06 AM

No.105764238

>>105764167

>I had a dream about a new type of diffusion model that could generate perfect text.

4o imagegen is really close to that

Anonymous

7/1/2025, 11:49:28 AM

No.105764249

>>105764222

No, I mean drawing the actual letters, not text comprehension.

Anonymous

7/1/2025, 11:49:56 AM

No.105764251

>>105763974

Ok I debugged it apparently, the command window didn't indicate any error but deleting the U-NAI Get Text node (which wasn't essential but useful for saving the chosen wildcard) fixes it.

Anonymous

7/1/2025, 11:52:43 AM

No.105764273

>>105764233

Thanks for that anon, i'm a bit dim but i'll take a look at it and figure out how to use it to my advantage somehow.

Anonymous

7/1/2025, 11:59:48 AM

No.105764325

>>105764493

>>105764061

For those who said the SageAttention guys would gatekeep their code, APOLOGIZE

Anonymous

7/1/2025, 12:02:33 PM

No.105764345

>>105764401

he can't get away with this!

Anonymous

7/1/2025, 12:04:08 PM

No.105764358

>>105764366

>>105764061

Is this good only for Wan and kontext?

Anonymous

7/1/2025, 12:04:56 PM

No.105764364

>>105763876

Damn, sorry. Didn't know that dude but I'll check him out. I see he's futzing around with LoRAs, which I never really did with Chroma since the results haven't been too great for me. Thanks man.

Anonymous

7/1/2025, 12:05:08 PM

No.105764366

>>105764410

>>105764358

Sage works on everything

Anonymous

7/1/2025, 12:06:13 PM

No.105764378

>>105767052

Anonymous

7/1/2025, 12:07:23 PM

No.105764386

>>105765098

I can't get her to heil, but that's okay

Anonymous

7/1/2025, 12:09:50 PM

No.105764401

>>105764366

So I can just directly git clone it and comfy won't explode when doing Chroma?

Anonymous

7/1/2025, 12:12:10 PM

No.105764415

Anonymous

7/1/2025, 12:16:27 PM

No.105764447

Anonymous

7/1/2025, 12:17:00 PM

No.105764451

>>105764410

you have to install the wheels, use kijai's one

>>105764233

Anonymous

7/1/2025, 12:24:03 PM

No.105764493

>>105764325

is it possible, nay probable, that this is in direct response to that very backlash?

So adetailer detects the faces and hands and makes them better quality using a custom model and the trained LoRa?

Is there any way I can also upscale the rest of the image? Clothes look low quality compared with the adetailed faces.

Normal Upscaling models don't make the cut

Anonymous

7/1/2025, 12:25:03 PM

No.105764504

>>105764498

Upscale first, and run detailer on the finished image.

Anonymous

7/1/2025, 12:25:31 PM

No.105764506

>>105764051

>>105764061

Less goooooooooooooo

Anonymous

7/1/2025, 12:28:01 PM

No.105764522

>>105764061

God bless the chinks

Anonymous

7/1/2025, 12:29:31 PM

No.105764535

Anonymous

7/1/2025, 12:30:35 PM

No.105764540

>>105764498

>So adetailer detects the faces and hands and makes them better quality using a custom model and the trained LoRa?

No, the only ADetailer-specific models are the detector models. It'll sample using whatever model you give it, usually the same as the model you used for your base gen.

>Is there any way I can also upscale the rest of the image? Clothes look low quality compared with the adetailed faces.

Hiresfix (before ADetail), or detail the clothing with manual masking. See the "Hiresfix", "Face and hand detailing", and "Inpainting" sections of the guide:

https://rentry.org/comfyui_guide_1girl

Anonymous

7/1/2025, 12:37:07 PM

No.105764582

>>105764595

>>105764051

>>105764061

if you want to see an improvement you have to upgrade cuda to 12.8 or more

Anonymous

7/1/2025, 12:39:41 PM

No.105764595

>>105764582

you'll get more improvement if your gpu is a sm89 (4090 or more)

https://github.com/thu-ml/SageAttention/pull/196/files

Anonymous

7/1/2025, 12:39:49 PM

No.105764597

>>105764640

>>105764233

this one isn't compiled with cuda?

https://github.com/woct0rdho/SageAttention/releases

does have wheels with cuda, but seems to say it only improves speed on 40XX and 50XX?

Anonymous

7/1/2025, 12:46:42 PM

No.105764640

>>105764912

>>105764597

>but seems to say it only improves speed on 40XX and 50XX?

there seem to be 2 optimisations there, and the 40xx and 50xx will get both of them

Anonymous

7/1/2025, 12:49:18 PM

No.105764658

>>105764667

Soon wansisters...

https://github.com/Yaofang-Liu/Pusa-VidGen

>Extended video generation

>Frame interpolation

>Video transitions

>Seamless looping

https://github.com/mit-han-lab/radial-attention

>4× longer videos

>3.7× speedup

Anonymous

7/1/2025, 12:56:26 PM

No.105764703

Anonymous

7/1/2025, 12:56:37 PM

No.105764704

>>105764761

Can I leave the negative prompt in NAG empty or do I have to enter something?

Anonymous

7/1/2025, 1:03:16 PM

No.105764761

>>105764704

you can leave it empty, it works like cfg

Anonymous

7/1/2025, 1:04:16 PM

No.105764770

>>105764189

>Sometimes kontext has visible progress in the vae, then it just undoes everything and wastes the rest of the steps changing nothing.

that's because the filter has been triggered during the process, can't wait for someone to do some abliteration and uncuck this shit

https://huggingface.co/blog/mlabonne/abliteration

Anonymous

7/1/2025, 1:04:49 PM

No.105764776

>install cutting edge acceleration tech from bright chinese minds (actually got it running lol, thanks Kijai!!)

>back to genning SDXL smut

Anonymous

7/1/2025, 1:06:49 PM

No.105764787

>>105764040

but they got extra arms and shit

Anonymous

7/1/2025, 1:07:50 PM

No.105764791

Anonymous

7/1/2025, 1:08:24 PM

No.105764796

Anonymous

7/1/2025, 1:09:02 PM

No.105764798

>>105764108

check out framepack studio and wan2gp

Anonymous

7/1/2025, 1:10:05 PM

No.105764803

>>105764876

>>105764233

Does this autoinstall into wherever it needs to be if I just CD into comfy root?

Anonymous

7/1/2025, 1:10:41 PM

No.105764810

do you need to upgrade triton for sage_attn 2.2?

I'm still at v3.0.0

Anonymous

7/1/2025, 1:13:43 PM

No.105764831

>>105764876

has anybody tried new sage attention?

Anonymous

7/1/2025, 1:21:36 PM

No.105764876

>>105764803

[..]\python_embeded>python.exe -m pip install [drop your *correct* wheel here]

>>105764831

oh yes
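For anyone scripting the install instead of typing it: a minimal sketch of the same pip call from Python. It assumes you run the script with the interpreter Comfy actually uses (e.g. the portable build's python_embeded\python.exe), and `pip_install_command` is a made-up helper name, not part of any tool. It also refuses a wheel path that doesn't exist, which is the usual cause of the "No such file or directory" error.

```python
import sys
from pathlib import Path


def pip_install_command(wheel_path):
    """Build the pip command for a local wheel, refusing paths that do not exist."""
    wheel = Path(wheel_path)
    if not wheel.is_file():
        raise FileNotFoundError(f"wheel not found: {wheel}")
    # sys.executable is the interpreter running this script, so the wheel
    # is installed into that interpreter's environment and no other.
    return [sys.executable, "-m", "pip", "install", str(wheel)]
```

To actually install, pass the result to `subprocess.run(..., check=True)`.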

>>105764882

It's impressive that even ampere got speedup when it seemingly targeted optimizations for 40 and 50 series

Anonymous

7/1/2025, 1:24:32 PM

No.105764897

>>105764904

Anonymous

7/1/2025, 1:25:18 PM

No.105764904

>>105764897

s-stupid flatchested little brat...

Anonymous

7/1/2025, 1:26:21 PM

No.105764912

>>105764893

yeah, that update has 2 optimisations, and one of them is for all cards

>>105764640

Anonymous

7/1/2025, 1:27:15 PM

No.105764922

>>105764893

3090 former chads not dead yet, sage 3 will probably fix that. 5090 prices coming down tho, 2300 now here for the cheapest one

Anonymous

7/1/2025, 1:29:48 PM

No.105764939

>>105764966

>>105764882

how to install or should i just wait till its properly released?

Anonymous

7/1/2025, 1:33:35 PM

No.105764969

>>105765110

just made a pic of me and my elementary school (20-21 years ago) oneitis with kontext (nothing sexual) using pics of us from when the last time she blocked me when we were 19 (10 years ago)

God I need help

Anonymous

7/1/2025, 1:35:38 PM

No.105764988

Anonymous

7/1/2025, 1:36:33 PM

No.105764995

>>105765351

>sageattention-2.2.0-cp312-cp312-win_amd64.whl not a supported wheel on this platform

huh

same with sageattention-2.2.0-cp312-cp312-linux_x86_64.whl

Anonymous

7/1/2025, 1:38:29 PM

No.105765008

>>105765014

nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2024 NVIDIA Corporation

Built on Tue_Feb_27_16:28:36_Pacific_Standard_Time_2024

Cuda compilation tools, release 12.4, V12.4.99

Build cuda_12.4.r12.4/compiler.33961263_0

ah, need 12.8

is it worth it though? does it break anything?
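A quick sketch of the check done by eye above: pull the release number out of the `nvcc --version` text and compare it to a minimum. The 12.8 threshold is just the figure quoted in this thread, and both function names are made up for illustration.

```python
import re


def nvcc_release(nvcc_output):
    """Extract (major, minor) from the text printed by `nvcc --version`, or None."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def toolkit_is_new_enough(nvcc_output, minimum=(12, 8)):
    """True if the reported toolkit release is at least `minimum` (assumed 12.8 here)."""
    release = nvcc_release(nvcc_output)
    return release is not None and release >= minimum


sample = "Cuda compilation tools, release 12.4, V12.4.99"
print(nvcc_release(sample), toolkit_is_new_enough(sample))
```

Note this only reads the toolkit version; the CUDA version your torch build targets is a separate thing.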

Anonymous

7/1/2025, 1:39:06 PM

No.105765014

>>105765008

>is it worth it though?

it is

>does it break anything?

no

Anonymous

7/1/2025, 1:39:49 PM

No.105765019

>>105765030

>>105764966

that's the cuda with torch and not the toolkit right?

Anonymous

7/1/2025, 1:40:21 PM

No.105765020

>>105765198

seems slightly slower to me, torch 2.7.1 on 3090 using the normal rentry i2v workflow

Anonymous

7/1/2025, 1:40:40 PM

No.105765025

>>105764857

The cuck who was claiming they were gatekeeping Sage2++ must be seething right now.

>chroma-unlocked-v41-detail-calibrated-Q8_0.gguf

Anonymous

7/1/2025, 1:40:58 PM

No.105765030

>>105765019

if you want to create the wheels by yourself, you need the toolkit to be cuda 12.8, if you just want to use someone else's wheels, it'll be just cuda+torch
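To see which CUDA your torch build targets (the "cuda+torch" part, as opposed to the toolkit), something like this should do. It's a sketch: `torch_cuda_summary` is a made-up name, and it has to be run with the same interpreter Comfy uses to mean anything.

```python
def torch_cuda_summary():
    """Describe the installed torch build, or say so if torch is missing."""
    try:
        import torch
    except ImportError:
        return "torch is not installed in this interpreter"
    # torch.version.cuda is the CUDA version the torch build was compiled
    # against; it is None for CPU-only builds.
    return f"torch {torch.__version__}, built for CUDA {torch.version.cuda}"


print(torch_cuda_summary())
```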

Anonymous

7/1/2025, 1:42:39 PM

No.105765042

>>105764061

NTA. Surprisingly painless. VS 2022 build tools and had to set DISTUTILS_USE_SDK=1 and it worked.

Maybe there was some bullshit I dealt with the previous time that carried over.

Anonymous

7/1/2025, 1:44:23 PM

No.105765057

>>105765072

do I need the 12.8 toolkit to install these wheels?

Anonymous

7/1/2025, 1:44:42 PM

No.105765060

>>105764061

I forgot to enable --use-sage-attention with the previous version. I had it installed unused for months.

Anonymous

7/1/2025, 1:45:02 PM

No.105765061

>>105765026

>oh look another distilled version of chroma

Anonymous

7/1/2025, 1:46:04 PM

No.105765072

>>105765057

>do I need the 12.8 toolkit to install these wheels?

yes, and you need cuda+torch to be on 12.8 as well

Anonymous

7/1/2025, 1:47:19 PM

No.105765079

>>105765097

>>105765026

>oh look another version of chroma

Thanks anon!

Anonymous

7/1/2025, 1:49:26 PM

No.105765097

>>105765079

>another version

*distilled version

Anonymous

7/1/2025, 1:49:27 PM

No.105765098

>>105764386

How is that okay?

Anonymous

7/1/2025, 1:50:53 PM

No.105765110

>>105764969

>God I need help

I recently celebrated re-discovering some toddler butt photos that I thought were lost forever on an archive site with a triple goon session. It could always be worse, anon.

But having a oneitis in elementary school at all is really cute. I didn't care about girls at all until middle school

Anonymous

7/1/2025, 1:51:38 PM

No.105765117

>>105765026

>mfw my favorite Chroma-fp8-scaled version is no longer being updated by Clybius

...

Anonymous

7/1/2025, 1:53:31 PM

No.105765135

Anonymous

7/1/2025, 1:53:49 PM

No.105765137

>>105765026

wake me up when i reach v69

Anonymous

7/1/2025, 1:53:56 PM

No.105765138

>>105765158

Anonymous

7/1/2025, 1:55:58 PM

No.105765154

>>105765782

>>105765026

>yaay number go up

but is it improving though?

Anonymous

7/1/2025, 1:56:39 PM

No.105765158

>>105765138

This could maybe save 1 euro 20% of the time at a museum by lying about having a student card and showing them a fake image but you could already do that with photoshop

Anonymous

7/1/2025, 1:56:59 PM

No.105765161

>>105765176

PanCAKE

Anonymous

7/1/2025, 1:58:54 PM

No.105765176

>>105765186

>>105765161

Nice I did this with shotas and Persian mommies

It's crazy how well WAN is able to generalize similar concepts it's seen before into never before seen kino

Anonymous

7/1/2025, 1:59:49 PM

No.105765185

>>105765233

>>105765176

>It's crazy how well WAN is able to generalize similar concepts it's seen before into never before seen kino

not only that but the apache license is also kino, everything in Wan is pure kino, and we'll even get an improvement soonTM

>>105764667

Anonymous

7/1/2025, 2:01:23 PM

No.105765198

>>105765212

>>105764667

It will be GLORIOUS! I almost forgot Jenga

https://github.com/dvlab-research/Jenga

>up to 6.12x boost

>>105765020

Good catch, might give it a couple of days before trying to install this

Anonymous

7/1/2025, 2:02:55 PM

No.105765212

>>105765329

>>105765198

>>up to 6.12x boost

the quality will suffer though?

Anonymous

7/1/2025, 2:04:39 PM

No.105765230

>>105765468

>>105765186

I already wasn't dooming for video for all of 2025 because of FusionX and lightx2v but we just keep getting improvements too. 720p at home in 2026 doesn't seem far fetched at all

Anonymous

7/1/2025, 2:04:51 PM

No.105765233

>>105765185

Nice wax museum bro

Anonymous

7/1/2025, 2:06:59 PM

No.105765244

>>105765248

Anonymous

7/1/2025, 2:07:44 PM

No.105765248

>>105765276

>>105765244

Not that's some nigga begging in issues to add poorfag support

Anonymous

7/1/2025, 2:11:09 PM

No.105765276

>>105765248

oh I thought it was a PR and he found a way to make it work kek

Anonymous

7/1/2025, 2:18:18 PM

No.105765329

>>105765355

So with pusa video extension (doesn't specify duration), radial attention with 4x the duration and a 3.7x speed up + sage2++, a 30 second video would take about 1 minute to slop up? I'm just guessing, my math sucks.

Currently my 4070tis does around 1 min 30 sec for a 5 sec clip (NAG, not new sage attention, VACE and lightx2v lora, 4 step lcm)

>>105765212

Not a clue, we'll have to just wait and see
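The back-of-envelope math, as a sketch. Big assumptions: render time scales linearly with clip length (optimistic, since attention cost grows faster than that), the 3.7x radial attention figure is taken at face value, and the 13.8% sage figure another anon measured on a 3090 is treated as a 1.138x speedup that stacks with it. `naive_estimate` is just an illustrative helper.

```python
def naive_estimate(base_seconds, base_clip_seconds, target_clip_seconds, speedups):
    """Scale render time linearly with clip length, then divide by each claimed speedup."""
    seconds = base_seconds * (target_clip_seconds / base_clip_seconds)
    for factor in speedups:
        seconds /= factor
    return seconds


# 90 s for a 5 s clip today, target is a 30 s clip
estimate = naive_estimate(90, 5, 30, speedups=[3.7, 1.138])
print(f"{estimate:.0f} s")
```

Under those assumptions it comes out closer to two minutes than one, and real numbers could land well off in either direction.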

Anonymous

7/1/2025, 2:18:27 PM

No.105765331

>>105765360

>>105765259

>>105765300

I gave up on sunny side up eggs a long time ago

It's not worth the rubberyness of fucking it up and also the extra work cleaning the yolk from the plate in the sink

I just stick to scrambled eggs and if I want that yolky flavor I make an egg sauce pasta like carbonara

Anonymous

7/1/2025, 2:20:09 PM

No.105765348

>>105765242

You can tell forrens on github, they phrase requests as demands, like children.

Anonymous

7/1/2025, 2:20:29 PM

No.105765351

>>105764995

>install it just fine

>realize my python is too old

>update it

>not a supported wheel on this platform

Anonymous

7/1/2025, 2:20:58 PM

No.105765355

>>105765429

>>105765329

Can you share a workflow? OP wan rentry doesn't have a good NAG preset and sage attention update should just be drop in

Anonymous

7/1/2025, 2:21:44 PM

No.105765360

>>105765376

>>105765331

nigga what? I'm just posting my gens before i hit the gym.

Anonymous

7/1/2025, 2:22:32 PM

No.105765369

>>105765387

Anon who shared the json for two images kontext, on which basis does it decide which is image 1 and which is image 2?

Anonymous

7/1/2025, 2:23:47 PM

No.105765376

>>105765458

>>105765360

Post one of those videos as a cat box then

>gym

Don't forget to do wrist exercises

>>105765369

image 1 is considered the main one by the model because it's the first on the reference conditioning cascade (and the workflow also fits the resolution as the same as image 1), but you shouldn't use "image 1" and "image 2", it won't understand, just reference the image 1 normally, and for image 2 you go for "other", like this

Anonymous

7/1/2025, 2:27:39 PM

No.105765400

Anonymous

7/1/2025, 2:31:32 PM

No.105765429

>>105765452

Anonymous

7/1/2025, 2:33:44 PM

No.105765449

does the op have sageattention 2 yet?

Anonymous

7/1/2025, 2:34:13 PM

No.105765452

>>105765429

Oh alright thanks. In my one test I did NAG doesn't play well with either lightx2v + vanilla wan or FusionX merge or FusionX merge + lightx2v lora. Faces get fucked up on t2v but thank you I am excited for more prompt adherence

Anonymous

7/1/2025, 2:35:04 PM

No.105765458

Anonymous

7/1/2025, 2:36:16 PM

No.105765468

>>105765476

>>105765186

>>105765230

feelin positive for videogen. wan is truly magical

Anonymous

7/1/2025, 2:37:27 PM

No.105765476

Anonymous

7/1/2025, 2:37:32 PM

No.105765477

>>105765486

>>105765387

How does it work if you have two characters in your first image, and want to swap one of them for the character in the second image? I tried going for "swap the white haired character by the blonde one" but it only swaps the first character's hair

Anonymous

7/1/2025, 2:38:27 PM

No.105765486

>>105765477

separate the characters, and put each one on its own "load image", that's better than stitching the images together

Anonymous

7/1/2025, 2:41:30 PM

No.105765518

>>105765525

why does load image node have a very old image by default, how do I clear that/cache or make it blank

Anonymous

7/1/2025, 2:42:52 PM

No.105765525

>>105765560

>>105765518

>how do I clear that/cache or make it blank

you remove the images from ComfyUI\input folder

Anonymous

7/1/2025, 2:46:45 PM

No.105765560

>>105765580

>>105765525

ty, why is it saving cached images though?

Anonymous

7/1/2025, 2:47:35 PM

No.105765566

>>105765612

>tfw you're not the goat anymore

Anonymous

7/1/2025, 2:48:36 PM

No.105765580

>>105765560

I guess people want to keep their input images to use them for another day

Anonymous

7/1/2025, 2:53:18 PM

No.105765612

Anonymous

7/1/2025, 2:57:50 PM

No.105765639

New??

Anonymous

7/1/2025, 3:06:33 PM

No.105765699

local diffusion?

Anonymous

7/1/2025, 3:11:23 PM

No.105765735

So this is it then

The last local diffusion we ever made

Anonymous

7/1/2025, 3:13:32 PM

No.105765749

so local it's offline

Anonymous

7/1/2025, 3:18:13 PM

No.105765778

>>105765849

>>105765300

those sausages are extremely penicular

Anonymous

7/1/2025, 3:18:38 PM

No.105765782

>>105765154

I noticed improvements in furry subjects but not anime or realistic

Can't get two image kontext to work

someone share a .png catbox with prompt

Thanks xoxo

Anonymous

7/1/2025, 3:20:38 PM

No.105765798

>ERROR: Could not find a version that satisfies the requirement sageattention-2.2.0-cp312-cp312-win_amd64 (from versions: none)

Does the desktop comfy not have it already in? I have to get the old version too?

>>105765791

Can't get two image kontext to work

someone share a .png catbox with prompt

Thanks xoxo

Anonymous

7/1/2025, 3:22:38 PM

No.105765811

>>105765820

>>105765800

try this workflow and set it to 2 images (bypass the third)

https://openart.ai/workflows/amadeusxr/change-any-image-to-anything/5tUBzmIH69TT0oqzY751

the anime girl is standing beside the green cartoon frog. change the location to a sunny beach. the frog is holding a beach ball.

Anonymous

7/1/2025, 3:23:35 PM

No.105765814

>>105765820

Anonymous

7/1/2025, 3:24:34 PM

No.105765820

Anonymous

7/1/2025, 3:26:50 PM

No.105765837

>>105766045

do you need 2.8 torch to get sageattn2.2 working? i installed it fine and there is zero speed change

Anonymous

7/1/2025, 3:28:07 PM

No.105765841

>>105765850

>ERROR: sageattention-2.2.0-cp312-cp312-win_amd64.whl is not a supported wheel on this platform

erm

are we not going to gen more?

Anonymous

7/1/2025, 3:28:53 PM

No.105765848

>>105765842

you can still fit another 10 images in this thread

Anonymous

7/1/2025, 3:29:09 PM

No.105765849

>>105765778

Thought same.

>>105765259

>>105765300

Phalic phrankfurter

Anonymous

7/1/2025, 3:29:15 PM

No.105765850

>>105765889

sage_attention_2++ is the same as 2.2.0?

I've updated to 2.2.0 from 2 and I don't get any speed boost on 3090 TI

Anonymous

7/1/2025, 3:32:33 PM

No.105765872

Anonymous

7/1/2025, 3:32:34 PM

No.105765873

Anonymous

7/1/2025, 3:33:46 PM

No.105765879

>>105765929

Installed Comfy, tested sd3.5 and flux dev a bit. Any other models I should be using for realistic, anime, stylistic styles? Do any of the default templates/models do porn or do I have to download another one? I tried Biglust, it's not very good, though I just used the sd template to run it

Anonymous

7/1/2025, 3:34:15 PM

No.105765882

>>105765891

kontext is surprisingly good at the stalin effect:

remove the man on the right.

Anonymous

7/1/2025, 3:35:36 PM

No.105765889

>>105765892

>>105765850

What's the cp39 and so on?

Anonymous

7/1/2025, 3:36:09 PM

No.105765891

>>105765882

replace the man on the right with miku hatsune.

Anonymous

7/1/2025, 3:36:18 PM

No.105765892

>>105765889

it's the python version

cp 39 = python 3.9
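This is also the usual reason for "not a supported wheel on this platform": the tags in the filename don't match your interpreter or OS. A small sketch to read the tags off a wheel name and print the tag of whatever Python you're running. The helper names are made up, and `running_python_tag` assumes CPython (hence the "cp" prefix).

```python
import sys


def wheel_tags(filename):
    """Split a wheel filename into (python_tag, abi_tag, platform_tag)."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel filename")
    stem = filename[: -len(".whl")]
    # The last three dash-separated fields of a wheel name are always
    # the python tag, the abi tag and the platform tag.
    _, python_tag, abi_tag, platform_tag = stem.rsplit("-", 3)
    return python_tag, abi_tag, platform_tag


def running_python_tag():
    """Tag of the interpreter running this script, assuming CPython."""
    return f"cp{sys.version_info.major}{sys.version_info.minor}"


name = "sageattention-2.2.0-cp312-cp312-win_amd64.whl"
python_tag, _, platform_tag = wheel_tags(name)
print(python_tag, platform_tag, "this interpreter:", running_python_tag())
```

If the python tag differs from your interpreter's, or the platform tag is for another OS (win_amd64 on Linux or the reverse), pip will reject the wheel.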

Anonymous

7/1/2025, 3:37:24 PM

No.105765896

>>105765902

>>105765861

what cuda and pytorch version are you on? i am on 2.7.0dev and cuda 12.8 and i also do not see any speed increase on a 3090

Anonymous

7/1/2025, 3:39:03 PM

No.105765902

>>105765929

>>105765861

>>105765896

>i also do not see any speed increase on a 3090

I do, my wan gens take me 4 min instead of 5 now, maybe it's because I built the wheels by myself Idk

Anonymous

7/1/2025, 3:39:32 PM

No.105765904

[Report]

>>105765911

gah

Anonymous

7/1/2025, 3:40:14 PM

No.105765911

>>105765904

kek, you probably have an old ass gpu

Anonymous

7/1/2025, 3:42:21 PM

No.105765922

>>105765994

>ERROR: Could not install packages due to an OSError: [Errno 2] No such file or directory: 'C:\\Users\\Gaming\\Documents\\Downloads\\sageattention-2.2.0+cu128torch2.7.1-cp313-cp313-win_amd64.whl'

NIGGA IT'S LITERALLY THERE

Anonymous

7/1/2025, 3:43:18 PM

No.105765929

>>105765948

>>105765879

you can do cool shit with biglust but takes time to master those sdxl models. no idea what you are into tho, "model I should be using", kinda vague.

>>105765902

I can do that with ms visual studio installed, correct? how long does it take?

Anonymous

7/1/2025, 3:44:22 PM

No.105765939

>>105765945

Anonymous

7/1/2025, 3:44:55 PM

No.105765945

>>105765955

Anonymous

7/1/2025, 3:45:09 PM

No.105765948

>>105765929

>I can do that with ms visual studio installed, correct? how long does it take?

it takes ~10 min, you can use this tutorial to see how it can be done properly

>>105764966

Anonymous

7/1/2025, 3:46:23 PM

No.105765955

>>105765977

>>105765945

he went on vacation

Anonymous

7/1/2025, 3:50:00 PM

No.105765977

Anonymous

7/1/2025, 3:54:31 PM

No.105765994

>>105765922

I never put anything like that in the users folder. It's just cursed. C:\\downloads or something else.

Anonymous

7/1/2025, 3:59:38 PM

No.105766020

Anonymous

7/1/2025, 4:01:42 PM

No.105766030

>>105766057

How do I set desktop to use sage? There's no bat file to edit. Do I put it as a launch attribute of the exe?

Anonymous

7/1/2025, 4:02:30 PM

No.105766036

>announcing

Anonymous

7/1/2025, 4:03:38 PM

No.105766045

>>105765837

Yes, you need torch+cu128

Anonymous

7/1/2025, 4:04:01 PM

No.105766052

>>105766086

anons, is there a trusted mirror of full size bf16 Kontext?

I'm not giving BFL my details

Anonymous

7/1/2025, 4:04:50 PM

No.105766064

>>105766057

Desktop. Not portable.

Anonymous

7/1/2025, 4:05:58 PM

No.105766075

>>105766057

I mean standalone, fuck.

Anonymous

7/1/2025, 4:06:54 PM

No.105766085

>>105766129

https://huggingface.co/ostris/kontext_big_head_lora

>Kontext has the manlet effect

>he makes a lora that makes the manlet effect even worse

what does he mean by this? can we use negative strength to remove the manlet effect?

Anonymous

7/1/2025, 4:07:20 PM

No.105766086

>>105766138

>>105766052

if you're using comfyui, you already are

Anonymous

7/1/2025, 4:12:57 PM

No.105766129

>>105766085

You can try, any lora loader lets you set a negative value, I've seen anons using it like that in the past (with varying success). Put the trigger phrase into the negs too

Anonymous

7/1/2025, 4:14:06 PM

No.105766138

>>105766146

>>105766086

haven't run Kontext on comfy yet

also, where in the code are they sending telemetry?

I can just comment it out

Anonymous

7/1/2025, 4:15:48 PM

No.105766146

>>105766138

>where in the code are they sending telemetry?

there is none, he's trolling

Anonymous

7/1/2025, 4:20:48 PM

No.105766179

>>105766252

wow it's over

no more local diffusions

Anonymous

7/1/2025, 4:29:48 PM

No.105766252

>>105766179

it's only page 7, plenty of time for one of the bakers to make another (mediocre) collage.

Anonymous

7/1/2025, 4:34:06 PM

No.105766285

Anonymous

7/1/2025, 5:51:16 PM

No.105767020

>>105764108

16gb ram is gonna be tuff, but u could try gguf q4_k_s with self forcing. unironically install linux because windows is bloated, and every drop of ram matters. you should probably go for a light-weight desktop environment like lxqt or a window manager (i3/dwm..)

t. 3060 12gb vram 64gb ram enjoyer

Anonymous

7/1/2025, 5:54:15 PM

No.105767052

>>105764378

fuck i feel bad, we're gonna be on the chopping block soon too, i wish someone could backport it to the 2000 series for my bros