/ldg/ - Local Diffusion General

Discussion of Free and Open Source Text-to-Image/Video Models

Prev: >>105836648

https://rentry.org/ldg-lazy-getting-started-guide

>UI

SwarmUI: https://github.com/mcmonkeyprojects/SwarmUI

re/Forge/Classic: https://rentry.org/ldg-lazy-getting-started-guide#reforgeclassic

SD.Next: https://github.com/vladmandic/sdnext

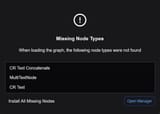

ComfyUI: https://github.com/comfyanonymous/ComfyUI

Wan2GP: https://github.com/deepbeepmeep/Wan2GP

>Checkpoints, LoRAs, & Upscalers

https://civitai.com

https://civitaiarchive.com

https://tensor.art

https://openmodeldb.info

>Tuning

https://github.com/spacepxl/demystifying-sd-finetuning

https://github.com/Nerogar/OneTrainer

https://github.com/kohya-ss/sd-scripts/tree/sd3

https://github.com/derrian-distro/LoRA_Easy_Training_Scripts

https://github.com/tdrussell/diffusion-pipe

>WanX (video)

Guide: https://rentry.org/wan21kjguide

https://github.com/Wan-Video/Wan2.1

>Chroma

Training: https://rentry.org/mvu52t46

>Illustrious

1girl and beyond: https://rentry.org/comfyui_guide_1girl

Tag explorer: https://tagexplorer.github.io/

>Misc

Local Model Meta: https://rentry.org/localmodelsmeta

Share Metadata: https://catbox.moe | https://litterbox.catbox.moe/

Img2Prompt: https://huggingface.co/spaces/fancyfeast/joy-caption-beta-one

Samplers: https://stable-diffusion-art.com/samplers/

Txt2Img Plugin: https://github.com/Acly/krita-ai-diffusion

Archive: https://rentry.org/sdg-link

Bakery: https://rentry.org/ldgcollage | https://rentry.org/ldgtemplate

>Neighbours

https://rentry.org/ldg-lazy-getting-started-guide#rentry-from-other-boards

>>>/aco/csdg

>>>/b/degen

>>>/b/celeb+ai

>>>/gif/vdg

>>>/d/ddg

>>>/e/edg

>>>/h/hdg

>>>/trash/slop

>>>/vt/vtai

>>>/u/udg

>Local Text

>>>/g/lmg

>Maintain Thread Quality

https://rentry.org/debo

Prev: >>105836648

https://rentry.org/ldg-lazy-getting-started-guide

>UI

SwarmUI: https://github.com/mcmonkeyprojects/SwarmUI

re/Forge/Classic: https://rentry.org/ldg-lazy-getting-started-guide#reforgeclassic

SD.Next: https://github.com/vladmandic/sdnext

ComfyUI: https://github.com/comfyanonymous/ComfyUI

Wan2GP: https://github.com/deepbeepmeep/Wan2GP

>Checkpoints, LoRAs, & Upscalers

https://civitai.com

https://civitaiarchive.com

https://tensor.art

https://openmodeldb.info

>Tuning

https://github.com/spacepxl/demystifying-sd-finetuning

https://github.com/Nerogar/OneTrainer

https://github.com/kohya-ss/sd-scripts/tree/sd3

https://github.com/derrian-distro/LoRA_Easy_Training_Scripts

https://github.com/tdrussell/diffusion-pipe

>WanX (video)

Guide: https://rentry.org/wan21kjguide

https://github.com/Wan-Video/Wan2.1

>Chroma

Training: https://rentry.org/mvu52t46

>Illustrious

1girl and beyond: https://rentry.org/comfyui_guide_1girl

Tag explorer: https://tagexplorer.github.io/

>Misc

Local Model Meta: https://rentry.org/localmodelsmeta

Share Metadata: https://catbox.moe | https://litterbox.catbox.moe/

Img2Prompt: https://huggingface.co/spaces/fancyfeast/joy-caption-beta-one

Samplers: https://stable-diffusion-art.com/samplers/

Txt2Img Plugin: https://github.com/Acly/krita-ai-diffusion

Archive: https://rentry.org/sdg-link

Bakery: https://rentry.org/ldgcollage | https://rentry.org/ldgtemplate

>Neighbours

https://rentry.org/ldg-lazy-getting-started-guide#rentry-from-other-boards

>>>/aco/csdg

>>>/b/degen

>>>/b/celeb+ai

>>>/gif/vdg

>>>/d/ddg

>>>/e/edg

>>>/h/hdg

>>>/trash/slop

>>>/vt/vtai

>>>/u/udg

>Local Text

>>>/g/lmg

>Maintain Thread Quality

https://rentry.org/debo