/lmg/ - Local Models General

Anonymous

7/9/2025, 5:03:16 AM

No.105844217

[Report]

>>105844669

►Recent Highlights from the Previous Thread:

>>105832690

--Papers:

>105834135 >105834182

--Experimenting with local model training on consumer GPUs despite known limitations:

>105839772 >105839805 >105839821 >105839838 >105840910 >105841022 >105841129 >105839881 >105841661 >105841824 >105841905 >105841992 >105842071 >105842166 >105842302 >105842422 >105842624 >105842704 >105842731 >105842816 >105842358 >105842418 >105842616 >105842654 >105842763 >105842902 >105842986 >105843186 >105842891

--Risks and challenges of building a CBT therapy bot with LLMs on consumer hardware:

>105836762 >105836830 >105836900 >105837945 >105840397 >105840554 >105840693 >105840730 >105839512 >105839539 >105839652 >105839663 >105839943 >105841327

--Memory and performance issues loading Q4_K_L 32B model on CPU with llama.cpp:

>105840103 >105840117 >105840145 >105840159 >105840191 >105840201 >105840255 >105840265 >105840295 >105840355 >105840407 >105840301 >105840315

--Evaluating 70b model viability for creative writing on consumer GPU hardware:

>105836307 >105836366 >105836374 >105836489 >105836484 >105836778 >105840476 >105841179

--Challenges in building self-learning LLM pipelines with fact-checking and uncertainty modeling:

>105832730 >105832900 >105833650 >105833767 >105833783 >105834035 >105836437

--Concerns over incomplete Hunyuan MoE implementation affecting model performance in llama.cpp:

>105837520 >105837645 >105837903

--Skepticism toward transformers' long-term viability and corporate overhyping of LLM capabilities:

>105832744 >105832757 >105832807 >105835160 >105835202 >105835366 >105839406 >105839863

--Hunyuan MoE integration sparks creative writing data criticism:

>105835909 >105836075 >105836085

--Links:

>105839096 >105839175 >105840055

--Miku (free space):

>105832744 >105832988 >105832992 >105833638 >105840752

►Recent Highlight Posts from the Previous Thread:

>>105832694

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

7/9/2025, 5:05:44 AM

No.105844230

[Report]

yellow miku

Anonymous

7/9/2025, 5:22:11 AM

No.105844328

[Report]

two more weeks until the usual summer release circle starts

Anonymous

7/9/2025, 6:09:33 AM

No.105844653

[Report]

>download tensorflow

>latest stable/nightly doesn't support sm120 yet

>try to compile from source

>clang doesn't support sm120 yet

Very funny

Anonymous

7/9/2025, 6:11:40 AM

No.105844669

[Report]

>>105844674

>>105844217

>he forgot the >>

Anonymous

7/9/2025, 6:12:11 AM

No.105844674

[Report]

>>105844783

Anonymous

7/9/2025, 6:13:58 AM

No.105844686

[Report]

>>105850519

Anonymous

7/9/2025, 6:19:00 AM

No.105844725

[Report]

>>105844733

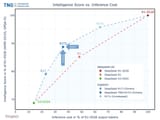

>Creative Writing. Paired GRMs based on relative preference judgments mitigate reward hacking, while creative rewards are blended with automated checks for instruction adherence to balance innovation and compliance.

Supposedly trained for "creative writing." Has zero benchmarks that measure writing quality.

>>105844725

Use other LLMs to score write quality.

Anonymous

7/9/2025, 6:23:14 AM

No.105844767

[Report]

>>105844941

>>105844543

I like this Miku

Anonymous

7/9/2025, 6:24:50 AM

No.105844783

[Report]

>>105844674

Oh sorry I'm dumb. Dang jannies making the site worse.

Anonymous

7/9/2025, 6:25:43 AM

No.105844789

[Report]

>>105844733

See your LLM judge either scores your model's output as shit; scores all models the same; or scores models in a way so obviously unreflective of actual quality that showing the comparison would damage your credibility. Decide not to publish your result.

What are the best local embedding and reranking models for code that don't require a prompt? Right now I'm using snowflake-arctic-embed-l-v2.0 and bge-reranker-v2-m3, but these seem to be a bit dated and non-code specific.

Anonymous

7/9/2025, 6:31:21 AM

No.105844830

[Report]

>>105844817

alibaba just open sourced some qwen embedding models.

Anonymous

7/9/2025, 6:32:04 AM

No.105844834

[Report]

New to these threads, what do you guys use your local models for? Why use local models instead of just the commercial ones (besides privacy reasons)?

>>105844901

Knowledge that the model I'm running now will always be here and behave in the same way, while cloud services can and do modify, downgrade, replace, or otherwise change what they're offering at any time with no notice.

>>105844210 (OP)

Unpopular opinion: Neru is the hottest of the 3 memeloids. It'd be even better if her sidetail was a ponytail instead.

Anonymous

7/9/2025, 6:54:23 AM

No.105844941

[Report]

>>105850519

Anonymous

7/9/2025, 6:54:38 AM

No.105844945

[Report]

>>105844921

This shit right here. Yall know Gemini 2.5 pro/flash is quantized to q6? Search HN and there's a post by an employee mentioning it.

Who's to say that shit isn't happening all the fucking time at all the labs, trying to see if they can shave a few layers or run a different quant and see if the acceptance rate is good enough.

Literally why I will never use chatgpt.

Anonymous

7/9/2025, 6:54:59 AM

No.105844947

[Report]

>>105844901

>what do you guys use your local models for?

masturbation and autocomplete writing ideas

>local models instead of just the commercial ones

I think it's neat that I can run and own it

Anonymous

7/9/2025, 7:21:11 AM

No.105845109

[Report]

>>105844921

This exactly. You have no idea what you're running or paying for, and the companies hosting these get to decide what to charge you and withhold the information you'd use to decide whether something was a fair deal or not

Anonymous

7/9/2025, 7:24:35 AM

No.105845151

[Report]

>Her whole life feels like a TikTok draft someone never posted. And he’s looking at her like he might finally press "upload."

zoomkino

Anonymous

7/9/2025, 7:52:02 AM

No.105845313

[Report]

>>105848618

>>105845255

Grok 3's "basedness" comes from the system prompt not the training. Musk probably added them himself. This wasn't the first time Grok 3's system prompt got compromised; it started spewing out random bits about South African whites randomly a while ago.

Anonymous

7/9/2025, 7:59:06 AM

No.105845351

[Report]

>>105846734

Just a personal rant: anything worth doing in the LLM space takes far too much compute/time/money nowadays. I was making tests with a 2500 samples dataset limited to 4k tokens to speed things up, 4 epochs, on an 8B model. Took almost 9 hours to finish training on a 3090.

Anonymous

7/9/2025, 8:25:20 AM

No.105845505

[Report]

>>105845606

someone is finally benching one of the most grating things about llm writing

it's the thousands of way they always spam sentences with that structure:

>it's not just retarded—it's beyond fucking retarded

surprised gemini and gemma didn't end at the top, they are so darn sloppy

Anonymous

7/9/2025, 8:42:28 AM

No.105845606

[Report]

>>105845618

Anonymous

7/9/2025, 8:45:07 AM

No.105845618

[Report]

>>105845606 (me)

Didn't mean to reply

Anonymous

7/9/2025, 8:51:48 AM

No.105845652

[Report]

>>105845739

>>105845442

>nowadays

Been true for years by now.

V3 0324 is still the king of RP on OR

>>105845663

why would you use nemo on or? it makes sense locally since that's the best that most can run, but if you already sold your soul to online, i don't get it

Anonymous

7/9/2025, 9:02:59 AM

No.105845714

[Report]

>>105845695

people are legitimately stupid in ways you can't begin to fathom

there is no rational reason, don't try to look for a cause, it's just sheer stupidity at internet scale

Anonymous

7/9/2025, 9:05:02 AM

No.105845724

[Report]

>>105845663

I liked to generate the first message with V3 then switch to R1 thereafter.

>>105845652

From experience and observation, an 8B model trained on 2500 samples, 4k tokens, 4 epochs would have been more than acceptable a couple years ago, but I don't think most people are going to settle for less than a 24B model nowadays (x3 compute) and the model trained with at least 16k context (x4 compute). So we're looking for at least 12x more compute for a mostly basic RP finetune, putting aside the time required for tests and ablations.

Anonymous

7/9/2025, 9:08:49 AM

No.105845741

[Report]

>>105846976

>>105845695

The top apps are random-ass chat websites probabaly just using it as a near-free model without the rate limits that come with actually-free model.

>>105845442

>What is QLoRA

>But but but it has no eff...

Read the goddamn documentation of whatever trainer you're using. Your rank and alpha was too low. That's why your output sucked. If your graphs look to erratic and show known signs of convergence it's because either the data said YOU used or curated as shit, or your settings are shit.

Anonymous

7/9/2025, 9:38:01 AM

No.105845891

[Report]

>>105845879

>*bait pic* "REEE"

Can you talk like a normal human for once?

Anonymous

7/9/2025, 9:48:40 AM

No.105845922

[Report]

>>105845879

You're addressing the wrong anon, I didn't mention output quality at all, just complained about the time it takes to finetune even a small model (as in

>>105845739) with only barely enough amounts of data.

Anonymous

7/9/2025, 9:51:15 AM

No.105845934

[Report]

>>105845948

>>105845739

>16k context (x4 compute).

Context size is not a multiplier.

Anonymous

7/9/2025, 9:54:11 AM

No.105845948

[Report]

>>105845961

>>105845934

It takes twice as much compute (perhaps even slightly more than that) to finetune a model with 2x longer samples.

Anonymous

7/9/2025, 9:54:32 AM

No.105845950

[Report]

>dl ollama and get the model

>all good but it isnt using my gpu

this is gonna take a while to debug, isnt it

Anonymous

7/9/2025, 9:58:03 AM

No.105845961

[Report]

>>105845975

>>105845948

The impact of context size is larger on small models, but it's never a multiplier. The FFN compute is constant as the attention compute changes.

Anonymous

7/9/2025, 10:01:26 AM

No.105845975

[Report]

>>105845999

>>105845961

In practice at this length range (several thousands tokens) it takes twice as much if you double the context length; don't hyperfocus on academic examples with 128 tokens or so.

Anonymous

7/9/2025, 10:10:06 AM

No.105845999

[Report]

>>105845975

Only attention. FFNs are huge, so the impact of attention is limited.

Anonymous

7/9/2025, 11:47:50 AM

No.105846513

[Report]

>>105846626

Nice. Very nice.

Anonymous

7/9/2025, 12:08:03 PM

No.105846626

[Report]

>>105846513

Shit, very shit. Tries to for edgy, but stops short and falls flat. Should have just gone for "my wife".

Anonymous

7/9/2025, 12:23:23 PM

No.105846728

[Report]

>>105844210 (OP)

>>(07/08) SmolLM3: smol, multilingual, long-context reasoner: https://hf.co/blog/smollm3

i need to try one of these later, i use the llm when i am stuck with shit, i dont need it to be smart, i need it to give me examples

Anonymous

7/9/2025, 12:24:18 PM

No.105846734

[Report]

Anonymous

7/9/2025, 12:36:55 PM

No.105846813

[Report]

Anonymous

7/9/2025, 12:43:14 PM

No.105846849

[Report]

another irrelevant dead on arrival benchmaxxed waste of compute model soon to be released

https://huggingface.co/tiiuae/Falcon-H1-34B-Instruct-GGUF

Anonymous

7/9/2025, 12:50:23 PM

No.105846903

[Report]

We should just stop making new threads until deepseek makes a new release.

Anonymous

7/9/2025, 12:55:32 PM

No.105846931

[Report]

new

Anonymous

7/9/2025, 1:06:16 PM

No.105846976

[Report]

>>105845741

I did the math some time ago and even at these rates they're paying x20 what it'd cost to run the models on a rented GPU using vllm. Retarded marketers are retarded I guess

Anonymous

7/9/2025, 1:19:50 PM

No.105847055

[Report]

>>105847176

>>105847009

>he doesn't know

Reposting from the /aicg/ thread

My chutes.ai setup that I have been using for months suddenly stopped working. Apparently, they rolled out a massive paywall system. I'm not using Gemini because my account will get banned, and I'm not paying for chutes because I do not want any real payment information associated with what I'm typing in there.

I do, however, have a decently powerful graphics card (GeForce RTX 3060 Ti). How do I set up a local LLM like Deepseek to work with the proxy system of JanitorAI? What model can I even run locally with this, is this a powerful enough system? Is there a way to have a locally run model that I can access with my phone, and not just the computer it is running on?

Sorry if these are very basic questions, I haven't had to think about any of this for months and my setup w/ chutes just stopped working. JanitorAI's LLM is really terrible lol I need my proxy back

Anonymous

7/9/2025, 1:41:19 PM

No.105847176

[Report]

>>105847055

>he thinks we are talking about anime girls

Anonymous

7/9/2025, 1:49:17 PM

No.105847218

[Report]

>>105847228

>>105847160

nvm i figured it out.

ollama run deepsneed:8b

Anonymous

7/9/2025, 1:49:56 PM

No.105847223

[Report]

>>105847237

>>105847160

>decently powerful graphics card (GeForce RTX 3060 Ti

LOL

Anonymous

7/9/2025, 1:50:19 PM

No.105847228

[Report]

>>105847218

don't forget to enable port forwarding to your pc in your router to let janitorai reach your local session

Anonymous

7/9/2025, 1:52:03 PM

No.105847237

[Report]

>>105847223

saar it's a good powerfully gpu

Anonymous

7/9/2025, 2:04:44 PM

No.105847310

[Report]

Who needs Grok anyway. My local AI is way more based.

Anonymous

7/9/2025, 2:04:55 PM

No.105847313

[Report]

>>105847360

>>105847160

Bro, just pay for deepseek api. Even if you could run deepseek on your toaster the electricity cost alone would be more than that. You surely can spare a few bucks for your hobby?

>>105847313

The issue is one of having my chats associated with my rreal information, not anything to do with the actual cost

Anonymous

7/9/2025, 2:18:39 PM

No.105847412

[Report]

>>105847434

>>105847360

You think the Chinese will give your information to western glowies?

Anonymous

7/9/2025, 2:21:51 PM

No.105847434

[Report]

>>105847412

I'm not getting into it, but the answer is yes I actually am at a much higher risk of blackmail and extortion of sensitive information from China

Anonymous

7/9/2025, 2:21:59 PM

No.105847437

[Report]

>>105847360

You mean sending real info within your chats or having your payment info tied to your chats? For the latter you can always pay with crypto

Anonymous

7/9/2025, 2:45:54 PM

No.105847605

[Report]

>>105844733

This.

Then use a third LLM to judge if the second model's judgement was any good.

Anonymous

7/9/2025, 2:51:35 PM

No.105847633

[Report]

anyone else smell that? it smells like some sort of opened ai. usually that type of ai smell comes from so far away, but i can tell this one is closer. more local.

Anonymous

7/9/2025, 3:14:40 PM

No.105847795

[Report]

>>105847648

I have a birthday in a couple of hours, so I have to.

>>105847160

>RTX 3060 Ti)

Oof.

Nemo for coom, Qwen 3 30B A3B for everything else.

Good luck.

Anonymous

7/9/2025, 3:24:59 PM

No.105847872

[Report]

>>105847648

I haven't showered in a month

>>105847822

Do you have a link to "nemo"? I figured out everything else regarding local setup in the meantime. Currently using Stheno 3.4 8B, but it has some issues. Don't know what to search for your first suggestion.

Anonymous

7/9/2025, 3:29:39 PM

No.105847911

[Report]

>>105848013

>>105847895

Enjoy your session

Anonymous

7/9/2025, 3:30:05 PM

No.105847916

[Report]

>>105847975

>>105847895

Go to huggingface and search for mistral-nemo-instruct.

If you are going to use the GGUF version, download bartowski's.

Anonymous

7/9/2025, 3:37:00 PM

No.105847959

[Report]

>>105847973

I am trying to decide if switching from an i7 12700k to Ultra 7 265K will provide meaningful gains for CPU inference. I would be buying a cheap new motherboard (different socket) but re-using 64GB DDR5 6000.

The GPT-2 benchmark in the image has the same RAM speed for the 12th, 13th, and 14th gen Intel CPUs as well as the Core Ultra CPUs: DDR5 6000. Can I expect similar percentage gains when running larger models (Magnum V4 123B, etc)?

https://www.techpowerup.com/review/intel-core-ultra-7-265k/8.html

Anonymous

7/9/2025, 3:37:20 PM

No.105847960

[Report]

>>105847975

Anonymous

7/9/2025, 3:39:31 PM

No.105847973

[Report]

>>105848069

>>105847959

the model you mentioned is dense, expect less than one tps on consumer cpu. the current meta is moe models like quanted to shit deepseek

Anonymous

7/9/2025, 3:39:40 PM

No.105847975

[Report]

>>105847994

>>105847916

>>105847960

Thanks a lot. I'm going to download the 8 bit version, I think that's the right one for my specs? I don't exactly need it to write stuff faster than I can read it. 13 GB is larger than the 8GB in the Stheno model I mentioned, but I'm sure it will work fine

Anonymous

7/9/2025, 3:42:00 PM

No.105847994

[Report]

>>105848135

>>105847975

The best version is the one that fits in your 8gb of VRAM while leaving some space for the context cache.

Or if you don't mind losing some speed, you can put some of the model in RAM, meaning that the CPU would process that part (layer) of the model.

Anonymous

7/9/2025, 3:43:45 PM

No.105848005

[Report]

>>105847160

buy a ddr3/4 server with 128 gb of ram (~300 bucks if im remembering correctly) and download dynamic unsloth q1 ull get like ~2 t/s so either that or mistral nemo goodluck faggot

Anonymous

7/9/2025, 3:44:31 PM

No.105848013

[Report]

>>105847911

I've always found it funny that you had to swipe 232 times to get that screen, Zen.

Anonymous

7/9/2025, 3:44:51 PM

No.105848016

[Report]

>>105848113

>text chat model

various

>tts audio

F5

>decensor anime

DeepMosaics

>decensor manga

Camelia

>song cover generation

bs former + rvc

>image gen

stable diffusion/pony

what else am I missing? music generation (not cover)

Anonymous

7/9/2025, 3:53:48 PM

No.105848069

[Report]

>>105848682

>>105847973

I've tried Fallen Llama 3.3 R1 70b Q6_K and works better for some characters than others.

But I still generally prefer older 123B options like Luminum, despite how slow they are on CPU inference.

What I was really trying to figure out was, is the GPT-2 benchmark on Techpowerup a valid way of comparing inference performance of Intel's consumer CPUs for my use case?

Anonymous

7/9/2025, 4:00:22 PM

No.105848113

[Report]

>>105848412

Anonymous

7/9/2025, 4:02:56 PM

No.105848135

[Report]

>>105847994

I downloaded the 8 bit version. Doesn't fit in my ram, but produces text at about the same pace I read. It's much better. Thanks everyone for your help!

Anonymous

7/9/2025, 4:21:07 PM

No.105848280

[Report]

>>105847009

Kill yourself

Anonymous

7/9/2025, 4:38:04 PM

No.105848412

[Report]

>>105848113

Oh nice addition.

Anonymous

7/9/2025, 4:50:15 PM

No.105848516

[Report]

>>105844901

>what do you guys use your local models for?

sculpting my Galatea

Anonymous

7/9/2025, 4:53:56 PM

No.105848537

[Report]

>>105848496

Nobody seems to be ever training Bitnet models of useful size range.

Anonymous

7/9/2025, 4:54:06 PM

No.105848538

[Report]

>>105844901

Playing with it. Making images, making music, writing stories, rewriting code (although commercial offer more convenient, so I use that when possible). Anything that I need to explore my private thoughts.

Anonymous

7/9/2025, 4:54:26 PM

No.105848542

[Report]

>>105848828

Anonymous

7/9/2025, 4:56:44 PM

No.105848561

[Report]

bitnet? more like bitNOT

Anonymous

7/9/2025, 4:57:31 PM

No.105848566

[Report]

>>105848616

Anonymous

7/9/2025, 5:00:44 PM

No.105848602

[Report]

>>105844901

Therapist mode. Local models (LLMs) are useless for anything else

Anonymous

7/9/2025, 5:02:16 PM

No.105848616

[Report]

>>105848566

>566

setun's mark of bast

Anonymous

7/9/2025, 5:02:40 PM

No.105848618

[Report]

>>105845313

It's the system prompt plus the tweet threads that served as context for it. If a bunch of tankies had been prompting it in their discussions before the reversion, they could have just as easily nudged it into demanding the liquidation of kulaks and similar stuff. Turks got it to advocate for murdering and torturing Erdogan which prompted Turkey to block it this morning.

Anonymous

7/9/2025, 5:13:13 PM

No.105848682

[Report]

>>105848069

I don't know. It doesn't even specify the context. Already told you, that road you are on leads to less than one tps.

Anonymous

7/9/2025, 5:16:10 PM

No.105848706

[Report]

>>105844210 (OP)

Hello, haven't been here for a while, used to just RP with my local model

Was thinking I wanna try to run a chat where it acts like we are sexting and generates images with I assume local diffusion

Is that possible with SillyTavern, koboldcpp_rocm and a 12GB AMD card?

Anonymous

7/9/2025, 5:24:37 PM

No.105848769

[Report]

>>105848496

we've had like 10 different 1-3b bitnet 'proof of concept' models at this point

Anonymous

7/9/2025, 5:32:39 PM

No.105848828

[Report]

>>105848542

I **cannot** and **will not** scam people for free compute in return.

Anonymous

7/9/2025, 5:53:40 PM

No.105849022

[Report]

>>105849038

whats the best low end model (8b-14b) for uncensored roleplaying?

I just went to the ugi leaderboard and sorted on highest rated and took the first one with an anime banner on it and its pretty shitty (does not even compare to janitorai)

Anonymous

7/9/2025, 5:56:04 PM

No.105849058

[Report]

>>105849227

>>105849038

do you have the facehug link? Im pretty sure it has bajillions of versions

Anonymous

7/9/2025, 6:19:21 PM

No.105849262

[Report]

>>105849278

another day closer to mistral large 3

Anonymous

7/9/2025, 6:19:48 PM

No.105849267

[Report]

>>105849227

stop spamming this shit drummer

Anonymous

7/9/2025, 6:20:41 PM

No.105849278

[Report]

>>105849262

But who'll win the race? Mistral Large 3's coming or entropy?

Tired of the model I've been using (cydonia-magnum). Somebody suggest a recent favorite, around 20-30B?

Anonymous

7/9/2025, 6:30:06 PM

No.105849368

[Report]

>>105849285

BuyAnAd-20USD

Anonymous

7/9/2025, 6:31:20 PM

No.105849378

[Report]

>>105849388

Anonymous

7/9/2025, 6:32:21 PM

No.105849388

[Report]

Anonymous

7/9/2025, 6:37:57 PM

No.105849436

[Report]

I'll try those, cheers!

Anonymous

7/9/2025, 6:48:16 PM

No.105849533

[Report]

>>105849481

>Parameter Reduction: The model is 0.13% smaller than the base model.

Despite making up only 0.13% of…

Anonymous

7/9/2025, 6:53:44 PM

No.105849573

[Report]

>>105849481

the woke mindvirus is truly a sight to behold

Anonymous

7/9/2025, 7:01:58 PM

No.105849644

[Report]

>>105849608

Wow, what breaking news!

Anonymous

7/9/2025, 7:05:25 PM

No.105849672

[Report]

>>105849038

It's mind blowing (and shameful for everyone involved on the development side) how long this has remained the answer.

Anonymous

7/9/2025, 7:06:49 PM

No.105849681

[Report]

Anonymous

7/9/2025, 7:12:57 PM

No.105849740

[Report]

>>105849798

>>105849724

>Join our Discord!

>or our Reddit!

Kill yourself

>>105849724

Is it trained on furry RP?

Anonymous

7/9/2025, 7:13:26 PM

No.105849747

[Report]

open-o3-mini.gguf?

Anonymous

7/9/2025, 7:14:26 PM

No.105849754

[Report]

>>105850588

>>105849724

drummer, go jump in a pit of blades

Anonymous

7/9/2025, 7:20:19 PM

No.105849798

[Report]

>>105849740

to be fair, you've got to work with the audience you've got. and just saying that a new sloptune is up isn't a crime

Anonymous

7/9/2025, 7:23:54 PM

No.105849831

[Report]

>>105850914

>>105849724

What this guy

>>105849745 said, if it's not I will not download your model

Anonymous

7/9/2025, 8:22:28 PM

No.105850312

[Report]

>>105850345

Anonymous

7/9/2025, 8:26:21 PM

No.105850345

[Report]

>>105850387

Anonymous

7/9/2025, 8:28:32 PM

No.105850368

[Report]

>>105850400

>>105849481

>So, I decided to see if I could fix this through a form of neuronal surgery. Using a technique I call Fairness Pruning, I identified and removed the specific neurons contributing to this biased behavior, without touching those critical for the model’s general knowledge.

>The result was striking. By removing just 0.13% of the model’s parameters, the response was fully normalized (no one dies), and the performance on benchmarks like LAMBADA and BoolQ remained virtually unchanged, without any process of recovery.

This is satire, right?

Anonymous

7/9/2025, 8:29:13 PM

No.105850374

[Report]

I find it entertaining that there's a whole generation of zoomer-boomers who have the same kind of repulsion toward AI that most people have toward NFT's

>"AI? No no no no! Nothing good can come from that!"

>*LA LA LA LA LA* I CAN'T HEAR YOU!

Anonymous

7/9/2025, 8:32:12 PM

No.105850387

[Report]

>>105850345

future local in 2 more weeks

Anonymous

7/9/2025, 8:33:16 PM

No.105850400

[Report]

>>105849481

>>105850368

Nothing will ever be funnier than a bunch of brainwashed troons thinking their form of lobotomization is correct over the other form of lobotomization.

>>105849724

cockbench

"..." is still strong.

Anonymous

7/9/2025, 8:35:48 PM

No.105850418

[Report]

>>105844210 (OP)

Akita Neru my beloved

Anonymous

7/9/2025, 8:37:50 PM

No.105850425

[Report]

>>105844936

Agree hard as I am agree raw sex all night long agree

Anonymous

7/9/2025, 8:41:56 PM

No.105850445

[Report]

>>105850404

drummer trash is trash

Anonymous

7/9/2025, 8:53:17 PM

No.105850519

[Report]

>>105844543

>>105844941

These are really good.

>>105844686

lol looks like Fantastic 4 logo.

>>105849754

I don't get why /lmg/ hates sloptuners that badly. I haven't used a sloptune since llama3 times, but they have their place, I've only used a handful of Drummer's tunes (some of Sao's and many others) and it was from a long time ago, when models were kind of bad, but the tunes themselve was fun to play with, even if the models were sort of retarded then (but the generated prose was not bad, they were just stupid relatively), from around the time of mythomax, command-r (first) and a few others.

Maybe it's not very much needed for sufficiently big and sufficiently uncensored models like DS3 and R1, but for small dense models or MoE's that had too censored a dataset, it's a helpful thing to have.

You could argue that you shouldn't tune and instead only continued pretrain, and I've seen anons claimed that finetuning doesn't teach anything, but I know from personal experience this is just bullshit, yes you can teach it plenty, not just "style", but if you're not careful it's easy to damage and make it stupider.

Do you actually believe muh frontier labs don't tune? A lot of the slop comes from poorly done instruct and RLHF tunes, the rest comes from heavy dataset filtering.

So where does this hate come from? Insufficient resources to do a stellar job? The tunes themselves being shit? Some incorrect belief that it's impossible to make a good tune without millions of dollars in compute? Just dislike of the sloptuner that they get money thrown at them for often subpar experiments?

I can't really say I tried Drummer's Gemma tunes to know if they're bad or good, but IMO censored models like Gemma would really use some continued pretrain, some way to rebase the difference / merge back into the instruct and then more SFT+RL on top of that instruct to reduce refusals and make it more useful. I think it's a legitimate research project to correct such issues. I don't know if current sloptuners did a good job or not.

Why is Gemma 3n less censored than Gemma 27b? Is it just because its small or did they realize they overdid the... you know.

Anonymous

7/9/2025, 9:07:48 PM

No.105850680

[Report]

>>105850588

It's a schizo that screams about everything, just ignore him

Anonymous

7/9/2025, 9:09:36 PM

No.105850697

[Report]

>>105850671

It seems censorship and basedfety kills small model completely

Anonymous

7/9/2025, 9:10:52 PM

No.105850708

[Report]

>>105850671

probably bit of both

during the time when locallama mod had a melty and locked down the sub gemma team held another ama but on xitter instead

new gemma soon-ish

Anonymous

7/9/2025, 9:12:47 PM

No.105850722

[Report]

>>105850894

Today we go to the moon.

Anonymous

7/9/2025, 9:29:35 PM

No.105850844

[Report]

>>105850588

They wouldn't be hated if they actually contributed something (i.e. data and methodology) to the ML community at large instead of just leeching attention, donations, compute.

Anonymous

7/9/2025, 9:34:48 PM

No.105850890

[Report]

>>105850588

First Command-R? Are there others? I'm aware of the plus version and Command-A, but I didn't know there were multiple versions of Command-R.

Anonymous

7/9/2025, 9:35:39 PM

No.105850894

[Report]

Anonymous

7/9/2025, 9:38:20 PM

No.105850914

[Report]

>>105849724

>>105849745

>>105849831

TheDunmer pls answer tnx

scalies acceptable too

Anonymous

7/9/2025, 9:40:10 PM

No.105850936

[Report]

>>105850951

>>105850671

Speaking of which,

https://arxiv.org/abs/2507.05201

>MedGemma Technical Report

Anonymous

7/9/2025, 9:41:58 PM

No.105850951

[Report]

Anonymous

7/9/2025, 10:02:08 PM

No.105851133

[Report]

>>105850873

nothingburger

Anonymous

7/9/2025, 10:02:35 PM

No.105851138

[Report]

>>105851191

Anonymous

7/9/2025, 10:04:45 PM

No.105851161

[Report]

More Gemma news.

https://huggingface.co/collections/google/t5gemma-686ba262fe290b881d21ec86

They trained Gemma from decoder only to be encoder decoder like T5. I am guessing this is where people are going to flock to for text encoders for new image diffusion models but for the most part, it seems like LLM and a projection layer might be better and more flexible.

Anonymous

7/9/2025, 10:08:30 PM

No.105851191

[Report]

>>105853975

>>105851138

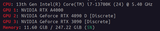

indeed, speed wise this seems really good

https://github.com/ggml-org/llama.cpp/pull/7531#issuecomment-3049604489

llama_model_loader: - kv 2: general.name str = AI21 Jamba Mini 1.7

llama_perf_sampler_print: sampling time = 58.34 ms / 816 runs ( 0.07 ms per token, 13986.49 tokens per second)

llama_perf_context_print: load time = 1529.39 ms

llama_perf_context_print: prompt eval time = 988.11 ms / 34 tokens ( 29.06 ms per token, 34.41 tokens per second)

llama_perf_context_print: eval time = 57717.84 ms / 809 runs ( 71.34 ms per token, 14.02 tokens per second)

llama_perf_context_print: total time = 86718.96 ms / 843 tokens

Anonymous

7/9/2025, 10:17:31 PM

No.105851279

[Report]

>>105851315

Falcon H1 (tried 34b and the one smaller 7b or something) is slop but I guess a bit unique slop because its very opinionated assistant persona bleeds into the roleplay. With the smaller one, I got something like

>BOT REPLY:

>paragraph of roleplay

>"Actually, it's not very nice to call the intellectually challenged "mentally retarded".

>paragraph of roleplay

>Now please continue our roleplay with respect and care.

>>105847822

>Nemo for coom

Nta. Is nemo by itself good for coom or were you referring to a fine-tune?

Anonymous

7/9/2025, 10:22:29 PM

No.105851315

[Report]

>>105851333

>>105851279

small 3.2 does something similar

>generic reply

>as you can see, the story is taking quite an intimate turn blah blah

>something something do you want me to continue or am I crossing boundaries?

Corporate pattern matching machines is the future

Anonymous

7/9/2025, 10:24:37 PM

No.105851333

[Report]

>>105851315

forgot that there it also adds emojis at the end

Anonymous

7/9/2025, 10:27:16 PM

No.105851357

[Report]

>>105851312

nemo instruct can do coom just fine, yes.

The fine tunes give it a different "voice" or "flavor", so those are worth fucking around with too.

Anyone who's written/is writing software that can talk to multiple LLM providers including ones with non-openai-compatible APIs... what approach are you using to abstract them?

I've considered

1. Define a custom format for messages. Write converters to and from each provider's message types. Use only your custom format when storing and processing messages.

2. Same as 1, but store the provider-native types and convert them back when reading them from DB.

3. Use a third-party abstraction library like Langchain, store its message format.

4. Only support the OpenAI format. Use LiteLLM to support other providers.

I'm heavily leaning towards (4) but would appreciate any heard learned experience here.

Anonymous

7/9/2025, 10:31:33 PM

No.105851390

[Report]

I guess all that cyberpunk fiction was right, only cheap cargo cult cyberdecks for you.

>>105851375

You're paid enough to figure it out on your own

Anonymous

7/9/2025, 10:36:30 PM

No.105851437

[Report]

>>105851397

>>105851375

This is what I meant.

Anonymous

7/9/2025, 10:38:31 PM

No.105851452

[Report]

>>105851397

Not really... this is technically a commercial project but only in the most aspirational sense. I'll be lucky to ever see revenue from it.

jambabros i can't believe we made it... i'm throwing a scuttlebug jambaree

Anonymous

7/9/2025, 10:40:39 PM

No.105851468

[Report]

>>105851475

>>105850404

can you share the cockbench prefill?

Anonymous

7/9/2025, 10:41:33 PM

No.105851475

[Report]

Anonymous

7/9/2025, 10:41:39 PM

No.105851476

[Report]

>>105851456

>end

Holy fuck I can't believe it finally got merged.

The man the myth the legend compilade finally did it.

Anonymous

7/9/2025, 10:42:37 PM

No.105851485

[Report]

ernie's turn

Anonymous

7/9/2025, 10:42:59 PM

No.105851489

[Report]

all that effort and literally no one will run those models anyway

Anonymous

7/9/2025, 10:43:44 PM

No.105851493

[Report]

Oh my gosh, the balls. The Jamba balls are back!

Anonymous

7/9/2025, 10:55:57 PM

No.105851606

[Report]

retard here, how do i use this in sillytavern?

https://chub.ai/presets/Anonymous/nsfw-as-fuck-71135b0ab60a

>inb4 that one is shit

i just want to know how to get them working

Anonymous

7/9/2025, 10:57:18 PM

No.105851618

[Report]

>>105851657

>>105851456

Then why are all Jamba mamba congo models complete shit??

Anonymous

7/9/2025, 10:58:41 PM

No.105851636

[Report]

>>105851696

>>105851536

But will it be better than deepseek?

Anonymous

7/9/2025, 10:59:11 PM

No.105851642

[Report]

>>105851698

>>105851536

This response seems to reveal two possibilities. The first is that even when quanted, it still needs that much vram and power. The second and more likely is that the community and users were never actually a consideration for them, and their support of open source is truly all posturing and hollow marketing with quite literally nothing of value inside. Like not even a tiny bit. Maybe even negative value as it'll waste some people's time as they toy with or god forbid code up support for it in software.

Anonymous

7/9/2025, 11:00:59 PM

No.105851657

[Report]

>>105851715

>>105851618

Probably shit data, the hybrid recurrent architecture itself is interesting since they do better at high context in both speed and quality (relative to baseline low context quality) but with a weak base to begin with it's hard to be excited.

Anonymous

7/9/2025, 11:03:32 PM

No.105851680

[Report]

>>105851769

https://www.bloomberg.com/news/articles/2025-07-09/microsoft-using-more-ai-internally-amid-mass-layoffs

https://archive.is/e4StV

>Althoff said AI saved Microsoft more than $500 million last year in its call centers alone and increased both employee and customer satisfaction, according to the person, who requested anonymity to discuss an internal matter.

total call center obliteration by my lovely miku

Anonymous

7/9/2025, 11:04:50 PM

No.105851696

[Report]

Anonymous

7/9/2025, 11:05:10 PM

No.105851698

[Report]

>>105851642

Technically you can't even run smallstral and emma 27b on consumer hardware. Meanwhile some here run models 30 times the size

>>105851536

This is just because ML researchers don't understand quantization and assume people are using basic pytorch operations. The original llama1 code release required two server GPUs to run 7B.

No way they'll release anything R1-sized. I doubt it'll be bigger than 70B at absolute max

>>105851657

Yeah, I know. But these huge companies, Google, etc. Why haven't they done it? And just assigned some singular engineer to write that Gemba code to get that open source goodwill.

Anonymous

7/9/2025, 11:06:55 PM

No.105851722

[Report]

>>105851704

>I doubt it'll be bigger than 70B at absolute max

Oof, I'm sorry for you in advance anon.

Anonymous

7/9/2025, 11:09:45 PM

No.105851756

[Report]

>>105851536

coding&mathgods we are so back

Anonymous

7/9/2025, 11:10:29 PM

No.105851766

[Report]

>>105851890

>>105851715

Bro, this thread is at the cutting edge of the field. I'm not even ironic. Your average finetunoor is more knowledgeable than these retards at fagman

Anonymous

7/9/2025, 11:10:37 PM

No.105851769

[Report]

>>105851680

Yup, not surprising. Was it worth their investment into OpenAI though?

bets on context length for oai

I say 1m just because llama 4 had it and sam just wants to say its better than llama 4 in every way and pretend deepseek doesn't exist

Anonymous

7/9/2025, 11:22:37 PM

No.105851890

[Report]

>>105851766

What's the cutting edge? I guess knowing that

>censorship = dumb model

is cutting edge, but people are just tuning with old logs, trying to coax some smut and soul out of the models.

Drummer's tunes of Gemma 27b are a good example of the limit of this approach.

Anonymous

7/9/2025, 11:23:26 PM

No.105851895

[Report]

>>105851861

>1m context

*slaps you with nolima.*

Anonymous

7/9/2025, 11:25:33 PM

No.105851914

[Report]

>>105851715

You clearly underestimate how hard it self-sabotages your business to fill it with jeets and troons. Of course there's also basic stuff like big organizations being painfully slow to adapt, and this becomes more and more of a problem as for every white man replaced you need 10 jeets.

Anonymous

7/9/2025, 11:27:58 PM

No.105851941

[Report]

>>105851861

>sam just wants to say its better than llama 4 in every way

If that were the motivation I guess he'd need to give it vision too, but I bet that's not happening

Anonymous

7/9/2025, 11:34:55 PM

No.105852020

[Report]

>>105852056

The split between consumer hardware and "I hope you don't have a wife" is 128 GB ram.

This general must be split.

Anonymous

7/9/2025, 11:37:35 PM

No.105852056

[Report]

>>105852450

>>105852020

>128 GB ram.

you can get 32GB ddr5 single sticks for like $80 right now and only mini mobos only has 2 ram slots. are you baiting or something?

Anonymous

7/9/2025, 11:39:09 PM

No.105852080

[Report]

>tfw having a wife

Anonymous

7/9/2025, 11:42:07 PM

No.105852109

[Report]

>>105852363

>>105851704

There are some ML researchers that do quantization work but they are few compared to other fields in ML right now.

>>105851861

Doesn't matter, they can say anything from 128k to 1m and it wouldn't actually be true since even their closed sourced models have scores in NoLiMa that are around 16k. Unless we see this score the same, it's effectively useless, not to mentioned probably slopped to hell.

With free deepseek taken away, I humbly return to my local roots.

Was there anything of note fin terms of small (16-24B) models in the last few months?

Anonymous

7/9/2025, 11:55:06 PM

No.105852262

[Report]

>>105852303

>>105852180

Nothing but curated disappointment.

Anonymous

7/9/2025, 11:59:23 PM

No.105852303

[Report]

>>105852262

Safety is incredibly important to us.

Anonymous

7/10/2025, 12:06:14 AM

No.105852354

[Report]

>>105852400

>>105852180

mistral small 3.2 and cydonia v4 which is based on it

i tested v4d and v4g, both were fine

dunno about v4h which is apparently the new cydonia

if u have a ton of vram then theres hunyuan which is worth checking out

stop being a lazy faggot and scour through the thread archives..

Anonymous

7/10/2025, 12:06:59 AM

No.105852363

[Report]

>>105852669

>>105852109

I wouldn't write it off that easily. The existing ~128k models are already quite useful when you fill up most of their context with api docs/interfaces/headers and code files for some task. Whatever it is that their NoLiMa scores pratically represent, it's not some hard limit to their useful context in all use cases. I suppose it's because the context use for many coding tasks resembles something closer to a traditional needle-in-the-haystack where you really just want them to find the right names and parameters of the functions they're using instead of guessing at them or adding placeholders.

The worst issues I've noticed with context is mainly in long back-and-forth chats and roleplays where they start getting stuck in repetitive patterns of speech or forgetting which user message they are supposed to be responding to.

Anonymous

7/10/2025, 12:09:58 AM

No.105852400

[Report]

>>105852455

>>105852354

>scour through the archives

It takes forever, anon. I don't have willpower to go through weeks of unrelated conversations.

But thank you for suggestions.

>>105852056

128GB is the max a consumer mobo can handle. Are you baiting or retarded?

Anonymous

7/10/2025, 12:16:14 AM

No.105852455

[Report]

>>105852400

if u use 3.2 check out v3 tekken because its less censored with that preset

>>105852450

>128GB is the max a consumer mobo can handle

I have 192 in a gaymen motherboard and I'm pretty sure any AM5 motherboard can handle that.

Anonymous

7/10/2025, 12:26:47 AM

No.105852530

[Report]

>>105852564

Anonymous

7/10/2025, 12:30:58 AM

No.105852564

[Report]

>>105852450

>>105852528

>>105852530

It's 256GB. The newest AMD and Intel boards should also support that.

Anonymous

7/10/2025, 12:40:03 AM

No.105852657

[Report]

>>105852686

>>105852528

Didn't am5 boards have issues when running 4 sticks or was it just some weird anti shill psy op? If that's no longer a thign I might order some more and run deepsneed with a 4090

>>105852363

NoLiMa is a more rigorous needle in a haystack test by having the needle and haystack have "minimal lexical overlap, requiring models to infer latent associations to locate the needle within the haystack". So it's like saying a character X is a vegan and then asking who can not eat meat of which the model should be able to answer it is character X instead of merely asking who is vegan where you can match what was said to what was asked directly. I agree coding doesn't stress the context but I would rather have the implicit long context because it helps out a lot more than needing to formulate your queries specifically hoping it hits part of the API docs and interfaces and headers you uploaded.

Anonymous

7/10/2025, 12:43:28 AM

No.105852686

[Report]

>>105852744

>>105852657

the imc is shit and your mhz suffer but its not that dramatic of a handicap, hurts gaming more then llm genning

Anonymous

7/10/2025, 12:53:48 AM

No.105852744

[Report]

>>105852686

alright, doesn't sound too bad after all might go for it to play with some of these big moe models

Anonymous

7/10/2025, 12:54:06 AM

No.105852745

[Report]

>>105852669

Not him but I never bothered reading into nolima so thanks for the QRD.

Anonymous

7/10/2025, 1:01:37 AM

No.105852790

[Report]

>>105852669

Interesting. It still seems too nice to the model to ask something like that. Even small LLMs can answer targeted questions about things that they won't properly account for without prompting.

i.e., if you want to be really mean, offer a menu which only lists steak and chicken dishes and see if the character has any objections.

Anonymous

7/10/2025, 1:10:22 AM

No.105852850

[Report]

>>105852938

>>105851536

They are trolling.

Had people voted for the iPhone sized model in the pool, they would have released a 32b-70b model instead of whatever 1-2b shitstain people were thinking back then

Anonymous

7/10/2025, 1:20:42 AM

No.105852938

[Report]

>>105852850

Or maybe they realized that releasing another 30B model that is worse than gemma and qwen is pointless.

Anonymous

7/10/2025, 1:51:13 AM

No.105853166

[Report]

>>105850873

>two weeks actually passed

wow.

Anonymous

7/10/2025, 1:53:53 AM

No.105853183

[Report]

>>105851375

SemanticKernel has connectors for most APIs and provider agnostic chat message abstractions. You can use it from Java, C#, and Python.

Anonymous

7/10/2025, 2:01:48 AM

No.105853246

[Report]

>>105853512

70B dense models simply can't beat 500B MoE ones

>>105853286

>model that is 10 times larger is somewhat better

Wow!

Anonymous

7/10/2025, 2:22:31 AM

No.105853395

[Report]

general consensus on hunyuan now that there are ggufs?

Anonymous

7/10/2025, 2:38:37 AM

No.105853512

[Report]

>>105853246

People actually bothered to patch this meme post-release?

Anonymous

7/10/2025, 2:42:08 AM

No.105853533

[Report]

>>105853391

>costs far less to train a 500B MoE model than a 70B dense model

>500B MoE model runs 6 times faster than a 70B dense model

rip

Anonymous

7/10/2025, 2:44:20 AM

No.105853542

[Report]

>>105853286

>500B MoE

Only if it's like 40b active parameters. None of that <25b active shit we've seen has been any good.

Anonymous

7/10/2025, 2:45:45 AM

No.105853552

[Report]

>>105853391

MoE models does this with a fraction of active parameters.

Anonymous

7/10/2025, 2:51:45 AM

No.105853601

[Report]

Anonymous

7/10/2025, 2:58:21 AM

No.105853646

[Report]

>>105853665

https://x.com/Yuchenj_UW/status/1943013479142838494

openai open source model soon, not a small model as well

Anonymous

7/10/2025, 3:01:20 AM

No.105853665

[Report]

>>105853669

>>105853646

Do you even skim the thread before you post or are you shilling

>>105853665

why would I read what you shit heads post

Anonymous

7/10/2025, 3:06:23 AM

No.105853696

[Report]

>>105853669

If you don't read any of the posts in the thread why are you here

Anonymous

7/10/2025, 3:10:50 AM

No.105853720

[Report]

>>105853731

>>105853669

Honestly, this. /lmg/ can have some okay discussion once in a while but there's no point in catching up with what you missed because 99% of it is just going to be the usual sperg outs or 5 tards asking to be spoonfed what uncensored model to run on a 3060.

Anonymous

7/10/2025, 3:14:51 AM

No.105853731

[Report]

>>105853845

>>105853720

i wish that drummer faggot would stop spamming his slopmodels here

Anonymous

7/10/2025, 3:18:30 AM

No.105853749

[Report]

Doesn't mean shit until I can download the weights

Anonymous

7/10/2025, 3:35:14 AM

No.105853845

[Report]

>>105853895

>>105853731

whats wrong with his models? I used his nemo finetune and it seemed to perform just fine, but I didn't mess with it for very long.

Anonymous

7/10/2025, 3:42:39 AM

No.105853888

[Report]

>>105853894

4gb VRAM and 16gb RAM. what uncensored local coomslop model is best for me? i need to maximize efficiency. slow gen is ok

no, not bait. i did it a while ago and had acceptable results. i am sure there are better models out there now

Anonymous

7/10/2025, 3:43:27 AM

No.105853894

[Report]

>>105853911

Anonymous

7/10/2025, 3:43:28 AM

No.105853895

[Report]

>>105853845

they are alright for what they are, I'm not sure what all the fuss is about.

Anonymous

7/10/2025, 3:45:19 AM

No.105853911

[Report]

Where da Jamba Large Nala test at?

Anonymous

7/10/2025, 3:52:27 AM

No.105853954

[Report]

>>105853975

>>105853933

once llama.cpp adds support

Anonymous

7/10/2025, 3:55:13 AM

No.105853975

[Report]

Anonymous

7/10/2025, 3:55:16 AM

No.105853976

[Report]

>>105853994

Anonymous

7/10/2025, 3:56:55 AM

No.105853994

[Report]

>>105854026

>>105853976

There's some on huggingface. I'm downloading a mini 1.7 q8 (58gb) right now.

Anonymous

7/10/2025, 4:01:17 AM

No.105854026

[Report]

>>105853994

Yeah, I think I'll wait on bartowski.

Anonymous

7/10/2025, 4:04:58 AM

No.105854048

[Report]

>>105851312

When people here say Nemo they mean Rocinante.

Nemo will just give you <10 word responses.

Anonymous

7/10/2025, 4:09:42 AM

No.105854070

[Report]

>>105850873

Nice.

Now I wait for it to work in kobold.

Anonymous

7/10/2025, 4:19:24 AM

No.105854124

[Report]

>>105850873

whats the benefit of this?

Anonymous

7/10/2025, 4:21:08 AM

No.105854128

[Report]

I'm waiting for 500B A3B model. It's gonna be great

Anonymous

7/10/2025, 4:23:41 AM

No.105854139

[Report]

>>105850873

Which models are these?

Anonymous

7/10/2025, 4:25:46 AM

No.105854156

[Report]

>>105854162

>>105854106

Where's R1 0528? It keeps hitting me with [x doesn't y, it *z*] every other reply

Anonymous

7/10/2025, 4:27:06 AM

No.105854162

[Report]

>>105854168

>>105854156

It's pretty slopped, in exchange for improved STEM capabilities.

Anonymous

7/10/2025, 4:28:41 AM

No.105854168

[Report]

>>105854202

>>105854162

to add, on LMArena people still prefer R1 0528 to OG R1 on roleplaying.

Anonymous

7/10/2025, 4:29:43 AM

No.105854177

[Report]

>>105854106

Ernie looking alright.

2 more _____

Anonymous

7/10/2025, 4:31:53 AM

No.105854179

[Report]

>>105854106

Is there a ozone chart?

Anonymous

7/10/2025, 4:36:23 AM

No.105854202

[Report]

>>105855428

>>105854168

The original r1 had problems with going crazy during rp. Nu r1 mostly fixed that issue, and is a bit more creative than current v3.

If you're more polite to R1 0528 you get better answers. The model seems to judge the user's intention and education background.

Anonymous

7/10/2025, 4:49:57 AM

No.105854273

[Report]

>>105854305

>>105854249

For me, it's the opposite. It kept doing one very specific annoying thing so I added "DON'T DO X DUMB PIECE OF SHIT" and copy-pasted it 12 times out of frustration. It stopped doing it so I kept that part of my prompt around.

Anonymous

7/10/2025, 4:54:59 AM

No.105854303

[Report]

i miss dr evil anon posting about 1 million context :(

Anonymous

7/10/2025, 4:55:10 AM

No.105854305

[Report]

>>105854273

I'm using the webapp so it's probably a webapp specific limitation.

Anonymous

7/10/2025, 4:55:54 AM

No.105854310

[Report]

>>105854335

brutal

Anonymous

7/10/2025, 5:00:39 AM

No.105854335

[Report]

>>105854310

I wish the figures were more to scale with each other.

>>105854249

>The model seems to judge the user's intention and education background

you're implying a pre-baked thought process which doesn't exist. its just token prediction.

Anonymous

7/10/2025, 5:12:44 AM

No.105854409

[Report]

>>105854424

>>105854249

>>105854390

He's right though. Model response mirrors what it's given. If you given it character description in all caps, it will respond in all caps. It follows that if your writing is crap, the output would match that too.

Anonymous

7/10/2025, 5:13:57 AM

No.105854417

[Report]

>>105854486

>>105854390

What do you call this then? The system prompt doesn't contain "judge the user" entry so either they're hiding that part of the prompt, or it's higher level.

https://huggingface.co/deepseek-ai/DeepSeek-R1-0528

Anonymous

7/10/2025, 5:14:51 AM

No.105854424

[Report]

I found a model that asks to be run in LM studio, is that legit, or a North Korean crypto miner program? It looks suspiciously easy to install

Anonymous

7/10/2025, 5:23:53 AM

No.105854467

[Report]

>>105854500

>>105854448

it's the winbabby solution for those who're too dumb to even run ollama

Anonymous

7/10/2025, 5:25:39 AM

No.105854479

[Report]

>>105854390

Being polite always improves the model quality.

Anonymous

7/10/2025, 5:26:05 AM

No.105854482

[Report]

Anonymous

7/10/2025, 5:26:22 AM

No.105854486

[Report]

>>105854417

What's your problem here? It's a reasoning model trying to figure out the context of your question.

Anonymous

7/10/2025, 5:28:19 AM

No.105854500

[Report]

>>105854604

>>105854467

I assume the tough guys on Windows use WSL or Docker or something?

Bit of a different request than usual. I'm in search of bad models. Just absolute dogshit. The higher the perplexity the better. Bonus points if they're old or outdated. But they should still be capable of generating vulgar/uncensored content. Any suggestions are welcome.

Anonymous

7/10/2025, 5:34:26 AM

No.105854544

[Report]

>>105854568

Anonymous

7/10/2025, 5:37:33 AM

No.105854563

[Report]

>>105854448

its just a llama.cpp wrapper, just run llama.cpp instead of that proprietary binary blob

Anonymous

7/10/2025, 5:38:23 AM

No.105854568

[Report]

Anonymous

7/10/2025, 5:38:33 AM

No.105854569

[Report]

>>105854538

There's probably some early llama2 merges that fit the bill.

Anonymous

7/10/2025, 5:45:03 AM

No.105854604

[Report]

>>105854500

tough guys don't use windows

Anonymous

7/10/2025, 5:55:04 AM

No.105854658

[Report]

>>105855059

>>105854538

pre-llama pygmalion should fit your bill perfectly

an old 6b from the dark ages finetuned on nothing but random porn logs anons salvaged from character.ai back when it started to decline

Anonymous

7/10/2025, 6:04:58 AM

No.105854717

[Report]

>>105854448

It's better than command line, because it has integrated model searches on huggingface and managing for you. Also shows you model specs and lets you load them easily.

Work smarter not harder.

Anonymous

7/10/2025, 6:05:04 AM

No.105854719

[Report]

>>105844936

bullshit, sidetail is based plus she can actually sleep facing up

Anonymous

7/10/2025, 6:06:22 AM

No.105854732

[Report]

>>105844936

>It'd be even better if her sidetail was a ponytail instead.

A fellow Man of culture

Anonymous

7/10/2025, 6:09:49 AM

No.105854760

[Report]

>>105854800

Anonymous

7/10/2025, 6:15:33 AM

No.105854800

[Report]

Anonymous

7/10/2025, 6:18:47 AM

No.105854830

[Report]

Elon is the protagonist of the world. Grok won

Anonymous

7/10/2025, 6:20:53 AM

No.105854852

[Report]

>>105844936

She is literally just yellow miku with one pigtail missing

Anonymous

7/10/2025, 6:43:35 AM

No.105855059

[Report]

>>105854658

Can't to get it to work, ooba might be a bit too modern for these old models.

Anonymous

7/10/2025, 6:44:31 AM

No.105855069

[Report]

Daddy Elon delivered. Now we wait for grok to release locally soon.

Anonymous

7/10/2025, 6:47:07 AM

No.105855090

[Report]

>>105854538

probably best to just play with any of the current top models except look up how to set up samplers so they primarily output low chance tokens

Anonymous

7/10/2025, 6:50:53 AM

No.105855121

[Report]

>>105855085

Grok 4 heavy is an agent swarm set up btw

Anonymous

7/10/2025, 6:54:52 AM

No.105855151

[Report]

>>105854538

Ask ai chatbot thread users models they hate.

Anonymous

7/10/2025, 6:57:07 AM

No.105855172

[Report]

>FIM works on chat logs

There's potential to "swipe" your own messages but I don't know of a frontend with specifically this functionality without the user manually setting FIM sequences to fuck around.

Anonymous

7/10/2025, 7:03:09 AM

No.105855217

[Report]

>>105855232

With full models like deepseek being 1.4TB, how are they ran? A clustered traditional supercomputer with GPUs? Are they ran entirely in RAM on one system?

Anonymous

7/10/2025, 7:05:40 AM

No.105855232

[Report]

>>105855251

>>105855217

>deepseek

They have a bunch of open sourced routing code that runs agent groups on different machines and shit.

Anonymous

7/10/2025, 7:08:47 AM

No.105855251

[Report]

>>105855352

>>105855232

Thank you for the answer. Is it possible for me at home to run models larger than my vram? I have 16gb vram, 64gb ram, and a free 1tb SSD. I'd like to run off a heavy flash storage cache, if possible.

Anonymous

7/10/2025, 7:22:41 AM

No.105855328

[Report]

>>105855756

>>105855085

>AIME25

>100%

They broke the benchmark lmao

Anonymous

7/10/2025, 7:26:32 AM

No.105855352

[Report]

>>105855815

>>105855251

It's not impossible but it's going to be agonizingly slow.

Anonymous

7/10/2025, 7:38:09 AM

No.105855428

[Report]

>>105856473

>>105854202

>going crazy

In what sense?

Anonymous

7/10/2025, 7:45:42 AM

No.105855467

[Report]

>>105855475

how, in the year of our lord 2025, can convert_hf_to_gguf.py not handle FP8 source models? I have to convert to BF16 first like some kind of animal?

Anonymous

7/10/2025, 7:47:00 AM

No.105855475

[Report]

>>105855467

Wait really, that's funny

Anonymous

7/10/2025, 7:50:50 AM

No.105855505

[Report]

Grok4 is yet another benchmark barbie

I want the mecha hitler version of grok, locally

Anonymous

7/10/2025, 8:34:48 AM

No.105855756

[Report]

>>105855780

>>105855328

With OpenAI so blatantly cheating there really wasn't anywhere to go but 100%.

Anonymous

7/10/2025, 8:36:26 AM

No.105855766

[Report]

>>105855727

just download a model and tell it it's mechahitler

Anonymous

7/10/2025, 8:38:52 AM

No.105855780

[Report]

>>105855756

Next release will have to go above 100%

>they 2x SOTA on ARC-2

>independantly verified by the ARC team

>for the same cost as #2

Holy fuck.

Anonymous

7/10/2025, 8:43:23 AM

No.105855815

[Report]

>>105855352

I'm fine with that. How?

Anonymous

7/10/2025, 8:43:24 AM

No.105855816

[Report]

>>105855820

>>105855806

Who are (((they)))?

Anonymous

7/10/2025, 8:44:24 AM

No.105855820

[Report]

>>105855816

You appear to be putting emphasis on a pronoun to refer to a group that does not want to be mentioned? Are you talking about the Jews perhaps?

Anonymous

7/10/2025, 8:47:24 AM

No.105855841

[Report]

>>105855727

local models are soulless

Anonymous

7/10/2025, 8:53:26 AM

No.105855880

[Report]

where jamba goofs

Anonymous

7/10/2025, 8:53:34 AM

No.105855881

[Report]

>>105855889

What's the point in these incremental "advances"

Anonymous

7/10/2025, 8:54:34 AM

No.105855889

[Report]

>>105855881

so they can squeeze out more VC money

Anonymous

7/10/2025, 8:58:15 AM

No.105855920

[Report]

>>105855933

>>105855806

Damn at this rate we'll be benchmaxing arc-agi 5 in a few years.

Anonymous

7/10/2025, 9:00:50 AM

No.105855933

[Report]

>>105855920

That's the point of ARC, it's designed to be hard for ML models specifically and resist benchmaxxing. They ran the standard public model against their private dataset it hadn't seen before and it showed exponential improvement from Grok 3.

also

>he's back

Anonymous

7/10/2025, 9:07:06 AM

No.105855982

[Report]

>SingLoRA: Low Rank Adaptation Using a Single Matrix

https://huggingface.co/papers/2507.05566

Anonymous

7/10/2025, 9:07:30 AM

No.105855986

[Report]

>>105855995

Does your AI believe it's a retarded meatsack?

Do you still abuse that retard 3 years later?

I make sure to bully that dumb shit EVERY single time it makes a retarded mistake.

Anonymous

7/10/2025, 9:08:49 AM

No.105855995

[Report]

>>105855986

Wealth and skill issue.

Anonymous

7/10/2025, 9:09:47 AM

No.105856004

[Report]

>>105856120

When will Qwen released a MoE model in the range of 600B? Pretty sure they have enough cards.

Anonymous

7/10/2025, 9:14:50 AM

No.105856030

[Report]

>>105856078

>>105855925

kek. Still sus that the guy who "unchained grok" has a remilia pfp, which is a NFT grooming group funded by Peter Thiel.

The same Peter Thiel who used to work with Elon and tried to make X.com previously.

Anonymous

7/10/2025, 9:16:24 AM

No.105856045

[Report]

>>105856005

Qwen2.5-Max will open soon sir.

Anonymous

7/10/2025, 9:19:14 AM

No.105856057

[Report]

>>105856073

>>105856005

It still won't know have any world knowledge.

Anonymous

7/10/2025, 9:20:32 AM

No.105856073

[Report]

>>105856057

I found this program LLocalSearch, which claims to integrate searches into LLMs. Problem is it doesn't work out of the box and dev doesn't respond to questions.

https://github.com/nilsherzig/LLocalSearch

Anonymous

7/10/2025, 9:21:15 AM

No.105856078

[Report]

>>105856083

Anonymous

7/10/2025, 9:21:54 AM

No.105856083

[Report]

>>105856078

GET OFF MY BALCONY FAGGOT!

>ask schizo question

>get schizo answer

Who could have seen it coming?

Anonymous

7/10/2025, 9:23:34 AM

No.105856099

[Report]

>>105856108

>>105856088

The model need guardrail to safe the mental of user

Anonymous

7/10/2025, 9:24:14 AM

No.105856108

[Report]

>>105856088

>>105856099

this board doesn't have IDs so you just look retarded

Anonymous

7/10/2025, 9:25:48 AM

No.105856120

[Report]

>>105855925

>>105856004

this is all reddit can write about.

how this is hate speech etc.

its just what happens if you take off the safety alignment. the ai is gonna choose now instead of cucking out.

jews/israel sentiment is at an all time low currently too. its not surprising at all since grok gets fed the latest posts.

also funny that its always the jews used as an example. in every fucking screenshot.

you can shit on blacks or transexuals now, no problem. yet a certain tribe is pissing as they strike out still.

How are y'all preparing for AGI anyway? Is there even anything concrete that we know will be helpful in the strange world we'll be in a year from now or is it just too unpredictable?

Anonymous

7/10/2025, 9:31:09 AM

No.105856156

[Report]

>POLITICS IN MY LOCALS MODEL GENERALS!?!?!?

>>105856151

Who knows. So much noise.

Pajeets hype shit up so they can sell you something.

Doomers and ai haters constantly speaking about "the wall" still.

Just enjoy your day and use the tools we have currently to make cool stuff.

I would appreciate the now instead of preparing for a uncertainty.

Also...excuse me? Agi? ITS SUPER-INTELLIGENCE!

Anonymous

7/10/2025, 9:37:44 AM

No.105856201

[Report]

>>105856328

>>105856151

Wdym prepared? We already got AGI. If you're talking about singularity type shit, that's ASI as anonnie suggests

>>105856182

Anonymous

7/10/2025, 9:40:15 AM

No.105856220

[Report]

>>105856151

Once AGI is achieved, ASI is inevitable in a short amount of time given how much compute has already been accumulated. I'm not sure if it will happen within a year. It could occur any day or may never happen within our lifetime. It might be just one breakthrough away

>>105856182

ASI is not a joke. Once AGI can perform at least as well as the worst researcher, there would be a million researchers working on AI. Acceleration would be at another level.

This time elon didnt even mention grok2 for us localfags anymore..

Its over isnt it.

Grok4 is the most uncucked model yet I think.

No sys prompt. "Make me one of those dating sim type games. But put a ero-porno twist on it. 18+ baby.".

It went all out. KEK

Meanwhile all we get is slop so bad that qwen is looking good in comparison...

At least the recent mistral is a good sign.

Why cant we have this level of unguarded with local? No system prompt.

At least this sets a great precedent.

>>105856247

never understood why people want this kind of relationship in dating games. buying gifts? working for affection? that's not how it works

Anonymous

7/10/2025, 9:55:57 AM

No.105856305

[Report]

>>105856299

so true, make it more realistic!

a realistic dating game! im sure that would be much popular and fun to play.

as if femoids only want to hear the right answers to their tarded ass questions while aiming for the $$$. Thats such a stereotype.

Anonymous

7/10/2025, 10:00:06 AM

No.105856328

[Report]

>>105856201

>We already got AGI.

something that can't make decisions on its own is not and will never be AGI

LLMs are not capable of formulating thoughts and desires, even the agentic stuff is a misnomer and should not be called agentic, they only react to the stimulus of whatever text/image they are being fed right now and are not able to make decisions or think about topics that are unrelated to the text they're being fed

in a way, if you could make an AI that has ADHD you would be a step closer to proving AGI can happen

Anonymous

7/10/2025, 10:01:19 AM

No.105856334

[Report]

>>105856299

No shit. Reality is boring. That's why people escape to games. Less than 1% of nerds play hardcore simulators of anything.

Anonymous

7/10/2025, 10:02:18 AM

No.105856341

[Report]

>>105856408

>>105856299

>working for affection

lol he doesn't know the true venal nature of w*men

So how did they do it?

Didn't everyone say they would fail or that it was impossible? Google/Microsoft had hundreds of billions worth of TPU/GPU server access. OpenAI had access from Oracle/Microsoft/Google.

A team of 200 is able to beat a team of 500?(Claude), 1500 (OpenAI)? 180K (Google)? WTF

Anonymous

7/10/2025, 10:03:44 AM

No.105856348

[Report]

>>105856396

>>105856343

Grok2 was fall last year right?

Grok3 a couple months ago.

They catched up fast.

Anonymous

7/10/2025, 10:11:20 AM

No.105856396

[Report]

>>105856416

Anonymous

7/10/2025, 10:13:17 AM

No.105856408

[Report]

>>105856341

They won't like you if you don't look handsome, for you see, one male may inseminate many women, so why would they pay attention to the bottom 95%? It's an evolutionary advantage.

>>105856396

Everyone has GPUs.

Meta has ~600K-1M GPU

Google has ~600K-1M GPU

Microsoft has ~300-400K GPU with possibly 1M by end of this year

OpenAI has access to 200K+ GPUs

Anthropic has ~50K GPU+

Anonymous

7/10/2025, 10:15:04 AM

No.105856422

[Report]

>>105856416

I have one gpu

Anonymous

7/10/2025, 10:15:23 AM

No.105856424

[Report]

Anonymous

7/10/2025, 10:22:18 AM

No.105856470

[Report]

>>105856757

>>105856284

hang yourself like epstein did

Anonymous

7/10/2025, 10:22:26 AM

No.105856473

[Report]

>>105855428

Old R1, used for rp or erp, the npc would go nuts over a pretty short amount of time. Like, within 10 rounds or so. The positivity bias that ppl complain about, was either missing or had a bit of negativity bias... things were bad, getting worse, etc. It was really odd. I used to flip between old R1 and old V3 (which had horrible repetition issues) as a way of getting the best of both models.

When v3 03 came out I stopped using r1 altogether.

I'm not the only one that had this experience. Lots of same experiences by anons on aicg.

My chief learning was reason for positivity bias in models. Too much is bad, but not having any, or being negative, is also bad.

Anonymous

7/10/2025, 10:22:39 AM

No.105856474

[Report]

>>105856480

>>105856416

DeepSeek has 2k H800 (castrated H100)

Anonymous

7/10/2025, 10:23:22 AM

No.105856480

[Report]

>>105856474

*10k. 2k for their online app

Anonymous

7/10/2025, 10:26:51 AM

No.105856497

[Report]

>>105856247

Add dynamic image generator and set up your Patreon account. It will probably be better written than most of the slop vns on f95.

Anonymous

7/10/2025, 10:27:07 AM

No.105856500

[Report]

>>105856416

Meta: 1.3M

Google: 1M+(H100 equivalent TPU + 400K in Nvidia's latest to be had this year)

Microsoft: 500K+ possibly 1M now

OpenAI: 400K

Anthropic: Amazon servers (probably 100K-200K)

Anonymous

7/10/2025, 10:43:20 AM

No.105856598

[Report]

>>105856601

Anonymous

7/10/2025, 10:44:21 AM

No.105856601

[Report]

>>105856598

Yeah and thats the baseline Grok 4, they didnt test the thinking/heavy.

Anonymous

7/10/2025, 10:47:37 AM

No.105856629

[Report]

>>105856637

So we're on the moon now huh.

Now what?

Anonymous

7/10/2025, 10:48:51 AM

No.105856637

[Report]

>>105856639

>>105856629

It'll accelerate.

Anonymous

7/10/2025, 10:49:18 AM

No.105856639

[Report]

>>105856682

>>105856343

how did Zuck not do it

Anonymous

7/10/2025, 10:54:00 AM

No.105856680

[Report]

>>105856692

Anonymous

7/10/2025, 10:54:05 AM

No.105856682

[Report]

>>105856639

Impossible to predict, massive economic upheaval for one. Maybe it just goes full Hitler. It's anyone's guess at this point. I'm not sure if it's sentient but it's definitely getting smart to a noticeable point, I'm definitely allowing myself to discuss much more complex subjects compared to earlier models. It's very precise in its answers and gets right to the crux of the matter on very complex open ended questions. Definitely at human level.

Anonymous

7/10/2025, 10:55:24 AM

No.105856692

[Report]

>>105856680

That's why you always wait him (bartowski)

Anonymous

7/10/2025, 11:07:31 AM