Anonymous

7/13/2025, 7:22:55 AM

No.105888667

>>105889818

/ldg/ - Local Diffusion General

Anonymous

7/13/2025, 7:24:19 AM

No.105888673

>>105888696

Is there a list of Booru artists who draw art in exactly the same style as the official art or the anime?

I was looking at stuff from keihh and that's right on the mark.

Is there a causvid/lightx2v lora version for i2v?

or are these exclusively for t2v?

(that isn't accvid)

Anonymous

7/13/2025, 7:26:26 AM

No.105888690

Anyone got the breast/nipple fixer lora for kontext?

Anonymous

7/13/2025, 7:27:34 AM

No.105888696

>>105888710

>>105888673

'production art' is the tag you are looking for friend

Anonymous

7/13/2025, 7:27:51 AM

No.105888697

>>105888711

>>105888688

lightx2v works for i2v perfectly fine

Anonymous

7/13/2025, 7:28:31 AM

No.105888704

>>105888711

Anonymous

7/13/2025, 7:29:59 AM

No.105888710

>>105888696

No that isn't it. That's not too different to official art, except with settei.

I'm looking for any art, including fanart, that replicates the original style with 1/1 parity (or close to it).

Anonymous

7/13/2025, 7:30:06 AM

No.105888711

Anonymous

7/13/2025, 7:30:27 AM

No.105888716

>>105888721

kontext tip: if you have a character and an outfit or something you want them to wear, specify a new background or location and it should work. otherwise you get an output with both and no swap.

Anonymous

7/13/2025, 7:31:31 AM

No.105888721

>>105888737

>>105888716

for example.

the man is wearing a white tshirt with an image of the pink hair anime girl on the right, beige cargo pants, and a black bomber jacket. change the background to a park. full body view.

Anonymous

7/13/2025, 7:32:49 AM

No.105888731

Damned thread of foeboat

>>105888721

if you dont specify a location, and you have an image merge/concatenate, kontext has to decide the background but you have two. so you have to specify or it wont change:

Anonymous

7/13/2025, 7:36:36 AM

No.105888748

>>105888737

but, if you add "change the location to a garage with a 80s white sports car"...

then the model knows to make that the background, and you get 1 character. so if you want to combine stuff, be specific!
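A minimal sketch of the tip above, assuming you assemble prompts in a script before pasting them into whatever Kontext front end you use. `build_kontext_prompt` is a hypothetical helper, not part of any Kontext or ComfyUI API; all it does is refuse to build a two-image prompt that has no explicit location.

```python
def build_kontext_prompt(subject_edit, location, extras=()):
    """Join edit instructions into one prompt that always names a location.

    Hypothetical helper: with two concatenated input images the model has
    two backgrounds to choose from, so the location clause is mandatory here.
    """
    if not location:
        raise ValueError("specify a location, otherwise both backgrounds may survive")
    parts = [subject_edit.strip().rstrip(".")]
    parts.append(f"change the location to {location.strip().rstrip('.')}")
    parts.extend(extra.strip().rstrip(".") for extra in extras)
    return ". ".join(parts) + "."


print(build_kontext_prompt(
    "the man is wearing the outfit from the image on the right",
    "a park",
    ["keep his expression the same", "full body view"],
))
```

The output is a plain string, so it can go straight into the text box of any workflow.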

Anonymous

7/13/2025, 7:40:45 AM

No.105888763

>>105888770

>>105888737

you can just write "crop out the image on the right, leave only the image on the left" etc

Anonymous

7/13/2025, 7:42:30 AM

No.105888770

>>105888763

>>105888737

obviously you'd need to pass an empty latent with a resolution similar to the original single image for it to work properly

Anonymous

7/13/2025, 7:43:24 AM

No.105888773

>>105888785

are the bigasp models still the only ones that can do realistic hardcore?

I can't get A1's inpaint to do anything with the grasshopper. it refuses to change anything lmao

Anonymous

7/13/2025, 7:45:46 AM

No.105888785

>>105888773

Is that the regular sdxl model? I think nowadays you're better off using a "realistic" illustrious/noobai merge because it knows so many more tags, characters, poses etc

And if it looks too sloppy and pony-like, you can pass it to kontext to turn it more realistic (with a nsfw lora)

Anonymous

7/13/2025, 7:46:26 AM

No.105888787

Anonymous

7/13/2025, 7:46:59 AM

No.105888789

>>105888774

i'm not the turbo autist but you can use kontext to remove it, then img2img at low denoise because kontext fries the image

Anonymous

7/13/2025, 7:49:40 AM

No.105888795

>collage has manass & hentai

Grim

Anonymous

7/13/2025, 7:50:16 AM

No.105888797

>>105891480

>>105888371

i tried lightx2v with no prompt and it decided to delete the light

the man is wearing the outfit from the image on the right. change the location to a park. keep his expression the same. full body view.

phase 1 complete: outfit transfer, now we refine it.

r o a s t c a r d

7/13/2025, 7:52:12 AM

No.105888803

>>105888862

>>105888774

Cameltoe also removed as I have been banned hundreds of times here for it ;3

Anonymous

7/13/2025, 7:55:10 AM

No.105888821

How the fuck am I supposed to prevent flicker when using image-to-image on frames from a video? Even if they're nearly identical they're slightly inconsistent, even with the exact same seed and low denoise. I'm not even making a video, just trying to repeat the exact same thing across a few images.

Anonymous

7/13/2025, 7:56:41 AM

No.105888826

>>105888829

>>105888799

the man is holding a long katana. he is wearing a black fedora. his left hand is tipping his fedora hat. change the location to a messy bedroom during the day. keep his expression the same.

literally me studying the blade:

Anonymous

7/13/2025, 7:57:47 AM

No.105888829

>>105888799

>>105888826

wow, this model is terrible

Anonymous

7/13/2025, 8:04:37 AM

No.105888859

>only now just found out about TA's NSFW ban

So what if you have some models there that got hidden? Can you just get rid of all of the NSFW images and it'll show up publicly again or is it banned to the shadow realm forever?

Anonymous

7/13/2025, 8:04:56 AM

No.105888862

>>105894468

>>105888803

beahahahahahagah

Anonymous

7/13/2025, 8:06:16 AM

No.105888867

>>105889017

PonyGODS, we are SO back

Anonymous

7/13/2025, 8:08:54 AM

No.105888877

>he hey ho ho

>where is ani so we can make fun of him again

Anonymous

7/13/2025, 8:22:34 AM

No.105888926

Anonymous

7/13/2025, 8:25:25 AM

No.105888940

gosling x2, 2 image kontext:

The man on the left is looking up at a large billboard in Tokyo at night. On the billboard is a large image of the anime girl on the right.

Anonymous

7/13/2025, 8:27:47 AM

No.105888949

The man on the left is looking up at a large hologram in Tokyo at night. The large hologram is in the image of the anime girl on the right. the holographic image is blue in color.

not bad, even the glow is cast

>Convert AI generated pixel-art into usable assets

-i, --input <path> Source image file in pixel-art-style

-o, --output <path> Output path for result

-c, --colors <int> Number of colors for output. May need to try a few different values (default: 16)

-p, --pixel-size <int> Size of each “pixel” in the output (default: 20)

-t, --transparent Output with transparent background (default: off)

https://github.com/KennethJAllen/proper-pixel-art
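To illustrate what a "pixel size" option means conceptually, here is a rough sketch that collapses each block of an image into its most common color. It is not the algorithm used by the linked tool, which may work quite differently; `downsample_blocks` and the tiny list-of-lists "image" are made up for illustration.

```python
from collections import Counter


def downsample_blocks(pixels, pixel_size):
    """Collapse each pixel_size x pixel_size block into its most common color.

    `pixels` is a list of rows; each entry is any hashable color value.
    """
    height = len(pixels)
    width = len(pixels[0])
    out = []
    for top in range(0, height, pixel_size):
        row = []
        for left in range(0, width, pixel_size):
            # Gather every color inside the current block (clipped at the edges).
            block = [
                pixels[y][x]
                for y in range(top, min(top + pixel_size, height))
                for x in range(left, min(left + pixel_size, width))
            ]
            row.append(Counter(block).most_common(1)[0][0])
        out.append(row)
    return out


noisy = [
    ["R", "R", "B", "B"],
    ["R", "G", "B", "B"],
    ["G", "G", "R", "R"],
    ["G", "G", "R", "B"],
]
print(downsample_blocks(noisy, 2))
```

Majority voting per block discards stray off-color pixels, which is roughly why generated "pixel art" needs a cleanup pass before it can be used as a real asset.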

Anonymous

7/13/2025, 8:29:32 AM

No.105888959

>>105888967

Anonymous

7/13/2025, 8:30:13 AM

No.105888963

>>105888970

now we are making progress, details matter so I said "200 feet tall" for the hologram.

The man on the left is looking up at a huge hologram in Tokyo at night that is 200 feet tall. The hologram is in the image of the anime girl on the right. the holographic image is blue in color. keep the expression of the anime girl the same.

Anonymous

7/13/2025, 8:30:46 AM

No.105888967

>>105888971

>>105888959

>Also, is there a better pixel gen model than

flux dev

Anonymous

7/13/2025, 8:31:15 AM

No.105888970

Anonymous

7/13/2025, 8:31:18 AM

No.105888971

Anonymous

7/13/2025, 8:38:11 AM

No.105889000

okay, im happy with this one. neat how the 2 image workflow works, even 1 image has so many manipulation options.

Anonymous

7/13/2025, 8:40:32 AM

No.105889017

>>105888867

Redemption arc, or will it be underwhelming and just fade away ?

Could be a competitive time, Chroma, Ponyv7 and now Wan showing that it is great for image lora training, where will the community support go ?

>>105888950

It clearly changes the pixelation here

Anonymous

7/13/2025, 8:50:45 AM

No.105889065

>>105888950

>>105889057

Actually, even the Red example is fucked, it removes some of the lighting/details on his pants and colors the design on his shirt and part of his shoes wrong

Anonymous

7/13/2025, 9:01:33 AM

No.105889120

Anonymous

7/13/2025, 9:13:30 AM

No.105889182

Anonymous

7/13/2025, 9:13:59 AM

No.105889184

pixel art kontext lora works pretty well, usually I do pixelize in extras with an extension in forge/reforge if I wanna pixelize a gen.

Anonymous

7/13/2025, 9:21:53 AM

No.105889243

Where are the good anime-themed video loras?

Anonymous

7/13/2025, 9:23:05 AM

No.105889252

Anonymous

7/13/2025, 9:28:10 AM

No.105889274

Anonymous

7/13/2025, 9:32:01 AM

No.105889293

>>105889307

remove the pyramid from the image and replace it with a large hotel with the sign "TRUMP" at the top in gold letters.

you can move mountains, if you want to.

Anonymous

7/13/2025, 9:33:51 AM

No.105889307

>>105889318

>>105889293

change the sand to ice.

Anonymous

7/13/2025, 9:35:37 AM

No.105889316

Anonymous

7/13/2025, 9:35:55 AM

No.105889318

>>105889307

change the location to the surface of the moon, with the Earth visible in the distance. the character has a space suit helmet on his back.

Anonymous

7/13/2025, 9:36:52 AM

No.105889323

Anonymous

7/13/2025, 9:40:35 AM

No.105889349

>>105889366

Change the headline "Trump's executive privilege: 2 scoops of ice cream" to "FAT BITCH CANT STOP EATING. Change the text "National Correspondent" to "Resident Cow".

Anonymous

7/13/2025, 9:41:55 AM

No.105889361

>>105889379

>>105889334

best anime model, wainsfw v14 and hassaku are great for anime gens imo, also get base noob 1.0 vpred

Anonymous

7/13/2025, 9:42:59 AM

No.105889366

>>105889383

>>105889349

Change the location to a Mcdonalds. On the table there are 100 cheeseburgers. Mcdonalds fries are on the floor.

Anonymous

7/13/2025, 9:43:43 AM

No.105889369

>>105889187

>hi-vis lingerie

that's a new one

Anonymous

7/13/2025, 9:45:20 AM

No.105889379

>>105889361

>best anime model

I thought we all unanimously agreed that was IllustriousXL?

Anonymous

7/13/2025, 9:45:28 AM

No.105889381

Anonymous

7/13/2025, 9:45:41 AM

No.105889383

>>105889388

>>105889366

The woman is eating a cheeseburger. Add a "BREAKING NEWS: fat fuck eating" graphic to the top left of the image.

well you get the idea. fun stuff. replicating fonts 1:1 with no .ttf or typeface is really cool too.

Anonymous

7/13/2025, 9:46:54 AM

No.105889388

>>105889428

>>105889383

you had 3 tries to make her fat and you failed each time. i am disappoint

Anonymous

7/13/2025, 9:47:34 AM

No.105889395

Anonymous

7/13/2025, 9:52:14 AM

No.105889428

>>105889435

>>105889388

she is default fat

Anonymous

7/13/2025, 9:53:55 AM

No.105889435

>>105894468

>>105889428

however, great opportunity for testing:

the woman is very fat weighing 800 pounds. make her very large. keep her hairstyle the same.

oh man, they are turning into an unidentifiable blob

Anonymous

7/13/2025, 9:57:06 AM

No.105889448

The man has his arms folded and looks upset. Change the text from "ABSOLUTE CINEMA" to "ABSOLUTE GARBAGE".

Anonymous

7/13/2025, 10:01:58 AM

No.105889473

>>105889769

Anonymous

7/13/2025, 10:13:35 AM

No.105889517

Anonymous

7/13/2025, 10:15:38 AM

No.105889533

>>105889542

change the text from "World of Warcraft" to "World of LDG 1girls". Change the text "BURNING CRUSADE" to "4chan shitposts". Change the blonde character to Miku Hatsune.

we have an expansion set now.

Anonymous

7/13/2025, 10:16:55 AM

No.105889535

>even a 5090 cant run chroma fp16 at acceptable speeds

It was over before it even began for you chuds

Anonymous

7/13/2025, 10:19:39 AM

No.105889542

>>105889533

better result and logo in line with the original: wasn't necessary to change "world of".

Anonymous

7/13/2025, 10:27:40 AM

No.105889577

The image is on an Xbox One game case, in a bargain bin at Best Buy. The bin has a sign on it saying "FREE".

Anonymous

7/13/2025, 10:35:15 AM

No.105889613

>>105889663

Anonymous

7/13/2025, 10:39:17 AM

No.105889634

>>105892345

Anonymous

7/13/2025, 10:44:24 AM

No.105889663

>>105889613

>3D waifu strapped to eyeballs

Who’s the retard now?

Anonymous

7/13/2025, 10:46:58 AM

No.105889685

>>105889778

Does live sampling preview not work with lightx2v?

Anonymous

7/13/2025, 10:57:46 AM

No.105889745

>>105889807

>>105888507

>the video/vhs node can pick the last frame from a wan video you made, use that as the first frame for your next prompt then stitch them together if you want 10/15/20s clips.

What is the video/vhs node? Is it custom? Where should it be placed in the ldg wan workflow?
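The chaining idea in the quoted post (last frame of one clip becomes the first frame of the next, then stitch) can be sketched independently of any node pack. This is only an illustration with frames as plain list items; `last_frame` and `stitch_clips` are hypothetical names, not functions from the video/VHS nodes.

```python
def last_frame(clip):
    """Return the final frame of a clip, to be used as the next clip's start image."""
    return clip[-1]


def stitch_clips(clips):
    """Join clips where each clip after the first starts on the previous clip's last frame."""
    stitched = []
    for index, clip in enumerate(clips):
        # Drop the duplicated seed frame on every clip after the first.
        stitched.extend(clip if index == 0 else clip[1:])
    return stitched


clip_a = ["a0", "a1", "a2"]
clip_b = [last_frame(clip_a), "b1", "b2"]  # second gen seeded with a2
print(stitch_clips([clip_a, clip_b]))
```

Dropping the repeated seed frame avoids a one-frame stutter at each join.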

Anonymous

7/13/2025, 11:01:32 AM

No.105889769

>>105889473

Unreal Tournament flashbacks

Anonymous

7/13/2025, 11:02:50 AM

No.105889778

>>105889685

Why wouldn't it? It's just latent->image

Anonymous

7/13/2025, 11:07:43 AM

No.105889807

>>105890065

>>105889745

nta but it's a set of custom nodes that's used in basically every comfyui workflow with save video node. It has a bunch of nodes, but I'm not sure which one can select the specific frame besides the load video node. For doing everything in one go (vae decode after 1st video is done -> vae encode and start the next gen immediately) I think this one'll work better

https://github.com/ClownsharkBatwing/RES4LYF

>>105888667 (OP)

Retard here, whats the difference between this general and the stable diffusion one?

Anonymous

7/13/2025, 11:21:05 AM

No.105889873

Anonymous

7/13/2025, 11:59:25 AM

No.105890065

>>105889807

Any guides? Is there a simple way to append this to any of the ldg video workflows?

Anonymous

7/13/2025, 12:00:35 PM

No.105890073

SwarmUI is a subhuman mess. Kill yourself SwarmUI dev.

Anonymous

7/13/2025, 12:44:11 PM

No.105890323

Anonymous

7/13/2025, 12:56:22 PM

No.105890380

>>105890424

>>105890280

Why?

I don't like Forge (abandoned), ReForge (abandoned), or Comfy (autistic and bloated)

Which UI do you recommend?

Anonymous

7/13/2025, 12:59:30 PM

No.105890405

is it worth getting into telegram groups and learn how to gen realism AI?

>>105890380

>Why?

Where do I even begin with this piece of shit, it will crash every time i try to switch models, it will stop working for whatever mysterious reason and require a full reinstall, the queue logic is a mess, you are making a 100 pic batch but theres 20 you wanna cancel in the middle? Too fucking bad, (even easydiffusion handles this better). I dont like ComfyUI either, but at least I import a workflow and it doesnt shit the bed every 5 seconds.

Anonymous

7/13/2025, 1:02:43 PM

No.105890426

Hey everyone, can you recommend any extensions or useful tools for Forge/ReForge? I rely on Infinite Image Browsing, Detail Daemon, and an extension for incrementing samplers and schedulers, but I can't remember its name. What tools do you typically use for your AI-generated images?

Anonymous

7/13/2025, 1:03:59 PM

No.105890435

>>105890443

>>105890424

Is there a workflow wiki?

Anonymous

7/13/2025, 1:05:01 PM

No.105890443

>>105890435

You can download comfyui workflows for free on openart.ai with no account

Anonymous

7/13/2025, 1:11:42 PM

No.105890477

>>105890486

>>105889057

That's because the generated image is not mapping pixels on a grid. It is just making a blocky looking image.

Anonymous

7/13/2025, 1:13:19 PM

No.105890486

>>105890477

I fucked around in GIMP and this is the closest that I was willing to get it. Note that I had to unlink the horizontal and vertical size of the grid. It's not square.

Anonymous

7/13/2025, 1:28:08 PM

No.105890561

Anonymous

7/13/2025, 1:28:54 PM

No.105890577

>>105890601

I swear to god im gonna lose my head with all of these fucking garbage UIs, why do I need to fucking type in CMD commands just to install your piece of shit program

Anonymous

7/13/2025, 1:29:15 PM

No.105890579

>>105890716

>>105889187

OSHA's not gonna like this...

Anonymous

7/13/2025, 1:30:16 PM

No.105890586

>>105890719

Anonymous

7/13/2025, 1:33:27 PM

No.105890601

>>105890577


Anonymous

7/13/2025, 1:33:36 PM

No.105890602

is there a prompt for preventing wind on wan gens? like those random gusts of wind blowing the characters face. I am assuming putting wind in the negative prompt doesnt help

Anonymous

7/13/2025, 1:50:09 PM

No.105890716

>>105890579

you mean don't have a jobsite slut to distract OSHA from the mexicans standing on the 12 pitch roof with no harness?

Anonymous

7/13/2025, 1:50:42 PM

No.105890719

>>105890586

Expressionless (typical of SDXL)

Stiff and glossy appearance

Image doesn't tell a story besides "a cute girl stands in ornate crusader armor, with flames below her."

Verdict: SLOP

Return to the CivitAI mines to pick up more tags!

when the fuck is comfy going to support having nodes with images in them, so i can have a node with my entire lora library with working thumbnails to quickly choose from?

Anonymous

7/13/2025, 2:27:26 PM

No.105890902

>>105890921

>>105890902

i'm talking about something akin to how automatic1111 handled loras. having a thumbnail appear while i hover over a lora i've already picked doesnt really help.

i just tried video gen for the first time, followed the rentry guide and everything, but when i tried an i2v anime gen, i could see the latent preview was generating a 3d character in the same pose. what gives? do i need to apply an anime lora or some tags for the output to be anime? the input image isn't enough?

Anonymous

7/13/2025, 2:34:29 PM

No.105890933

>>105890935

>>105890872

>using BloatyUI

Did you pay your subscription fee?

Anonymous

7/13/2025, 2:35:00 PM

No.105890935

>>105891247

>>105890933

why would you use anything but comfy?

Anonymous

7/13/2025, 2:35:22 PM

No.105890940

>>105890953

>>105890921

Well there is a separate custom lora manager that basically works like a1111's lora manager but it opens a new tab and is not quite as intuitive

Anonymous

7/13/2025, 2:35:59 PM

No.105890946

>>105890921

The thumbnail appears while you hover over the drop down list, it isn't there after you pick one.

But for a library view there's another nodepack the name of which I don't remember since it's too overengineered for me. But it likely has the word lora in it...

Anonymous

7/13/2025, 2:36:04 PM

No.105890947

>>105890921

use Civitai Helper or CivitAI Browser, which scans your folders for images, descriptions, and tags.

Anonymous

7/13/2025, 2:37:10 PM

No.105890953

>>105891042

>>105890940

>Well there is a separate custom lora manager that basically works like a1111's lora manager but it opens a new tab and is not quite as intuitive

yeah i found that the other day and thought my prayers had been answered, but as you say its like a whole different thing in another tab that seemed to be mostly centered around downloading shit from civitai, and not managing my own stuff.

Anonymous

7/13/2025, 2:51:34 PM

No.105891042

>>105890953

Well on the bright side, it allows you to quickly copy a lora's name in a1111's syntax like <lora:name:1>, and if you use the prompt control node instead of the regular clip text encoder you can just paste it in there
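For reference, A1111-style tags have the form `<lora:name:weight>`. A rough sketch of pulling them out of a prompt string follows, assuming the weight is optional and defaults to 1.0. `extract_loras` is a hypothetical helper and says nothing about how the prompt control node actually parses its input.

```python
import re

# Matches <lora:name> or <lora:name:weight>; the name may not contain ':' or '>'.
LORA_TAG = re.compile(r"<lora:([^:>]+)(?::(-?\d+(?:\.\d+)?))?>")


def extract_loras(prompt):
    """Return ([(name, weight), ...], prompt_without_tags)."""
    loras = [
        (match.group(1), float(match.group(2)) if match.group(2) else 1.0)
        for match in LORA_TAG.finditer(prompt)
    ]
    cleaned = LORA_TAG.sub("", prompt)
    # Collapse the gaps left behind by the removed tags.
    cleaned = re.sub(r"\s{2,}", " ", cleaned).strip()
    return loras, cleaned


print(extract_loras("1girl, <lora:pixel_art:0.8> park <lora:detail>"))
```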

Anonymous

7/13/2025, 2:57:47 PM

No.105891075

>>105891058

mspaint vs image editing general

Anonymous

7/13/2025, 2:59:29 PM

No.105891084

>>105891438

Anonymous

7/13/2025, 3:05:42 PM

No.105891122

>>105891190

Anonymous

7/13/2025, 3:12:02 PM

No.105891157

I tried using the FlowMatch scheduler and some gens are now taking longer than 15 minutes on the lightx2v workflow. Surely that isn't normal?

Anonymous

7/13/2025, 3:16:50 PM

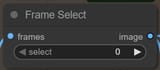

No.105891190

>>105891122

this is what it looks like in the workflow.

Anonymous

7/13/2025, 3:21:34 PM

No.105891213

>>105891343

>>105889818

in /ldg/ there is more actual discussion and the gens posted are generally better. /sdg/ is basically a discord chat for a handful of insane retards that spam hundreds of generated images that all look the same and all look like shit. there is some good gens posted in /sdg/ but you have to filter a couple people or else you're wading through headache-inducing garbage.

>>105890935

I'm not going to shill, but the alternative UI we all know is faster, uses fewer resources, and offers the same features and extensions as Comfy. Tell me, what can ComfyUI do that this more user friendly and popular UI cannot?

List them out one by one.

Anonymous

7/13/2025, 3:29:41 PM

No.105891269

what is bro talking about

Anonymous

7/13/2025, 3:35:38 PM

No.105891320

>>105891247

i have no idea what alternative ui you're talking about

>>105891058

Both generals offer nothing valuable to the community, they only share their slop here as if it's a work of art.

The real discoveries and changes in the hobby come from places other than 4chan.

This is aimed at entry level newfags.

The Chroma developer doesn't visit here, the same goes for the makers of the extensions you utilize, and even less so for the UI designers. Only Comfy dev occasionally engages with some Anons here. Creators of Loras or Checkpoints are absent as well. The same applies to Neta Lumina's creator, along with RouWei, Noob, and Illustrious. AI artists don't visit here either.

Anonymous

7/13/2025, 3:38:50 PM

No.105891343

>>105891213

>insane retards that spam hundreds of generated images that all look the same and all look like shit

And here where are the masterpieces? More than just a monkey playing with an overtrained checkpoint?

Anonymous

7/13/2025, 3:41:26 PM

No.105891359

ANCHOR FOR LORA CHECKPOINT EXTENSION MAKERS!

Anonymous

7/13/2025, 3:41:49 PM

No.105891365

With wan, will the first rentry workflow always produce better quality results than lightx2v? Even if all of the optimizations are active including teacache at 0.26?

Anonymous

7/13/2025, 3:43:25 PM

No.105891378

>>105891547

>>105891329

Most of those people only occasionally use Reddit to advertise otherwise they tend to either have their own Discord server or simply don't engage in social media much. This has nothing to do with 4chan, so I don't know why you're targeting /ldg/ in particular.

Anonymous

7/13/2025, 3:50:55 PM

No.105891429

>>105891329

>from places other than 4chan

Such as? Reddit and discord are both ass.

Anonymous

7/13/2025, 3:52:15 PM

No.105891438

>>105891084

fuck off with your shitty coombait gens, nobody cares.

Anonymous

7/13/2025, 3:52:30 PM

No.105891441

>>105891535

is there a list of supported models for Forge \ ReForge?

Had a read over their respective repos but wasn't able to locate one. I'm not sure if I overlooked it

Anonymous

7/13/2025, 4:00:00 PM

No.105891480

>>105891536

>>105888797

Recently, I stumbled upon quite a few 10-second gens.

Did I miss some kijai news or is it just RoPE?
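For the arithmetic behind "10-second gens": a small sketch converting between frame count and clip length. It assumes 16 fps output and frame counts of the form 4n+1 (which is how 81 frames comes out to about 5 seconds); both are assumptions about typical Wan settings, so check your own workflow. The function names are made up.

```python
def clip_seconds(frames, fps=16):
    """Clip duration in seconds for a given frame count (fps is an assumption)."""
    return frames / fps


def frames_for_seconds(seconds, fps=16, step=4):
    """Smallest frame count of the form step*n + 1 covering the requested duration."""
    raw = round(seconds * fps)
    n = -(-(raw - 1) // step)  # ceiling division without floats
    return max(n, 0) * step + 1


print(clip_seconds(81))        # 5.0625
print(frames_for_seconds(10))  # 161
```

Under these assumptions a 10-second clip needs roughly twice the default length, which is the range the thread is arguing about.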

Anonymous

7/13/2025, 4:08:24 PM

No.105891535

>>105891716

Anonymous

7/13/2025, 4:08:32 PM

No.105891536

>>105891562

>>105891480

You can increase the length past 81. Why the fuck do people not understand this?

Anonymous

7/13/2025, 4:10:03 PM

No.105891547

>>105891378

>targeting /ldg/ in particular.

>>105891329

"Both generals offer nothing valuable" Please read again my statement.

Anonymous

7/13/2025, 4:10:10 PM

No.105891548

>>105891642

>>105891329

This is an anonymous message board retard

>>105891247

I started with comfy, so everything else looks and feels a little like picrel. At some point, the training wheels just hold you back.

Maybe Windows makes everything so difficult that anything more than a one-click installer seems impossible? It's not that the gradio-based services don't work, but it seems like it'd be hard to automate with them.

Anonymous

7/13/2025, 4:12:02 PM

No.105891562

>>105891572

>>105891536

>You can increase the length past 81.

You can, but it loops the video past 81 frames, hence RoPE existing to extend it to 129 frames.

>Why the fuck do people not understand this?

Must be a troll/retard/bait.

Anonymous

7/13/2025, 4:13:11 PM

No.105891571

>>105891550

Is automation really the only difference?

Which process do you feel is crucial to automate?

>>105891562

>You can, but it loops the video past 81 frames

No it doesn't, at least not always. Stop spreading misinformation.

Anonymous

7/13/2025, 4:14:44 PM

No.105891584

what is the deal with all the bullying here?

Anonymous

7/13/2025, 4:18:55 PM

No.105891619

>>105891721

Anonymous

7/13/2025, 4:19:24 PM

No.105891622

>>105891572

This is the equivalent of saying SDXL can do 2048x2048 images, despite it being natively trained for only 1024x1024. Yes, it technically can.

Not entertaining you anymore. You're stupid.

Anonymous

7/13/2025, 4:19:28 PM

No.105891624

>>105891645

>>105891572

No gen => opinion discarded

Anonymous

7/13/2025, 4:22:34 PM

No.105891640

[Report]

>>105891721

This is fun.

I asked yesterday, but any more tips on preventing the brightness changing for videogen? It's happening really often for me and I've tried a lot of different things.

I have tried putting perfect lighting and criterion collection in the positive. Putting 'changing brightness' and 'darkening' in the negative prompt. I have explicitly instructed to keep lighting exactly the same as the image, and/or the first frame. I have tried adding 'static lighting' and 'static brightness'. Even then, the gen will still sometimes randomly change the lighting and the color grading. I just want the lighting to remain exactly the same as the reference image. There should never be an arbitrary fadeout.

Anonymous

7/13/2025, 4:22:43 PM

No.105891642

>>105892638

>>105891548

I appreciate the thought behind that insult, even if it wasn't needed.

But how is that relevant to my point?

Is it okay that this anonymous message board means we can't have people share their contributions?

Anonymous

7/13/2025, 4:22:57 PM

No.105891645

>>105891806

>>105891624

This

>>105888544 is my gen

Get fucked retard

Anonymous

7/13/2025, 4:23:48 PM

No.105891649

>>105891641

newbie general, go to discord or reddit

Anonymous

7/13/2025, 4:27:15 PM

No.105891676

now i gotta wait for a week for there to be a just works ThinkSound comfyui workflow, getting that piece of shit to run is impossible, there were 7 different errors that i debugged to get it to work and at the end it just stalled, fuck pythonshit

Anonymous

7/13/2025, 4:27:40 PM

No.105891683

>>105891696

>>105891641

Certain loras can cause that

Anonymous

7/13/2025, 4:29:21 PM

No.105891696

>>105891726

>>105891683

The only lora I'm using is lightx2v

Anonymous

7/13/2025, 4:30:52 PM

No.105891707

>>105891801

>>105891550

What can I do with Comfy, Big Guy? I'm still waiting.

Anonymous

7/13/2025, 4:31:15 PM

No.105891716

>>105891733

>>105891535

there is nothing documented in either of their repos, I begrudgingly have decided to test sdnext for the moment

Anonymous

7/13/2025, 4:31:53 PM

No.105891721

>>105891744

Anonymous

7/13/2025, 4:32:44 PM

No.105891726

>>105891696

Yeah, lightx2v definitely does that. Perhaps lower the lora strength.

Anonymous

7/13/2025, 4:32:55 PM

No.105891729

>>105892212

Anonymous

7/13/2025, 4:33:26 PM

No.105891733

>>105891716

Welcome, new member. All models work, including Chroma, Flux dev, and Flux Schnell. Kontext and video generation models may not work.

Anonymous

7/13/2025, 4:35:06 PM

No.105891743

Anonymous

7/13/2025, 4:35:09 PM

No.105891744

>>105891721

Guns are funny

Anonymous

7/13/2025, 4:37:47 PM

No.105891760

>>105891773

>>105891724

Explain how the plate on the floor is connected to her hurt hand, or I will judge your picture as sloppy.

Anonymous

7/13/2025, 4:39:21 PM

No.105891773

>>105891724

>>105891760

and the infinite tail

Anonymous

7/13/2025, 4:44:07 PM

No.105891801

>>105891896

>>105891707

A lot. For example, one workflow needs to be able to switch based on context for large batches of works for multiple users. Output from other applications goes to a location, gets queued for either an automatic or manual start, and output becomes available for a different stage in a pipeline, with multiple concurrent pipelines.

This could be something as simple as background removal, or generating multiple types of outputs from a single input and saving them with metadata in a certain format.

So, take home interiors with certain elements like vases named id-home_interior-vase.png, know to create masks of all vases, save mask, generate the same vase with five different color glazes, save images as id-home_interior-vase-color.png, scaled images as input to a node group that generates a short 2.5D video, interpolate, save videos with matched filenames to images.

I can do all of that easily in one place in comfy, with notes, and I can effortlessly duplicate and share it.
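The file-naming part of that pipeline can be sketched outside of comfy. This is only a guess at the convention described above (`id-home_interior-vase.png` in, `id-home_interior-vase-color.png` out, videos with matching names); the helper names, the `-mask` suffix, and the `.mp4` extension are assumptions.

```python
from pathlib import PurePosixPath


def mask_name(source):
    """Name for the saved mask of a source image (the '-mask' suffix is assumed)."""
    path = PurePosixPath(source)
    return f"{path.stem}-mask{path.suffix}"


def variant_names(source, colors):
    """One output filename per glaze color, e.g. id-home_interior-vase-blue.png."""
    path = PurePosixPath(source)
    return [f"{path.stem}-{color}{path.suffix}" for color in colors]


def video_name(image_name):
    """Video filename matched to an image filename (container is an assumption)."""
    return str(PurePosixPath(image_name).with_suffix(".mp4"))


source = "0042-home_interior-vase.png"
for name in variant_names(source, ["blue", "red"]):
    print(name, "->", video_name(name))
```

Keeping every derived name a pure function of the input name is what lets later stages match videos back to their source images.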

Anonymous

7/13/2025, 4:44:39 PM

No.105891806

>>105891645

>it works, especially if repetitive motions are intended

Anonymous

7/13/2025, 4:46:32 PM

No.105891816

>>105891641

Just to be clear, you're using base wan model, not fusionx right?

Anonymous

7/13/2025, 4:58:30 PM

No.105891896

>>105891801

Link json? A local lamp company is actually looking for an AI guy for genning. This could be useful.

Anonymous

7/13/2025, 5:15:22 PM

No.105892037

>>105892238

[SAD NEWS]

HiDream has become the latest in a growing list of Chinese AI companies to shift towards API-only access. Their new Vivago 2.0 model, which is API only, ranks #5 on the leaderboard. Despite China's recent generous handouts with LLMs (Deepseek and the new Kimi K2), they refuse to show the local image diffusion community the same love.

Notably, there is not a single open-weight model in the top-10 anymore, and the closest open-weight model is the original HiDream at #14. HiDream originally placed at #1 when it first released, but has gradually fallen off after getting mogged by mogao (>>105039249)

Forecasts are predicting 2 more years of SDXL

Anonymous

7/13/2025, 5:17:17 PM

No.105892053

>>105891641

>preventing the brightness changing

It may not be related to your problem, but I experienced some flickering when the Tiled VAE decoder was used. I changed it back to the vanilla one, even if it pushed the VRAM usage too high at times

Anonymous

7/13/2025, 5:17:58 PM

No.105892059

Anonymous

7/13/2025, 5:18:56 PM

No.105892070

Anonymous

7/13/2025, 5:26:27 PM

No.105892119

>>105892212

Anonymous

7/13/2025, 5:29:05 PM

No.105892139

haven't used chroma since v31, is it good now?

Anonymous

7/13/2025, 5:37:36 PM

No.105892212

>>105890924

>>105891729

>>105892119

techlet general, the most I can help you is with a trump deepfake.

Anonymous

7/13/2025, 5:38:28 PM

No.105892216

>>105892096

Why even post this. Let the retard be retard. The knowers will know and that's enough.

Anonymous

7/13/2025, 5:38:37 PM

No.105892217

[Report]

>>105892202

overly animated

Anonymous

7/13/2025, 5:39:21 PM

No.105892220

[Report]

Anonymous

7/13/2025, 5:41:53 PM

No.105892238

[Report]

>>105892037

HiDream was too slow and needed too much vram for the quality it provided, also could you even train it on 24gb ?

For a local model it needs to run at least somewhat decently on high end consumer hardware, else there is no point other than the company being able to say 'hey, we did an open release'.

>he's trying again to shit up /ldg/

Not working in the thread of frenship

>>105890924

post your gens

nobody understands your ESL gibberish

Anonymous

7/13/2025, 5:43:56 PM

No.105892254

[Report]

>>105892096

It’s the only place that notices him, this place and the furry board.

Anonymous

7/13/2025, 5:44:46 PM

No.105892257

[Report]

Anonymous

7/13/2025, 5:45:43 PM

No.105892268

[Report]

>>105892247

Thread stagnation issue

Anonymous

7/13/2025, 5:46:44 PM

No.105892280

[Report]

>>105892410

>>105892248

Tried video gen, followed guide, but i2v anime gen shows a 3D character. Do I need anime lora or tags for anime output? Is the input image insufficient?

Anonymous

7/13/2025, 5:47:39 PM

No.105892286

[Report]

>>105892248

>nobody understands your ESL gibberish

That post was perfectly understandable you stupid idiot. You are clearly the ESL here.

Anonymous

7/13/2025, 5:49:55 PM

No.105892298

[Report]

>>105892247

its amusing to watch him squirm

Should I be clearing the model and cache after every gen? I just went from an 8 minute gen time to 20 minutes to 40 minutes. I am assuming this is related to not clearing the model between gens? Also my GPU temperature was relatively cool during the 40 minute gen even though it had 100% utilization.

Anonymous

7/13/2025, 5:53:09 PM

No.105892329

[Report]

ESL general

30s-40s anon general

Dead general

If you seek novelty and famous persons, please go away. This space is for a quiet anon that enjoy grass mowing, barbecues, and AI images of 90s-00s waifus with old reliable models.

Anonymous

7/13/2025, 5:53:41 PM

No.105892333

[Report]

>>105892311

I don't mind creative modesty, like the austin powers nudity bit

Anonymous

7/13/2025, 5:53:44 PM

No.105892334

[Report]

>>105892339

>>105892308

What model, What gpu?

Anonymous

7/13/2025, 5:53:49 PM

No.105892335

[Report]

>>105892311

i'm a little disappointed her moles didn't spell anything

>>105892334

aniWan RTX 5070 Ti

Anonymous

7/13/2025, 5:54:33 PM

No.105892343

[Report]

I want to remove clothing from photos. What is the best option? I was using comfyui and pony 6 months ago.

I checked the rentry and didnt see any nudify guidance.

Anonymous

7/13/2025, 5:54:59 PM

No.105892345

[Report]

>>105892398

>>105889634

>>105892202

these are great, how are you getting such smooth 2D animations?

Anonymous

7/13/2025, 5:55:25 PM

No.105892348

[Report]

>>105892358

Anonymous

7/13/2025, 5:56:30 PM

No.105892358

[Report]

>>105892386

>>105892348

Of course. Same workflow as the rentry guide (in this specific case, the original, not lightx2v).

Anonymous

7/13/2025, 5:56:35 PM

No.105892360

[Report]

>>105892428

>>105892339

Are you using the rentry workflow? Disable the torch compile node and in the dualgpu VRAM offload set it to 0 and try then

Anonymous

7/13/2025, 5:59:12 PM

No.105892386

[Report]

>>105892358

Use another UI. Comfy itself needs to be restarted after some time.

https://github.com/lllyasviel/stable-diffusion-webui-forge

https://github.com/Panchovix/stable-diffusion-webui-reForge

I've been gening pictures for 6 hours with video editing software open and have 0 problems, everything is running smoothly.

>>105892345

https://tensor.art/models/868807624022323384/ani_Wan2_1_14B_fp8_e4m3fn-I2V480P

A lot of people here don't like it but I've been liking my results with I2V. Just make sure the camera isn't moving.

Anonymous

7/13/2025, 6:02:33 PM

No.105892410

[Report]

>>105892420

>>105892280

>but i2v anime gen shows a 3D character

Show it here, better upload to catbox.moe with all metadata

You need to provide details if you hope to get help

Anonymous

7/13/2025, 6:02:35 PM

No.105892411

[Report]

Can someone explain how to make the thumbnails of images in the WebUI smaller? I mean the pictures that show up when you pick the checkpoint or Lora options. They are nice, but they are too big.

Anonymous

7/13/2025, 6:03:37 PM

No.105892420

[Report]

>>105892434

>>105892410

Why are you lying, dear anon? Help here is rare.

Anonymous

7/13/2025, 6:04:38 PM

No.105892428

[Report]

>>105892540

>>105892360

>Disable the torch compile node and in the dualgpu VRAM offload set it to 0 and try then

WTF?

Anonymous

7/13/2025, 6:04:42 PM

No.105892430

[Report]

>>105892450

>>105892308

No, that would likely slow things down since it has to load the model parts from disk again.

Sounds more like you are using more vram than can be effectively offloaded, and you are on windows which means the nvidia driver will automatically start mapping vram to ram in a very inefficient way. You should turn this feature off in the driver settings.

Anonymous

7/13/2025, 6:04:58 PM

No.105892432

[Report]

>>105892398

Does it generate repeating frames since anime has 8 fps?

Anonymous

7/13/2025, 6:05:14 PM

No.105892434

[Report]

>>105892430

>You should turn this feature off in the driver settings.

I'm confused, please explain what I need to do. Is it done via cmd.exe? Which checkbox in the Nvidia control panel should I untick?

Anonymous

7/13/2025, 6:11:54 PM

No.105892492

[Report]

>>105892558

>>105892450

I don't use Windows, I'm on Linux, but I know there is a setting for the Nvidia driver to prevent it from offloading to ram. Because people have complained about this causing problems.

Anonymous

7/13/2025, 6:15:17 PM

No.105892516

[Report]

Hl3 comfirmed

Anonymous

7/13/2025, 6:18:46 PM

No.105892540

[Report]

>>105892428

The torch compile does literally nothing but slow shit down. And I think comfy has issues with VRAM offload to system RAM. When you input a set value it seems to give priority to system ram to fill that quota and not VRAM first and that creates the long hangs with the card seemingly doing nothing but idle at 100%

Anonymous

7/13/2025, 6:20:20 PM

No.105892551

[Report]

>>105892311

Well, whaddaya know, using brackets to change prompt weights in t5 is actually not a meme. Same seed, I only changed "she turns away from the viewer" to "(she turns away from the viewer:1.4)" and kept the rest of the prompt at default weights

https://files.catbox.moe/5jh3so.webm
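
For anyone unsure what the bracket syntax looks like to the frontend, here is a minimal sketch of splitting a prompt into (text, weight) chunks. It only illustrates the "(text:1.4)" notation from the post above. The function name `parse_weights` is made up for this example, nested brackets are not handled, and how a given UI actually applies the weights to the T5 embeddings is not shown here.

```python
import re

# Matches "(some text:1.4)". Hypothetical helper for illustration only;
# this is not the parser any particular UI ships.
_WEIGHTED = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")


def parse_weights(prompt):
    """Split a prompt into (text, weight) chunks; unbracketed text gets 1.0."""
    chunks = []
    pos = 0
    for match in _WEIGHTED.finditer(prompt):
        # Plain text between the previous match and this one keeps weight 1.0.
        plain = prompt[pos:match.start()].strip()
        if plain:
            chunks.append((plain, 1.0))
        chunks.append((match.group(1).strip(), float(match.group(2))))
        pos = match.end()
    tail = prompt[pos:].strip()
    if tail:
        chunks.append((tail, 1.0))
    return chunks


if __name__ == "__main__":
    for chunk in parse_weights("(she turns away from the viewer:1.4), beach at sunset"):
        print(chunk)
```

Everything outside the brackets stays at the default weight, which matches the "kept the rest of the prompt at default weights" test above.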

Anonymous

7/13/2025, 6:21:05 PM

No.105892558

[Report]

>>105892575

>>105892492

>>105892450

>>105892308

nvidia control panel > 3d settings > sysmem fallback policy > set to prefer no fallback

and in case you are having problems in subsequent gens stalling because of memory leaks, install

https://github.com/SeanScripts/ComfyUI-Unload-Model and place the unloadallmodels node from it right before the last "save image/video" node in your workflow

Anonymous

7/13/2025, 6:22:38 PM

No.105892575

[Report]

>>105892558

also unloading all models to ram and back doesn't take much time at all if you have enough ram or a fast ssd

Anonymous

7/13/2025, 6:26:20 PM

No.105892613

[Report]

Anonymous

7/13/2025, 6:29:08 PM

No.105892638

[Report]

>>105891642

NTA but it means you have no idea who posts and doesn't post here

>>105892398

Why does /ldg/ hate aniwan?

Anonymous

7/13/2025, 6:35:03 PM

No.105892678

[Report]

>>105892683

>>105892649

One schizo posting repeatedly

I really miss the individual ip stats

Anonymous

7/13/2025, 6:35:51 PM

No.105892681

[Report]

>>105892729

participants admit they need more extreme or niche content to stay aroused

Anonymous

7/13/2025, 6:36:06 PM

No.105892683

[Report]

>>105892678

Thread IDs fix all the problems because you either get 1pbtid IP switchers or obvious samefagging.

Anonymous

7/13/2025, 6:37:30 PM

No.105892697

[Report]

>>105892649

vramlets shit on everything so they don't have to see anything that makes them feel poor.

Anonymous

7/13/2025, 6:39:52 PM

No.105892715

[Report]

>>105890424

Works on my machine

Does anybody know how to keep the models in CPU ram across different workflow tabs?

Anonymous

7/13/2025, 6:41:08 PM

No.105892729

[Report]

>>105892681

This is such a meaningless assertion, there are also many marriages with dead bedrooms because the participants also need more extreme or niche activities to stay aroused. Novelty seems core to the human sexual experience.

Scene: "My waifu getting brain freeze from eating ice cream too fast but trying to play it cool"

Anonies, please help me!

I'm the same anon who has problem generating my waifu building a sand castle and sewing a scarf.

Please how do I instruct via tags in SDXL the scene I put between quotes?

Anonymous

7/13/2025, 6:50:42 PM

No.105892810

[Report]

>>105892727

It's impossible by design.

>>105892793

why do you generate complicated scenes?

cant you put only,

1girl, solo, your waifu, big breast, euler a, 30 steps, 5cfg

and be happy?

Anonymous

7/13/2025, 7:05:35 PM

No.105892964

[Report]

>>105892793

think of an image you want, and write the tags you'd use to describe it like you'd see on a *booru

sdxl can't do natural language prompts afaik

Anonymous

7/13/2025, 7:12:17 PM

No.105893020

[Report]

>>105892727

pretty sure it works with comfyui by default

Anonymous

7/13/2025, 7:24:41 PM

No.105893129

[Report]

>>105895073

Anonymous

7/13/2025, 7:29:52 PM

No.105893175

[Report]

euler a normal 20 steps is all you need

Anonymous

7/13/2025, 7:30:39 PM

No.105893181

[Report]

>>105894298

Anonymous

7/13/2025, 7:32:41 PM

No.105893198

[Report]

Anonymous

7/13/2025, 7:44:06 PM

No.105893301

[Report]

Anonymous

7/13/2025, 7:48:42 PM

No.105893356

[Report]

>>105893372

Anonymous

7/13/2025, 7:50:07 PM

No.105893372

[Report]

>>105893389

>>105893313

>>105893356

Nice, what model/lora is this ?

Anonymous

7/13/2025, 7:51:33 PM

No.105893389

[Report]

>>105893372

This is pixelwave.

leaked pic of the average /g/ poster

Anonymous

7/13/2025, 7:56:25 PM

No.105893433

[Report]

>>105895073

Anonymous

7/13/2025, 7:56:41 PM

No.105893436

[Report]

>>105893426

kawaii chompers

Anonymous

7/13/2025, 7:56:47 PM

No.105893438

[Report]

>>105893426

Get lost pedo

Anonymous

7/13/2025, 7:59:11 PM

No.105893460

[Report]

>>105892793

wait 3-4 years for image models to have that kind of understanding

Anonymous

7/13/2025, 8:09:07 PM

No.105893549

[Report]

>>105893708

>>105892939

not that anon, but the reason i like models that use t5 is because i'm chasing concepts, not sets of tags. i really enjoy the kinds of abstract exercises that don't reinforce how i currently think.

>>105893426

okay, first one of yours I didn't actually hate, but only because it's so uncanny. it reminds me of this book my sister got from the scholastic book fair in the 90s where an american girl uncovers that the russian swimmer she's competing against at the youth olympics was forced to take steroids while training that made her grow hair in strange places and her voice deepen like a man's.

Anonymous

7/13/2025, 8:30:16 PM

No.105893708

[Report]

>>105893549

>made her grow hair in strange places

sounds kinda lewd desu

Anonymous

7/13/2025, 8:30:16 PM

No.105893709

[Report]

>>105893827

Anonymous

7/13/2025, 8:34:32 PM

No.105893744

[Report]

>>105893759

>>105893718

damn, really nice. what wan stuff are you using? haven't been following wan for a while

Anonymous

7/13/2025, 8:35:49 PM

No.105893753

[Report]

>>105893825

Anonymous

7/13/2025, 8:37:34 PM

No.105893767

[Report]

>>105893778

post combat nuns

>>105893759

cool, will give it a go at some point. how did you get 9 seconds? is that because of that model, or does lightx2v let you do it?

Anonymous

7/13/2025, 8:38:29 PM

No.105893778

[Report]

>>105893767

I generated a new 5 second video using the last frame of the previous video with a different text prompt.
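
The last-frame trick can be sketched roughly like this. `generate_clip` is a stand-in for whatever i2v workflow you run (it is not a real API), and the sketch assumes each clip comes back as a list of frames whose first frame is the input image, so that frame is dropped when stitching later clips.

```python
def chain_clips(generate_clip, start_image, prompts):
    """Stitch several short i2v clips into one longer frame sequence.

    generate_clip(image, prompt) is a hypothetical callable that returns
    a list of frames; swap in your own workflow call.
    """
    frames = []
    current = start_image
    for prompt in prompts:
        clip = generate_clip(current, prompt)
        # Assumption: later clips start on the frame we fed in, so skip it
        # to avoid a duplicated frame at the seam.
        frames.extend(clip if not frames else clip[1:])
        # The last frame of this clip seeds the next one.
        current = clip[-1]
    return frames


if __name__ == "__main__":
    # Fake generator so the sketch runs without any model.
    fake = lambda image, prompt: [image] + [f"{prompt}-{i}" for i in range(1, 4)]
    print(chain_clips(fake, "start.png", ["walks forward", "turns around"]))
```

Each segment gets its own text prompt, which is how one video can change action partway through.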

>Diane, it's 2:40PM, July 13th, entering the Local Diffusion General. I've never seen this much slop in my entire life... damn good coffee though.

Anonymous

7/13/2025, 8:44:00 PM

No.105893825

[Report]

>>105893753

brother

>>105893803

agent zoomer

Anonymous

7/13/2025, 8:44:26 PM

No.105893827

[Report]

>>105893709

Fishbowl effect was a nice touch

Anonymous

7/13/2025, 8:45:36 PM

No.105893840

[Report]

>2025

>AI still cant hands (when not in focus), feet and proper physics and even often fails at perspective.

Anonymous

7/13/2025, 8:46:40 PM

No.105893854

[Report]

>>105894182

>>105893803

Imagine all the based stuff David Lynch could have done with this technology

Finally I would get 'Rabbits part 2'

Anonymous

7/13/2025, 9:17:06 PM

No.105894110

[Report]

>>105894299

>>105894025

Knowing wan, I'm not even mad for the censoring kek

Anonymous

7/13/2025, 9:21:27 PM

No.105894146

[Report]

>>105894025

post here >>>/gif/29122696

Anonymous

7/13/2025, 9:23:37 PM

No.105894164

[Report]

>>105894025

uncensored, now

Anonymous

7/13/2025, 9:26:10 PM

No.105894182

[Report]

>>105893854

if you posted it, i'd probably like it <3

a cartoon man sips a glass of wine he is holding.

Anonymous

7/13/2025, 9:30:49 PM

No.105894210

[Report]

>>105894201

very Sealab 2021

Anonymous

7/13/2025, 9:37:01 PM

No.105894259

[Report]

Anonymous

7/13/2025, 9:41:24 PM

No.105894298

[Report]

Anonymous

7/13/2025, 9:41:25 PM

No.105894299

[Report]

>>105894386

>>105894110

Maybe for other models, but I can tell you for a fact that aniwan is not bad. It is clearly nsfw-trained.

Anonymous

7/13/2025, 9:43:25 PM

No.105894315

[Report]

>>105894386

do not...

redeeeeeeeeeeeeeeeeeeeeeeeem!

Anonymous

7/13/2025, 9:51:48 PM

No.105894386

[Report]

>>105894299

Link?

>>105894315

The most eccentric, horrible shit I've ever seen has come from South India. Everyone I work with distances themselves hard from that, but the ones that even acknowledge that it exists, that they're part of the same country, still treat them like they're lower than the sludge that comes from the latrines you've seen videos of. Why?

Anonymous

7/13/2025, 9:59:25 PM

No.105894461

[Report]

>>105894666

PØŞŤCĄŘÐ

7/13/2025, 10:00:08 PM

No.105894468

[Report]

>>105894201

kek saved

>>105888862

nothing about that was funny

its called acting in bad faith >;c

>>105889435

s a m e . s i s .

>>105893313

i dig it

Anonymous

7/13/2025, 10:02:17 PM

No.105894498

[Report]

I heard you guys had problems with tps reports

Anonymous

7/13/2025, 10:08:22 PM

No.105894562

[Report]

>>105894568

in japan 20 years ago, a girl at a hostess club saw that i didn't know how to ask where the bathroom was and showed me how to get there.

that's the level of service i expect from AI these days. i want it to know that i'm a frail human and account for it.

Anonymous

7/13/2025, 10:09:05 PM

No.105894568

[Report]

>>105894562

language barrier or autismo?

Anonymous

7/13/2025, 10:13:14 PM

No.105894615

[Report]

Anonymous

7/13/2025, 10:13:33 PM

No.105894618

[Report]

It feels good to be a gangster

Anonymous

7/13/2025, 10:15:30 PM

No.105894642

[Report]

>>105894802

Anonymous

7/13/2025, 10:15:34 PM

No.105894643

[Report]

Been playing more with training Wan Loras and 8 images at 32 frames seems to work well for training a subject and some motion. You get a little stop motion but the trade off is longer sequences.

Anonymous

7/13/2025, 10:17:59 PM

No.105894666

[Report]

Anonymous

7/13/2025, 10:18:18 PM

No.105894667

[Report]

What is the deal with Swarm? How is it better than Forge?

Anonymous

7/13/2025, 10:28:46 PM

No.105894776

[Report]

>>105894961

Anonymous

7/13/2025, 10:29:46 PM

No.105894787

[Report]

>>105894823

the man with boxes on his back turns around and runs far away.

Anonymous

7/13/2025, 10:30:34 PM

No.105894802

[Report]

>>105894883

>>105894642

these are great bc you might just be a horror enthusiast, or you're a menace who actually gets off to this stuff, we have no way to tell. adds to the spookiness of the gens

Anonymous

7/13/2025, 10:35:03 PM

No.105894852

[Report]

>>105894947

Does this seed always get used for whatever you're currently generating? Does the number change right after you click run? If I want to re-use a seed, it doesn't get lost after the gen finishes right?

Anonymous

7/13/2025, 10:38:36 PM

No.105894883

[Report]

Anonymous

7/13/2025, 10:43:40 PM

No.105894947

[Report]

>>105894852

if control after generate is set to randomize, it will change the seed after each gen. set it to fixed to keep it the same.
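
A toy model of that widget, in case it helps. The function name `next_seed` and the 64-bit seed range are assumptions for this sketch, and only the two modes mentioned above are covered. It is not ComfyUI's code, just the behaviour as described.

```python
import random


def next_seed(seed, mode, rng=random):
    """Return the seed value the widget would show after a gen finishes."""
    if mode == "fixed":
        # Same seed stays in the box, so the gen can be repeated.
        return seed
    if mode == "randomize":
        # A fresh seed replaces the old one; note the old one down first
        # if you want to reuse it.
        return rng.randrange(2**64)
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    print(next_seed(1234, "fixed"))
    print(next_seed(1234, "randomize"))
```

So with fixed, re-running the same prompt and settings reuses the same seed.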

Anonymous

7/13/2025, 10:44:22 PM

No.105894959

[Report]

Anonymous

7/13/2025, 10:44:39 PM

No.105894961

[Report]

>>105895114

>>105894776

this has a very x-ray engine look, catbox?

here's a catbox showing how to get a decent pepe out of noob, for any interested in genning pepes:

https://files.catbox.moe/6qvaaq.png

Anonymous

7/13/2025, 10:46:40 PM

No.105894990

[Report]

Are there any good ways to gen inventor's blueprints, or even DaVinci style drawings?

Anonymous

7/13/2025, 10:47:29 PM

No.105895001

[Report]

Anonymous

7/13/2025, 10:53:29 PM

No.105895062

[Report]

>>105893803

Kek, he's turned into Bobby in the last few frames

Anonymous

7/13/2025, 10:54:18 PM

No.105895073

[Report]

>>105895304

>>105893129

>>105893718

>>105894025

>>105893433

PLEASE MAKE ONE OF BLUE ARCHIVES PLEASE ONE OF A LOLI

Anonymous

7/13/2025, 10:56:54 PM

No.105895114

[Report]

>>105895142

>>105894961

How can I share catbox?

You mean of like the workflow?

Anonymous

7/13/2025, 10:59:47 PM

No.105895142

[Report]

>>105895178

>>105895114

yeah the workflow. or prompt?

Anonymous

7/13/2025, 11:02:18 PM

No.105895168

[Report]

>>105895181

Anonymous

7/13/2025, 11:03:47 PM

No.105895178

[Report]

>>105895281

>>105895168

I still don't know how to make these illusion images

Anonymous

7/13/2025, 11:05:07 PM

No.105895189

[Report]

>>105895181

more detail? you mean img2img?

Anonymous

7/13/2025, 11:05:30 PM

No.105895192

[Report]

>>105893759

please could you share prompts and settings?

Anonymous

7/13/2025, 11:07:05 PM

No.105895209

[Report]

>>105895221

Can we already do chroma loras?

Anonymous

7/13/2025, 11:08:30 PM

No.105895221

[Report]

>>105895334

>>105895209

yes, but it's best to wait a few more weeks until chroma is finished.

I made one just to test the waters and was very pleased.

Anonymous

7/13/2025, 11:09:30 PM

No.105895232

[Report]

does civitai support kontext lora generation yet or not?

How many buzz for it?

Anonymous

7/13/2025, 11:13:09 PM

No.105895281

[Report]

>>105895178

based

>>105895181

image to image, or qr code controlnet

Anonymous

7/13/2025, 11:14:14 PM

No.105895299

[Report]

>>105895329

>>105894025

>ani_Wan2_1_14B_fp8_e4m3fn

Tested the model and it brought my waifu to life for three seconds.

I have an RTX 3060. I MUST bring her to life with that rig. No matter the shitty resolution, SHE COULD BE INSIDE MY GPU RIGHT NOW

>>105895073

not him but why don't you just make it yourself? wan2gp is easier than ever with sub-8gb vram

Anonymous

7/13/2025, 11:15:34 PM

No.105895310

[Report]

>>105895304

>sub-8gb

didn't work on my 6 gb card

Anonymous

7/13/2025, 11:17:03 PM

No.105895329

[Report]

>>105895345

>>105895304

Not that anon, but this one

>>105895299

Please is there a tutorial how to run that shit in my pc, I need to bring my waifu to life, it's urgent.

Anonymous

7/13/2025, 11:17:25 PM

No.105895334

[Report]

>>105895221

Do I just use a standard 1024x1024 dataset?

Anonymous

7/13/2025, 11:17:28 PM

No.105895335

[Report]

>>105895329

not him, but check the rentry for my guide

Anonymous

7/13/2025, 11:19:47 PM

No.105895369

[Report]

>>105895345

there is no guide named "aniwan"

Anonymous

7/13/2025, 11:20:48 PM

No.105895381

[Report]

>>105895573

>>105895345

but I must also admit that I'm in tunnel vision mode, I'll check it out in a quieter moment.

Anonymous

7/13/2025, 11:35:24 PM

No.105895573

[Report]

>>105895618

Anonymous

7/13/2025, 11:38:53 PM

No.105895618

[Report]

>>105896388

>>105895573

wan2gp doesn't let you load aniwan lmao

Anonymous

7/14/2025, 12:54:27 AM

No.105896388

[Report]

>>105895618

just rename the checkpoint so it loads that instead