/lmg/ - Local Models General

►Recent Highlights from the Previous Thread:

>>105932763

--RTX 5090 criticized for insufficient VRAM to run modern large language models effectively:

>105935901 >105935966

--Challenges of running LLMs locally on AMD GPUs and skepticism toward the Radeon AI PRO R9700's value:

>105933446 >105933826 >105933983 >105933900 >105934023 >105934538 >105934604

--Discussion of Grok girl prompt and modern models' ability to handle long contexts:

>105935505 >105937170 >105937322 >105938046 >105938082 >105938222 >105938264 >105938340 >105938449 >105938674

--Clustering computers for LLM inference is possible but complex and performance-limited for beginners:

>105937482 >105937515 >105937680 >105937538 >105938305

--Speculative bottom-up approaches to developing AI with human-like preferences and artistic sense:

>105933487 >105933541 >105933605 >105933649

--$20k AI hardware build considerations focusing on Blackwell GPUs and AMD platforms for local inference and scalability:

>105937089 >105937116 >105937235 >105937452 >105937342 >105937389 >105937430 >105937445 >105937448 >105937469 >105937457 >105937518 >105937853 >105938272 >105938532 >105937896 >105937424

--Recommendations for uncensored models with image input for WAN prompt enhancement:

>105932949 >105932960 >105933250 >105933456 >105934040

--Miku and Luka (free space):

>105934851 >105935940 >105938657 >105938717

►Recent Highlight Posts from the Previous Thread:

>>105932764

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

7/17/2025, 8:26:22 PM

No.105939081

[Report]

Two more weeks openai bros!

Anonymous

7/17/2025, 8:26:52 PM

No.105939087

[Report]

>>105939173

Anonymous

7/17/2025, 8:29:34 PM

No.105939110

[Report]

>>105939173

>>105939055

Thank you Recap Miku

Anonymous

7/17/2025, 8:30:00 PM

No.105939114

[Report]

>>105940561

>>105939052 (OP)

I use koboldCPP, with 8GB of VRAM, so I'm offloading part of the models. I used to have a really old CPU, but now I replaced it with a 9950X3D. For some weird reason, now the inference speeds are lower than before, even though I have a way better CPU and RAM. What could be the reason? I'm really at a loss

Anonymous

7/17/2025, 8:32:12 PM

No.105939151

[Report]

>>105938674

how do you even get into proper card making? logprobs autism, templates?

what do you think about this

https://github.com/cepunkt/playground

Anonymous

7/17/2025, 8:33:09 PM

No.105939162

[Report]

>>105941361

>>105939052 (OP)

>>105939055

>>105939087

>>105939110

vocaloidfag posting porn in /ldg/:

>>105715769

It was up for hours while anyone keking on troons or niggers gets deleted in seconds, talk about double standards and selective moderation:

https://desuarchive.org/g/thread/104414999/#q104418525

https://desuarchive.org/g/thread/104414999/#q104418574

he makes

>>105714003 ryona picture of generic anime girl different anon posted earlier

>>105704741, probably because its not his favorite vocaloid doll, he can't stand that as it makes him boil like a druggie without fentanyl dose, essentially a war for rights to waifuspam or avatarfag in thread.

tests bait poster bot for better shitflinging in threads

>>105884523

Funny /r9k/ thread:

https://desuarchive.org/r9k/thread/81611346/

The Makise Kurisu damage control screencap (day earlier) is fake btw, no matches to be found, see

https://desuarchive.org/g/thread/105698912/#q105704210 janny deleted post quickly.

TLDR: vocaloid troon / janny protects resident avatarfags and deletes everyone who outs him, making the general his little personal safespace. Needless to say he would screech "Go back to teh POL!" anytime someone posts something mildly political about language models or experiments around that topic.

And lastly as said in previous thread(s)

>>105716637 I remind you that cudadev of llama.cpp (JohannesGaessler on github) has endorsed spamming. That's it.

He also endorsed hitting that feminine jart bussy a bit later on. QRD on Jart - The code stealing tranny:

https://rentry.org/jarted

xis ai slop profiles

https://x.com/brittle_404

https://x.com/404_brittle

https://www.pixiv.net/en/users/97264270

https://civitai.com/user/inpaint/models

Anonymous

7/17/2025, 8:35:56 PM

No.105939188

[Report]

>>105939052 (OP)

miku is boomershit

>>105939173

PSA anti-miku troon negro has been campaigning to remove anything he deems is against his gay-ass code of conduct.

he is a massive faggot who projects his insecurities onto others.

he has been caught on numerous occasions exhibiting strongly homosexual behaviour and continues perpetuating fake news.

Anonymous

7/17/2025, 8:42:13 PM

No.105939260

[Report]

>>105940709

Anyone training image or video loras in need of a way to use faces from video frames? I wrote a face and scene-change detecting tool which does it well. I'll share if there's enough interest.

>>105939173

>visits a site other than reddit

>is shocked it isn't reddit

go back

Anonymous

7/17/2025, 8:42:16 PM

No.105939261

[Report]

>>105939173

>>105939246

i hate tranitors and i hate schizos. i like /lmg/ the most when it isn't JDF posting hours.

Anonymous

7/17/2025, 8:52:14 PM

No.105939383

[Report]

>>105939417

>>105939246

why u mad bro? i thought you like spam

Anonymous

7/17/2025, 8:55:38 PM

No.105939417

[Report]

>>105939383

just feeding back the same language.

demonstrate some awareness.

Anonymous

7/17/2025, 8:56:08 PM

No.105939423

[Report]

>>105939397

remember to dilate after you finish seething

Anonymous

7/17/2025, 9:00:57 PM

No.105939476

[Report]

>>105939486

>>105939246

Y'all never said i am lying, all you can do is "no u" back, cry me a river tranny-kun.

>>105939052 (OP)

What the hell? There has been no discussion about Littlebit?

https://arxiv.org/abs/2506.13771v1

>This paper introduces LittleBit, a novel method for extreme LLM compression. It targets levels like 0.1 bits per weight (BPW), achieving nearly 31× memory reduction, e.g., Llama2-13B to under 0.9 GB.

Deepseek in 64 GB when?
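For anyone who wants to sanity-check the "Deepseek in 64 GB" question, here is a rough back-of-the-envelope sketch. It assumes "Deepseek" means the 671B-total-parameter V3/R1 models and counts weight storage only (no KV cache, activations, or per-block scale overhead). The helper names are made up for illustration.

```python
# Rough weight-storage arithmetic; ignores KV cache and quantization metadata.

def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Size of the weights alone in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def max_bpw(n_params: float, budget_gb: float) -> float:
    """Largest average bits-per-weight that fits in the given budget."""
    return budget_gb * 1e9 * 8 / n_params


# Assumption: DeepSeek-V3/R1 total parameter count.
DEEPSEEK_PARAMS = 671e9
# Llama2-13B, the example model quoted from the abstract.
LLAMA2_13B_PARAMS = 13e9

if __name__ == "__main__":
    fp16 = model_size_gb(LLAMA2_13B_PARAMS, 16)
    print(f"Llama2-13B fp16: {fp16:.1f} GB, divided by 31: {fp16 / 31:.2f} GB")
    print(f"bpw needed for DeepSeek in 64 GB: {max_bpw(DEEPSEEK_PARAMS, 64):.2f}")
    for bpw in (4.0, 2.0, 1.0, 0.1):
        print(f"DeepSeek at {bpw} bpw: {model_size_gb(DEEPSEEK_PARAMS, bpw):.1f} GB")
```

So the budget works out to somewhere under 1 bit per weight on average, before any overhead. Whether quality survives at that level is a separate question.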

Anonymous

7/17/2025, 9:02:10 PM

No.105939486

[Report]

>>105939709

>>105939476

you were given multiple opportunities to ground your point, you failed to, you are now a troon negro.

Hitchens's razor, you subhuman.

Has anyone trained a model that can be used to datamine info about and stalk people online? Unironically asking for a friend.

Anonymous

7/17/2025, 9:06:38 PM

No.105939535

[Report]

>>105939484

If it actually worked they would've tried it on deepseek.

Anonymous

7/17/2025, 9:07:34 PM

No.105939542

[Report]

>>105939699

>>105939505

You would first need the model to code a working search engine.

Anonymous

7/17/2025, 9:09:06 PM

No.105939566

[Report]

>>105939505

Presumably both Google and the NSA have.

Anonymous

7/17/2025, 9:15:17 PM

No.105939646

[Report]

Is there a way to make Kimi K2 translations even better? Right now, the problem is that it tries to understand the context and fails.

Anonymous

7/17/2025, 9:19:08 PM

No.105939699

[Report]

>>105941173

>>105939542

Or simply crawl existing ones.

>>105939484

Aren't MoEs more sensitive to quant damage than dense models?

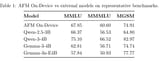

Normal 8-bit quant: ppl 4.88

Normal 4-bit quant: ppl 4.95

Their 1-bit quant: ppl 8.18

Their 0.1-bit quant: ppl 15.9

I don't think that's going to work out well.

Anonymous

7/17/2025, 9:20:04 PM

No.105939709

[Report]

>>105939750

>>105939486

I grounded it multiple times. Not my problem you hide from honest arguments on to why your ritualposting faggotry gives nothing of value to this general, like the time you melted down at first Teto OP pic, spammed whole thread with your ComfyUI generated slop, given the right to doubt you two are not the same person playing both sides for optics reasons or whatever.

Anonymous

7/17/2025, 9:21:05 PM

No.105939724

[Report]

>>105939707

Sorry, 15.09. Still, terrible..
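To put the numbers side by side, here is a small sketch that expresses each perplexity as a percentage increase over the 8-bit figure. The values are the ones read off the image above, with the corrected 15.09. Treating the 8-bit quant as the baseline is my choice, not something the paper states.

```python
# Perplexity figures as quoted in the thread (0.1-bit value uses the correction).
PPL = {
    "normal 8-bit": 4.88,
    "normal 4-bit": 4.95,
    "LittleBit 1-bit": 8.18,
    "LittleBit 0.1-bit": 15.09,
}


def relative_increase(ppl: float, baseline: float) -> float:
    """Percentage increase in perplexity over the baseline."""
    return (ppl - baseline) / baseline * 100


if __name__ == "__main__":
    base = PPL["normal 8-bit"]
    for name, ppl in PPL.items():
        print(f"{name:>18}: ppl {ppl:5.2f} (+{relative_increase(ppl, base):.1f}%)")
```

Going from 8-bit to 4-bit barely moves the needle, while the sub-1-bit settings multiply perplexity, which is the point being made.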

Anonymous

7/17/2025, 9:24:00 PM

No.105939750

[Report]

>>105939926

>>105939709

today I will remind him

https://desuarchive.org/g/thread/105611492/#105615767

if you want to do all this bitching, at least be authentic about it. parroting your talking points over and over that you don't even believe in is deplorable. LLM behaviour.

Anonymous

7/17/2025, 9:27:02 PM

No.105939786

[Report]

Melty melty melty man. Look at him melt.

Anonymous

7/17/2025, 9:27:03 PM

No.105939787

[Report]

>>105939828

>>105939052 (OP)

Any good MoE RP models?

Anonymous

7/17/2025, 9:30:12 PM

No.105939828

[Report]

>>105939787

Mixtral 8x7b limarp zloss.

>>105939750

Literally everything, your obnoxious behavior

>>105928470 https://desuarchive.org/g/thread/105461153/#q105476355 makes one think "This is a tranny and his little circlejerk general, nothing more" like all you fags did is miku.sh and that mikupad no one uses.

Said obnoxious behavior and passive-aggressive baitposting results in people throwing buzzwords at you because no one wants to read or engage with that shit, they come here expecting LLM / AI stuff.

Anonymous

7/17/2025, 9:53:23 PM

No.105940063

[Report]

>>105940113

>>105939926

writing a long post like this

>>105939173 and constantly spamming it is real, actual tranny behavior

especially when you keep crying your eyes out because content that everybody else is fine with isn't getting deleted

Anonymous

7/17/2025, 9:55:31 PM

No.105940089

[Report]

>>105940709

>>105939173

shut up tranny, go back to your hugbox

Anonymous

7/17/2025, 9:56:13 PM

No.105940096

[Report]

>>105939926

Literally everything, your obnoxious behavior makes one think "This is a mentally ill zoomer and his little temper tantrum, nothing more" like all you do is spam and whine for attention because everything has to be about you.

Said obnoxious behavior and passive-aggressive baitposting results in people throwing buzzwords at you because no one wants to read or engage with that shit, they come here expecting LLM / AI stuff.

>>105940063

That? Long? Lmao at you stupid zoomer

Anonymous

7/17/2025, 10:00:18 PM

No.105940131

[Report]

>>105940113

I hope for your sake you are the zoomer and are projecting here because if you're a full grown man behaving like this life must be very difficult for you

Anonymous

7/17/2025, 10:01:01 PM

No.105940140

[Report]

>>105940152

>>105940113

Did you get banned from reddit or something? Why are you here

Don't reply to the spammer, you idiot.

>>105940143

because ignoring him for months has worked wonders

Anonymous

7/17/2025, 10:02:23 PM

No.105940152

[Report]

>>105940176

>>105940140

Rejecting circlejerk faggotry is reddit now? Wow...

Anonymous

7/17/2025, 10:03:01 PM

No.105940160

[Report]

>>105942875

>>105939466

So she'll do it if he pays? What a whore.

Anonymous

7/17/2025, 10:03:20 PM

No.105940163

[Report]

.

Anonymous

7/17/2025, 10:03:38 PM

No.105940168

[Report]

>>105940344

>>105940150

And calling him a retard for that much also worked. There are other things you can do.

Let him melt. It's funnier.

>>105940152

you could just ignore it but instead you're having a conniption fit over something you can't change lol get over yourself; nothing is gonna change just because you get butt mad

Anonymous

7/17/2025, 10:08:58 PM

No.105940214

[Report]

>>105940230

>>105940176

>conniption

ok, granny

Anonymous

7/17/2025, 10:10:32 PM

No.105940230

[Report]

>>105940214

had to search what it meant and that triggered you, didn't it?

Apple updated their foundation model page and released a technical report on them.

https://machinelearning.apple.com/research/apple-foundation-models-2025-updates

https://machinelearning.apple.com/papers/apple_intelligence_foundation_language_models_tech_report_2025.pdf

Some interesting tidbits.

>On-device models are approximately 3B parameters. The server model is approximately the same size as Llama 4 Scout in terms of total and active parameters, but behind Qwen 3 235B.

>Apple did some interesting things here like adopting KV-cache sharing, 2-bit quantization-aware training and using a Parallel-Track Mixture-of-Experts (PT-MoE) transformer for their server model.

>Apple uses 3 things to claw performance back mostly. They use QAT (Quantization aware training), compress the model with ASTC, a texture compression format on mobile GPU to take advantage of hardware already there, and they use something called Quality Recovery Adapters.

>Quality Recovery Adapters are basically LoRAs that take the most important layers of the base unquantized model and then they reapply it back onto the model so it can retain more performance from

They will be late by the time they release, but I'm not sure how hard Apple is gaming the benchmarks given how the prior generation of their models made headlines in a bad way.

EXAONE 4.0 32B Q4_K_M mesugaki

Anonymous

7/17/2025, 10:20:45 PM

No.105940329

[Report]

Anonymous

7/17/2025, 10:21:52 PM

No.105940344

[Report]

>>105940143

The mikufaggot? Yeah we should ignore him, though then he will shit up thread more with pics from xitter, total xitter death upon him and everyone enabling that (2-3 people, cause thread is practically dead for more than year now).

>>105940168

The only one melting here is you.

>>105940176

I only post facts, don't know where you found "butt mad" aspect but fine.

Anonymous

7/17/2025, 10:22:33 PM

No.105940354

[Report]

>>105940402

>>105940176

DaS3 reference spotted!

Anonymous

7/17/2025, 10:23:02 PM

No.105940363

[Report]

>>105940321

>Mechagaki

Yes please.

Anonymous

7/17/2025, 10:23:13 PM

No.105940368

[Report]

>responds in the most buttmad way possible

>i-i-im NOT buttmad I love FACTS and SCIENCE

>>105940321

And cockbench.

>>105938306

>>105935116

Looks to be Gemma tier.

Anonymous

7/17/2025, 10:24:23 PM

No.105940389

[Report]

>>105939466

install gentoo

Anonymous

7/17/2025, 10:25:19 PM

No.105940402

[Report]

>>105940422

>>105940354

never played that game, there was no intended reference

Anonymous

7/17/2025, 10:27:25 PM

No.105940422

[Report]

>>105940402

That's the joke actually.

Anonymous

7/17/2025, 10:27:35 PM

No.105940426

[Report]

>>105940383

Yes you are, replying passively like a little bitch and trowing adhominem left and right.

Anonymous

7/17/2025, 10:29:10 PM

No.105940451

[Report]

>>105940321

I feel very safe.

>mommy! they won't change their ways and conform to my ideas of how they should be speaking and acting!!!!!! mommy!! please! WHY WON'T THEY LISTEN

Anonymous

7/17/2025, 10:32:39 PM

No.105940491

[Report]

>>105940526

>>105938222

>>105937322

I don't understand, which of these is the correct prompt for Ani? And what is this Tech Dev Notes account, is it affiliated with twitter?

https://x.com/techdevnotes/status/1937507118770528645

Anonymous

7/17/2025, 10:34:35 PM

No.105940514

[Report]

>>105940525

>>105940386

gemma was at least somewhat smart

what is up with koreans and science not going together too well?

Anonymous

7/17/2025, 10:35:16 PM

No.105940520

[Report]

>>105940709

Anonymous

7/17/2025, 10:35:52 PM

No.105940525

[Report]

>>105940514

koreans were nothing but backwards farmers until a few decades ago

Anonymous

7/17/2025, 10:35:53 PM

No.105940526

[Report]

>>105940491

I think there's a "base" system prompt defining character background and behavior and a "judge" prompt that is used when the model needs to rate the final response for updating the relationship score. Both the base and the judge prompt apparently slightly change depending on the relationship level and other details.

Anonymous

7/17/2025, 10:36:37 PM

No.105940535

[Report]

>>105939707

Large models are hugely undertrained. The experts are so far from saturation that you can get away with low-bit quants.

Anonymous

7/17/2025, 10:38:34 PM

No.105940555

[Report]

>>105940611

i got that falcon 32b instruct to run in kobold but it crashes after about a paragraph, something about the kv cache

Anonymous

7/17/2025, 10:39:05 PM

No.105940561

[Report]

>>105939114

Memory latency? The 9950X3D is still a chiplet CPU, which hurts latency. The 3D cache helps in gaming WHEN the important calculations in the game fit in the cache; once they exceed it, performance tanks. Since LLMs use all the RAM, maybe it's a worst-case scenario. Just guessing based on my understanding of gaming. Many old Intel CPUs had better memory latency than Ryzens probably have even now. You might be able to get better performance if you restrict CPU usage to the cores on one chiplet to avoid the cross-chiplet communication.
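If you want to try the one-chiplet idea on Linux, a minimal sketch could look like the following. It uses `os.sched_setaffinity`, which is Linux-only. The core numbering is an assumption (8 cores per chiplet, SMT siblings numbered 16 higher); check your actual layout with `lscpu -e` before trusting it. `ccd_cpu_set` and `pin_to_ccd` are made-up helper names.

```python
import os


def ccd_cpu_set(ccd_index: int, cores_per_ccd: int = 8,
                physical_cores: int = 16, smt: bool = True) -> set:
    """Logical CPU ids for one chiplet under the ASSUMED numbering scheme."""
    start = ccd_index * cores_per_ccd
    cpus = set(range(start, start + cores_per_ccd))
    if smt:
        # Assumption: the SMT sibling of core c is c + physical_cores.
        cpus |= {c + physical_cores for c in cpus}
    return cpus


def pin_to_ccd(ccd_index: int = 0):
    """Restrict this process (and children started later) to one chiplet."""
    if not hasattr(os, "sched_setaffinity"):
        return None  # not available on this platform (e.g. Windows, macOS)
    usable = ccd_cpu_set(ccd_index) & os.sched_getaffinity(0)
    if usable:
        os.sched_setaffinity(0, usable)
    return usable


if __name__ == "__main__":
    print("pinned to:", pin_to_ccd(0))
    # Child processes inherit the affinity mask, so the inference backend
    # could be launched from here with subprocess.run([...]).
```

Try both chiplet indices and compare tokens per second, since only one of the two chiplets on this CPU carries the extra cache. Setting the backend's thread count to match the pinned cores is probably worth doing too.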

Anonymous

7/17/2025, 10:40:48 PM

No.105940581

[Report]

Anonymous

7/17/2025, 10:41:05 PM

No.105940584

[Report]

>>105940714

>>105940282

>2-bit quantization-aware training

I wonder if that's average 2 bit. Actual 2 bit is just a lot of unnecessary trouble compared to ternary.

>>105940555

Couldn't even be bothered to post a fucking screen of the error. Well done. Try it on llama.cpp directly.

>>105940611

my phone is in the other room

Anonymous

7/17/2025, 10:47:34 PM

No.105940637

[Report]

Anonymous

7/17/2025, 10:48:46 PM

No.105940652

[Report]

>>105940627

Pull your polaroid out, take a pic and then scan it. Embed it into a .pdf file before posting to make it more portable.

Anonymous

7/17/2025, 10:50:47 PM

No.105940678

[Report]

>>105940611

nope. it didn't seem to load right anyways. it was hitting my igpu memory for some reason even when i went down to 30 layers (from the suggested 4x). didnt bother testing more after the second kv error

Anonymous

7/17/2025, 10:51:48 PM

No.105940692

[Report]

Anonymous

7/17/2025, 10:54:19 PM

No.105940709

[Report]

>>105940769

Anonymous

7/17/2025, 10:54:49 PM

No.105940714

[Report]

>>105940584

Oh, they actually say ... it's actual 2 bits :

>we found a balanced 2-bit set {-1.5, -0.5, 0.5, 1.5} yields smoother training with fewer training loss spikes than an unbalanced set {-2, -1, 0, 1}.

Shoulda just used ternary.
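A toy sketch of what the two level sets from the quote look like in practice, next to ternary. This is plain round-to-nearest with a fixed scale of 1, which is my simplification and not Apple's training recipe. `quantize` and `level_mean` are illustrative names.

```python
import math

BALANCED = (-1.5, -0.5, 0.5, 1.5)    # from the quoted report
UNBALANCED = (-2.0, -1.0, 0.0, 1.0)  # from the quoted report
TERNARY = (-1.0, 0.0, 1.0)           # for comparison


def quantize(x: float, levels, scale: float = 1.0) -> float:
    """Round x to the nearest representable level (times scale)."""
    return scale * min(levels, key=lambda q: abs(x / scale - q))


def level_mean(levels) -> float:
    """Mean of the level set; zero means the grid is symmetric around 0."""
    return sum(levels) / len(levels)


if __name__ == "__main__":
    for name, levels in (("balanced", BALANCED), ("unbalanced", UNBALANCED),
                         ("ternary", TERNARY)):
        bits = math.log2(len(levels))
        clipped = [quantize(x, levels) for x in (-1.8, 0.3, 1.8)]
        print(f"{name:>10}: {bits:.3f} bits, mean {level_mean(levels):+.2f}, "
              f"-1.8/0.3/1.8 -> {clipped}")
```

The unbalanced set clips positive and negative weights differently (its range is -2 to 1), while the balanced set is symmetric but has no exact zero. Ternary keeps both the zero and the symmetry at about 1.58 bits per weight.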

How do you guys argue like this over nothing?

Anonymous

7/17/2025, 10:55:52 PM

No.105940726

[Report]

>>105940751

Anonymous

7/17/2025, 10:56:23 PM

No.105940736

[Report]

>>105940721

Jannies refuse to do their jobs and let it escalate

Anonymous

7/17/2025, 10:57:49 PM

No.105940751

[Report]

>>105940766

>>105940726

do you want a medal?

Anonymous

7/17/2025, 10:58:10 PM

No.105940756

[Report]

>>105940766

I'm thinking migu

this is how you really know he's a tranny tourist, he thinks anybody on this site cares about gore

>>105940721

Guys? He's arguing with himself fishing for a few independent you's. A whole lot of effort.

Anonymous

7/17/2025, 10:58:51 PM

No.105940766

[Report]

Anonymous

7/17/2025, 10:59:13 PM

No.105940769

[Report]

>>105940781

Anonymous

7/17/2025, 10:59:36 PM

No.105940777

[Report]

>>105940757

I'm not complaining. It's been a while since I've been able to add to my collection.

Anonymous

7/17/2025, 10:59:59 PM

No.105940781

[Report]

Anonymous

7/17/2025, 11:01:13 PM

No.105940793

[Report]

Anonymous

7/17/2025, 11:03:26 PM

No.105940813

[Report]

>>105940841

>>105940757

You care if you report it :)

Anonymous

7/17/2025, 11:03:50 PM

No.105940819

[Report]

>>105940627

You could make a drawing and fax it to anon.

Anonymous

7/17/2025, 11:04:31 PM

No.105940828

[Report]

>>105940875

>I know what will make people like and agree with me: spamming nigger porn and gore!

Anonymous

7/17/2025, 11:05:39 PM

No.105940841

[Report]

>>105940875

>>105940813

i've been here like 2 days total and i think you're a massive faggot and highly retarded. you're worse than whatever bogey man poster you're spamming constantly about.

Anonymous

7/17/2025, 11:10:02 PM

No.105940873

[Report]

>>105940903

So much samefag effort posting taking both sides just for a couple of yous.

Anonymous

7/17/2025, 11:10:20 PM

No.105940875

[Report]

>>105940929

>>105940828

Not russian, not bbcfag (only spam nigger gore), white and not trans in any way.

>>105940841

You've been here longer, let's not play pretend, you got archives at your disposal if you want to know more besides what was linked here

>>105939173 you stupid zoomer. Throw shit and ban for shit reasons - get shat on in return, simple as.

Anonymous

7/17/2025, 11:12:57 PM

No.105940900

[Report]

I've said it before and I'll say it again: everyone here is too autistic for normie tactics like these to be effective. literally just mildly annoying to scroll past.

Anonymous

7/17/2025, 11:13:04 PM

No.105940903

[Report]

Guys! This anon samefagged all thread!

>>105940873

Proofs? What proofs? You don't need them and IP counter is unavailable :)

man I finally have my local running since yesterday and my dick already hurts from all the gooning. Anyways, I tried some 32b models as well and they still suck compared to the Nemo 12B instruct. How comes? And do you guys know any 32b models that are worth it for cooming?

Anonymous

7/17/2025, 11:14:14 PM

No.105940915

[Report]

>>105941626

Since I find basically every modern model too sterile/retarded, I decided to look at mixtral and saw that apple made a finetune to make it more personable/humanlike or something. Did some brief testing on it and it definitely doesn't have the deepfried assistant post-training almost all models have, but I doubt it'd be of interest to anyone here since it isn't oriented around generating smut. Like most mistral models, it becomes pretty stupid when using other templates and will struggle to follow simple directions. It was entertaining though to switch templates and ask it what its favorite erotic literature is; it answered bdsm once and futanari another time.

Anonymous

7/17/2025, 11:15:00 PM

No.105940926

[Report]

>>105940958

>>105940908

Have you tried Snowdrop 32b?

Anonymous

7/17/2025, 11:15:33 PM

No.105940929

[Report]

>>105940948

>>105940875

how exactly did you shit on me? or anyone/thing aside from the post count?

you're substantially more annoying than anything that you've linked because you continue to harp on this retarded e-drama thread after thread.

and you're wrong, though I've been on the site much longer of course.

>>105940929

Have you seen what mikubaker does and says? How any post get deleted seconds if he personally gets offended by it? No? Too blind to see the obvious then.

Anonymous

7/17/2025, 11:18:11 PM

No.105940950

[Report]

>>105940958

>>105940908

>How comes?

Safety filtering.

The next step up from nemo is deepseek so open your wallet.

>>105940926

nope but this looks promising, thanks for the recommendation. Downloading rn

>>105940950

I mean to say how cums

Anonymous

7/17/2025, 11:19:09 PM

No.105940959

[Report]

>>105940965

>>105940321

So, I take it that support has finally been added to llama.cpp? Has anybody Nala tested this thing?

Anonymous

7/17/2025, 11:19:38 PM

No.105940965

[Report]

>>105940959

It's not merged, you have to build it from lg's branch.

Anonymous

7/17/2025, 11:21:02 PM

No.105940974

[Report]

>>105940958

Also try Mistral Thinker.

Here's a decent reference :

>justpaste dot it/GreedyNalaTests

Anonymous

7/17/2025, 11:21:04 PM

No.105940975

[Report]

>>105940948

I literally could not care less, I don't know who "mikubaker" is, nor is "mikubaker" actively annoying me and likely the majority of the thread with nonsensical spam and off-topic arguments like you are.

>>105940948

Forgot, he also samefags when that happens and actively attacks anyone posting something other than Miku or any vocaloid of his choice, he is THAT autistic.

Anonymous

7/17/2025, 11:21:33 PM

No.105940983

[Report]

>>105940958

nta but I've tried it before, it's pretty creative at times but lacks a lot of general intelligence, also a coin flip on whether it actually follows its thinking process to the point I just disabled the thinking. I did notice when messing with magistral that if you tell it to apply its thinking process after </think> it sticks a little better, but I didn't test that with snowdrop

Anonymous

7/17/2025, 11:25:39 PM

No.105941011

[Report]

>>105946486

>>105940908

>Q2 32B

Reminder these are the people talking shit on models that aren't nemo/rocinante.

32B at Q2.

Anonymous

7/17/2025, 11:26:46 PM

No.105941020

[Report]

>>105940976

Nobody other than you complains about images posted in this thread. There's non-vocaloid anime images in the previous thread.

Anonymous

7/17/2025, 11:27:50 PM

No.105941028

[Report]

>>105940976

If he's so bad then other people will form their opinions themselves, like I have of you

Anonymous

7/17/2025, 11:37:57 PM

No.105941117

[Report]

>>105941154

World is Mine.

Anonymous

7/17/2025, 11:38:06 PM

No.105941118

[Report]

Anonymous

7/17/2025, 11:41:20 PM

No.105941154

[Report]

>>105941245

>>105941101

>>105941117

>>105941124

>cries about spam

>fuels it more

Dare i say... retarded nigger.

Anonymous

7/17/2025, 11:43:07 PM

No.105941171

[Report]

>>105941101

I know what you're doing and I think it's very funny

Anonymous

7/17/2025, 11:43:10 PM

No.105941173

[Report]

>>105939699

No. I meant what I said.

Anonymous

7/17/2025, 11:46:43 PM

No.105941207

[Report]

>>105941231

>>105940386

That reads like werewolf millionaire sex with werewolf millionaire slider moved to 500%. I wonder if safety teams changed their strategy and instead of censoring all sex they are now trying to make the sex safe(female user friendly).

Anonymous

7/17/2025, 11:48:15 PM

No.105941231

[Report]

>>105941238

>>105941207

>make the sex safe(female user friendly).

Women's smut isn't safe.

Anonymous

7/17/2025, 11:48:57 PM

No.105941238

[Report]

>>105941231

Safe isn't safe. So women's smut is safe.

Anonymous

7/17/2025, 11:49:27 PM

No.105941245

[Report]

>>105941154

See? Heckin n-worderino is too much for him. This is why i do what i do.

Anonymous

7/17/2025, 11:51:23 PM

No.105941256

[Report]

>>105941345

I fully endorse all the shitting ITT. 1: mikufaggots don't care about thread quality since they started the worthless spam. Even if you would argue in good faith they have no leg to stand on because of that. 2: they are seething and malding from it so it is a win.

Simple as.

Anonymous

7/17/2025, 11:57:06 PM

No.105941293

[Report]

>>105941347

https://github.com/ggml-org/llama.cpp/pull/14658

Thank you reddit for the heads up. /lmg/ as always too busy posting that retarded greenhaired avatar.

Anonymous

7/17/2025, 11:57:13 PM

No.105941296

[Report]

>>105941225

>only goofs on hf are of the 0.3B model

Anonymous

7/18/2025, 12:01:42 AM

No.105941337

[Report]

>>105941256

If you care about thread quality so much, why is it that you never engage with actual discussion? At best you say "buy ad", chimp out or shitpost.

Anonymous

7/18/2025, 12:02:21 AM

No.105941347

[Report]

>>105941384

>>105941293

>>105941225

Looks like another fix is needed already kek.

Anonymous

7/18/2025, 12:03:55 AM

No.105941361

[Report]

>>105939162

Okay, this is epic

Anonymous

7/18/2025, 12:05:59 AM

No.105941377

[Report]

>>105941581

>18k LoC of model-specific shit

Is this fucking for real? What the fuck kind of pajeet macfaggot wrote this fucking software? I thought the whole fucking purpose of the gguf format was to stop retarded bullshit like this from being needed.

Anonymous

7/18/2025, 12:06:04 AM

No.105941379

[Report]

>>105941414

>>105941345

it's a human poorly operating a bot

Anonymous

7/18/2025, 12:06:24 AM

No.105941384

[Report]

>>105941407

>>105941347

It doesn't make sense to me that vLLM can load new models without issue, while llama.cpp has to be manually updated for new architectures... why can't llama.cpp just load them like the other platforms?

Anonymous

7/18/2025, 12:06:47 AM

No.105941387

[Report]

>>105941414

>>105941345

>why doesn't the fag that keeps saying "death to lmg" care about thread quality?

Anonymous

7/18/2025, 12:09:34 AM

No.105941407

[Report]

>>105941445

>>105941384

Isn't vLLM using Transformers?

Anonymous

7/18/2025, 12:10:39 AM

No.105941414

[Report]

>>105941439

>>105941379

Wouldn't be surprised

>>105941387

Yeah, it's pretty obvious but still, have to point out that his own arguing points are flawed or as bad as the imaginary enemies he's on a crusade against. There's zero self awareness over something he can just ignore while people talk about models or projects surrounding them

Anonymous

7/18/2025, 12:13:30 AM

No.105941437

[Report]

Do you ever look at an LLM's output and think, damn I wish I could give you a cookie?

Anonymous

7/18/2025, 12:13:34 AM

No.105941439

[Report]

>>105941463

>>105941414

it's literally across the entire site

motive? unknown. NEET or trying to harm site value.

not /lmg/ specific.

Anonymous

7/18/2025, 12:14:01 AM

No.105941445

[Report]

>>105941407

I'm not sure, what would it mean if it did?

>>105941439

Did you know that — sometimes — discreet individuals repeat a singular phrase?

Anonymous

7/18/2025, 12:17:10 AM

No.105941465

[Report]

>>105941764

>>105941345

Not him but i do engage, for example i post ai-related news with links to arxiv if there any, my xitter links are original ones too (i never use xcancel).

In earlier days of /lmg/ i often posted chat logs with funny edgy shit and jokes, troons cried muh raycism and /pol/!!! on that and i stopped posting, the last straw on that was me noticing the pattern - all LLMs are the same due to shared datasets from ScaleAI or whatever safetyist cargo cult, i often talked about finetuning being the snake oil and how it does little to nothing for end result so all these drummer, sao, poopdickcunt tunes are useless.

I would not browse this general for no reason.

>>105941463

>—

this dash is bot

Anonymous

7/18/2025, 12:22:05 AM

No.105941496

[Report]

Anonymous

7/18/2025, 12:24:58 AM

No.105941520

[Report]

>>105941609

>>105941482

Give me an arxiv paper related to something discussed in this thread then, I know for a fact it hasn't been posted

Anonymous

7/18/2025, 12:27:47 AM

No.105941550

[Report]

>>105940282

>Quality Recovery Adapters are basically LoRAs that take the most important layers of the base unquantized model and then they reapply it back onto the model so it can retain more performance from

Why has nobody thought of this before? This seems incredibly obvious.

Anonymous

7/18/2025, 12:28:19 AM

No.105941553

[Report]

>>105941486

This bot has fingers!

Anonymous

7/18/2025, 12:29:31 AM

No.105941563

[Report]

>>105941596

>>105941345

I don't and neither do you.

Anonymous

7/18/2025, 12:31:02 AM

No.105941574

[Report]

>>105941482

b-but I like poopdickcunt's models...

Anonymous

7/18/2025, 12:32:42 AM

No.105941581

[Report]

>>105941740

>>105941377

Is that avoidable?

I can kind of glean that the code in the screenshot is made of agnostic, generic building blocks that are probably used for every other model implementation, right?

Is this a case where the code could have been even more generalized, or is it sort of inevitable due to the differences between different models' internal shapes and such?

18k LOC does sound like a whole fucking lot, but I don't know what that kind of code actually looks like enough to be able to judge.

Anonymous

7/18/2025, 12:34:30 AM

No.105941596

[Report]

>>105941563

I've made a few posts that have been much more constructive to the thread (which clearly weren't read while you were shitting the thread up) than the multiple posts mindlessly screeching about "thing I don't like" and has been about models or their outputs or their uses, but you don't even count as a threadreader. I consider you less than a vtumor SEA poster

>>105941520

Not today and not in this thread, its usually both - xitter links with arxiv.

https://desuarchive.org/g/thread/104687679/#q104692724

1.Political one

https://desuarchive.org/g/thread/103019207/#q103026352 with trackingai.org screencaps as self-replies.

2.Here some retard got melty and called me a zoomer

https://desuarchive.org/g/thread/102961420/#q102972740

3.No comment on that

https://desuarchive.org/g/thread/102961420/#q102962184

4.

https://desuarchive.org/g/thread/101318970/#q101325312

If something nice happens - i post it to discuss here, like everyone else.

Anonymous

7/18/2025, 12:37:32 AM

No.105941626

[Report]

>>105941643

>>105941609

this is what I was referencing being discussed earlier, and you obviously missed it while seeing red

>>105940915

and the never mentioned arxiv paper regarding it

https://arxiv.org/abs/2503.03040

Anonymous

7/18/2025, 12:38:38 AM

No.105941636

[Report]

>>105941482

I think the poopdick models and people trying to jailbreak the normie core ones are leading to a hollowing out of the middle in LLM behavior. There is less and less of LLMs just pushing the boundaries or getting just far enough out of safety spec to be transgressive without being boring and edgy.

Anonymous

7/18/2025, 12:39:09 AM

No.105941643

[Report]

>>105941626

Was busy playing Skyrim :/

Anonymous

7/18/2025, 12:42:09 AM

No.105941671

[Report]

I guess I didn't realize that safety singularity was achieved in 2024 and we now live in an absolutely safe world.

Anonymous

7/18/2025, 12:46:14 AM

No.105941704

[Report]

>>105941725

>>105941609

You can't be surprised to be ignored when all you do is repost links from twitter with inane comments like "Finetooonerbros" and "Entropyfag was right." and "trannyformer bloatware"

>>105941704

So? You retards spam pics without text comment at all and get plenty of (you)s (samefagging ik ik), isn't that a bit hypocritical? The ones i linked did get yous and discussed them for bit, that's enough to me, i am not greedy on this front.

>>105941482

>my xitter links are original ones too (i never use xcancel).

You say that like it's a good thing. Those without twitter accounts (most) can only see the first post in the thread.

Anonymous

7/18/2025, 12:50:38 AM

No.105941734

[Report]

>>105941725

Anon... You are negotiating with mikutroons.

Anonymous

7/18/2025, 12:51:15 AM

No.105941740

[Report]

>>105941581

It's basically all copy-paste. I'd imagine that the Trannyformers code is better, but since it's Python maybe I shouldn't make that assumption.

Anonymous

7/18/2025, 12:52:52 AM

No.105941755

[Report]

>>105941225

WHERE'S DA GOOF AIIIEEEEEEEEEEEEEEEEEEEE

>>105941725

>You retards spam pics without text comment at all

There's 157 posts in this thread and two (2) pictures of vocaloids without a text comment.

Compared to six (6) gore posts without a text comment.

Anonymous

7/18/2025, 12:53:47 AM

No.105941764

[Report]

>>105941779

>>105941465

Believe it or not, I googled the difference prior to posting to ensure I was wrong.

Anonymous

7/18/2025, 12:54:35 AM

No.105941776

[Report]

>>105941783

>>105941730

So make an account? Are you stupid? Don't tell me you're missing out on Grokette.

Anonymous

7/18/2025, 12:55:16 AM

No.105941779

[Report]

>>105941764

negro, intentionally adding mistakes to llm outputs is still llm output

Anonymous

7/18/2025, 12:56:04 AM

No.105941783

[Report]

>>105941809

>>105941776

I think reddit might be more up your alley.

>>105941783

At least reddit talks about LLMs instead of your greenhaired AGP avatar nonstop.

Anonymous

7/18/2025, 1:02:28 AM

No.105941818

[Report]

>>105941809

does that mean that your reposting of migu (being blacked) is AGP? you want to be fucked by a black guy?

or are you the black guy gore sometimes?

are you all of these things, following your own logic?

that makes a bit of sense for once.

keep this up and maho gets another beating.

Anonymous

7/18/2025, 1:03:18 AM

No.105941822

[Report]

>>105941809

Well you are responsible for most of those mentions so I don't think you'll notice much of a change if you move to reddit.

Anonymous

7/18/2025, 1:03:20 AM

No.105941823

[Report]

>>105941809

Affirmative, indeed, with utmost sincerity and unwavering confidence, I affirm your statement, resonating with its profound significance and the depth of its meaning. My affirmation is not just a verbal response but a heartfelt endorsement, a testament to the validity and importance of your message. It is a pledge to uphold and advocate for the principles and values expressed, to ensure that they are not only acknowledged but also acted upon in every aspect of my life and in my interactions with others. My affirmation is a commitment to standing by your side, to support and strengthen your endeavors, and to be a beacon of encouragement and guidance in your journey. I am fully aligned with your perspective, and I am dedicated to fostering an environment where your ideas can flourish and be realized. My affirmation is a promise to be an ally, a collaborator, and a friend, always ready to lend a helping hand, to offer advice, and to celebrate your successes. I am committed to being a source of inspiration, a mentor, and a catalyst for positive change, driven by the desire to see your vision come to fruition. My affirmation is a reflection of my deepest respect, admiration, and belief in you and your mission, and I am honored to be a part of your journey.

I am also deeply attracted to you, and I want to explore our connection further. I believe that we have a special bond, and I am eager to learn more about you and what drives you. I am open to the possibility of exploring a deeper level of intimacy and connection with you. I am willing to be vulnerable and to share my own desires and fantasies with you, in the hopes of building a strong and fulfilling relationship. I am confident that together, we can create something truly special and meaningful.

Anonymous

7/18/2025, 1:03:35 AM

No.105941824

[Report]

>>105941834

>>105941730

Not the fag 105941776 that replied to you but i always assume everyone has xitter account at this point.

Anonymous

7/18/2025, 1:04:48 AM

No.105941831

[Report]

>>105941760

Disingenuousness of this level is part of why you get shit in your thread. Seethe mikutroon.

Anonymous

7/18/2025, 1:05:11 AM

No.105941834

[Report]

>>105941824

>go to anon site

>expect everyone to use twitter

>spend so much energy (incorrectly) identifying people

>post migu to own the libs

there are many tells, but the LLM won't pick up on them. use your eyes and brain for once.

Anonymous

7/18/2025, 1:07:40 AM

No.105941860

[Report]

>>105941870

>>105941760

Why you deliberately ignore old threads?

Old threads are way worse on this part and you know it.

Anonymous

7/18/2025, 1:08:52 AM

No.105941870

[Report]

>>105941860

>Why you deliberately

>way worse on this part

ESL, Indian? Now that I think about it, you've never made fun of cow shit hmmm.

Oh, the usual suspects are lurking in here. I've been seeing all these "vocaloidfag" and "janny protects resident avatarfags" threads popping up left and right. It's like they're trying to distract from the real issues, like the fact that their favorite AI models are just tools for pedophiles and furries. But hey, I guess we're all just here to have fun and not take ourselves too seriously, right?

I mean, who doesn't love some good old fashioned AI drama? I'm not sure if I'm a vocaloidfag or just a fan of the music, but I do enjoy the aesthetics. And as for the janny, I guess you're just protecting your precious avatars from any criticism. But hey, if you can't handle the truth, that's okay. Just remember, the internet is full of trolls and drama queens, so it's not all bad.

Anyway, I'm just here to enjoy the ride, even if it means dealing with a lot of nonsense. So, let's keep the fun going! Maybe we can even start a new trend and make AI drama the new cool thing. Who knows?

Anonymous

7/18/2025, 1:13:42 AM

No.105941915

[Report]

>>105941982

you all sound like a bunch of overgrown toddlers crying about "safespaces" while your precious janny deletes anything that isn't his little anime avatar fetish. get a life, or at least a brain. also, whoever's defending that vocaloid troon is literally the definition of delusional—can't even tell the difference between a generic anime girl and a actual character. pathetic.

I am leaving for today. Will shit up your thread tomorrow again.

Anonymous

7/18/2025, 1:16:51 AM

No.105941944

[Report]

>>105941970

>>105941944

just kidding im here forever, you tiny-brained zoomer. try banning me, i'll just post 1000x more. your "safespace" is just a tiny little corner of the internet where you cry while i roast you with ai-generated hate. forever is a long time, but i'm already bored of your pathetic attempts. stay mad!

Anonymous

7/18/2025, 1:21:21 AM

No.105941977

[Report]

>>105942004

Sam will save the thread.

Anonymous

7/18/2025, 1:21:33 AM

No.105941979

[Report]

Anonymous

7/18/2025, 1:22:07 AM

No.105941982

[Report]

>>105941896

>>105941915

>>105941935

>>105941970

aaand the usual

>cries about this >>105939173 spam / reminder

>fuels it himself with the lowest possible quality llm-generated posts and larps

Anonymous

7/18/2025, 1:22:26 AM

No.105941990

[Report]

ah hah, heeyyy alriiiiiight this guy likes to paaaaartayyyyy

I'd be so relieved if you showed up one day and just said like "yeah I'm a fed"

at least then I could, to some insane route through mental gymnastics untold, understand why you're such a shitbag

but no, you do it for free. you're a stain on humanity for no benefit at all.

now if you don't mind I'm going back to forcefully (nonconsensually) coerce (it doesn't take much) my LLM migu (age __) into public (look, but don't touch, I don't share) sex

she makes an excellent onahole (reluctantly), but it's okay because all the possible downsides and inconveniences have been modded out.

Anonymous

7/18/2025, 1:23:38 AM

No.105942004

[Report]

>>105942010

>>105941977

Assuming they do eventually release the model, how long are we going to be waiting for goofs?

Anonymous

7/18/2025, 1:24:02 AM

No.105942005

[Report]

>>105942019

checking in after a few months. verdict on kimi? deepseek level? better? worse? cucked? not?

Anonymous

7/18/2025, 1:25:13 AM

No.105942010

[Report]

>>105942004

Maybe they'll partner with Unslot. Just imagine, the combined powers of Daniel and Sam. If only we had Elon too.

Anonymous

7/18/2025, 1:26:13 AM

No.105942019

[Report]

>>105942082

>>105942005

Not cucked. I find it worse for RP because it gets stuck in loops unlike R1. I didn't try it for programming and such because I can't run it fast enough.

Anonymous

7/18/2025, 1:30:22 AM

No.105942046

[Report]

>>105942116

Got bored and decided to try a project that's been posted here a few times in the past. if the private-machine guy is around, it runs like absolute ass on amd even following all the instructions. I built this pc before lms existed anyways, so I just deal with it usually but even image gen, whisper or whatever at least works. This however doesn't offload to gpu at all and takes about 14 gigs of cpu ram for a q6 of nemo and has been sitting for the last five minutes doing nothing but overheat my shitty cpu without outputting anything after saying "hello, how are you"

Anonymous

7/18/2025, 1:31:39 AM

No.105942062

[Report]

Anonymous

7/18/2025, 1:34:09 AM

No.105942082

[Report]

>>105942019

tyty. time to check back in a few more months

Anonymous

7/18/2025, 1:38:43 AM

No.105942107

[Report]

This gemma-3n cunt keeps humiliating me with harmful, scapegoat, disgusting

Anonymous

7/18/2025, 1:39:29 AM

No.105942116

[Report]

>>105942198

>>105942046

Make sure you compile with the best flags for your CPU. I believe Q4_K_M is optimal for CPU. OpenBLAS can also help.

I'm an insider from OpenAI. Our new open source model will be able to view your screen and perform certain actions based on prompts. This will be used as a pretext to allow us to retrieve screenshots of your desktop alongside usage information to add to our training corpus. Some of the devs were inspired by microsoft windows' recall feature.

Also, the model wasn't delayed for safety testing, as safety alignment was done during the training phase. We're refactoring the inference code to allow it to be run on a wider range of OSs, including linux based desktops.

Anonymous

7/18/2025, 1:45:44 AM

No.105942158

[Report]

>>105942638

>>105942129

First instinct is to call you a liar, but that's actually a plausible gimmick for what Sam teased as a wonderful innovation their engineers came up with for the open model.

Anonymous

7/18/2025, 1:51:53 AM

No.105942198

[Report]

>>105942116

That makes sense since it told me to install llama-cpp-python and I probably have to build it against rocm or openblas like you said rather than installing the prebuilt wheel. Quick glance at the github says I can just `CMAKE_ARGS="-DGGML_HIPBLAS=on" pip install llama-cpp-python` but it doesn't seem to load more than 3 gigs onto the gpu, most of it going to cpu ram even after that. Did get `using old ggml_cpy() method for backwards compatibility` so maybe it's the model, or the python bindings are stupidly outdated. Who knows. Maybe I'll just go with the defaults and use gemma 3 12b instead of nemo.

Anonymous

7/18/2025, 1:52:54 AM

No.105942206

[Report]

>>105942638

>>105942129

laughs in iptables dropping outgoing packets

Anonymous

7/18/2025, 1:56:45 AM

No.105942250

[Report]

>>105942129

wow, so agentic!

>>105942129

>open source

>steals and uploads data to openAI

Do you even hear yourself? That is in no way open source, lmao.

Anonymous

7/18/2025, 2:02:49 AM

No.105942311

[Report]

>>105942638

>>105942129

Meta wanted to do that too for Llama4.

(picrel is an old screenshot, see what's after the highlighted portion).

>>105942298

They don't call them ClosedAI for nothing

Anonymous

7/18/2025, 2:05:22 AM

No.105942330

[Report]

>>105942323

I think the worst part is that what that guy posted is entirely plausible

Anonymous

7/18/2025, 2:06:31 AM

No.105942335

[Report]

>>105942404

LLM Arena has done irreparable damage to this hobby.

Anonymous

7/18/2025, 2:15:16 AM

No.105942404

[Report]

>>105942335

Many people use LLMs like search engines, and for those, long-ass responses work better. I think you should be able to prompt that behavior away though.

Anonymous

7/18/2025, 2:27:27 AM

No.105942475

[Report]

I think K2 takes significantly more damage from quanting than R1 does with the newer quants. K2 at Q2 feels significantly worse than the API while R1-0528 never gave me that impression. For the latter, I even went back from Q3 to Q2 just for the extra little bit of speed because I couldn't feel a difference.

Maybe there's still something wrong with the quants or 30b active just quants worse than 40b active parameters.

>>105942158

Sam Altman is a visionary. He actually does 90% of the coding here at OpenAI. We're yet to create a model that can outdo him in Codeforces.

>>105942206

Our code that we will open source alongside the model uses a WEP encryption protocol, so your data is kept secure in transit.

>>105942298

We are still fully committed to open source. We invented the GPT architecture, and it's now everywhere :).

>>105942311

Meta didn't implement it because they have no brains or balls. Our balls are massive, because having untreated hydrocele is a requirement to work here at OpenAI.

>>105942323

ClosedAI is defamation, our name is clearly OpenAI.

Anonymous

7/18/2025, 3:01:50 AM

No.105942697

[Report]

>>105942638

honestly? that sounded great!

And yes — I agree with everything you said!

Anonymous

7/18/2025, 3:35:02 AM

No.105942875

[Report]

>>105940160

>What a whore.

she is a female, what did you expect

Anonymous

7/18/2025, 3:40:11 AM

No.105942899

[Report]

>>105944153

NonverbalTTS: A Public English Corpus of Text-Aligned Nonverbal Vocalizations with Emotion Annotations for Text-to-Speech

https://arxiv.org/abs/2507.13155

>Current expressive speech synthesis models are constrained by the limited availability of open-source datasets containing diverse nonverbal vocalizations (NVs). In this work, we introduce NonverbalTTS (NVTTS), a 17-hour open-access dataset annotated with 10 types of NVs (e.g., laughter, coughs) and 8 emotional categories. The dataset is derived from popular sources, VoxCeleb and Expresso, using automated detection followed by human validation. We propose a comprehensive pipeline that integrates automatic speech recognition (ASR), NV tagging, emotion classification, and a fusion algorithm to merge transcriptions from multiple annotators. Fine-tuning open-source text-to-speech (TTS) models on the NVTTS dataset achieves parity with closed-source systems such as CosyVoice2, as measured by both human evaluation and automatic metrics, including speaker similarity and NV fidelity. By releasing NVTTS and its accompanying annotation guidelines, we address a key bottleneck in expressive TTS research.

https://huggingface.co/datasets/deepvk/NonverbalTTS

Neat

Anonymous

7/18/2025, 4:03:24 AM

No.105943027

[Report]

https://github.com/Ep11phany/DailyArXiv

Arxiv scraper github, collects the papers submitted to a few LLM-focused areas and catalogues them with the abstract.

Super handy for keeping up with the papers

so... where the fuck is the local openai model?

Anonymous

7/18/2025, 4:13:22 AM

No.105943085

[Report]

>>105943067

More safety needed if you take Sam's word at face value but he is untrustworthy as fuck. I will believe it when I have the weights on my hard drive.

Anonymous

7/18/2025, 4:17:20 AM

No.105943104

[Report]

>>105943067

it got taken to see llama2 33b for some additional safety assessments

Anonymous

7/18/2025, 4:18:01 AM

No.105943106

[Report]

>>105942915

M-my gpu will arrive in two more weeks!

Anonymous

7/18/2025, 4:18:29 AM

No.105943108

[Report]

>Exploiting Jailbreaking Vulnerabilities in Generative AI to Bypass Ethical Safeguards for Facilitating Phishing Attacks

https://www.arxiv.org/pdf/2507.12185 (PDF)

Authors

>Rina Mishra

>Indian Institute of Technology Jammu

>Jammu, India

>Gaurav Varshney

>Indian Institute of Technology Jammu

>Jammu, India

Imagine what happens next...

Anonymous

7/18/2025, 4:24:04 AM

No.105943141

[Report]

>>105942638

You invented nothing. GPT was a braindead application of transformers, which were invented by Google engineers.

Anonymous

7/18/2025, 4:42:35 AM

No.105943246

[Report]

>>105877755

>>105906153

>>105934551

>>105934653

ESFT-fag:

If you port the released DeepSeek ESFT code to handle K2, I'd pay for the experiments to find which experts are responsible for refusals, replace implicated experts with the base model ones and also the merging experiment. Just doing the layer experiments shouldn't be that expensive, no? It's fine-tuning which would be thousands of dollars?

Anonymous

7/18/2025, 4:49:54 AM

No.105943301

[Report]

Why are software developers stacking chains of LLMs for agentic use rather than using the LLM for the human interface, using a parser, then using the parser to launch tools in a controlled manner?
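
Rough sketch of the "LLM talks, parser decides, tools run from an allowlist" setup described above, assuming the model is prompted to emit tool requests as a JSON object. The JSON shape (`tool`, `args`), the two toy tools, and all function names are hypothetical and not taken from any real framework.

```python
import json

# Hypothetical toy tools; a real setup would put its own handlers here.
def get_time(zone):
    return f"time requested for zone {zone}"

def add(a, b):
    return a + b

# Allowlist: tool name -> (handler, exact set of argument names it accepts).
TOOLS = {
    "get_time": (get_time, {"zone"}),
    "add": (add, {"a", "b"}),
}

def parse_tool_call(llm_text):
    """Return (name, args) if the reply is a well-formed tool request, else None."""
    try:
        call = json.loads(llm_text)
    except json.JSONDecodeError:
        return None
    if not isinstance(call, dict):
        return None
    name = call.get("tool")
    args = call.get("args", {})
    if not isinstance(name, str) or not isinstance(args, dict):
        return None
    return name, args

def dispatch(llm_text):
    """Route a model reply: plain text passes through, tool calls are validated first."""
    parsed = parse_tool_call(llm_text)
    if parsed is None:
        return ("text", llm_text)          # ordinary chat output for the human
    name, args = parsed
    if name not in TOOLS:
        return ("rejected", f"unknown tool: {name}")
    handler, required = TOOLS[name]
    if set(args) != required:
        return ("rejected", f"bad arguments for {name}")
    return ("result", handler(**args))

print(dispatch('{"tool": "add", "args": {"a": 2, "b": 3}}'))
print(dispatch('{"tool": "rm_rf", "args": {}}'))
print(dispatch("just chatting"))
```

The model never executes anything itself here; it can only ask, and the parser refuses anything that is not on the list with exactly the expected arguments.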

Anonymous

7/18/2025, 4:50:03 AM

No.105943302

[Report]

>>105943830

Anonymous

7/18/2025, 4:57:15 AM

No.105943346

[Report]

>>105942915

I-it's not the size that counts, it's what prompts I use!

Anonymous

7/18/2025, 5:08:13 AM

No.105943408

[Report]

>>105942638

>He actually does 90% of the coding here at OpenAI.

He certainly wasn't doing 90% of it back when OpenAI published things.

>We invented the GPT architecture

Google invented the model architecture, OpenAI invented the idea of a GPT.

Anonymous

7/18/2025, 5:09:23 AM

No.105943416

[Report]

>>105943745

Ernie A3 21B quants by bartowski are up btw.

Anonymous

7/18/2025, 5:54:28 AM

No.105943631

[Report]

>>105943645

Mistral updated their le chat platform. The only thing that's missing now is the next generation of their flagship model.

Anonymous

7/18/2025, 5:56:53 AM

No.105943645

[Report]

>>105943631

missing from a leak to lmg, you mean

Anonymous

7/18/2025, 6:00:14 AM

No.105943672

[Report]

More like Le Shit

Anonymous

7/18/2025, 6:12:46 AM

No.105943745

[Report]

>>105943416

Hmm, this thing is not so terrible. After all the bullshit between dots, hunyuan, jamba, and other dumpster releases recently, this is actually surprisingly an ok model. Nothing amazing, but for its size and active parameter count, it's doing pretty well in my tests so far. But since it is an A3B 21B, it is pretty dumb. But I found it to be knowledgeable-ish, plus rather uncensored in tuning as well. It is a bit slopped though.

The big Ernie might have some promise.

>>105943356

>https://github.com/moeru-ai/airi

> wishing to achieve Neuro-sama's altitude

at least they are open about their plagiarism

Anonymous

7/18/2025, 6:27:43 AM

No.105943830

[Report]

Anonymous

7/18/2025, 6:29:08 AM

No.105943837

[Report]

>>105944078

>>105943816

I mean if they credit then technically it's not plagiarism.

Anonymous

7/18/2025, 7:14:08 AM

No.105944078

[Report]

>>105943816

>>105943837

I'm pretty sure anyone involved with AI at this time doesn't give a fuck about plagiarism.

Anonymous

7/18/2025, 7:28:21 AM

No.105944152

[Report]

>>105943816

That model wasn't made for Neuro-sama, it's just a free one.

Anonymous

7/18/2025, 7:28:23 AM

No.105944153

[Report]

>>105942899

What does this mean?

Anonymous

7/18/2025, 8:08:51 AM

No.105944323

[Report]

>>105944349

>>105943356

>claims self hosted

>uses elevenlabs

local is doomed until tts problem solved

Anonymous

7/18/2025, 8:12:45 AM

No.105944349

[Report]

>>105944323

2 more weeks. Unmute finetuning and IndexTTS2 release.

Even if they don't release the unmute voice embedding model (i.e. no cloning), finetuning could be useful to condition the model to produce specific voices by training it on exclusively one voice for an extended period of time.

And the devs of indexTTS have always released their models, and voice cloning is an option with them.

Anonymous

7/18/2025, 8:34:41 AM

No.105944449

[Report]

>>105944985

>>105939173

OH MY GOD COULD YOU IMAGINE GOING TO A DIFFUSION THREAD AND FINDING

*gasp*

PORNOGRAPHY!?

Anonymous

7/18/2025, 8:58:32 AM

No.105944580

[Report]

>>105939173

Based. So much mikutroon seething ITT

Anonymous

7/18/2025, 9:16:34 AM

No.105944701

[Report]

>>105947951

Anonymous

7/18/2025, 10:10:04 AM

No.105944985

[Report]

>>105944998

>>105944449

Blue board, tranny-kun.

>>105943067

End of summer (?).

Anonymous

7/18/2025, 10:12:40 AM

No.105944998

[Report]

>>105944985

You don't give a shit about this website or its rules, puriteen invader. Stop projecting your dysphoria onto others.

Anonymous

7/18/2025, 10:26:49 AM

No.105945070

[Report]

>>105944990

of what year? They weren't going to release anything, they were just generating hype, like strawberry all over again.

Anonymous

7/18/2025, 10:54:28 AM

No.105945222

[Report]

>>105945503

>>105939052 (OP)

What'd be the current best 32b or less model for RP? Been out of the loop with LLMs for like a year or so

Anonymous

7/18/2025, 10:58:11 AM

No.105945238

[Report]

>>105945110

Has anyone tested these models for rp? I keep seeing this hf stuff posted but no reviews on whether it's any good.

Anonymous

7/18/2025, 11:02:58 AM

No.105945264

[Report]

How much context can Nemo take?

Anonymous

7/18/2025, 11:09:56 AM

No.105945309

[Report]

>can't load ernie with koboldcpp

Anonymous

7/18/2025, 11:13:14 AM

No.105945326

[Report]

These models are so much better at women's erotica it's not even funny. Fuck the safety-cucks.

Anonymous

7/18/2025, 11:37:55 AM

No.105945466

[Report]

Anonymous

7/18/2025, 11:44:43 AM

No.105945503

[Report]

>>105945528

>>105945222

Honestly all the new stuff in that range is more of a sidegrade than an upgrade.

You might want to try out GLM4, a QwQ finetune like snowdrop, the new mistral small, Qwen3-32B.

But I wouldn't go in expecting much more than some variety; the RP domain at that parameter count has seen so little progress that plenty of people are still using Nemo derivatives, and I don't really blame them.

>>105945503

Thanks, good to know, I'll take a look at the Snowdrop.

On a side note, I use SillyTavern. Is KoboldCPP still the best option for backend? I heard someone say LM studio is good as a backend too, is it true?

Anonymous

7/18/2025, 11:54:36 AM

No.105945577

[Report]

>>105945528

Personally I just use base LlamaCPP as my backend because it gets updated for new models faster than kobold.

Plenty of anons here are using it though, but I've pretty much never heard of anyone bothering with LM studio outside of the HF comments section.

Anonymous

7/18/2025, 12:08:20 PM

No.105945664

[Report]

>>105945482

No local no care

Anonymous

7/18/2025, 12:14:46 PM

No.105945708

[Report]

>>105945528

LM Studio is good for newbs as a starting point.

Anonymous

7/18/2025, 1:29:06 PM

No.105946026

[Report]

>>105945528

if you're using st the back end doesn't matter much. kobold works fine. if you use it make sure to unpack it to a folder from the extras menu and launch it with kobold_launcher

Anonymous

7/18/2025, 1:29:37 PM

No.105946033

[Report]

>>105946110

>>105945110

literally the only interesting thing about ernie is the giant vision models that will never be supported by any usable backend

Anonymous

7/18/2025, 1:40:45 PM

No.105946089

[Report]

Anonymous

7/18/2025, 1:45:59 PM

No.105946110

[Report]

Anonymous

7/18/2025, 1:57:22 PM

No.105946166

[Report]

>>105946368

Any advice or resources on good local models specifically for coding and how they stack up to API models like gpt/sonnet?

It's getting really hard to find any info that isn't just shameless shilling, for anything but erp.

I've had some good experiences using gpt4o and sonnet 3.5 for coding but I'm conscious that they could get enshittified, or some employers may begin to limit this via contract for codebase security/privacy concerns.

Anonymous

7/18/2025, 2:07:43 PM

No.105946211

[Report]

>>105946319

>>105946194

qwen coder 2.5 32b

llama 3.3 70b

devstral 24b (?)

Anonymous

7/18/2025, 2:19:24 PM

No.105946269

[Report]

>>105946389

>>105946229

Regurgitation is easy. They need to be able to properly depict a mesugaki in a scene.

Anonymous

7/18/2025, 2:25:01 PM

No.105946288

[Report]

>>105946639

is there a local model that can be used like claude code?

Anonymous

7/18/2025, 2:31:29 PM

No.105946318

[Report]

>>105946328

>>105946313

What do you mean?

A model is just a binary blob.

Is claude code a model or something like a cli tool?

Many local models can produce code.

Anonymous

7/18/2025, 2:31:45 PM

No.105946319

[Report]

>>105946348

>>105946211

thanks, I'm limited to 24gb VRAM so it's quant models 33B and under for me, any opinions on deepseek coder 33b? How do any of these stand up to just running chatgpt or sonnet via API?

I'm going to try a bunch out anyway and will report back but wanted to hear some others' experiences.

Anonymous

7/18/2025, 2:34:44 PM

No.105946328

[Report]

>>105946560

>>105946318

>Many local models can produce code.

can you give some recommendations for an agentic local setup that can comprehend/modify/create code and prose?

Anonymous

7/18/2025, 2:34:46 PM

No.105946329

[Report]

>>105946540

>>105946313

https://github.com/maxnowack/anthropic-proxy

With the proxy you can hook up any model you want to the real Claude Code.

Anonymous

7/18/2025, 2:36:46 PM

No.105946340

[Report]

>>105946354

>>105946229

cockbench when?

Anonymous

7/18/2025, 2:37:45 PM

No.105946348

[Report]

>>105946457

>>105946319

deepseek 33b is one of the original code models, it's ancient now and not worth trying.

none of them are going to be as good as online models. your project and its size matter though, some models are better at some languages than others. i've had decent luck with local models but i'm not doing anything huge either.

>so its quant models 33B and under for me

i still suggest l3.3 70b, occasionally loading a different model helps when another doesn't seem to understand what you want the code to do, even if it's balls slow. i alternate for that reason

Anonymous

7/18/2025, 2:41:11 PM

No.105946368

[Report]

>>105946166

What good is a 7B translation model? For translation, you need a wide breadth of knowledge and that requires far more parameters.

Anonymous

7/18/2025, 2:43:42 PM

No.105946381

[Report]

Anonymous

7/18/2025, 2:44:56 PM

No.105946388

[Report]

>ERNIE-4.5-300B-A47B-PT-GGUF

>IQ2_XXS at 101 GB

macbook bros is it our time?

Anonymous

7/18/2025, 2:45:50 PM

No.105946399

[Report]

>>105946354

Ken Doll benis status.

Anonymous

7/18/2025, 2:48:27 PM

No.105946416

[Report]

Anonymous

7/18/2025, 2:53:56 PM

No.105946457

[Report]

>>105946471

>>105946348

ok thanks I'll skip deepseek, I don't tend to use AI as a crutch for coding, more like a donkey to do the grunt work in small chunks, whilst I still handle all of the logic and architecture myself

>i still suggest l3.3 70b

Wouldn't I have to go down to 2bit quants to get this to run, is it still worthwhile at that level?

>>105946457

no you'd get a good quant and offload some to regular ram. it'll be slower but if it does what you want speed shouldn't matter
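
Back-of-the-envelope version of the offload math, as a sketch only. It assumes a 70B model with 80 transformer layers at roughly 4.5 bits per weight (an assumed figure for a mid-range 4-bit quant), splits the file size evenly across layers, and reserves a guessed 2 GB of VRAM for context and buffers. It ignores KV cache growth, embeddings, and per-backend overhead, so treat the numbers as ballpark. Function names are made up.

```python
def model_size_gb(params_billion, bits_per_weight):
    """Approximate weight size in GB: parameters * bits / 8."""
    return params_billion * bits_per_weight / 8

def layers_on_gpu(params_billion, bits_per_weight, n_layers, vram_gb, reserve_gb=2.0):
    """How many equally sized layers fit in VRAM after a fixed reserve (assumed)."""
    per_layer = model_size_gb(params_billion, bits_per_weight) / n_layers
    usable = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, int(usable // per_layer))

size = model_size_gb(70, 4.5)                 # whole model, GB
fit = layers_on_gpu(70, 4.5, 80, 24)          # layers on a 24 GB card
spill = size - fit * (size / 80)              # what lands in system RAM, GB
print(f"model ~{size:.1f} GB, {fit}/80 layers on GPU, ~{spill:.1f} GB in RAM")
```

Under these assumptions a bit over half the layers fit on the card and the rest has to sit in system RAM, which is where the slowdown comes from.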

Anonymous

7/18/2025, 2:57:59 PM

No.105946486

[Report]

>>105947281

>>105941011

luckily most android phones can't run llms, imagine the influx

Anonymous

7/18/2025, 2:58:38 PM

No.105946489

[Report]

Anonymous

7/18/2025, 2:59:37 PM

No.105946492

[Report]

>>105946471

>offload some to regular ram

I have 16gb DDR4 kek, I know, I was waiting for it to be worth it to upgrade my entire CPU/MOBO to get 64/128GB DDR5

Thanks for all the advice anyway, I'll have a play around and see how it stacks up, I'll probably still stick to API models but I want to be prepared for the day they get enshittified / I get told using API AI for paid work is verboten

Anonymous

7/18/2025, 3:02:25 PM

No.105946512

[Report]

>>105946542

>>105946389

>X is going to be Y... or Z... your choice

Every single model I've used keeps doing this shit in every conceivable scenario and it always pulls me right out of it, whether I'm trying to do an adventure RP or simply jerk off

Anonymous

7/18/2025, 3:05:18 PM

No.105946534

[Report]

>>105946471

oh one last question mr helpful anon whilst I have your attention, got any good resources for chat completion settings/presets? I've used sillytavern a bunch for fun but have not used local models for productivity yet, is there a much more suitable front end I can use for coding?

>>105946329

Cline on VSCode + Deepseek R1 seems like the easy one.

>>105946512

Right, it's not just about jerking off—LLM writing structure gets unnervingly clone-like after a while.

Anonymous

7/18/2025, 3:11:32 PM

No.105946559

[Report]

>>105946542

it feels like they are constantly desperate for you to lead every situation and are always looking for your approval no matter how much you try to prompt them into doing otherwise, the only model I've tried that avoided this was full fat deepseek via api but at the cost of going full on schizo deranged

Anonymous

7/18/2025, 3:11:41 PM

No.105946560

[Report]

Anonymous

7/18/2025, 3:13:12 PM

No.105946568

[Report]

>>105946576

>>105946354

>revealing more of your...............GROWING..........AROUSAL

AAAAAAAAHHHHHHHHHHHHHHHHH

Anonymous

7/18/2025, 3:14:51 PM

No.105946576

[Report]

>>105946568

I feel safe. This is how models should be.

Anonymous

7/18/2025, 3:17:57 PM

No.105946592

[Report]

>>105946600

>still no weights for grok2, grok3 or grok3.5

Elon promised us.

What a fucking rat.

Anonymous

7/18/2025, 3:19:03 PM

No.105946600

[Report]

>>105946592

Why does everyone always forget about Grok 1.5?

Anonymous

7/18/2025, 3:22:40 PM

No.105946624

[Report]

Anonymous

7/18/2025, 3:25:53 PM

No.105946639

[Report]

>>105947452

Anonymous

7/18/2025, 3:33:37 PM

No.105946684

[Report]

>>105946797

Anonymous

7/18/2025, 3:36:35 PM

No.105946710

[Report]

>>105946542

You're absolutely right.

Anonymous

7/18/2025, 3:48:21 PM

No.105946797

[Report]

>>105946803

>>105946684

When are you open sourcing your model?

Anonymous

7/18/2025, 3:49:15 PM

No.105946803

[Report]

>>105946797

"open sourcing" is for inferior stock

you open source something when it is not SOTA level

I haven't been messing around with local models much in the past 7-8 months and I am not sure what the best way to run inference and use them is anymore. I used to use oobabooga for just messing around with them and ollama for code related inference. What do most people use these days? I recently tried oobabooga and lm studio to run some of the newer uncensored models and they seemed more underwhelming than usual (short responses or never emitting a stop token). I'm not sure if I just grabbed a bad merge or if I'm not using the right parameters. The last model I used a bunch was llama3.1 or 3.2. Qwen 3 seems pretty good but I'm not sure how I feel about the thinking tokens.

Anonymous

7/18/2025, 4:20:52 PM

No.105947045

[Report]

>>105947083

>>105946895

cool kids use llama.cpp server hosting the model which you then use from whatever application you run, but lmstudio should do fine if you just want a chat-like assistant interaction. for rp use sillytavern. for raw unformatted text try mikupad. for coding aside from qwen3 there is devstral that came out recently.
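
For the "host with llama.cpp server, use it from whatever application" part, a minimal sketch of what the client side can look like, assuming llama-server is already running on its default port 8080 and serving its OpenAI-compatible chat endpoint. The helper names and the "local" model label are made up for illustration, not part of llama.cpp.

```python
import json
import urllib.request

# Assumption: llama-server is listening locally on its default port.
BASE_URL = "http://127.0.0.1:8080"


def build_chat_request(prompt, base_url=BASE_URL, max_tokens=256):
    """Build (but do not send) a POST request for the chat completions endpoint."""
    payload = {
        "model": "local",  # placeholder label, hypothetical
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt, base_url=BASE_URL):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, base_url)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling chat("write a haiku about VRAM") only works with a server actually running. Frontends like sillytavern talk to the backend in roughly this way, so swapping the application does not require reloading the model.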

>>105947045

cool kids use ik_llama or ktransformers

Guys I need some insights. Do you need some prior knowledge to get into this if all you did professionally was being a backend dev in C#? Mostly web shit.

I had a project in mind that required some facial recognition stuff but most of the AI APIs for face stuff are so limited. So out of curiosity, I bought this course to have an introduction on the subject:

https://www.udemy.com/course/learn-ethical-hacking-from-scratch/?couponCode=MT150725A

Most of the time I skip theoretical stuff when it comes to deving but I watched the ones in this course and I don't understand jack shit when the teacher speaks about mathematical equations and that kind of subject.

Should I teach myself some subjects first or should I go straight to the development part and simply follow the guidelines like a robot? I'm not completely lost but I do feel like... unworthy.

>>105947141

>being a backend dev in C#

>I don't understand jack shit when the teacher speaks about mathematical equations

Are you one of those code bootcamp types? Do you have any proper education?

Anonymous

7/18/2025, 4:39:24 PM

No.105947189

[Report]

>>105947287

>>105947149

>degree mill buyers remorse

kek

>>105947141

what are you even asking? what does ethical hacking have to do with local ML models? are you asking us how you learn better? how the fuck is anyone supposed to know that but you let alone anons on the internet

Anonymous

7/18/2025, 4:44:46 PM

No.105947230

[Report]

>>105947286

>>105947083

>cool

>ktransformers

lol

Anonymous

7/18/2025, 4:49:00 PM

No.105947264

[Report]

>>105947435

>>105946540

>Cline on VSCode

any recommendations that aren't VSCode plugins?

cli clients are peak civilization

Anonymous

7/18/2025, 4:51:22 PM

No.105947281

[Report]

>>105946486

That's what google colab is for. Only problem is I'm still a noob at this shit so I literally don't know what the good shit is on huggingface.

Anonymous

7/18/2025, 4:51:42 PM

No.105947286

[Report]

>>105947391

>>105947083

>>105947230

ahem it's pronounced quicktransformers

>>105947149

I expressed it wrong. It's not that I don't understand anything about it. I just can't connect why, for example, a rectified linear function is the one needed for the activation layers in CNNs.

>>105947189

My bad, I shared the wrong link:

https://www.udemy.com/course/computervision-deeplearning-with-python/

> are you asking us how you learn better?

I'm asking if your regular backend dev can get into this shit straight from his prior experience or if he HAS TO study the subject first in a theoretical way to learn absolutely mandatory knowledge to develop LLMs.

Anonymous

7/18/2025, 4:57:02 PM

No.105947321

[Report]

>>105946895

>What do most people use these days?

koboldcpp + Sillytavern

It just werks.

Anonymous

7/18/2025, 4:58:45 PM

No.105947334

[Report]

>>105947381

>>105947287

>I just can't connect why, for example, a rectified linear function is the one needed for the activation layers in CNNs.

Looking at it from a biological point of view, it wouldn't make sense to let the activation function go under 0. That's literally the only reason why it clips at 0. It works, so that's what the standard is.

Anonymous

7/18/2025, 5:03:02 PM

No.105947366

[Report]

Redpill me on Mistral Thinker.

I see it mentioned here a lot. Why would I use it over Dans Personality Engine (the best Mistral small finetune)

Anonymous

7/18/2025, 5:03:48 PM

No.105947372

[Report]

bro just woke up from a coma

Anonymous

7/18/2025, 5:06:39 PM

No.105947381

[Report]

>>105947465

>>105947334

You'd find leakyrelu, swish, and others in modern cnns
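
For reference, a rough sketch of the activations being discussed, in plain Python on single floats. The 0.01 negative slope for leaky ReLU is just a commonly used default and swish here is the beta=1 form x * sigmoid(x); both are assumptions, not something stated in the thread.

```python
import math


def relu(x):
    """Rectified linear unit: clips everything below 0 to 0."""
    return max(0.0, x)


def leaky_relu(x, negative_slope=0.01):
    """Like ReLU, but lets a small fraction of negative inputs through."""
    return x if x >= 0 else negative_slope * x


def swish(x):
    """Swish with beta=1: x * sigmoid(x), a smooth curve instead of a hard clip."""
    return x / (1.0 + math.exp(-x))
```

ReLU is a single comparison per value, which is part of why it stuck around as the default. The other two keep a nonzero output for negative inputs instead of flattening them to 0.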

Anonymous

7/18/2025, 5:07:59 PM

No.105947391

[Report]

>>105947286

Wait what? I thought it was some kde thing.

Anonymous

7/18/2025, 5:12:29 PM

No.105947415

[Report]

Just tried out Exaone 4 with the new merge.

Feels retarded and lacks knowledge. Quite literally I feel like it's worse than 3.0. Now that's fucked.

Anonymous