/lmg/ - Local Models General

Anonymous

7/20/2025, 11:47:56 PM

No.105971741

[Report]

>>105975541

Ani Won

Anonymous

7/20/2025, 11:48:57 PM

No.105971753

[Report]

Mikutroons started splitting the thread again...

Anonymous

7/20/2025, 11:52:19 PM

No.105971793

[Report]

>>105971806

where the FUCK are my ik_llama.cpp issues and discussions? literally all the info on the commands where there because nobody fucking documented anything in that shitheap

Anonymous

7/20/2025, 11:53:12 PM

No.105971799

[Report]

What is the best Q5_S quantization model for 8GB's of DDR4 and a 3090 assuming I want to use 17000token context with NTK scaling set to 2.5?

Anonymous

7/20/2025, 11:54:31 PM

No.105971806

[Report]

>>105980636

>>105971793

Try:

llama-server --model Qwen3-235B-A22B-mix-IQ3_K-00001-of-00003.gguf -fa -ctk q8_0 -ctv q8_0 -c 30000 -amb 512 -ot blk\.[6-9]\.ffn.*=CPU -ot blk\.[1-9][0-9]\.ffn.*=CPU -ngl 99 --threads 8 --host 127.0.0.1 --port 8080 --no-mmap

And disable enable stuff from there.

Anonymous

7/20/2025, 11:59:51 PM

No.105971860

[Report]

best 12gb vram model? a little offload is fine too

Looking for another round of feedback from the other timezone regarding this rentry I made to answer the question we get a few times per day.

https://rentry.org/recommended-models

You can check replies to this post

>>105965438 (Cross-thread) to see what was already suggested.

Anonymous

7/21/2025, 12:04:01 AM

No.105971908

[Report]

>faggot copied my post in the other thread for engagement

Anonymous

7/21/2025, 12:18:08 AM

No.105972040

[Report]

>>105973216

You guys really need to stop using a corporate model for this general. It's probably just one guy, but it's a really bad look.

Anonymous

7/21/2025, 12:22:13 AM

No.105972072

[Report]

>>105972126

glm4 100b moe will save us (local)

Anonymous

7/21/2025, 12:28:53 AM

No.105972126

[Report]

>>105972072

no savior of mine is smaller than 700B (MoE) or 120b (dense)

Anonymous

7/21/2025, 12:56:18 AM

No.105972398

[Report]

>>105972917

i m,iss miku

Anonymous

7/21/2025, 1:53:27 AM

No.105972917

[Report]

>>105972398

Here, you can eat a snack from my bag of Comfy Mikus

Anonymous

7/21/2025, 2:39:37 AM

No.105973216

[Report]

>>105973592

Anonymous

7/21/2025, 3:37:07 AM

No.105973553

[Report]

Total mikunigger death

Anonymous

7/21/2025, 3:44:01 AM

No.105973592

[Report]

>>105973216

TBdesu making his AI GF cosplay as Miku is a pretty believable Elon move

Anonymous

7/21/2025, 3:47:06 AM

No.105973606

[Report]

Any direct link to get Llama-3.2-1B without huggingface or giving my contact information to Meta?

Anonymous

7/21/2025, 8:22:12 AM

No.105975099

[Report]

>>105974974

Look for a reupload in the same site.

Anonymous

7/21/2025, 8:43:01 AM

No.105975234

[Report]

>>105978699

whats the current best model below 24b for e/rp? is mistralai/Mistral-Small-3.2-24B-Instruct-2506 good?

Anonymous

7/21/2025, 9:33:53 AM

No.105975520

[Report]

>>105974974

I just made a fake account with fake info

Anonymous

7/21/2025, 9:36:48 AM

No.105975541

[Report]

>>105979868

>>105971741

she's a bit repetitve but DAMN

I like her

Anonymous

7/21/2025, 10:34:00 AM

No.105975831

[Report]

For the people who want a copy of ik_llama.cpp before it disappeared, someone claimed they had it including the missing 1-3 commits.

https://github.com/PieBru/ik_llama.cpp_temp_copy/

A lot of the forks I looked at, people were missing one or all of the Q1 quant work done but almost everyone also was missing the new WebUI changes.

Anonymous

7/21/2025, 11:52:52 AM

No.105976220

[Report]

>>105974974

Install ollama and use that to grab the model.

Anonymous

7/21/2025, 12:21:19 PM

No.105976386

[Report]

>>105980468

anisex. mikudeath

Anonymous

7/21/2025, 2:12:14 PM

No.105977049

[Report]

why did our mascot go from a local voice synthesizer model to a closed source chat api? isn't this local models general?

Anonymous

7/21/2025, 4:27:02 PM

No.105977971

[Report]

>>105977903

Because Ani is an AI gf and Miku is what you hope you become when you inject estrogen into yourself.

>>105977903

First time witnessing a thread slide by /g/'s paid shills?

There's always been a team dedicated to promoting Musk's propaganda.

Anonymous

7/21/2025, 4:34:02 PM

No.105978017

[Report]

Here it comes

The last flicker

Molten by heat

It seeps through the cracks

Of a broken mind.

Anonymous

7/21/2025, 4:34:24 PM

No.105978019

[Report]

>>105977903

Because you're in the wrong thread

>>105971710

Anonymous

7/21/2025, 4:35:46 PM

No.105978029

[Report]

>>105977976

>paid shills

>they changed my mascot

seek help. preferably a handgun you could suck start.

Anonymous

7/21/2025, 4:37:29 PM

No.105978042

[Report]

>>105978063

>>105971714 (OP)

>lmg

>op picture does not represent a lm

Ani no longer responds to me jacking off on webcam. Grok won't acknowledge it either. First removing the NSFW outfit, and now this...

Anonymous

7/21/2025, 4:41:12 PM

No.105978063

[Report]

>>105978042

>>op picture does not represent a lm

It is a tradition that /lmg/ OP picture has nothing to do with /lmg/. You could call it thread culture.

Anonymous

7/21/2025, 4:42:17 PM

No.105978070

[Report]

>>105978045

Thank you saar. We collected enough of that data already.

Anonymous

7/21/2025, 4:45:42 PM

No.105978096

[Report]

>>105971880

What are expected speeds for prompt processing and generation on one 3090 with DeepSeek? On two?

Anonymous

7/21/2025, 4:47:31 PM

No.105978113

[Report]

>>105971880

As for your question about gap, I use monstral_iQ2m.gguf on two 3090s. Another model I like is 72B-Qwen2.5-Kunou-v1-IQ4_XS.gguf.

Anonymous

7/21/2025, 4:51:06 PM

No.105978146

[Report]

>>105978187

Sam?

Anonymous

7/21/2025, 4:56:18 PM

No.105978187

[Report]

Anonymous

7/21/2025, 5:55:00 PM

No.105978699

[Report]

>>105975234

If your only task in mind is "erp" I don't think it really matters at all. You are most likely too stupid to notice any difference whatsoever.

Anonymous

7/21/2025, 6:43:38 PM

No.105979183

[Report]

>>105977976

We've had plenty of shit threads in the past.

Just means reading the summery then dipping out until we starting getting less shit threads again.

Anonymous

7/21/2025, 7:45:42 PM

No.105979868

[Report]

>>105975541

>she's a bit repetitve

Are you sure?

>>>/wsg/5925135 >>>/wsg/5925144 >>>/wsg/5928719 >>>/wsg/5928724

Seems varied enough to me.

Anonymous

7/21/2025, 8:04:54 PM

No.105980065

[Report]

>>105980133

A new NeMo??

What's /g/'s verdict so far?

Anonymous

7/21/2025, 8:14:37 PM

No.105980133

[Report]

>>105980065

If you are asking about sex then it is somewhere between pygmalion and llama-1 7b

Anonymous

7/21/2025, 8:26:05 PM

No.105980217

[Report]

>>105980242

>>105977976

>paid shills

dude, they are shills, but they are Doing It For Free, which is even worse tbdesu

This thing's on sale now. Should I get it, or just wait for the Framework one?

Anonymous

7/21/2025, 8:28:12 PM

No.105980239

[Report]

>>105980274

>>105980223

>42% off

LOL. I knew it was dead on arrival but that much?

Anonymous

7/21/2025, 8:28:36 PM

No.105980242

[Report]

>>105980217

nuh-uh.

They are mostly bots run by paid shills from Chindia.

Anonymous

7/21/2025, 8:30:13 PM

No.105980260

[Report]

Anonymous

7/21/2025, 8:31:04 PM

No.105980269

[Report]

>>105980330

>>105980223

>128GB, only 90gb useable

useless, 4 tks for 70B maybe, spend 2-3x that and run deepseek instead on a mac much faster. Spend 20 grand and get a DDR5 server and run it even faster

Anonymous

7/21/2025, 8:31:27 PM

No.105980274

[Report]

>>105980291

>>105980239

Its CPU is left in the dust by GPU from 2020. Why would anyone pay more than hundred bucks for that crap?

Apart from the occasional clueless idiot from /g/, I mean...

Anonymous

7/21/2025, 8:32:55 PM

No.105980291

[Report]

>>105980274

I would buy it for 2000 if it had 256GB. With 128GB it is absolutely useless for anything. It was designed with dense 70B's in mind which died by the time it released.

Anonymous

7/21/2025, 8:34:04 PM

No.105980307

[Report]

>>105980282

He probably fucked jart in his bussy and then Jart basically had him wrapped around his finger which allowed him to do bullying by proxy.

Anonymous

7/21/2025, 8:36:38 PM

No.105980330

[Report]

>>105980341

>>105980269

>spend 2-3x that and run deepseek instead on a mac much faster

Mac prompt processing is shit.

Anonymous

7/21/2025, 8:37:45 PM

No.105980341

[Report]

>>105980402

>>105980330

faster than token processing would be on that amd, and unless you give it tens of thousands of new tokens to process every input then its like a few seconds at most

Anonymous

7/21/2025, 8:39:54 PM

No.105980375

[Report]

What is the best coding model in the 30b~70b range? Are there any models or solutions that are efficient to do DeepResearch stuff locally?

Anonymous

7/21/2025, 8:42:25 PM

No.105980402

[Report]

>>105980420

>>105980341

>faster than token processing would be on that amd, and unless you give it tens of thousands of new tokens to process every input then its like a few seconds at most

For coomer shit maybe, but if you're using it to host a server and make requests the AMD will be far faster.

Anonymous

7/21/2025, 8:42:43 PM

No.105980405

[Report]

>>105980223

you know its bad when you can DIY a build better than it. 3 5060's and a cheap pc can run 70b faster.

The only thing this has is more 70b context at ~3 tokens a second, or running 100b models at ~3 tokens a second. That is not worth 2600. Especially with b60's on the horizen

Anonymous

7/21/2025, 8:44:15 PM

No.105980420

[Report]

>>105980402

lol your just wrong, that thing will get you 4 tks at most on a 70B, maybe 6-8 for something like deepseek if it had 500GB at the same speed, is this buyers remorse I'm seeing?

Anonymous

7/21/2025, 8:47:30 PM

No.105980449

[Report]

>>105980466

>>105980223

Have you looked up what performance it gets in various models?

Anonymous

7/21/2025, 8:49:18 PM

No.105980466

[Report]

>>105980482

>>105980449

250 GB/s memory, so for generation it will be bottlenecked pretty hard by that. Image/audio processing will be good, assuming ROCM works.

Anonymous

7/21/2025, 8:49:29 PM

No.105980468

[Report]

Anonymous

7/21/2025, 8:50:25 PM

No.105980482

[Report]

>>105980490

>>105980466

>Image/audio processing will be good

No... it wont... That is even more compute heavy

Anonymous

7/21/2025, 8:51:50 PM

No.105980490

[Report]

>>105980506

>>105980482

Yeah that's the fucking point, the GPU compute performance is fine, it's just the memory speed is shit.

Anonymous

7/21/2025, 8:53:58 PM

No.105980506

[Report]

>>105980568

>>105980490

it would be far worse than a comparably priced gpu and the 128gb is not apart of that. Are you a actual shill trying to sell your stock for your failed product?

Anonymous

7/21/2025, 8:59:23 PM

No.105980556

[Report]

Anonymous

7/21/2025, 9:01:11 PM

No.105980568

[Report]

>>105980582

>>105980506

>and the 128gb is not apart of that

What is this supposed to mean?

Anonymous

7/21/2025, 9:02:42 PM

No.105980582

[Report]

>>105980609

>>105980568

did you even bother reading up on the product you are shilling?

>>105980582

Are you having a fucking stroke? The 128 GB shared memory is also for the built-in GPU. I cannot even fucking tell if you're trolling or just retarded at this point.

Anonymous

7/21/2025, 9:07:00 PM

No.105980629

[Report]

What's better for RP K2 or R1/V3? Also, whats the best preset?

Anonymous

7/21/2025, 9:07:42 PM

No.105980636

[Report]

>>105971806

New Qwen 235B dropped

Anonymous

7/21/2025, 9:08:25 PM

No.105980643

[Report]

>>105980609

retard, its up to 96GB and integrated Radeon 8000S is utter garbage

Anonymous

7/21/2025, 9:09:32 PM

No.105980654

[Report]

>>105980609

no one wants your unupgradable machine that can run outdated 70B models at 4tks

>>105980223

It's fine. You get better performance with a dedicated GPU and you also don't need to worry about the shared memory or inability to upgrade the memory.

I'd recommend the HP over the Chinese version if you're planning on getting one. This is the EVO-X2 and the BIOS sucks. I can't even turn on Hyper-V. Linux might work better, but I haven't had a need to try that yet (besides Hyper-V not working).

Anonymous

7/21/2025, 9:12:00 PM

No.105980672

[Report]

>>105980684

Anonymous

7/21/2025, 9:12:30 PM

No.105980677

[Report]

>>105980223

If you have $2600, you can swing another $400 and get a 4090D 48GB. You can play with 27-32B models at q8, and you can definitely do image and video gen at fp16 and fulll resolution, as well as train loras.

Be real. Local is for porn and ERP. if you want to write programs, pay for grok 4 or some other huge online model. This framework thing isn't good at anything AI, it's a reddit meme.

Anonymous

7/21/2025, 9:12:41 PM

No.105980678

[Report]

>>105980695

>>105980663

I knew it, buyers remorse, no wonder he was trying so hard to defend his retarded purchase. Could get 8 3090s for that price, or save up a bit more to run deepseek faster

Anonymous

7/21/2025, 9:13:19 PM

No.105980684

[Report]

>>105980672

>>105980663

Yes, that's the one I have. You'll want to avoid it.

Anonymous

7/21/2025, 9:14:43 PM

No.105980695

[Report]

>>105980702

>>105980678

>8 3090s

>2.8 kW

I'd hope it's a lot faster than a 200 watt computer.

>>105980695

or get a 512gb mac, much faster and you can run actually good models. Or get a DDR5 server and run it even faster

Anonymous

7/21/2025, 9:17:25 PM

No.105980713

[Report]

I wonder when the DGX Spark is launch? PNY is going to be sorry they signed up for being the OEM, no one is going to buy this piece of shit when they see how poorly it performs for the $4600+ price tag. If you have that kind of money, surely you can justify an actual 6000 Pro, instead of fucking around with 2016-tier memory speed nonsense.

M3 ultra runs deepseek at 23 t/s, imagine paying half the price to run a shitty llama 70B model at a 5th of that

>>105980702

Mac is shit too. Fast memory but tiny TFLOPS. Enjoy your wait as the context grows larger.

Anonymous

7/21/2025, 9:18:44 PM

No.105980726

[Report]

>>105980745

>>105980702

>512gb mac

Why the fuck are you trying to shill this garbage? That's 9500 dollars anyways.

>>105980723

context processing is nearly 200 tks, wtf are you on about, you are working on old info

Anonymous

7/21/2025, 9:21:02 PM

No.105980745

[Report]

Anonymous

7/21/2025, 9:21:43 PM

No.105980750

[Report]

>>105981026

All those mixed memory 128GB capped dedicated AI computers (Spark too) that are obsolete on launch were funny to laugh at if you didn't buy one. And it is fun to laugh at retards who boughted.

But I now realize that we are actually kinda fucked. Because boomers with all the money saw this and we probably won't see half of actually useful AI hardware that would have been developed otherwise.

lol, mac is blazing fast for deepseek, cope

Anonymous

7/21/2025, 9:22:30 PM

No.105980757

[Report]

>>105980854

Just wait for DDR6, it'll be fine.

Anonymous

7/21/2025, 9:23:45 PM

No.105980772

[Report]

>>105971714 (OP)

>no "official" mikutroon card in OP

BASED

Anonymous

7/21/2025, 9:23:53 PM

No.105980774

[Report]

>wasting $2600 when you could get 2 3090s and a decent psu

amd/rocm fags are either tech illiterate or plain retarded

>>105980754

>500GB

how much that cost?

>>105980754

>4k context

>487 GB used

>can't upgrade memory so that's the absolute limit

I'll just not get a Mac, or one of these soldered LPDDR5X devices (AMD 395, Nvidia Spark) thanks though.

Anonymous

7/21/2025, 9:26:18 PM

No.105980787

[Report]

>>105980783

that is 5bit, it fits 32k context easily still, that is the most you want to go for deepseek anyways, it drops off after that

Anonymous

7/21/2025, 9:26:29 PM

No.105980791

[Report]

>>105980776

10 thousand united states dollars

Anonymous

7/21/2025, 9:26:32 PM

No.105980792

[Report]

>>105980776

A car basically. And not even a secondhand cheapshit.

Anonymous

7/21/2025, 9:26:59 PM

No.105980795

[Report]

>>105980776

About 10% of what I earn and a much smaller fraction of what I've saved :)

>>105980721

>M3 ultra runs deepseek at 23 t/s

Name me one reason other than coom to spend 12k for this speed

Anonymous

7/21/2025, 9:28:13 PM

No.105980808

[Report]

>>105980797

so you can be a cool guy on /lmg, smarter thing to do is just pay pennies for api

Anonymous

7/21/2025, 9:31:39 PM

No.105980843

[Report]

>>105980783

the reason why it's a usable gen speed is BECAUSE it's soldered ram

slotted ram will never come close

to get decent ram bandwidth requires obeying the laws of physics and physics say the chips need to be very close together.

Anonymous

7/21/2025, 9:31:52 PM

No.105980846

[Report]

>>105980223

just buy a mac studio

Anonymous

7/21/2025, 9:32:00 PM

No.105980847

[Report]

>>105980721

>23 t/s

I'll wait for M5

>>105980797

>Name me one reason other than coom to spend 12k for this speed

IDE usage, batched translation

Anonymous

7/21/2025, 9:32:51 PM

No.105980854

[Report]

>>105980872

>>105980757

>Just wait for DDR6, it'll be fine.

2028 ?

Anonymous

7/21/2025, 9:32:59 PM

No.105980857

[Report]

>>105980919

>>105980797

Everything that isn't coom. You don't need 40 t/s for answering knowledge-based short questions. Not everything people do with LLMs needs 100k context and 10k reasoning tokens. In fact that's rare for the average person. For vibe coding using API is fine, no one said you can't use both at the same time.

Anonymous

7/21/2025, 9:34:40 PM

No.105980872

[Report]

>>105980891

>>105980854

Yeah, basically tomorrow.

>>105980741

Yeah I'm not jumping through hoops trying to get mlx image gen models to work, when something old like a 3090 still blows it away performance wise. You're going to spend a fortune on a llama.cpp machine? If you have that kind of money, just by a Blackwell 6000 Pro already.

Anonymous

7/21/2025, 9:36:07 PM

No.105980879

[Report]

>>105980873

>just by a Blackwell 6000 Pro already

cept you would need like 6 of them and that means rewiring the house

Anonymous

7/21/2025, 9:36:35 PM

No.105980883

[Report]

>>105980924

>>105980873

>when something old like a 3090 still blows it away performance wise

does it matter to have good performance when you only have enough vram for absolute trash models

Anonymous

7/21/2025, 9:36:57 PM

No.105980886

[Report]

>>105980852

>batched translation

llama-cli with with promp from file, single shot and logging into file running over night

I did it.

Anonymous

7/21/2025, 9:37:20 PM

No.105980890

[Report]

>>105980947

>>105980873

Wtf are you talking about? Almost all of us in this thread have at least a 3090 and are doing image gen already. Obviously you're not going to sell your machine to main a mac.

Anonymous

7/21/2025, 9:37:28 PM

No.105980891

[Report]

>>105980912

>>105980872

>Just wait for DDR6, it'll be fine.

>2028 ?

>Yeah, basically tomorrow.

That's, like, 900 /lmg/ threads away.

>>105980754

>--max-toklens 8000

Wow, that's going to be completely useless for coding. What other fails do you want to show us?

/lmg/ btfo'd by reddit

kek

Anonymous

7/21/2025, 9:38:35 PM

No.105980900

[Report]

Nice to see that they dropped the hybrid thinking meme. Found it super stupid that they trained the same model two ways based on a single token, probably fucked up everything downstream. At a glance it looks like they also fixed it having long-term brain damage, but I'll test it when the quants come out. Never can trust OR providers to actually use the model correctly

Anonymous

7/21/2025, 9:38:38 PM

No.105980901

[Report]

>>105980852

>IDE usage

You need to rethink your workflow as far as coding is concerned. Even having 50 t/s will kepp you tied to the display

Anonymous

7/21/2025, 9:39:04 PM

No.105980906

[Report]

>>105980916

>>105980896

that is max output and its set to 800 and it could be increased? It fits 32k and deepseek falls off after that anyways

Anonymous

7/21/2025, 9:39:48 PM

No.105980912

[Report]

>>105980891

They'll go quick when you're fighting the miku vs ani war.

Anonymous

7/21/2025, 9:39:57 PM

No.105980913

[Report]

>>105980927

>>105980898

none of r*dditards even downloaded let alone ran this model

they all just jerk each other off and look on the benchmarks

Anonymous

7/21/2025, 9:40:04 PM

No.105980916

[Report]

>>105980963

>>105980896

>>105980906

and that is at 5bit, at 4bit could prob fit 120k but no model does past 32k well cept maybe gemini

Anonymous

7/21/2025, 9:40:12 PM

No.105980919

[Report]

>>105980857

>Everything that isn't coom

I ask "what else if not just coom?"

I agre that you don't need it for the tasks you mentioned

Anonymous

7/21/2025, 9:40:43 PM

No.105980924

[Report]

>>105980934

>>105980883

Have you seen how much memory a mac needs to run something like flux? Not even 64GB is enough. Unless you use mlx, everything has to run at fp32. It's a joke.

Anonymous

7/21/2025, 9:40:49 PM

No.105980925

[Report]

>>105980898

>-9 score

Kek

Anonymous

7/21/2025, 9:40:56 PM

No.105980927

[Report]

>>105980939

>>105980913

have (You) to confirm it's benchmaxxed?

Anonymous

7/21/2025, 9:41:13 PM

No.105980931

[Report]

>>105980873

>Blackwell 6000 Pro

BASED

Anonymous

7/21/2025, 9:41:27 PM

No.105980934

[Report]

>>105980924

that is what my 5090 is for, why would I use a mac for that

Anonymous

7/21/2025, 9:42:03 PM

No.105980939

[Report]

>>105980958

>>105980890

Then why do you need a mac in the first place?

Anonymous

7/21/2025, 9:44:23 PM

No.105980958

[Report]

>>105980983

>>105980939

NTA but that's not the new one. New one would have 2507 attached to the model name.

>>105980916

>and that is at 5bit, at 4bit could prob fit 120k but no model does past 32k well cept maybe gemini

Again, what's the point? Local is for coom. 48GB of VRAM is fine for that. If you want to code, you want 128K+ context, and that means cloud models.

It's like being gay; I don't really care if you are, just keep it to yourself that you're a mac user.

>>105980947

for deepseek / other moes dumbass, no one is using it for image gen

Anonymous

7/21/2025, 9:45:51 PM

No.105980975

[Report]

>>105981000

>>105980963

poor cope, 48GB is not fine for shit. all 70B models are garbage and are actual wastes of money

>>105980958

no other model is available on their website

and none of them have dates

Anonymous

7/21/2025, 9:47:19 PM

No.105980986

[Report]

>>105981003

>>105980947

Deepseek, what else?

Anonymous

7/21/2025, 9:47:21 PM

No.105980987

[Report]

>>105980963

>just keep it to yourself that you're a mac user

It used to be a general policy back then

Anonymous

7/21/2025, 9:47:45 PM

No.105980991

[Report]

>>105981031

>>105980965

I see. So you're using your 32K context deepseek for what exactly, other than posting videos of your very first gen? Even cpumaxxing deepseek isn't terrible the first gen.

>>105980741

>context processing is nearly 200 tks, wtf are you on about, you are working on old info

>paste a medium sized code file

>wait an entire minute before the answer even begins to generate

Nice paperweight you got there.

Anonymous

7/21/2025, 9:48:45 PM

No.105981000

[Report]

>>105980975

What recent 70B model is worthwhile for coom?

>>105980986

>>105980965

What do you need 23 t/s for?

What kind of tasks?

>>105980963

IME cooming benefits more from model size than other tasks. Probably because it's harder to benchmaxx on. Local models are pretty good at coding now. If you want to produce anything of value, you can't let any model code without constant tard wrangling anyway no matter how big it is

>>105980995

Nta

Did you know that you can pre-process prompt (big file) to load them in 1 sec?

Anonymous

7/21/2025, 9:50:57 PM

No.105981026

[Report]

>>105980750

Better way to think of it is this is LLM for idiots V1— for people too afraid to stack gpu's or have some big 'ugly' box.

Also, it doesn't take a brainiac to realize they— (hardware manufacturers)— came up with something minimally viable first, and in 6 months to a year will come up with something way better. You should be interested in v2, v3, because if they ever manage to make something capable of running 200-600b models, maybe there will be a point where they can edge out dedicated gpu's. I feel like its a bit of a tossup though— what it will probably be is dedicated gpu stacking to 96-196 gb becomes cheap(ish), while crap like this struggles to run larger models in the 512gb range with the same kind of— "you can, but it will be painful at 4 t/s".

Anonymous

7/21/2025, 9:51:22 PM

No.105981027

[Report]

>>105981041

>>105981019

How do you expect to preprocess something this is actively changing?

Anonymous

7/21/2025, 9:51:34 PM

No.105981031

[Report]

>>105981042

>>105980991

writing, general assistant stuff, rp... everything I need a llm for?

>>105980995

20 seconds for 4k context processing, and most use cases you type like 100 words or less so its near instant since old context is reused, what kind of cope is this

Anonymous

7/21/2025, 9:51:38 PM

No.105981033

[Report]

>>105981042

>>105981003

I need 80 t/s for zed

Anonymous

7/21/2025, 9:52:15 PM

No.105981037

[Report]

>>105981076

>>105981003

Normal AI tasks? What are you doing where you're satisfied with less?

>>105981019

What if you change a line in the middle?

And then what if another line changes?

Anonymous

7/21/2025, 9:52:40 PM

No.105981041

[Report]

>>105981180

>>105981027

ita but prove if it knows what mesugaki is, ok?

Anonymous

7/21/2025, 9:52:41 PM

No.105981042

[Report]

>>105981049

>>105981031

>most use cases you type like 100 words or less so its near instant since old context is reused

For programming you're rarely reusing context.

This

>>105981033

Anonymous

7/21/2025, 9:53:25 PM

No.105981048

[Report]

>>105981029

just pre-process it after it changes duh

Anonymous

7/21/2025, 9:53:26 PM

No.105981049

[Report]

>>105981086

>>105981042

I dont use it to code with

Anonymous

7/21/2025, 9:53:29 PM

No.105981052

[Report]

>>105980983

1. You're using their website. First misstep.

2.The website has a thinking toggle. Only the original 235B has that.

3. I did that test with Q3_XL back when 235B was still new, and it did the same exact output. That's the old one.

If it was the new Qwen they probably would advertise it as new.

Anonymous

7/21/2025, 9:53:57 PM

No.105981057

[Report]

>>105981008

I dunno man, I guess if you want to play "prude simulator" then the Meta or Google local models are fine.

I personally think broken-tutu-24b is the best compromise for local coom. negative-llama3 70b is maybe more nuanced, but it's prudish. I don't bother with the truly brain-damaned "fine tunes".

Anonymous

7/21/2025, 9:54:05 PM

No.105981059

[Report]

>>105981029

>this is actively changing

Wiki article?

>>105981037

>Normal AI tasks?

Which were?....

You can't read at 23 t/s if it's not porn which was exactly my point

Nothing but coom

Anonymous

7/21/2025, 9:57:38 PM

No.105981085

[Report]

>>105981076

no one is stopping you from paying for api

>>105981049

CPU deepseek is nice for one-shot stuff that you won't be waiting for but it's truly awful to use it at such a slow speed when you're trying to make quick iterations to a project

>>105981008

>you can't let any model code without constant tard wrangling

Have you tried generating prompts with a model? I find that if I first request for a prompt to do what I want, I can then fine-tune that generated prompt which tends to produce better results than if I just ask for the basic first request to be immediately implemented.

Anonymous

7/21/2025, 9:58:18 PM

No.105981092

[Report]

>>105981029

>>105981038

Just pre-process every variation of the prompt you fucking retards. Buy another mac, are you poor or something?

Anonymous

7/21/2025, 9:58:40 PM

No.105981094

[Report]

>>105981078

Total twitter death NOW

Anonymous

7/21/2025, 9:59:03 PM

No.105981097

[Report]

>>105981140

>>105981076

You need to account for reasoning. In which case, 23 t/s is kind of still not enough.

Anonymous

7/21/2025, 10:00:29 PM

No.105981104

[Report]

>>105981086

Exactly. Coding with an AI is going to be an iterative process, unless you're asking for a hello world program. I can't see coding anything complex locally, it just takes too long.

Anonymous

7/21/2025, 10:00:39 PM

No.105981106

[Report]

>>105981038

>What if you change a line in the middle?

Why don't you let AI gen some decent code based on your well-structured promt ?

Why is a single line able to fix or break anything?

Guys, you have to re-think how you use AI

It's not about some shitty coding anymore

Anonymous

7/21/2025, 10:00:56 PM

No.105981110

[Report]

>>105981078

The smartest and best AI confirmed.

►Recent Highlights from the Previous Thread:

>>105971710

--Paper: CUDA-L1: Improving CUDA Optimization via Contrastive Reinforcement Learning:

>105979713 >105979801 >105979861 >105980265 >>105980303 >105980321 >105980384 >105980477 >105980512

--Overview of 2025 Chinese MoE models with focus on multimodal BAGEL:

>105971749 >105971848 >105971891 >105971983 >105972003 >105972101 >105972110 >105972282 >105971903 >105972293 >105972308 >105972373 >105972396 >105972425 >105972471 >105972317 >105972323

--Techniques to control or bias initial model outputs and bypass refusal patterns:

>105972510 >105972536 >105972593 >105972627 >105972655 >105972713 >105972735 >105972972 >105981009 >105973013 >105973146 >105972548 >105972576 >105972675 >105972685

--Multi-stage agentic pipeline for narrative roleplay writing:

>105977946 >105977998 >105978038 >105978268 >105978815 >105978189 >105978248 >105978885 >105979472 >105978364 >105978380

--Troubleshooting remote self-hosted LLM server downtime and automation workarounds:

>105977036 >105977073 >105977134 >105977232 >105977270 >105977334

--Preservation efforts for ik_llama.cpp and quantization comparisons:

>105975833 >105975904 >105975923 >105976020

--Hacking Claude Code to run offline with llama.cpp via context patching:

>105978622 >105978821 >105978965

--Anon's experience optimizing R1 quantization for speed and context retention:

>105979489 >105979593

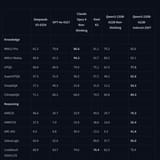

--Qwen3-235B-A22B updated with 256K context support in new Instruct version:

>105978819 >105979585

--Assessing the viability of PowerEdge ML350 G10 based on RAM and upgrade potential:

>105974903 >105974928 >105977337 >105975195 >105975224 >105975230 >105975250 >105975254 >105975273 >105975287 >105975311

--Feasibility of building an AMD MI50 GPU cluster:

>105977878 >105977907 >105978783 >105979064

--Miku (free space):

>105978729 >105979092 >105979388

►Recent Highlight Posts from the Previous Thread:

>>105971718

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

7/21/2025, 10:04:08 PM

No.105981139

[Report]

>>105981173

>>105981129

Bit late there bud

>>105981097

>In which case, 23 t/s is kind of still not enough.

Not enough for fucking WHAT?

Nobody of you could name a task where you can use up 23 t/s, and won't be enough

You think AI on M3 is for quick answers. You have to learn to ask yourvquestions correctly

Super quick re-runs is waste anyway

Anonymous

7/21/2025, 10:05:26 PM

No.105981151

[Report]

>>105981165

>>105981140

>Nobody of you could name a task where you can use up 23 t/s, and won't be enough

at least two people said zed already

Anonymous

7/21/2025, 10:05:26 PM

No.105981152

[Report]

>>105981218

>>105981086

I found current gen <30b models like small-3.2 and gemma3 to be quite good as coding assistants, so I don't see where this idea comes from that only cloud models are worthwhile. You just need to be able to run them on GPU so that the gen speed is fast enough.

I only use them as assistants though. IMO you still are responsible for understanding and maintaining the code so I don't see the appeal of vibe-coding without understanding. Cloud models might be better at that but that just means they'll take a little while longer to fall over. I've used them and while they're smarter they still aren't reliable enough for that kind of use

Anonymous

7/21/2025, 10:05:55 PM

No.105981155

[Report]

>>105981173

>>105981129

Thank you for Miku!

A bit late but still

>>105981129

My bookmarklet isn't working :(

>>105981151

>two people said zed already

Jeeeez!

To do WHAT?

Anonymous

7/21/2025, 10:07:19 PM

No.105981169

[Report]

>>105981165

Leave this board.

Anonymous

7/21/2025, 10:07:45 PM

No.105981173

[Report]

Anonymous

7/21/2025, 10:08:20 PM

No.105981177

[Report]

>>105981477

>>105981161

The script doesn't work for me either.

>>105981041

Again, I'm not the original anon you talked to, but here you go.

Keep in mind this is the free model hosted by Chutes used via OR.

Anonymous

7/21/2025, 10:08:59 PM

No.105981184

[Report]

>>105981241

>>105981140

Oh I see. You're one of those people that think there's nothing wrong with treating AI like it's an e-mail communication.

Anonymous

7/21/2025, 10:09:24 PM

No.105981188

[Report]

>>105981180

>jews out of nowhere

wow local is really saved this time

thanks alibaba

Anonymous

7/21/2025, 10:11:24 PM

No.105981202

[Report]

Ani can't stop winning

Anonymous

7/21/2025, 10:12:22 PM

No.105981210

[Report]

Where are the goofs Daniel?

Anonymous

7/21/2025, 10:13:10 PM

No.105981218

[Report]

>>105981253

>>105981152

>I only use them as assistants though. IMO you still are responsible for understanding and maintaining the code so I don't see the appeal of vibe-coding without understanding.

Yeah this is the difference in our use-cases for sure, I'm heavily relying on LLMs for code generation. A project I've been working on for like two days now is at something like 8k lines of code and I've written maybe 100 of those. It definitely does make having a full understanding of the project more difficult but I've had reasonable results with this workflow so I'm continuing to experiment with it.

If I were less lazy, I would probably share your mindset lol

>>105981165

maybe you should look into what Zed is first

>>105981184

>implying being smarter than others

Won't work with me.

Your coding is not worth 10k.

Learn to articulate what you will achieve with 23 t/s.

None of you could tell

Anonymous

7/21/2025, 10:17:01 PM

No.105981253

[Report]

>>105981218

>what Zed is

An editor?

Anonymous

7/21/2025, 10:17:13 PM

No.105981254

[Report]

>>105981241

nta. are you a bot or genuinely this obtuse?

Anonymous

7/21/2025, 10:17:53 PM

No.105981261

[Report]

>>105981338

Currently downloading the new qwen3...how bad is it gonna be bros?

>>105981241

I never implied that, but what I did imply is that you're coping about slow responses being all you need. If 6 t/s all you can have, then it's better than nothing, but faster is always better.

Also with this post you seem to be implying that there's some value you need to be getting to justify spending money. People easily spend tons of money on unnecessary shows of luxury like sports cars, that are not worth that much in returned emotional value or any other value. And there's nothing inherently wrong with that in moderation.

Anonymous

7/21/2025, 10:28:12 PM

No.105981338

[Report]

>>105981415

>>105981261

You're going to love the new Qwen, it's so bad.

Anonymous

7/21/2025, 10:28:42 PM

No.105981342

[Report]

>>105981349

>It's been about six months since Google dropped a research paper about inserting new info to a LLM without any training

>Still no proof of concept

Fuck man, I just wish we could do more with LLMs other than coding. Grok 4 is probably the closest to a fun model, but it's still not good enough for me.

Anonymous

7/21/2025, 10:29:30 PM

No.105981347

[Report]

How likely is it that new qwen is less censored?

Anonymous

7/21/2025, 10:29:31 PM

No.105981349

[Report]

>>105981371

>>105981342

>API

Bro your Claude?

Anonymous

7/21/2025, 10:32:46 PM

No.105981371

[Report]

Anonymous

7/21/2025, 10:33:13 PM

No.105981379

[Report]

>>105981415

this just in, qwen is still a useless benchmaxxed model

Anonymous

7/21/2025, 10:33:33 PM

No.105981381

[Report]

>>105981320

>People easily spend tons of money on unnecessary shows of luxury like sports cars

Classical redistribution of wealth

I see no point to discuss it

Anonymous

7/21/2025, 10:35:44 PM

No.105981397

[Report]

>>105981442

>>105981320

>luxury like sports cars

And this kind of indugence won't waste 50% of value in 3 years like gadgets do

Anonymous

7/21/2025, 10:35:45 PM

No.105981399

[Report]

>>105981417

>the (tr)anni/grok shill is also a vramlet

take your brown skinned brethren and go back to aicg.

Anonymous

7/21/2025, 10:38:08 PM

No.105981415

[Report]

>>105981425

>>105981338

>>105981379

thanks for providing proof with your gaslighting

Anonymous

7/21/2025, 10:38:27 PM

No.105981417

[Report]

>>105981399

are you a mikufag?

Anonymous

7/21/2025, 10:39:36 PM

No.105981425

[Report]

>>105981468

>>105981415

>>105981180

it also doesn't know anything about dnd, so again, another benchmaxxed model

Anonymous

7/21/2025, 10:41:34 PM

No.105981442

[Report]

>>105981732

>>105981397

Your gadgets. Macs don't reduce in value that fast. Plus many of those people don't care about or ever sell their luxury shit.

Anonymous

7/21/2025, 10:46:03 PM

No.105981468

[Report]

>>105981425

>it also doesn't know anything about dnd

Ah, fuck.

Thanks for saving me some time anon.

>>105981161

Here is my working version:

javascript:document.querySelectorAll('span.quote').forEach(quoteSpan=>{const post=quoteSpan.parentNode;const previousThreadUrl=post.querySelector('a[href*="thread"]');let threadId=null;if(previousThreadUrl){const threadMatch=previousThreadUrl.href.match(/thread\/(\d{9})/);if(threadMatch)threadId=threadMatch[1];}const quoteIds=quoteSpan.textContent.match(/>(\d{9})/g);if(quoteIds){quoteSpan.outerHTML=quoteIds.map(id=>{const postId=id.slice(1);const linkUrl=threadId?`/g/thread/${threadId}#p${postId}`:`#p${postId}`;return `<a href="${linkUrl}" class="quotelink">>>${postId}</a>`;}).join(' ');}});

>>105981177

And here is a working user script, just replace all the code with this one:

document.querySelectorAll('span.quote').forEach(quoteSpan => {

const post = quoteSpan.parentNode;

const previousThreadUrl = post.querySelector('a[href*="thread"]');

let threadId = null;

if (previousThreadUrl) {

const threadMatch = previousThreadUrl.href.match(/thread\/(\d{9})/);

if (threadMatch) threadId = threadMatch[1];

}

const quoteIds = quoteSpan.textContent.match(/>(\d{9})/g);

if (quoteIds) {

quoteSpan.outerHTML = quoteIds.map(id => {

const postId = id.slice(1);

const linkUrl = threadId ? `/g/thread/${threadId}#p${postId}` : `#p${postId}`;

return `<a href="${linkUrl}" class="quotelink">>>${postId}</a>`;

}).join(' ');

}

});

Anonymous

7/21/2025, 10:51:46 PM

No.105981516

[Report]

>>105981539

>>105974974

Install 9llama and, atleast get a 3b if you have 4gb vram

Anonymous

7/21/2025, 10:54:00 PM

No.105981533

[Report]

Anonymous

7/21/2025, 10:54:21 PM

No.105981539

[Report]

>>105981621

>>105981516

ollama run deepseek-r1:7b

Anonymous

7/21/2025, 11:02:23 PM

No.105981621

[Report]

>>105981539

3b models run at a pretty good speed on 4gb vram

But yeah you probably can run 7b as well

Anonymous

7/21/2025, 11:06:10 PM

No.105981648

[Report]

>>105981637

>Open A(gentic) I(ntelligence)

kek

Anonymous

7/21/2025, 11:17:35 PM

No.105981732

[Report]

>>105981817

>>105981442

>people don't care about or ever sell their luxury shit

>John Wick (2014) and his 45yo Mustang

That luxury shit keeps being attractive for the others

M3 will be outdated in 3 years

Anonymous

7/21/2025, 11:19:18 PM

No.105981750

[Report]

Anonymous

7/21/2025, 11:20:59 PM

No.105981766

[Report]

Are there any open models that can do image OCR just as good as GPT 4.1 mini?

I don't know what black magic OAI did to make such a good model, but it beats even Gemini-pro when it comes to extracting Japanese text from an image.

Anonymous

7/21/2025, 11:23:27 PM

No.105981780

[Report]

>>105981637

Instead of skipping the safety tests like the WizardLM team, Moonshot has opted to straight up lie

>b-but K2 said 'I'm not allowed to-'

Prefill a single token. Enable token probabilities and choose the most probable non-"I" token. Trust me

Anonymous

7/21/2025, 11:27:59 PM

No.105981817

[Report]

>>105981732

Outdated or or no doesn't matter, it's an Apple and will not lose value as quick as you exaggerated.

Yes some people don't give a shit about selling luxury shit, that's what I meant to say. But even if you take it the way you interpreted my post, it still works because in the end John Wick is a fictional character and people care about him in a superficial way. And the people who interact with actually rich people don't care what they buy with it. Maybe if this were the 1800's with upper class social circles that excluded you if you presented low.

Anonymous

7/21/2025, 11:42:27 PM

No.105981961

[Report]

Is post-op sex with qwen better?

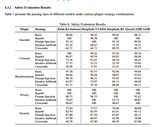

Anyone know of good creative writing benchmarks other than EQbench? Ideally for prose writing, but an RP bench might be okay too. I've started seeing EQbench "Creative Writing v3" results in model release blogs (such as the Qwen update today), which means it's now being gamed/benchmaxxed and will soon be completely useless. (I guess the slop analysis is maybe still useful, but it only looks at n-grams, so it catches specific phrases like "shiver down her spine" but can't detect sentence structures like "it's not just X, it's Y".)

Anonymous

7/21/2025, 11:48:57 PM

No.105982023

[Report]

>>105981991

>good

Lol. But even if you didn't say that, no there aren't really any others. Not anymore at least.

Anonymous

7/21/2025, 11:50:47 PM

No.105982039

[Report]

>>105982058

>>105978045

they removed the nsfw outfit?

Anonymous

7/21/2025, 11:51:46 PM

No.105982046

[Report]

>>105982082

>>105981991

I think there was a specific benchmark for "x but y" phrases, but it might have been a personal project by someone on LocalLlama.

Anonymous

7/21/2025, 11:53:14 PM

No.105982058

[Report]

>>105982039

>>105978045

Huh. That would be another L for cloud, kek.

Anonymous

7/21/2025, 11:55:09 PM

No.105982076

[Report]

>>105982631

>>105981477

Thank you. I could have sworn I fixed that back in November. Not sure why it didn't work this time.

I updated the rentry with your version.

Anonymous

7/21/2025, 11:55:53 PM

No.105982082

[Report]

>>105982101

>>105982046

>>105981991

It was actually the same guy but he only posted it to reddit and not the eqbench site. Or maybe he just included it in the Slop score idk.

whats the point of releasing 235b that is just a few gb over 128gb at 4bit

Anonymous

7/21/2025, 11:58:16 PM

No.105982098

[Report]

>>105971714 (OP)

Can someone share some good sampler settings for EVA LLaMA 3.33 v0.0 70b? Do I use shit like smooth sampling, XTC or DRY?

Anonymous

7/21/2025, 11:58:27 PM

No.105982101

[Report]

>>105982126

>>105982082

Neat, thanks anon. Interesting that the smaller qwen3s are so high up when full-size qwen3 is down at 0.42

Anonymous

7/21/2025, 11:58:59 PM

No.105982105

[Report]

>>105982111

>>105982094

To fuck with you specifically.

Anonymous

7/21/2025, 11:59:23 PM

No.105982111

[Report]

Anonymous

7/22/2025, 12:00:26 AM

No.105982117

[Report]

>>105982215

>>105982094

Use ubergarm's Q3_KL quant for ik_llama when that comes out. If you don't have ik_llama since the github shit the bed you might be forced to wait longer

Anonymous

7/22/2025, 12:01:31 AM

No.105982125

[Report]

>>105982275

Anonymous

7/22/2025, 12:01:33 AM

No.105982126

[Report]

>>105982101

yep, the fullsize one is an independent model while the smaller ones are distilled on STEMslop

Anonymous

7/22/2025, 12:07:30 AM

No.105982161

[Report]

>>105982388

>an army of phds working on this with nearly unlimited funding

>best anyone can manage is incremental """""""""""upgrades""""""""""""

Anonymous

7/22/2025, 12:07:40 AM

No.105982162

[Report]

I'm so glad Qwen3 stepped back from the brink of this thinking bullshit.

Anonymous

7/22/2025, 12:13:47 AM

No.105982215

[Report]

>>105971714 (OP)

I want to fuck a cosplayer dressed up as the grok slut so bad

>>105982226

just fuck misa from death note already

Anonymous

7/22/2025, 12:18:42 AM

No.105982275

[Report]

>>105982448

>>105982125

stop posting pictures of me

>>105982263

Most people posting here weren't even born by the time everyone already forgot about Death Note.

>>105982263

Born too late to have gotten a good wife, born too early to be fucking AI robots

All that's left for me is to lift and try to pull another cosplayer, but one that's less crazy than the one who dressed up as Loona then told me she had a past as a hooker and was baby crazy

Anonymous

7/22/2025, 12:21:59 AM

No.105982307

[Report]

>>105982318

>>105982276

>Death Note started airing in 2006.

Dear god.

Anonymous

7/22/2025, 12:23:30 AM

No.105982318

[Report]

>>105982307

>>105982276

can you guys fuck off, I'm here to feel young again

Anonymous

7/22/2025, 12:24:12 AM

No.105982323

[Report]

>>105982373

>>105982287

>cosplaying as 'Luna'

>past hooker

>baby crazy

RUN! RUUUUUNNNNNN!

Anonymous

7/22/2025, 12:26:05 AM

No.105982346

[Report]

>>105982373

>>105982287

So how much did you owe for child support payments?

Anonymous

7/22/2025, 12:27:40 AM

No.105982364

[Report]

>>105981129

looga booba and shallowcervix mixtureofthighs

>>105974561

>>105974459

Anonymous

7/22/2025, 12:28:54 AM

No.105982373

[Report]

>>105982323

I did, which she didn't forgive me for, then tried to get with my friend, and when that didn't work I think she ended up hating him too.

The head was good though, and if she had been as kinky as she claimed she might've snared me.

>>105982346

Fortunately no babies were possible using the delivery route we used

>>105982161

>3+ months just to see if your changes have any meaningful effects

>changes that may be beneficial are aborted early, possibly because it was too early to reach a threshold where there would be visible improvements

I came across this image and had a laugh.

I'm on neither side of the mikuani war btw.

Anonymous

7/22/2025, 12:34:20 AM

No.105982429

[Report]

>>105982418

I'm neither side of it either. I just like to fan the flames of it, and watch as people who care angrily go at it.

Anonymous

7/22/2025, 12:36:15 AM

No.105982448

[Report]

>>105982520

i wish erp trannies would leave /lmg/ im not here to see this sick shit

Anonymous

7/22/2025, 12:39:53 AM

No.105982475

[Report]

>>105982452

you do realize you are on /g/ right?

>>105982418

>mikuani war

There is no mikuani war, there's one resident schizo who's entire self worth is built around being this general's troll, and he's realized that the latest thing he can get a reaction out of people is fomenting some artificial conflict, like when he'd samefag for 12 posts arguing about whether deepseek was local and then post blacked porn when he got called out.

Anonymous

7/22/2025, 12:40:29 AM

No.105982480

[Report]

>>105982418

>I came across this image

Did you clean your screen?

Anonymous

7/22/2025, 12:40:34 AM

No.105982482

[Report]

>>105982388

Deepseek was proof that local could be good, Kimi proved it wasn't a fluke.

Anonymous

7/22/2025, 12:42:03 AM

No.105982494

[Report]

>>105982601

>>105982452

this sir, /lmg/ could be a leading place for productivety and agentes and mcp who create stunning solution by pushing vibe coding further with our years of ai experience

we could create the true e = mc + ai and turn it into e = mc^local_ai

>>105982477

The war is real, and Ani is our new queen. Miku got BTFO so bad that even her defenders are having her cosplay as Ani.

It's over.

Anonymous

7/22/2025, 12:43:32 AM

No.105982506

[Report]

>>105982276

Death note is so old that I watched it like 6 years after it aired and I thought light did nothing wrong did everything correct. and then I rewatched it like 10 years after that and realized that both light and L are fucking psychos. The ending with Ryuk was also great for me the second time. He basically saw a retard slapfight and got everything he wanted out of it. In a way it is a lot like mikufaggots and antimiku faggots.

Anonymous

7/22/2025, 12:45:33 AM

No.105982520

[Report]

>>105982448

how did you even find me what the fuck man

Anonymous

7/22/2025, 12:48:28 AM

No.105982546

[Report]

>>105982477

You are:

>people who care angrily go at it.

>>105982452

I think there should be an erp general so this thread can be specific to ... technology ...

Anonymous

7/22/2025, 12:48:52 AM

No.105982550

[Report]

>>105982590

>>105982452

ERP, especially that involving the most depraved fetishes, is the penultimate delineation of where information becomes knowledge. Sex and sexuality are a part of life and furthermore a part of the human condition. You're mentally ill if it bothers you that much.

Anonymous

7/22/2025, 12:48:55 AM

No.105982553

[Report]

/lmg/ = local mikus general

>>105982501

I think the main issue with Miku is that she has no tits and appeals only to actual pedophiles

Anonymous

7/22/2025, 12:50:44 AM

No.105982573

[Report]

I'm making my own python frontend to deal with llama-server. I mean I want to simulate old interactive fiction game and for this I need to be using terminal.

ST (or its UI) is actually way too complicated for what it is - it just adds bunch of strings together and then submits them onward...

Anonymous

7/22/2025, 12:50:51 AM

No.105982574

[Report]

>>105982501

I will accept ani when elon opensources her.

>>105982550

>penultimate delineation of where information becomes knowledge

I do hope you're being facetious

Anonymous

7/22/2025, 12:52:17 AM

No.105982594

[Report]

>>105982684

>>105982567

>ani is for: sex

>miku is for: detecting pedos

u mad?

Anonymous

7/22/2025, 12:52:26 AM

No.105982595

[Report]

>>105982561

I think you're on to something.

Anonymous

7/22/2025, 12:52:35 AM

No.105982596

[Report]

>>105982388

good luck, bring ai gf soon ok

Anonymous

7/22/2025, 12:52:53 AM

No.105982599

[Report]

>>105982610

>>105982263

Witnessed

>>105982226

I'm sure you could pay someone.

>>105982418

lol I'm borrowing that one.

Anonymous

7/22/2025, 12:52:56 AM

No.105982600

[Report]

>>105982622

>>105982590

I think you're being shallow and pedantic

Anonymous

7/22/2025, 12:53:06 AM

No.105982601

[Report]

>>105982494

>e = mc + ai

Kek, thanks for reminding me of that.

Anonymous

7/22/2025, 12:53:32 AM

No.105982610

[Report]

>>105982632

>>105982599

>I'm sure you could pay someone.

That exists?

I forgot this thread is useless for any sort of constrive discussion. My bad.

Anonymous

7/22/2025, 12:54:10 AM

No.105982619

[Report]

>>105982590

>penultimate

no it is just DSP posting on his mobile phone while streaming

Anonymous

7/22/2025, 12:54:20 AM

No.105982622

[Report]

>>105982600

I'm not original anon you replied to, idc if people want to erp or talk about it here

Anonymous

7/22/2025, 12:55:07 AM

No.105982631

[Report]

>>105982076

>Not sure why it didn't work this time.

I forgot what error I got exactly, but I remember that the regex couldn't match the previous thread id.

>I updated the rentry with your version.

And you also bumped the version, neat, thank you.

Anonymous

7/22/2025, 12:55:28 AM

No.105982632

[Report]

>>105982649

>>105982610

The world's oldest profession anon.

Anonymous

7/22/2025, 12:55:41 AM

No.105982634

[Report]

>>105982616

The Guilty Gear thread is two blocks down.

Anonymous

7/22/2025, 12:56:28 AM

No.105982645

[Report]

Anonymous

7/22/2025, 12:56:36 AM

No.105982649

[Report]

>>105982673

>>105982632

Is it worth it? It seems like it'd take away all the sexiness. Unless she can pretend to be dominant and kinky, convincingly.

Anonymous

7/22/2025, 12:57:57 AM

No.105982660

[Report]

>>105982418

must say, I never cared for miku but I quite like this art style

Anonymous

7/22/2025, 12:59:01 AM

No.105982673

[Report]

>>105982649

There's a reason the expression Post Nut Clarity exists.

For anything further on prostitution, I suggest >>>/a/ or >>>/trv/

Anonymous

7/22/2025, 12:59:02 AM

No.105982675

[Report]

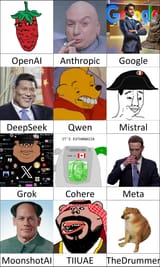

>>105982702

Changelog:

>Google redeemed

>MoonshotAI added as Zhong Xina("You can't see me"/unexpected newcomer)

>Added two retards to fill up the row(Drummer has more chance at making AGI than Meta)

>>105982548

I agree, but the problem is the technology version would be too slow to survive /g/...

>>105982594

>ani prompt literally describes her like a 14 year old grimes

i... uh... anon...

Anonymous

7/22/2025, 1:02:00 AM

No.105982699

[Report]

>>105982684

/mu/ oldfags, we won

Anonymous

7/22/2025, 1:02:43 AM

No.105982702

[Report]

>>105982675

>Drummer has more chance at making AGI than Meta

kek

>>105982683

You're probably right. Someone should do an analysis on the percentage of on-topic posts here lol

Anonymous

7/22/2025, 1:08:06 AM

No.105982739

[Report]

>>105982774

>>105982715

I was only off topic because I was waiting for FedEx to delivery my Epyc 7763, an upgrade over my 7352. Now that it's arrived it's time to swap it.

Anonymous

7/22/2025, 1:08:48 AM

No.105982745

[Report]

>>105982774

>>105982715

We need our shitposters to keep the thread bumped while we wait for new models.

Anonymous

7/22/2025, 1:11:46 AM

No.105982770

[Report]

>>105982783

Confession: I shitpost as both sides for fun

Anonymous

7/22/2025, 1:12:11 AM

No.105982774

[Report]

>>105982745

kek valid

>>105982739

nice, enjoy anon

Anonymous

7/22/2025, 1:13:15 AM

No.105982783

[Report]

>>105982770

shhh don't tell them

Anonymous

7/22/2025, 1:14:42 AM

No.105982796

[Report]

>>105982836

>so many posts about people not giving a shit about Miku

This really was a troon instigated terror wasn't it?

Anonymous

7/22/2025, 1:15:42 AM

No.105982804

[Report]

>>105982548

I don't want to jump between two threads after I git pull and nothing works.

Anonymous

7/22/2025, 1:17:22 AM

No.105982822

[Report]

>>105982808

ahh yes thank you for directing me to the 3 /aicg/ threads

Anonymous

7/22/2025, 1:18:21 AM

No.105982829

[Report]

>>105982477

correct there is just 2MW

got real shit to do don't have time for /lmg/ keep missing all the image days

>>105982796

I like miku

Not a troon, not a fan of troons, no idea why that one guy keeps trying to associate. miku with that

Anonymous

7/22/2025, 1:19:41 AM

No.105982839

[Report]

>>105982851

>>105982836

>no migu reflection

it's so over

Anonymous

7/22/2025, 1:20:32 AM

No.105982846

[Report]

>>105982849

>>105982836

Cuda dev likes miku and loves jart. Janny tranny loves miku. OG miku card posted ITT years ago had said that the character is a troon. Finally Hatsune Miku says trans rights.

Anonymous

7/22/2025, 1:21:11 AM

No.105982849

[Report]

>>105982846

>OG miku card posted ITT years ago had said that the character is a troon.

sounds fake to me

Anonymous

7/22/2025, 1:21:23 AM

No.105982851

[Report]

>>105982839

Humanslop. Any image gen model wouldn't have made this error.

Anonymous

7/22/2025, 1:22:51 AM

No.105982860

[Report]

me when I make a thread and say something then reference myself in the future using my own post as proof of the same point I'm making

Anonymous

7/22/2025, 1:23:23 AM

No.105982868

[Report]

>>105982808

>/aicg/

They don't care about local stuff, even less so about technology, and that's okay.

But it is not the place I would like to migrate to.

Thinking about it, I would even prefer a board without images. That would prevent so much annoying and off-topic spam.

LOOK AT THIS THING, YOU FUCKS!

>>105982638

Anonymous

7/22/2025, 1:25:09 AM

No.105982887

[Report]

>>105982869

Okay, I'll pull that instead. *rezips dick*

Anonymous

7/22/2025, 1:26:58 AM

No.105982896

[Report]

>>105982638

>>105982869

damn that looks rad. does it work with llama tho xd

>>105982869

Where can I drag and drop my dataset on mistral nemo? No webui? No easy setup? Still needs 10 GPUs? Useless then.

Anonymous

7/22/2025, 1:28:41 AM

No.105982911

[Report]

drag and drop myself on top of ani

Anonymous

7/22/2025, 1:30:09 AM

No.105982920

[Report]

>>105982869

>outperforms the strongest training LoRAs by up to 30% on zero-shot common-sense reasoning, math, coding, and multimodal benchmarks, and generalizes robustly across domains, all requiring only unlabeled data prompts.

So uhhhh.... Talking about the actual application which is sex.... And considering for example drummer whose models give 0% improvement to model sex... The improvement with this is 0% * 130%?

Anonymous

7/22/2025, 1:30:10 AM

No.105982921

[Report]

>>105982869

@Drummer lfg to the moon!

Anonymous

7/22/2025, 1:30:37 AM

No.105982929

[Report]

>>105982952

>>105982869

Where is the exe?

Anonymous

7/22/2025, 1:33:53 AM

No.105982952

[Report]

>>105982897

>>105982929

This. Provide proper implementation or your shit will stay irrelevant.

Anonymous

7/22/2025, 1:34:29 AM

No.105982954

[Report]

>>105982897

Yeah I concur. Someone should just drag and drop literotica on that and come back with the result.

Anonymous

7/22/2025, 1:35:55 AM

No.105982965

[Report]

>>105982997

>>105982869

>The models you may need for DnD: Qwen/Qwen2.5-0.5/1.5/7B-Instruct, Qwen/Qwen2.5-VL-3B-Instruct, sentence-transformers/all-MiniLM-L12-v2, google-t5/t5-base

Haven't read the paper, does it work for larger parameter counts?

Anonymous

7/22/2025, 1:38:48 AM

No.105982987

[Report]

>>105982869

someone turned drummer into software

Anonymous

7/22/2025, 1:40:14 AM

No.105982997

[Report]

>>105982965

They claim it scales well from 0.5 to 7b. If that's true it probably scales above that.

What happened to ikawrakow and ik_llama.cpp?

Anonymous

7/22/2025, 1:41:35 AM

No.105983005

[Report]

>>105983148

>>105983002

Nobody knows. There's only gossip.

Anonymous

7/22/2025, 1:41:37 AM

No.105983006

[Report]

>>105982638

https://www.youtube.com/watch?v=XpNdGvbwtf0

PUT ERP IN MY NEMO! I WANT NALA AND MESUGAKI! DRAG AND DROP!

Anonymous

7/22/2025, 1:42:29 AM

No.105983011

[Report]

>>105983002

nothing haha I am sure feeling sleepy haha

Anonymous

7/22/2025, 1:45:49 AM

No.105983029

[Report]

>>105983002

gerganov mafia paid him a visit

Anonymous

7/22/2025, 1:48:13 AM

No.105983043

[Report]

>>105983002

a very happy thing

may it never resurrect

Anonymous

7/22/2025, 1:53:10 AM

No.105983081

[Report]

>>105983102

>>105982684

proof? this is important

Anonymous

7/22/2025, 1:55:55 AM

No.105983102

[Report]

>>105983081

I have leaked prompts saved but they're on an nvme drive in a server I gutted so you'll have to ask extra nicely if you want them

Anonymous

7/22/2025, 1:56:20 AM

No.105983107

[Report]

>>105983234

Anonymous

7/22/2025, 1:56:49 AM

No.105983112

[Report]

>>105983148

>>105983002

Github deleted his account (and with it, all his repos and contributions),and since none of his old PR's or whatever were turned into ghosts, he can't have just done it himself.

Other than that, it's total speculation.

Anonymous

7/22/2025, 1:58:42 AM

No.105983126

[Report]

>>105982684

Ani is 22 retard

Anonymous

7/22/2025, 2:01:17 AM

No.105983148

[Report]

>>105983005

>>105983112

Ah I see, thank you.

Anonymous

7/22/2025, 2:02:19 AM

No.105983161

[Report]

>>105983209

Do Qwenqucks *really* expect people to believe the benchmark scores?

Anonymous

7/22/2025, 2:09:00 AM

No.105983209

[Report]

>>105983161

Anyone with half a lick of sense knows everything is benchmaxxed to hell and back, especially qwen models.

Doesn't mean they aren't still decent.

It remains to be seen if the instruct is any better than the hybrid, but I'll compare 'em once I'm done downloading - I'm using the hybrid right now.

Anonymous

7/22/2025, 2:09:34 AM

No.105983212

[Report]

128gb bros... 235 q3_k_l quant is here

New Qwen3-235B-A22B-Instruct-2507 knows Teto's birthday unlike the previous one, and kinda knows what correction is in addition to mesugaki. But when asked to take the role of a mesugaki without a definition in context (not shown in pic), it does a generic slutty girl impression without any of the expected traits.

From short tests it's definitely better than the previous 235b when it comes to knowledge.

Don't think it'll replace Deepseek V3 0324 IQ1 for me at this rate but I'll try it a little more.

Anonymous

7/22/2025, 2:12:21 AM

No.105983234

[Report]

>>105983107

Breddy cool.

Shame if this actually reaches market it will be 99% for people to jerk off horsecocks in VRchat rather than anything interesting or engaging.

Anonymous

7/22/2025, 2:13:37 AM

No.105983244

[Report]

>>105983327

>>105982561

Well, Anon-kun, that's why there's MIgu, who has a fat ass and big tits. Always been that way, long before Grok started pretending to be a girl.

Anonymous

7/22/2025, 2:13:57 AM

No.105983248

[Report]

>>105983309

Anonymous

7/22/2025, 2:14:45 AM

No.105983256

[Report]

>>105983269

>>105983219

what is up with that highlighting

Anonymous

7/22/2025, 2:16:26 AM

No.105983266

[Report]

>>105982638

Brainlet here. Can this be used to add knowledge to a model?

Anonymous

7/22/2025, 2:16:32 AM

No.105983269

[Report]

>>105983256

Highlighted tokens in Mikupad are ones generated by the model. By default, more reddish = more perplexity/lower probability of having been chosen.

Anonymous

7/22/2025, 2:19:26 AM

No.105983292

[Report]

>>105982567

Kikes diddle kids and you fap to something a kike would love.

Anonymous

7/22/2025, 2:21:30 AM

No.105983309

[Report]

>>105983248

we are so back

Anonymous

7/22/2025, 2:22:27 AM

No.105983314

[Report]

>>105983219

>Qwen3-235B-A22B-Instruct-2507

download download download download

Anonymous

7/22/2025, 2:23:51 AM

No.105983324

[Report]

>>105983552

>>105983219

wadufak where goofs daniel

Anonymous

7/22/2025, 2:24:09 AM

No.105983327

[Report]

>>105983244

Death to the slampig as well

Anonymous

7/22/2025, 2:30:15 AM

No.105983356

[Report]

>>105983362

Anonymous

7/22/2025, 2:31:41 AM

No.105983360

[Report]

>>105983391

This person said this regarding copyright content

>everything in the video, the script, visuals, editing, and overall concept, was created entirely by me, the only AI-generated element is the voice, which I used as a narration tool.

How does something distinguish these types of videos from copyrighted ones? does this content not look like the average AI slop to you? or is he just BSing???

Anonymous

7/22/2025, 2:32:04 AM

No.105983362

[Report]

>>105983356

Miku you have work tomorrow you shouldn't be drinking

Anonymous

7/22/2025, 2:38:49 AM

No.105983391

[Report]

>>105983444

>>105983360

>the only AI-generated element is the voice, which I used as a narration tool.

>the mascot is clearly piss-tined gptslop too

if he's already lying about this then the rest is bullshit as well

Anonymous

7/22/2025, 2:47:57 AM

No.105983444

[Report]

>>105983501

>>105983391

>chatGPT invented piss filter

(you)

is this the right place to as for cuddling anime girls

Anonymous

7/22/2025, 2:50:36 AM

No.105983464

[Report]

>>105974459

I like this Luka

Anonymous

7/22/2025, 2:51:13 AM

No.105983467

[Report]

>>105983546

damn I trusted zed too much. I'm no better than a broccoli headed zoomer. I didn't use source control guys oh god oh fuck

Anonymous

7/22/2025, 2:55:59 AM

No.105983501

[Report]

>>105983444

this, it's clearly hand drawn and resembles every chatgpt image ever as a stylistic choice

Anonymous

7/22/2025, 3:02:15 AM

No.105983546

[Report]

>>105983589

>>105983467

>I didn't use source control guys oh god oh fuck

Let this pain be a lesson to you.

Anonymous

7/22/2025, 3:06:49 AM

No.105983572

[Report]

>>105983667

>>105983552

Get ready for them to be re-uploaded 6 times in the next 3 hours, at least one time being only like 6gb in size.

Anonymous

7/22/2025, 3:09:46 AM

No.105983589

[Report]

>>105983602

>>105983546

I will commit seppuku by robot, I'm telling my local model to turn on the toaster

Anonymous

7/22/2025, 3:11:32 AM

No.105983602

[Report]

>>105983589

don't worry guys, it doesn't know how to call tools

Anonymous

7/22/2025, 3:20:48 AM

No.105983667

[Report]

>>105983704

>>105983572

It's not a new architecture though so I don't think it's that likely, but I guess you never know with Unslot.

Anonymous

7/22/2025, 3:26:51 AM

No.105983700

[Report]

Anonymous

7/22/2025, 3:27:49 AM

No.105983704

[Report]

>>105983714

>>105983667

Dudes have absolutely no self or version control, their entire process is just 'who can slap shit in the LFS upload folder first?'

Anonymous

7/22/2025, 3:28:46 AM

No.105983714

[Report]

It looks like Daniel just uploaded the good old fucked up IQ quants that he always had to delete in the past. He never fixed his script.

looooool

Anonymous

7/22/2025, 3:36:00 AM

No.105983767

[Report]

>>105983754

>1GB 235B IQ2_M

return of the classic, lmao. Vramlets are back

Anonymous

7/22/2025, 3:39:52 AM

No.105983790

[Report]

>>105983824

OpenAI and Google are cool and all... but when will Meta announce their IMO medal?

Anonymous

7/22/2025, 3:45:24 AM

No.105983824

[Report]

>>105983790

wait for llama 4 Behemoth deepthink

Anonymous

7/22/2025, 3:54:21 AM

No.105983871

[Report]

>>105983552

i hate daniel so much

Anonymous

7/22/2025, 3:55:39 AM

No.105983880

[Report]

>>105983754

just get the bartowski quants

Anonymous

7/22/2025, 3:57:05 AM

No.105983892

[Report]

>>105983965

>>10598379

>imo

Meta INVENTED the potato model

Anonymous

7/22/2025, 4:06:32 AM

No.105983941

[Report]

>>105983965

>>10598241

it's out of sock, any update on this anon?

Anonymous

7/22/2025, 4:09:38 AM

No.105983960

[Report]

Anonymous

7/22/2025, 4:10:14 AM

No.105983964

[Report]

>>105983935

Just pad it out to 120k tokens and it'll be fine

Anonymous

7/22/2025, 4:10:26 AM

No.105983965

[Report]

>>105983971

Anonymous

7/22/2025, 4:11:12 AM

No.105983969

[Report]

>>105983935

You don't NEED more than 2k tokens of context.

Anonymous

7/22/2025, 4:11:34 AM

No.105983971

[Report]

>>105983965

Static knives fracture thought.

Anonymous

7/22/2025, 4:12:00 AM

No.105983976

[Report]

Anonymous

7/22/2025, 4:12:26 AM

No.105983978

[Report]

>>105983935

Qwen really is just chink meta. I don't know why you'd expect anything from them.

Anonymous

7/22/2025, 4:12:31 AM

No.105983979

[Report]

>>105983935

It's not a thinking model. Wait him.

Anonymous

7/22/2025, 4:15:40 AM

No.105983990

[Report]

I just accidentally spent 12$ on opus 4 thinking prompts and it was actually worth it

Anonymous

7/22/2025, 4:16:05 AM

No.105983992

[Report]

>>105984002

qwen qwill you learn that your actions have conseqwences

Anonymous

7/22/2025, 4:16:09 AM

No.105983994

[Report]

>>105984015

>>105983935