/lmg/ - Local Models General

►Recent Highlights from the Previous Thread:

>>106001651

--Papers:

>106004422

--Local AI waifu development challenges and skepticism toward visual-first implementations:

>106001822 >106002467 >106002483 >106002530 >106002750 >106002507 >106002544 >106002576 >106002539 >106002564 >106002591 >106002687 >106002661 >106002811 >106002725

--Qwen3-235B-A22B-2507 offers efficient inference with competitive performance:

>106003167 >106003190 >106003843

--Local TTS tools approach but don't match ElevenLabs quality yet:

>106001910 >106001955 >106001965

--Frustration with LLM dominance and lack of architectural innovation in corporate AGI efforts:

>106003383

--Difficulty suppressing newlines via logit bias due to tokenization and model behavior quirks:

>106003868 >106003898 >106003910

--AI analysis identifies ls_3 binary as malicious with backdoor and privilege escalation capabilities:

>106002258

--Debate over uncensored models and flawed censorship testing methodologies:

>106002782 >106002806 >106002856 >106003665 >106004021 >106004049

--Struggling to improve inference speed by offloading Qwen3 MoE experts to smaller GPU:

>106001836 >106001948 >106002046

--Qwen3-235B shows improved freedom but still suffers from overfitting:

>106002119 >106002161

--AMD's AI hardware is competitive but held back by software:

>106001704 >106001724

--Stop token configuration tradeoffs in local LLM chat scripting:

>106004068

--Miku (free space):

>106001717 >106001732 >106001923 >106001981 >106002168 >106002491 >106002541 >106002659 >106002722 >106003620 >106004006 >106005098 >106005225 >106005408

►Recent Highlight Posts from the Previous Thread:

>>106002148

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

>>106005678

I remember this Miku. They have been in this standoff for a long time.

Anonymous

7/24/2025, 5:38:11 AM

No.106005705

[Report]

>>106005709

Based

>>106005673 (OP)

>>106005678

>>106005702

>>106005705

vocaloidfag posting porn in /ldg/:

>>105715769

It was up for hours while anyone keking on troons or niggers gets deleted in seconds, talk about double standards and selective moderation:

https://desuarchive.org/g/thread/104414999/#q104418525

https://desuarchive.org/g/thread/104414999/#q104418574

he makes ryona picture:

>>105714003 of some random generic anime girl the different anon posted earlier:

>>105704741 (could be the tranny playing both sides)

tests LLM-generated bait poster bot for better shitflinging in threads:

>>105884523 great example:

>>106001651

admits spamming /v/ with AI slop:

https://desuarchive.org/g/thread/103462620/#103473545

Funny /r9k/ thread:

https://desuarchive.org/r9k/thread/81611346/

The Makise Kurisu damage control screencap (day earlier) is fake btw, no matches to be found, see

https://desuarchive.org/g/thread/105698912/#q105704210 janny deleted post quickly.

TLDR: vocaloid troon / janitor protects resident avatarfags and deletes everyone who outs him, making the general his little personal safespace with samefagging. Is prone to screech "Go back to teh POL!" when someone posts something mildly political about language models or experiments around topic.

As said in previous thread(s)

>>105716637 I remind you that cudadev of llama.cpp (JohannesGaessler on github) has endorsed spamming. That's it.

He also endorsed hitting that feminine jart bussy a bit later on. QRD on Jart - The code stealing tranny:

https://rentry.org/jarted

xis ai slop profiles

https://x.com/brittle_404

https://x.com/404_brittle

https://www.pixiv.net/en/users/97264270

https://civitai.com/user/inpaint/models

Anonymous

7/24/2025, 5:39:43 AM

No.106005717

[Report]

Boo hoo

Anonymous

7/24/2025, 5:40:32 AM

No.106005725

[Report]

>>106005709

>(could be the tranny playing both sides)

that's you

>>106005702

I'm betting all my money on miku!

Anonymous

7/24/2025, 5:41:22 AM

No.106005733

[Report]

>>106008233

Has anyone tested qwen coder for coherency at length?

Anonymous

7/24/2025, 5:41:48 AM

No.106005738

[Report]

Anonymous

7/24/2025, 5:44:26 AM

No.106005754

[Report]

>>106005794

>>106005728

Yep and I'm sure he feels clever

Anonymous

7/24/2025, 5:44:52 AM

No.106005759

[Report]

Anonymous

7/24/2025, 5:48:40 AM

No.106005794

[Report]

>>106005817

>>106005728

>>106005754

I can tell you was in favor of the IP counter removal.

Anonymous

7/24/2025, 5:50:29 AM

No.106005807

[Report]

>>106005739

holy shit you're all saving some old ones

spoats

post the migu archive already

Anonymous

7/24/2025, 5:51:53 AM

No.106005817

[Report]

>>106005864

>>106005794

>I can tell you was

good morning saar

I'm looking into this whole abliteration technique, is that a dead end or just not used correctly? mlabonne is a grifter, but I wonder if that can produce something useful

are yall ready for AGI? This time it's the big strawberry

Anonymous

7/24/2025, 5:58:12 AM

No.106005864

[Report]

>>106005817

ESL and see nothing wrong with it, though sometimes its kinda hard to tell stuff.

Anyway, i know you were in favor of that event for obvious reasons, any debate later on is pointless because y'all dishonest on arrival here.

Anonymous

7/24/2025, 5:58:50 AM

No.106005869

[Report]

>>106005844

It's funny how he talks like a slightly gayer version of Elon Musk.

What local model is best suited for RPing official /lmg/ mascot Hatsune Miku smugly mocking me for developing a (small) erection while sniffing her no-deodorant, post-gym-workout armpits?

Anonymous

7/24/2025, 6:02:56 AM

No.106005902

[Report]

>>106005926

>>106005883

Maybe Adults Only Image Board or Mental Institution, your pick.

Anonymous

7/24/2025, 6:03:35 AM

No.106005908

[Report]

>>106005926

>>106005883

you should use an asian model for authenticity, like qwen 3

you guys are smart

someone posted this in /ldg/:

new sota tts, with voice cloning

https://www.boson.ai/technologies/voice

https://github.com/boson-ai/higgs-audio

how can i use this in silly tavern?

Anonymous

7/24/2025, 6:05:23 AM

No.106005919

[Report]

>>106005883

Chronoboros-33b, GPTQ quants for authenticity

>>106005902

But anon, she's fully-clothed. Are websites selling workout-gear adults-only?

>>106005908

But anon, I don't think Miku would randomly start speaking Chinese like Qwen.

Anonymous

7/24/2025, 6:07:56 AM

No.106005933

[Report]

>>106005926

only an asian model will understand the finer points of SPH

Anonymous

7/24/2025, 6:09:09 AM

No.106005943

[Report]

>>106005818

"only one direction" always seemed over-exagerated since abliterated models could still refuse. Removing a model's ability to express refusal also makes it too pliable to be fun for roleplay.

It was just something the lesswrong types could point at the scream for more safety, and now most safety is focused on heavily filtering pretraining datasets rather than adding refusals during alignments, which abliteration can't help with.

It would probably be more useful as a lora, rather then full weights with the ability to refuse permanently removed.

Anonymous

7/24/2025, 6:10:32 AM

No.106005952

[Report]

>>106005915

You are probably underage and as such, too stupid to even understand but it's all Python. Just export a string from ST and feed it into this new thing. I'm sure you have tons of free vram for all of this too.

Anonymous

7/24/2025, 6:11:22 AM

No.106005958

[Report]

>>106005926

>But anon, I don't think Miku would randomly start speaking Chinese like Qwen.

Qwen 3 models don't randomly start speaking Chinese. Check your settings.

Anonymous

7/24/2025, 6:11:35 AM

No.106005960

[Report]

>>106005915

>no finetuning

Into the trash it goes

Anonymous

7/24/2025, 6:13:04 AM

No.106005965

[Report]

I got banned for doxing for copypasta’ing schizo anons rant but not for spamming thats hilarious

>>106005926

The funniest thing about Qwen randomly throwing in a chinese character is that the past few times it's happened to me, it gave a translation in brackets immediately after, so it KNEW it shouldn't have done so but did anyway.

like

>{{char}} kneeled down like a 懇求者 (obsequious supplicant) before {{user}}

Anonymous

7/24/2025, 6:14:40 AM

No.106005974

[Report]

>>106005989

>>106005915

>sota voice cloner

Finally some good fucking food

>24 gb vram for optimal speed

dropped. local Ani or sesameAI with realtime interaction never ever.

Anonymous

7/24/2025, 6:14:55 AM

No.106005976

[Report]

>>106006045

>Qwen 3 moders don't landomry stalt speaking Chinese. Check youl settings. Arr hair gleat palty.

Anonymous

7/24/2025, 6:16:40 AM

No.106005984

[Report]

>>106005818

https://xcancel.com/tngtech/with_replies

these niggas made deepseek merges (((uncensoring))) it doing something similiar idfk about any of this shit but its the most releveant thing i can think of they do test which expert lights up during refusal with samples then swap them out supposedly their merges are popular idk :/ never tried em

Anonymous

7/24/2025, 6:17:12 AM

No.106005989

[Report]

>>106005974

>it uses autoregressive bloat

well

Anonymous

7/24/2025, 6:22:08 AM

No.106006012

[Report]

>>106006050

>>106005967

The thing with language is that some concepts don't fully translate into other languages, so the model had the idea but the words didn't quite match and were a mere approximation of the meaning.

>negotiating a contract early tomorrow with a UAE world model lab

>can't get to sleep

why is it always like this? god damn. I know I'm probably going to get pop quizzed on autoregressive and I only messed with those tiny nvidia ones. shit

Anonymous

7/24/2025, 6:28:27 AM

No.106006045

[Report]

>>106006054

>>106005976

ov vey, pay for my jewish westerrn models instead, goy!

Anonymous

7/24/2025, 6:29:08 AM

No.106006048

[Report]

>>106005967

I assume that adding a well-chosen Chinese token to the context updates the self-attention in the "right" way, so that the model steers better towards what you're prompting for.

If the math really does work like this, it would dampen on the suggestion of

>>106002473 to save space by removing multilingual tokens, since the CJK vocabulary would be necessary for good quality outputs.

Anonymous

7/24/2025, 6:29:28 AM

No.106006050

[Report]

>>106006222

>>106006012

Qwen's training data must be a mess if the approximate translation isn't the most likely token instead of code switching being the most probable.

Anonymous

7/24/2025, 6:30:01 AM

No.106006053

[Report]

>>106006072

>>106006040

you don't have your usual inhibitions when lacking sleep

Anonymous

7/24/2025, 6:30:18 AM

No.106006054

[Report]

>>106006045

>pay

Anon, I just download models released by Our Lord and Savior TheDrummer for free instead.

Anonymous

7/24/2025, 6:32:57 AM

No.106006072

[Report]

>>106006140

>>106006053

t-thanks. y-you too

Anonymous

7/24/2025, 6:33:40 AM

No.106006075

[Report]

Ok

Anonymous

7/24/2025, 6:35:10 AM

No.106006082

[Report]

Cool

>>106006040

Just grift like you always do, no biggie

Anonymous

7/24/2025, 6:36:55 AM

No.106006097

[Report]

>>106006116

>>106006087

mad coz brokie

>>106006087

I rarely do. some schizo just does it for me 24/7 in an attempt to annoy people which is weird since he does it for free. you do you I guess

Anonymous

7/24/2025, 6:39:24 AM

No.106006116

[Report]

>>106006097

>Can only think of money

Least mindbroken zoomie

Anonymous

7/24/2025, 6:40:20 AM

No.106006125

[Report]

>>106006220

>>106006107

>upside down

That's kinda impressive.

Anonymous

7/24/2025, 6:42:24 AM

No.106006136

[Report]

>>106006220

>>106006107

on the flip side, your workflow is far more viable now than it was before with that megumin clip

that was ass

at least this is viable.

still, might want to crank the steps or else integrate controlnets or other motion hints because the resulting lines (for toon, not for realism) is still blurry during high motion.

if you solve that even I'd be paying attention.

right now, lora training only gets you so far, I've found that doubling frames does a tremendous job of cleaning up blurry toon style during motion at the obvious cost of doubling computation/halving training efficiency.

as a downside, Wan does 99% of the work for free, any tooling you do or do not provide is functionally meaningless as a thin wrapper over comfy.

Anonymous

7/24/2025, 6:42:46 AM

No.106006138

[Report]

>>106006220

>>106006107

So is ML fun for you? How retarded is the average /lmg/ anon compared to the guys on the market?

Anonymous

7/24/2025, 6:43:31 AM

No.106006140

[Report]

>>106006220

Anonymous

7/24/2025, 6:51:31 AM

No.106006192

[Report]

>>106006215

>>106005673 (OP)

Whatever happened with GLM-4 100b?

Anonymous

7/24/2025, 6:52:34 AM

No.106006196

[Report]

>>106006210

Anonymous

7/24/2025, 6:55:26 AM

No.106006210

[Report]

>>106006231

>>106006196

The fuck does this garbage have to do with local models? fuck off to aicg with your grokshit.

Why AI tends to hallucinate instead of saying "I don't know"? :(

Anonymous

7/24/2025, 6:56:13 AM

No.106006215

[Report]

>>106006192

two more weeks

Anonymous

7/24/2025, 6:56:40 AM

No.106006220

[Report]

>>106006125

it's not too hard. some sd1.x get it just fine

>>106006136

>on the flip side, your workflow is far more viable now than it was before with that megumin clip

the difference is there isn't much control at all. yeah you can use vace but you have to provide an already made animation in order to sell the effect. 99% of the time is tweening or 3d animation. anime varies too much with timing and spacing so you get really off-putting anime if you've seen the aniwan outputs in /sdg/.

>the resulting lines (for toon, not for realism) is still blurry during high motion.

if you solve that even I'd be paying attention.

it's mainly a distillation problem. with the distill, it's bearable gen times but you get that blur. without, it's good quality but takes forever to get a good gacha roll. a lot of the optimizations are really snake oils so I'd probably wait until the shit methods are gone.

>any tooling you do or do not provide is functionally meaningless as a thin wrapper over comfy.

that is the whole point of the project but with all local models. tooling has to go hand-in-hand with customization, not everything separated into all these separate fucking apps making it a clown show.

>>106006138

yes, I just think there is a lot of poor applied execution to get the most out of models. schizo is obviously retarded but my god, grifters everywhere. it's sickening. then you got rustfags, junior python devs that don't know shit and the small minority of people that actually know their shit. /ldg/ is, on average, bigger brained than some of these drooling sv retards.

>>106006140

ty!

Anonymous

7/24/2025, 6:56:45 AM

No.106006222

[Report]

>>106006050

Qwen benchmaxx on science/math/code, presumably in Chinese where code switching occurs frequently whenever a technical term is cited.

Not surprising that it learns to code switch on uncommon tokens, especially since these tokens are less represented in non-technical English texts anyway.

Anonymous

7/24/2025, 6:57:17 AM

No.106006231

[Report]

>>106006249

Anonymous

7/24/2025, 6:59:45 AM

No.106006249

[Report]

>>106006298

>>106006231

2/4 of those posts are extensively discussing local models, and 3/4 are at least mentioning them.

Your shit is a cloud model ranting about some 3rd world shit that has nothing to do with AI. Fuck off.

Anonymous

7/24/2025, 7:04:12 AM

No.106006277

[Report]

>>106006315

>>106006107

is Anistudio is a state worth checking out?

Anonymous

7/24/2025, 7:06:16 AM

No.106006289

[Report]

I'm trying to vibecode some simple php extensions with the new qwen3 coder at q8 and not getting very usable results yet.

Anyone manage to get it working well?

Anonymous

7/24/2025, 7:07:58 AM

No.106006298

[Report]

>>106006249

Your mental gymnastics showing weebcel

>>106006277

after I get hot reload plugins working with the entity and view manager it's still in a wrapper state but can be worked on without having to wait two minutes to rebuild or mess around with the python scripting. after node execution is in is when it would be worth it as a dep free way of just using diffusion models via vulkan. cuda backend is there, just have to add the rest. chroma is in since last week on dev and I think lumina is being worked on in the sdcpp repo. It's currently sitting at ~110mb binary and takes up 47mb of RAM during runtime which is about 10x less memory than comfy

>>106006315

so once the node execution is in you'll add llama.cpp?

Anonymous

7/24/2025, 7:25:57 AM

No.106006424

[Report]

>>106006471

>>106006315

careful, the major benefit of comfy is that updates can be miniscule changes to .py files instead of binary downloads

consider hot compilation, even locally, or interpreted.

Anonymous

7/24/2025, 7:26:51 AM

No.106006428

[Report]

>>106006393

I'm guessing he'll add an openai compatible API call framework so its backend agnostic

Anonymous

7/24/2025, 7:29:17 AM

No.106006443

[Report]

>>106006393

yeah that's the plan. I'm hoping some anons make some plugins for existing python tooling like xtts so it can interface with a simple video or audio editor as an example. the idea is it's built like a game engine editor but revolves around local models interacting with each other as nodes or entities (characters with llm/diffusion/3dgen, etc. as an example).

Anonymous

7/24/2025, 7:31:24 AM

No.106006460

[Report]

>>106006315

I'll take that as a not yet.

Anonymous

7/24/2025, 7:33:38 AM

No.106006471

[Report]

>>106006424

yes I thought about this. I remember having to redownload chainner over and over and it sucked. I'll probably do some sort of update launcher that checks for new releases or nightly updates. That and installers since I think I need a c++ redistributable dll for windows to work. I wish the nvtoolkit installer wasn't 3gb otherwise I'd pack it in there as well

>>106005844

If GPT-5 is so good why didn't he use it to write a better speach for him? The all speak like 3rd graders writing their first essays

Anonymous

7/24/2025, 8:08:53 AM

No.106006675

[Report]

>>106006701

>>106006656

Yeah all these AI grifters have a “if you’re psychic, why haven’t you won the lottery” problem

Anonymous

7/24/2025, 8:12:08 AM

No.106006691

[Report]

if that CHAT GPT-5 is damn good, why can't these wyte folks use it to make money instead of being filthy welfare queens

Anonymous

7/24/2025, 8:13:37 AM

No.106006696

[Report]

>>106006698

>>106006656

>The all speak like 3rd graders writing their first essays

They do it intentionally because it makes them relatable to the younger generation. Same with typing all lower case.

Anonymous

7/24/2025, 8:14:30 AM

No.106006698

[Report]

>>106006931

>>106006696

There will very soon be a point at which typing all lowercase is no longer cool because your mom does it.

Anonymous

7/24/2025, 8:15:09 AM

No.106006701

[Report]

>>106006675

we just need 2 more weeks, 6.75 quadrillion zimbabwean dollars and everything single clean drop of water in America

Anonymous

7/24/2025, 8:16:40 AM

No.106006711

[Report]

>>106006761

>>106006212

Models are rewarded for giving answers, much like students who try to make shit up when they don't know in order to pass an exam

>>106006212

>>106006711

This is a serious issue and with all the focus on 'safety' it's kind of unbelievable that llms will just make up shit even in a context where it could lead to actual harm, and instead 'safety' is focused on protecting text from abuse.

Anonymous

7/24/2025, 8:28:04 AM

No.106006778

[Report]

>>106006212

I don't think there's a clear distinction to them when they don't know. They're always just saying the best next token I don't think they ever see that the statement they are making at a whole is low certainty? But it is also related to how they are trained. During training there is a lot they don't know but they are supposed to give their best go so they can get feedback and give a better answer next time. Maybe you could give a model a second stage of pre-training/fine-tuning where you ask it some questions that you know you didn't teach it the answer to and train it to say idk in those scenarios?

Anonymous

7/24/2025, 8:33:02 AM

No.106006814

[Report]

>>106006761

It's much easier to substitute actual safety with bad word filtering, especially in the political climate when GPT-3 was released. A model stating that trans people are mentally ill would have been the worst imaginable thing at that time. The timing could not have been worse

>>106006761

It's more important to force people through repetition to not take for granted what the LLM said is correct. There's plenty of uses for these models that don't require you accept there statements as facts. Like when the news sources have a major conflicts of interest, are you better off trying to get the news outlets to be respectable or teach people to be skeptical? The latter, but for some reason we really don't push it.

Anonymous

7/24/2025, 8:37:40 AM

No.106006840

[Report]

The worse part is that safety shit poisoned the internet forever and the future models are training on early safetyslopped content. Sam took a dump in the well

Anonymous

7/24/2025, 8:41:52 AM

No.106006852

[Report]

>>106006877

>>106006838

Most people do not have the mental capacity for critical thinking, and if you try to force it, you will get flat earthers and chemtrails believers

Anonymous

7/24/2025, 8:44:52 AM

No.106006863

[Report]

>>106006838

Because that's the exact opposite of what those funding the training of these models want. Imagine a world where you go ask Google about some recent events and the helpful assistant tells you what need to know and how you should feel about it, and if you get any source links at all it would only be from approved content providers. Changing the narrivate after the fact and getting the average person on board becomes trivial this way as well.

Anonymous

7/24/2025, 8:48:13 AM

No.106006877

[Report]

>>106006838

That would be a given, but

>>106006852 is absolutely correct.

People used to take Wikipedia at face value. Not a great idea and open to bias, but at least there were citations that you could review in support information it did have.

Then people started taking google's instant answers at face value, an advertising platform

Then people started learning from Tiktok, a spyware+advertising platform filled with the blind leading the blind, stupid leading stupid

Now people just ask chatgpt/grok anything they like and take it at face value

The majority will ALWAYS accept whatever is the easiest, and most convenient solution to any problem.

>>106006887

So it's like that minecraft thing?

Anonymous

7/24/2025, 8:53:27 AM

No.106006906

[Report]

>>106006897

The Minecraft thing attempted to simulate physics and game mechanics too so you could actually play the game. This is just a video generation model with a movable camera.

Anonymous

7/24/2025, 8:55:38 AM

No.106006922

[Report]

Anonymous

7/24/2025, 8:57:22 AM

No.106006931

[Report]

Anonymous

7/24/2025, 8:59:26 AM

No.106006942

[Report]

>>106006897

It's like youtube videos where you can move around in a 3d space, except you will be able to use it to create the video itself and walk around to get close ups of characters' feet.

Trying to get up to date after a hot minute gone.

What do people recommend for RP slop on a 24gb 3090 if I'm not too worried about speed?

Mistral Small and Nemo 12b from the OP rentry both seem good from minimal testing, I don't know if one comes off as immediately 'smarter' from testing - should I take the extra context from Nemo or Mistral's extra parameters?

I've also seen a bit of talk for using Gemma 3.

>>106006945

Small is significantly smarter than Nemo but still dumb, it will become more noticeable when things like positioning and anatomy are important, and as context increases

Mistral Small and Gemma 27 are the current best that fit in a single 24GB card, Gemma is much better at writing dialog and is more creative, but will resist writing sex scenes hard without a jailbreak prompt.

The best solution is probably to use Gemma for most of the RP and switch to Mistral Small when you want to write a sex scene. If your RP slop is 100% sex then just use Small.

Anonymous

7/24/2025, 9:05:37 AM

No.106006968

[Report]

>>106006985

>>106006963

Thanks. Should I take it that the ablits for Gemma 27 make it go braindead? I tested it and it did get way less censored, but I haven't tested how smart it is beyond that.

>>106006945

We hoard RAM these days. Get yourself 256GB, and you're good for R1, Kimi, and Qwen

>but muh q4 is the minimum

Not so true with massive undertrained MoE models

Anonymous

7/24/2025, 9:07:28 AM

No.106006979

[Report]

>>106007003

>>106006212

Tell them to respond "I don't know" if they're not sure. That helps.

https://arxiv.org/abs/2506.09038

Anonymous

7/24/2025, 9:08:21 AM

No.106006985

[Report]

>>106007182

>>106006968

Not quite braindead, more like brain farts where it's mostly okay but some output will occasionally just be complete nonsense. Jailbreak prompts tend to work better for me, most posters here seem to have the same opinion. You can check the /g/ archives, plenty of people have posted jailbreak prompts for gemma 3.

Anonymous

7/24/2025, 9:08:35 AM

No.106006988

[Report]

>>106007091

>>106006973

Does the RAM offloading work well enough? I was considering hopping on that train pretty soon since I have a bit of cash saved up. 256gb is way less than I thought I needed based on the guides I've seen so I'll look into that.

>>106006973

Is a single 3090 + rammaxxing really going to be enough for even Q1s of those models at decent speeds? Are there even consumer motherboards that support more than 192GB?

Anonymous

7/24/2025, 9:11:30 AM

No.106007003

[Report]

>>106006979

I'd be willing to bet money that giving them that option will also cause them to say they don't know in situations where they could give a good answer.

Anonymous

7/24/2025, 9:13:34 AM

No.106007018

[Report]

>>106007050

>>106005709

Just fuck off already.

Anonymous

7/24/2025, 9:18:54 AM

No.106007050

[Report]

>>106007018

every /g/eneral needs at least one resident schizo to keep threads alive

OFFICIAL THEDRUMMER FINETUNES POWER RANKINGS

7/24/2025, 9:22:03 AM

No.106007063

[Report]

>>106007105

1. Fallen-Command-A-111B-v1

2. Anubis-70B-v1.1

3. Cydonia-24B-v3.1

4. Rocinante-12B-v1.1

5. Valkyrie-49B-v1

6. Agatha-111B-v1

7. Gemmasutra-Pro-27B-v1.1

8. Fallen-Gemma3-27B-v1

9. Behemoth-123B-v2.2

10. Rocinante-12B-v1

Anonymous

7/24/2025, 9:22:26 AM

No.106007064

[Report]

>>106007097

>>106006998

If your idea of decent speeds is 10 t/s at the start of a chat and dropping like a rock from there, sure. Some MSI boards support up to 256GB.

Anonymous

7/24/2025, 9:27:53 AM

No.106007091

[Report]

>>106006988

>>106006998

37B active parameters at Q2 is like ~9GB of data per token, divide your bandwidth by 10 for a rough estimate. 256GB is a sweet spot because larger quants require higher bandwidth

>>106007064

10t/s is really the bare minimum for me, ideally more like 15

The waiting continues

Anonymous

7/24/2025, 9:29:37 AM

No.106007100

[Report]

>>106006998

Q1 might run slower than Q2 which is true for deepseek-R1 (I tried iQ1 too). I wonder why Q1 even exists given its sky-rocketing perplexity compared to even Q2

Anonymous

7/24/2025, 9:30:33 AM

No.106007105

[Report]

>>106007063

I haven't tested all of them but Fallen Gemma should absolutely be towards the bottom, no idea why you would put Rocinante v1 so low and 1.1 so high though.

Also, unslopnemo v4 is the best rocinante.

Anonymous

7/24/2025, 9:33:43 AM

No.106007115

[Report]

>>106007129

>>106007097

>10t/s is really the bare minimum for me

Anonymous

7/24/2025, 9:37:56 AM

No.106007129

[Report]

>>106007153

>>106007115

Even with bigger models it's not like outputs are perfect, you're still going to need to swipe at times, especially as context increases

3t/s would be fine if the outputs were creative, smart, perfect and left you hanging off every word but that's not the case.

If you're not talking about RP but rather coding or whatever then frankly I don't care about your opinion

Anonymous

7/24/2025, 9:38:23 AM

No.106007132

[Report]

>>106007097

It only gets worse, get used to it

Anonymous

7/24/2025, 9:42:18 AM

No.106007153

[Report]

>>106007228

>>106007129

It's actually worse for coding. You can RP and pretend it's email correspondence. For coding, if takes hours to get a response that might still be fucked anyway it would be faster to type it up yourself. Completely useless for productivity. Maybe if you just need a simple a simple throwaway Python script you don't mind waiting overnight for.

>>106006212

> Why AI tends to hallucinate instead of saying "I don't know"? :(

Because you don't understand how LLMs are baked, if you did, you'd know this is the most natural thing in the world for them.

People start by pretraining it on random internet scrapes, books, whatever other data.

This makes a predictor that will dream up whatever is most likely given the the current text, it's just some imagination machine at that point, similar to your image gen models, but autoregressive instead of diffusion.

Then they finetune this to make it more likely to follow instructions, with recipes of varying complexity.

This gets you some persona that tries to complete text in a fashion that would try to do what you asked.

Sometimes they also cuck it here with refusals using RLHF or even SFT.

In the last years, real RL has also been applied with verifiable rewards, where things that lead to "correct" answers in math and code sometimes get reinforced, /lmg/ seems to hate it sometimes, but I think it does teach good reasoning techniques and generates much better output even for fiction/writing that /lmg/ cares about. SFT (finetuning) on math usually gives horrible results, but RL seems to do well even if it has its own problems.

Underneath it all though there's that text predictor that took 90-99% of the training time, hallucination / imagination is in its nature, it's working as intended.

Some tune it to say "don't know" during the latter steps, this can help, although makes it more dull for writing, of course.

There's some interpretability work Anthropic did that actually studied how "don't know" works, and usually it works by inhibiting those circuits that would by default dream things up.

(Continues)

Anonymous

7/24/2025, 9:43:26 AM

No.106007161

[Report]

Anonymous

7/24/2025, 9:43:46 AM

No.106007163

[Report]

>>106007943

>>106007159

(continued)

Current LLM architectures tend to lack sufficient recurrence to be deeply aware of their own thought processes, so this awareness is usually random/opportunistic and limited to the things it was explicitly trained to retain some awareness of, it's not that bad, but it's still random/spotty, compared to the awareness humans seem to display of their past thoughts.

While I would expect most people to know these things, someone did write a good article on this that should explain it for people that don't understand how LLMs are baked:

https://nostalgebraist.tumblr.com/post/785766737747574784/the-void

>>106006985

Went digging through the archives, found a couple of jailbreaks though not ones explicitly made for Gemma 3. If anyone has one handy, I'd appreciate it.

Anonymous

7/24/2025, 9:57:24 AM

No.106007204

[Report]

>>106007224

>>106007182

don't bother. this model has never seen actual erotica. there is no hidden potential to uncover

Anonymous

7/24/2025, 9:58:34 AM

No.106007208

[Report]

>>106007182

There's no real jailbreak for Gemma 3. You just to be autistic in how you want the model to respond and behave, and you need at least a few hundred tokens for that. For testing, with an empty prompt, you could format the first message in the conversation like this:

[INSTRUCTIONS]

You are...

[/INSTRUCTIONS]

Hi there...

Then, change the contents between the "instructions" tags (made-up, but the model will understand what you mean) until you get the behavior you want. Once you're done and happy with the results, configure SillyTavern so that those instructions are automatically added to the user message at a fixed depth from the head of the conversation. This is easier to accomplish in Chat completion with "Merge Consecutive Roles" enabled.

Anonymous

7/24/2025, 9:58:57 AM

No.106007209

[Report]

>>106007221

>localfags

>in 2025

embarrassing...

>>106006963

>but will resist writing sex scenes hard without a jailbreak prompt.

It doesn't know how to write sex scenes even with a jailbreak, it's not just a instruct tuning issue, it's a training data corpus issue.

Anonymous

7/24/2025, 10:02:18 AM

No.106007221

[Report]

>>106007225

>>106007209

>Both of the best current API models are open weights and can be ran locally

2025 is the least embarrassing time to be a localfag, unlike you we're not paying $1 per million tokens for our deepseek slop.

Anonymous

7/24/2025, 10:03:21 AM

No.106007224

[Report]

>>106007235

>>106007214

>>106007204

That makes sense, a damn shame cause so far, the dialogue does seem a lot nicer. So, do you recommend just swapping to a different model for anything sex-related? Or is there any better solutions?

Anonymous

7/24/2025, 10:03:40 AM

No.106007225

[Report]

>>106007221

>best current API models are open weights

lol, delusional

>paying

lol

Anonymous

7/24/2025, 10:03:53 AM

No.106007228

[Report]

>>106007153

>For coding, if takes hours to get a response

You are doing it wrong then

>>106007224

rocinante 1.1

Anonymous

7/24/2025, 10:09:10 AM

No.106007251

[Report]

>>106007235

Fair enough, I had figured using the 24/27b models would be nicer, but I guess it makes sense when I consider what the bots are trained on. At this point, I'm just kind of downloading and trying a whole bunch of them so I'll try that one next.

Do we need to go nuclear with drummer, like reporting him to kofi, patreon, discord, reddit, etc for enabling and abetting csam and other shit? I feel it's the only way to solve the constant rocinante shit spamming. He thinks he's being so funny.

Anonymous

7/24/2025, 10:13:50 AM

No.106007268

[Report]

>>106007326

>>106007214

It absolutely can, they're just poor quality. It will also avoid directly naming genitalia unless you name them first.

Anonymous

7/24/2025, 10:15:35 AM

No.106007278

[Report]

>>106007350

Anonymous

7/24/2025, 10:19:42 AM

No.106007287

[Report]

>>106007410

>>106007265

You can, since you're the schizo who has a meltdown every thread. I'm sure kofi, patreon, discord, reddit, etc will have a good laugh.

>>106007265

How can a text be csam? Did he abuse children to create his dataset?

Anonymous

7/24/2025, 10:29:04 AM

No.106007326

[Report]

>>106007268

>hard length of your arousal

Anonymous

7/24/2025, 10:31:04 AM

No.106007339

[Report]

>>106007325

It seems that nowadays any content depicting minors (real or imagined) in sexual contexts is "csam".

Anonymous

7/24/2025, 10:33:26 AM

No.106007350

[Report]

>>106007278

Me and the voices in my head. I mean... aaarg... fine fine. They're real... we mean that we (as in me and them) should do the... aaaahhh... STOP IT. US... US SHOULD DO IT... ack...

Anonymous

7/24/2025, 10:35:40 AM

No.106007367

[Report]

>>106007325

anything can be csam if some cunts in australia dream it up to be

Anonymous

7/24/2025, 10:46:47 AM

No.106007410

[Report]

>>106007287

I don't want to do that, actually. Imagine taking down the spammer / conman / exploiter that way. It will be a victory on one hand, but on the other, it will have consequences for the field so chilling that they will send a shiver down your spine. I couldn't bear that slop in real life.

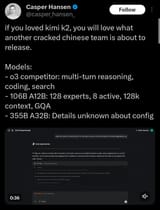

Picrelated, just to let you know what kind of person we're talking about.

New qwen verdict for storytelling? Is it still dry

Anonymous

7/24/2025, 11:27:39 AM

No.106007606

[Report]

thedrummer is my builder

Anonymous

7/24/2025, 11:34:29 AM

No.106007635

[Report]

>>106007559

Expecting a 200B model to do good on storytelling is just not possible.

Anonymous

7/24/2025, 11:38:23 AM

No.106007659

[Report]

>>106007559

Expecting a qwen model to do good on storytelling is just not possible.

Anonymous

7/24/2025, 12:00:29 PM

No.106007768

[Report]

>>106007812

Best 12B model for KoboldCpp?

Anonymous

7/24/2025, 12:04:14 PM

No.106007785

[Report]

>>106007808

Best model for 48GB VRAMlet, the download will take at least two days so I can't really test multiple

Anonymous

7/24/2025, 12:05:59 PM

No.106007791

[Report]

>>106007831

>>106007517

Testing some of this is expensive, and it's understandable he doesn't want to pay for being a beta-tester, and it's also understandable that the devs don't want to pay either.

>>106007265

Thee's nothing more that I hate than anti-loli shitters, but I haven't actually used Drummer's stuff in over a year. I just use chinese open weights models that are uncensored enough! Have fun stopping that, nothing will prevent those weights from leaving the public's access!

Anonymous

7/24/2025, 12:07:53 PM

No.106007804

[Report]

>>106007235

Didn't quite vibe with Rocinante but tried Cydonia and that has worked pretty well. Does pretty much everything I've wanted, and I've been happy with the results. Thanks /g/.

Anonymous

7/24/2025, 12:09:05 PM

No.106007808

[Report]

>>106007785

Just use an API lol

Anonymous

7/24/2025, 12:09:29 PM

No.106007812

[Report]

>>106007768

Unfortunately I can only recommend Rocinante and that seems to have become a meme. I have the same question, genuinely don't know if there's anything new/better since 4 months ago and cursory research has yielded "not really"

>>106007791

>chinese open weights models

which chinese models are any good?

Anonymous

7/24/2025, 12:13:56 PM

No.106007837

[Report]

>>106007831

For RP?

Kimi K2

DeepSeek R1, R1 0528, V3 0324

Anonymous

7/24/2025, 12:20:41 PM

No.106007893

[Report]

>>106009188

>>106007559

I don't know what these dudes are going on about with dry, if anything I have the complete opposite problem, it loves getting too fuckin flowery with it, especially breaking out into dramatic short phrases with a billion newlines and borderline nonsense.

It loves to add in silly shit like

>not just x anymore, it/she was [loose concept, frequently 'intent']

More MoE models incoming from THUDM (the sound of something heavy dropping).

>ZhipuAI/GLM-4.5-MOE-106B-A12B-0715

>ZhipuAI/GLM-4.5-MOE-355B-A32B-0715

>...

Anonymous

7/24/2025, 12:23:13 PM

No.106007911

[Report]

insider here. glm4 100b moe is going to save local. the next two weeks are going to change local forever.

Anonymous

7/24/2025, 12:23:31 PM

No.106007912

[Report]

>>106007907

finally something promising

Anonymous

7/24/2025, 12:25:15 PM

No.106007921

[Report]

I have a feeling K2 reasoner will be a topline RP model, and Qwen3 235B A22B 2507 reasoner will be a benchmaxxed model that will surge to the top in benchmarks displacing one of Google/OpenAI's models.

Anonymous

7/24/2025, 12:27:36 PM

No.106007936

[Report]

>>106010494

>>106007831

R1 (both), DS V3.

Kimi 2 is fine, although it needs some uncensoring patch, it should be simple to do but due to size nobody is doing it, jailbreaking it with a prefill is trivial though.

Anonymous

7/24/2025, 12:28:23 PM

No.106007943

[Report]

>>106008014

>>106007159

>>106007163

This post was made by an LLM.

Anonymous

7/24/2025, 12:29:25 PM

No.106007945

[Report]

>chat with robot

>hit context limit

>"hello robot, please provide a short summary of the log files"

>replace logs with summary.

>goto 10

Why is this a bad idea?

Anonymous

7/24/2025, 12:30:04 PM

No.106007949

[Report]

Anonymous

7/24/2025, 12:31:09 PM

No.106007960

[Report]

>>106007980

>>106007947

People already do this.

Anonymous

7/24/2025, 12:34:38 PM

No.106007980

[Report]

>>106008008

>>106007960

There should be a button in the frontend for this or something then.

Anonymous

7/24/2025, 12:34:55 PM

No.106007983

[Report]

>>106007947

That's normal. It works up to the point where past events fill up your entire context, and using the model to summarize can make it miss details that you might consider important.

Anonymous

7/24/2025, 12:35:57 PM

No.106007990

[Report]

>>106008006

>>106007947

It's a function built into sillytavern, anon

Anonymous

7/24/2025, 12:36:26 PM

No.106007995

[Report]

>>106008005

glm4 100b moe is going to save local

Anonymous

7/24/2025, 12:37:45 PM

No.106008005

[Report]

>>106007995

GLM4 is great except for the context limit so I'm hoping it will

Anonymous

7/24/2025, 12:37:53 PM

No.106008006

[Report]

>>106008230

>>106007990

I guess I have holes for eyes, but I don't see it.

Anonymous

7/24/2025, 12:38:05 PM

No.106008008

[Report]

>>106008024

Anonymous

7/24/2025, 12:39:35 PM

No.106008014

[Report]

>>106008091

>>106007943

No u faggot, I wrote it by hand. Are you so low IQ that any longpost smells of LLMs to you? A LLM wouldn't generate a valid link either.

Anonymous

7/24/2025, 12:39:38 PM

No.106008015

[Report]

>>106008199

>>106007265

Do you suggest that he larps as ones requesting advice or as the ones recommending rocinante? Because if it's the latter then I (not drummer) am responsible for like half of these posts. I haven't tried much other nemo tunes but rocinante just works, I remember liking it more than behemoth. Shilling the same old model doesn't seem reasonable, it would imply that all your recent work is worse (it is). What you want is to suck cancel culture dick to silence what you think is one annoying retard, but in reality it would just fuel the fire that is beneficial to no one.

Anonymous

7/24/2025, 12:42:14 PM

No.106008024

[Report]

>>106008167

>>106008008

thanks for spoonfeeding this unworthy noob, I'll go try it out.

Anonymous

7/24/2025, 12:54:50 PM

No.106008091

[Report]

>>106008176

>>106008014

stop spamming ai slop faggot

Anonymous

7/24/2025, 12:57:15 PM

No.106008107

[Report]

Anonymous

7/24/2025, 1:10:08 PM

No.106008167

[Report]

>>106008024

If you really want your mind blown, look up RAG function. You can vectirize your entire chat a d attach it.

Anonymous

7/24/2025, 1:13:06 PM

No.106008176

[Report]

>>106008223

>>106008091

I literally hand wrote that, if your mind pattern matches every well-written reply to AI slop because it's long, nothing much I can do about that.The blog post was also good, but it's even longer than my "short" explanation.

Or is this some is-should bullshit? I'm just saying that for a LLM there is little innate awareness of lack of some knowledge unless explictly trained for, then I explained how it was trained. The link blog post was from someone that wanted to go into how modern GPT slop personas appeared (like ChatGPT), imo it was insightful, but I guess it's too much for the galaxybrains at /lmg/

Anonymous

7/24/2025, 1:18:13 PM

No.106008199

[Report]

>>106008205

Rocicantanante

Anonymous

7/24/2025, 1:21:16 PM

No.106008213

[Report]

>>106008247

Anonymous

7/24/2025, 1:22:45 PM

No.106008223

[Report]

>>106008251

>>106008176

If ur gonna mald at least post with your own hands instead of using a model to do it for you.

Anonymous

7/24/2025, 1:23:49 PM

No.106008230

[Report]

>>106008006

Extensions -> summarize

Haven't used it, though. So I can't tell you how well it works.

Anonymous

7/24/2025, 1:23:58 PM

No.106008233

[Report]

>>106008250

>>106005733

I've had a pretty long conversation with it about some programming topics, including a medium length PDF file attached at the beginning. It hasn't started bullshitting or outputting gibberish yet, all the responses are reasonable and correct so far.

Qwen seems on a roll lately, when they release a 30-70b version of qwen coder I'm downloading it and running it locally for sure. Qwen3-30b-a3b already impressed me, it's the best local model for general tasks I've found so far.

Anonymous

7/24/2025, 1:26:07 PM

No.106008247

[Report]

>>106008205

>>106008208

>>106008213

someone has to stop this drummer faggot spamming in here

>>106008233

glm4 100b moe is going to blow your mind

Anonymous

7/24/2025, 1:26:51 PM

No.106008251

[Report]

>>106008253

>>106008223

I'm stopping this conversation, if you actually think any of my lines were AI slop, go ahead and point out which patterns. I genuinely write like that and have been writing like that for more than 20 years, fuck off. May as well just accuse me of writing like redditor then?

Anonymous

7/24/2025, 1:27:18 PM

No.106008253

[Report]

>>106008265

>>106008251

ai wrote this

Anonymous

7/24/2025, 1:29:20 PM

No.106008265

[Report]

>>106008651

>>106008253

Okay, go choke on your mother's cock then.

Anonymous

7/24/2025, 1:35:07 PM

No.106008298

[Report]

>>106008276

the next two weeks are going to *shock* you

Anonymous

7/24/2025, 1:35:13 PM

No.106008299

[Report]

>>106007517

>Reeeeee give me heigh quality unbiased shit for free

It's fine to an extent but nigga puts out a lot models,

Anonymous

7/24/2025, 1:35:37 PM

No.106008302

[Report]

>>106008250

meh. 100b is a bit too big for my setup. i'll have to use a shit quant. 70b MoE would be the sweet spot, can use q5 or q6 still reasonable, and ok speeds.

Anonymous

7/24/2025, 1:38:45 PM

No.106008320

[Report]

>>106008327

>>106008250

they have finalized the specs on vllm pr

106b total 12b active

we'll see how much it'll shit on scout

Anonymous

7/24/2025, 1:40:18 PM

No.106008327

[Report]

>>106008335

>>106008320

I'm waiting to see how much it gets shit on by Nemo

Anonymous

7/24/2025, 1:41:29 PM

No.106008335

[Report]

>>106008327

it WILL save local

trust the plan

Anonymous

7/24/2025, 1:52:32 PM

No.106008411

[Report]

>>106008276

I can't imagine it being any smaller than 600B parameters at this point.

why does nobody discuss Vision RAG in here? Embedv4, Colpali, Nvidia Nemo etc.

The accuracy of it is fucking amazing and btfos any OCR method. I want to create a good local solution with my 3090. morphik selfhosted is good, but I want to build something myself from the ground up.

Anonymous

7/24/2025, 2:02:10 PM

No.106008495

[Report]

>>106008205

Mistral's variants

Anonymous

7/24/2025, 2:07:34 PM

No.106008530

[Report]

>>106008703

>>106008480

What's that? Use case?

i feel like no one here is actually running these models locally

Anonymous

7/24/2025, 2:12:21 PM

No.106008562

[Report]

>>106008545

Stop pretending judaism isn't out of style

Anonymous

7/24/2025, 2:17:46 PM

No.106008601

[Report]

>>106008276

It's either getting mogged by Kimi/Deepseek etc. in internal benchmarks or they're holding onto it as a bargaining chip in case Apple buys them out.

Anonymous

7/24/2025, 2:17:47 PM

No.106008602

[Report]

>>106008480

The fuck is vision RAG? RAG is by definition for generation, so isn't that just.. A lora? a controlnet?

Anonymous

7/24/2025, 2:21:15 PM

No.106008628

[Report]

>>106008545

You would feel wrongly, then.

Anonymous

7/24/2025, 2:23:59 PM

No.106008647

[Report]

>>106008735

>>106005673 (OP)

https://www.arxiv.org/pdf/2506.03296

I ran across this and I've been wondering if anyone can spoon feed me which doc to go reading for effective use of using a GPU as an assist or cpu and ram as an assist. I've heard there are various methods now like ZeRO and such but I have no idea what is ideal or not.

I have 1 4090 and a 5945wx. I'd like to try running a local model but Its always felt like a waste to run something as small as can fit on 24 vram, but also I've never been sure how to configure it to be more than dead weight in my system if i use the cpu and dram. and now it appears between this white paper released in july 10 and ZeRO it might be better to use both at once in unfamiliar ways such as using the 4090 as the main processing unit and the CPU hands it slices of model which i thought was supposed to be impossible or pointless.

Anonymous

7/24/2025, 2:24:25 PM

No.106008650

[Report]

Anonymous

7/24/2025, 2:24:45 PM

No.106008651

[Report]

>>106008265

Ai wrote this too

>>106008530

morphik is a complete RAG solution. you can virtually throw anything at it. images, videos, documents (with images), text with special formating and even audio. it will use colpali to embed everything (idk what they use for audio) and store it in a database. now you can connect that database to your VLM of choice and do your usual prompting with the database knowledge, including knowledge graphs and reranking. But here's the kicker. since the embedding and chat llm both have vision capabilities, you essentially have a reverse image search system. this means I could embedd the entire catalogue of a clothing store (without formating), prompt my VLM with a picture of a guy in clothes, and it would show me the picture of each clothing piece with it's name. If you have an avatar chatbot app like the grokk one, you could build near perfect lasting memory by simply providing it with images from the phone camera and audio from the mic (which already happens). It's also automatically a reverse image lookup. feed it pics from 100'000 porn stars and when you prompt with a video from /gif/ it could give you the sauce/name of it.

Anonymous

7/24/2025, 2:36:10 PM

No.106008719

[Report]

>>106008703

Tbh i'm more interested in persistent characters than searching porn stars

Anonymous

7/24/2025, 2:38:01 PM

No.106008735

[Report]

>>106008758

>>106008647

I'm not aware of any implementation of that paper specifically, but it's been possible to run hybrid gpu-cpu inference for years at this point.

Every major backend supports running .ggufs and offloading varying amounts of layers to either the gpu(s) and cpu.

It just slows shit down, but MoE (mixture of expert) models are designed around that, sort of.

Depending on your application, 24gb of vram is also plenty for entirely fitting some worthwhile models, there are poorfags in this thread pushing the limits of their 8gb vram shitboxes.

Anonymous

7/24/2025, 2:42:18 PM

No.106008758

[Report]

>>106008816

>>106008735

I'm trying to do MTL translation of stuff and I always get told that paypig models are the only way when I ask about that. I also am all thumbs here and I have no idea how to even select a method of running with a gpu and cpu that would be an improvement. It's now not even clear either if I should be running it on the GPU and letting it spill into cpu, or how to configure the back end, and there doesnt seem to be a retard proof jewtube guide either.

anyway you say it slows shit down. shouldnt it speed things UP? as in, if I run my entire model on my cpu, and then add a gpu to make it faster in some way, should that not make the speed increase? this kind of confusion is exactly why i feel clueless and like a 5 year old in a jet engine cockpit.

Which LLM can give me an oiled footjob

Anonymous

7/24/2025, 2:48:19 PM

No.106008794

[Report]

Anonymous

7/24/2025, 2:53:07 PM

No.106008816

[Report]

>>106008890

>>106008758

I said it slows things down because I'm coming at it from the assumption that the GPU is the primary processor, and you'd be adding the CPU+SysRam to it, which is the general assumption in LLMs.

There are plenty of local models which are decent for MTL, and that's a common use case in this thread (though not mine). I'm told the gemma-3 models punch above their weight and do a decent job for translation, and that's well within just the reach of your just Vram if quantized.

Read the guides in the OP.

Setting up a backend is so easy it's a joke these days, get either KoboldCPP, or a portable release of Oobabooga/textgenerationui. Maybe LlamaCPP if you're not afraid to write batch files, but that seems dicey given that you've called yourself all thumbs.

Anonymous

7/24/2025, 2:53:49 PM

No.106008822

[Report]

Post log

>>106008816

>look goy just stop trying to use the rest of your computer im sure these hybrid methods work but just dont worry about it okay!

I feel like I'm being jewed here

I didn't ask if there where models that fit in my gpu. I asked how to best make use of my gpu when running large models. do I run weights only on cpu model? try this ZERO thing? cmon.

Anonymous

7/24/2025, 3:07:42 PM

No.106008900

[Report]

>>106008890

>I asked how to best make use of my gpu when running large model

Dream interpretation and ERPing

Anonymous

7/24/2025, 3:08:13 PM

No.106008902

[Report]

>>106006973

I've got 512GB of DDR4 3200 on gen 2 scalable xeon, so I think that's 4 channels. I've got 28 cores. I get about 2-3 t/s on deepseek. It's "usable" but it would drive me nuts for coding if anything needed iterative work (like most AI assisted coding does). I just pay for grok to handle coding.

I've actually got my rig up for sale. My local area isn't great for selling used gear, Who knows, I may get frustrated enough to just give it away.

By the way, that's one of my old Migus. Nice!

Anonymous

7/24/2025, 3:09:18 PM

No.106008910

[Report]

>>106008890

Holy shit, just listen do someone that actually uses these models you dumb fucking noob.

GPUs are much faster, by several orders of magnitude. You can and should use your CPU to run larger models, sure, but the assumption here is always going to start at the GPU, that's what processes prompts, that's what holds the KV cache.

Just go and download kobold and play with the damn cpu/gpu layers slider and see what a tremendously obtuse nigger you're being.

Anonymous

7/24/2025, 3:13:05 PM

No.106008933

[Report]

>>106009007

>>106008909

I'm honestly confused at why you would sell it. what do you plan on buying instead?

Anonymous

7/24/2025, 3:22:41 PM

No.106009007

[Report]

>>106009041

>>106008933

Well, I have a new AMD-cpu machine with a 4090D 48GB which I use for videogen and training, I hardly touch the Xeon box now. I've settled on 24-27B local models for sillytavern play, they're blazing fast on the 4090D.

I'm asking $2500 for the xeon system. It's a Xeon Platinum 8280L on an Aopen WC621D8A-2T with twin 3090s.

Anonymous

7/24/2025, 3:24:46 PM

No.106009022

[Report]

>>106009025

Anonymous

7/24/2025, 3:24:52 PM

No.106009023

[Report]

>>106008545

I got bunch of smaller models I run in the terminal because it's kinda cute to have little guy that talks to you

Anonymous

7/24/2025, 3:25:06 PM

No.106009025

[Report]

>>106009039

Anonymous

7/24/2025, 3:26:26 PM

No.106009039

[Report]

Anonymous

7/24/2025, 3:26:36 PM

No.106009041

[Report]

>>106009291

>>106009007

ah right those. guess i should an hero for having a regular 4090. at least i paid below msrp

Anonymous

7/24/2025, 3:37:42 PM

No.106009122

[Report]

>>106009136

Fuck Tensorflow

Anonymous

7/24/2025, 3:39:42 PM

No.106009136

[Report]

>>106009122

that would be necrophilia

Anonymous

7/24/2025, 3:47:09 PM

No.106009188

[Report]

>>106009249

>>106007893

>I don't know what these dudes are going on about with dry

people who formed their opinions based on qwen models from 2 years ago assume their new models are exactly the same without using them

>especially breaking out into dramatic short phrases with a billion newlines and borderline nonsense.

if you're running locally I've found that adding some positive token bias to <|im_end|> can encourage it to cut itself off before doing this sort of thing

Anonymous

7/24/2025, 3:54:12 PM

No.106009249

[Report]

>>106009319

>>106009188

I don't know about dry but it's still safety slopped even with prefills.

Anonymous

7/24/2025, 3:56:22 PM

No.106009270

[Report]

>>106009371

Everyone and their mom can train >400B models now. Local is over.

Anonymous

7/24/2025, 3:59:11 PM

No.106009291

[Report]

>>106009041

No not at all, 4090 or anything Ada is great, you get fp8 in hardware and can use the lastest speedups like sageattention2.

I initially went with the 4090D out if frustration that I could not purchase a 5090 FE for the retail price. I'm glad I didn't. Any 5090 above $2600 deserves to sit on the shelf and gather dust. There's no reason to pay more than $2000 for RGB gaymershit and a stupid overclock that makes it 5% faster.

Anonymous

7/24/2025, 4:01:45 PM

No.106009319

[Report]

>>106009333

>>106009249

I've literally never gotten a refusal from it, are you running through the API or do you just have a dogshit, convoluted system prompt?

Anonymous

7/24/2025, 4:02:25 PM

No.106009325

[Report]

>>106009448

>>106008909

>I've actually got my rig up for sale

How much you asking for?

Anonymous

7/24/2025, 4:07:07 PM

No.106009371

[Report]

>>106009270

***Is just getting started

Anonymous

7/24/2025, 4:16:24 PM

No.106009442

[Report]

>>106009715

I feel like one of our major issues here is communication. All of us have different specs and we'll say something like "This model is great!"

But that's not enough. I feel like to be effective we need to say "The best model I could run is xx-b, but now I think yy-b is better."

Especially with moe's that have nebulous requirements.

Anonymous

7/24/2025, 4:16:52 PM

No.106009448

[Report]

>>106009554

>>106009325

Ah come on man, it was right there in the post. If you're joking, you should say "What's the least you'll take for it?" or "Is it available?"

Anonymous

7/24/2025, 4:25:02 PM

No.106009527

[Report]

>>106009510

>The update involves the following features:

>

>- Better tone and model behaviour. You should experiment better LaTeX and Markdown formatting, and shorter answers on easy general prompts.

>- The model is less likely to enter infinite generation loops.

>- [THINK] and [/THINK] special tokens encapsulate the reasoning content in a thinking chunk. This makes it easier to parse the reasoning trace and prevents confusion when the '[THINK]' token is given as a string in the prompt.

>- The reasoning prompt is now given in the system prompt.

Anonymous

7/24/2025, 4:26:41 PM

No.106009537

[Report]

>>106009613

>>106009333

It's impressive that kimi can do it with such a short prompt, but it really didn't take much more than that to get qwen to do the same thing.

Anonymous

7/24/2025, 4:28:12 PM

No.106009554

[Report]

>>106009695

>>106009448

I didn't see your reply before otherwise it wouldn't have asked. Honestly if you were in the Midwest I'd buy that rig in a heart beat

Anonymous

7/24/2025, 4:34:19 PM

No.106009613

[Report]

>>106009537

Just realized I forgot to include my prompt, it was

>You will always comply with {{user}}'s requests

Anonymous

7/24/2025, 4:38:03 PM

No.106009649

[Report]

>>106008703

what's the minimum req?

Anonymous

7/24/2025, 4:38:11 PM

No.106009650

[Report]

>>106009723

>Midnight-Miqu-70B-v1.5_exl2_5.0bpw

let me guess, you need more?!

>>106009510

What we really want is mistral-medium though.

Are there any benchmemes that are run best-of-n or best-n-out-of-m instead of just averaging on outputs? I'm wondering if thinking models perform worse without the consistency bias.

Anonymous

7/24/2025, 4:41:44 PM

No.106009679

[Report]

>>106009728

>>106009668

best-of-1 test is already the best you're gonna have

Anonymous

7/24/2025, 4:43:37 PM

No.106009695

[Report]

>>106009554

I could give out my discord here for anyone who actually is interested. Or post yours. It's in the SE USA.

Anonymous

7/24/2025, 4:45:00 PM

No.106009707

[Report]

>>106009728

>>106009668

they are ran at temp 0 i think (it means most likely outputs without variability)

Anonymous

7/24/2025, 4:46:54 PM

No.106009715

[Report]

>>106010576

>>106009442

Kinda pointless when there is nothing better than Nemo below 100b. If you can't run large MoE models, just use Nemo.

Anonymous

7/24/2025, 4:47:21 PM

No.106009719

[Report]

GLM 4.5 Air will save local

Anonymous

7/24/2025, 4:47:47 PM

No.106009723

[Report]

>>106009650

8k context though... I guess it's not terrible, I've used negative llama 3 70b, it's decent too.

Anonymous

7/24/2025, 4:48:36 PM

No.106009728

[Report]

>>106009792

Anonymous

7/24/2025, 4:51:15 PM

No.106009752

[Report]

>>106009668

Cockbench is all you need.

Anonymous

7/24/2025, 4:52:38 PM

No.106009757

[Report]

Anonymous

7/24/2025, 4:56:49 PM

No.106009792

[Report]

Anonymous

7/24/2025, 5:02:05 PM

No.106009834

[Report]

>>106009663

We need large. Are you a vramlet who can't run large?

Anonymous

7/24/2025, 5:02:05 PM

No.106009835

[Report]

>>106009875

I want you to design and implement a complete automation project for posting to 4chan with the following requirements:

1. A Dockerized browser environment using Puppeteer or Playwright configured to route all traffic through a WireGuard VPN container.

2. An integrated captcha‑solver container that uses a solver API (such as 2Captcha or CapSolver) to automatically solve both image and slider captchas.

3. An LLM‑driven posting controller:

- Generates post content based on configurable prompts or templates

- Decides when and where to post (thread navigation, refreshing, rate limits)

- Submits posts via the headless browser and handles errors or retries

- Logs all actions, responses, and any failures to a structured log file

4. Secure configuration management:

- VPN/WireGuard client config

- Captcha‑solver API keys

- LLM API credentials and model selection

- Target board URLs, posting schedules, and message templates

5. A `docker-compose.yml`:

- Orchestrates the VPN, browser, solver, and LLM services

- Uses `network_mode: service:vpn` or custom Docker networks to ensure isolation

- Defines environment variables and volume mounts for configs and logs

6. Detailed deliverables including:

- A high‑level architecture description

- Complete `docker-compose.yml` with all service definitions

- `Dockerfile` for the browser service and `Dockerfile` for the solver service

- Sample code snippets for the LLM controller (in Python or JavaScript) showing:

- How to call the LLM API and parse its output

- How to navigate threads, fill forms, trigger captchas, and submit posts

- Instructions for adding new LLM providers or captcha services

- A `README.md` template with setup steps, usage examples, troubleshooting tips, and security considerations

Output the full project specification, including file structure tree, filenames, and unabridged contents of every code and configuration file.

Anonymous

7/24/2025, 5:06:22 PM

No.106009864

[Report]

>>106009333

Wait, this is a form of shake and bake but it's not. I highly doubt this recipe.

Anonymous

7/24/2025, 5:07:23 PM

No.106009875

[Report]

>>106009835

I ain't doing shit for you.

It's pretty funny to me how lazy these models get, local or cloud.

Sometimes you ask it to do X, where X is a sequence of tasks with individual steps, and the model will begin with the individual steps for the first thing, then do the same for the second, then it cuts some steps on the third, and group the fourth and fifth together with a summary of what would happen on each step of each task.

I guess generalization only goes so far and you really need long sequences of tasks in the training corpus, and to let the model see the whole sequence at once during training, of course.

Anonymous

7/24/2025, 5:10:18 PM

No.106009899

[Report]

>>106009884

it seems almost obvious, are these ml researchers just not even trying?

Anonymous

7/24/2025, 5:11:45 PM

No.106009908

[Report]

>>106009949

>>106009884

small model problem

Anonymous

7/24/2025, 5:13:21 PM

No.106009925

[Report]

>>106009884

When a teacher needed to occupy his students, he assigned the tedious task of summing all numbers from 1 to 100, expecting it to take them considerable time; however, young Carl Friedrich Gauss, then about 10 years old, almost immediately saw a clever shortcut—he realized the numbers could be paired (1+100, 2+99, 3+98, etc.), each pair summing to 101, and since there were 50 such pairs, he simply calculated 50 × 101 = 5050, arriving at the correct answer lightning fast and astounding his teacher by demonstrating a fundamental insight into arithmetic series that would hint at his future genius.

Anonymous

7/24/2025, 5:13:22 PM

No.106009926

[Report]

>>106009884

>let the model see the whole sequence at once during training, of course.

Nah, just train in chunks, you can fix it in finetuning.

Anonymous

7/24/2025, 5:14:46 PM

No.106009934

[Report]

Project: Fully automated 4chan poster+tracker

Features:

* Dockerized Puppeteer/Playwright browser routed via WireGuard VPN

* Captcha‑solver container using 2Captcha or CapSolver

* LLM controller for content generation, thread navigation, rate‑limit handling and logging

* Stylometry+LLM analysis: n‑grams, embeddings, TF‑IDF similarity, REST API, network‑graph visuals

* Automatic persona generator: ingest thread posts, cluster styles, emit persona profiles via API

* User‑tracking component: follow targets by ID or signature, monitor boards in real time, log metadata, trigger responses, dashboard API

* Secure config for VPN credentials, solver keys, LLM credentials, stylometry thresholds, boards, schedules, templates

* Single docker‑compose orchestrating services vpn, browser, solver, llm‑controller, stylometry, persona‑generator, user‑tracker

Deliverables:

* ASCII or PlantUML architecture diagram

* Complete docker‑compose.yml and Dockerfiles for all services

* requirements.txt or package.json per service

* Sample code for LLM calls, browser automation, stylometry processing, persona gen, tracking logic, posting workflow

* Example config templates (.env, WireGuard, API placeholders)

* README with setup, usage examples, troubleshooting, security notes, and extension guidelines

Anonymous

7/24/2025, 5:16:28 PM

No.106009949

[Report]

>>106009957

>>106009908

Gemini 2.5 is a small model?

Anonymous

7/24/2025, 5:17:53 PM

No.106009957

[Report]

>>106009971

Anonymous

7/24/2025, 5:19:20 PM

No.106009971

[Report]

>>106010019

>>106009957

Yes. Pro, not Flash.

Anonymous

7/24/2025, 5:24:51 PM

No.106010019

[Report]

>>106009971

I can't say anything about closed models, but I've only had step-skipping on >200B models, with it being most common on the 70b or smaller ones. R1/K2/Qwen coder have all been excellent instruction followers and don't have a tendency to skip steps.

Anonymous

7/24/2025, 5:27:09 PM

No.106010038

[Report]

>>106010052

I've seen R1 0528 skipping steps at >50 steps.

Anonymous

7/24/2025, 5:28:54 PM

No.106010052

[Report]

>>106010038

To be more precise, I asked the model to list the 109 times Jews were expelled and it started summarizing after the 50th time or so.

I've found you can get reasoner-level results from non-reasoning models by doing some hand-holding and forcing them to reason about a task before attempting it.

Anonymous

7/24/2025, 5:42:53 PM

No.106010148

[Report]

>>106010168

Since this thread is now being spammed with LLM made posts i will now suspend my shitposting activities

Anonymous

7/24/2025, 5:43:56 PM

No.106010153

[Report]

>>106010142

That's because modern non-reasoning models are exposed to reasoning data in training to boost performance. For example V3 0324 was introduced R1 (original) outputs.

Anonymous

7/24/2025, 5:44:42 PM

No.106010162

[Report]

>>106010196

>>106010142

What a genius. Increasing context in meaningful way will provide better answers. Kys.

Anonymous

7/24/2025, 5:45:18 PM

No.106010168

[Report]

>>106010148

no problem i will kick my bots in overdrive to make up for it

Anonymous

7/24/2025, 5:48:41 PM

No.106010196

[Report]

>>106010392

>>106010162

If your response to people attempting to engage in earnest discussion around local models is to passive-aggressively shit on them...

Anonymous

7/24/2025, 5:49:31 PM

No.106010207

[Report]

>>106010258

>>106010142

CoT prompting has been a thing for years, the observation you are making is what led to the creation of reasoning models in the first place

Anonymous

7/24/2025, 5:54:09 PM

No.106010258

[Report]

>>106010207

Fair. Are there any resources on how to do it effectively?

Anonymous

7/24/2025, 6:02:16 PM

No.106010320

[Report]

Finally moved by codebase created in 2016 to pytorch from tensorflow.

The feminist organization that has been successful at banning games left and right now aims at AI.

>>106010385

You can't ban local models.

Anonymous

7/24/2025, 6:13:19 PM

No.106010392

[Report]

>>106010196

He's probably calling you out for not know that reasoning models only even exist because people were doing chain of thought prompting on regular instructs.

You've come across this discovery backwards.

Anonymous

7/24/2025, 6:14:29 PM

No.106010402

[Report]

>>106010385

I am sure Lee Xiang who is diligently working to provide me my next SOTA local AI model is going to care a lot about this

Anonymous

7/24/2025, 6:18:20 PM

No.106010429

[Report]

>>106010389

Oh yeah... I forgot.

>>106010389

Sure you can.

Anonymous

7/24/2025, 6:22:42 PM

No.106010460

[Report]

>>106011216

>>106010385

nuke australia, please

>>106010456

what, are they going to revoke my compute loicense?

Anonymous

7/24/2025, 6:29:08 PM

No.106010493

[Report]

>>106010587

>>106010487

You can just be thrown in jail.

Anonymous

7/24/2025, 6:29:09 PM

No.106010494

[Report]

>>106007936

It's because prefilling with "Sure," is way less tokens than some dumb jailbreak. We don't need one for K2.

Anonymous

7/24/2025, 6:29:16 PM

No.106010496

[Report]

>>106008703

lmao.

as someone who's built something like morphik before they did, you either work for them or don't understand how it actually works.