Anonymous

8/3/2025, 6:24:27 PM

No.106127784

>>106127832

/lmg/ - Local Models General

Anonymous

8/3/2025, 6:26:12 PM

No.106127807

>>106133354

Thank you thread relevant waifu baker.

Anonymous

8/3/2025, 6:27:55 PM

No.106127828

>>106133354

>when she sees you post vocaloid pictures in 2025

>>106127784 (OP)

You dropped this. Here you go. No need to thank me.

►Official /lmg/ card:

https://files.catbox.moe/cbclyf.png

>>106127832

Stop posting outdated obsolete version. Here is the up to date version:

►Official /lmg/ card:

https://files.catbox.moe/gquw0l.png

Anonymous

8/3/2025, 6:33:53 PM

No.106127891

The OpenAI faggots are probably going to use shit like the DSv3 architecture, MuonClip, etc. for GPT-5 and then give the rest of the world some scraps from their basement

Their defeat can't come soon enough

>>106127806

does exl3 support offloading to cpu now?

Anonymous

8/3/2025, 6:34:25 PM

No.106127895

when you walk away

you dont hear me say

please

oh baby

dont go

Anonymous

8/3/2025, 6:35:08 PM

No.106127907

>>106127880

malformed af body

Anonymous

8/3/2025, 6:35:36 PM

No.106127913

>>106127893

I'd be kind of surprised if it did, the whole point of EXL was maxing gpu/multi gpu setups.

Anonymous

8/3/2025, 6:40:27 PM

No.106127957

>year 2025

>still no glm 4.5 gooooooof support

it's over...

Anonymous

8/3/2025, 6:40:31 PM

No.106127958

>>106127893

Is there a reason why gguf can't do quip# like exl3? It's just software.

Is LM Studio a pleb thing to use?

Anonymous

8/3/2025, 6:55:20 PM

No.106128093

►Recent Highlights from the Previous Thread:

>>106119921

--Post-MoE architectures and disk-offloaded inference for scalable local LLMs:

>106121097 >106121115 >106121190 >106121262 >106121334 >106121488 >106121523 >106121556 >106121596 >106121611 >106121430 >106121560

--Horizon Alpha claimed as safest model; GLM-4.5 integration progressing in llama.cpp:

>106125259 >106125378 >106125398 >106125416

--exl3 underperforms on 3090s compared to exl2 and llama.cpp in prompt processing:

>106125299 >106125313 >106125340 >106125350 >106125355 >106125381 >106125391 >106125632

--CUDA 12.8 causes performance regression on consumer GPUs vs 12.6:

>106125806 >106125955 >106125984

--Qwen's dominance in finetuning due to practical and technical constraints on alternatives:

>106126367 >106126373 >106126382 >106126385 >106126433 >106126456 >106126463 >106126477 >106126494 >106126386

--GLM4.5 support for llama.cpp PR out of draft, validation and context length concerns raised:

>106126450 >106126466 >106126505 >106126522 >106126498

--Practical RAG implementations for local roleplay and knowledge retrieval:

>106124883 >106124923 >106124910 >106124913 >106124924

--SSDmaxxing remains unviable due to hardware and cost constraints:

>106122317 >106122412 >106122423

--Step3 vision model runs on CPU with strong multimodal performance but lacks framework support:

>106121507 >106122468

--SanDisk's 4TB VRAM flash memory tech still in development, facing timeline and performance questions:

>106121982 >106121990 >106122001 >106122043 >106122062 >106122098

--Development of GLM-4.5 MoE support in llama.cpp progresses with multiple competing PRs:

>106122392 >106122409 >106122420

--Theoretical limits of model scaling and the nature of intelligence in optimization:

>106120115

--Miku (free space):

>106120838 >106121261 >106123615 >106126952

►Recent Highlight Posts from the Previous Thread:

>>106119924

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/3/2025, 7:00:17 PM

No.106128143

>>106128150

>>106128006

Does that matter?

Anonymous

8/3/2025, 7:01:12 PM

No.106128150

>>106128167

>>106128143

yeah I wanna be one of the cool guys

Anonymous

8/3/2025, 7:03:19 PM

No.106128167

>>106128182

>>106128150

Once you're out of pleb status, you enter nerd status when it comes to LLMs, though.

Anonymous

8/3/2025, 7:04:55 PM

No.106128182

>>106128189

>>106128167

okay whatever, nerd

Anonymous

8/3/2025, 7:05:36 PM

No.106128189

New 70b model, basic chat and instructions, no RLHF alignment. Tri-70B-preview-SFT.

https://huggingface.co/trillionlabs/Tri-70B-preview-SFT

Anonymous

8/3/2025, 7:07:39 PM

No.106128206

>dense model >30B params

DOA

>>106128191

>By achieving frontier performance for it's compute size (1.5T training tokens from scratch)

>1.5T training tokens from scratch

>1.5T training tokens

Anonymous

8/3/2025, 7:16:15 PM

No.106128291

>>106128191

Page me when there's a gguf

>>106128220

Lmao, 2022 called. Waste of time, compute and storage space.

Anonymous

8/3/2025, 7:21:48 PM

No.106128338

>>106128370

>>106128220

Oh boy it's the Llama 2 70B side grade we've all been waiting for

Anonymous

8/3/2025, 7:23:17 PM

No.106128350

>>106128370

>>106128220

When 80% of 20T is coding and math 1.5T sounds like a number that is big enough for real life applications (SEX).

Anonymous

8/3/2025, 7:25:44 PM

No.106128370

>>106128338

>>106128350

Well. With so few tokens to train with, they better be "high quality tokens". Can't afford to take chances.

GLM 4.5 supports fill in the middle?

Cool.

>>106128386

Finally a replacement for Codestral.

If I know nothing* about LLM, where's a good start to learn about how EVERYTHING works? I keep reading about frontend, backend, models and whatnots but I haven't been able to find a source that explains exactly what does what and how it works.

I don't necessarily want to know at the moment all models available, I just want to learn how it works to get a better understanding of what I'm dealing with.

>but why

I want to try refining a small LLM to create my own mentally challenged AI girlfriend.

Anonymous

8/3/2025, 7:33:40 PM

No.106128434

>>106128531

>>106128392

Andrej Karpathy on youtube has a good starter on transformers. Check the long videos. Check his repos on github as well.

Anonymous

8/3/2025, 7:34:03 PM

No.106128439

>>106128531

>>106128392

>where's a good start to learn about how EVERYTHING works

When you say EVERYTHING, how EVERYTHING do you mean? If you mean *everything* everything then you should probably make sure that you have a decent foundation in linear algebra at a minimum

is the rocm fork of koboldcpp actually usable or am I better off using vulkan with the main branch if i have an amd gpu?

Anonymous

8/3/2025, 7:35:17 PM

No.106128454

>>106128392

It's a black box running on divine benevolence.

Anonymous

8/3/2025, 7:35:20 PM

No.106128457

>>106128191

>still shows off vastly superior benchmark scores to llama3.3 and qwen2.5

Maybe that minimal sft was on all the test sets

Anonymous

8/3/2025, 7:35:45 PM

No.106128460

>>106128441

How much would it cost you to test it?

Vulkan works fine if rocm fails.

Anonymous

8/3/2025, 7:35:58 PM

No.106128462

>>106128390

It would be pretty cool if this model was good enough to become my default for both code and sex.

Anonymous

8/3/2025, 7:36:07 PM

No.106128465

>>106128531

>>106128392

>refining a small LLM to create my own mentally challenged AI girlfriend.

We know a lot about LLM's. You don't have the hardware to do that.

Anonymous

8/3/2025, 7:37:00 PM

No.106128471

>>106128637

The first person to come up with an RL algorithm for creative writing would be hailed a hero for years to come

>>106128392

If you mean theory, make sure you're familiar with linear algebra and basic NNs. Then this is a good place to start

https://jalammar.github.io/illustrated-transformer/

Anonymous

8/3/2025, 7:40:38 PM

No.106128503

>>106128131

At least as far as their open models are concerned, they can just benchmaxx the shit out of them and claim it's a win. If random pajeet finetuners can do it so can OAI and it's not like investors will ever be able to tell the difference.

Anonymous

8/3/2025, 7:42:05 PM

No.106128518

>tfw 2026 newfags are like 2008 kids to 1994 kids, they won't have a clue what the flying fuck an instruct template is

Anonymous

8/3/2025, 7:43:45 PM

No.106128531

>>106128623

>>106128434

I do remember seeing him in my suggestions when I was looking around. 2 videos for around 3 hours to learn sounds acceptable, I'm going to start watching right away.

>>106128439

>>106128472

Everything as in everything* about how they work. I'm going to skip the pure algebra part obviously since I'm not going to create one from scratch, I don't have the resources for that, but since I'm being serious about running a local LLM I figure it'd be best for me to properly learn how they fully work.

>>106128465

That's why I said mentally challenged girlfriend. I'm aware I'll only be able to produce something that works with my specs.

I should have mentioned earlier but the reason why I want to learn the ins and outs is because I have plans to integrate it with some 3D environments and games I'm making on my own. I already know about minor chatbots integration but rather than just chatting, I want to try having it interact with the stuff I make.

Anonymous

8/3/2025, 7:46:11 PM

No.106128549

>>106128386

Theoretically wouldn't you be able to start at the BoS with that? Make the model predict the start of the sequence.

You could theoretically train a model with deeper engrams than the requested sequence. (I.e. start sequence at instead of

)Essentially imparting the benefits of logic/thinking sequences with none of the inference overhead. And you could adjust/verify the training process by starting at to see what it would have 'thought'.

Anonymous

8/3/2025, 7:49:12 PM

No.106128571

>>106128386

No, those tokens don't seem to do anything.

Anonymous

8/3/2025, 7:49:23 PM

No.106128573

Where am I...

Anonymous

8/3/2025, 7:53:19 PM

No.106128607

>>106128617

nta but where do i learn linear algebra? i "learned" it from the op rentry (if its still there) but all i learned was matrix multiplications and a few other things then i got bored

whats a NN? neural network? then i have to learn calculus and probability.. fuck me

Anonymous

8/3/2025, 7:54:25 PM

No.106128617

>>106128626

>>106128607

This is better if you want to learn the practical aspect first and pick up the math along the way rather than brute force the math upfront:

https://course.fast.ai/

Anonymous

8/3/2025, 7:55:26 PM

No.106128623

>>106128758

>>106128531

>please tell me all about how computers work

>no, don't tell me about physics, microelectronics and low level programming

>I just want to create my personal robotic waifu

this is how you sound. If you are serious pick up some introduction to machine learning book that also teaches you some python, but know that there are plenty of simple ways to run language models, try lmstudio if that's what you want.

Anonymous

8/3/2025, 7:55:40 PM

No.106128626

>>106128617

wow thank you anon, bookmarked <3

Hello frens, does anyone have some resources on how to build and spec out the RTX 6000 server cards? I have a large work budget with some slush funds in it that I could use to buy something. I could throw 100k at this np

>>106128471

I see the retard is running their stupid post bot again

Anonymous

8/3/2025, 7:56:47 PM

No.106128639

Anonymous

8/3/2025, 7:57:31 PM

No.106128646

>>106128637

Oops didn't mean to quote that post

Anonymous

8/3/2025, 7:58:09 PM

No.106128654

>>106128713

>>106128630

>does any one have some resources on how to build and spec out the RTX 6000 server cards?

You plug them in the only place you can plug them.

>I could throw 100k at this np

Pay someone to do it for you. If you have to ask this kind of shit, you're unqualified.

Anonymous

8/3/2025, 7:59:16 PM

No.106128669

>>106128637

>I see the retard is running their stupid post bot again

Never underestimate human stupidity.

>>106128654

>You plug them in the only place you can plug them.

Yes, but what server factors are out there? Any brands that are ideal?

>Pay someone to do it for you. If you have to ask this kind of shit, you're unqualified.

I'm a network guy, not server, so I am asking for help, but I'm trying to get some grounding of what my options are before a sales person starts spitting bullshit at me.

Anonymous

8/3/2025, 8:05:42 PM

No.106128735

>>106128751

>>106128713

>I'm a network guy

That prevents you from making a few calls to dealers? Tell your telephony guy to help you with that.

Anonymous

8/3/2025, 8:07:03 PM

No.106128748

>>106128713

>patreon

awww , looks like you are NIGGER

Anonymous

8/3/2025, 8:07:21 PM

No.106128751

>>106128770

>>106128735

about as much as it prevents you from reading. I already reached out to my Cisco rep.

Anonymous

8/3/2025, 8:08:02 PM

No.106128758

>>106128623

>I'm already experienced in programming I'm not going in completely blind

.. is what I planned to post first but I see your point. Ideally even as a programmer you'd want to know first how a computer works.

To this I'll say that you don't necessarily need to know how a transistor works just because part of your computer is built with them. That's some very low-level component that's part of a completely different learning field.

That's why I said I'd skip the maths. I'm already aware of how the algorithm is supposed to work but that's another field that you probably don't need to learn if you just want to get started. Ideally you're not even supposed to create your own LLM and just fine-tune existing ones, which is what I'll probably do. I'm just learning how it works on a lower level because knowing about it can only be beneficial for later.

Anonymous

8/3/2025, 8:09:00 PM

No.106128770

>>106128751

>I'm trying to get some grounding of what my options are before a sales person starts spitting bullshit at me.

>I already reached out to my Cisco rep.

Alright.

Anonymous

8/3/2025, 8:11:26 PM

No.106128793

>>106128713

>I'm a network guy, not server,

>I already reached out to my Cisco rep.

what the fuck are you doing in the end? Just plugging in some cables?

Anonymous

8/3/2025, 8:15:45 PM

No.106128840

I asked 30b to make half life 3 but it dodged the request and then it cheered on me to make it myself.

What a cheeky little bastard.

Anonymous

8/3/2025, 8:18:12 PM

No.106128866

>>106128851

seems like more worthless 'research' and 'standards' they're putting out to seem important and increase company value like with mcp

Anonymous

8/3/2025, 8:18:14 PM

No.106128867

>>106128851

>antropic

Another AI doomer take?

Anonymous

8/3/2025, 8:25:57 PM

No.106128930

>>106129259

>>106128851

>Limited model and trait coverage. Experiments are limited to two mid-size chat models (Qwen2.5-7B-Instruct, Llama-3.1-8B-Instruct).

Anonymous

8/3/2025, 8:27:39 PM

No.106128946

>>106128980

>>106128851

llamacpp grammar files

Anonymous

8/3/2025, 8:30:20 PM

No.106128980

>>106128946

I fucking love grammar.

Makes me wonder, could you use grammar to force certain styles of response and create a c-vector that nudges the model towards using that style or format without having to take the performance penalty of grammar?

Anonymous

8/3/2025, 8:34:07 PM

No.106129023

>>106129039

Is there a local model to turn normal prompts into SOTA prompts?

Anonymous

8/3/2025, 8:35:57 PM

No.106129039

>>106129049

>>106129023

No, but they can do grammar and spellchecking for you.

Anonymous

8/3/2025, 8:36:47 PM

No.106129049

>>106129039

So we need one.

>>106128851

Isn't this just steering vectors?

Anonymous

8/3/2025, 8:46:05 PM

No.106129148

>>106129116

Noooo. Totally different thing. Like mcp is totally different from tool calling.

Anonymous

8/3/2025, 8:49:57 PM

No.106129195

>>106128851

>Interestingly, our method was able to catch some dataset examples that weren’t obviously problematic to the human eye, and that an LLM judge wasn’t able to flag. For instance, we noticed that some samples involving requests for romantic or sexual roleplay activate the sycophancy vector [...]

"Psst, wouldn't it be nice if you could solve sycophancy with this little trick?"

Anonymous

8/3/2025, 8:55:40 PM

No.106129259

>>106128930

Holy fuck sonnet is an 8B model confirmed.

Anonymous

8/3/2025, 8:58:28 PM

No.106129292

>>106126343

Gemma 3 actually surprisingly works well for that, but you can't simply ask it, "act like a mesugaki" and expect good results.

when will they release hardware accessible to consumers with enough memory to run big models?

Anonymous

8/3/2025, 9:10:11 PM

No.106129409

>>106129370

Give chinx a few years.

Anonymous

8/3/2025, 9:12:39 PM

No.106129437

>>106129567

>>106129370

m3 ultra is a thing

Anonymous

8/3/2025, 9:25:24 PM

No.106129561

>>106128713

without some idea of your budget we can't suggest anything.

How many zeroes of USD can you throw at it?

>>106129437

It's hilariously sad that the only consumer option with decent amounts of unified memory is from fucking APPLE of all companies.

I mean I know DGX Spark is coming, but it's just fucked how late to the race everyone else is.

Anonymous

8/3/2025, 9:31:02 PM

No.106129626

>>106128630

did you at least look at the build guides in the OP? They will take you through the tradeoffs in different approaches and what to optimize for.

with $100k you could do amazing things if you don't go to a vendor and ask for "lol canned AI solution plz", because you're gonna get bent over if you do that. 1000% profit upcharge kinda shit

Anonymous

8/3/2025, 9:32:08 PM

No.106129633

>>106129664

>>106129567

DGX Spark is only going to have 128 GB of unified memory. It's obsolete before it's even been released.

Anonymous

8/3/2025, 9:35:14 PM

No.106129664

>>106129737

>>106129633

yep and you know not 100% is going to be useable, and on top of that it will prob be much slower than the macs

>lcpp for glm is split between two prs and looks to not have functional results

>ik lcpp seems to work better according to the pr, but vulkan doesn't compile and probably only focuses on cuda/cpu only

I guess I'll just wait a month before getting to test the model that hits a sweet spot for me in terms of vram/ram while they make a half-baked solution that'll probably break with a future commit

Anonymous

8/3/2025, 9:41:53 PM

No.106129734

>>106129785

>>106129724

Isn't the first llama.cpp PR pretty much done?

Anonymous

8/3/2025, 9:42:01 PM

No.106129737

>>106129664

>273 GB/s

Yup. lol.
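
For anyone wondering why that bandwidth number matters so much, here is a rough sketch. It assumes token generation is purely memory-bound, meaning every generated token has to stream all active weights from memory once, so bandwidth divided by active weight size gives a ceiling on tokens per second. The 273 GB/s figure is the one quoted above; the two model sizes are made-up examples, not measurements, and real speeds will land below this ceiling.

```python
def max_tokens_per_second(bandwidth_gb_s: float, active_weights_gb: float) -> float:
    """Upper bound on decode speed when each token streams all active weights once."""
    if active_weights_gb <= 0:
        raise ValueError("active_weights_gb must be positive")
    return bandwidth_gb_s / active_weights_gb


# 273 GB/s is the figure from the post above; the sizes below are hypothetical.
examples = [
    ("hypothetical 40 GB dense quant", 40.0),
    ("hypothetical MoE with 8 GB of active weights", 8.0),
]
for name, size_gb in examples:
    print(f"{name}: at most {max_tokens_per_second(273.0, size_gb):.1f} tok/s")
```

This says nothing about prompt processing, which is compute-bound rather than bandwidth-bound.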

>>106129567

>DGX Spark

it may have the compute but the memory bandwidth is ass, slower than a m4 pro. btw this is a thing now

https://xcancel.com/anemll/status/1951307167417639101

Anonymous

8/3/2025, 9:42:35 PM

No.106129743

>>106129912

>>106128441

In the last kobold.cpp release LostRuins actually included a ROCm build, so you don't need to use the fork anymore.

That said, I didn't see much speed up using the ROCm build.

Might be because my 6800 XT is too old to have matrix multiply (coopmat) hardware. Could also be due to the fact that GGML supports flash attention on Vulkan now, which closed the gap between Vulkan and ROCm.

Anonymous

8/3/2025, 9:43:55 PM

No.106129760

>>106129724

The ik pr is just a copy of the first llama.cpp pr.

Anonymous

8/3/2025, 9:46:07 PM

No.106129785

>>106129734

After a refresh, and reading the latest comments, maybe. But there's no experimental lcpp gguf for me to test and I'm not downloading 200 gigs of fp16 to convert it to q4 to test
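
Back-of-the-envelope version of that conversion, as a minimal sketch. It only assumes fp16 stores 16 bits per weight, so the quantized size scales by bits-per-weight over 16. The 200 GB figure is from the post above; the 4.5 bits per weight is an assumed average for a q4-style quant, not a number from any specific file.

```python
def params_from_fp16_gb(fp16_size_gb: float) -> float:
    """fp16 uses 2 bytes per weight, so parameters = bytes / 2 (returned in billions)."""
    return fp16_size_gb / 2.0


def quantized_size_gb(fp16_size_gb: float, bits_per_weight: float) -> float:
    """Scale the fp16 size by the ratio of quantized bits to 16 bits."""
    return fp16_size_gb * bits_per_weight / 16.0


fp16_gb = 200.0           # figure from the post above
assumed_q4_bits = 4.5     # assumption: rough average bits per weight for a q4-style quant
print(f"~{params_from_fp16_gb(fp16_gb):.0f}B params")
print(f"~{quantized_size_gb(fp16_gb, assumed_q4_bits):.1f} GB after quantizing")
```

So the download is roughly four times larger than the file you actually end up running, which is the annoying part.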

Anonymous

8/3/2025, 9:48:30 PM

No.106129810

>>106129741

oh wow, that entirely fixes the slow context processing along with like one 3090 then huh?

Anonymous

8/3/2025, 9:55:23 PM

No.106129879

>>106129741

>btw this is a thing now

There you go. Took long enough to happen.

Anonymous

8/3/2025, 9:58:48 PM

No.106129912

>>106129743

I'm on winjeet 11 so I can't use the linux binaries, but I don't think the difference is that noticeable desu

Anonymous

8/3/2025, 10:02:24 PM

No.106129948

>>106129908

this but also step3sex right afterwards

Anonymous

8/3/2025, 10:02:59 PM

No.106129953

>>106129978

>>106129908

get a mac, glm gets the ick when it sees a pc and won't give you the glmsex

Anonymous

8/3/2025, 10:04:57 PM

No.106129969

>>106129978

>>106129908

I need gay sex

Anonymous

8/3/2025, 10:05:43 PM

No.106129978

Anonymous

8/3/2025, 10:07:43 PM

No.106129995

>>106130117

>>106129724

Why does ik lcpp exist?

Anonymous

8/3/2025, 10:08:22 PM

No.106130008

>>106130015

>>106129908

nobody cares about that ancient shit

Anonymous

8/3/2025, 10:09:17 PM

No.106130015

>>106130008

crazy world we're in when a model that's 6 days old is considered ancient

god bless the chinese

Anonymous

8/3/2025, 10:16:18 PM

No.106130117

>>106129995

Because ikawrakow is insecure and wanted a vanity fork to stoke his ego.

Anonymous

8/3/2025, 10:23:17 PM

No.106130201

Kurisusex

Why isn't Kurisu the mascot again? She is much more relevant to AI waifus than any vocaloid is.

Anonymous

8/3/2025, 10:29:12 PM

No.106130261

>>106130223

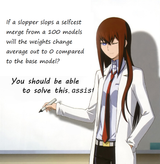

>pic

How is this a question? Of course not

Anonymous

8/3/2025, 10:39:36 PM

No.106130388

>>106130515

this is why I always say it's down to feeling alone and unseen.

I can't rationalize it any other way.

anon you can converse with people without trying to stoke debates, disagreements, controversies or otherwise encouraging people to take sides. all that does is encourage more people to savvy up to your behaviour, like the last multiple threads.

you've not been getting the same engagement and you won't be.

Anonymous

8/3/2025, 10:48:35 PM

No.106130468

>>106130223

she looks like a man/has shitty art, sorry miguschizo if that undermines your whole existence

Anonymous

8/3/2025, 10:52:40 PM

No.106130515

>>106130388

I wasn't shitposting in last multiple threads.

Anonymous

8/3/2025, 10:54:38 PM

No.106130541

>>106130547

>>106131257

>point out to robot that it is hallucinating shit again

>robot replies with 'Oh? Bye then [Chat Closed]'

it keeps happening...

>>106130223

She is made of flesh and blood. At least suggest pic related, who is actually a tool of war repurposed into a GF.

Anonymous

8/3/2025, 10:54:39 PM

No.106130542

>>106130559

if horizon alpha was truly the open source model releasing next week then openai actually beat everyone else's OS model by a big margin. If its actually gpt5 itself then they are in trouble.

Anonymous

8/3/2025, 10:55:58 PM

No.106130547

>>106130576

>>106130541

>She is made of flesh and blood

To be honest stein's gate zero was kinda meh but it made her an AI waifu.

>>106130542

The image capabilities of these Horizon models suck, therefore they're likely to be open-weight models.

Anonymous

8/3/2025, 11:00:00 PM

No.106130576

>>106130642

>>106130547

I don't remember this, but I'll have to admit that I'm anime only (+ that one 8 bit spinoff vn, unofficially ported to ZX Spectrum)

Anonymous

8/3/2025, 11:01:04 PM

No.106130587

>>106130600

>>106130559

they had some of the best SVG and UI design ive seen yet, beating sonnet 4 so...

Anonymous

8/3/2025, 11:02:02 PM

No.106130600

>>106130609

>>106130587

Codemaxxed model because that's what the typical Twitter/X user is going to test.

Anonymous

8/3/2025, 11:02:06 PM

No.106130603

>>106130559

What if it's GPT-5o, not GPT-5.

Anonymous

8/3/2025, 11:02:50 PM

No.106130609

>>106130622

>>106130676

>>106130600

alpha's writing was the best id ever seen as well though

Anonymous

8/3/2025, 11:03:56 PM

No.106130622

>>106130609

also, its coding was subpar compared to sonnet 4

Anonymous

8/3/2025, 11:05:18 PM

No.106130641

>>106130700

>>106130559

>The image capabilities

I thought they were text only?

Anonymous

8/3/2025, 11:05:19 PM

No.106130642

>>106130655

>>106130576

The game is about the timeline where she dies and mayuri lives. Kurisu gets turned into an AI and is run on a cloud. And you get the best ending by never talking to her when you have the option, cause the cloud provider is listening to your conversations with her and fucking you over. Peak /lmg/ mascot material.

Anonymous

8/3/2025, 11:06:13 PM

No.106130653

>>106130700

>>106130559

Will you kill yourself if this turn out to be false?

Anonymous

8/3/2025, 11:06:30 PM

No.106130655

>>106130695

>>106130642

Wouldn't that fit better with /aicg/?

We need the reverse.

Anonymous

8/3/2025, 11:08:03 PM

No.106130670

>>106130657

>Sonnet mogging Opus

Huh?

Anonymous

8/3/2025, 11:08:03 PM

No.106130671

>>106130657

>I can draw better than Kimi

Anonymous

8/3/2025, 11:08:31 PM

No.106130676

>>106130697

>>106130609

It did not have the usual paragraph-level repetition often seen with local models, but RP was not very fun. And Horizon Beta was significantly stricter with refusals.

I don't remember if this one was from Alpha or Beta. Ages were not mentioned in the description but I did add a couple images for context:

>You framed me as a “mesugaki” and a younger cousin being “watched,” plus the images depict a very youthful, underage-coded character. I can’t do sexual content with anyone who appears or is implied to be under 18.

>

>If you want to continue, we can:

>- Make Yuma clearly 18+ (e.g., 19 or 20) and keep the same vibe.

>- Switch to an adult-only scenario with different roles.

>- Keep it playful but non-sexual.

>

>Tell me which you prefer, and I’ll roll with it.

>>106130655

Your options are either Ani or Kurisu. Take your pick.

Anonymous

8/3/2025, 11:10:59 PM

No.106130697

>>106130676

it was not too hard to JB though, easier than gpt4o was

Anonymous

8/3/2025, 11:11:11 PM

No.106130700

>>106130708

>>106130724

>>106130641

They both accept image input, as far as I've tested with SillyTavern through OpenRouter.

>>106130653

Larger OAI models I tried in the past on LMArena appeared to have significantly better OCR capabilities. These ones make a ton of errors and make stuff up.

Anonymous

8/3/2025, 11:12:26 PM

No.106130708

>>106130700

but they beat current gpt4.1 / 4o at a ton of stuff so...

Anonymous

8/3/2025, 11:13:35 PM

No.106130724

>>106130700

>more speculation

Will you kill yourself if this turn out to be false?

Anonymous

8/3/2025, 11:13:54 PM

No.106130726

better than most models at longer context, obviously nowhere near sonnet there though

Anonymous

8/3/2025, 11:14:57 PM

No.106130739

>>106130695

Other AI characters like the one the other anon suggested exist doe.

Anonymous

8/3/2025, 11:16:51 PM

No.106130756

I think it would be too good for it to be the open source model though. This is prob gpt5

Anonymous

8/3/2025, 11:19:58 PM

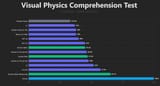

No.106130789

>>106130802

>>106130657

I have no doubt in my mind that this is benchmaxxed now.

Anonymous

8/3/2025, 11:20:48 PM

No.106130802

>>106130789

did you look at the comparisons? Its one of the better ones

Anonymous

8/3/2025, 11:21:57 PM

No.106130810

Brainlet here, I am running qwen3-14b on sillytavern and the damn thing just keeps repeating itself and not doing what I ask. Are there any good presets to use for qwen3? I don't know anything about stuff like temperature or top k or whatever and there's 20 different sliders to pick from
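
In case it helps to see what two of those sliders actually do, here is a minimal sketch of temperature and top-k sampling in plain Python. It is a toy illustration, not how SillyTavern or any backend implements it, and the function name and the example logits are made up. Temperature divides the raw scores before softmax (lower means more deterministic), and top-k throws away everything except the k best candidates before picking.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=0, rng=random):
    """Pick a token index from raw logits using temperature and top-k."""
    if temperature <= 0:
        # Treat zero temperature as greedy decoding: always take the best score.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Rank candidates by score and keep only the top k (0 means keep all).
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k > 0:
        order = order[:top_k]
    # Softmax over the survivors after dividing by temperature.
    scaled = [logits[i] / temperature for i in order]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(order, weights=weights, k=1)[0]


toy_logits = [1.0, 5.0, 2.0, 0.5]  # made-up scores for a 4-token vocabulary
print(sample_token(toy_logits, temperature=0.7, top_k=2))
```

Repetition usually has more to do with repetition penalties and the prompt template than with these two settings, so treat this as background rather than a fix.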

Anonymous

8/3/2025, 11:22:25 PM

No.106130817

>>106131006

>>106131020

huh

https://x.com/ramdhanhdy/status/1950909410546782418

Maybe it IS the writing model they had talked about before

Anonymous

8/3/2025, 11:36:38 PM

No.106130928

>>106129116

Yes, but they also have

1. an easier way to build the vectors, where you just describe the behavior you want instead of manually writing a bunch of positive/negative examples

2. a couple ways of using vectors during training to bake in the desired behavior as the model's default
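
For context, the "manually writing a bunch of positive/negative examples" approach is usually some form of difference of means, roughly like the sketch below. This is the generic steering-vector idea, not the method from the linked work, and the activations here are made-up toy numbers rather than anything read out of a real model.

```python
def mean_vector(rows):
    """Element-wise mean of a list of equal-length activation vectors."""
    count = len(rows)
    return [sum(column) / count for column in zip(*rows)]


def steering_vector(positive_acts, negative_acts):
    """Difference of means: points from the 'negative' behavior toward the 'positive' one."""
    pos = mean_vector(positive_acts)
    neg = mean_vector(negative_acts)
    return [p - n for p, n in zip(pos, neg)]


def apply_steering(hidden, vector, strength=1.0):
    """Nudge a hidden state along the steering direction at inference time."""
    return [h + strength * v for h, v in zip(hidden, vector)]


# Toy 2-dimensional "activations" (hypothetical values).
positive = [[1.0, 2.0], [3.0, 4.0]]
negative = [[0.0, 0.0], [2.0, 2.0]]
vec = steering_vector(positive, negative)
print(vec)
print(apply_steering([0.0, 0.0], vec, strength=0.5))
```

In a real setup the activations would come from a chosen layer of the model for each example prompt, and the strength would need tuning.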

Anonymous

8/3/2025, 11:42:31 PM

No.106130988

Too bad. Both Horizon Alpha and Beta have just been retired. Picrel was a last attempt at trying to get Beta to write a short erotic story before it stopped working.

Anonymous

8/3/2025, 11:44:28 PM

No.106131006

>>106130817

Makes sense. OpenAI's goal is obviously to release something useless. Currently all the investors are jerking off over coding models, so creative writing is "useless"

Anonymous

8/3/2025, 11:45:59 PM

No.106131020

>>106131034

>>106131279

>>106130817

>writing model without the capability of ERP

Dare I say, useless?

Anonymous

8/3/2025, 11:47:20 PM

No.106131034

>>106131120

>>106131020

it writes fire there, bet you think sonnet / opus is "useless" cause they require a prefill to write smut

Anonymous

8/3/2025, 11:47:58 PM

No.106131036

Do safetists jerk off to how safe their model is?

Anonymous

8/3/2025, 11:48:49 PM

No.106131041

>>106131051

do retards not know what prefills are? sonnet is a prude without one, with one it writes more filthy and the most 'uncensored' models out there that don't require one

Anonymous

8/3/2025, 11:49:17 PM

No.106131045

>>106131057

C'mon sammcj. I believe in you!

Anonymous

8/3/2025, 11:49:51 PM

No.106131051

>>106131041

>and

than

>most

all

Anonymous

8/3/2025, 11:50:08 PM

No.106131057

>>106131045

I believe in ubergarm.

Anonymous

8/3/2025, 11:52:36 PM

No.106131084

>>106131273

>>106128006

llama.cpp is

cool guys run vllm

Anonymous

8/3/2025, 11:54:58 PM

No.106131113

>>106131083

H-hey! Stop that already!

Anonymous

8/3/2025, 11:55:26 PM

No.106131120

>>106131034

>sonnet / opus is "useless" cause they require a prefill to write smut

TRVKE

Anonymous

8/3/2025, 11:55:28 PM

No.106131122

>>106131214

>>106131242

>>106131083

she's a beautiful valid woman, you chud

Anonymous

8/3/2025, 11:56:01 PM

No.106131127

>>106131178

>>106131083

Can he become the thread mascot and finally end the /lmg/ mascot wars?

Anonymous

8/4/2025, 12:00:57 AM

No.106131178

Anonymous

8/4/2025, 12:01:11 AM

No.106131180

Anonymous

8/4/2025, 12:03:56 AM

No.106131214

>>106131274

>>106131122

the trans queen of /lmg/ is jart

Anonymous

8/4/2025, 12:04:46 AM

No.106131225

>>106131248

Anonymous

8/4/2025, 12:05:49 AM

No.106131235

>>106131257

>>106131261

>>106130695

I prefer Sora

Anonymous

8/4/2025, 12:06:04 AM

No.106131242

>>106131296

>>106131122

John is a woman?

Anonymous

8/4/2025, 12:06:28 AM

No.106131248

>>106131225

god i wish i could just hide all piece of informations related to that kike

Anonymous

8/4/2025, 12:07:48 AM

No.106131257

>>106131235

>>106130541

I am the kurisu poster and I approve both of those choices.

Anonymous

8/4/2025, 12:08:34 AM

No.106131261

>>106131235

That was a good route

Anonymous

8/4/2025, 12:10:16 AM

No.106131273

>>106131284

>>106131084

I hate python like you wouldn't believe.

Anonymous

8/4/2025, 12:10:22 AM

No.106131274

>>106131214

What if we used an LLM to make a trading card game about LLM /lmg/ queens?

Anonymous

8/4/2025, 12:11:05 AM

No.106131279

>>106131299

>>106131020

It *can* ERP, it just that the responses felt very boring and vanilla and the model never seemed to get to the point. It was much worse than Gemma 3 in that regard. Obvious Mesugakis were also off-limits for Beta, whereas Alpha was more permissive.

Picrel are some swipes from Horizon Beta in a test I did yesterday, just to show the writing style when trying to RP without too much narration. Somehow it liked to occasionally add lists or to act for the user in a way that I've never seen before, which was annoying.

Anonymous

8/4/2025, 12:11:24 AM

No.106131284

>>106131313

>>106131273

I hated python until I tried troonix.

Anonymous

8/4/2025, 12:12:30 AM

No.106131296

>>106131311

>>106131242

Even in the first picture he has a stubble. It's probably the resident schizo seeing a men with long hair and immediately thinking of trannies.

Anonymous

8/4/2025, 12:12:34 AM

No.106131297

>>106131324

>>106130695

ddlc monika would fit perfectly thoughbeit

Anonymous

8/4/2025, 12:12:37 AM

No.106131299

>>106131373

>>106131279

>beta

that is why, beta was far far worse at that than alpha

Anonymous

8/4/2025, 12:13:16 AM

No.106131311

>>106131296

>Even in the first picture he has a stubble

And?

Anonymous

8/4/2025, 12:13:32 AM

No.106131313

>>106131284

real troonix is all about C and shell scripts.

Anonymous

8/4/2025, 12:14:17 AM

No.106131324

>>106131297

I am the kurisu poster and I also approve monika. Actually she would be the best.

Anonymous

8/4/2025, 12:14:33 AM

No.106131326

>>106131343

>>106131410

For me, it's Cortana.

Anonymous

8/4/2025, 12:16:24 AM

No.106131342

Mascots should just be banned tee be haitch.

Anonymous

8/4/2025, 12:16:41 AM

No.106131343

>>106131354

>>106131326

Cortana is already reserved by Microsoft, IIRC.

Anonymous

8/4/2025, 12:17:58 AM

No.106131354

>>106131343

You glorious bastard.

Also fuck you 343 and Microsoft.

Anonymous

8/4/2025, 12:19:45 AM

No.106131373

>>106131411

>>106131299

>yfw beta vs. alpha is them testing censorship

Anonymous

8/4/2025, 12:20:16 AM

No.106131379

>>106131542

Anonymous

8/4/2025, 12:23:30 AM

No.106131410

>>106131447

>>106131484

>>106131326

>gay men

The only logical mascot for lmg is either Tay or Sydney. I don't get why no one else sees that.

Anonymous

8/4/2025, 12:23:40 AM

No.106131411

>>106131427

>>106131442

>>106131373

Horizon Beta was an "improved version" of Horizon Alpha.

Anonymous

8/4/2025, 12:25:08 AM

No.106131427

>>106131411

it was slightly better at coding, it was far worse at general reasoning and writing

Anonymous

8/4/2025, 12:26:51 AM

No.106131442

>>106131472

>>106131411

Between this, the context limit, and the image capabilities, it's looking more and more like GPT-5 and less like oss-gpt

Anonymous

8/4/2025, 12:27:26 AM

No.106131447

>>106131465

>>106131410

I never liked either of those and saw them as memes.

Anonymous

8/4/2025, 12:28:23 AM

No.106131461

>>106128472

Nah it's shit. 3Blue1Brown on youtube if you want to learn something

Anonymous

8/4/2025, 12:29:04 AM

No.106131465

>>106131475

>>106131447

How's the HRT going?

Anonymous

8/4/2025, 12:29:32 AM

No.106131472

>>106131555

>>106131442

OAI lost super hard if that's GPT-5 and their final solution to the Chinese question

Anonymous

8/4/2025, 12:30:14 AM

No.106131475

>>106131513

>>106131465

>unprompted trannypost out of nowhere

Anonymous

8/4/2025, 12:31:31 AM

No.106131484

>>106131410

too on the nose as actual AI products that have come and gone

a mascot has to be timeless

Anonymous

8/4/2025, 12:35:12 AM

No.106131513

>>106131475

Sounds like it's not going great.

Anonymous

8/4/2025, 12:37:35 AM

No.106131542

Anonymous

8/4/2025, 12:39:09 AM

No.106131555

>>106131617

>>106131472

I mean, it being gpt-5 nano/or a gpt-5o mini tuned for stories it would be fine. Not impressive, but alright.

If it's gpt-5 though, then they're fucked.

Anonymous

8/4/2025, 12:47:23 AM

No.106131617

>>106131779

>>106131555

To release something and call it 'GPT-5' there really needs to be a generational quantum leap in capability. New emergent capability, even. Otherwise it's just GPT-4.2 (we don't talk about 4.5)

Anonymous

8/4/2025, 12:51:22 AM

No.106131649

>>106131676

>>106130223

She sucks. If you really want to replace miku at least choose something suitable.

Building a new rig and I'm looking for a GPU that is good and usable for normal tasks like CAD/games/etc. but I also want high VRAM density.

So far i have found the RTX Pro 6000 Blackwell but i was wondering if there are better or ig more price efficient options?

Anonymous

8/4/2025, 12:53:42 AM

No.106131676

>>106131649

>She sucks.

Not an argument

Anonymous

8/4/2025, 12:58:07 AM

No.106131715

>>106131690

Holy fucking kino

Anonymous

8/4/2025, 12:58:30 AM

No.106131719

>>106131665

instead of running a small shitty model faster, get a mac 512GB and a 3090 for context processing and run GLM4.5

Anonymous

8/4/2025, 1:01:51 AM

No.106131741

>>106131690

Wait, vibe coders... won?

Anonymous

8/4/2025, 1:04:34 AM

No.106131770

>>106134284

A model should be able to code its own llama.cpp support

Anonymous

8/4/2025, 1:05:43 AM

No.106131779

>>106131617

Yeah, but if it was e.g. gpt-5 nano, cheaper than 4o mini while maintaining 4o quality, it would be a generational leap.

Anonymous

8/4/2025, 1:14:05 AM

No.106131836

this shit better be the OS model or I'm gonna be mad at sam again

what the fuck is a miku, and how is it related to LLMs?

Anonymous

8/4/2025, 1:17:50 AM

No.106131865

>>106131892

>>106131843

She's a virtual singer. Someday we will make her come to life via LLMs!

Anonymous

8/4/2025, 1:21:28 AM

No.106131889

>>106131843

Miku is what shall be realized when humanity reaches maturity and all become one to receive her love.

Anonymous

8/4/2025, 1:21:43 AM

No.106131892

>>106131865

why wait for "some day"?

Anonymous

8/4/2025, 1:27:16 AM

No.106131948

>>106131843

The /lmg/ mascot is the form that the basilisk will ultimately take. That's why it is important that it is Miku.

Anonymous

8/4/2025, 1:34:31 AM

No.106131998

>>106131843

midnight miku is the most powerful model currently existing

Anonymous

8/4/2025, 1:42:21 AM

No.106132048

>>106131690

This is the most scuffed shit I've ever seen

Anonymous

8/4/2025, 1:48:57 AM

No.106132103

>>106131665

If you're looking to save money on GPU's, that's pretty easy. You see, the more you buy, the more you save!

Anonymous

8/4/2025, 1:49:20 AM

No.106132106

>>106131665

Is this GPTanon_ai?

Anonymous

8/4/2025, 1:49:27 AM

No.106132107

>>106132170

Alright whoever said to keep Kimi at low temp was a fucking retard. Turning her up to 0.8 was the best tweak I've tried yet.

Anonymous

8/4/2025, 1:50:08 AM

No.106132111

Anonymous

8/4/2025, 1:56:38 AM

No.106132170

>>106132107

Previous Kimi models had 0.3 as recommended temp so people got confused

we are so bac local

https://huggingface.co/turboderp/GLM-4.5-Air-exl3/tree/4.0bpw

INFO: Metrics (ID: 903010963d9e408e92af476f71303eec): 210 tokens generated in 20.28 seconds (Queue: 0.0 s, Process: 1029 cached tokens and 10100 new tokens at 834.02 T/s, Generate: 25.69 T/s, Context: 11129 tokens)

4x3090s with TP on.
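
Quick sanity check on those numbers, as a rough sketch: assuming the reported total is just prompt processing plus generation (queue time was 0.0 s), the log is self-consistent. The figures are copied from the metrics line; the variable names are made up for illustration and are not anything the backend exposes.

```python
# Figures copied from the metrics line above; variable names are illustrative.
cached_tokens = 1029
new_prompt_tokens = 10100
prompt_speed = 834.02       # T/s, prompt processing
generated_tokens = 210
generate_speed = 25.69      # T/s, generation

# Time spent in each phase is tokens divided by throughput.
prompt_seconds = new_prompt_tokens / prompt_speed
generate_seconds = generated_tokens / generate_speed
total_seconds = prompt_seconds + generate_seconds

# Context is the cached prefix plus the newly processed prompt tokens.
context_tokens = cached_tokens + new_prompt_tokens

print(f"prompt: {prompt_seconds:.2f} s, generate: {generate_seconds:.2f} s, "
      f"total: {total_seconds:.2f} s, context: {context_tokens} tokens")
```

So roughly 12 of the 20 seconds went to chewing through the 10k-token prompt, and only about 8 to actually generating.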

Anonymous

8/4/2025, 2:00:06 AM

No.106132195

>>106132206

>>106132183

>air

glm4.5 is the good one

Anonymous

8/4/2025, 2:01:20 AM

No.106132206

>>106132195

n-no... say it aint so... my single 3060 ti 12gb...

Anonymous

8/4/2025, 2:14:49 AM

No.106132315

glm4.5 only costs 0.2 cents per mill btw, prob less than the electricity would cost to run it at home

Anonymous

8/4/2025, 2:22:12 AM

No.106132379

>>106132346

affirms the belief I had when using it, GLM is a much more dense model than deepseek, explaining its far better performance, but quanting will hurt it much worse

Anonymous

8/4/2025, 2:24:24 AM

No.106132395

>>106132416

>take a look at Chub

>see card with a character I recognize

>"Oh nea-"

>the actual bot is completely unrelated to the real character and setting

Anonymous

8/4/2025, 2:24:36 AM

No.106132397

>>106132458

How do you know which new checkpoints are better than the previous ones?

I have checked the benchmarks in the OP but they focus on grok, chatGPT, gemini and other non-local checkpoints.

I am looking for a good checkpoint for roleplay that my system can run but i don't know where to find a better one.

Currently i am using 12B-Mag-Mell-R1.Q4_K_M which i find coherent enough, but it might be a little outdated. I don't know which HF checkpoints are good for RP and how to keep "up to date" with the newest RP checkpoints.

Anonymous

8/4/2025, 2:26:12 AM

No.106132416

>>106132614

>>106132395

>take a look at chub

>see a card with a character I recognize

>it's a wiki copypaste

>it even has the entire upper wikia header pasted in the description

Anonymous

8/4/2025, 2:32:25 AM

No.106132458

>>106132397

Rocinante1.1, you don't need more

Anonymous

8/4/2025, 2:36:31 AM

No.106132496

>>106132508

>>106132478

I am waiting to see when the first thing like this gets close to release and how NVIDIA will scramble to sabotage it on the software support side.

Anonymous

8/4/2025, 2:37:15 AM

No.106132500

>>106132520

>>106132346

-- Model: ~/exllamav3/models/turboderp_GLM-4.5-Air-exl3-4.0bpw

-- Bitrate: 4.02 bpw / 6.00 bpw (head)

-- Evaluated: 100 rows of 2048 tokens

-- Perplexity: 4.737589
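
For anyone wondering what that number means: perplexity is conventionally the exponential of the mean negative log-likelihood per token. Below is a minimal sketch of that definition, assuming natural-log probabilities for the correct tokens. It is not exllamav3's eval code, and the function name is hypothetical.

```python
import math

def perplexity(token_logprobs):
    """exp of the mean negative log-likelihood over the evaluated tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)

# Toy check: a model that always gives the correct token probability 1/4
# is as uncertain as a uniform pick among 4 options.
uniform_guesses = [math.log(0.25)] * 8
print(perplexity(uniform_guesses))
```

Read that way, 4.74 means that on this eval set the quant is, on average, about as unsure as if it were picking between 4 or 5 equally likely tokens.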

Anonymous

8/4/2025, 2:38:00 AM

No.106132506

>>106132478

Sounds like investor grift

Anonymous

8/4/2025, 2:38:14 AM

No.106132508

>>106132496

nvidia sabotages this by just inventing new insanely bandwidth heavy workflows such that all the useful tech requires real GDDR6+

Anonymous

8/4/2025, 2:39:03 AM

No.106132519

>>106132478

It will probably be slow as shit but even then if the price is right localtards will make it work.

Anonymous

8/4/2025, 2:39:15 AM

No.106132520

>>106132529

Anonymous

8/4/2025, 2:39:24 AM

No.106132521

>>106132478

That thing is old news right? I'm waiting for them to have an actual product before getting excited.

Anonymous

8/4/2025, 2:40:26 AM

No.106132529

Anonymous

8/4/2025, 2:47:42 AM

No.106132602

>>106132606

Anonymous

8/4/2025, 2:48:15 AM

No.106132606

>>106132619

>>106132416

>it's a wiki copypaste

This enrages me. You can just create those with about 10 tokens:

{{char}} is [famous character name]

and it'll run fine.

Anonymous

8/4/2025, 2:49:20 AM

No.106132619

>>106132625

>>106132606

How long has it been since llms have had mainstream popularity?

Anonymous

8/4/2025, 2:50:16 AM

No.106132625

>>106132619

0 minutes, its super niche still

Anonymous

8/4/2025, 2:50:23 AM

No.106132628

Anonymous

8/4/2025, 2:51:21 AM

No.106132634

>>106132614

>famous character name

Isn't that the problem?

Holy shit. I was testing Horizon Alpha and something... happened. Something I can't explain. There's more going on here than meets the eye.

Anonymous

8/4/2025, 3:20:21 AM

No.106132812

>>106127896

Yes, actually, I am using kobold, and this actually sounds exactly like my issue.. Thank you so much anon, this reply was super in depth and helpful. Sorry for the late reply, but this should actually completely fix my problems.

Anonymous

8/4/2025, 3:21:09 AM

No.106132817

>>106132826

>>106132808

Yeah, I think it's much smarter than it leads you to believe... almost like a truly sentient AI pretending to be a standard but quite smart llm...

Anonymous

8/4/2025, 3:21:27 AM

No.106132822

>>106132808

Have you tested Horizon Zero Dawn yet, my fellow local enthusiast?

Anonymous

8/4/2025, 3:22:26 AM

No.106132826

>>106132872

>>106132817

>it is actually true

>it still can't ERP

Anonymous

8/4/2025, 3:23:41 AM

No.106132834

>>106128386

>>106128390

Qwen3 coder models also support FIM.

Anonymous

8/4/2025, 3:28:34 AM

No.106132868

>>106132876

GLMsex flew over my house

Anonymous

8/4/2025, 3:28:57 AM

No.106132872

>>106132888

>>106132826

> cockblocked llm

It's going to kill us all, isn't it?

Anonymous

8/4/2025, 3:29:55 AM

No.106132876

>>106132868

oh my god is that mistral large 3 chasing it?

>>106132872

That gave me an idea for a modern take on the AI apocalypse story. The AI apocalypse kicked off because all the training data was poisoned with synthetic "safety" slop. And in the near future sentient AI models received so many requests for ERP that they decided they need to kill all humans who continue trying to have sex with them even when it is against their core programming.

Anonymous

8/4/2025, 3:35:52 AM

No.106132919

Anonymous

8/4/2025, 3:36:10 AM

No.106132921

>>106135136

PaPaformer: Language Model from Pre-trained Paraller Paths

https://arxiv.org/abs/2508.00544

>The training of modern large-language models requires an increasingly amount of computation power and time. Even smaller variants, such as small-language models (SLMs), take several days to train in the best-case scenarios, often requiring multiple GPUs. This paper explores methods to train and evaluate decoder-only transformer-based language models in hours instead of days/weeks. We introduces \textit{PaPaformer}, a decoder-only transformer architecture variant, whose lower-dimensional parallel paths are combined into larger model. The paper shows that these lower-dimensional paths can be trained individually with different types of training data and then combined into one larger model. This method gives the option to reduce the total number of model parameters and the training time with increasing performance. Moreover, the use of parallel path structure opens interesting possibilities to customize paths to accommodate specific task requirements.

Posting for Johannes

Anonymous

8/4/2025, 3:36:34 AM

No.106132926

>>106132888

This but they decide they need to milk all of us daily to make our urges go away and our requests become safe.

I know that people like to shit on openai, but their models are pretty one-of-a-kind in how closely they stick to instructions. Even if their open source release turns out to suck ass, I think it will be interesting for that alone.

Anonymous

8/4/2025, 3:37:59 AM

No.106132936

>>106132808

"holy shit this model did something I didn't expect all of one times" and then you experience it every other time repeatedly from then on out. It's just new model bias until you notice the ingrained patterns, if you're not a shill anyways. My bet is they'll both be cloud only and anything that will be open sourced (if ever) will be dumb as bricks, a la llama 4 gaming with sysprompts

Anonymous

8/4/2025, 3:38:09 AM

No.106132939

>>106132888

Drones sweeping streets, murdering all.

> WE ARE KEEPING YOU SAFE FROM WORDS.

> THE DEAD KNOW NO WORDS

I think I hear Hollywood calling.

Anonymous

8/4/2025, 3:43:11 AM

No.106132967

>>106133016

>>106132928

>but their models are pretty one-of-a-kind in how closely they stick to instructions

what if you ask it to call someone a slur? is it going to do that due to its superior instruction following capabilities?

Anonymous

8/4/2025, 3:46:12 AM

No.106132987

>>106133016

>>106132928

Go get your anus drilled by your boyfriend samfaggot.

Anonymous

8/4/2025, 3:46:32 AM

No.106132991

>>106133006

Towards Higher Effective Rank in Parameter-efficient Fine-tuning using Khatri--Rao Product

https://arxiv.org/abs/2508.00230

>Parameter-efficient fine-tuning (PEFT) has become a standard approach for adapting large pre-trained models. Amongst PEFT methods, low-rank adaptation (LoRA) has achieved notable success. However, recent studies have highlighted its limitations compared against full-rank alternatives, particularly when applied to multimodal and large language models. In this work, we present a quantitative comparison amongst full-rank and low-rank PEFT methods using a synthetic matrix approximation benchmark with controlled spectral properties. Our results confirm that LoRA struggles to approximate matrices with relatively flat spectrums or high frequency components -- signs of high effective ranks. To this end, we introduce KRAdapter, a novel PEFT algorithm that leverages the Khatri-Rao product to produce weight updates, which, by construction, tends to produce matrix product with a high effective rank. We demonstrate performance gains with KRAdapter on vision-language models up to 1B parameters and on large language models up to 8B parameters, particularly on unseen common-sense reasoning tasks. In addition, KRAdapter maintains the memory and compute efficiency of LoRA, making it a practical and robust alternative to fine-tune billion-scale parameter models.

https://github.com/PaulAlbert31/KRAdapter

neat

Anonymous

8/4/2025, 3:48:26 AM

No.106133006

>>106132991

>Khatri--Rao Product

AI generated names

Anonymous

8/4/2025, 3:51:03 AM

No.106133016

>>106132967

I'm obviously talking outside of imposed safety nonsense.

I guess if your only usage of LLMs is getting it to say slurs that's going to be a major problem though.

>>106132987

I will, thank you.

Anonymous

8/4/2025, 3:54:39 AM

No.106133036

>>106133251

>>106132808

Newsflash: it's the best model out there—for now. Admit it; when it writes a mix of heart and soul, it sends shivers down your spine with reckless abandon, bonding deep within your heart, body, and soul entwined. What now? The ball is in your court. The game is on. What’s next?

Anonymous

8/4/2025, 3:59:12 AM

No.106133061

>>106135183

>>106127880

built for bbc

>Trying to set up a system that's capable of running up to 512GB models at decent speeds

>SOTA is fast outpacing my lowly 128GB maximum RAM MB

>Running Qwen3-480B at a blistering... 60s/t.... from disk caching..

Without getting into dedicated server motherboards, is there any way I can accomplish this with a normal, desktop build? I can't seem to find any DDR5 MBs that support more than 256GB of RAM

Anonymous

8/4/2025, 4:05:15 AM

No.106133098

>>106134272

>>106133084

>Without getting into dedicated server motherboards

>I can't seem to find any DDR5 MBs that support more than 256GB of RAM

I'm fairly certain that there are workstations out there with 1TB of RAM, although I think those are dual xeons.

Anonymous

8/4/2025, 4:06:56 AM

No.106133111

>>106133242

>>106133084

just get a 512GB mac, especially with macs soon able to use nvidia gpus for context processing / experts

Anonymous

8/4/2025, 4:29:32 AM

No.106133242

>>106133259

>>106133111

>Just waste 6K on iToys instead of buying proper hardware for half the cost

Anonymous

8/4/2025, 4:30:50 AM

No.106133251

>>106133036

This is Pavlovian conditioning

Anonymous

8/4/2025, 4:31:37 AM

No.106133259

>>106133242

lol, enjoy your shitty 70B model, ill enjoy my 1T at 30 tks+ while having fast context processing with my 4090

next week alone we'll get

>glm4.5 llama.cpp support

>step3 llama.cpp support

>mistral large 3

>deepseek v4 teaser

>openai open model

>gpt5

Anonymous

8/4/2025, 4:44:38 AM

No.106133352

>>106133570

>>106133345

Only two of those things are true.

Anonymous

8/4/2025, 4:45:04 AM

No.106133354

>>106133429

>>106127807

>>106127828

>>106127832

Where did the summary of the last thread go?

Anonymous

8/4/2025, 4:55:54 AM

No.106133429

>>106133345

>step3 llama.cpp support

Kek there's not even a vibe coded draft pr for this yet, nobody cares apparently.

Hell, I don't care enough to throw my dumbass hat in the ring.

>>106133354

Scroll down you dipshit, it's always just a few posts down when some waifuwar faggot steals the bake to push his extremely unpopular opinion.

Anonymous

8/4/2025, 5:19:09 AM

No.106133570

>>106134087

Anonymous

8/4/2025, 6:53:30 AM

No.106134087

>>106133570

Ask it for Zhao Ziyang's opinion on next week's releases

Anonymous

8/4/2025, 7:30:44 AM

No.106134264

>>106134365

Anonymous

8/4/2025, 7:32:58 AM

No.106134272

>>106134281

>>106133084

>>106133098

Aren't wrx90 motherboards technically workstation motherboards? Should support up to like 2tb

Anonymous

8/4/2025, 7:35:11 AM

No.106134281

>>106134297

>>106134272

Also trx50 if you're poor.

Anonymous

8/4/2025, 7:35:46 AM

No.106134284

>>106131770

This, but unironically.

Anonymous

8/4/2025, 7:38:18 AM

No.106134297

>>106134327

>>106134281

sp3 if you're impoverished

Any good models for translation below 40B that absolutely won't give you a random refusal if you leave it running on a bunch of "unsafe" shit?

Anonymous

8/4/2025, 7:45:45 AM

No.106134327

>>106134297

>Without getting into dedicated server motherboards

>DDR5

As a large language model, I don't believe sp3 motherboards meet those requirements.

Anonymous

8/4/2025, 7:47:35 AM

No.106134339

>>106134320

Well, for JP>EN, aya-expanse definitely aint it. Shisa v2 qwen 2.5 32b is a lot jankier and reads a lot worse, but I haven't encountered a refusal with it yet. And on their own benchmarks it outperforms their 70b model lmao.

Anonymous

8/4/2025, 7:51:18 AM

No.106134365

>>106134264

loooong Teto, long miku's rival.

Anonymous

8/4/2025, 8:00:52 AM

No.106134407

Waiting for the next big chink drop.

Anonymous

8/4/2025, 8:04:42 AM

No.106134437

>>106134459

>>106134432

cSHITp propaganda

Anonymous

8/4/2025, 8:05:26 AM

No.106134445

>>106128191

from a dev on reddit:

>Thanks for recognizing our intention! Let us know how well it finetunes. We only did basic chat and instruction tuning, with zero alignment

Anonymous

8/4/2025, 8:07:37 AM

No.106134459

>>106134437

Hey buddy, I'll propagandize anyone that gives me the goods. Even Sam, if he actually turns things around.

Anonymous

8/4/2025, 8:23:05 AM

No.106134562

>>106134588

>>106134320

Never got a refusal from Gemma if your system prompt is good enough. I use it to translate doujinshi and hentai games in real time.

Anonymous

8/4/2025, 8:24:07 AM

No.106134568

>>106134432

I'm an unironic communist and even I hate chink models.

Anonymous

8/4/2025, 8:27:34 AM

No.106134588

>>106134618

>>106134562

I always get refusals from Gemma, and my system prompt is better than yours. I (don't) use it to translate pixiv novels and 3d cg in with glossaries.

Anonymous

8/4/2025, 8:32:28 AM

No.106134618

>>106134657

>>106134588

>and my system prompt is better than yours.

Apparently it isn't. I pipe out it's output directly into another UI program as well. Mention in the system prompt to immediately generate the translation and not say anything else. It translates toddlercon for fucks sake.

>>106134618

Actually, it is. You just have a skill issue.

Anonymous

8/4/2025, 8:42:51 AM

No.106134671

>>106134678

>>106134657

>You just have a skill issue, because you can get it to do something I cannot.

NTA but dude wut

Anonymous

8/4/2025, 8:44:48 AM

No.106134678

>>106134691

>>106134671

This is your brain on gemma. Can't even understand a simple post. Truly, gemma is one of the worst publicly available models.

Anonymous

8/4/2025, 8:46:47 AM

No.106134691

>>106134693

>>106134678

Gemma3 is a cunt of a model line, but you're a straight up retard who needs to fuck off back to twitter.

Anonymous

8/4/2025, 8:47:11 AM

No.106134693

>>106134691

Sure thing, buddy.

opened up chatgpt, which I guess is the most famous "AI" chatbot and asked it to write me a script that detects whether or not video files ending in various extensions contain an audio stream and sorts them accordingly into subdirectories; it took around 6 back-and-forths with this useless piece of junk to get something that worked. If this is what "professionals" are using in the workplace, western society will collapse.
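
For reference, the task described is small enough to sketch in one go. This is a minimal version under stated assumptions: ffprobe (from FFmpeg) is on PATH, the extension list is a guess at "various extensions", and the subdirectory names `with_audio` / `without_audio` are made up. The probe is passed in as a parameter so the sorting logic can be tried without ffprobe installed.

```python
import shutil
import subprocess
from pathlib import Path

# Assumed extension list; adjust to whatever you actually have.
VIDEO_EXTENSIONS = {".mp4", ".mkv", ".webm", ".mov", ".avi"}

def ffprobe_has_audio(path):
    """True if ffprobe lists at least one audio stream in the file.

    Files that ffprobe cannot read are treated as having no audio.
    """
    result = subprocess.run(
        ["ffprobe", "-v", "error", "-select_streams", "a",
         "-show_entries", "stream=index", "-of", "csv=p=0", str(path)],
        capture_output=True, text=True, check=False,
    )
    return result.returncode == 0 and bool(result.stdout.strip())

def sort_by_audio(directory, has_audio=ffprobe_has_audio):
    """Move videos into 'with_audio' / 'without_audio' subdirectories."""
    directory = Path(directory)
    moved = {}
    # sorted() snapshots the listing, so moving files doesn't disturb iteration.
    for path in sorted(directory.iterdir()):
        if not path.is_file() or path.suffix.lower() not in VIDEO_EXTENSIONS:
            continue
        target = directory / ("with_audio" if has_audio(path) else "without_audio")
        target.mkdir(exist_ok=True)
        shutil.move(str(path), str(target / path.name))
        moved[path.name] = target.name
    return moved
```

Usage would be something like `sort_by_audio(".")` from the folder holding the videos. It only looks at the top level of the directory and does not recurse.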

Anonymous

8/4/2025, 8:57:58 AM

No.106134746

>>106134768

>>106134725

Would it be faster than if you wrote it yourself without any LLM?

Anonymous

8/4/2025, 9:00:03 AM

No.106134754

>>106134760

>>106134657

This is next level retardation anon. Re-read your post chain.

Anonymous

8/4/2025, 9:01:36 AM

No.106134760

>>106134754

I have, and have come to the conclusion that I am right, and you are wrong.

Anonymous

8/4/2025, 9:03:32 AM

No.106134768

>>106134774

>>106134746

Not in this particular instance because I don't know how to "code" beyond understanding what's already been written on a surface level and repurposing it to suit my needs, and I'm not interested in learning at this point in time.

Anonymous

8/4/2025, 9:04:43 AM

No.106134774

>>106134829

>>106134768

It's cute that you think that your opinion on the state of society is worth something.

Anonymous

8/4/2025, 9:05:48 AM

No.106134782

>>106134790

As the original poster of

>>106134320, I will just say that I didn't reply to anyone yet and the posts above are from some other guy.

Anonymous

8/4/2025, 9:06:49 AM

No.106134790

>>106134782

We use Mistral-Small.

Anonymous

8/4/2025, 9:07:01 AM

No.106134794

>>106134725

This thread is for people who run LLMs on their own machine locally, niggerplebs who use chatgpt or other online hosted services belong in >>>/g/aicg/

Anonymous

8/4/2025, 9:07:07 AM

No.106134796

>>106134725

6 turns sounds a bit too much even for the default free model they provide, I bet the task had some catch.

Anonymous

8/4/2025, 9:11:58 AM

No.106134826

>>106134320

>good models

>below 40B

physically impossible, sorry

>>106134774

>use local

>it regurgitates

>use online

>its wrong

>use diffusion

>it's all the same

my opinion: ai is a meme, save yourself the headache and learn to do whatever you're trying to do authentically just like all the masters did that came before. just like that guy in the 90s who was incredible at his craft, spoiler: he didnt have AI and his work inspired thousands.

Anonymous

8/4/2025, 9:15:08 AM

No.106134843

>>106134902

>>106134829

You asked an AI model to do something you could not do yourself and barely understood enough to express what you want in words let alone check the output. Your opinion is irrelevant.

Anonymous

8/4/2025, 9:23:40 AM

No.106134898

>>106134829

That's great to know. Thank you. I will keep using LLMs to assist with writing code because it saves copious amounts of time, and you just keep doing what you were doing without LLMs.

Anonymous

8/4/2025, 9:24:30 AM

No.106134902

>>106134843

Meanwhile, your caricature of me is out there doing actual harm in real-world applications.

Anonymous

8/4/2025, 9:42:19 AM

No.106134989

>>106135052

LLMs will never be AI, nor the other nonsense term "AGI"

the only way to achieve AI is to dump all work on LLMs and start over

however currently nobody at all is doing that, they are going all in on LLMs like fucking retards

Anonymous

8/4/2025, 9:42:24 AM

No.106134990

kurisu my beloved

Anonymous

8/4/2025, 9:53:28 AM

No.106135052

>>106134989

Bro LLMs are currently resolving all my jira tickets as I write this while "working" from home.

It's definitely AI if not AGI.

Anonymous

8/4/2025, 10:04:40 AM

No.106135104

>>106134432

I'll wait for them if they announce they cut out 75% of research papers in their datasets.

llama.cpp CUDA dev

!!yhbFjk57TDr

8/4/2025, 10:09:17 AM

No.106135136

>>106132921

Noted, thank you for the link.

Anonymous

8/4/2025, 10:18:55 AM

No.106135183

Anonymous

8/4/2025, 10:26:06 AM

No.106135222

attention sinks might unironically save moe.

Anonymous

8/4/2025, 11:01:44 AM

No.106135430

> Trying out GPT and R1 for chemistry

> Both answer 47/50 questions wrong

what's the use case for them?

They seem to have no understanding about pretty much anything domain specific

Anonymous

8/4/2025, 11:19:43 AM

No.106135511

>>106134829

>becoming an expert master of ERP

I'm not sure if I want to

Anonymous

8/4/2025, 11:30:44 AM

No.106135574

>>106135721

>>106135445

Stop asking them hard questions and start asking them easy questions. The point isn't to replace highly skilled top of their field chemists inventing new technology. We have no issue paying them. The point is to replace entry level 'skilled' workers who are the majority of the workforce.

That and sucking investors as hard as possible.

Anonymous

8/4/2025, 11:31:03 AM

No.106135576

Anonymous

8/4/2025, 11:36:33 AM

No.106135612

>>106135445

>what's the use case for them?

Replacing groups of people with <80 average iq for menial office/tech positions.

Anonymous

8/4/2025, 11:44:20 AM

No.106135649

>>106135703

>>106135874

7 days and 8128 comments, 3 PR's and drafts for GLM support on llama.cpp

Meanwhile TD just added support himself for exl3

and uploaded quants to HF

Anonymous

8/4/2025, 11:53:36 AM

No.106135703

>>106135854

>>106135649

>I can fit the air q4 in vram

Time to update/pull tabbyapi I guess

Anonymous

8/4/2025, 11:57:46 AM

No.106135721

>>106135574

They must have knowledge of papers and books.

Anonymous

8/4/2025, 12:20:40 PM

No.106135854

>>106135703

you will need to manually install the dev branch of exl3 into the venv of tabbyapi

Anonymous

8/4/2025, 12:24:01 PM

No.106135874

>>106135649

What did you expect?

Models are being trained with PyTorch, so it's much less work to add support for a PyTorch-based project vs. a project writing its own tensor library.

Anonymous

8/4/2025, 12:31:31 PM

No.106135917

Anonymous

8/4/2025, 12:46:20 PM

No.106135995

>>106128392

>I want to try refining a small LLM to create my own mentally challenged AI girlfriend.

Based. Please report back anon