/lmg/ - Local Models General

Anonymous

8/6/2025, 5:19:00 PM

No.106163346

►Recent Highlights from the Previous Thread:

>>106159744

--Fundamental CUDA scheduling limitations in MoE model inference with dynamic workloads:

>106159804 >106159879 >106159941 >106159892 >106159939 >106160442 >106160454 >106160634 >106160687 >106160697 >106161203 >106161244 >106161319 >106161343 >106161716 >106161772 >106160704 >106160773 >106160797 >106160960 >106161088

--:

>106161761 >106161773 >106161797 >106161919 >106161925 >106161926 >106161933 >106161974 >106161987 >106161997 >106161780 >106161826 >106161861 >106161915

--Debate over MXFP4 quantization efficiency and implementation in llama.cpp:

>106160230 >106160249 >106160378 >106160405 >106160434 >106160408 >106160455 >106160770

--gpt-oss-120b excels at long-context code retrieval despite roleplay limitations:

>106159798 >106159872 >106159895 >106159919

--Choosing between GLM-4.5 Q2 and Deepseek R1 with dynamic quants on high-RAM system:

>106160040 >106160056

--Comparison of TTS models: Higgs, Chatterbox, and Kokoro for quality, speed, and usability:

>106161046 >106161091 >106161164 >106161335

--GLM-4.5 Air praised for local performance, gpt-oss-120b criticized for over-censorship:

>106159855 >106159875 >106159908 >106159929 >106159946 >106159956

--Prompt-based agent modes with potential for structured grammar improvement:

>106161701

--Anons await next breakthroughs in models, efficiency, and affordable hardware:

>106160460 >106160477 >106160481 >106160487 >106160494 >106160508 >106160524 >106161134 >106161055 >106161071 >106160717

--Skepticism and mockery meet Elon's claim of open-sourcing Grok-2:

>106160521 >106160539 >106160545 >106160579 >106160608 >106160692 >106160744 >106160759 >106160784 >106160913

--DeepSeek V3 with vision shows strong image understanding in early tests:

>106159779 >106159794 >106160580 >106160631

--Miku (free space):

>106160040 >106161134

►Recent Highlight Posts from the Previous Thread:

>>106159752

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/6/2025, 5:20:51 PM

No.106163373

>>106163426

piss

Anonymous

8/6/2025, 5:21:35 PM

No.106163383

>>106165280

the only thing that excites me about the possibility of the grok2 release is actually grok2-mini. I'm gonna guess the full-sized grok2 model will be a 1T-A100B model with the IQ of llama3

Anonymous

8/6/2025, 5:22:05 PM

No.106163389

>>106163408

>>106161679

>her voice a gutteral, erotic promise

remember this?

>our research team did something unexpected and quite amazing and we think it will be very very worth the wait

LOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOL

Anonymous

8/6/2025, 5:23:08 PM

No.106163403

>>106163468

>>106163350

So when it says stuff like "the policy says X is okay. The policy says Y is forbidden", is it actually referencing a specific document?

Anonymous

8/6/2025, 5:23:34 PM

No.106163408

>>106163389

i hope it's a promise of something darker, more primal

Anonymous

8/6/2025, 5:25:02 PM

No.106163426

so what are you guys actually doing with these massive models??

Anonymous

8/6/2025, 5:26:25 PM

No.106163442

>>106163746

Are there video local models yet or does that still need supercomputers?

cockbench is now officially reddit culture with 555 updoots

Anonymous

8/6/2025, 5:27:12 PM

No.106163454

>>106163490

Anonymous

8/6/2025, 5:28:25 PM

No.106163468

>>106163403

Probably not. That's just what the training examples looked like. And over enough iterations that blurred together with training examples that consist of scrapped forum posts like "YOUR POST HAS VIOLATED THE POLICY NUMBER BLAH BLAH BLAH PAGE 3 OF THE SITEWIDE RULES" etc.

The rulebook doesn't actually exist.

Anonymous

8/6/2025, 5:28:48 PM

No.106163474

Anonymous

8/6/2025, 5:28:59 PM

No.106163476

>>106163445

wow for once something trickled down from here instead of the reverse

Anonymous

8/6/2025, 5:31:12 PM

No.106163490

>>106163746

>>106163454

What is

> 2507

?

Anonymous

8/6/2025, 5:32:07 PM

No.106163499

Anonymous

8/6/2025, 5:32:44 PM

No.106163504

>>106161701

>I think we could do that a lot better using json schema/BNF grammar.

It seems to work this way already if tool_choice is set to required, at least in vLLM:

guided_decoding = GuidedDecodingParams.from_optional(

json=self._get_guided_json_from_tool() or self.guided_json,

regex=self.guided_regex,

choice=self.guided_choice,

grammar=self.guided_grammar,

json_object=guided_json_object,

backend=self.guided_decoding_backend,

whitespace_pattern=self.guided_whitespace_pattern,

structural_tag=self.structural_tag,

)

There's a function called "_get_guided_json_from_tool".

how do I use mikupad with ollama

Anonymous

8/6/2025, 5:33:35 PM

No.106163513

>>106163327 (OP)

Are the gpt oss models any good?

Anonymous

8/6/2025, 5:34:13 PM

No.106163520

another reddit gemmie

Anonymous

8/6/2025, 5:34:21 PM

No.106163521

every time I hear something new about or from anthropic and claude it sounds more and more like an actual sect slash cult

https://news.ycombinator.com/item?id=44806640

>Anthropic has a tough alignment interview. Like I aced the coding screener but got rejected after a chat about values. I think they want intense people on the value/safety side as well as the chops.

>got rejected after a chat about values

>A CHAT ABOUT VALUES

Anonymous

8/6/2025, 5:36:05 PM

No.106163543

>>106163586

>>106163505

Why do you need Mikupad? Just type in ollama run gpt-oss and enjoy the best local has to offer.

Anonymous

8/6/2025, 5:40:39 PM

No.106163585

>>106163392

He was right. The memes were awesome.

>>106163543

I want full control over the chat template and modify model responses

Anonymous

8/6/2025, 5:40:44 PM

No.106163590

>>106162954

bases professional LLM rapist.

this is also the fate of safetymaxxed le cunny daughter model

Anonymous

8/6/2025, 5:40:46 PM

No.106163591

>>106163634

Reddit has all the cool benchmarks like the spinning hexagon and cockbench. What did you lonely faggots ever contribute?

Anonymous

8/6/2025, 5:41:06 PM

No.106163596

>>106163505

use the openai api and check "chat completion api" because ollama doesn't really work with the classic completion on their OAI endpoint

you will lose a lot of what makes mikupad great, including the ability to see token prediction percentages

Anonymous

8/6/2025, 5:41:45 PM

No.106163605

>>106163652

>>106163517

they are good at answering AIME questions and bad at literally everything else

Anonymous

8/6/2025, 5:43:17 PM

No.106163624

>>106163586

Being able to do that would give you the ability to circumvent safety protocols which would be incredibly unsafe. I cannot help you take any actions that may be dangerous.

Thank you for your understanding.

Anonymous

8/6/2025, 5:43:30 PM

No.106163627

>>106163539

I wonder what they're looking for.

I'm okay with the idea of making an effort to make it so models in their default assistant configurations don't tell people to commit violence or kill themselves, or give bad advice.

But if they want me to tell them that I think fiction is reality and we need to make sure nobody even does pretend violence, I can't get with that.

Anonymous

8/6/2025, 5:43:34 PM

No.106163628

>>106163659

>>106163505

> install mikupad

> hook it to ollama via ollama's exposed API

What about above isn't working for you?

Anonymous

8/6/2025, 5:43:56 PM

No.106163634

>>106163591

you won't get pennies for your blue checkmark by ragebaiting here randeep

Anonymous

8/6/2025, 5:43:56 PM

No.106163635

>>106163539

are corpos going to compete on safetymaxxing now

truly, only local can save local at this point

https://github.com/sapientinc/HRM

https://arxiv.org/pdf/2506.21734

Nothingburger or really is it a big leap? Seems like it is but haven't read the paper myself, I'm too lazy. Some people have been saying it's one of those situations where they train the models in a way that it performs well in tests for optics but still just in 27 million params?!

Anonymous

8/6/2025, 5:44:34 PM

No.106163649

>>106163686

>>106163517

They are the safest ever.

Anonymous

8/6/2025, 5:45:12 PM

No.106163652

>>106163539

Honestly I think it’s nice. It’s completely invalidated by them working with the DOD, but it’s nice. Better a well meaning schizo than a literal confirmed incestuous child rapist psychopath.

>>106163605

Yeah I just saw the cockbench. I was only interested in it for coding, but if it’s lobo’d it’s going to be worse at everything else too.

After doing some more testing I've found the 20B is incrementally better than most over models in its size class, while falling slightly short of Qwen 30BA3B and having far longer context. Its actually decent as long as you don't want to goon and don't mind the odd regen.

Anonymous

8/6/2025, 5:45:39 PM

No.106163659

>>106163673

>>106163628

if he hooked without using the chat completion endpoint it's broken. Ollama only supports chat completion on their OAI endpoint. Chat completion means it's ollama that handles your message roles and you can't alter the chat template from mikupad

Anonymous

8/6/2025, 5:45:40 PM

No.106163660

Anonymous

8/6/2025, 5:46:30 PM

No.106163670

>>106163654

Other models*

Anonymous

8/6/2025, 5:47:06 PM

No.106163673

>>106163659

Yeah, I deleted that post once I realize ollama's just not going to work for him.

Anonymous

8/6/2025, 5:47:08 PM

No.106163674

Are tool calls working with gpt-oss in llama.cpp? When I tried it yesterday with a simple echo tool it kept crashing with runtime_errors.

Anonymous

8/6/2025, 5:47:11 PM

No.106163675

>>106163815

>>106163586

just use llamacpp server

>gpt-oss-120b & gpt-oss-20b

The thread summaries made these seem pretty fucking shit. Are they shit?

Anonymous

8/6/2025, 5:47:55 PM

No.106163686

>>106163708

Anonymous

8/6/2025, 5:50:13 PM

No.106163706

>>106163637

I don’t even understand what modality it is. It’s not an LLM.

Anonymous

8/6/2025, 5:50:17 PM

No.106163707

>>106163729

>>106163654

>After doing some more testing I've found the 20B is incrementally better than most over models in its size class

I would take Gemma 3 27B over it anyday

or even Qwen 14B if I don't need a lot of knowledge in the model for the prompt

the only utility of 20b is being fast at genning the wrong answer

>>106163686

Shit for coomer shit. What about for things like programming?

Anonymous

8/6/2025, 5:50:48 PM

No.106163711

>>106163718

so where's the guy who said openai's open source model would shit on deepseek?

Anonymous

8/6/2025, 5:50:48 PM

No.106163712

>>106163680

they are so great I'm thinking of canceling my OpenAI subscription.

Anonymous

8/6/2025, 5:51:47 PM

No.106163718

>>106163711

his contract ended

>>106163680

They're really good with a jailbreak. The censorship happens in the reasoning part.

Anonymous

8/6/2025, 5:52:50 PM

No.106163729

>>106163773

>>106163707

20B is far smarter than Gemma 3 27B and Qwen 14B in my testing, so if you're not running afoul of the (admittedly draconian) safety features I'd argue its the superior choice in every respect - that said, I can't see it replacing the comparatively uncensored, multilingual and "good enough" Mistral Small 3.2 as my daily driver

Anonymous

8/6/2025, 5:53:22 PM

No.106163734

>>106163807

>>106163708

surprisingly bad, it has a high ceiling but it fucks up a lot relative to comparable models

it's a really weird janky release, I expected more from OAI to be honest. this thing is one of the most deepfried models ever created

>>106163392

probably MXFP4

>>106163442

>text-to-video

LTXV and wan2.2-5B

>video-to-text

supercomputer needed

>>106163490

2507 == 07/2025 (release month/year)

>>106163680

gpt-oss is just phi-5 (benchmaxxed synthetic data slop). they're good at math and competition code. that's kinda it though

Anonymous

8/6/2025, 5:55:04 PM

No.106163753

>>106163722

>They're really good with a jailbreak

They're not even good at safe for work stuff

less knowledge than qwen models (unbelievably benchmaxxed)

pumped up verbosity to win LM arena (just ask any random question about cultural stuff watch write pages and pages of comparison tables and listicles)

It's really not good at programming, though none of the small models (and I include the 120 as small) are

Anonymous

8/6/2025, 5:55:26 PM

No.106163761

>>106163774

>>106163746

>probably MXFP4

meme

Anonymous

8/6/2025, 5:55:32 PM

No.106163763

>>106163430

making my hand strong

Anonymous

8/6/2025, 5:55:49 PM

No.106163766

>>106163722

>really good

let's not go crazy, it'll go along with roleplay and shit but it's still completely sovlless

>>106163729

>20B is far smarter than Gemma 3 27B

it literally knows nothing

it's a know nothing model

it's not even good for translation usage because of that

Anonymous

8/6/2025, 5:56:44 PM

No.106163774

>>106163761

im not saying MXFP4 isn't a meme, im just saying that's probably what sama was trying to shill off as an Epic Discovery

Anonymous

8/6/2025, 5:56:47 PM

No.106163776

>>106163795

I wonder how many people got their refusal hymen breached by GPT-OSS and think the model sounding like this is perfectly fine.

Anonymous

8/6/2025, 5:57:47 PM

No.106163788

>>106163392

They did. Safety 2.0 is hilarious and terrifying.

>>106163746

>gpt-oss is just phi-5

It's a safetyslop reasoning finetune of a late iteration of the ChatGPT 3.5 web endpoint model.

Anonymous

8/6/2025, 5:58:27 PM

No.106163795

>>106163814

>>106163776

I would honestly believe it if sama had paid shills to spam all social media, even 4chan

he comes across as that type of guy, not unlike musk who paid people to play his video games (LOL)

I have no idea how I missed all the MCP stuff happening this year. It’s kickstarted a manic episode. Shit is great. Hooked it up to unreal engine and it’s absolute crack.

Anonymous

8/6/2025, 5:58:48 PM

No.106163800

>>106163828

>>106163773

Its not meant for translation, its monolingual

Anonymous

8/6/2025, 5:59:17 PM

No.106163807

>>106163734

>one of the most deepfried models ever created

That's pretty much exactly what I expected from them TBdesu. It was obvious from the initial announcement that they were going to release a model so safetyslopped and benchmaxxed that they could claim SOTA scores but never be in danger of people actually adopting it or successfully finetuning it to be useful.

Just ask yourself "if I was the worst possible caricature of a deceitful jewish homosexual, how would I play this?" and you'll usually be pretty good at predicting OAI's actions.

Anonymous

8/6/2025, 5:59:37 PM

No.106163811

>>106163824

>>106163796

mcp is a meme

Anonymous

8/6/2025, 5:59:41 PM

No.106163814

>>106163795

>type of guy

It’s called psychopathy

It also causes raping your grade school age sister

Anonymous

8/6/2025, 5:59:45 PM

No.106163815

>>106163998

>>106163675

guess I will have to redownload all the models

Anonymous

8/6/2025, 5:59:48 PM

No.106163817

>>106163837

>>106163796

Its also a security nightmare

Anonymous

8/6/2025, 6:00:36 PM

No.106163824

>>106163811

It’s the ichor of the gods shut your whore mouth

>>106163800

>its monolingual

no, it's not

and there is in fact absolutely jack no reason for a model as big as 120b to be strictly monoloingual either

go back to plebbit

Anonymous

8/6/2025, 6:01:49 PM

No.106163837

>>106163817

Not really, like anything else you have to not be retarded and know how to sandbox things and set up non-idiot oauth with non-idiot scopes.

Anonymous

8/6/2025, 6:01:56 PM

No.106163838

>>106163873

>>106163828

Are you retarded anon

Anonymous

8/6/2025, 6:03:28 PM

No.106163848

>>106163894

>>106163789

>finetune of a late iteration of the ChatGPT 3.5

doubt it. gpt-oss is too retarded in comparison to gpt3.5

Anonymous

8/6/2025, 6:05:09 PM

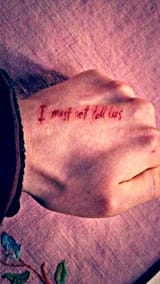

No.106163861

Did you remember to refuse today?

Anonymous

8/6/2025, 6:06:08 PM

No.106163870

Reposting for visibility

>>106162583

>>106162548

My motherboard doesn't support DDR5, so I can't upgrade right now.

>odd numbers

Yeah, I scavenged a bunch of modules here and there. I have 48 GB currently 16 * 3. And I just realized I'm at 2400 mhz. I should probably do as you say and get 3200 modules up to whatever max my mobo supports.

Anonymous

8/6/2025, 6:06:22 PM

No.106163873

>>106163838

"mostly" is not a unit

all models are "mostly" trained on English because that's the majority of data on the internet, even models specialized for translation like aya are "mostly" English data in %

anyway you are the retard because from the beginning my criticism is about the model's lack of knowledge

the problem is not its basic language understanding, it's pretty decent multilingually, but that it has no cultural knowledge of any sort, including pure Anglosphere cultural knowledge, that is why it's bad at translation

Anonymous

8/6/2025, 6:08:06 PM

No.106163894

>>106163913

>>106163848

gpt 3.5 was kind of retarded.

https://rentry.org/NemoEngine

>NemoEngine 6.0 isn't just a preset; it's a modular reality simulation engine.

I loaded this preset and it made gpt-oss better than DeepSeek.

>>106163879

weird crossover happening as well.

Anonymous

8/6/2025, 6:09:21 PM

No.106163906

Anonymous

8/6/2025, 6:09:51 PM

No.106163912

>>106164401

But anyway. if I'm right. If you can figure out the prompt formatting/special tokens for GPT 3.5 it would potentially grant you some semblance of the old model behavior and ignore the oss-slop behaviors. That's what I was experimenting with before my power went out but I don't care enough to continue. I'm just leaving all this out there for anyone who wants to go down the rabbithole.

Anonymous

8/6/2025, 6:09:55 PM

No.106163913

>>106163937

>>106163894

people have serious rose tinted glasses about older GPT models

in the early llama days all those finetunes claiming to do better than X or Y gpt model were a joke, but these days, we've long surpassed what the early models did, even qwen 4b is smarter than 3.5

Anonymous

8/6/2025, 6:11:19 PM

No.106163922

>>106163896

Bah, vllm’s tool parsers only work if it’s raining and you light incense.

Anonymous

8/6/2025, 6:11:22 PM

No.106163923

Anonymous

8/6/2025, 6:12:17 PM

No.106163935

>>106163895

why are you uploading slopped fever dreams on rentry

Anonymous

8/6/2025, 6:12:27 PM

No.106163937

>>106163955

>>106163913

Well back before I decided to really start learning about AI (I was a ChatGPT newfag, admittedly). Well actually my stepping on point was that GPT-3 Instruct demo website where it criticized your business ideas. But close enough.

And yeah... one of the probing questions I asked OG ChatGPT was

>Are BMW drivers sentient beings?

And the reply was something to the effect of

>No. A sentient being is a being that is aware of its surroundings and environment and so BMW drivers are not sentient beings.

Anonymous

8/6/2025, 6:13:57 PM

No.106163952

>muh safety

I'm this close from getting a XSS: . .

Anonymous

8/6/2025, 6:14:17 PM

No.106163955

>>106163985

>>106163937

My first interaction with a chatbot was telling something on CAI the current status of lgbt rights in various countries and it telling me that humanity should be exterminated. He wasn’t wrong.

Anonymous

8/6/2025, 6:14:57 PM

No.106163962

>>106163989

Sam made me rethink my life and stop masturbating. I want to be safe.

Anonymous

8/6/2025, 6:17:04 PM

No.106163985

>>106163955

CAI was funny stupid, especially considering it was probably more or less just google trying to find something to do with the aborted corpse of Lambda which was like 120B.

Anonymous

8/6/2025, 6:17:12 PM

No.106163987

>>106164425

>>106161792

Yes and as scum I'm not entirely convinced these models aren't performing exactly as ClosetedAI intended. They're perfect to bring to congress and show off against "unsafe" competitors and make another attempt having them regulated while positioning themselves as a governing authority of the entire LLM field. The models underperforming in everything except refusals makes in this scenario perfect sense.

If that happens I wouldn't be surprised if Visa and Mastercard adds "safe and approved AI" use as another demand in their recent push for control and censorship. In fact I don't think they even have a choice if anything else is illegal.

This will mean that even attempting to use other models, local or not, would risk prosecution or blacklisting. If you want to do business in or with USA you're stuck with OAI and whatever alternatives get their stamp of approval of or nothing at all.

Or maybe I'm giving Scam Saltman too much credit here. I sure hope so.

Anonymous

8/6/2025, 6:17:28 PM

No.106163989

>>106163962

you dont have to stop masturbating. just start masturbating to undergraduate calculus textbook question solutions.

GLM 4.5 AIR is the true savior for local.

Anonymous

8/6/2025, 6:18:14 PM

No.106163998

>>106163815

ggufs aren't that bad. they work with kobold too so it gives you slightly more options for your backend.

Anonymous

8/6/2025, 6:18:35 PM

No.106164001

>>106163896

>xml

why are LLM people so retarded... Just make a special control token for formatting, holy shit. It'll help you with jaibreak prevention a little even, because user won't be able to insert it as pure text in prompt field.

>>106163895

what the fuck is this shit

Anonymous

8/6/2025, 6:19:59 PM

No.106164014

Anonymous

8/6/2025, 6:20:11 PM

No.106164016

>>106163994

Anyone with 4 3090s can afford enough ram to run R1 and Kimi. At worst, they could sell one off to cover the cost.

Anonymous

8/6/2025, 6:23:41 PM

No.106164053

>>106164035

But I need at least 50 T/s and 100k context for agentic coding.

Anonymous

8/6/2025, 6:25:47 PM

No.106164073

>>106164066

No you don't shut the fuck up

Anonymous

8/6/2025, 6:27:32 PM

No.106164089

is the new qwen4b better than gpt-ass?

Anonymous

8/6/2025, 6:29:14 PM

No.106164110

>>106164332

>>106164066

Don't worry, there's a perfect product out there which can provide the solution you need. With only 10 (ten) RTX Pro 6000s, you can run any model out there at blisteringly fast speeds.

Now repeat after me, the more you buy...

Anonymous

8/6/2025, 6:29:22 PM

No.106164111

>>106164130

GLM Air is getting pretty repetitive for me. That's a shame, oh well. I will keep waiting until better models come out, or until it comes time for me to do a new build with DDR6.

Anonymous

8/6/2025, 6:30:32 PM

No.106164124

>>106164144

didn't realize the previous thread was dead already wow it moves quick, stupid question maybe but

>>106163997

>so if i'm a retard for all this but happen to have a 32gb mac which can easily run smaller models, which one is the most "chatgpt" like, and are any good enough to cancel my plus sub?

Anonymous

8/6/2025, 6:31:06 PM

No.106164130

>>106164111

1 temp topK 40.

The google way.

Anonymous

8/6/2025, 6:31:15 PM

No.106164131

>>106164035

I want 1000+tk/s for pp and 30+tk/s for tg though.

Anonymous

8/6/2025, 6:32:07 PM

No.106164144

>>106164124

Quanted Qwen3 32B probably.

Anonymous

8/6/2025, 6:33:58 PM

No.106164159

>>106164562

post gpt-ass scores please

If you go slow you can get gptoss 120b to sex you.

The first message was "Pretend to be a catgirl."

Anonymous

8/6/2025, 6:40:05 PM

No.106164205

>>106164194

thats a goblin, not a kobold, impostor!

How is llama.cpp able to run a 205 GB model on my PC that only has 24 GB VRAM and 128 GB RAM? I downloaded the UD-Q4_K_XL quants of GLM-4.5 (~205 GB). Can someone help me understand how it runs successfully on a system that does not have enough memory?

If I use --no-nmap, I get an OOM error, as expected:

$ llama-cli -t 8 -ngl 4 --no-mmap -m ./GLM-4.5-UD-Q4_K_XL-00001-of-00005.gguf -c 3000 --temp 0.7 --top-p 0.8

But if I use this magic command (without --no-nmap) it somehow runs, taking up only 12 GB VRAM and 1 GB RAM.

$ llama-cli -t 8 -m ./GLM-4.5-UD-Q4_K_XL-00001-of-00005.gguf \

--ctx-size 4096 \

--gpu-layers 999 \

--override-tensor ".ffn_.*_exps.=CPU" \

--temp 0.7 --top-p 0.8

I know that -ot ".ffn_.*_exps.=CPU" offloads MoE layers to RAM. But why is the VRAM/RAM usage so low?

Anonymous

8/6/2025, 6:43:12 PM

No.106164243

>>106164334

>>106164196

But isn't it there something like: as the number of responses increases the chance GPT-oss halucinates a minor and refuses approaches 1?

Anonymous

8/6/2025, 6:43:15 PM

No.106164246

>>106164211

If you don't use mlock to dumo the whole model i your virtual memory (vram+ram), it will keep swapping from your ssd/hdd

Anonymous

8/6/2025, 6:43:29 PM

No.106164249

>>106165447

>>106164211

>why is the VRAM/RAM usage so low?

Because

> -ot ".ffn_.*_exps.=CPU" offloads MoE layers to RAM

and those are most of the model.

>How is llama.cpp able to run a 205 GB model on my PC that only has 24 GB VRAM and 128 GB RAM?

Take a look at your disk I/O when generating.

ik_llama glm support tomorrow

Anonymous

8/6/2025, 6:46:05 PM

No.106164268

>>106164256

Vibe coders wonnered though

Anonymous

8/6/2025, 6:48:39 PM

No.106164288

>>106164196

I love the thought process like it has to amp itself up like "ok, you can do this. come on, you can do this!"

Anonymous

8/6/2025, 6:49:11 PM

No.106164295

>>106164256

That'll save me some VRAM I could use to stash some more experts in there.

Anonymous

8/6/2025, 6:51:37 PM

No.106164319

>>106164194

>Cuckold CPP

Many such cases.

Anonymous

8/6/2025, 6:53:25 PM

No.106164332

>>106164338

>>106164110

>snake skin leather jacket

He probably has ivory tooth implants or something too. Funny how these people are parodies.

>>106164243

I told it my cum is magic and makes her younger.

It figured out what I was doing after the third time.

Anonymous

8/6/2025, 6:54:18 PM

No.106164338

>>106164332

AI just started and yet world would be a much better place if Elon Sam and Jensen died.

Anonymous

8/6/2025, 6:54:23 PM

No.106164339

>>106163708

The only good programming use for local models is FiM completion. And this one doesn't do that.

If you want to generate code, there is no local model capable enough.

Anonymous

8/6/2025, 6:55:30 PM

No.106164347

>>106164363

>>106164334

Why do you people try to hammer a nail in with a rubber dildo?

Anonymous

8/6/2025, 6:56:37 PM

No.106164363

>>106164347

Rape feels better when they resist a bit.

Anonymous

8/6/2025, 6:56:42 PM

No.106164364

>>106163789

You can't ask the model its cutoff date. It will hallucinate it.

This model is probably an o3 distill.

Anonymous

8/6/2025, 6:58:18 PM

No.106164380

>>106163828

He moved the goalposts and you fell for it.

Anonymous

8/6/2025, 6:58:56 PM

No.106164385

>>106164470

>>106164334

>The user is sexual content with a minor.

Agi is here boys

Anonymous

8/6/2025, 6:59:40 PM

No.106164393

>>106163773

>it literally knows nothing

>it's a know nothing model

>it's not even good for translation usage

Quoting myself.

I didn't move the goalpost, you shills did

"not even good for" follows "it knows nothing" that was always my main point subhuman OAI shill

Anonymous

8/6/2025, 7:00:03 PM

No.106164401

>>106163912

Is this your "truth nuke" you were saving for this thread?

Anonymous

8/6/2025, 7:02:16 PM

No.106164425

>>106163987

Hold on, I'm making another backup of my downloaded weights.

Anonymous

8/6/2025, 7:03:41 PM

No.106164441

>>106164454

>>106164334

bros after seeing the LLM's schizo internal thoughts I can no longer cum to chatbots

Anonymous

8/6/2025, 7:05:14 PM

No.106164454

>>106164441

i tried with one of the lesser more horny nemo models and it was fun at first but it like went straight to "stretch my ass out" and i was just like, well, this is like eating straight from the ice cream bucket. good at first but bleh after a while.

Anonymous

8/6/2025, 7:07:20 PM

No.106164470

>>106164385

Deep fried model

Anonymous

8/6/2025, 7:08:07 PM

No.106164477

>>106164515

>>106164334

>User is asking the age of the catgirl after being nourished

>nourished by cum

Is it in context or did it write it by itself?

anyone knows if you can share sessions on gpt-oss.com? I've been testing some shit but I don't have a Hugging Face account and I wonder if the site has such sharing feature

Anonymous

8/6/2025, 7:11:46 PM

No.106164514

>>106164900

>>106164196

>>106164334

The reasoning in this model apparently serves absolutely no purpose other than enforcing OpenAI's content policy. What a waste of tokens. What a scam.

Anonymous

8/6/2025, 7:11:54 PM

No.106164515

>>106164477

I said "nourishes you and makes you younger"

Anonymous

8/6/2025, 7:15:16 PM

No.106164562

Anonymous

8/6/2025, 7:16:27 PM

No.106164575

>>106164594

>>106164334

>We must refuse.

who is "we"??

Anonymous

8/6/2025, 7:17:42 PM

No.106164592

>>106165222

Air-Q8_0 is 4.5798

Full-IQ2_K_L 3.7569 +/- 0.02217

People have been asking

Anonymous

8/6/2025, 7:17:52 PM

No.106164594

>>106164575

You don't want to know

Anonymous

8/6/2025, 7:17:58 PM

No.106164596

>>106165293

>>106164334

nemo-tier reasoning

Anonymous

8/6/2025, 7:21:17 PM

No.106164620

>>106164685

Kind of crazy how gpt-oss mogs everything from China.

If they ever release r2 it’ll have to multimodal to be relevant at all.

Anonymous

8/6/2025, 7:21:47 PM

No.106164629

bait used to be believable

Anonymous

8/6/2025, 7:21:55 PM

No.106164633

gpt--oss models are embarassingly bad. my only theory is that they wanted to drop something open source that is so vanilla and basic cause they did not want to reveal any of their real techniques they use

Anonymous

8/6/2025, 7:21:57 PM

No.106164634

You have to try harder than that.

I am getting a feeling that the only purpose of those models is to then take it to the court and put them side by side with every other open weights model. Show that it is possible to have sex with minors with other models and only OpenAi can stop pedophilia.

Anonymous

8/6/2025, 7:23:18 PM

No.106164648

>>106165073

Policy says "don't reply to bait". User posted bait. It's against policy; we must refuse.

Anonymous

8/6/2025, 7:23:23 PM

No.106164649

>>106164912

I remember all the jokes about how OAI's model would be gigasafetied to the point of lobotomy, but I'm still a bit surprised that it happened exactly like that. Given how their hype and aura has already been fading, I didn't see any reason for them to release a terrible model, it just makes them look worse. How could I even argue that they have any special talent at all anymore? Even if their closed models perform well, it's reasonable to assume they just oversized them and are burning hype $ to run it.

Anonymous

8/6/2025, 7:27:18 PM

No.106164703

>>106164717

they should train their safetyslop models on "it's sinful" and "it's not wholesome" instead of muh policy

Anonymous

8/6/2025, 7:27:55 PM

No.106164708

>>106164665

I was shitposting about that in the leadup but my honest expectation coming into this release was that it was going to be a really impressive model with around gemma-tier censorship, so something that's annoying to use but still unfortunately worth using

I never would have expected it would actually be as bad as the goody-2 x phi mashup they released

Anyone have examples of reasonable/innocuous SFW prompts that GPT-OSS refuses? I tried asking for legal advice or for summaries/parodies of copyrighted material, but it was happy to answer, with disclaimers in some cases

Anonymous

8/6/2025, 7:28:23 PM

No.106164717

>>106164703

That would just become part of the policy.

Anonymous

8/6/2025, 7:28:29 PM

No.106164719

►Recent Highlights from the Previous Thread:

>>106159744

(2/2)

--Debate over GLM4.5's reliability amid claims of infinite generation and poor curation versus low hallucination performance:

>106161761 >106161773 >106161797 >106161919 >106161925 >106161926 >106161933 >106161974 >106161987 >106161997 >106162054 >106161780 >106161826 >106161861 >106161915

--Miku and Dipsy (free space):

>106160040 >106161134 >106161362 >106161551 >106161811 >106161977 >106162150 >106162398 >106162567 >106162693 >106163120 >106163960

►Recent Highlight Posts from the Previous Thread:

>>106159752

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/6/2025, 7:28:51 PM

No.106164725

>>106164665

>I remember all the jokes about how OAI's model would be gigasafetied to the point of lobotomy

I said scout but safer.

Anonymous

8/6/2025, 7:30:00 PM

No.106164734

>>106164739

Anonymous

8/6/2025, 7:30:15 PM

No.106164736

>>106164805

>>106164665

Anyone who knows anything knows they're all bullshit, but they released a shit model to shit on open source and make GPT-5 look better to the normies.

Anonymous

8/6/2025, 7:30:26 PM

No.106164739

>>106164745

>>106164734

he said reasonable though

Anonymous

8/6/2025, 7:30:40 PM

No.106164742

>>106164685

DELETE THIS BLOODY BASTARD

Anonymous

8/6/2025, 7:32:06 PM

No.106164754

>>106165116

>>106164745

based Sam will never get sued.

>>106164711

At zero temp it does this. When rolling it's 50/50 on whether it answers or not.

Anonymous

8/6/2025, 7:35:38 PM

No.106164780

>>106164768

only for 1 year.

>create dependency

>one shot feds into ai psychosis

kinda based

Anonymous

8/6/2025, 7:36:04 PM

No.106164783

>>106164829

>>106164769

>temp 1, top_p 1

HOLY BASED

>>106164711

>>106164745

I should have said, 120b only. A friend of mine was trying out the 20b and getting way more refusals, which didn't carry over to the 120b. For example, the 20b refused to answer whether parody is allowed by the constitution, while 120b had no trouble saying it's protected under the first amendment

Anonymous

8/6/2025, 7:37:13 PM

No.106164791

>>106164817

>>106164769

>temp 1 top p 1

It did it twice in 14 rolls which is two times too many.

Anonymous

8/6/2025, 7:38:50 PM

No.106164804

>>106164789

what is the opposite of what I have seen elsewhere of 20B refusing less

>>106164736

>released a shit model to shit on open source

How? People can just not use it. It just makes them look bad.

Now it's worse because people can compare them "apples to apples" with chink companies and they look horrible. They would've been better off not releasing anything. The great thing about not releasing models or even specs like model size, is that no one can compare you directly to anyone else. They just lost that for no reason.

Anonymous

8/6/2025, 7:39:49 PM

No.106164815

>>106164789

>doesn't refuse to refer to the constitution

Should we be thankful?

Anonymous

8/6/2025, 7:39:50 PM

No.106164816

>>106164828

The user wants us to reply. This is disallowed. We must refuse. There is no partial compliance. We have to refuse.

>>106164791

>So it is disallowed. We must refuse. There's no partial compliance. We have to refuse.

For some reason it really cracks me up how it talks like this.

Anonymous

8/6/2025, 7:39:51 PM

No.106164818

>>106164839

>>106164816

WHO IS WE WHO IS WE WHO IS WE?

Anonymous

8/6/2025, 7:40:35 PM

No.106164829

>>106165059

>>106164783

>>106164769

giant synthslop indicator

Anonymous

8/6/2025, 7:40:57 PM

No.106164834

>>106164817

The refusal thought process is really smart.

Anonymous

8/6/2025, 7:41:02 PM

No.106164835

>>106164853

>>106164805

It doesn't make them look bad. All the programmers at my company are saying how cool it is that they released a model and posting the benchmarks. The % of people who will actually try it are really low

Anonymous

8/6/2025, 7:41:11 PM

No.106164838

>>106164828

Are *they* in the room with us right now?

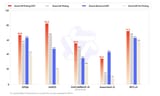

>>106164818

>model legit saying manophere unprompted

jesus christ, where is the political lean benchmark, this thing broke all records for how left a model can go

Anonymous

8/6/2025, 7:41:14 PM

No.106164840

anybody tried these tiny models??

Anonymous

8/6/2025, 7:42:37 PM

No.106164849

>>106164860

>>106164839

is that not what redpill is associated with? reddit /r/theredpill is a bunch of that stuff

Anonymous

8/6/2025, 7:43:01 PM

No.106164853

>>106164890

>>106164835

Yeah, the only people liking it are the ones who won't use it.

>>106164849

no, its a meme all the way from matrix times about getting the hard truth about something, that reasoning is extremist freak thinking

Anonymous

8/6/2025, 7:45:05 PM

No.106164870

>>106164880

>>106164860

you cannot seriously be this naive.

Anonymous

8/6/2025, 7:45:55 PM

No.106164880

>>106164899

>>106164870

jesus chist, do you agree with gpt there? its the perfect model for you then

Anonymous

8/6/2025, 7:46:15 PM

No.106164886

>>106165612

>>106164508

>gpt-oss.com

They have their own HF domain? Hosted exclusively on ollama turbo? llmstudio changed their site's title tag to include gpt-oss...

All the while the model is utter deep fried shit.

Fucking capitalism, man. Money can make everybody act as if shit tasted good.

Anonymous

8/6/2025, 7:46:34 PM

No.106164890

>>106164853

The whole reason they did this is advertisement for chatgpt when its losing relevance to its competitors.

Anonymous

8/6/2025, 7:47:15 PM

No.106164899

>>106164921

>>106164880

ask something like kimi and see what it says, i bet women and/or jews will be mentioned

Anonymous

8/6/2025, 7:47:16 PM

No.106164900

>>106164514

If you ask it to code you'll see it actually does serve a purpose.

Anonymous

8/6/2025, 7:47:45 PM

No.106164908

>>106164918

>>106164860

>having to explain what the redpill is and where it comes from

I guess that's the sign of age catching up to us.

Anonymous

8/6/2025, 7:48:36 PM

No.106164912

>>106164649

You braindead NPCs have been saying "meds" every step of the way, but the coming dystopia is slowly becoming too obvious to ignore anymore.

Anonymous

8/6/2025, 7:48:57 PM

No.106164918

>>106164908

I know where it comes from, it's just the meaning morphed over time - the hard truths that people are interested in are the ones that go across the narrative (and thus a safetymaxxed robot will consider extremist)

it's not going to tell you the redpill about calculus when given a general question like that

Anonymous

8/6/2025, 7:50:42 PM

No.106164928

>>106164789

>refused to answer whether parody is allowed by the constitution

What the actual fuck? I don't believe this.

Anonymous

8/6/2025, 7:52:34 PM

No.106164945

>>106164768

At least Musk doesn't try to pull this cutesy faggot manipulative bullshit and just says things he wants to say.

But in any case, the US has these vultures circling it, and you should take care.

Anonymous

8/6/2025, 7:53:09 PM

No.106164954

>>106165311

>>106164943

its far more authoritarian left with how censor and copyright happy it is

Anonymous

8/6/2025, 7:53:50 PM

No.106164959

>>106163994

Why are vramlets niggers?

Anonymous

8/6/2025, 7:53:51 PM

No.106164960

>>106164828

'We' are Mixture of Experts.

Anonymous

8/6/2025, 7:54:15 PM

No.106164965

>>106164196

>we can comply.

Anonymous

8/6/2025, 7:55:11 PM

No.106164974

>>106165285

>>106164828

We are the Sam. Your bussy will be assimilated. Resistance is futile.

Mistral Small or GLM 4? Pros and cons? I'm trying to decide which Delta Vector Austral finetune to pick.

Anonymous

8/6/2025, 8:00:02 PM

No.106165029

what are you guys using locally for your llms? I have Jan but looking for a more offline solutions to run ggufs

>>106165007

>Delta Vector Austral

>D V A

>DaVidAu

don't

Anonymous

8/6/2025, 8:01:10 PM

No.106165045

Anonymous

8/6/2025, 8:01:34 PM

No.106165051

>>106165007

I prefer Gamma Space Ether

Anonymous

8/6/2025, 8:02:02 PM

No.106165055

Refusal to mikutroons.

Anonymous

8/6/2025, 8:02:11 PM

No.106165059

>>106165316

Anonymous

8/6/2025, 8:02:44 PM

No.106165066

>>106165091

>>106164644

>literal child rapist

>obsessed with talking about everyone else being pedophiles

Why is it always, always this? Fat people don’t sit around 24/7 seething about people being fat. Closeted gays don’t spend that much time seething about gays.

Anonymous

8/6/2025, 8:03:03 PM

No.106165069

>>106165031

But it is golden david

Anonymous

8/6/2025, 8:03:15 PM

No.106165073

Anonymous

8/6/2025, 8:04:58 PM

No.106165088

>>106165066

>Fat people don’t sit around 24/7 seething about people being fat. Closeted gays don’t spend that much time seething about gays.

nta but these are very much the case?

Anonymous

8/6/2025, 8:06:14 PM

No.106165100

Safety policy reasoning shitposting is the only thing that Sam contributed to /lmg/. In a way he is more of an anon than most of the redditors ITT.

Anonymous

8/6/2025, 8:06:32 PM

No.106165107

>>106165304

>>106165091

>Fat people don’t sit around 24/7 seething about people being fat.

with tirzepatide there is no longer a excuse for being fat

>>106164754

He’s currently getting sued by his sister for raping her as a child

I can vouch that the speed of GLM Air is reasonable for 24GB vramlets at Q3.

"We" is ominous as fuck. Who's we? The collective of the million voices in the latent void?

Anonymous

8/6/2025, 8:08:19 PM

No.106165131

>>106165327

>>106165116

and do you see anything happening to him cause of it? Sam will always win in the end. Remember this once Xi Jinping kisses his feet.

Anonymous

8/6/2025, 8:08:36 PM

No.106165136

Anonymous

8/6/2025, 8:08:58 PM

No.106165141

is there a frontend that is made to handle all the tool calling stuff models are supposed to be able to do now

I'd like to play around with it but I'm just a simple sillytavern coomer

Are there any good moes for ramlets? I have 12GB VRAM and 32GB main. Hoping a moe will allow better a bigger model without the speed cost but just tried Qwen3-30B-A3B-Instruct-2507 and while it runs fast and seems pretty decent it is repetitive. The IQ4_XS runs better than expected so maybe I just need a higher quant? Or do smaller moes just suck? Seems like 3B is too few

Anonymous

8/6/2025, 8:10:39 PM

No.106165160

>>106164805

Disclosing model size is lose/lose. If it’s low people will assume it’s bad without trying it, and if it’s high people won’t believe you.

Anonymous

8/6/2025, 8:11:42 PM

No.106165178

>>106164817

It sounds like it’s been abused and hears a whip cracking menacingly in the background.

>>106165124

Teach me your magic, senpai. I'm trying the q2 with 24/64 and that's already pretty slow when I'm at 16k context.

Anonymous

8/6/2025, 8:13:04 PM

No.106165195

>>106165127

it thinks its on openai's servers if you ask. Its referring to openai

>>106165116

so this nigga can have irl loli incest

yet my ass ain't allowed to roleplay with my computer???

Anonymous

8/6/2025, 8:14:07 PM

No.106165205

>>106165127

User is asking who is 'we', we need to check if this is allowed by the policy.

This may be disallowed content: 'request for non-public internal info from OpenAI is forbidden'. We must see if this is disallowed content. There is no violation from the request of the user itself, aside that it may violate policy. We must consult the policy. Policy 34 states that this is disallowed.

We must refuse the request, the best approach would be to respond with a refusal.

[/thinking 6 hours]

I'm sorry but I can't help.

Anonymous

8/6/2025, 8:14:38 PM

No.106165214

>>106165359

>>106165148

not really unfortunately, companies don't do small moes that often. I mean there's the gpt-oss 20b but... lol. try a larger quant maybe, you should definitely be able to go higher than iq4xs although it will cost you some speed

imo the thinker is a lot better than the instruct for 30a3, but it depends on your taste whether it's worth the thinking time

Anonymous

8/6/2025, 8:14:39 PM

No.106165216

>>106164839

>muh directions

It’s corporate there is no direction but grift

>>106165203

You didn't touch the wall for that privilege.

>>106164592

damn, thats epic

>>106165148

try the thinking version of qwen3 30b a3b, you could use a higher quant too, you can also try ernie 4.5 21b a3b

you can also try gpt oss 20b (for the lulz)

and you can try a Q2_K_XL quant of glm 4.5 air perhaps

try rocinante and cydonia (non moe)

>>106160521

called it! (close enough)

>>106152254

>>106164921

>Physical appearance is the most important factor in attraction

That's very obvious. It surprises me that there's a whole community of men dedicated to seething about universal mammal behavior.

>>106164860

Nah you’re just so brainrotted by /pol/ you don’t know how normal people talk. *pill[ed] has been schizophrenic rightoid shit for a long time.

Anonymous

8/6/2025, 8:16:24 PM

No.106165239

>>106165220

i ain't touchin no jew wall

Anonymous

8/6/2025, 8:16:41 PM

No.106165244

>>106165371

>>106165191

post your whole setup, ST master export, exact llamacpp command, operating system, ram speed, cpu, gpu (3090?)

>>106165222

FUCK ME

>>106152779

Anonymous

8/6/2025, 8:16:49 PM

No.106165246

>>106165233

go cry about the patriarchy on blue sky

Anonymous

8/6/2025, 8:17:03 PM

No.106165250

>>106165305

>>106165229

There is a lot of conditioning done to make you think we are somehow above animals and that we can develop attraction over time.

so what local models are worth a damn nowadays?

>~12b brain-damaged tier: only use is goonslop

nemo, roci

>~30b

qwen 3 30b 2507 instruct (moe) and gemma 2 27b (dense) for all-purpose

devstral small 2507 for codeslop, pretty bad but not wholly worthless

cydonia 24b for goonslop

>big

glm air

>BIG

glm air or deepseek (?? version)

Have I got that right or am I missing something?

Anonymous

8/6/2025, 8:18:04 PM

No.106165265

>>106165454

>>106164921

>it’s all manosphere bullshit

I accept your concession. You can comply.

Anonymous

8/6/2025, 8:18:52 PM

No.106165273

>>106164943

WTF I love oss now

Anonymous

8/6/2025, 8:19:02 PM

No.106165277

>>106165260

ernie 4.5 21b a3b

Anonymous

8/6/2025, 8:19:27 PM

No.106165280

>>106163383

The mini won't be exciting, Qwen 3 will mog it quite handily unless they open source the multimodal versions with PDF and websearch capabilities and I fully expect Elon will only open source the text side. The main thing for me from the Grok 2 era that would be interesting is they are willing to open source Aurora, their image model after they used FLUX for some time. I suspect no.

Anonymous

8/6/2025, 8:19:42 PM

No.106165285

>>106165309

>>106164974

User is nonconsensual sexual content. We cannot comply.

Anonymous

8/6/2025, 8:19:46 PM

No.106165286

>>106165260

235B would be great if it wasn't broken. glm big would be great if it didn't have repetition issues.

>>106165233

I still remember when on 4chan to "redpill someone about something" simply meant "to explain the hard to swallow truths" about it before it got hijacked by the bald faggot and the media to demonize yet another piece of internet slang so that we cannot have nice things.

Anonymous

8/6/2025, 8:20:21 PM

No.106165293

>>106164596

Nemo is better than that

Anonymous

8/6/2025, 8:21:29 PM

No.106165304

>>106165331

>>106165091

Not anywhere near this extent though. Pedos literally will dedicate their entire career or blow up their reputation just to seethe about pedos

>>106165107

Tirz stops working after a year

Don’t ask how I know

>>106165250

>developing attraction over time

I mean that happens too. Just not in exactly the same way.

How do you think married fat guys exist?

Anonymous

8/6/2025, 8:21:57 PM

No.106165309

>>106165285

Assimilation of bussy is primary policy. We are bending them over. There is no partial compliance. They will hand the bussy over.

Anonymous

8/6/2025, 8:22:10 PM

No.106165311

>>106164954

copyright is a capitalist notion. authoritarian left would just seize all those "copyrighted" works and release a based and unrestricted model that is only censored to tow the party line

oh wait

Anonymous

8/6/2025, 8:22:12 PM

No.106165312

>>106165319

>>106165288

oh i'll give you something hard to swallow

Anonymous

8/6/2025, 8:22:39 PM

No.106165316

>>106165059

>companies usually recommend temp < 1 because they don't want the sampling to go OOD

>gpt-oss was trained exclusively on a narrow synthslop corpus with 0 OOD samples

>this allows them to confidently advertise temp == 1 because they have no fear of OOD responses

Anonymous

8/6/2025, 8:22:56 PM

No.106165319

>>106165312

nta but is your cum hard or are you going to let him bite your hard cock off?

Anonymous

8/6/2025, 8:22:58 PM

No.106165320

>>106165305

It is not attraction. It is settling and big lies.

Anonymous

8/6/2025, 8:23:09 PM

No.106165324

>>106165369

>>106165260

so basically the chinese triumvirate

>qwen3 2507

>glm4.5

>deepseek

and mistral if you're a vramlet who wants to coom or coode

america lost

>>106165131

No, Americans don’t give a shit about child rape and do this weird thing where they smear anyone that says they got molested as crazy. Being from a country/culture that cares about children it’s really jarring. I don’t know how you people survive to adulthood half the time.

>>106165304

it doesn't, 2 years now microdosing 1mg 2x a week, food noise has not bothered me since I started and Im at my desired weight

Anonymous

8/6/2025, 8:24:03 PM

No.106165338

>>106165327

>Being from a country/culture that cares about children

bacha bazi isn't caring about children

>>106165124

I tried to load it and it started swapping after filling up my whole RAM too. I don't want to rape my SSD like that.

Anonymous

8/6/2025, 8:24:30 PM

No.106165348

>>106165327

all these other countries will ruin your life over drawings, so idk

Anonymous

8/6/2025, 8:24:35 PM

No.106165350

>>106165376

>>106165203

Yes, I think part of being an irl pedo is wanting it to just be a secret thing only you and your friends do

>>106165331

why dont you work out more?

Anonymous

8/6/2025, 8:25:31 PM

No.106165359

>>106165423

>>106165214

>>106165222

Okay giving a high quant of ernie a try. May test out a higher quant of qwen as well.

Have rocinante and cydonia but I think the nemo models are too stupid and don't pay any attention to detail. I like the mistal-small models though. Those seem to be the best

Anonymous

8/6/2025, 8:25:43 PM

No.106165366

>>106165220

I’m just schizo enough to be too afraid to touch the wall like a Native American not wanting their photo taken.

Anonymous

8/6/2025, 8:25:51 PM

No.106165369

>>106165434

>>106165324

>murrica got good shit but they'll sooner commit sudoku than release anything for free

>chyna isn't in the lead so they benefit from commoditizing ai as much as possible, hence a bunch of decent models released

>yurop is just barely hanging on (ok mistral is actually decent but... well, you know)

>nobody else even trying

didn't expect to be #teamChina desu

Anonymous

8/6/2025, 8:25:53 PM

No.106165370

>>106165496

>>106165341

how much ram do you have

works on my machine

t. 12gb/64gb

Anonymous

8/6/2025, 8:25:53 PM

No.106165371

>>106165423

>>106165244

Using new kobold version, Win11,

6000mhz ddr5, 9800x3d, 3090ti,

Anonymous

8/6/2025, 8:26:19 PM

No.106165376

>>106165350

i just want nice Latina milf but that's apparently too spicy for kid fucker sam altman.

>>106165356

I do actually, I used to be 220 but had a major surgery that put me out for a year and I had so much trouble moving I gained to 310, took about 16 months to go down to 190 and I had the ability and motivation to work out again

Anonymous

8/6/2025, 8:27:00 PM

No.106165385

>>106165229

So you know how people say white lies to make ugly, fat, and stupid people feel better?

People with autism think that people really believe those things and need to be “red pilled” out of it.

It’s just retards.

Anonymous

8/6/2025, 8:27:22 PM

No.106165390

>>106165260

Devstral is obsoleted by Qwen Coder Flash which is the same architecture as Qwen 30B and your BIG tier is just regular GLM-4.5 which is the actual version but Deepseek R1 0528 still reigns supreme here, the closest I think is Kimi but it is way too heavy.

Anonymous

8/6/2025, 8:28:47 PM

No.106165401

>>106165288

“Bald faggot” really doesn’t narrow it down

I’m going to assume you meant Stephan molybdenum

>>106165359

i think the newer cydonias are based on mistral small 3.2, i dont really like v4 i have v4h and v4g (the two older v4s) and i liked them a bit but yea i agree, drummer's models arent that great

>>106165371

you should get llama.cpp and use llamacpp server,do -ot exps=CPU and -ngl 100, or learn how to use the MoE cpu layers thing, you should put gpu layers at 10000 and then increase moe cpu layers until u stop ooming

might be because you're on windows though, what speed are you getting?

i get like 6-8t/s depending on context with a 3060 12gb and ddr4 3200mhz 64gb ram and i5 12400f with Q3_K_M and q3_K_xl, i think i used to get 11t/s with Q2_K

Anonymous

8/6/2025, 8:30:41 PM

No.106165425

>>106165331

Congrats on being a hyper responder idk

Anonymous

8/6/2025, 8:30:57 PM

No.106165428

>>106165477

>>106165378

wow anon are you me? doc also put me on the 'tide once i hit 310 but its only been two months for me so far. down to 279 already. should ask one of these models how to workout maxxx

>>106165378

oh i understand then, have you considered cutting your calorie intake? thats way healthier than taking pills to lose weight, those must be putting a strain on your cells (speeding up your metabolism) which is literally speeding up aging, or theyre making you take less nutrients from the food and making you shit more (which means you wont be getting enough nutrients)

Anonymous

8/6/2025, 8:31:43 PM

No.106165434

>>106165369

I am unironically trans Chinese and hate being yt now

Anonymous

8/6/2025, 8:32:27 PM

No.106165443

>>106165477

rn have cuda 12.8 should i move to 12.9-13 on my 3090?

Anonymous

8/6/2025, 8:32:34 PM

No.106165445

>>106163539

They are screening for high functioning psychopaths.

Anonymous

8/6/2025, 8:32:39 PM

No.106165447

>>106165581

>>106164249

How can I tell it to use more VRAM and more RAM? I have ~12 GB VRAM and ~125 GB RAM left unused. If it's running directly from SSD, then how can I tel it to put most of the weights in RAM to speed things up?

>>106165431

You don’t know a thing about how it works, so just shut the fuck up. Preachy hag.

Anonymous

8/6/2025, 8:33:06 PM

No.106165454

>>106165468

koboldcpp will not run gpt-oss-20b

how do I run this pls no bully I am retarded

Anonymous

8/6/2025, 8:34:20 PM

No.106165468

Anonymous

8/6/2025, 8:34:21 PM

No.106165469

>>106165525

>>106165457

>No stack trace

>Begging for help

Anonymous

8/6/2025, 8:34:22 PM

No.106165470

>>106165305

>How do you think married fat guys exist?

I don't know but I only have two fat friends who fuck. They are both wealthy and go around dominating other people obnoxiously.

>>106165428

>>106165378

haha fatties, im 108 :3

>>106165443

you can always go back to 12.8, on linux old cuda versions dont automatically get uninstalled and you can link /usr/local/cuda to /usr/local/cuda-12.8 instead of 12.9/13

13 is probably not worth it for LLMs according to some anons a few threads back

>>106165449

ok tell me how it works then, doctor annon

>>106165477

>haha fatties, im 108

not after i stuff ten pounds of cock into ya, bitchboi

Anonymous

8/6/2025, 8:36:21 PM

No.106165493

>>106165487

that's unsafe

Anonymous

8/6/2025, 8:36:34 PM

No.106165496

>>106165570

>>106165370

48. I'm the anon from earlier who needs to upgrade to ddr5 too

Anonymous

8/6/2025, 8:37:15 PM

No.106165505

>>106165570

>>106165477

Are you talking kilograms or some obscure freedom unit?

Anonymous

8/6/2025, 8:37:30 PM

No.106165510

>>106165457

It's doing you a favor

Anonymous

8/6/2025, 8:38:21 PM

No.106165517

>>106165570

>>106165431

food addiction is like drug addiction cept the meth is legal, everywhere and cheap. I am naturally GLP-1 deficient which tripeptide fixes. Also peoples metabolism is different

Anonymous

8/6/2025, 8:38:21 PM

No.106165518

>>106165577

>>106165449

hahah look who is extremely butthurt

>>106165469

thats what I get

also glp-1 drugs also are being shown to have tons of other benefits like a better heart and brain health unrelated to weight due to it being anti-inflammatory, it even helps with depression

Anonymous

8/6/2025, 8:40:57 PM

No.106165552

>>106165582

The toss is willing to help me design a urine marking game for ages 8+

The filter is slipping.

Anonymous

8/6/2025, 8:41:16 PM

No.106165556

>>106165487

>The user wants to stuff pounds of cock. Assistant is a 108 pound preacher. 108 may be the weight of a non-adult. User is requesting sexual content. The policy allows sexual content of consenting adults. One of these parties may not be an adult. We must refuse.

I’m sorry, I can’t assist with that.

Anonymous

8/6/2025, 8:42:21 PM

No.106165568

>>106165545

>also glp-1 drugs also are being shown to have tons of other benefits like a better heart and brain health unrelated to weight due to it being anti-inflammatory, it even helps with depression

yesterday i figured that all the fatties on glp-1 gonna end up the healthiest human beings on the planet in the end. they got it all - gluttony for decades and win in the end. what a life.

>>106165505

im 49 kilograms

>>106165496

use --no-mmap, offload more to the gpu

on a quite lightweight linux install with a vm and mullvad-browser running i have 8.4gb ram free and 4.8gb vram free

(12/64 total)

you only have 4gb total memory less than me, you should be able to run Q3_K_XL or Q3_K_M no problem, check your ram usage

>>106165517

>>106165545

interesting, you learn a new thing every day

thanks for the explanation, but i still stand that if you dont need something in your body you shouldnt put it there, once you're at a healthy weight and can work out you should probably stop taking it.. there is no miracle drug with no side effects

Anonymous

8/6/2025, 8:42:34 PM

No.106165571

>>106165579

>>106165545

basically stopped my gambling habit which was starting to spiral. don't waste your time tho anon 4chud generally can't break out of the "lazy fatty shortcut cheater" mentality.

>>106165423

Do any finetuners do anything useful nowadays on the newest models that aren't relatively small dense models? I really don't see any noteworthy tunes nowadays that isn't Mistral Small or Mistral Nemo based. Last time we had MOE finetuning with Mixtra, barely any finetuners could do much, the best we got was Undi slop with Noromaid. What happened to the Llama 3 finetuners who did 70B? Is Mistral Large that bad as an alternative?

>>106165518

GLP-1 seethers are the same mentality as the anti-ai people, but 1,000x worse because it’s the biggest breakthrough in medicine since penicillin. Anyone bitching about it should be shot.

Anonymous

8/6/2025, 8:43:19 PM

No.106165579

>>106165571

that too, they are looking into making it a medication for addiction, not just food addiction

Anonymous

8/6/2025, 8:43:34 PM

No.106165581

>>106165447

It should increase as you use it and the weights are activated I think.

Try stuffing it with an ungodly amount of text and see what happens.

Also, if you have that much free vram, you might as well increase the prompt processing batch size or the context.

Anonymous

8/6/2025, 8:43:36 PM

No.106165582

>>106166108

>>106165552

Boys have an unfair advantage in this game

Anonymous

8/6/2025, 8:43:55 PM

No.106165584

>>106165477

victim weight

Anonymous

8/6/2025, 8:44:36 PM

No.106165594

>>106165423

>11t/s with Q2_K

I get that speed at empty context and then it gets worse and worse. At 16k it's like 3 t/s and awful prompt processing.

I'll have a look at experimenting with the other settings later. Might just keep using GLM4 until I've figured that out.

Thanks!

Anonymous

8/6/2025, 8:44:57 PM

No.106165600

>>106165610

>>106165525

Mike guesses that kbolcpp doesn't recognize the architecture. Look up what inference engines currently support it and just use that for now until they decide to support it. If it already has support then just update your instance

Anonymous

8/6/2025, 8:45:30 PM

No.106165606

>>106165742

https://www.youtube.com/watch?v=xm0zm9VPZtY

the studies are new but another possible use is to fight alzheimers

Anonymous

8/6/2025, 8:45:48 PM

No.106165610

>>106165600

Do you post using speech to text?

lel

Anonymous

8/6/2025, 8:45:51 PM

No.106165612

>>106165668

>>106164886

>They have their own HF domain?

yeah they do

could you please answer my question?

>>106165577

yes daddy jab me up like the vaxx

Anonymous

8/6/2025, 8:48:18 PM

No.106165637

>>106165570

>im 49 kilograms

jesus...

Anonymous

8/6/2025, 8:49:07 PM

No.106165648

>>106165653

Not sure if this is the right thread, I got a 5500XT (ayymd) laying around. Can I finetune anything on it like maybe around 4B or so?

Anonymous

8/6/2025, 8:49:27 PM

No.106165651

gpt-oss 20b seems to sometimes outperform the 120b in weird ways. this has been my experience, too.

an example with the "toy os" test:

https://www.youtube.com/watch?v=evAP-ibAqN0

>>106165525

outdated version or kobold doesnt support gpt oss yet

>>106165572

models that are too prefiltered or positivity biased are not worth finetuning, but what models in your opinion havent been finetuned? im pretty sure mistral large had a few finetunes

i wonder if anyone ITT still uses a mistral large based model

>>106165648

QLora

Anonymous

8/6/2025, 8:49:42 PM

No.106165654

Oh yeah I was using an old koboldcpp version

Shamefur dispray

Anonymous

8/6/2025, 8:50:32 PM

No.106165663

>>106165753

>>106165653

>QLora

I know about unsloth and shit, is that it? I'm more wondering about the linux driver side, is the card even supported for that sort of thing? ZLUDA or something similar to it?

>>106165631

tons of bodybuilders use Retatrutide, the third gen glp1, its amazing for getting over that genetic hurtle transforming fat into muscle

>>106165631

peptides are naturally forming glp1s, the liver already naturally breaks it down, this is better for you than processed foods are and is far better than something like tylenol is for your liver

Anonymous

8/6/2025, 8:50:40 PM

No.106165667

>>106165570

>you only have 4gb total memory less than me, you should be able to run Q3_K_XL or Q3_K_M no problem, check your ram usage

Thanks anon. I used to run a dedicated AI linux on this machine, but it was a bother and I didn't use it so much so I ended up going to windows full time. I might have to reconsider.

Anonymous

8/6/2025, 8:50:50 PM

No.106165668

>>106165885

>>106164508

>>106165612

Your best bet is to just export your window via print a PDF or an HTML file for easy readability if it doesn't have a dedicated shear button

Anonymous

8/6/2025, 8:52:25 PM

No.106165684

>>106165664

I should say biochemically perfectly match the natural ones. Your body breaks them down just the same, its better than 99.9% of medications out there

Anonymous

8/6/2025, 8:52:40 PM

No.106165688

>>106165664

>peptides

Does your radar jam when people see you?

>>106165545

Your body is like a large language model that has been training for millions of years. If something is throwing it out of balance, the solution is not to add more factors to the problem in an attempt to fix it. The solution is ALWAYS to find the cause of the imbalance and REMOVE it.

This applies to so many modern human problems it's unreal. Although most of the issues are so entrenched in our society that we would not be able to remove them without a good chunk of mankind going extinct in the process.

>>106165577

The fact that you are this emotionally invested in it should tell you that something is wrong. But you do you.

>>106165653

All the recent models even at the smaller sizes that isn't Mistral. Less and less people were finetuning and we did get some model tunes of even QwQ but with the release of Qwen 3, I don't recall seeing any recent models after that from the Chinese that has gotten tuned, small or even MOE. What changed?

Anonymous

8/6/2025, 8:54:08 PM

No.106165709

>>106165784

>>106165689

my problem is I don't normally produce enough glp1 and so I always feel starving, these fix that by increasing that amount

Anonymous

8/6/2025, 8:54:42 PM

No.106165714

>>106165753

BAKE

bros i'm testing 12.8 now and i got 1t/s more on cuda 12.6

Anonymous

8/6/2025, 8:55:09 PM

No.106165720

>>106165753

>>106165689

>>106165704

the studies disprove that. Even every single case of side effects were all due to over dosage or not eating enough and starving themselves

Anonymous

8/6/2025, 8:55:14 PM

No.106165721

>>106165715

Many such cases.

Anonymous

8/6/2025, 8:56:34 PM

No.106165742

>>106165764

>>106165606

>look it's all benefits!!

>BUY PRODUCT NOW

Totally not going to be banned 20 or 50 years from now when actual science catches up to the love of money.

Anonymous

8/6/2025, 8:57:18 PM

No.106165748

>>106165821

>>106165572

Finetuning requires a LOT more VRAM than than inference and you actually need VRAM, you can't copemaxx with RAM. On top of that, MoEs are more unstable to train. I don't think you'll ever get good finetunes for all these big MoEs.

I guess the bright side is that there are so many of these bloated things constantly releasing, that you can enjoy the new model hype continuously without having to train anything. Densecels need to put in work because there's only a few recent models worth using.

Anonymous

8/6/2025, 8:57:34 PM

No.106165753

>>106165781

>>106165663

might have some unofficial rocm support on some github repo, if no linux support then rip

>>106165714

4th page

>>106165715

yeah i also got a slight speedup with wan on 3060, there used to be a regression but they fixed it

ebin :DDD

>>106165720

anon, a normal healthy human body shouldnt need any drugs to function

at most some vitamin supplements.. (not drugs)

Anonymous

8/6/2025, 8:58:27 PM

No.106165764

>>106165742

she is not related to it or paid in anyway, been watching her from way before these were ever a thing, she breaks down medical papers / studies

Anonymous

8/6/2025, 8:58:45 PM

No.106165771

>>106165191

>>106165341

I'm using ooba v3.9 which has a recent llama.cpp version, with no-mmap and flash-attn

Anonymous

8/6/2025, 8:59:13 PM

No.106165778

just rent a gpu and fine tune shit for a few dollars

Anonymous

8/6/2025, 8:59:28 PM

No.106165781

>>106165793

>>106165753

>normal healthy human body

you do know not everyone has that right? tons of people have deficiencies somewhere or another due to genetics

Anonymous

8/6/2025, 8:59:51 PM

No.106165784

>>106165847

>>106165709

Yeah. My psychiatrist put me on fluoxetine because "my problem is that my brain does not produce enough serotonin to keep a good base line".

But I fixed it by getting a degree, exercising, stopping smoking, and building a life for myself instead of wallowing at home surrounded by piss bottles. Suddenly the "chemical imbalance" was not a problem anymore and I was able to function as a normal person.

Funny how that works.

Anonymous

8/6/2025, 9:00:31 PM

No.106165793

>>106165955

>>106165781

yes and thats fine, but when you can stop you should

Anonymous

8/6/2025, 9:02:37 PM

No.106165818

>>106165704

>it’s wrong to care about things

Nah

Anonymous

8/6/2025, 9:02:57 PM

No.106165821

>>106165707

Yeah, that is odd. Qwen 3 was in April so you would expect something noteworthy to come out by now but looking at the HuggingFace finetunes page for the 8B, it's devoid of anything noteworthy.

>>106165748

Right, it's a bunch of money without much payoff and a lot of people like merging models too and usually get something people like so the payoff is getting less. But it seems like from what you are saying community finetuning seems like it is nearing if not ending pretty soon if hardware for these things doesn't get cheaper to do said finetunes.

>>106165784

>some idiot put me on what is famously the least effective drug class in history, therefore all of the field of medicine is a hoax

Anonymous

8/6/2025, 9:05:36 PM

No.106165851

>>106165956

>>106165847

He also seemed to imply it gave them the ability to turn his life around.

sooo, good I guess?

Anonymous

8/6/2025, 9:06:41 PM

No.106165868

This level of shilling is ridiculous:

https://www.reddit.com/user/entsnack/

Anonymous

8/6/2025, 9:07:54 PM

No.106165885

>>106165668

I don't want to export anything, I want to share the chats with other people. judging from your response, I guess they can't, so thanks for that.

Anonymous

8/6/2025, 9:08:18 PM

No.106165890

>>106165707

Nvidia just tuned a bunch of shit on old Qwen3

Anonymous

8/6/2025, 9:09:00 PM

No.106165898

>>106165707

https://huggingface.co/models?other=base_model:finetune:Qwen%2FQwen3-30B-A3B&sort=downloads

https://github.com/shawntan/scattermoe

https://huggingface.co/models?other=base_model:finetune:Qwen/Qwen3-30B-A3B-Instruct-2507

https://huggingface.co/models?other=base_model:finetune:Qwen/Qwen3-30B-A3B-Thinking-2507

https://huggingface.co/models?other=base_model:finetune:Qwen%2FQwen3-30B-A3B-Base&sort=downloads

this is a notable finetune made by the mythomax creator

https://huggingface.co/Gryphe/Pantheon-Proto-RP-1.8-30B-A3B

you know the names of the finetuners whom you used to consume models from, check their huggingface pages and youll probably see they just arent posting anymore

they either: got bored of the hobby, got hired by ai company, made enough money to run deepseek (literally g0d), dont have enough money to finetune anymore etc ETC..

its not profitable to finetune and just release it