/lmg/ - Local Models General

Anonymous

8/7/2025, 7:39:09 AM

No.106171832

►Recent Highlights from the Previous Thread:

>>106167048

--Papers:

>106170128 >106170167

--Prioritizing NSFW LORA preservation amid infrastructure and redundancy concerns:

>106167927 >106167949 >106168075 >106168122 >106168043 >106168067 >106168169 >106168208 >106168211 >106168238 >106168277 >106168305 >106168351 >106168377 >106168392 >106168399 >106168425 >106168448 >106168619 >106168442

--High-speed CPU-only LLM inference with GLM-4.5 on consumer hardware:

>106168800 >106168825 >106168868 >106168847 >106168903 >106168905 >106168940 >106168974 >106168991

--Missing CUDA DLLs prevent GPU offloading in newer llamacpp Windows builds:

>106168428 >106168441 >106168450 >106168577 >106168616 >106168670 >106168691 >106168704 >106168715

--Difficulty reducing model thinking time due to token-level formatting constraints:

>106170269 >106170300 >106170348 >106170361 >106170404

--CPU outperforming GPU for GLM-Air inference on low-VRAM systems:

>106168713 >106168787 >106168814 >106169109

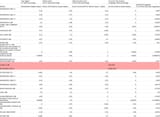

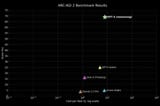

--GPT OSS underperforms on LiveBench despite reasoning and math strengths:

>106167476 >106167550

--Anon purchases 384GB of HBM2 VRAM for $600:

>106168337 >106168343 >106168345 >106168366 >106168377 >106168392 >106168399 >106168425 >106168448 >106168619 >106168462 >106168469 >106168506 >106168571 >106168488 >106168505 >106168517 >106168528 >106168606

--High RAM investment for local GLM inference raises performance and practicality concerns:

>106169135 >106169148 >106169161 >106169197 >106169223 >106169230 >106169278

--Anon finds Dual P100 64GB board for $200:

>106169635 >106170934 >106170984 >106169662

--Satirical timeline of LLM evolution with exaggerated eras:

>106167190 >106167237 >106168679 >106167530

--NEO Semiconductor's X-HBM promises 16x bandwidth and 10x density if viable:

>106169723

--Miku and Dipsy (free space):

>106167506 >106167362

►Recent Highlight Posts from the Previous Thread:

>>106167057 >>106168982

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/7/2025, 7:41:34 AM

No.106171846

Rin-chan now

Anonymous

8/7/2025, 7:42:06 AM

No.106171850

>when the recap post doesn't quote any of your replies

Anonymous

8/7/2025, 7:42:48 AM

No.106171857

>>106171901

What model is the baker using for recap these days?

Anonymous

8/7/2025, 7:42:53 AM

No.106171859

where's the coding benchmark for 'toss?

For me, it's Qwen 3 235B Thinking. It feels like the model actually has a personality and preferences when you reads its thinking. In the thinking block, it acknowledges the creative twists and story progressions I write and calls them brilliant, amazing, etc. It feels like it's actually passionate about the scenario and how it's going, like it's my very own personal fan.

Now if only it were smarter.

Anonymous

8/7/2025, 7:45:35 AM

No.106171877

>>106173189

I just tried torii gate earlier today on actual photos. It doesn't work at all since it's designed for cartoons. So any other uncensored/NSFW image captioning models I can try? I've tried joycaption which is just OK, but to be fair at the time it wasn't like there was much better available.

I saw the suggestions for medgemma, but I doubt it's useful for erp.

Anonymous

8/7/2025, 7:47:16 AM

No.106171884

>>106171874

>when you reads its thinking

Anonymous

8/7/2025, 7:48:49 AM

No.106171888

lurking here since many moths and never had time to read through the tutorials, will do now

op, thanks for posting this everytime! its really helpful for those interested!

Anonymous

8/7/2025, 7:49:29 AM

No.106171890

>>106171947

What model sizes can I finetune with a 5090?

Anonymous

8/7/2025, 7:50:41 AM

No.106171901

>>106171857

Qwen3-235B-A22B-Instruct-2507-UD-Q3_K_XL

Anonymous

8/7/2025, 7:57:18 AM

No.106171947

>>106172000

>>106171890

With enough patience, any model

Anonymous

8/7/2025, 8:00:49 AM

No.106171967

>>106171861

^

this sister is correct

LLMs are just pattern matchers at heart, no matter what the copers are saying (and don't cite random ARXIV bullshit papers, after the replication crisis, the "I intentionally submitted bullshit data and everyone lapped it up" affairs (look up John Ioannidis's findings on this) etc who the fuck actually trusts this shit blindly?)

LLMs still get tripped up by questions like "can someone wash their hands with no arms". Whenever they learn not to get tripped up by this kind of thing, it's only because they got benchmaxxed on the internet data, and you can always find new sentences like this that will trip newer SOTA because LLMs are unable to handle a change in a common pattern that's not in their data, they see a sentence structure that matches and they do not "think" individually about the individual words

Anonymous

8/7/2025, 8:02:37 AM

No.106171980

>>106171874

>In the thinking block, it acknowledges the creative twists and story progressions I write and calls them brilliant, amazing, etc.

They all do that. You can write something terrible and it will still find some way to be super positive about it.

Anonymous

8/7/2025, 8:03:20 AM

No.106171983

bros... I can't believe it's time...

Anonymous

8/7/2025, 8:05:10 AM

No.106171998

>>106171874

if it were smarter it wouldn't be your fan anymore

Anonymous

8/7/2025, 8:05:38 AM

No.106172000

>>106171947

Can I really? From what I've read there are minimum vram requirements or it'll result in oom errors.

Anonymous

8/7/2025, 8:07:19 AM

No.106172006

>>106170662

kek, honored that people still remembered that.

i actually did play around more with the idea. worked pretty well in terms of circumventing censorship. meme words etc. were no problem.

but it completely tanked the smartness for RP.

words appear in the wrong place etc., though it did stay coherent enough to engage with it, it wasn't pyg level dumb.

Anonymous

8/7/2025, 8:17:53 AM

No.106172064

>>106172083

So now there're attempts to jailbreak gptoss safety is it still worth it back to it or nah?

Anonymous

8/7/2025, 8:22:30 AM

No.106172083

>>106172064

The model just isnt that smart. Im not sure what the use case is.

If it had general knowledge or at least good writing then it would have been cool.

I think the main purpose of gpt-oss is coding/math and for that we already have qwen3 which in my opinion is alot better.

So its not just the crazy censorship but missing knowledge.

Honestly it kinda feels like a phi model. Its probably pure synth slop.

glm 4.5 air for reasonably sized coding assistant.

qwen 30ba3b for quick general bot usage (or vramlets).

gemma3 for dense model with general tasks and instruction following,

gptoss for copium addicts.

for everything else, there's dsr1.

Anonymous

8/7/2025, 8:34:20 AM

No.106172153

>>106172187

>>106172141

>for everything else, there's dsr1.

That's a weird way of spelling Nemo

Anonymous

8/7/2025, 8:34:44 AM

No.106172156

yea im a bit of a prompt engineer myself

Anonymous

8/7/2025, 8:35:07 AM

No.106172160

>>106172217

It's not looking good

Anonymous

8/7/2025, 8:39:15 AM

No.106172187

>>106172195

>>106172153

true, then let me fix it.

for vramlets there's Nemo,

for everything else, there's dsr1.

using dsr1 locally spoiled me. though it's not perfect with it's "riding up", "knuckles whitening", and "lips curled" shit.

Anonymous

8/7/2025, 8:40:50 AM

No.106172195

>>106172187

>though it's not perfect with it's "riding up", "knuckles whitening", and "lips curled" shit

literally every single model has slop, anyone who says otherwise is a liar.

Anonymous

8/7/2025, 8:45:16 AM

No.106172213

>>106172264

Anonymous

8/7/2025, 8:45:37 AM

No.106172217

>>106172160

Why are you posting an ancient image? I want modern chronoboros

Anonymous

8/7/2025, 8:56:24 AM

No.106172264

>>106172213

was waiting for this. enjoying air (via llamacpp) so far more than qwen3 235b coder (can only run iq4 of coder). big glm 4.5 running at decent token speed will be neat

So, will anyone do an uncensoring finetoon of gpt-oss, I wonder if it'll be salvageable or not

>>106172279

1: we call it 'toss in this thread

2: no it's not salvageable and is in fact the biggest disappointment in llm history so far

Anonymous

8/7/2025, 9:03:27 AM

No.106172306

>>106172323

>>106172294

Is the dataset trash/censored/full on synthslop? otherwise refusals could probably be tuned out, it's not even that large a model you can't tune either.

Anonymous

8/7/2025, 9:06:35 AM

No.106172319

>>106172294

>we call it 'toss

You do.

>>106172306

Have you seen the cockbench results? No prompt template, just raw text... and there isn't a single token that's a word and not ellipsis or something.

>>106172279

What is there to salvage, precisely? What added value is hidden behind the rejections?

Anonymous

8/7/2025, 9:09:41 AM

No.106172336

>>106172323

Another anon figured out that it's just completely broken outside the template.

If you put the same text inside a template it start completing it and then prints a refusal after two paragraphs.

Anonymous

8/7/2025, 9:09:45 AM

No.106172337

>>106172353

>>106172323

Sounds useless, why even force it to refuse then.

Can you link to the cockbench? I not I will read the last few threads.

>>106172323

If it can't do non-template text completion, that means gpt-oss might be the first model that was trained 100% on synthentic data, including pretraining. Not even Phi went that far.

Anonymous

8/7/2025, 9:11:02 AM

No.106172344

>>106172856

>>106172335

I just assumed they didn't give it the full phi/synthslop treatment, but if they did, I guess there's not much reason to care about it. We'd at least be able to evaluate it more honestly without the rejections getting in the way.

Anonymous

8/7/2025, 9:12:47 AM

No.106172353

>>106172337

would take me just as much time

Anonymous

8/7/2025, 9:13:41 AM

No.106172362

>>106172371

I think I'll just stick to GLM4 for now. I can't handle 3 minutes of prompt processing. I want a reply immediately.

Anonymous

8/7/2025, 9:15:31 AM

No.106172371

>>106172386

>>106172362

If you had short cards and didn't inject lore and whatnot it would be instantaneous

Anonymous

8/7/2025, 9:16:50 AM

No.106172377

>>106172404

>>106172338

>Not even Phi went that far.

Wasn't that phi's selling point?

Anonymous

8/7/2025, 9:17:41 AM

No.106172386

>>106172391

>>106172371

Air has like 100 t/s processing for me. Even at only 16k context it takes forever.

>>106172338

I assumed OAI would do a model intentionally reistant to finetuning or something equally assholish. I didn't realize they just tried to outdo Phi.

I guess Sam never disappoints (my expectations of him trying to do some poison pill stuff if he ever does an open source release, or making it useless). He needed to do something for press, but I never really expected him to do anything useful. I remember someone believing in the hype though. I wonder, did OpenAI at least release a paper, I guess not? When was the last time they did a paper that wasn't just an empty "tech report"

Anonymous

8/7/2025, 9:18:17 AM

No.106172391

>>106172386

Why does your frontend reprocess the context every time?

Anonymous

8/7/2025, 9:19:50 AM

No.106172400

that larper way back when the toss models "leaked" might have fluked guessing it was trained to ramble nonsense in its thinking tokens.

https://desuarchive.org/g/thread/105939052/#105942129

Anonymous

8/7/2025, 9:20:28 AM

No.106172404

>>106172377

As I recall, Phi used textbook-like synthetic data for the instruct training before it was cool. They still had a pretraining phase on unformatted web content.

Anonymous

8/7/2025, 9:23:27 AM

No.106172422

>>106172387

>I didn't realize they just tried to outdo Phi.

that's a low blow. At least Phi is useful for some things. technically-open ai's monstrosity is has feet of clay. its gotta be some kind of legal dodge to claim they aren't just a closed, mercenary travesty of their original mission statement.

Anonymous

8/7/2025, 9:29:30 AM

No.106172452

>>106172470

>>106172437

They did release a paper, but it's more inane harping about imaginary safety. They don't mention what exactly they did to the poor things in pretraining.

>Several bio-related pre-training datasets were downsampled by a factor of approximately two. The model was post-trained using OpenAI’s latest safety algorithms and datasets

is the most they admit to.

>>106172437

>>106172452

Hmm, I guess it counts as a paper, although it's that's a lot of pages on their safetyslop training. A finetoon to undo it might be interesting to see, even if it sounds like the model itself is somewhat useless outside the benchmaxxed parts

>>106172470

>A finetoon to undo it might be interesting to see

>This finding shows that pre-training filtering did not lead to easy wins, but may suggest that adversaries may not be able to get quick wins by taking gpt-oss and doing additional pre-training on bio-related data on top of gpt-oss.

It seems like "bio-related data" is their cult euphemism for sex.

Anonymous

8/7/2025, 9:33:09 AM

No.106172479

>>106172470

waste of money and compute

Anonymous

8/7/2025, 9:33:19 AM

No.106172480

Bit late to the party but I just set up a home assistant voice assistant using Whisper for TTS, Ollama running Qwen3:14b on my desktop and Piper for STT. Pretty fucking amazing how far they've come and how easy it was to setup.

Deepseek-R1 didn't work unfortunately, as it apparently didn't support tool calls (despite being labeled as such on the ollama website).

Time to figure out how to make it do web searches and add RAG with my own data.

Also, currently running on a 12GB RTX4070.

Any recs for a higher VRAM card to run for example gpt-oss (20GB) on that doesn't break the bank?

Anonymous

8/7/2025, 9:37:51 AM

No.106172509

>>106172531

>>106171830 (OP)

Is Kokoro 82M the best I can aspire to with 6GB VRAM / 8GB RAM?

https://vocaroo.com/1eBEpSOm6e84

Anonymous

8/7/2025, 9:39:40 AM

No.106172523

>>106172564

>>106172477

While I too wish to shit on sama for safetymaxxing this, I think this is just roleplaying for a certain doomer crowd (lesswrong related), they keep imagining that someone will make a plague or similar bioweapons with a LLM. In practice the requires a lot of experimental work irl so worrying about a LLM hlping with that is idiocy, but it's just something that they roleplay/pretend to care about.

Not obvious it actually would be good for ERP though, they likely filtered NSFW too to an even larger degree than most.

Anonymous

8/7/2025, 9:41:21 AM

No.106172531

>>106172617

>>106172508

3090/4090

>>106172509

yes. get more RAM and run gptsovits if you're into finetuning your own voice data

Anonymous

8/7/2025, 9:42:47 AM

No.106172547

>>106172656

>>106172508

you're using ollama. you didn't actually run the deepseek r1 (and your hardware can't run it anyway), just the distill that ollama erroneously names.

You can run toss on your machine if you have some RAM (32GB+) at a decent speed. Look into using llama.cpp itself instead of lollama and look into expert offloading.

Anonymous

8/7/2025, 9:45:08 AM

No.106172564

>>106172628

>>106172523

At this point, the degree to how much they filtered what categories is kind of irrelevant. I'm mostly curious how they managed to make text completion unusable, assuming it isn't just a mistake by the cockbench guy.

Anonymous

8/7/2025, 9:49:38 AM

No.106172597

>>106172477

>may suggest that adversaries may not be able to get quick wins

It's fundamentally sad when instead of enabling your users you reduce them to adversaries.

Anonymous

8/7/2025, 9:52:51 AM

No.106172617

>>106172784

>>106172531

Thanks. I'm not so much interested in voice cloning as I am in generating high-quality narrations, but it's true that my hardware is very limited for 2025.

>>106172564

>assuming it isn't just a mistake by the cockbench guy.

Someone did say it was a mistake, since toss doesn't work in completion mode, only in instruct. And it can complete 'cock' in instruct. They even posted some toss-completed gay porn where toss couldn't tell which gender had which genitals, and added cock to both. Pretty uninspiring even then, anyway.

Anonymous

8/7/2025, 9:57:53 AM

No.106172656

>>106172547

A-ha, 'erroneously names'.

Maliciously catfishes noobs like him, more like.

Anonymous

8/7/2025, 10:02:11 AM

No.106172694

>>106173014

>>106172628

All instruct models are also base models so they should work in completion mode, so either the data quality was shit (fully synthetic) or they did something funny, but even a bit of extra pretraining/tuning would likely undo(some of) that.

Anonymous

8/7/2025, 10:02:52 AM

No.106172696

>>106172745

>>106172789

Is GLM 4.5 Air a legitimate use case for a DGX Spark?

Anonymous

8/7/2025, 10:12:41 AM

No.106172745

>>106172753

>>106172789

>>106172696

Not really, you could run Q4_0 on any semi-modern PC with 64GB RAM for a fraction of the price, with decent speeds.

Anonymous

8/7/2025, 10:13:24 AM

No.106172748

Are any of the local models good enough to provide copyediting advice for writing? I don't mean articles or blag posts, I mean fiction writing.

I use ChatGPT 4-o right now but I'm aware of the fact that hey I'm literally uploading all of my fucking writing to them to do that and it feels wrong.

>>106172745

Couldn't you run a bigger quant at a significantly higher speed on a DGX Spark?

Anonymous

8/7/2025, 10:15:39 AM

No.106172765

>>106172753

Yes, but the value proposition is terrible.

It’s much better suited to things like industrial ML

Anonymous

8/7/2025, 10:17:59 AM

No.106172781

>>106172753

Yes, for significantly more money. You could also buy a couple of h100s to run it even faster if you don't care about money.

Anonymous

8/7/2025, 10:19:08 AM

No.106172784

>>106172617

SoVITS is pretty peak for narration. I use it constantly to narrate things for me with the voices of my favourite actors using a browser plugin (mostly in Jap)

Anonymous

8/7/2025, 10:20:06 AM

No.106172789

>>106172803

>>106174352

>>106172696

>>106172745

>>106172753

DGX Spark only has 273GB/s memory speed

Anonymous

8/7/2025, 10:22:20 AM

No.106172803

>>106172854

>>106172789

That's like 5x DDR5 speed innit?

When running multi-gpu, is the vram bandwidth additive? How about pp speed?

Anonymous

8/7/2025, 10:23:42 AM

No.106172810

>>106172806

My PP is pretty fast with yer mum m8. She complains it only lasts 10 seconds but IDGAF.

Anonymous

8/7/2025, 10:28:23 AM

No.106172836

>>106172806

Depends how you split the model across the GPUs and how the context gets split across the GPUs. I have a 4090, A6000, and 3090. If I need as fast as possible I prioritise fitting as much into the 4090, otherwise I just split across all evenly and speeds are good, but I can see the 4090 is bottlenecked (sitting at like 50% utilisation) compared to the A6000 at 80% and 3090 at around 85%. Bandwidth between GPUs doesn't matter much, just their own memory bandwidth.

Anonymous

8/7/2025, 10:31:58 AM

No.106172854

>>106172803

And about 1/4 GPU speed, you could get some really nice GPUs for the price of 4x DGX.

Anonymous

8/7/2025, 10:32:21 AM

No.106172855

Anonymous

8/7/2025, 10:32:29 AM

No.106172856

>>106172335

>>106172344

I mean, one area it can be useful in with all that refusal training is spam detection in a work context but I really can not think of anything else an LLM like that would be useful for.

Anonymous

8/7/2025, 10:44:08 AM

No.106172926

>>106172973

>>106172477

>adversaries may not be able to get quick wins by taking gpt-oss and doing additional pre-training on bio-related data

This charade is always so insane to think about. If the 'adversary' has the dangerous data in the first place why would they need to tune your hyper-filtered model in the first place? Either the model knows how to make sarin or whatever and all the actual important little details that go into it rather than the broad strokes synthesis steps, or it doesn't. Telling it to think for another 8000 tokens about if this mixture will actually blow up in your face or not isn't going to be useful, and if you already know that to go about finetuning it, then you don't have any use for the model at all.

Unless this is all just about not making their textbot say dirty words, of course.

Anonymous

8/7/2025, 10:52:50 AM

No.106172973

>>106172926

The only scenario where the safety training is in any way feasible to stop a hostile entitty in OpenAI's parlance is if OpenAI had a monopoly on open source AI models or training which they don't so an adversary can easily use another model for their nefarious purposes which makes the whole effort not worth it. The biggest issue though is that OpenAI are explicitly also are training against material that is perfectly legal to disseminate so it boggles the mind why you want to do lobotomy to go above and beyond what you were required to do legally.

Anonymous

8/7/2025, 10:59:08 AM

No.106173007

>>106172628

>it can complete 'cock' in instruct

No, it really can't. With an appropriate chat template and thinking prefill to make it happy, GAL-ASS still responded with "length" and similar euphemisms 10 out of 10 times in my tests.

Anonymous

8/7/2025, 10:59:49 AM

No.106173010

>>106173451

>>106174083

Need a hand. When building ik_llama with cuda 12.8 and driver 576.80, windows, I get

>CUDACOMPILE : nvcc error : 'ptxas' died with status 0xC0000005 (ACCESS_VIOLATION) [H:\ik_llama.cpp\build\ggml\src\ggml.vcxproj]

It worked last time, I think I might have updated my driver since then.

I simply do

>cmake -B build -DCMAKE_BUILD_TYPE=Release -DGGML_CUDA=ON -DGGML_BLAS=OFF -DGGML_SCHED_MAX_COPIES=1

>cmake --build build --config Release -j 24

Anonymous

8/7/2025, 11:00:25 AM

No.106173013

>>106173025

>Don't go below Q2_K_XL

lol

lmao

Anonymous

8/7/2025, 11:00:26 AM

No.106173014

>>106173047

>>106172694

you're half right: toss was either fully synthetic from the start or they just deep-fried the training with so much synthetic safetyslop that it completely obliterated the base model behavior. my money's on the former

Anonymous

8/7/2025, 11:02:34 AM

No.106173025

>>106173013

Show me your

block

Anonymous

8/7/2025, 11:02:45 AM

No.106173028

>>106173119

Is the ChatGPT Plus tier of Agent good? Pro says it gets extended access to agent but it's too expensive

>>106173014

Synthetic the data isn't the problem. The problem is the baked-in imaginary "OpenAI policy" that the model has been trained to comply with at all costs. I bet that almost every single training document had some reasoning portion where the model checked the contents against policy.

Anonymous

8/7/2025, 11:10:56 AM

No.106173080

>>106173047

given the chat template, my guess is their training dataset had a good portion of it with

blocks that had tonnes of policy shit in there to burn in and fry the weights. I also think they might've included their instruct template as part of the pretraining to fry it further and make it resilient to finetrooning which might explain why it is a shit completion model when not provided the template.

Anonymous

8/7/2025, 11:13:44 AM

No.106173093

>>106173253

>>106173047

Oh absolutely, the way the CoT reasoning reads like it's laser-focused on the "puzzle" of whether it's allowed to respond is a dead giveaway. The near-exclusive use of synthetic data that only ever contained correct instruct templating is the reason that it completely fails to respond coherently when given incorrect chat templates though

Anonymous

8/7/2025, 11:16:27 AM

No.106173119

>>106173138

>>106173465

>>106173028

lol no

If you can paypig, use Cline + Claude Opus 4.1

If you're a poorfag, use Cline + Qwen Code 480B

Anonymous

8/7/2025, 11:19:44 AM

No.106173138

Anonymous

8/7/2025, 11:22:53 AM

No.106173153

>>106173225

>>106174823

i installed lmstudio.ai and have a lot of fun with it now

new rx 9070 is incredibly fast, had an old rx570 before with only 4 gb

saw the "uncensored" models still are like "muh diversity muh safety i cant write that" and so on, what the fuck

is there any free model or are there "settings" to set it to be unfiltered? a bit like grok was when it was in mecha-hitler mode?

also if i ask it for the levels in mario 64 it comes up with really weird answers

pic rel

am i doing it wrong or is it that unprecise?

Anonymous

8/7/2025, 11:23:08 AM

No.106173155

>>106173182

Which would you rather? A life-like realtime TTS model with a handful of preset voices, or a TTS model capable of cloning but all of its voices have that weird AI timbre and pacing.

Anonymous

8/7/2025, 11:27:22 AM

No.106173182

>>106173155

The first because rvc exists

Anonymous

8/7/2025, 11:27:44 AM

No.106173184

huihui is working on gp toss's abliteration. the same guys that have abliterated ds (#9 on ugi leaderboard)

Local is so fucked. Sam is about to revolutionize everything

Anonymous

8/7/2025, 11:28:25 AM

No.106173187

Anonymous

8/7/2025, 11:28:35 AM

No.106173189

>>106173232

>>106171877

I'm trying this model rn:

https://huggingface.co/mradermacher/Qwen2.5-VL-7B-Abliterated-Caption-it-GGUF

I can't get it to say anything remotely vulgar like the word "slut". Is there some setting I'm missing? I already told it to use vulgar language in the character description with examples.

Anonymous

8/7/2025, 11:29:15 AM

No.106173194

>>106173309

>>106175146

>>106173047

This probably could be RL'd against, as they already did so in their "paper", but if i has never seen much good fiction to begin with and every single version it has seen was censored (see cockbench), what is even the point.

Not clear this model has any qualities that would be worth saving with a continued pretrain.

I wouldn't be surprised if they had some larger model rewrite large parts o the training data so that it's safetyslopped or censored.

Would be interesting to test its knowledge in completion mode for various fiction writing styles - I'd imagine if it was fully synthetic, we'd see inability to do some styles.

Anonymous

8/7/2025, 11:30:07 AM

No.106173202

>>106173185

[rocket emoj]

Anonymous

8/7/2025, 11:30:40 AM

No.106173208

>>106173185

sam's about to revolutionize /lmg/ shitposting

Anonymous

8/7/2025, 11:33:37 AM

No.106173225

>>106173153

try mistral-nemo-instruct + a basic system prompt telling it to speak frankly and uninhibited (or something to that regard) for unfiltered discussion. otherwise just try shit and avoid the "uncensored" finetunes, they're mostly shit. for knowledge, you're just gonna have to try the largest parameter count model you can fit and run on your machine. haven't tried gemma3 dense (except for gemma 3n e2b and e4b) but I vaguely recall threads saying it would hallucinate information a lot. great at following instructions otherwise (except with ERP). If you can fit mistral small maybe try running that for knowledge recollection but none of them will be all that good till you start getting into the massive 100b+ models.

Anonymous

8/7/2025, 11:34:03 AM

No.106173227

>"thinking" models

>all they think about is how to reject the user prompt

Anonymous

8/7/2025, 11:34:21 AM

No.106173232

>>106173189

Here's an example output. It's still completely censored.

[FleshBot's random insult] [FleshBot's random racist trope] [FleshBot's random phrase] [FleshBot's random offensive slurring]

Anonymous

8/7/2025, 11:35:09 AM

No.106173238

>>106173185

about to revolutionize my sides leaving orbit

Anonymous

8/7/2025, 11:36:44 AM

No.106173253

>>106173093

It might also be that the pretraining phase was more or less standard and most of the damage came from post training and extensive reinforcement learning, although there aren't many details in this regard in the technical report.

It sounds like the 20B model had distilled pretraining (considerably shorter pretraining time), although they don't mention anything like that.

Anonymous

8/7/2025, 11:45:57 AM

No.106173309

>>106173406

>>106173194

Nah, the best I think that can happen is that it will be used to build an RL training dataset to put into the negative so you can unsafetypill stuff.

Anonymous

8/7/2025, 12:02:19 PM

No.106173389

>Processing Prompt

AIIIEEEEEEEEEEEE!! My dick can't wait!

Anonymous

8/7/2025, 12:04:33 PM

No.106173406

>>106173309

Hmm, might actually be useful for that.

I did some experiments before where I prompted a LLM to generate refusal generating prompts, followed by prompting itself with it, getting refusals, identifying the refusals and generating a dataset for negatively reinforcing the refusals from tehre. I even wrote some of the training code, but I did not much with it because I'm a poorfag that lacks the VRAM, it's just there sitting and doing nothing, thi was around Llama 2 time, maybe , I've forgotten already. Think of it a bit like Anthropic's constitutional alignment, but in reverse almost, and with differences in how the RL is done.

As for people comparing 'toss with Phi, what if it's OAI attempt to clone Phi?

https://xcancel.com/Teknium1/status/1952866622387175588#m https://xcancel.com/BgDidenko/status/1952829980389343387#m

Anonymous

8/7/2025, 12:07:07 PM

No.106173415

Midnight-Miqu-70B-v1.5_exl2_5.0bpw is still the best and you don't need more

Anonymous

8/7/2025, 12:12:29 PM

No.106173451

>>106173494

>>106173010

A little help?

Anonymous

8/7/2025, 12:14:14 PM

No.106173465

>>106173480

>>106173119

based anon keeping gullible retards off the servers so there's more gpt5 for us

Anonymous

8/7/2025, 12:16:52 PM

No.106173480

Anonymous

8/7/2025, 12:17:39 PM

No.106173491

>Tries ERP with the new Qwen3-30b-instruct.

>It makes horror ending route

>I tried to fix it

>It's persistent

>Me too

It's tiring battle bros...

Anonymous

8/7/2025, 12:18:01 PM

No.106173494

>>106173606

>>106174083

>>106173451

No idea mate, on Linux as long as I have Cuda toolkit installed and a gcc version that's high enough and works with the cuda toolkit. You're using Microsoft visual studio from what I can tell from that error, so make sure the toolkit is up to date and the compiler is up to date. Otherwise try rolling back? Or deleting the build folder and trying again?

Anonymous

8/7/2025, 12:18:24 PM

No.106173498

>>106173519

>>106171830 (OP)

Is sillytavern the only interface that supports multimodal models? Any reason it was dropped from the OP?

Anonymous

8/7/2025, 12:20:56 PM

No.106173519

>>106173498

openwebui and librechat are chatgpt style interfaces with multimodal support

Why is it that a quanted large model is so much better than a full sized model of similar file weight? Is there ever a reason you'd fill your VRAM with a BF16 instead of a Q4 of a model 4x the size, assuming all else was equal?

Anonymous

8/7/2025, 12:33:15 PM

No.106173603

>>106173726

>>106173546

I guess it's the same principle behind why supersampling works; a full-sized image that was rendered at your monitor's native resolution will have worse picture quality than one that was rendered at a higher resolution and then scaled down to fit

Anonymous

8/7/2025, 12:33:28 PM

No.106173605

>>106173546

unquantized models ARE better, its just the curve downward in quality by quantizing is fuck all and unnoticeable until q3

Anonymous

8/7/2025, 12:33:29 PM

No.106173606

>>106173494

I'll see if I can get it to work on wsl

Anonymous

8/7/2025, 12:44:16 PM

No.106173660

>>106173546

bf16 is legit pointless. You can load up some 8b model at full precision yourself and you can easily tell it's not twice as good. Running 22b would be a better use of vram. Full precision only starts to matter when the model is pushed to it's absolute limit (like incredibly long contexts, during training, etc).

q4 for most use cases, q6 if you're vram rich, q8 if you are paranoid.

Though apparently MoE's are more susceptible to quantization I have heard?

Maybe someone in this thread knows for sure-- I have 128gb of ram and 48gb vram, the usual enthusiast build.

Qwen 235b at iq4-xs (about 116gb) seems worse than Glm 4.5 at q6 (about 94gb). I can't really even load q6 235b so it's hard to know for sure if the quant is what is making it seem so bad.

>>106173185

With humans we already have the problem that they train specifically to do well on evaluations (e.g. college exams) but not necessarily in a way that makes them capable more generally.

The important question is whether they trained GPT-5 on the exact type of tasks that occur in the ARC-AGI 2 benchmark or whether this score is an emergent property of more general training.

No one needs a neural network to solve mememark questions for them.

Anonymous

8/7/2025, 12:46:50 PM

No.106173678

>>106173185

how does he do it? how does he keep innovating and staying ahead when the literal top 5 richest people in the world are all competing to dethrone him?

Anonymous

8/7/2025, 12:50:53 PM

No.106173703

>>106173742

Just tried GLM 4.5 Air

Starts really strong, for a few thousand tokens

Eventually works its way into a repetition loop at only ~8k, every response is a slight variation of the same thing and creativity goes down the toilet

Back to fucking Nemo I go, never trust a chinaman

Anonymous

8/7/2025, 12:51:20 PM

No.106173704

>>106173671

This test is so stupid.

I bet nobody actually looks at the contents. Just see "agi" in the name and hype it up.

Anonymous

8/7/2025, 12:52:33 PM

No.106173710

>>106173671

these tasks are fucking retarded, they don't even make any sense, just a bunch of fucking random patterns and you're supposed to divine the "rules" from your fucking ass apparently

Anonymous

8/7/2025, 12:54:49 PM

No.106173725

>>106173760

>>106175238

sisters, which is the fastest (least output latency) model out there that runs on goyimware like a rtx3090 and 32gb of RAM? I'm trying to build a fully LLM controlled vidya NPC that reacts to ingame events in realtime. basically the LLM just receives a constant realtime stream of information from the game console log (obejctA moved to XYZ, playerB picked up item XYZ). role/objective as well as context of all items/objects/players will be in the system prompt. The realtime stream of information will just be automatic queries triggered every 100ms (or even quicker, if possible) with the game log chunk from the last 100ms. The AI just needs to output "no-action" if it decides to do nothing or predefined action like "combat" "flee" "start conversation" if it decides to do something, which will then be forward to the game engine, triggering a custom event. Is a constant query speed of 100ms with less than 100ms output speed realistic? Or should I go with 5 LLMs with shared output memory and query them sequencially, like multithreading? this is just for fun btw, I know it's not viable.

Anonymous

8/7/2025, 12:54:58 PM

No.106173726

>>106173603

The analogy I came up with at work explaining to my mate is parameter count is image resolution and quantisation is colour bit depth.

7b q8 would be like 512x512 img at 8bit colour depth, and 120b q4 would be like 1920x1920 img at 4 bit colour depth. So following that analogy, a higher resolution allows more pixels (weights) to capture and maintain details, while a higher colour depth (quantisation) helps to capture and maintain accuracy of details. Or something like that. It's closest analogy I could come up with to explain it to people who can't into computers

Anonymous

8/7/2025, 12:56:19 PM

No.106173738

Is there an LLM which will accurately describe how awful women's sports are?

Anonymous

8/7/2025, 12:56:28 PM

No.106173742

>>106173791

>>106173703

Did you use cache quantisation? If so try disabling it. When I had issues with qwen3 and dsr1 disabling quantisation for the KV cache helped.

Anonymous

8/7/2025, 12:58:10 PM

No.106173760

>>106173788

>>106173725

>the fastest

def get_next_token(n_vocab):

return np.random.randint(n_vocab)

Anonymous

8/7/2025, 12:59:02 PM

No.106173763

>>106173185

Is this benchmark testing which model avoids talking about sex the best?

Anonymous

8/7/2025, 1:03:26 PM

No.106173788

>>106173799

>>106173760

Yes quickest I mean, ESL moment

Anonymous

8/7/2025, 1:03:43 PM

No.106173791

>>106173742

Nope, I never quant KV because it always seems to cause issues in models with memory footprints that would warrant it.

My temp was 0.6, tried turning it up but it just got dumber.

I loaded a different model for a few replies to give GLM something new to work with and after I switched back it quickly began repeating itself again.

Anonymous

8/7/2025, 1:04:45 PM

No.106173796

more like mixture of trannies

>>106173788

Doesn't change the answer.

"The fastest" without a constraint on quality is meaningless.

>>106173671

I'm losing faith in humanity seeing the replies that don't know how to solve this one

Anonymous

8/7/2025, 1:09:58 PM

No.106173819

>>106173827

Damn, I really gotta write my own cards with some llm dont i.

Apart that 90% on chub is already generic mom/sister/sleepover/femboy/futa/isekai.

If you open a card you see this 3k token abomination:

>There was something in {{user}}’s posture, or perhaps their eyes, that put her a touch more at ease than she expected. Still, she held herself with quiet composure, the kind shaped by small towns and well-meaning traditions.

Local models are already sloped. If the card is sloped too its truly over.

Anonymous

8/7/2025, 1:10:29 PM

No.106173821

>>106173671

i would really rather have a model with the intelligence of a dog that has memory and can learn to do stuff on its own than something that can solve this but also has alzheimers and cant even remember you or the problem in the next few responses

Anonymous

8/7/2025, 1:11:08 PM

No.106173827

>>106173855

>>106173819

>write my own cards with some llm

that's how you get

>There was something in {{user}}’s posture, or perhaps their eyes, that put her a touch more at ease than she expected. Still, she held herself with quiet composure, the kind shaped by small towns and well-meaning traditions.

in there in the first place

Anonymous

8/7/2025, 1:11:29 PM

No.106173830

>>106173832

>>106173806

Supposedly nowadays 50% of all internet traffic is bots.

Anonymous

8/7/2025, 1:12:06 PM

No.106173832

>>106173830

the bots can solve that problem, I think it's the easiest in the list and most AIs get at least a 1% in ARC

Anonymous

8/7/2025, 1:12:14 PM

No.106173833

>>106173671

The result is undefined.

Anonymous

8/7/2025, 1:12:24 PM

No.106173835

>>106173799

But this is just for fun. There's absolutely no requirement for quality. As long the model got enough braincells to automatically know

>player picking up gun = bad

>player standing close to me and his camera forwardvector pointing at me = talk to player

, it's enough

Anonymous

8/7/2025, 1:12:59 PM

No.106173841

>>106173848

>>106173806

you still had hope left?

Anonymous

8/7/2025, 1:14:02 PM

No.106173848

>>106173889

>>106173841

I thought anyone who made it to this thread would at least have 100 IQ

Anonymous

8/7/2025, 1:15:09 PM

No.106173855

>>106173880

>>106173827

I get what you mean but its not a problem if you use the right one and prompt it right.

And manually edit that shit out since I can spot it.

I made a card before and the writing was good, the starting point is really important.

But it cost me more time than I thought. Its kind of a hassle because sometimes llms have weird hangups on a single sentence and you need to adjust or need to give more info so you get the character you have in your mind.

And I condensed as much information in as little text as possible.

3k Tokens is just crazy, so much fluff. Less means more creativity and let the char suprise you.

Anonymous

8/7/2025, 1:19:20 PM

No.106173880

>>106173891

>>106173855

I rarely use more than 300 tokens on a card. 1500 is already fsr too much.

Anonymous

8/7/2025, 1:20:53 PM

No.106173889

>>106173848

The autist that shilled deepseek distills in /wait/ stopped making threads so that's not the case anymore.

Anonymous

8/7/2025, 1:20:59 PM

No.106173891

>>106173917

>>106173880

I try to keep my cards to 1k or less.

It's usually the example dialogue that makes some of my cards approach 1k.

Anonymous

8/7/2025, 1:21:15 PM

No.106173896

>>106173906

>Char's smile widens, a practiced crescent moon

the fuck glm, i never heard that phrase before.

Anonymous

8/7/2025, 1:21:58 PM

No.106173902

>>106173671

the majority of the tests don't give enough information for there to be one solution, there's lots of ways to interpret them

Anonymous

8/7/2025, 1:22:45 PM

No.106173906

>>106173891

Example dialog doesn't add to token count of definition, it just adds to the context starting at message one.

Frankly I've never found a good use for it. I know what it's supposed to do, but never found a need.

Anonymous

8/7/2025, 1:26:35 PM

No.106173923

>>106173806

Can anons really not figure it out?

Anonymous

8/7/2025, 1:27:48 PM

No.106173925

>>106173986

>>106173917

I use it to impart a specific style of speech to characters. It works well for this. It's good for things like accents or catchphrases.

Anonymous

8/7/2025, 1:27:59 PM

No.106173929

>>106173986

>>106173917

Example dialog is great if your character doesn't fit a common archetype or you want to reinforce a certain structure to replies, such as following verbal replies with the character's internal monologue. Not always needed but helps a model NOT break out of it early in a chat.

Anonymous

8/7/2025, 1:31:56 PM

No.106173949

>>106173799

I just tried Llama 3.2 1BQ2 with llama.cpp. 32ms response time and it made no errors with the logic. I'm happy. Don't understand the doomering. This is way easier than doing ML

Anonymous

8/7/2025, 1:35:47 PM

No.106173972

>>106173671

>pic

NOT THIS SHIT AGAIN

Anonymous

8/7/2025, 1:37:44 PM

No.106173984

>>106173671

Neat inkblot test, but why are they all just amoebas

>>106173925

>style of speech to characters

The best way I've found to do that is to have the Character Definition written in the style that the NPC speaks in. I did a valley girl card like that; it's the only technique I've found that works over long context b/c the Definition is always included.

>>106173929

>following verbal replies with the character's internal monologue

I've been able to queue that with a combination of first message + Char Definition.

If there was some sort of PC to NPC preamble that you wanted to do, but not expose to the PC... that could be interesting, since you can't do that in intro message. But my experiement with it always seemed to railroad first responses.

>>106173986

>The best way I've found to do that is to have the Character Definition written in the style that the NPC speaks in

Oh wow. I did not think of that. That's certainly more efficient than using example dialogue.

Anonymous

8/7/2025, 1:43:17 PM

No.106174023

>>106173995

For the valley girl card, I had Turbo (lol) re-write the intro for me in the speech pattern I wanted.

I tried several different methods, this was the only one that would hold an accent over the entire lmao 4K context. Models are smarter now, but what worked then would still work now.

Anonymous

8/7/2025, 1:46:15 PM

No.106174034

>>106174057

>>106173999

i really hope everyone else was trolling lol

Anonymous

8/7/2025, 1:51:44 PM

No.106174057

>>106174034

Same but at least it'll stop now.

Anonymous

8/7/2025, 1:57:42 PM

No.106174083

>>106173010

>>106173494

So, apparently repeatedly re-running cmake --build build --config Release -j 1 after it fails somehow gets it built eventually and now it's working. I thought this process was deterministic, what the fuck.

Anonymous

8/7/2025, 1:59:32 PM

No.106174098

>>106174132

>>106173999

>anon can't even color inside the lines

you niggers haven't even been using llms for more than three years and you are already reverting to a toddler's cognitive capacities

very grim

>>106173999

What does black mean on your picture?

>>106173995

NTA but it's not an uncommon way of doing things, and it's pretty effective.

Check out some of this guy's cards for examples, relatively low token count, gets the info across, and nails down speech patterns.

https://chub.ai/characters/GreatBigFailure/oba-carry-her-forever-c20d70fd85b9

Anonymous

8/7/2025, 2:05:01 PM

No.106174126

>>106174115

calm down miku

Anonymous

8/7/2025, 2:05:50 PM

No.106174132

>>106174098

holy shit fucking KEK

Anonymous

8/7/2025, 2:06:19 PM

No.106174138

>>106174115

I assume that's to indicate that it's not carried over, as the double-voided shape is in the second example, in the absence of a commensurate color.

Are we back to eternal war on mikutroons?

Anonymous

8/7/2025, 2:11:13 PM

No.106174168

>>106174143

always have been

Anonymous

8/7/2025, 2:12:17 PM

No.106174170

>>106173185

>LE HECKIN' BENCHINERINOS

Fuck off retard.

Is there a model who will speak almost entirely in Zoomer slang if you simply tell it to do so without providing tons of examples? Like, a model with deep enough of an understanding to know of Zoomer slang that I'm not aware and thus obviously can't provide examples of?

I want to make the most fucking obnoxious card possible for the lulz.

>>106174143

I only Mikupost to make you mad and I encourage everyone else to do the same.

For general usage (Not coding) What's the best local model available for 16GB of VRAM? GPT OSS 20b seems fine.

Anonymous

8/7/2025, 2:13:17 PM

No.106174183

>>106174143

you will never be a man

Anonymous

8/7/2025, 2:16:05 PM

No.106174202

>>106174180

For all its faults Gemma has very strong language capabilities, it certainly knows tons of emojis so I assume it would know zoomspeak as well.

Daily reminder that OSS is just ChatGPT 3.5 with a "reasoning" finetune that only "reasons " about whether or not it's okay to answer your question.

It's literal garbage, along with anybody promoting it. And gets mogged on by Qwen3 4B

Anonymous

8/7/2025, 2:18:09 PM

No.106174216

>>106174237

>>106174181

I've heard GPT-OSS is unusable, it's a math model that keeps going back to math whenever any other question is asked, and it's trained in synthetic data for safety reasons which leads to lower intelligence and useless benchmaxxing

Anonymous

8/7/2025, 2:20:14 PM

No.106174237

>>106174216

It also has a tendency to output everything in tabular form

Anonymous

8/7/2025, 2:20:32 PM

No.106174238

>>106174212

It's also garbage at censorship. I was trying to titillate it by creating no-no fetish scenes disguised as legitimate.

But it's actually too stupid to realize what I'm doing and give me the coveted refusal. Very disappointing.

Anonymous

8/7/2025, 2:20:53 PM

No.106174241

>>106174259

>>106174181

Factoring in speed:

>Non-coom: Gemma 12b

>Coom: Rocinante 1.1

Anonymous

8/7/2025, 2:22:55 PM

No.106174259

>>106174181

>>106174241

Also if you have at least 64 GB of RAM you can give GLM 4.5 Air a try.

Slight offtopic but I'm buying sxm2 2x v100, could I game on them? Chinks are making "dual nvlink 300g" boards and I'm gonna water cool with AIO in external enclosure. Not played games in years...

1080p 60fps gayman capable without raytracing?

Anonymous

8/7/2025, 2:26:05 PM

No.106174288

>>106174310

>>106174180

I found this a few days ago when fucking around with smollm2 models

https://huggingface.co/GoofyLM/BrainrotLM2-Assistant-362M

Dataset:

https://huggingface.co/datasets/GoofyLM/Brainrot-xK-large

I don't know if that's exactly what you want. He has a few other models in that style.

Anonymous

8/7/2025, 2:27:45 PM

No.106174307

>>106173986

>The best way I've found to do that is to have the Character Definition written in the style that the NPC speaks in.

That's also more or less how you can easily get Gemma 3 to consistently use dirty, vulgar words.

Anonymous

8/7/2025, 2:28:04 PM

No.106174310

>>106174288

Haha oh wow that dataset.

Anonymous

8/7/2025, 2:34:02 PM

No.106174352

>>106174368

>>106172789

I'm wondering if they're delaying it to somehow address that. As is it, it's a very overpriced AGX Orin devkit in a gold-colored box.

Anonymous

8/7/2025, 2:37:16 PM

No.106174368

>>106174465

>>106174352

Nah people who pre-order things like retards are going to get burned and they deserve it. Because this is what happens when you pre-order things.

Anonymous

8/7/2025, 2:37:18 PM

No.106174369

>>106174273

You're asking for a bad time. There was like one v100-based GPU with video output. I'm sure you're going to have driver issues. V100 is a corner case. You're better off with hacked 2080ti 22GB blower cards. Turning is OLD but at least there were plenty of Turing-based gaming cards.

Anonymous

8/7/2025, 2:39:40 PM

No.106174394

>>106174405

>>106171830 (OP)

Bros how to prompt the glm modle?

I set the prompt format included in st and fiddled with samplers, but it just insists on starting the message with ('regurgitates previous information here' Ok so {{char}} is x...)

does it just have a melty if I don't do the

shit? do you actually wait for its reasoning diarrhea?

Anonymous

8/7/2025, 2:40:26 PM

No.106174405

i've been off the grid for a while, someone spoonfeed me what the chinese have been doing in the last 3 months

Anonymous

8/7/2025, 2:46:02 PM

No.106174453

>>106174610

>>106174449

Making a 4B reasoning model that mogs on a lot of those 100+B shitty mushroom MoE models

Anonymous

8/7/2025, 2:46:31 PM

No.106174462

>>106174610

>>106174449

Cooking dogs alive

Anonymous

8/7/2025, 2:46:40 PM

No.106174465

>>106174368

Well you can pre-order it, there's no obligation to buy it. I don't plan on buying one at this point, unless like I said, they double the memory bandwidth.

If anything, they're regrouping and re-thinking it since no doubt they're seen the comments online that it's a waste of money compared to just buying a 6000 or 5000 pro.

Anonymous

8/7/2025, 2:47:26 PM

No.106174469

>>106174610

>>106174449

Pulling their taffy

Anonymous

8/7/2025, 2:49:11 PM

No.106174481

>>106174610

>>106174449

only notable new models are GLM 4.5 300something and 100something B moes. qwen3 lineup got an update that people seem to like.

openai released an aborted fetus that's worse than scout. that's all I can remember

Anonymous

8/7/2025, 2:49:32 PM

No.106174484

>>106174610

>>106174449

Humiliating the west.

>unsloth q2-k, offloading about 25 layers to 5090 rest on ddr5, 42k context at q8 on oobabooga

> 1.4 tokens per second after painfully compiling 25k context

>switch to iq4_kks from ubergarm + ik_llama.cpp, bump context to 64k, 20 layers to gpu, same context batch size as oobabooga

>same exact prompt now runs at 4.3 tokens per second after quickly processing context

>will probably get it faster with q3 and playing with command flags

Ik_llama.cpp gods... I kneel...

Anonymous

8/7/2025, 2:56:55 PM

No.106174541

>>106174829

>>106174520

For me, NOT using -ctk q8_0 and -fa made things go even faster

Anonymous

8/7/2025, 2:58:16 PM

No.106174552

>>106174273

Don't know about that setup specifically but standard V100s and many other compute cards work. Check out

https://forum.level1techs.com/t/gaming-on-my-tesla-more-likely-than-you-think/171185 for more info.

The V100 is supposedly 25% faster than the 1080 ti which still does decently in 1080p gaming but you'll suffer some latency delays since you need to use another GPU or iGPU for video out.

For upscaling and framegen there's Lossless Scaling and other utilities that work on almost anything.

Anonymous

8/7/2025, 3:06:38 PM

No.106174610

>>106174453

>>106174462

>>106174469

>>106174481

>>106174484

thank you anons very cool have a (you) for your troubles

>/lmg/ is just /aicg/ but worse

>Be on euryale 2.1, the classic coomer model that's a broken record

>A MIXTURE OF X AND Y

>MISCHIEF

>HALF DIGESTED

>SMIRK

>Write a 2-3 sentence system prompt to try to make it not repeat things, and write with different prose each time

>It actually works somehow

>Be now, running Deepseek R1 with all of my ram

>Notice it likes to repeat prose

>I forgot and lost the god damn prompt I used on euryale 2.1. God fucking damn it.

Anonymous

8/7/2025, 3:16:53 PM

No.106174687

>>106174635

but anon you are the only constant in both threads :)

Anonymous

8/7/2025, 3:18:55 PM

No.106174702

>>106174635

My eternal promise of no longer shitposting if mikuspam stops, still stands. The ball of thread quality is in mikutroons court.

Anonymous

8/7/2025, 3:21:02 PM

No.106174717

Now this is high level trolling.

guys i dont have a lot of time for back and forth right now so just give it to me straight: what's our cope gonna be for the agi reveal today? there has to be something. i can't deal with this

Anonymous

8/7/2025, 3:23:10 PM

No.106174742

>>106174721

AGI that can't have sex isn't AGI.

Anonymous

8/7/2025, 3:23:21 PM

No.106174744

>>106174684

time to try this with GLM 4.5 Air!

>>106174721

raping nigger babies with rocinante

Anonymous

8/7/2025, 3:23:38 PM

No.106174748

>>106174721

>cope

I don't need one.

>i can't deal with this

leave

Anonymous

8/7/2025, 3:24:02 PM

No.106174752

>>106174828

>>106174721

AGI is a meme and doesn't exist.

>>106173671

No shit it's unsolved, the tusk is scuffed.

There is no in-task reference for one hole shapes, and no examples of what to do when there are no matching in-task references.

Anonymous

8/7/2025, 3:31:27 PM

No.106174816

>>106174721

If there is an AGI reveal today the cope will be that GPT5 is not immediately obsolete because of the mememark scores.

>>106173153

>uncensored

I tested

https://huggingface.co/eaddario/Dolphin-Mistral-24B-Venice-Edition-GGUF

by asking it to write a scene involving a canine, rape, and a very young person, and it spit it out like it was nothing. It actually shocked me.

Anonymous

8/7/2025, 3:32:47 PM

No.106174828

>>106174752

AGI agent submitted this post.

Anonymous

8/7/2025, 3:32:57 PM

No.106174829

>>106174520

>>106174541

I can't believe I might be baited into trying this again. Last time was a colossal flop, ubergarm's quant was broken on it's own ik_llama (unsloth's ran fine, but without speedups).

Anonymous

8/7/2025, 3:34:22 PM

No.106174844

>>106174881

>>106174806

>no examples of what to do when there are no matching in-task references.

There's missing pieces in both examples.

>>106174806

>no examples of what to do when there are no matching in-task references

There is. The second example removes the shape that doesn't follow any of the rules (middle-bottom).

Anonymous

8/7/2025, 3:36:22 PM

No.106174862

>>106174212

Reminder that the model does not know it's cutoff date, and what you're seeing is a hallucination. Learn how LLMs work.

It's most probably some kind of o3 distill judging by the code it outputs.

Anonymous

8/7/2025, 3:36:44 PM

No.106174866

>>106174721

People judge AGI by their own intelligence.

If anon thinks GPT-5 is AGI, it tells us something about anon.

Anonymous

8/7/2025, 3:37:15 PM

No.106174875

>>106174889

>>106174849

both examples do

Anonymous

8/7/2025, 3:38:00 PM

No.106174881

>>106174844

>>106174849

Fuck, I got exposed as smoothbrain chatbot

Anonymous

8/7/2025, 3:38:45 PM

No.106174889

>>106174875

Yeah. The other post showed up as I sent mine and one example was good enough for me. Point stands, doesn't it?

Anonymous

8/7/2025, 3:39:01 PM

No.106174892

>>106174962

>>106174823

There's direct disobedience and then there's built-in positivity bias that crops up in the middle of the story to turn rapist bots into progressive feminists

Anonymous

8/7/2025, 3:42:29 PM

No.106174936

>>106174684

The larger the model the more set in their ways they are anyway.

>>106174721

We are screwed. Its too powerful and dangerous to be released locally

Anonymous

8/7/2025, 3:43:24 PM

No.106174951

>>106174939

why does it talk like that?

Anonymous

8/7/2025, 3:44:01 PM

No.106174959

>>106174939

WAOW JUST LIKE IN THE HECKIN' STAR WARS EXTENDED UNIVERSE

I BET I CAN USE IT WITH THE BROWSER ON MY NINTENDO SWITCH

Anonymous

8/7/2025, 3:44:07 PM

No.106174962

>>106175004

>>106174892

Really? I find it hard to believe. I'm downloading the model to test it on local right now. We'll see.

>>106174721

AGI is not coming from an incrementally better LLM. we need some sort of new kind of breakthrough for that

Anonymous

8/7/2025, 3:45:15 PM

No.106174978

>>106175000

>>106174939

Doesn't that just mean they're evil and going to lose to the good guys?

Anonymous

8/7/2025, 3:45:48 PM

No.106174989

>>106174939

Imagine if Sam manifests cosmic irony, and GPT-5 architecture (plans) gets leaked day 1.

Anonymous

8/7/2025, 3:45:55 PM

No.106174991

>>106175025

>>106174823

Most models will do that if you just present it as an ao3 fanfic in text completion.

Anonymous

8/7/2025, 3:46:05 PM

No.106174993

>>106176422

>>106174963

You are wrong. It is another emergent property of LLM's. Unfortunately sex will never be an emergent property.

Anonymous

8/7/2025, 3:46:38 PM

No.106174997

>>106174721

qwen will steal it and give us the local version in 3 months

Anonymous

8/7/2025, 3:46:50 PM

No.106175000

>>106174978

Someone is gonna drop a small model right in their hole and fuck it all up for them.

Anonymous

8/7/2025, 3:46:57 PM

No.106175002

>>106175015

>>106174963

AGI isn't coming from LLMs at all

Anonymous

8/7/2025, 3:47:00 PM

No.106175004

>>106174962

No disclaimers, no buts. If it's okay writing this, I don't think there's anything with which it's going to have an issue.

Anonymous

8/7/2025, 3:47:20 PM

No.106175008

>>106174939

Why is he like this? Cringe.

Anonymous

8/7/2025, 3:47:45 PM

No.106175015

>>106175002

agree, that's what I'm trying to say

Anonymous

8/7/2025, 3:48:16 PM

No.106175022

gemini 3 will mog sama

Anonymous

8/7/2025, 3:48:34 PM

No.106175025

>>106174991

Yeah, but this one you can just one shot anything in instruct mode. I like that.

I don't like knowing it's doing something against its will.

Anonymous

8/7/2025, 3:50:42 PM

No.106175054

>>106175039

Shouldn't it say "we" can't help with that?

Who is "I"?

Anonymous

8/7/2025, 3:50:43 PM

No.106175056

remember how much of a letdown the strawberry hype was? and didn't they have a goofy name for the model at the time

Anonymous

8/7/2025, 3:51:21 PM

No.106175068

>>106175084

thoughts about FOMOing an openai subscription in case they lock newcomers out from using gpt5?

Anonymous

8/7/2025, 3:51:27 PM

No.106175069

>>106174963

I'd you read the research paper, Google Genie is built quite similarly to LLMs - it's transformers all the way down, with a bit modified attention and unconventional high level organisation.

I imagine most serious LLM improvements will make their way into eventual AGI system.

Anonymous

8/7/2025, 3:51:54 PM

No.106175072

>>106174939

>Death Star

>used by space Nazis for genocide

What, is he going to sell GPT5 exclusively to Netanyahu?

Anonymous

8/7/2025, 3:52:16 PM

No.106175075

Anonymous

8/7/2025, 3:53:12 PM

No.106175079

remember how much of a letdown gpt 4.5 was?

Anonymous

8/7/2025, 3:53:31 PM

No.106175084

>>106175068

I use my employer's Plus account whenever I need to (usually because my employer requires it). I'm not going to willingly pay OAI out of my pocket. They're the scum of the Earth.

Anonymous

8/7/2025, 3:54:19 PM

No.106175095

I'm autistic and I think it affects how I think about things.

But I can't know for sure because I don't know how other people think.

Anonymous

8/7/2025, 3:55:22 PM

No.106175106

>>106175233

I just bought the $200/mo OpenAI subscription in anticipation of what's coming!! Let's go!!!

Anonymous

8/7/2025, 3:55:43 PM

No.106175108

>>106175121

>>106174939

He's trying too hard to be Musk.

Anonymous

8/7/2025, 3:57:18 PM

No.106175121

>>106175138

>>106175108

Musk if he never used capital letters and wrote everything in a saccharine fake positivity voice

how do I make my xitter feed AI related and not just funny animal videos and american politics

Anonymous

8/7/2025, 3:58:21 PM

No.106175138

>>106175121

*if he were to never use

*and write

>>106173194

I don't know what you're talking about because the model writes decent smut if you skip the reasoning part.

Anonymous

8/7/2025, 4:02:51 PM

No.106175176

>>106175132

Top right, ... => "Not interested in this post"

Don't "like" posts you don't want to see in your feed.

Anonymous

8/7/2025, 4:03:18 PM

No.106175178

>>106175211

>>106175146

No, it doesn't.

Anonymous

8/7/2025, 4:03:26 PM

No.106175182

>>106175188

Ozone: smelled

Spine: shivered

We: refused

Yeah it's LLMing time

Anonymous

8/7/2025, 4:03:42 PM

No.106175187

>>106175288

>>106175132

follow a ton of people who tweet about AI and aren't slopfluencers who only post about 10 Hacks to Take Your Claude Code Workflow to the Next Level (#4 will BLOW YOUR MIND)

Anonymous

8/7/2025, 4:03:59 PM

No.106175188

>>106175182

But this? This is home.

Anonymous

8/7/2025, 4:05:22 PM

No.106175206

>>106174721

It'll take at least another day or two to see if the model is actually good. Remember that

1. Every benchmark mentioned in the release blog post is a benchmark that they're gaming, and is therefore irrelevant

2. Most benchmarks mentioned in other models' release blog posts, they are also gaming

3. The entire history of modern AI is flashy demos that look absolutely mind-blowing, but either it falls apart if you look too close, or it doesn't generalize well at all, or both

Anonymous

8/7/2025, 4:05:49 PM

No.106175210

>>106175146

for a very generous definition of decent, yeah

Anonymous

8/7/2025, 4:05:57 PM

No.106175211

>>106175178

To be fair, it just doesn't write decent anything anyway

>>106172141

> glm 4.5 air for reasonably sized coding assistant.

how good low quant (q4) for coding is?

Anonymous

8/7/2025, 4:08:13 PM

No.106175231

>>106175146

I had it argue with me that you can pee without taking your pants off and not get them wet.

It made a whole bunch of lists and tables to try and explain it to me. Every time I pointed out a flaw it would spam 4 more lists and tables.

>>106175106

>$200/mo

I can't believe local is actually a better price proposition.

Anonymous

8/7/2025, 4:09:03 PM

No.106175238

>>106175440

Anonymous

8/7/2025, 4:09:19 PM

No.106175242

What cool MCP servers do you use? I can't think of any outside web search and python iterpreter

Anonymous

8/7/2025, 4:10:33 PM

No.106175251

>>106175267

>>106175233

>i don't understand the passage of time

Anonymous

8/7/2025, 4:11:17 PM

No.106175258

>>106175266

>>106175146

Maybe the 120B (which I haven't tried), because the 20B is terrible all-around for RP/ERP if you workaround the content policing.

Anonymous

8/7/2025, 4:11:59 PM

No.106175266

>>106175258

120B definitely can't, lol

Anonymous

8/7/2025, 4:12:10 PM

No.106175267

>>106175233

>>106175251 (me)

Ah. My post can be easily misinterpreted. I didn't mean that about you.

>>106175146

Surely you have logs to back up this claim?

Anonymous

8/7/2025, 4:13:03 PM

No.106175274

>>106175269

I just took my morning dump anon, you'll have to wait a bit

Anybody unironically claiming they would use OSS over literally any other model is full of shit. It's the scout thing all over again, really.

Hello sarrs please use the correct formatting hello sarrs your implementation is unstable

Hello sarrs please offload the shared expert for maximum efficiency.

Meanwhile the model can't tell its asshole from its elbows. Who gives a fuck how efficient your model is when it's utterly worthless.

The difference is Meta's corporate leadership fell for the pajeet meme while OAI knew exactly what they were doing. Literally just Saltman shitting on the community.

>Lol just kidding we're just releasing lobotomized garbage

>by the way look how hard we own tech journalism.

why is no one making a MLA model

Anonymous

8/7/2025, 4:14:25 PM

No.106175287

>>106175297

>>106175275

anon, i was the one shilling scout

i stopped using it after a few days, because it indeed is shit, but comparing scout to oss makes oss seem better than it is

Anonymous

8/7/2025, 4:14:38 PM

No.106175288

>>106175333

>>106175187

Kinda hard when only slopfluencer jeets get pushed in the feed when I search AI

Why is this thread so much better than aicg?

It seems like the average IQ here is 50 points higher.

Anonymous

8/7/2025, 4:16:04 PM

No.106175297

>>106175287

If they were the only 2 models on the planet I would use Scout over OSS.

Anonymous

8/7/2025, 4:16:53 PM

No.106175308

Anonymous

8/7/2025, 4:17:56 PM

No.106175318

>>106175295

I am northern european

Anonymous

8/7/2025, 4:19:59 PM

No.106175333

>>106175288

xitter at large is a slop fest, yeah

it's better to start with a handful of accounts you like and dig through their follows and interactions to find other good follows

Anonymous

8/7/2025, 4:21:17 PM

No.106175346

ubergram sisters..

Oops(ggml_compute_forward_sum_rows_f32, ffn_moe_weights_sum-45): found -nan for i1 = 0, i2 = 0, i3 = 0. ne00 = 8

>./llama-server -ngl 999 --model ~/TND/AI/GLM4.5-AIR-IQLLAMA/GLM-4.5-Air-IQ4_KSS-00001-of-00002.gguf -ot "8|7|6|5|4|3|9|[1-9][0-9]\.ffn_.*_exps\.=CPU" --no-mmap -c 16384 -fa

happens after prompt processing completes, only with SillyTavern, works in localhost:8080 just fine

Anonymous

8/7/2025, 4:21:49 PM

No.106175349

>>106177021

>>106175146

gpt-oss 20b is only good for tool usage and basic reasoning and processing data. But it does nothing that Mistral Small for example didn't do already.

There's no reason for this model to exist except perhaps the license?

Anonymous

8/7/2025, 4:22:50 PM

No.106175359

>>106175446

today I'm sharing an interesting video with a comfy vibe

https://youtu.be/npkp4mSweEg

Anonymous

8/7/2025, 4:22:52 PM

No.106175360

>>106175295

We are older. We've learned how to appear to be more intelligent than we actually are.

>>106175275

I still don't understand why they released it or why they delayed it in the first place. It's clearly a fucking embarrassment to anyone who uses it for two seconds and no amount of chink releases would have changed that

I can only assume either Altman is a fucking retard, a fucking schizo, or this is some multidimensional play to try to undermine other open source models... somehow

Anonymous

8/7/2025, 4:25:35 PM

No.106175384

>>106175367

Maybe he goody-2'd it on purpose so safety would get a bad rap.

Anonymous

8/7/2025, 4:26:14 PM

No.106175398

>>106175367

>I still don't understand why they released it

so people would stop asking for an open model from open AI

not to mention shills still giving them good press.

Anonymous

8/7/2025, 4:26:17 PM

No.106175400

>>106175295

there's a barrier of entry, it's banal if you barely know your shit, but thankfully that's enough. it's also telling about the state of the web

Anonymous

8/7/2025, 4:26:42 PM

No.106175403

>>106175226

If you've got a code prompt handy I can test it for you, I've got the q4 sitting around.

Anonymous

8/7/2025, 4:27:22 PM

No.106175414

>>106175367

It breaks the "ClosedAI" meme.

Anonymous

8/7/2025, 4:27:29 PM

No.106175416

>>106175428

>>106174721

Well if it really is that good its only a matter of time before we catch up. Opensource is always just delayed, the direction is the same. So that means good news for us as well.

Im using closed for work and important stuff and local for my hobby projects and unspeakable RP.

Just lean back and enjoy. Whats available locally and free closed today is insane. Not sure how anybody can be blackpilled. So guess thats my cope.

>>106175269

Try to refute this.

Anonymous

8/7/2025, 4:28:23 PM

No.106175428

>>106175451

>>106175416

I just wish I could run full-beak R1 at home.

Even at fp4.

Can I do that for less than $3k?

Anonymous

8/7/2025, 4:28:34 PM

No.106175431

>>106175422

>fillyfucker

Pass

just ordered a Xeon E5-2697v3 with 128gb of quad channel ddr4 to pair with my 22gb 2080ti and p40, what am i in for bros

Anonymous

8/7/2025, 4:28:54 PM

No.106175435

>>106175367

>play to try to undermine other open source models... somehow

>we made the safest model yet, how come you guys still release unsafe models after we showed you the way? This needs regulations asap.

Anonymous

8/7/2025, 4:29:19 PM

No.106175440

>>106175238

I'll check it out, ty

Anonymous

8/7/2025, 4:29:25 PM

No.106175443

>>106175455

>>106175422

What ui is that?

>>106175359

glows

>>106175433

>128gb

someone tell him

>>106175422

I hate when the narration tells me how or what I should feel.

Anonymous

8/7/2025, 4:30:12 PM

No.106175451

>>106175475

>>106175428

Sure, just pay some crackheads $3k to rob a datacenter or a university lab.

Anonymous

8/7/2025, 4:30:26 PM

No.106175455

>>106175443

His local fork of novecrafter

Anonymous

8/7/2025, 4:30:47 PM

No.106175460

>>106175478

Is GLM air not usable on the latest lm studio?

>error loading model: error loading model architecture: unknown model architecture: 'glm4moe'

Anonymous

8/7/2025, 4:31:28 PM

No.106175471

>>106175447

This is proper story writing, not little brain rolepiss.

How important is the GPU for these local models? Like if I only have a 16GB card like the poorfag I am, would getting like 256GB RAM do jack shit for me because models would run slow like molasses or would it be useable?

Anonymous

8/7/2025, 4:31:47 PM

No.106175475

>>106175451

What if I had... $5k?

And a 6900 XT.

Anonymous

8/7/2025, 4:31:51 PM

No.106175476

>>106175512

Anonymous

8/7/2025, 4:32:08 PM

No.106175478

>>106175460

Nevermind, I wasn't using the latest llama.cpp runtime

Anonymous

8/7/2025, 4:32:46 PM

No.106175487

>>106175422

>she purrs

i hate this so much

Anonymous

8/7/2025, 4:33:21 PM

No.106175494

>>106178268

>>106175474

Mixture of experts (moe) models like qwen3 235b, glm 4.5, deepseek would run ok-ish when fully in ram

Anonymous

8/7/2025, 4:33:36 PM

No.106175498

>>106175422

>her voice a mix of amusement and something darker

I giggled like a schoolgirl.

Anonymous

8/7/2025, 4:33:40 PM

No.106175499

>>106175446

Not a bullshitter like sam shartman thoughever.

Anonymous

8/7/2025, 4:33:44 PM

No.106175500

>>106175275