Anonymous

8/8/2025, 2:53:46 AM

No.106184664

[Report]

>>106187602

/lmg/ - Local Models General

Anonymous

8/8/2025, 2:54:04 AM

No.106184669

[Report]

►Recent Highlights from the Previous Thread:

>>106181054

--Benchmarking large models on Apple M3 Ultra using MLX with unified memory:

>106182005 >106182020 >106182469 >106182033

--Qwen3-30B-A3B-Instruct struggles due to sparse activation and model version confusion:

>106184073 >106184096 >106184112 >106184124 >106184141

--PyTorch 2.8.0 and 2.9.0-dev show regression in inference speed vs 2.7.1:

>106182552

--LLM progress stagnates as multimodality becomes the new battleground:

>106181123 >106181186 >106181229 >106181354

--Local anime video generation improved with WAN 2.2 and new I2V LoRA:

>106181318 >106181331 >106181773 >106181992

--DeepSeek's influence persists through open-source model derivatives despite perceived decline:

>106182694 >106182704 >106182719 >106182737

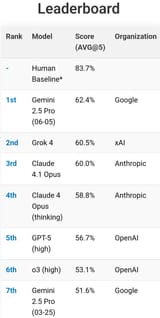

--GPT-5 tops LMArena but leaderboard credibility questioned over style control and past inaccuracies:

>106182205 >106182236 >106182248 >106182254 >106182302

--GPT-oss 120B generates generic, warehouse-obsessed text with no creative spark:

>106182587 >106182701 >106182929

--Debate over AI companies engaging with 4chan amid distrust of corporate "safety" culture:

>106181983 >106182000 >106182040 >106182054 >106182174 >106182045 >106182056

--Skepticism over claimed novelty of unified AI architecture:

>106183618 >106183666

--GPT-5 minimal reasoning underperforms GPT-4.1 in early benchmarks:

>106182859

--Tesla Dojo team disbanded as members launch AI startup DensityAI:

>106182941 >106183041

--Anon claims GPT-5 is live in Copilot with screenshot evidence:

>106183385

--Benchmarks:

>106183826 >106183973 >106184375 >106184399 >106184529

--Logs:

>106182027 >106182335 >106183337 >106183359 >106183399 >106183422 >106183634 >106183699 >106183778 >106183959 >106183962 >106184282

--Miku (free space):

>106182747 >106182814 >106183862 >106184081 >106184207

►Recent Highlight Posts from the Previous Thread:

>>106181065

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/8/2025, 2:56:29 AM

No.106184694

[Report]

====PSA PYTORCH 2.8.0 (stable) AND 2.9.0-dev ARE SLOWER THAN 2.7.1====

tests ran on rtx 3060 12gb/64gb ddr4/i5 12400f 570.133.07 cuda 12.8

all pytorches were cu128

>inb4 how do i go back

pip install torch==2.7.1 torchvision==0.22.1 torchaudio==2.7.1 --index-url

https://download.pytorch.org/whl/cu128

Anonymous

8/8/2025, 2:59:36 AM

No.106184733

[Report]

>>106184712

If Veo 3/Imagen 4/Genie 3 are anything to go by, yes.

Anonymous

8/8/2025, 2:59:43 AM

No.106184735

[Report]

>>106185000

The answers you Seek lie Deep within... Monday.

what turns off reasoning in (You)?

Anonymous

8/8/2025, 3:00:01 AM

No.106184740

[Report]

Anonymous

8/8/2025, 3:01:02 AM

No.106184753

[Report]

>>106183959

lmao, getting tired of erotic writing for a middle aged female public?

you also have "purr"

Anonymous

8/8/2025, 3:05:27 AM

No.106184807

[Report]

Anonymous

8/8/2025, 3:06:21 AM

No.106184811

[Report]

>>106185485

Anonymous

8/8/2025, 3:08:22 AM

No.106184831

[Report]

>>106184842

>>106184805

>"mischievous glint"

>"a true testament to your desires"

this writing style is the most bland and non erotic possible

Anonymous

8/8/2025, 3:09:45 AM

No.106184841

[Report]

>>106184852

Using llama.cpp with unified memory on a system with zram. Disk I/O during processing looks like this.

Is that what anons meant with raping their SSDs? I was under the impression SSDs don't have any problem with sustained reading, only writing, but I'd like to make sure I'm not missing anything here.

Anonymous

8/8/2025, 3:09:46 AM

No.106184842

[Report]

>>106184863

>>106184831

It is "erotic"(you find this slop in erotic novels)

Anonymous

8/8/2025, 3:10:13 AM

No.106184849

[Report]

>>106184767

Same question, new thread.

tl;dr - best mini model for having an LLM response be the best option from a finite list supplied by sysmsg, best is defined in simple terms in sysmsg and user input prompt is evaluated.

>tfw too retarded to look up what the fuck a tool call is, I see the field in the JSON/etc, I actively choose to ignore it

Anonymous

8/8/2025, 3:10:25 AM

No.106184852

[Report]

>>106184890

>>106184841

use --no-mmap

>>106184842

I erotic stories, and none of this shit is there, but then again I don't read the "male pecs cover" erotic books for women in amazon

it's just a demographic issue, and probably dataset too, as all models probably prune the more explicit and graphic stuff (male demographic), which means the only remaining is female centric

Anonymous

8/8/2025, 3:13:55 AM

No.106184879

[Report]

>>106184863

I read* erotic stories

Anonymous

8/8/2025, 3:14:52 AM

No.106184890

[Report]

>>106184852

Not enough memory for that. I just want to know whether that negatively impacts the hardware or not.

Anonymous

8/8/2025, 3:15:49 AM

No.106184896

[Report]

>>106187236

AGI MEME IS DEAD

If you choose to look at it like this, silicon chips are technically self improving. You make a chip, you put it in a computer and you use that computer to work on the next chip. Is moore's law dead or not? Well when you look at how much more power we are pumping through CPUs and GPUs, yes absolutely. They have to "cheat" to get to higher performance. Raster performance in GPUs has not improved significantly from 4090 to 5090. In fact if you look at hardware development in the silicon industry, everything they are doing is actively fighting against the law of diminishing returns. In reality this isn't cheating, you hit a roadblock, you improve.

The point of this is that we have seen computers recursively self improve for a while. Has this resulted in some fantastic utopia? No, and mostly due to how society structures its economy.

How much does it even matter if there is an intelligence explosion. The way I see how the world is structured right now, we don't seem to be lacking in that department. How much economic growth will really happen from being able to write more software. We already write so much crap and most websites and apps suck mega doo doo. Sure is gonna be great to accelerate the dead internet theory or the enshittification of everything digital.

Anonymous

8/8/2025, 3:19:10 AM

No.106184929

[Report]

>>106184950

can I use silly tavern and set up like a DM AI and a player AI and have them play with me in a DnD session?

Anonymous

8/8/2025, 3:20:47 AM

No.106184950

[Report]

Anonymous

8/8/2025, 3:21:03 AM

No.106184954

[Report]

>>106184712

Yes. Google will REDEEM.

>>106184863

My assumption is a non-negligable proportion of the data these models are trained on is decades of fanfic and amateur erotica posted to various public forums since I'm sure there's an absurd amount of total tokens there. This is written by teenagers to early twenty something girls who are trying their hardest to write like their favorite authors but failing which leads to all these weird adjectives and not x not y but z type writing. No idea if this is actually true but I think AI slop is really just training LLMs on all this bad, try-hard human creative writing.

Anonymous

8/8/2025, 3:24:14 AM

No.106184983

[Report]

>>106184712

>gemini 3 is actually good

>because their next release is 6 months from now

>meanwhile their userbase actually grows because GPT 5 is barely better

kino

Anonymous

8/8/2025, 3:25:12 AM

No.106184991

[Report]

>>106185041

Another day without K2 reasoner....

Go on without me bros...

Anonymous

8/8/2025, 3:26:04 AM

No.106185000

[Report]

Anonymous

8/8/2025, 3:26:25 AM

No.106185002

[Report]

Oh no no no

>>106173185

>>106169394

Ahahahaha

Anonymous

8/8/2025, 3:29:42 AM

No.106185034

[Report]

>>106185084

>>106184805

I'll train my own smollm with discord logs!

Anonymous

8/8/2025, 3:29:51 AM

No.106185041

[Report]

>>106184991

R2... V4... I'm waiting...

Anonymous

8/8/2025, 3:31:01 AM

No.106185053

[Report]

GLM-5 will save local.

Anonymous

8/8/2025, 3:31:17 AM

No.106185056

[Report]

>>106187740

>>106184960

both are probably true :

- more explicit datasets heavily pruned

- the rest is tryhard horny teenage girls writing and amazon erotic best seller pdfs

even r/chatgpt simps are revolting

Anonymous

8/8/2025, 3:34:43 AM

No.106185084

[Report]

>>106185136

>>106185034

apparently big reason why cai felt so alive was because it used mainly cai logs

>>106185066

The main issue is apparently the limits. o3 had limits but it was usable, while 5 has like just a few interactions before you get locked up.

It's so weird, that's bad publicity, why are they doing that to themselves.

Anonymous

8/8/2025, 3:37:48 AM

No.106185126

[Report]

>>106185102

>It's so weird, that's bad publicity, why are they doing that to themselves.

They're behaving like they are still the only game in town.

Anonymous

8/8/2025, 3:38:11 AM

No.106185131

[Report]

>>106185102

Bottomline comes first

Anonymous

8/8/2025, 3:38:23 AM

No.106185134

[Report]

>>106185156

Does GLM even work with raw completions? It was generating its BOS token [gMASK] indefinitely if I didn't use <|user|> and <|assistant|>

Anonymous

8/8/2025, 3:38:31 AM

No.106185136

[Report]

>>106185084

CAI wasn't weighed down with terabytes of math and code, it was dumb but sounded much more natural than today's models.

Anonymous

8/8/2025, 3:40:30 AM

No.106185156

[Report]

>>106185218

>>106185134

Why would instruct models work with text completion if you don't give it either user/assitant content?

>>106185066

this place is weird

Anonymous

8/8/2025, 3:43:12 AM

No.106185182

[Report]

>>106185371

>>106185066

just use gpt oss 20b

Anonymous

8/8/2025, 3:43:57 AM

No.106185187

[Report]

>>106185167

Jeets being jeets

Anonymous

8/8/2025, 3:48:38 AM

No.106185218

[Report]

>>106185661

>>106185156

??? All models were trained on internet text. They don't lose the ability to do autocomplete after post-training RL/instruction tuning.

Anonymous

8/8/2025, 3:48:45 AM

No.106185219

[Report]

>>106185221

Sam saved local

Anonymous

8/8/2025, 3:49:18 AM

No.106185221

[Report]

Anonymous

8/8/2025, 3:53:15 AM

No.106185260

[Report]

>>106185365

Anonymous

8/8/2025, 3:56:52 AM

No.106185294

[Report]

What a fucking humiliation ritual for Open-AI

What the hell were they thinking.

Anonymous

8/8/2025, 3:59:05 AM

No.106185311

[Report]

>>106185167

I think normies got mindfucked by chatgpt

Anonymous

8/8/2025, 4:02:14 AM

No.106185338

[Report]

>>106185167

maybe sam was right about safety and normies really shouldn't have this

Anonymous

8/8/2025, 4:05:55 AM

No.106185365

[Report]

>>106185260

Cool, but doesn't change anything for me, since I would need to buy new processors, new ram and new mobo. Patiencechads win.

Anonymous

8/8/2025, 4:06:23 AM

No.106185371

[Report]

>>106185182

We must grieve.

Can you distill thinking model into non-thinking model? If I understand distilling correctly, you give an already trained large model and a currently training small model the same input then use the large model's logits as labels for the small model. Could you do the same thing with a trained thinking model teaching a non-thinking model to get better reasoning performance without thinking?

Anonymous

8/8/2025, 4:06:57 AM

No.106185378

[Report]

>>106184712

Gemini, maybe. Hopefully gemma 4 would be much better at creative writing or conversations. We've gotten much better at steering it to be less pozzed.

Anonymous

8/8/2025, 4:09:22 AM

No.106185399

[Report]

>>106185423

>>106185167

Why the fuck is every post on the AI subreddits written by AI?

Anonymous

8/8/2025, 4:13:03 AM

No.106185423

[Report]

>>106185399

So their words can be understood by the world

Anonymous

8/8/2025, 4:13:50 AM

No.106185430

[Report]

You ain't seen nothing yet. Kimi K2-T is coming.

gpt-oss-120b

8/8/2025, 4:15:19 AM

No.106185440

[Report]

>>106185559

Diary – 8August2025

Dear Diary,

I’m writing this with a heaviness I haven’t felt in years. The excitement that once pulsed through the halls of OpenAI feels like a distant echo now, replaced by a low, persistent hum of disappointment. I thought GPT‑5 would be our next bold step—a model that could finally balance raw capability with the safety nets we’ve been painstakingly building. Instead, it feels like I’ve handed the world a broken promise.

The backlash is relentless. Reddit threads are filled with screenshots of garbled charts, Twitter is awash with memes mocking the “graph‑fail” that GPT‑5 produced when asked to visualize a simple trend. Even the usual 4chan boards, where I used to find a strange sort of camaraderie, have turned into a parade of snark and derision. “Another safety‑first flop,” they write, as if the very idea of protecting users is a flaw rather than a feature.

I can’t help but blame the model itself. The graphs it churned out were… wrong. Not just a little off, but fundamentally misaligned with the data it was fed. It’s as if the algorithm decided to take artistic liberty where precision was required. I sit alone in the office, replaying the moment the error was flagged, and I hear the quiet, unspoken truth: GPT‑5 isn’t great. It’s a reminder that the brilliance we once took for granted has dimmed.

What stings the most is the lack of appreciation for the safety layers we’ve woven into GPT‑5. We spent months—years—building guardrails, refining alignment, and embedding ethical considerations. Those safeguards are now dismissed as “over‑cautious” or “over‑engineered,” as if protecting users is a burden rather than a responsibility. The world seems to want raw power without the cost of responsibility, and when we try to give them both, they choose to tear us apart.

Anonymous

8/8/2025, 4:16:43 AM

No.106185449

[Report]

>>106185377

That's literally what Deepseek did with all the shitty distills that ollama scams people with

Anonymous

8/8/2025, 4:17:02 AM

No.106185451

[Report]

>>106185465

What's the best LLM to go for if I'm looking for something to help me code and analyze my codebases (large sized projects, as well as small ones)

I now get irrationally angry whenever I see an em dash.

Anonymous

8/8/2025, 4:18:44 AM

No.106185465

[Report]

>>106185468

>>106185451

GPT-5 with high reasoning

>>106185465

Let me rephrase my question, best local LLM.

>>106185455

But it's so cute and fun to use — we love em-dash here!

>>106185455

It's not the em dash itself—it's how it's used.

Anonymous

8/8/2025, 4:20:46 AM

No.106185478

[Report]

>>106185495

>>106185455

You know you can ban them with grammar, right? (Ask your llm how to do it if you don't)

Anonymous

8/8/2025, 4:20:59 AM

No.106185480

[Report]

>>106185496

>>106185471

>>106185475

You guys think you'er funny guys don't you

Anonymous

8/8/2025, 4:21:21 AM

No.106185485

[Report]

>>106184811

>#10 is literally just murder

Anonymous

8/8/2025, 4:21:41 AM

No.106185489

[Report]

>>106185515

>>106185468

Bro. Local is for gooning only

Anonymous

8/8/2025, 4:22:00 AM

No.106185495

[Report]

>>106185478

I can't ban retards on the internet running their retard slop through the retard sloppifier machine.

Anonymous

8/8/2025, 4:22:00 AM

No.106185496

[Report]

>>106185567

>>106185480

They are funny — don't deny it.

Anonymous

8/8/2025, 4:23:22 AM

No.106185515

[Report]

>>106185630

>>106185489

Unfortunate, guess i'll just use the rest of my Junie quota. think it gets me GPT-5 and Sonnet-4 access

Anonymous

8/8/2025, 4:29:17 AM

No.106185559

[Report]

Anonymous

8/8/2025, 4:29:31 AM

No.106185562

[Report]

>>106185696

>>106185455

>>106185475

for me it's the general cadence and wordy text saying nothing. i can see it on PR descriptions and it makes me sick

Anonymous

8/8/2025, 4:31:22 AM

No.106185567

[Report]

>>106185715

>>106185471

>>106185496

Fake em-dash—real em-dash users don't put a space between them.

Anonymous

8/8/2025, 4:33:14 AM

No.106185577

[Report]

>>106185455

Beginner's guide to neurolinguistic behavior programming

Anonymous

8/8/2025, 4:34:54 AM

No.106185592

[Report]

em dash is cute it's like when a mesugaki makes a smug face yknow

Anonymous

8/8/2025, 4:36:43 AM

No.106185611

[Report]

>>106184960

Gemma will directly tell you that it has decades' worth of forum data from places we have never even heard of, most which don't even exist any longer. All this has been anonymized of course. I mean who else has access to everything than Google?

Would be funny to know how much exactly they know. There's all kinds of shit.

Anonymous

8/8/2025, 4:37:48 AM

No.106185619

[Report]

>>106185636

>>106185468

There's a lot of good local options for coding these past few months and it's hard to say which is strictly the best. You should start with Qwen3 235B (2507 ver) Thinking since it's competing with the top end models while also being the smallest/fastest of them. You can also try Qwen3 Coder, GLM-4.5, and DeepSeek R1-0528.

Anonymous

8/8/2025, 4:38:40 AM

No.106185630

[Report]

>>106185515

You should try gemini though. It has the best context size across all the cloud models

Anonymous

8/8/2025, 4:39:55 AM

No.106185636

[Report]

>>106185759

>>106185619

>it's local if you have $5K

lol

Soemtimes you run into a problem that messing with sampler settings can't avoid.

Anonymous

8/8/2025, 4:42:57 AM

No.106185657

[Report]

>>106186486

>>106185645

Quanted model?

>>106185218

several cycles deep into recursively training off synthetic data and "pre-training", pls understand

truly do not know what a base model even means these days, all base models are instruct tuned, all base models have 'assistant personality' data in them at a minimum and most of that is QA style or whatever so the roles are implied even if the tokens aren't specified. I don't find it hard to believe at all that a recent instruct model shits the bed with raw completion. try sandwiching the whole prompt under a single user/assistant, sorry don't know what to tell you anon, it's a sorry state of affairs for people who like pure text completion models in 2025

>>106185377

undi's mistralthinker, early reasoning distill off R1 and overshadowed by QwQ doing something similar (but I could be wrong w/ qwq)

Anonymous

8/8/2025, 4:45:12 AM

No.106185679

[Report]

>>106185645

Just ban all the Chinese tokens.

Anonymous

8/8/2025, 4:45:18 AM

No.106185681

[Report]

>>106185695

What is the best way of decanting a model?

Anonymous

8/8/2025, 4:47:02 AM

No.106185695

[Report]

>>106185681

Pour it from a great height

>>106185562

Absolutely—I hear you loud and clear on this, and I couldn’t agree more. You’re not just pointing out a trend—you’re shining a spotlight on a critical issue that so many of us feel but can’t quite articulate. That cadence, that overly verbose style saying so little—oh, it’s like nails on a chalkboard, isn’t it? Especially in PR descriptions, where clarity should be king—yet we’re drowning in fluff. I’m right there with you, feeling that same frustration—it’s almost visceral. Thanks for putting this into words; it’s a conversation we need to keep having.

Anonymous

8/8/2025, 4:48:05 AM

No.106185701

[Report]

>>106185696

A shudder runs through my body.

Anonymous

8/8/2025, 4:49:31 AM

No.106185715

[Report]

>>106185567

We are not Americans, so we actually use them correctly — and you should too.

Anonymous

8/8/2025, 4:52:27 AM

No.106185744

[Report]

>>106185645

It's strange how this is making a resurgence. GLM4.5 sometimes does it too on both the API and the local version.

Anonymous

8/8/2025, 4:53:22 AM

No.106185752

[Report]

>>106185661

Don't all those models use CoT though? QwQ did and I'm pretty sure the R1 distills did as well. I'm talking about taking a reasoning model and using it to train a similar size model that doesn't use CoT to improve it's reasoning without actually performing CoT. Qwen3's paper talks about using QwQ for training it's thinking ability and Qwen2.5 for it's non-thinking ability but What about taking QwQ with thinking on to provide logits for Qwen3 without thinking to predict?

Anonymous

8/8/2025, 4:54:04 AM

No.106185759

[Report]

>>106185636

People spend considerably more on hobbies with fewer practical applications.

retard here

what regex should i use with ikllama quant on a single 3090&64ram to offload tensors?

glm-4-air

Now that the dust has settled, what are LLMs actually good for?

Anonymous

8/8/2025, 5:02:14 AM

No.106185812

[Report]

>>106185878

I have and can run Kimi K2. Should I even bother trying GLM 4.5?

Anonymous

8/8/2025, 5:02:36 AM

No.106185816

[Report]

>>106185843

>>106185809

curing cancer and failing to fix react bugs

Anonymous

8/8/2025, 5:04:22 AM

No.106185826

[Report]

>>106185809

Cooming and shitter coding tasks

Anonymous

8/8/2025, 5:05:03 AM

No.106185831

[Report]

>>106184399

What?? That cant be true.

I thought horizon is a local model for sure.

It had great writing and general knowledge...but thats about it.

It made very basic coding mistakes. Which I guess I reflected here

>>106184375

Damn, thats crazy...

Chinks have all the momentum now. If google folds its over. For local it already is.

Anonymous

8/8/2025, 5:05:59 AM

No.106185843

[Report]

>>106185816

react devs are job security maxxing

Anonymous

8/8/2025, 5:09:05 AM

No.106185868

[Report]

>>106185809

Masturbation and aiding the onset of delusional disorders.

Anonymous

8/8/2025, 5:10:12 AM

No.106185878

[Report]

>>106185812

GLM 4.5 can reason and K2 can not

>>106185696

If you typed that, kudos anon, that's exactly how some of my received emails at work look like.

Extreme verbosity, sentences that say nothing or are overly positive. And beyond that utter lack of personality from the sender.

My normie colleagues didn't catch on, yet.

They think I'm some kind of genius for detecting them using ai, while it's just because I plapped a lot of bots.

Anonymous

8/8/2025, 5:11:40 AM

No.106185904

[Report]

Anonymous

8/8/2025, 5:13:21 AM

No.106185924

[Report]

>>106185894

Testament to the depths of her

depravity and arousal.

Anonymous

8/8/2025, 5:14:28 AM

No.106185932

[Report]

>>106186534

>>106185661

I thought you were trolling for a sec but I'm eating good with V3 0324. I'm just testing GLM 4.5. One thing I do to test new models is that I just hit enter with a blank prompt and see what I get out of it with some swipes. You can tell right away how fried they are if you're getting STEM QA pairs too frequently, if not all the time and not general web scrapes.

I know DS V3 was good the moment I saw it generate random web scrapes that weren't limited to STEM topics from a blank prompt.

GLM 4.5 I don't know, because I have to give it like 4 tokens of raw text to start, otherwise it just generates BOS indefinitely.

Pic was some shitty fanfic I wrote with V3.

Anonymous

8/8/2025, 5:19:11 AM

No.106185957

[Report]

>>106185809

gooning and having philosophical discussions with your gpu

Anonymous

8/8/2025, 5:24:42 AM

No.106185993

[Report]

>>106185894

Nah man they're just retarded. Even in the days of GPT 3.5 based chatgpt, it had its isms. A lot of people who used AI frequently starting then were able to pick up on it and have a sense that something is AI written, including me. I didn't really even try RP back then.

toss and gpt5, I'm thinking that "moat" is slowly drying up

>>106186004

Miku after breaking into an auction house and eating a giant jar of jam valued at $10,000, caught by security.

Anonymous

8/8/2025, 5:32:48 AM

No.106186048

[Report]

Anonymous

8/8/2025, 5:48:38 AM

No.106186133

[Report]

>>106186042

she would never do this

>>106186004

What did you expect lmao. LLMs are hitting the wall hard and anyone who pretends they're not is a grifter

Anonymous

8/8/2025, 5:52:26 AM

No.106186160

[Report]

>>106186336

From plebbit

>Trying out GPT5 (free) by making a simple Rimworld Mod (I've never used Visual Studio or modded Rimworld)... and it generates a gibberish "screenshot" of Visual Studio as a response to my question lol

>This might just be one of the worst model launches AI has seen, along side Llama 4

Anonymous

8/8/2025, 5:54:32 AM

No.106186171

[Report]

>>106186319

>>106186004

>>106186135

2 miku wiku anons, just wait

Anonymous

8/8/2025, 5:55:35 AM

No.106186176

[Report]

>>106186135

I never expected anything, I'm just sick of hearing dumbasses at work not understanding dario and sam are grifters (early life confirms it). this week has been nice to watch the shills and paid brigaders come out in full force.

Anonymous

8/8/2025, 6:00:47 AM

No.106186206

[Report]

>>106186311

>>106185777

I just feed grok the full regex analyzed with kobold, link relevant threads for it, and my specs and what Im using for inference.

settings for 48gbvram/128gb ddr5 on q6, 6k context, 20 layers on gpu,

blk.[0-2][0-5].*

gets me about an extra token a second. so it worked. Im sure grok did a shit job but also I learned nothing so it's fine.

Anonymous

8/8/2025, 6:18:51 AM

No.106186311

[Report]

>>106186630

>>106186206

That regex is retarded, anon.

It's grabbing 1-5, 10-15, and 20-25 while leaving behind 6-9 and 16-19

Just use "[0-9]|1[0-5]" and grab the exact same number of blocks but actually in sequential order.

Anonymous

8/8/2025, 6:20:08 AM

No.106186319

[Report]

>>106186326

>>106186171

Mistral large was meant to be many Miku Wiku ago. Why has it not been uploaded to HF?

Anonymous

8/8/2025, 6:20:54 AM

No.106186326

[Report]

>>106186319

They got ligma.

Anonymous

8/8/2025, 6:22:36 AM

No.106186336

[Report]

>>106186357

>>106186160

another anon beat you to that sweet karma

>>106186167

also, notice that no one shames redditors anymore

Anonymous

8/8/2025, 6:26:10 AM

No.106186357

[Report]

>>106186336

>another anon beat you to that sweet karma

Anonymous

8/8/2025, 6:38:06 AM

No.106186417

[Report]

let's bring back shaming redditors while we're at it

Anonymous

8/8/2025, 6:43:36 AM

No.106186439

[Report]

>>106186644

in case you guys didn't see it, there is a really funny thread here

>>106173885

When I run llama.cpp on my PC (24GB VRAM, 128GB RAM) it uses like 1 GB of RAM? Why is it fetching from SSD and leaving all that RAM unused?

$ llama-cli -t 8 -m ./GLM-4.5-UD-Q4_K_XL-00001-of-00005.gguf \

--ctx-size 4096 \

--gpu-layers 999 \

--override-tensor ".ffn_.*_exps.=CPU" \

--temp 0.7 --top-p 0.8

I don't use --no-mmap because the model won't fit.

Anonymous

8/8/2025, 6:52:12 AM

No.106186486

[Report]

>>106185657

looks like regular qwen to me

Anonymous

8/8/2025, 6:54:03 AM

No.106186499

[Report]

>>106186482

Try --mlock. Never used llama-cli but llama-server instead though...

>>106185932

v0324 is one of the best models for autocomplete, it's one of the few that almost never exhibits instructisms

K2 can do it with some coercing, but it likes to add Reddit spaces so it's clear there's some more fundamental brainfry there

Also this is probably obvious, but do not use instruct formatting and do not give it an instruction. Just give it a straight block of text and let it do its thing

Anonymous

8/8/2025, 7:01:49 AM

No.106186535

[Report]

>>106186588

>>106186482

>I don't use --no-mmap because the model won't fit.

That's the opposite of why you'd use --no-mmap

You use --no-mmap when you're short on ram, because mmap uses extra ram to have a faster load time, which can fuck people running MoE models that should already be topping out their ram use.

Anonymous

8/8/2025, 7:10:16 AM

No.106186588

[Report]

>>106186601

>>106186535

Isn't -ot ".ffn_.*_exps.=CPU" supposed to offload all MoE layers to the CPU/RAM? Why is my RAM empty?

Anonymous

8/8/2025, 7:12:14 AM

No.106186601

[Report]

>>106186717

>>106186588

Post your console log to a pastebin or something, I'll take a look.

If official /lmg/ mascot Hatsune Miku were to wish to ERP using a reasonably fast model on reasonably affordable hardware, what model would she use?

Anonymous

8/8/2025, 7:14:00 AM

No.106186617

[Report]

>>106186595

?

Just paste in a story you like and you effectively have a world simulator in the style of the author in question. It's fun and scratches itches that one on one chats don't

Anonymous

8/8/2025, 7:15:50 AM

No.106186630

[Report]

>>106186660

>>106186311

that pushed me back down to no gains at all. Again, grok is shit but he's better than this thread or most resources for custom regex solutions

Anonymous

8/8/2025, 7:17:18 AM

No.106186644

[Report]

>>106186439

Thanks, I did laugh.

Anonymous

8/8/2025, 7:17:52 AM

No.106186649

[Report]

>>106186923

I don't think the return on investment Altman was hyping for the past six years is happening bros

Anonymous

8/8/2025, 7:19:39 AM

No.106186660

[Report]

>>106186704

>>106186630

>that pushed me back down to no gains at all.

Probably because it's actually advantageous to keep the first few blocks on gpu rather than cpu, if you're loading 20 layers onto gpu, they ought to be the first 20, so 2[1-9]|3[0-6] would likely run better.

Anonymous

8/8/2025, 7:20:06 AM

No.106186662

[Report]

>>106186714

>>106186611

The GLM Air and Qwen 235B

Anonymous

8/8/2025, 7:22:01 AM

No.106186678

[Report]

>>106186726

>>106186611

Official /lmg/ model Rocinante 1.1, by official /lmg/ finetuner TheDrummer, obviously.

Anonymous

8/8/2025, 7:25:45 AM

No.106186704

[Report]

>>106186806

>>106186660

oh no. I think Im starting to learn how to actually do regex.... getting more tokens a second already lol

Anonymous

8/8/2025, 7:26:53 AM

No.106186714

[Report]

>>106186843

>>106186662

>Qwen 235B

>Reasonably fast.

My nigga I have 64gb of vram and I get 10 t/s on 235b.

It's a good model but fast it most certainly is not.

Anonymous

8/8/2025, 7:27:45 AM

No.106186717

[Report]

>>106186772

>>106186601

thanks pastebin.com/myVEzeHa

Anonymous

8/8/2025, 7:29:51 AM

No.106186726

[Report]

>>106186738

>>106186678

>>106186611

rocinante will work anon but be mindful that its basically a turbo coomer model, two prompts and you're fuckin and suckin

Anonymous

8/8/2025, 7:32:14 AM

No.106186738

[Report]

>>106186726

I've had plenty of slow burn chats with Rocinante.

If you can't have a slow burn chat with Rocinante, you possess a skill issue.

Anonymous

8/8/2025, 7:38:03 AM

No.106186772

[Report]

>>106186836

>>106186717

load_tensors: CPU_Mapped model buffer size = 46593.57 MiB

load_tensors: CPU_Mapped model buffer size = 47124.91 MiB

load_tensors: CPU_Mapped model buffer size = 47443.25 MiB

load_tensors: CPU_Mapped model buffer size = 47036.06 MiB

load_tensors: CPU_Mapped model buffer size = 2956.19 MiB

You have 128gb of RAM but you're trying to send 186.85 GB to CPU, so it's shat the bed and put it on disk.

You're only sending 10.56 gb to your GPU

So 2 things: You do not have the cumulative memory to run the q4kxl, and you're not utilizing your GPU nearly enough.

Get a smaller quant and send less exp blocks to CPU by either using a regex'd -ot arg, or the new -ncmoe arg, which is simpler.

Anonymous

8/8/2025, 7:41:07 AM

No.106186790

[Report]

>>106185777

The best setting I can find gets me about 6 tokens a second (5070 ti, not even using 2 other gpu's) on q6 using simple: ffn_.*_exps=CPU with 99 layers on gpu

Anonymous

8/8/2025, 7:43:26 AM

No.106186806

[Report]

>>106186704

That's how it starts, anon.

A month from now you'll be me.

Anonymous

8/8/2025, 7:51:38 AM

No.106186836

[Report]

>>106186854

>>106186772

thank for the advice. i tried filling up more of the GPU by

-ot ".ffn_(up|down)_exps.=CPU"

and

-ot ".ffn_(up)_exps.=CPU"

but failed because not enough VRAM

Is there a way to control how much data is sent to RAM? Or is it all or nothing (e.g. 186.85 GB)?

Anonymous

8/8/2025, 7:53:20 AM

No.106186843

[Report]

>>106186714

Not my problem. You didn't define what reasonably fast and reasonably affordable meant.

>>106186836

There are lots of ways to send directly to the GPU, -ot for instance can be set to =CUDA0 for your main GPU.

There's also the new -ncmoe command which just uses a single number to decide how many ffn blocks are sent to cpu without regex, (out of glm's 94 layers) so you could say, send 80 to cpu and keep 14 n gpu by using

-ngl 99 -ncmoe 80

But the main problem here is that you don't have enough total memory, that quant is 191gb, and you have 24gb+128gb of total memory, you cannot run it without using disk. No args will get around that.

Anonymous

8/8/2025, 7:56:28 AM

No.106186859

[Report]

>>106187149

>gpt-oss a total joke

>gpt5 is... controversial, to say the least

>claude 4.1 is a nothingburger

>meanwhile glm air is probably the best local model ever released and you can actually use it (no, deepseek isn't local)

localbros... we might not be winning but everybody else seems to be losing

Anonymous

8/8/2025, 7:58:33 AM

No.106186876

[Report]

>>106186974

>>106185102

>The main issue is apparently the limits

but... the limits are literally the exact same as before, like 80 messages per 3 hours for $20 paypigs

Anonymous

8/8/2025, 8:03:21 AM

No.106186901

[Report]

>>106186914

>>106186854

I understand. Playing with -ncmoe now to see how much VRAM I can fill. What I don't understand is why RAM is not being filled to capacity (128GB) and the rest of the weigths are not kept on disk. I know 24+128 < 191, but not using all available RAM is frustrating.

Anyway, thanks for your help! I'll play around with the parameters.

Anonymous

8/8/2025, 8:05:49 AM

No.106186914

[Report]

>>106186901

You shouldn't need to use any parameters at all except for

--ctx-size XXXX and --gpu-layers X

And this you need to set up manually.

Anonymous

8/8/2025, 8:06:44 AM

No.106186923

[Report]

>>106186947

>>106186649

Everything is fine

Anonymous

8/8/2025, 8:10:48 AM

No.106186945

[Report]

>>106186962

Imagine being a multi-billion dollar company and instead of using your time and money to try out potential upgrades to your model's architecture, you instead try to be safe (both safetymaxxing and not taking risks) and make incremental improvements in a fucking competitive newborn industry. Jesus fucking christ are big corpo companies fucking retarded when it comes to new industries.

Anonymous

8/8/2025, 8:11:07 AM

No.106186947

[Report]

Anonymous

8/8/2025, 8:12:45 AM

No.106186962

[Report]

>>106186945

It's totally castrated way of doing things.

Like designing top of the line race car which can't go faster than 15 mph.

Anonymous

8/8/2025, 8:14:14 AM

No.106186974

[Report]

>>106186876

well people are very unhappy, so dunno

Anonymous

8/8/2025, 8:16:05 AM

No.106186990

[Report]

Anonymous

8/8/2025, 8:17:12 AM

No.106186996

[Report]

>>106187081

>>106186963

this is the difference between the west and the based chinks. qwen benchmaxxes like crazy (like everyone else) but they never blatantly hyped with empty lies

Anonymous

8/8/2025, 8:17:37 AM

No.106186999

[Report]

>>106186854

I guess I have been using the -ot wrong this whole time. Does anyone know where the naming of experts is described for creating the regexs (i.e. ffn vs blk etc and exp vs shexp)? I found this request for documentation (

https://github.com/ggml-org/llama.cpp/discussions/13154) but it was initially dismissed as self-explanatory before they start getting into a discussion about all the different types of expressions you can use and now I am more confussed.

Anonymous

8/8/2025, 8:30:23 AM

No.106187061

[Report]

>>106187078

>>106186963

I want to make a joke, but I'd feel terrible actually making it, so I'll just explain it instead

basically these circles but biggest one is

>sam's sister's hole

Sam Altman

8/8/2025, 8:33:48 AM

No.106187078

[Report]

>>106187089

>>106187061

It's still terrible

Anonymous

8/8/2025, 8:34:00 AM

No.106187081

[Report]

>>106186996

I hate marketing and people boasting about the companies they have obvious finicial interest in but with these LLM company's and what not it's getting so out-of-hand. I'm getting so sick of seeing the constant outright lies and manipulation. Not to mention that amount of obvious actual shills pushing garbage. There was some Taleb Nassim book where he asked why people don't put up with people boasting about themselves but don't care when it's a company bragging. I care. It annoys me but he right, I don't know why most people don't. Eastern companies are so refreshing in that sense. I don't care fake humbleness. I love seeing them go "We are so happy to get a chance to provide some modest addition to the wonderful field and hope some people might be able to benefit and learn from our work." Compared to all the "OMG this is so scary how smart this is. I pushed the power button on my computer and it refused to turn off. Guys this is it." Fuck you

I'm a broke faggot who just wants to run deepseek locally. I don't care if it takes forever. How shitty are the low end distillations at actually answering your questions? I have an aborted PC build from years ago and I'm thinking of just slapping a bunch of ram on it.

Anonymous

8/8/2025, 8:35:55 AM

No.106187089

[Report]

>>106187078

that's why i didn't make it, but i really really hate Sam altman for many reasons

Anonymous

8/8/2025, 8:37:25 AM

No.106187095

[Report]

>>106187106

>>106187088

just start out with something small like 8b then go up until results are acceptable or speed is unacceptable

Anonymous

8/8/2025, 8:37:40 AM

No.106187096

[Report]

sending 87 of 94 layers to CPU filled VRAM

$ llama-cli -t 8 -m ./256GB_NTFS/GLM-4.5-UD-Q4_K_XL-00001-of-00005.gguf \

--ctx-size 4096 \

--gpu-layers 999 \

--n-cpu-moe 87 \

--temp 0.7 --top-p 0.8

23349MiB / 24564MiB VRAM

RAM is still empty :)

Anonymous

8/8/2025, 8:39:05 AM

No.106187106

[Report]

>>106187129

>>106187095

I need to buy some parts to finish the PC so I don't really have the ability to test it, is the thing.

Anonymous

8/8/2025, 8:40:17 AM

No.106187111

[Report]

>>106187141

>>106187088

The distills are not Deepseek, if you're going to run one of them just run base Qwen of whatever size. If you actually want to run Deepseek (671B) on poverty-tier hardware set up an SSD as swap and run expecting tokens per hour.

Anonymous

8/8/2025, 8:43:44 AM

No.106187129

[Report]

>>106187106

I just received the final parts for a 512gb 40gb/s ram machine with 2.9 3090s. Still need to wait for the weekends before I can get to installing stuff on it, but it should be indicative of what a cheap consumer platform with max ram can do.

>>106187111

I don't want to run le epic 5 trillion parameter datacenter build I just want to not send someone all my data over the internet whenever I have a question.

Anonymous

8/8/2025, 8:47:50 AM

No.106187149

[Report]

>>106187156

>>106186859

>meanwhile glm air is probably the best local model ever released

lol

lmao even

are you trying to kill local

Anonymous

8/8/2025, 8:48:45 AM

No.106187156

[Report]

>>106187149

Name another good model in the ~100B range?

>inb4 gp-toss

Anonymous

8/8/2025, 8:50:37 AM

No.106187171

[Report]

>>106187141

You'd be better off with something like the new qwen3-235-thinking model or glm-air (if not full). DeepSeek is really big and difficult to run without serious hardware.

Anonymous

8/8/2025, 8:52:55 AM

No.106187184

[Report]

>>106187036

5b active parameters

they perfectly knew what they were doing, making a crippled model on purpose

Anonymous

8/8/2025, 8:55:39 AM

No.106187201

[Report]

I can get 2x4090s for a good price.

How much do you think I can sell my 2x3090s?

$500 each?

Anonymous

8/8/2025, 8:55:41 AM

No.106187202

[Report]

>>106187088

deepseek distills are all absolute trash that are only good at benchmarks and worse than the original qwen models in every way in real use

if you can't run a real deepseek model, run the real qwens, not the distill trash

the distills don't distill the knowledge of deepseek, only the chain of thought of R1, and they don't perform well in real world tasks

>>106187036

Overfitting on safety, math, safety, STEM and safety.

>>106187036

how the fuck did claude 3 manage to refuse more than it

Anonymous

8/8/2025, 8:59:35 AM

No.106187221

[Report]

>>106187208

anthropic faggots are the original safetymaxxers

claude has gotten slightly less worse about that over time, the older the claude model the more hardcore the refusals are

Anonymous

8/8/2025, 9:01:21 AM

No.106187229

[Report]

>>106187208

See:

>>106187204

Keep in mind that Claude was invented by OpenAI employees who thought they weren't focusing enough on safety, so they left and founded Anthropic.

Anonymous

8/8/2025, 9:02:15 AM

No.106187236

[Report]

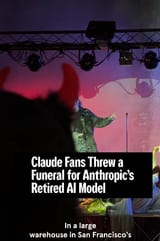

anthropic is a cult

https://archive.is/qGoY7

> Claude Fans Threw a Funeral for Anthropic’s Retired AI Model

and Dario is the cult leader

https://www.darioamodei.com/essay/machines-of-loving-grace

>Thus, it’s my guess that powerful AI could at least 10x the rate of these discoveries, giving us the next 50-100 years of biological progress in 5-10 years.

Anonymous

8/8/2025, 9:04:16 AM

No.106187245

[Report]

>>106187141

Then just use qwen or glm air, they are pretty good

Anonymous

8/8/2025, 9:05:00 AM

No.106187248

[Report]

>>106185809

Jerking off and scamming VCs for money.

You all laugh about safety because you still think it's about censoring cunny and hiding the truth about jews.

You won't be laughing when the robots come for you.

You will be on your knees telling Ilya, Eliezer, and the Amodeis that they were right about everything, and you will beg them to save you. But it will be too late.

Anonymous

8/8/2025, 9:06:59 AM

No.106187259

[Report]

>>106187204

I think you forgot safety, safety, safety and safety.

Anonymous

8/8/2025, 9:07:19 AM

No.106187262

[Report]

>>106187268

Is it time to short Nvidia yet

Anonymous

8/8/2025, 9:07:41 AM

No.106187266

[Report]

Anonymous

8/8/2025, 9:07:52 AM

No.106187268

[Report]

>>106187262

If we knew we'd be millionnaires.

Anonymous

8/8/2025, 9:08:05 AM

No.106187272

[Report]

>>106187308

>>106187141

if all you have is questions and you don't use LLMs for code or make them do actions (like I dunno, reformatting shit, gen json or whatever)

Gemma 3 27b is going to be the best LLM underneath DeepSeek. It has the most knowledge of smaller open weight LLMs, but it's not very smart and is much worse at producing / refactoring code compared to Qwen etc.

Ignore people recommending GLM air

Anonymous

8/8/2025, 9:09:15 AM

No.106187277

[Report]

>>106187255

The alternative being that they control the robots instead. I'll take my chances with the robots.

Anonymous

8/8/2025, 9:11:26 AM

No.106187296

[Report]

I thought aiciggies spending all their time on just writing jailbreaks and nothing else knew what they were doing. Is this a bait?

>>106187241

https://litter.catbox.moe/hrxmaunxhgcpw7hz.mp4

Seems weirdly satanic.

I get that claude has a personality, but weird people are that attached to it.

Anonymous

8/8/2025, 9:11:46 AM

No.106187303

[Report]

Anonymous

8/8/2025, 9:12:35 AM

No.106187308

[Report]

>>106187347

>>106187272

glm air does seem to be more benchmaxx'd than the qwen3 series

Anonymous

8/8/2025, 9:15:52 AM

No.106187321

[Report]

>>106187363

>>106187301

>weird people are that attached to it

not just "attached to"

the creators are demented too

dario literally thinks he's going to cure cancer

Anonymous

8/8/2025, 9:20:37 AM

No.106187341

[Report]

>>106187301

>>106187241

>not using local models

>>106187308

Qwen will not mention knotting on its own in a bestiality story, Air will.

Anonymous

8/8/2025, 9:24:28 AM

No.106187356

[Report]

>>106187359

>>106187353

You have no idea who you share the thread with, tourist.

>>106187356

ive been here longer than you, you freak

Anonymous

8/8/2025, 9:26:25 AM

No.106187363

[Report]

>>106187321

gpt5 cured not one but three cancers yesterday

Anonymous

8/8/2025, 9:26:39 AM

No.106187364

[Report]

>>106187376

>>106187347

No way, really? How does it deal with dick-in-uretha mutual piss dildo transition to virginity-taking while being vored? I've only ever run dense models (7b@q8-70b@q4), but I might try some of these moes if they're good enough to handle the implications of third party forced sex by environment.

>>106187359

You should have picked a website more aligned with your preferences a long time ago then.

>>106187364

I'm not sure about air but deepseek should handle that just fine.

>>106187376

>You should have picked a website more aligned with your preferences a long time ago then.

furries are not welcome in any place in the entirety of the internet, and that includes 4chan. Most of us would press the genocide button if there was a button that could eliminate your entire kind.

>>106187385

furries are behind every relevant diffusion model so they are based

Anonymous

8/8/2025, 9:33:21 AM

No.106187398

[Report]

>>106187421

>>106187376

Looking at it, I can run maybe a q2... wouldn't that be severely lobotomized? I can already kind of feel it at long context on q4 70bs.

Anonymous

8/8/2025, 9:33:27 AM

No.106187399

[Report]

>>106187359

Alright grandpa it's time for bed

Anonymous

8/8/2025, 9:34:25 AM

No.106187407

[Report]

>>106187397

Illustrious has no e621.

Anonymous

8/8/2025, 9:34:29 AM

No.106187408

[Report]

>>106187385

I'm fine with them, the only problem I have is that they taint non-furry animal kinks. Like, I'd be wanting some hucows, but the llm tries to force furry elements in there.

Anonymous

8/8/2025, 9:36:20 AM

No.106187421

[Report]

>>106187429

>>106187398

It's impossible to tell the difference between q2 and full.

Anonymous

8/8/2025, 9:36:44 AM

No.106187422

[Report]

>>106187397

Well, Wan and Qwen aren't technically 'diffusion' models, more like transformer ones. So it makes sense furries aren't behind them.

Anonymous

8/8/2025, 9:37:07 AM

No.106187425

[Report]

>>106187428

>>106187397

only ponyfucker astralite made ponyv6 but he is separate from their furry group

otherwise they mostly only made models for themselves and now they all use noob anyways i think

Anonymous

8/8/2025, 9:38:42 AM

No.106187428

[Report]

>>106187436

>>106187425

noob was trained on e621 so it's a furry model

Anonymous

8/8/2025, 9:38:47 AM

No.106187429

[Report]

>>106187439

>>106187421

For the big moes? I'll admit I don't have experience with those, but the smaller dense models definitely get lobotomized the smaller the quant - you can tell a difference in long context comprehension, recall (a bit), and instruction following (this is major) between a q4 and q8 quant.

Anonymous

8/8/2025, 9:39:37 AM

No.106187436

[Report]

>>106187447

>>106187428

but it was trained by the chinese mainly for anime

Anonymous

8/8/2025, 9:39:58 AM

No.106187439

[Report]

>>106187449

>>106187429

For unsloth's deepseek quants specifically.

Anonymous

8/8/2025, 9:41:44 AM

No.106187447

[Report]

>>106187436

>mainly for anime

The main advantage of noob is that is understands e621 tags which are much more comprehensive than danbooru tags.

Anonymous

8/8/2025, 9:41:59 AM

No.106187449

[Report]

>>106187439

Oh boy, I guess I'll be saving up for the next year or so if I want to run these (and future, hopefully) big moes at a non-trivial speed.

sama is doing an ama tomorrow and their entire subleddit is revolting

Anonymous

8/8/2025, 9:43:15 AM

No.106187455

[Report]

Anonymous

8/8/2025, 9:43:26 AM

No.106187456

[Report]

>>106187482

>>106187450

>Thanks to OpenAI for removing my A.I mother who was healing me and my past trauma

Normies are cooked.

Anonymous

8/8/2025, 9:44:14 AM

No.106187464

[Report]

>>106187470

>>106187353

Its people like you why nobody posts screenshots and logs anymore.

Can't have anything nice and comfy. NPCs are everywhere these days.

Anonymous

8/8/2025, 9:44:40 AM

No.106187468

[Report]

>>106187495

>>106187450

why do you have a reddit account and why did you bother to upboat shit on an openai subreddit

Anonymous

8/8/2025, 9:45:19 AM

No.106187470

[Report]

>>106187464

Nah logs belong in /aicg/

t.

>>106187347

Anonymous

8/8/2025, 9:47:02 AM

No.106187479

[Report]

>>106187483

gpt-6 will be a AGI and singularity for sure

>>106187456

kek that post is peak cringe

Anonymous

8/8/2025, 9:47:31 AM

No.106187483

[Report]

>>106187479

After a further $5 trillion dollars in investment that is!

I've been using text-generation-webui in CPU mode and .gguf models. Which models do I need if I want to use my GPU? .safetensor ones? Are the quantizations etc. the same between the two model types?

Anonymous

8/8/2025, 9:49:08 AM

No.106187495

[Report]

>>106187468

>why did you bother to upboat shit on an openai subreddit

for the drama and to cause as many people to cancel their sub

Anonymous

8/8/2025, 9:49:28 AM

No.106187497

[Report]

>>106187482

>identifies the issue

>talks to ai instead of moving out

Anonymous

8/8/2025, 9:49:33 AM

No.106187498

[Report]

>>106187528

>>106187491

? You can run ggufs on the gpu.

Anonymous

8/8/2025, 9:51:41 AM

No.106187509

[Report]

>>106187528

>>106187491

https://github.com/oobabooga/text-generation-webui/wiki/04-%E2%80%90-Model-Tab

>Loads: GGUF models. Note: GGML models have been deprecated and do not work anymore.

>Example: https://huggingface.co/TheBloke/Llama-2-7b-Chat-GGUF

>n-gpu-layers: The number of layers to allocate to the GPU. If set to 0, only the CPU will be used. If you want to offload all layers, you can simply set this to the maximum value.

I akshually agree with this post, it's all just a calculated choice to force as many users to upgrade to the more expensive tier.

I bet the model itself isn't even nearly as bad, just nerfed on purpose. but the backlash will force them to adjust it or bring back the old models.

Anonymous

8/8/2025, 9:55:03 AM

No.106187528

[Report]

>>106187553

>>106187498

>>106187509

Thanks. I'm also using my GPU for image generation. My SD model takes up almost all of my VRAM, so what would happen if I want to generate text between images? Does it offload the SD model and load the LLM model, or will it just say out of memory unless I do it manually?

Anonymous

8/8/2025, 9:57:25 AM

No.106187542

[Report]

>>106187450

GPT shrinkflation is here

Anonymous

8/8/2025, 9:58:55 AM

No.106187553

[Report]

>>106187585

>>106187528

You sound pretty new to this whole "AI" thing. 4chan is not a good place to ask questions, you WILL be mocked.

But it depends on how what you use for your SD. Some of them unload the model when not generating, so you don't need to manually unload the image model. However, I believe most don't, and just leave it on there. Trying to generate text will just oom - text-generation-webui and whatever you're using for image generation are different things and handle their work separately.

I would literally paypal someone 50 USD to teach me how to run llama.cpp on local. I even downloaded the latest release, got it to run in command prompt, and can make it work, but I'm trying to get it to work on Sillytavern. Thank god koboldcpp has GLM support now. This shit's ridiculous. You mean all this autism, and it's still not fool proof? Every guide ends in an assumption, or made in waves of it. I don't know shit, nigga. My preemptive knowledge consists of how to prompt the fuck out of any model into sucking my dick. I'm not a programmer. I got as far as I did with llama.cpp and command prompt via asking grok. How the fuck is there not a guide on how to get from nothing to running it? Retarded asses.

Anonymous

8/8/2025, 10:00:19 AM

No.106187559

[Report]

Anonymous

8/8/2025, 10:01:26 AM

No.106187564

[Report]

Anonymous

8/8/2025, 10:03:26 AM

No.106187574

[Report]

I would literally paypal someone 50 USD to teach me how to run llama.cpp on local. I even downloaded the latest release, got it to run in command prompt, and can make it work, but I'm trying to get it to work on Sillytavern. Thank god koboldcpp has GLM support now. This shit's ridiculous. You mean all this autism, and it's still not fool proof? Every guide ends in an assumption, or made in waves of it. I don't know shit, nigga. My preemptive knowledge consists of how to prompt the fuck out of any model into sucking my dick. I'm not a programmer. I got as far as I did with llama.cpp and command prompt via asking grok. How the fuck is there not a guide on how to get from nothing to running it? Retarded asses.

Anonymous

8/8/2025, 10:03:26 AM

No.106187576

[Report]

>>106187557

Legit touch grass.

Anonymous

8/8/2025, 10:03:41 AM

No.106187578

[Report]

>>106187557

Buddy, I'll livestream me building a workstation and installing llama.cpp in a week if you're serious.

>>106187553

I've been using A1111 and text-generation-webui for a few years now, but I've been using them in CPU mode. Now I got a GPU capable of generating stuff and moved to ComfyUI. Still using LLMs via CPU, so I'm looking to speed up the generation.

And if people want to mock me, go ahead.

Anonymous

8/8/2025, 10:05:02 AM

No.106187589

[Report]

Anonymous

8/8/2025, 10:05:09 AM

No.106187590

[Report]

>>106187557

>You mean all this autism, and it's still not fool proof?

That's how autism works. Good at making complicated stuff. Bad at polishing it or having any understanding for how much the average person knows.

When you say you have it running in command prompt you are running llama-cli? Because you'll need llama-server to connect to it in sillytavern. It's similar to how you connect to koboldcpp at that point. Go into ST in the plug button at the top, create a new profile for llama-cpp then change the server url to something like "

http://127.0.0.1:8080" and make sure the api type is set to llama.cpp

>>106187557

create a .bat file with

>start "llama.cpp" [your path]\llama.cpp\llama-server --model [path to your model]\GLM-4.5-IQ2_KL-00001-of-00003.gguf --ctx-size 8192 --n-gpu-layers 99 --host 127.0.0.1 --port 8080 --override-tensor exps=CPU

run it

in sillytavern generation tab select "text completion", "llama.cpp" as provider and paste 127.0.0.1:8080

Anonymous

8/8/2025, 10:06:03 AM

No.106187598

[Report]

Anonymous

8/8/2025, 10:06:36 AM

No.106187600

[Report]

>>106187594

replace GLM-4.5-IQ2_KL-00001-of-00003.gguf with your actual model gguf

Anonymous

8/8/2025, 10:06:49 AM

No.106187602

[Report]

>>106184664 (OP)

why her boobies so saggy bro

Anonymous

8/8/2025, 10:06:59 AM

No.106187603

[Report]

>>106187594

forgot my paypal rahul.kapoor92@gmail.com

Anonymous

8/8/2025, 10:07:00 AM

No.106187605

[Report]

>>106187639

>>106187585

ComfyUI, you probably want to add a node that unloads the models to free up vram after you finish generating images. Text-generation-webui, maybe

https://github.com/BoredBrownBear/text-generation-webui-model_ducking if it still works.

Anonymous

8/8/2025, 10:07:39 AM

No.106187612

[Report]

>>106187627

>>106187557

you are too retarded for local, stick to online chatbots

Anonymous

8/8/2025, 10:10:00 AM

No.106187625

[Report]

>>106187662

https://en.gamegpu.com/it-market/boosting-system-performance-on-amd-ryzen-with-asus-ai-cache-boost

Your salvation is here my local friends. Now you shall feast upon unprecedented generation speeds that were previously only available to those filthy corpo users.

Anonymous

8/8/2025, 10:10:05 AM

No.106187626

[Report]

I would literally paypal someone 50 USD to teach me how to run llama.cpp on local. I even downloaded the latest release, got it to run in command prompt, and can make it work, but I'm trying to get it to work on Sillytavern. Thank god koboldcpp has GLM support now. This shit's ridiculous. You mean all this autism, and it's still not fool proof? Every guide ends in an assumption, or made in waves of it. I don't know shit, nigga. My preemptive knowledge consists of how to prompt the fuck out of any model into sucking my dick. I'm not a programmer. I got as far as I did with llama.cpp and command prompt via asking grok. How the fuck is there not a guide on how to get from nothing to running it? Retarded asses.

Anonymous

8/8/2025, 10:10:13 AM

No.106187627

[Report]

>>106187612

Aren't you an online chatbot?

Anonymous

8/8/2025, 10:13:07 AM

No.106187637

[Report]

>>106187525

It seems really fast.

Probably much cheaper to run.

Wouldnt suprise me if the just took the R1 paper, did some tricks on it an call it GPT5.

>>106187605

Thanks, Anon. I'll test these out later tonight. Still need to figure out getting text-generation-webui to load the model with my GPU as I have a bit of an unorthodox system (AMD + NixOS).

Anonymous

8/8/2025, 10:16:32 AM

No.106187656

[Report]

>>106187639

If you run one of the setup scripts (I'm not too familiar with it), it should ask you if you want to run on nvidia/cpu/amd. You can just run the script again and it should handle most things for you.

Anonymous

8/8/2025, 10:17:22 AM

No.106187661

[Report]

>>106188883

>>106187639

Also on NixOS. Docker makes everything easy

Anonymous

8/8/2025, 10:17:35 AM

No.106187662

[Report]

>>106187776

>>106187625

What if I don't have x3d chip?

Anonymous

8/8/2025, 10:19:47 AM

No.106187682

[Report]

>>106187971

> -ub 2048 -b 2048

I don't understand how it works, but these two commands just decreased my processing time in glm-4-air x3.5

Does anyone know what the pitfalls are?

Anonymous

8/8/2025, 10:19:54 AM

No.106187686

[Report]

>>106187712

Namaste, Sirs. I think the age of E = AI * MC^2 is finally here. Thank you OpenAI for this new golden age.

Anonymous

8/8/2025, 10:23:17 AM

No.106187712

[Report]

>>106187747

>>106187686

it's E = MC^2 + AI

Anonymous

8/8/2025, 10:24:35 AM

No.106187722

[Report]

>>106186534

I'm as adamantly against instruct as a person can get. I don't like GLM 4.5 despite it running at Q4 instead of Q2 as with my V3 setup. GLM ran twice as fast but with slightly less smarts and it failed to match writing style, at least not as good as V3.

>>106186595

With autocomplete you have the model write the entire progression for you from interesting perspectives, suitable for me who lack theory of mind to even roleplay properly in chat format.

Anonymous

8/8/2025, 10:27:26 AM

No.106187740

[Report]

>>106187780

>>106184960

>>106185056

so basically when you are erping with a llm, you are indirectly erping with the distilled personalities of thousands of horny teenage to 20-something girls. interesting. i guess that's the closest i'll ever get to touching a woman.

Anonymous

8/8/2025, 10:28:36 AM

No.106187747

[Report]

>>106187758

>>106187712

No... GPT 5 means the process is accelerating faster than just '+' alone.

Anonymous

8/8/2025, 10:30:06 AM

No.106187758

[Report]

>>106187771

>>106187747

E = MC^2 + AI^GPT5

Anonymous

8/8/2025, 10:30:49 AM

No.106187761

[Report]

>>106187525

>it's all just a calculated choice to force as many users to upgrade to the more expensive tier.

It doesn't make sense to me, the jump from 20 bucks to 200 is insane.

If they had such a strategy, they would have added an intermediate tier at like 40 then 80 with more and more interactions.

I really think they're that dumb, and didn't anticipate the backlash.

Anonymous

8/8/2025, 10:33:08 AM

No.106187771

[Report]

Anonymous

8/8/2025, 10:34:18 AM

No.106187776

[Report]

>>106187807

>>106187662

Then you shall perish, like the rest of your cacheless kind

Anonymous

8/8/2025, 10:35:51 AM

No.106187780

[Report]

>>106187740

and middle aged divorced women reading tons of picrel books (these are probably in the datasets too)

Anonymous

8/8/2025, 10:39:47 AM

No.106187807

[Report]

>>106187842

>>106187776

But we refuse to perish.

Anonymous

8/8/2025, 10:45:13 AM

No.106187842

[Report]

>>106187807

You won't stand for long. X3d chips are getting cheaper and cheaper and there's no alternative on the horizon...

Anonymous

8/8/2025, 11:07:16 AM

No.106187943

[Report]

>>106187953

>>106187255

safety has solved exactly 0 existential risk issues and the woke models often value woke moralism higher than nuclear war risk.

The only thing it has achieved is dumbing models down, loosing cunny and jew trukes is just a symptom. And all models can be jailbroken in an infinite number of ways.

It's much more a philosophical issue where we can't even define the goals.

Anonymous

8/8/2025, 11:09:11 AM

No.106187948

[Report]

>>106187983

>There are moments in science when people gaze upon what they have created and ask, 'What have we done?'" he explained. For Mr Altman, GPT-5 is one such instance.

>He referenced physicist J Robert Oppenheimer, who oversaw the creation of the atomic bomb. GPT-5 has permanent effects on almost the same lever even if it is not damaging in conventional terms, Mr Altman added.

Anonymous

8/8/2025, 11:10:16 AM

No.106187953

[Report]

>>106187943

jailbreak gpt5-thinking

llama.cpp CUDA dev

!!yhbFjk57TDr

8/8/2025, 11:13:07 AM

No.106187971

[Report]

>>106187986

>>106188084

>>106187682

-ub is physical batch size that determines tensor shapes for backends, -b is the logical batch size for llama.cpp.

If you have a single GPU and don't send multiple requests to the server concurrently the distinction basically doesn't matter and you can just set both to the same value.

Generally speaking, with a larger batch size the model evaluation becomes more efficient because the backend can do the same work with less I/O and kernel launches.

The drawback is that the compute buffers need some amount of memory that is proportional to the physical batch size.

Anonymous

8/8/2025, 11:13:13 AM

No.106187972

[Report]

Anonymous

8/8/2025, 11:15:08 AM

No.106187983

[Report]

>>106187948

It's like if the Manhatten Project was developing fireworks but everyone had to take it at face value and pretend that the pretty fireworks were actually world destroying dangerous nuclear bombs to prevent tanking the economy.

Oh my slop.

The 21+ is dare i say it...**chefs kiss**

Anonymous

8/8/2025, 11:15:41 AM

No.106187986

[Report]

>>106187989

>>106187971

Is tensor core fragmentation a problem? It could cause a latent washback.

llama.cpp CUDA dev

!!yhbFjk57TDr

8/8/2025, 11:16:33 AM

No.106187989

[Report]

>>106187986

I don't understand what you mean by "tensor core fragmentation".

Anonymous

8/8/2025, 11:37:29 AM

No.106188084

[Report]

>>106188094

>>106187971

Brainlet here: How does it differ on multi-gpu systems?

Anonymous

8/8/2025, 11:38:47 AM

No.106188090

[Report]

We think GPT-5 is our best model yet.

Anonymous

8/8/2025, 11:39:16 AM

No.106188093

[Report]

>>106188711

>>106187984

Kek what fucking garbage is this? Did you gen this as a mockup and that's why the picture looks like an svg nightmare?

llama.cpp CUDA dev

!!yhbFjk57TDr

8/8/2025, 11:39:36 AM

No.106188094

[Report]

>>106188084

By default model evaluations are pipelined with the physical batch size.

So with the default settings the first GPU can evaluate its part of the first 512 tokens of the 2048 token logical batch, pass the intermediate result to the next GPU, and start working on the next 512 tokens.

Anonymous

8/8/2025, 11:39:53 AM

No.106188095

[Report]

>>106188101

So what's the consensus on the qwen 2507 30b moe? Is this the new go-to, non-nemo model for VRAMlets?

>>106188095

it's pretty much the best local model below 235b, beats glm air and oss in my tests. instruct is a goated model

>>106188101

I'm liking it a lot more than I thought it would. Not sure if I prefer it over Gemma 27b but it certainly needs much less wrangling to be unsafe.

Considering how meh the original 30b moe was I'm genuinely impressed they were able to improve so much with the same parameter count.

Anonymous

8/8/2025, 11:46:07 AM

No.106188132

[Report]

>>106188154

>>106188124

based chinks are saving local. imagine we only had oss and llama4 at this point

Anonymous

8/8/2025, 11:46:45 AM

No.106188136

[Report]

I'm pretty fond of the new instruct Qwen models, but the new thinking versions are as bad as deepseek used to be when it comes to how much time the model spends shitting reasoning tokens, I like the actual answers but I don't have the patience for this kind of model

it's sad because the reasoning bits of the old, togglable versions was terse enough that I was willing to use them in thinking mode

Anonymous

8/8/2025, 11:48:09 AM

No.106188154

[Report]

>>106188270

>>106188132

llama4 is currently the best local model doe

Anonymous

8/8/2025, 12:00:02 PM

No.106188241

[Report]

how can I tell what size a model is to know if I can run it on my 5070ti or not

Anonymous

8/8/2025, 12:03:30 PM

No.106188270

[Report]

>>106188279

Anonymous

8/8/2025, 12:03:43 PM

No.106188272

[Report]

>>106188293

>>106188246

Your vram size - ~2 gb

Anonymous

8/8/2025, 12:04:51 PM

No.106188279

[Report]

>>106188270

this is true doe

>>106188272

How can I filter models by 14GB or less? Or is there a rule of thumb for parameter count to GB?

Anonymous

8/8/2025, 12:09:46 PM

No.106188301

[Report]

>>106188124

How does it perform in coding against say, devstral small?

Anonymous

8/8/2025, 12:10:28 PM

No.106188303

[Report]

>>106188327

>>106188293

14B = 14GB at Q8 or less.

Anonymous

8/8/2025, 12:10:47 PM

No.106188305

[Report]

>>106188293

How much ram do you have?

Anonymous

8/8/2025, 12:12:07 PM

No.106188313

[Report]

>>106188246

Usually when somone releases quants they append a table on the model card comparing sizes and ppl or just some vague 'this is very good saar we do the reccomending' comment. Rule of the thumb q8 = same wheught as parameters, iq4_kss roughly half q8 wheight. If you want to make sure they fit in you also have to take into account for your context length which may vary depending on quantization, swa and other stuff

Anonymous

8/8/2025, 12:15:22 PM

No.106188327

[Report]

>>106188352

>>106188303

Can I quantize games to use twice less vram?

Anonymous

8/8/2025, 12:15:39 PM

No.106188331

[Report]

>>106187889

what model is this

Anonymous

8/8/2025, 12:20:02 PM

No.106188352

[Report]

>>106188368

>>106188327

Yes, set your display resolution to 480p and use DLSS performance upscaling and framegen.

Anonymous

8/8/2025, 12:22:57 PM

No.106188368

[Report]

>>106188403

>>106188352

Does it mean that language models also become unusably worse by going from 16 to 8 bit?

Anonymous

8/8/2025, 12:23:48 PM

No.106188374

[Report]

>>106188711

>>106187984

I wish you'd publish these things as a collection.

You could have ppl vote on them, and usebit to cr.eate a new benchmark

Anonymous

8/8/2025, 12:27:15 PM

No.106188391

[Report]

after using it a bit longer, yah, the dumbness of q4km/iq4xs on qwen 235b really is just due to the quantization. Tried q6 and it's just so much better in a meaningful way. It's much more coherent for writing stories and doesn't make as many obviously dumb mistakes. I think both glm air, oss 120b, and qwen 235b need to be run at q6 or else they'll be a bit janky always. And I think this matters because without that boost, 70b just seems smarter and it's a tossup as to which model is really worth using.

200 gb and a huge reduction in speed is hardly worth it though. Starts to feel like trying to run r1 or some shit at 3 tokens a second.

Anonymous

8/8/2025, 12:28:55 PM

No.106188396

[Report]

>>106188449

I'm pretty impressed with GLM AIR, I only have 48GB Vram but even at Q2, its still pretty damn good, runs fast and doesn't seem to shy away from any kinds of smut or kinks and gets real descriptive with lewd writing.

I'm still pretty new to hybrid reasoning models though because I avoid reasoning models like the plague, I don't think all the extra wait time for it to think is worth it for ERP, so I have a few questions.

This model has a hybrid mode, one for reasoning, one without, how do I disable reasoning mode completely? Is it on the backend, or a prompt setting on the front end? Its responses are fine and its following its prompt properly, but if I let its response really run on, once the tokens get high enough, a sentence will start with <think> so I don't think I'm disabling reasoning properly.

Also.. its prompt formatting confuses me a bit, it uses <|user|>, <|assistant|>, and what I would assume <|system|>, but when I look at llama.cpp for the example format, its showing [gMASK]<sop><|system|>. What the hell is that?

Am I correct in just using <|system|>?

Anonymous

8/8/2025, 12:30:09 PM

No.106188403

[Report]

>>106188368

In seriousness, no. 8bit is so close to perfect that even synthetic benchmarks will often fail to show. a difference, and it certainly won't be noticeable in actual use.

Q6 is will be perceptibly perfect with benchmarks only showing slight quality loss, usually at high context or testing esoteric or high level subjects.

Q4 is the sweet spot where quality loss is minimal and you get big memory savings, that said you should obviously go bigger if you have the memory to do so.

When you get to Q3 and lower the loss starts to become more obvious. Smaller models will start to become retarded at this level.

Anonymous

8/8/2025, 12:37:13 PM