/ldg/ - Local Diffusion General

Trying to download the model files for qwen image so I can test LoRA training. Why is hugginface cli so shit?
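For anyone stuck on the same thing, here is a minimal sketch of pulling a repo from Python instead of the CLI, assuming the `huggingface_hub` package is installed. `snapshot_download` with `repo_id`, `local_dir` and `allow_patterns` is the library's own call; the repo id, target folder and file patterns in the usage comment are assumptions for illustration, so check the actual repo layout first.

```python
def build_download_kwargs(repo_id, local_dir, patterns=None):
    """Collect keyword arguments for huggingface_hub.snapshot_download."""
    kwargs = {"repo_id": repo_id, "local_dir": local_dir}
    if patterns:
        # Only fetch files matching these glob patterns (skips everything else).
        kwargs["allow_patterns"] = list(patterns)
    return kwargs


def download(repo_id, local_dir, patterns=None):
    # Imported lazily so the helper above works without the package installed.
    from huggingface_hub import snapshot_download
    return snapshot_download(**build_download_kwargs(repo_id, local_dir, patterns))


# Example call (assumed repo id and patterns, not run here):
# download("Qwen/Qwen-Image", "models/qwen-image", ["*.safetensors", "*.json"])
```

Interrupted downloads can generally be resumed by running the same call again, which is most of what you want for multi-gigabyte checkpoints.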

Anonymous

8/8/2025, 5:11:37 AM

No.106185903

>>106185911

>>106185861

>hugginface cli

i see we have our best on the job

Anonymous

8/8/2025, 5:12:27 AM

No.106185911

>>106185903

You get what you pay for.

How do I get into this as a total newfag? Do I need a PC that costs thousands?

Anonymous

8/8/2025, 5:18:58 AM

No.106185956

>>106184597

shut the fuck up retard

Anonymous

8/8/2025, 5:23:06 AM

No.106185985

>>106185951

Depends on how coherent you want your waifu to look

Anonymous

8/8/2025, 5:26:05 AM

No.106186009

>>106185951

If you even have to ask about this, just stick to the web saas/API models. I am serious

Anonymous

8/8/2025, 5:33:45 AM

No.106186057

>>106185861

>git clone huggingface_repo_url

the man smiles and holds a cardboard cutout of an anime style Miku Hatsune, standing in the snow.

based kijai making the 2.2 i2v lora work normally (1 strength for both)

Anonymous

8/8/2025, 5:36:10 AM

No.106186074

>>106181046

>is there even any point of the resize image v2 node? why don't i just plug the image straight in to the WanVideo ImageToVideo Encode? I can just set the dimensions there.

kijai's sampler will sperg out if it doesn't like the dimensions. i dunno

Anonymous

8/8/2025, 5:42:56 AM

No.106186105

>>106186222

>>106185803 (OP)

Can someone share the json from the wan rentry?

/ldg/ Comfy T2V 480p FAST workflow (by bullerwins): ldg_2_2_t2v_14b_480p.json (updated 2nd August 2025)

https://files.catbox.moe/ygfoxx.json

I get a white page.

Anonymous

8/8/2025, 5:44:35 AM

No.106186113

>>106186064

i'll also try i2v. wan2.2 t2v is painful for now

the man looks up at the sky and sees a giant cardboard cutout of an anime style Miku Hatsune, standing in the snow.

kino

Anonymous

8/8/2025, 5:46:05 AM

No.106186123

>>106186140

>>106186117

>not a hologram

are you even trying?

Anonymous

8/8/2025, 5:49:00 AM

No.106186134

>>106185951

idle here for a week and then decide if this is who you want to become.

Anonymous

8/8/2025, 5:49:57 AM

No.106186138

Anonymous

8/8/2025, 5:50:17 AM

No.106186140

>>106186147

>>106186123

patience! one idea at a time. i'll get there

Anonymous

8/8/2025, 5:51:19 AM

No.106186147

>>106186166

>>106186140

here

the man looks up at the sky and sees a giant hologram of an anime style Miku Hatsune, standing in the snow.

it'd be easier if it was a screenshot at night, but it works!

Anonymous

8/8/2025, 5:52:16 AM

No.106186158

how much vram does qwen actually consume when quantized?
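A rough back-of-the-envelope for the weights alone, as a sketch: size is parameter count times bits per weight. The parameter count below (about 20 billion for the diffusion transformer) is an assumption, and the figure ignores the text encoder, the VAE, activations and any quantization overhead, so real usage will be higher.

```python
def weight_gib(num_params, bits_per_weight):
    """Approximate size of the weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


# Assumption: roughly 20 billion parameters for the diffusion transformer.
PARAMS = 20e9

for label, bits in [("bf16", 16), ("fp8", 8), ("~4-bit quant", 4)]:
    print(f"{label:>12}: {weight_gib(PARAMS, bits):5.1f} GiB")
```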

Anonymous

8/8/2025, 5:53:16 AM

No.106186166

>>106186177

>>106186117

>>106186147

i2v looks so kino compared to t2v

got a BIG miku this time.

Anonymous

8/8/2025, 5:55:43 AM

No.106186177

>>106186166

i2v is fun cause you avoid most of the randomness, you're just prompting what you want to happen next. often with hilarious results.

Anonymous

8/8/2025, 5:57:15 AM

No.106186183

>>106186173

this time, "gigantic hologram":

Fight scenes are definitely possible with wan2.2. I think I might have unlocked the Ryona fetish.

Anonymous

8/8/2025, 5:59:49 AM

No.106186198

I don't think my wan kijai txt to image works as intended lol

Anonymous

8/8/2025, 6:03:40 AM

No.106186222

>>106186246

Anonymous

8/8/2025, 6:04:47 AM

No.106186228

Anonymous

8/8/2025, 6:06:05 AM

No.106186235

>>106186189

>the strongest woman vs the weakest man

Anonymous

8/8/2025, 6:07:23 AM

No.106186246

>>106186222

Thank you anon, and that's fine, that part is easy to set up. I was mainly wondering what to use instead of the WanVideo Sampler kijai uses.

Anonymous

8/8/2025, 6:16:55 AM

No.106186292

>>106186363

the man looks up at the sky and sees a gigantic hologram of an anime style Miku Hatsune waving hello at the man.

there, beeg miku

Anonymous

8/8/2025, 6:17:22 AM

No.106186302

why are you not making money with your shit, /ldg/?

Anonymous

8/8/2025, 6:26:56 AM

No.106186358

>>106186335

Who is even paying to look at slop?

Anonymous

8/8/2025, 6:27:57 AM

No.106186363

>>106186292

I don't think wan knows what a hologram is

Anonymous

8/8/2025, 6:28:53 AM

No.106186367

>>106186375

different image:

Anonymous

8/8/2025, 6:29:54 AM

No.106186375

>>106186367

prompt (slightly diff): the man looks up at the sky and sees a gigantic hologram of an anime style Miku Hatsune singing with a microphone.

Anonymous

8/8/2025, 6:34:01 AM

No.106186396

>>106186335

I have a good paying job, I want to generate stuff for me.

Anonymous

8/8/2025, 6:34:43 AM

No.106186399

>>106188520

>>106186335

for every 1 successful one, there are 100 like this making 5$ per month total

Anonymous

8/8/2025, 6:34:53 AM

No.106186401

Anonymous

8/8/2025, 6:35:30 AM

No.106186404

>>106186448

For anons having a 5090, I'd like to change the nvidia-smi power target to something lower.

What is the sweet spot for inference? 350W?

Anonymous

8/8/2025, 6:36:45 AM

No.106186409

>>106186335

I'd have to change too much of myself to be successful with that sort of thing

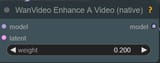

Is this snakeoil any good? It's been slapped on every other wan2.1 workflow I came across but not on 2.2

Anonymous

8/8/2025, 6:41:21 AM

No.106186431

>>106185333

>bottom line is qwen image is our deepseek

on the right track but i don't know about calling it the deepseek of image gen. doesn't sound quite right.

Anonymous

8/8/2025, 6:44:53 AM

No.106186448

>>106186458

>>106186404

>350W is power limiting territory for a 5090

>mfw I'm running my 3090 at 210W

Is electricity free where you live or something?

Anonymous

8/8/2025, 6:47:21 AM

No.106186458

>>106186448

My 3090 is power limited at 260W, it's a good compromise. 210 sounds a bit low.

The 5090 I have no idea what to set yet but its tdp is 575W.
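For reference, a small sketch of setting the limit from a script. It only wraps `nvidia-smi -i <index> -pl <watts>`; the 350 in the usage comment is just the number asked about above, not a tested sweet spot, and the helper names are made up for this example.

```python
import subprocess


def power_limit_cmd(watts, gpu_index=0):
    """Build the nvidia-smi command that sets a power limit in watts."""
    if watts <= 0:
        raise ValueError("power limit must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def set_power_limit(watts, gpu_index=0):
    # Usually needs root/administrator rights and does not persist across reboots.
    subprocess.run(power_limit_cmd(watts, gpu_index), check=True)


# Example (not run here): set_power_limit(350)
```

Finding the sweet spot is then a matter of timing the same gen at a few limits and comparing seconds per iteration against watts.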

Anonymous

8/8/2025, 6:49:14 AM

No.106186472

>>106186527

>>106186414

i don't think anyone, even the creator of it, knows what it does

Anonymous

8/8/2025, 6:49:59 AM

No.106186477

>>106185524

a chroma centaur. note this is an old gen, this is chroma 33 so a newer one would likely be a bit better. but clearly it's superior to qwen's abortive attempt

Anonymous

8/8/2025, 6:51:18 AM

No.106186481

>>106186414

you can probably rename it to cargo cult node

Anonymous

8/8/2025, 6:58:21 AM

No.106186515

>>106186523

Okay, got qwen to begin training at fp16 across 2x RTX 3090s.

It was a bit of a headache to set up. Mostly because the diffusion pipe repo casually forgot to mention I would need to upgrade transformers as well. I'm not sure how I was the only one experiencing that issue.

Anonymous

8/8/2025, 6:59:22 AM

No.106186523

>>106186538

>>106186515

1024x1024 btw

Anonymous

8/8/2025, 7:00:33 AM

No.106186527

>>106186472

It does some weird shit where it averages out some numbers on each step or something, idk really. It's extremely subtle. It's not fake and gay, but it may as well be due to how little it impacts the output

Anonymous

8/8/2025, 7:02:22 AM

No.106186538

>>106186523

Getting about this much vram usage at 1024 at a batch size of one and rank of 32.

Seems perfectly trainable to me desu.

Anonymous

8/8/2025, 7:05:16 AM

No.106186553

>>106186581

and this is why i2v is fun, silly shit

the man hits a baseball with a baseball bat.

Anonymous

8/8/2025, 7:09:55 AM

No.106186581

>>106186594

Anonymous

8/8/2025, 7:11:13 AM

No.106186594

>>106186625

>>106186581

Is this with the new light LoRAs? I've noticed my I2V outputs desperately resist the subject turning around in them. Might just be my imagination though.

Anonymous

8/8/2025, 7:15:06 AM

No.106186625

>>106186633

>>106186594

2.2 i2v, just testing random stuff atm.

Anonymous

8/8/2025, 7:16:07 AM

No.106186633

>>106186670

>>106186625

*it's the lightning lora but the one kijai posted, 1 str for both

>>106186633

Yeah, just something I've noticed since updating that I didn't before: the subject doesn't like to change directions. Then again, I don't have many examples.

Anonymous

8/8/2025, 7:23:54 AM

No.106186691

>>106186711

>>106186064

is that the repack?

Anonymous

8/8/2025, 7:29:40 AM

No.106186724

>agi here

Anonymous

8/8/2025, 7:29:50 AM

No.106186725

>>106186711

oh those, they're fast but censored :/

Anonymous

8/8/2025, 7:32:06 AM

No.106186736

>>106186670

ay yo i din kno cap picard was slick wid it like that!

Anonymous

8/8/2025, 7:33:32 AM

No.106186744

genning i2v at 720p, 121 frames. tried kijai's 2.2 loras. switched back to his original workflow with "lightx2v_I2V_14B_480p_cfg_step_distill_rank64_bf16.safetensors" at 3.0 on high and 1.0 on low. i find the 2.1 loras have superior prompt adherence, faster motion and resist looping better. i don't know if the 2.2 loras are meant for 480p but the visual quality is about the same. wtf is going on with this shit

Anonymous

8/8/2025, 7:41:22 AM

No.106186792

>>106186850

>>106186335

I started a few months back, got a sub, then life happened and I had to stop. Then I lost that sub recently. Welp. No idea how these guys got hundreds to thousands of subs though. Insane. I put in a lot of effort and it was all high quality stuff.

Anonymous

8/8/2025, 7:55:23 AM

No.106186850

>>106186881

>>106186792

I made a porn game that brought in a few K every month. I couldn't maintain it after I got a new job though, and I was creatively drained. I was no longer enjoying the fetish I was facilitating.

Anonymous

8/8/2025, 7:56:22 AM

No.106186857

>>106186867

Alright, I've been having a good bit of fun with 2.1 (mostly getting my NSFW images to move). How does 2.2 compare? How is it with weirder sizes? I know with 2.1 if you didn't stick exactly at 832x480, things could go pretty soft in terms of detail. And for some reason fluids exclusively genned as torrents and/or giant globs. Like say you prompt saliva, that bitch was ejecting giant globs of spit out of her mouth. I kinda figured that was down to some weird shenanigans with resolution and denoising, where it can't see the small details in the image and kinda resolved it with larger ones, but I'm not sure.

But yeah, is it a bit more flexible with resolutions and framerates?

Base 2.1 tended to be pretty shit where it'd do the slow motion stuff or loop back too.

I'm guessing requirements are mostly the same too? It's just an incremental update, so I figure it's more just optimizing and improving what's there.

Anonymous

8/8/2025, 7:57:13 AM

No.106186866

I thought there was a comfyui node that let you run a python script but I can't find it for the life of me

Anonymous

8/8/2025, 7:57:18 AM

No.106186867

>>106186874

>>106186857

I can't believe you haven't moved to 2.2 already. What the fuck.

Anonymous

8/8/2025, 7:58:20 AM

No.106186874

>>106186884

>>106186867

I was on vacation, then I had to work ;_;

Haven't had time to pick back up until now.

Anonymous

8/8/2025, 7:59:41 AM

No.106186881

>>106186892

>>106186850

story of every indie porn game developer

Anonymous

8/8/2025, 7:59:48 AM

No.106186883

>>106186983

I had an idea on how to make like a video with say, only 5 frames. This would save a fuckload of gen time.

Obviously setting Length to 5 doesn't work, it has to be set to 81 for the structure to be established. But what if you establish the structure by generating for 1 step, then remove all the other latent frames, then continue generation? Would it work?

There's nodes like "TrimVideoLatent" and "Frames Slice Latent" which allow you to remove latent frames, we would just need a node that only keeps every 16th frame (when using 81 length, to make it 5 frames).
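A quick check of the index arithmetic, as plain Python rather than a ComfyUI node: keeping every 16th of 81 frames actually leaves 6 frames, while every 20th leaves 5. This counts frame indices only. If the VAE compresses the time axis, the latent tensor will have fewer than 81 entries to pick from, so treat the numbers as an illustration of the stride logic.

```python
def keep_every_nth(num_frames, step):
    """Indices that survive when only every `step`-th frame is kept."""
    return list(range(0, num_frames, step))


every_16th = keep_every_nth(81, 16)
every_20th = keep_every_nth(81, 20)
print(len(every_16th), every_16th)  # 6 [0, 16, 32, 48, 64, 80]
print(len(every_20th), every_20th)  # 5 [0, 20, 40, 60, 80]
```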

Anonymous

8/8/2025, 8:00:02 AM

No.106186884

>>106187055

>>106186874

Go, go download 2.2. It's basically better in every conceivable way.

Anonymous

8/8/2025, 8:01:42 AM

No.106186892

>>106186881

It was just too much man, and the DMs asking me to add in stuff I found revolting. The insane impossible requests that would be entire games in and of themselves. The fear of people being unhappy with the update.

Yeah. I might pick it up again one day, but it will be a new project that I actually want to work on. Passion is something intangible that users can feel.

DAE not think Qwen is actually very good at all? It looks like absolute shit compared to Flux Krea for everything I want to gen personally

Anonymous

8/8/2025, 8:06:43 AM

No.106186922

>music stops:

Anonymous

8/8/2025, 8:06:54 AM

No.106186924

>>106186896

haven't tried Krea, but I'm having a blast genning heaps of shit with qwen image, especially character reference frames to feed into hunyuan3d2.1

>a 2 panel comic portraiting Hatsune Miku, and John Wick shopping. the left panel shows John Wick holding a GPU saying "VRAM..." with Hatsune Miku looking excited. the right panel shows John Wick and Hatsune Miku walking away together saying "it's GENNING time". Ultra HD, 4K, comic, anime.

qwen image + wan2.2 back to back is hours of fun

Anonymous

8/8/2025, 8:08:19 AM

No.106186928

>>106186993

>>106186896

I think the outputs all look "solid". Like they are very clean. Especially for anime stuff. If you do an honest comparison to default flux, it trounces it pretty handily. It's also not distilled right off the bat which makes it a much more attractive proposition (vramlets don't @ me)

>>106186926

kek, what did you prompt

Anonymous

8/8/2025, 8:09:39 AM

No.106186939

>>106186960

>>106186929

Hehe yeah what did you prompt? lol. I wanna know hehe. It would be really funny if a giant naked lady just picked up a tiny little man right? heh. You know, just a fun thing?

Anonymous

8/8/2025, 8:12:01 AM

No.106186957

Anonymous

8/8/2025, 8:12:36 AM

No.106186960

>>106186972

>>106186939

oh maybe prompting it for attack on titan might work?

>>106186929

i sent a basic prompt about him being grabbed by a giant miku through grok and got this:

In the haunting, snow-laden climax of Blade Runner 2049, K, the weary replicant portrayed by Ryan Gosling, sits slumped on the icy steps outside the Wallace Corporation, his bloodied face and tattered coat bathed in the soft glow of falling snow. As he gazes upward into the swirling, pale sky, a colossal 2D anime hologram of Hatsune Miku materializes, her vibrant teal twin-tails cascading like neon waterfalls, dominating the desolate urban horizon. Towering over K, her luminous figure radiates an ethereal warmth against the cold, dystopian backdrop. The camera slowly pulls back, revealing the staggering scale of Miku’s hologram as she fixes her playful, glowing eyes on him. With a single, fluid motion, her enormous hand descends, effortlessly scooping K from the steps like a fragile doll. She lifts him skyward, his body suspended weightlessly against the stormy expanse, snowflakes swirling around him as her vibrant presence contrasts with his quiet resolve, the city fading below in a breathtaking ascent.

Anonymous

8/8/2025, 8:13:46 AM

No.106186972

>>106186960

Nah doesn't work for Wan. I actually tried something similar early today.

Anonymous

8/8/2025, 8:15:35 AM

No.106186982

>>106186967

>teal

Isn't she more of a turquoise?

Anonymous

8/8/2025, 8:15:38 AM

No.106186983

>>106186883

ah it seems it's the node called "Select Every Nth Latent" that allows you to do this.

Anonymous

8/8/2025, 8:15:39 AM

No.106186985

>>106187008

Anyone know how to get previews working for the Phr00t AiO workflow in the Rentry?

>>106186928

default Flux sure but Krea takes a shit on anything that isn't WAN for photographic gens, nothing else is remotely close to as detailed in that regard

Anonymous

8/8/2025, 8:19:24 AM

No.106187008

>>106186985

Nevermind, I'm a blind retard.

Just had to pan down.

Anonymous

8/8/2025, 8:21:12 AM

No.106187020

>>106186414

All the snakeoils were more useful on 2.1. And 2.2 fixed many of the issues the old model had so these don't do as much as they used to.

Anonymous

8/8/2025, 8:21:17 AM

No.106187021

>>106187119

>>106186993

True, but Krea seems very much in that niche of realism. Qwen feels like a blank canvas. Think back to SDXL and its release and how god awful it was and it still turned out great. I don't know if Qwen can achieve that due to its size, but the potential to be truly great is there.

>>106186993

Nah, Chroma is still the king of photorealism. It's not even close. There are hundreds of different things you can do with Chroma that you can't do with Krea, thanks to its uncensored nature. The pretty images you get from Krea, you can get out of Chroma with good prompt engineering.

Anonymous

8/8/2025, 8:28:18 AM

No.106187052

>>106187075

>>106186670

it works no problem with 2.1 light lora

Anonymous

8/8/2025, 8:28:46 AM

No.106187055

>>106187066

>>106186884

You weren't kidding. This shit is way better. And it takes like half the time (110s for 2.2 on a first gen, compared to 200s for 2.1).

Only problem is that things are a little grainy. I grabbed the Phr00t workflow from the rentry as a bit of a "quick start" to try shit out, so maybe that has something to do with it. Or maybe it's just more sensitive to resolution than before?

Anonymous

8/8/2025, 8:31:40 AM

No.106187066

>>106187074

2.1 light, 161 frames. not great but look how coherent

>>106187055

the AIO is ass

Anonymous

8/8/2025, 8:31:58 AM

No.106187067

>>106187042

One simple and often overlooked example. Soles. Qwen can do them, but they are blurry and slopped. Know what else is blurry and slopped? Cloudshit models. From Reve, to Gemini, to Imagen, to GPT 4o, all slopped. Chroma is unique in that it's the only unslopped model that can do soles in any situation.

Anonymous

8/8/2025, 8:33:05 AM

No.106187073

chroma is king of random noise and garbage

Anonymous

8/8/2025, 8:33:16 AM

No.106187074

>>106187097

>>106187066

>the AIO is ass

Sheeeeeeit.

The fuck do I do then? The rentry is kind of a weird mix of 2.1/2.2 info. 2.2 isn't just a drop in replacement, is it?

>>106187052

I saw a r*ddit post that said they got amazing movement combining the 2.1 at strength 3 and 2.2 at 1 on the high model

and 2.2 at 1 on the low model with 2.1 at 0.25.

Both with a cfg of 2.5

Anonymous

8/8/2025, 8:34:17 AM

No.106187082

>>106187075

The light LoRA that is.

Anonymous

8/8/2025, 8:34:33 AM

No.106187083

>>106187101

the professional style foot pics were like a breath of fresh air

Anonymous

8/8/2025, 8:37:44 AM

No.106187097

Anonymous

8/8/2025, 8:38:04 AM

No.106187101

>>106187083

If I wanted pro style foot pics I would just prompt for them. They'd still come out better than distilled Seedream 3.0 slop. That's the freedom that models like Chroma give you. But you are an idiot.

Anonymous

8/8/2025, 8:38:32 AM

No.106187104

>>106187120

>>106186967

neat, I might try that with lm studio too but prob have to unload the model (for vram), grok/etc are probably ideal options for generating detailed stuff.

Anonymous

8/8/2025, 8:41:19 AM

No.106187116

5 epochs in on Qwen training. I'm wondering how it will handle respecting the captions. Flux had an awful tendency to bleed characters and everyone called me a schizo when I called it out.

>>106187021

HiDream was MIT licensed and the Full version wasn't distilled, and AFAIK would be much easier to train than Qwen in terms of resource needs.

Anonymous

8/8/2025, 8:41:55 AM

No.106187120

>>106187126

>>106187104

Generate an image of a man standing in the center of a large, empty room with high ceilings, dressed in casual attire - jeans and a plain white t-shirt, with a look of wonder and awe on his face as he gazes upwards at a giant holographic image of Hatsune Miku suspended above him by an invisible field of energy or magic. The holographic Miku should be at least 10 times larger than the man, with intricate details visible even from this distance, her facial expression one of calm serenity and gentle benevolence as she looks down at the man, her eyes cast directly at him as if seeing right through to his soul. A halo of soft, pulsing light surrounds Miku's image, diffused and scattered throughout the room, creating an otherworldly ambiance that makes the viewer feel like they're witnessing something truly magical, with subtle hints of digital code or programming languages floating in the background.

neat

Anonymous

8/8/2025, 8:43:09 AM

No.106187126

>>106187198

>>106187120

and the prompt in lm studio was: make a detailed stable diffusion prompt for a man looking up at a giant holographic Miku Hatsune in one paragraph.

will prob use grok so I dont have to unload the model, it uses like 5-6gb.

Anonymous

8/8/2025, 8:43:22 AM

No.106187128

>>106187136

>>106187119

Nobody really gave a shit about HiDream. Myself included. I don't think any of the outputs really wowed anybody.

Anonymous

8/8/2025, 8:43:59 AM

No.106187130

>>106187215

>>106187042

No you can't lmao, Chroma straight up does not have that level of fidelity in it at the moment due to being trained at 512px up until now. It also has half the context length of both regular Dev and Krea because Schnell did (256 tokens versus 512 tokens).

>>106187128

I haven't really seen a single "wow" Qwen output either quite frankly. Just a lot of people using extremely easy prompts as supposed evidence of the great prompt adherence.

Anonymous

8/8/2025, 8:47:12 AM

No.106187142

>>106187169

>>106187136

There were some that came out during the release of Flux to test how far T5 could go, someone had a long elaborate prompt for a black woman. Too lazy to look it up.

Anonymous

8/8/2025, 8:47:50 AM

No.106187150

>>106187172

>>106187075

lmaoing at the jeets there that can't figure out how to connect a lora node

Anonymous

8/8/2025, 8:48:29 AM

No.106187155

>>106187119

>4 (four) text encoders

Anonymous

8/8/2025, 8:49:40 AM

No.106187162

>>106187192

Which wan 2.1/2.2 i2v model gives me reasonable speed with a 4070s and 32gb of ram?

I'm using wangp, wan2.1 Image2video 480p 14B, and profile 4 (12gb vram and 32gb ram), it takes 7 minutes to make 5 seconds

>>106187142

I found one by lazily searching back in the archives from last year.

This is a digitally drawn anime-style image featuring Hatsune Miku. She is seated at a wooden desk in a modern office setting. On the desk is part of a half-eaten hot dog and crumbs, the hot dog has a missing part that was bitten off and it's incomplete. She has a serious expression as she extends her right hand to shake hands with a person off-screen to the left. Likely an office colleague. Indicating a break or snack time. The desk is cluttered with various office supplies, including a pencil cup filled with colored pens and markers, a calculator, and a notebook. A green potted plant is visible on the left side of the desk, adding a touch of nature to the otherwise busy workspace. The background features a large window with multiple panes, allowing sunlight to stream in and illuminate the room. Outside the window, lush green trees are visible, suggesting an office with a view of nature. The walls are adorned with bookshelves filled with neatly organized binders and books.[\code]

This is what Flux cooked up.

Anonymous

8/8/2025, 8:50:58 AM

No.106187172

>>106187150

Don't lmao too hard. I saw a guy here asking how to connect two LoRA nodes together over the space of 24 hours.

Anonymous

8/8/2025, 8:51:25 AM

No.106187175

>>106187191

>>106187169

Fuck me. I'll just quote it.

>This is a digitally drawn anime-style image featuring Hatsune Miku. She is seated at a wooden desk in a modern office setting. On the desk is part of a half-eaten hot dog and crumbs, the hot dog has a missing part that was bitten off and it's incomplete. She has a serious expression as she extends her right hand to shake hands with a person off-screen to the left. Likely an office colleague. Indicating a break or snack time. The desk is cluttered with various office supplies, including a pencil cup filled with colored pens and markers, a calculator, and a notebook. A green potted plant is visible on the left side of the desk, adding a touch of nature to the otherwise busy workspace. The background features a large window with multiple panes, allowing sunlight to stream in and illuminate the room. Outside the window, lush green trees are visible, suggesting an office with a view of nature. The walls are adorned with bookshelves filled with neatly organized binders and books.

Anonymous

8/8/2025, 8:52:01 AM

No.106187180

>>106187169

Someone plug this into Qwen, curious to see how it handles it and my GPUs are all occupied right now.

Anonymous

8/8/2025, 8:53:21 AM

No.106187189

>>106187191

>>106187136

got an idea for a prompt you'd like to try? I can run it if you want

Anonymous

8/8/2025, 8:53:58 AM

No.106187192

Anonymous

8/8/2025, 8:55:25 AM

No.106187198

>>106187253

>>106187126

this time grok:

A gritty cyberpunk metropolis at night, rain-slicked streets glowing with neon reflections, a lone man in a worn trench coat staring upward in awe, a colossal holographic Miku Hatsune dominating the skyline, her vibrant teal twin-tails shimmering with intricate digital patterns, her form translucent yet luminous, surrounded by floating data streams, towering dystopian skyscrapers and flickering holographic billboards in the background, bathed in moody cyan and magenta neon hues, cinematic lighting, ultra-detailed, in the high-tech, noir aesthetic of Blade Runner 2077, immersive, futuristic atmosphere.

trippy

Anonymous

8/8/2025, 8:55:26 AM

No.106187199

>>106187191

running now, will make 2 versions (wide and square)

Anonymous

8/8/2025, 8:57:19 AM

No.106187212

>>106187220

Best settings for character training in wan 2.2 for high and low?

Is rank 64 or higher bucket size worth it?

Anonymous

8/8/2025, 8:58:01 AM

No.106187215

>>106187235

>>106187130

Yet only with Chroma can you do proper feet, creepshots, gore, nudity, sex, bondage, yoga, contortions, etc... the list goes on and on anon. And also Chroma follows the prompt better than Flux dev/Krea for these reasons.

Anonymous

8/8/2025, 8:58:54 AM

No.106187218

>>106187225

Anonymous

8/8/2025, 8:59:26 AM

No.106187220

>>106187212

I did some rudimentary experiments with 2.2 at rank 64. If you're training low, you can plug the LoRA for the character into the low node and the output will look like that character while the motion remains intact. I just did 1024x1024 images only as a test. Video I also tried but I don't have the will to really suss it out yet.

Anonymous

8/8/2025, 9:00:27 AM

No.106187225

>>106187218

Looks a bit noisy. Are you putting LoRAs you shouldn't in the low noise output, or at too high a strength?

Anonymous

8/8/2025, 9:02:06 AM

No.106187235

>>106187264

>>106187215

got an example prompt of creepshots? do you mean images that are peeping or cctv? I've tried running cctv prompts in qwen image and it's OK but I think I'm a promptlet at directing how and where the camera is (can't get top down camera sitting in the corner of a room shot)

Does anyone know which of the following params ComfyUI uses by default?

[-h] [--listen [IP]] [--port PORT] [--tls-keyfile TLS_KEYFILE] [--tls-certfile TLS_CERTFILE] [--enable-cors-header [ORIGIN]]

[--max-upload-size MAX_UPLOAD_SIZE] [--base-directory BASE_DIRECTORY] [--extra-model-paths-config PATH [PATH ...]] [--output-directory OUTPUT_DIRECTORY]

[--temp-directory TEMP_DIRECTORY] [--input-directory INPUT_DIRECTORY] [--auto-launch] [--disable-auto-launch] [--cuda-device DEVICE_ID]

[--cuda-malloc | --disable-cuda-malloc] [--force-fp32 | --force-fp16]

[--fp32-unet | --fp64-unet | --bf16-unet | --fp16-unet | --fp8_e4m3fn-unet | --fp8_e5m2-unet | --fp8_e8m0fnu-unet] [--fp16-vae | --fp32-vae | --bf16-vae]

[--cpu-vae] [--fp8_e4m3fn-text-enc | --fp8_e5m2-text-enc | --fp16-text-enc | --fp32-text-enc | --bf16-text-enc] [--force-channels-last]

[--directml [DIRECTML_DEVICE]] [--oneapi-device-selector SELECTOR_STRING] [--disable-ipex-optimize] [--supports-fp8-compute]

[--preview-method [none,auto,latent2rgb,taesd]] [--preview-size PREVIEW_SIZE] [--cache-classic | --cache-lru CACHE_LRU | --cache-none]

[--use-split-cross-attention | --use-quad-cross-attention | --use-pytorch-cross-attention | --use-sage-attention | --use-flash-attention]

[--disable-xformers] [--force-upcast-attention | --dont-upcast-attention] [--gpu-only | --highvram | --normalvram | --lowvram | --novram | --cpu]

[--reserve-vram RESERVE_VRAM] [--async-offload] [--default-hashing-function {md5,sha1,sha256,sha512}] [--disable-smart-memory] [--deterministic]

[--fast [FAST ...]] [--mmap-torch-files] [--dont-print-server] [--quick-test-for-ci] [--windows-standalone-build] [--disable-metadata]

Had to cut some out due to character limit. I know that vae is run in bf16 by default for example, that's the kind of thing I'm asking about.
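One generic way to answer this for any argparse-based tool is to parse an empty argument list and print what comes back. The sketch below uses a stand-in parser: the flag names are copied from the usage text, but the default values are placeholders for illustration and not a statement of what ComfyUI ships. As far as I know the real parser is defined in `comfy/cli_args.py`, which is an assumption worth verifying in your checkout.

```python
import argparse

# Hypothetical stand-in parser: flag names come from the usage text above,
# the default values are placeholders for illustration only.
parser = argparse.ArgumentParser()
parser.add_argument("--port", type=int, default=8188)
parser.add_argument("--preview-method", default="none")
group = parser.add_mutually_exclusive_group()
group.add_argument("--fp16-vae", action="store_true")
group.add_argument("--bf16-vae", action="store_true")

# Parsing an empty argument list yields exactly the defaults.
defaults = vars(parser.parse_args([]))
for name, value in sorted(defaults.items()):
    print(f"{name} = {value!r}")
```

Note the limit of this approach: `store_true` flags all default to `False`, so a precision that gets picked automatically at runtime (like the bf16 VAE case) will not show up here and has to be read from the logs or the code.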

>>106187191

Made 2 gens, one gen gets the office items positioned better but messes up the handshake and this one gets the items and handshake just in the wrong position. Neither had a bite out of the hotdog.

cfg 4.5, steps 50

Anonymous

8/8/2025, 9:06:13 AM

No.106187253

>>106187235

Any kind of image anon.

>Amateur photograph, a Japanese woman dressed as a maid, sleeping on the Tokyo Metro, her panties are slightly visible

That is one example of the kind of stuff Chroma gets right. You could do cctv, walking up a flight of stairs, peeping, etc... any kind of creepshot that has a natural description, you can do, (though Chroma just like other models benefits from a good prompt, you can enhance with VLMs)

>>106187251

Now you gotta do the Chinese version

Anonymous

8/8/2025, 9:10:49 AM

No.106187286

>>106187324

>>106187251

square aspect

Anonymous

8/8/2025, 9:12:59 AM

No.106187312

>>106187369

>>106187237

Check the code

Anonymous

8/8/2025, 9:17:21 AM

No.106187324

>>106187251

>>106187286

I think both are certainly more coherent than FLUX, but it seems like there is still a ways to go on the prompt adherence front. It is a noticeable step up though.

So with the lightx2v 2.2 workflow in the rentry, where do I set the virtual VRAM usage type thing like it was in the 2.1 workflow (the UnetLoaderGGUFDisTorchMultiGPU node)?

Gens are still around the same time as 2.1 even without it, but I don't know if I'm fucking myself over or not.

Also 2 more questions.

How do I plug loras into this? Do I just put them inline after the lightx2v loras (assuming I use a high/low lora)?

What's the difference between the e4m3fn and e5m2 versions of the i2v models?
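On the last question, the names describe the fp8 bit layout: e4m3fn has 4 exponent bits and 3 mantissa bits (finer steps, smaller range), e5m2 has 5 exponent bits and 2 mantissa bits (coarser steps, larger range). A small sketch that derives the largest finite value of each from those bit counts; the function name is made up for this example, and which variant looks better for a given model is not something this settles.

```python
def fp8_max_finite(exp_bits, man_bits, ieee_like):
    """Largest finite value of a small float format."""
    bias = 2 ** (exp_bits - 1) - 1
    if ieee_like:
        # Top exponent code is reserved for inf/NaN (e5m2 behaves this way).
        max_exp = (2 ** exp_bits - 2) - bias
        max_frac = 1 + (2 ** man_bits - 1) / 2 ** man_bits
    else:
        # "fn" variant: no infinities, only the all-ones mantissa at the top
        # exponent is NaN, so one mantissa step is lost there instead.
        max_exp = (2 ** exp_bits - 1) - bias
        max_frac = 1 + (2 ** man_bits - 2) / 2 ** man_bits
    return max_frac * 2 ** max_exp


print("e4m3fn max:", fp8_max_finite(4, 3, ieee_like=False))  # 448.0
print("e5m2   max:", fp8_max_finite(5, 2, ieee_like=True))   # 57344.0
```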

Anonymous

8/8/2025, 9:25:48 AM

No.106187360

>>106187075

might be something to that, tried it and got results that looked better compared to without, but the 2.5 cfg deep fries it, keeping it at 1

>>106187237

>>106187312

Well I guess there is no lovely default params list somewhere out there, is there?

Anyway just trial and error'd what I wanted to learn, it uses fp16 precision for text encoder by default, at least on my system.

>>106187265

here ya go

>>106187264

I'll give it a try now

Anonymous

8/8/2025, 9:35:19 AM

No.106187413

[Report]

>>106187369

It's comfyui so probably not, check the code, it should all be in one place, but then again it's comfyui so probably not

>>106187383

I have another one when you have the chance, just to see at what point it gets overloaded in the description with characters.

>This is a colorful digital drawing in an anime style, featuring four young girls playing a chess game on a pink table in a bedroom. The girls are dressed in school uniforms with white sailor collars and blue skirts. The girl on the left is Sailor Moon and has long blonde hair tied into twin ponytails, the girl in the center has pink hair styled in pigtails, the girl on the right has dark blue hair, the girl in the bottom right is Hatsune Miku, and there's a small black cat sitting on the bed on the far right. They are all sitting on the floor, focused on the game. Behind them, there is a large bed with a blue and yellow striped blanket. The room has pastel-colored walls with a window that shows a bright blue sky. The overall atmosphere is playful and cheerful, with bright colors and simple, clean lines typical of anime art.

Flux failed to gen Miku and the image is severely degraded.

Anonymous

8/8/2025, 9:39:59 AM

No.106187440

[Report]

>>106187523

>>106187423

here's a link to the thread where I was sharing some stuff.

https://desuarchive.org/g/thread/106170414/#106172707

and trying out qwen as an image ref for hunyuan 3d2.1

https://desuarchive.org/g/thread/106174863/#q106175342

I'll try the prompt you shared now in a moment, I'm testing the peeping prompt at the moment

Anonymous

8/8/2025, 9:40:21 AM

No.106187442

[Report]

>>106187504

A rain-soaked cyberpunk city at night, neon reflections shimmering on wet streets, Ryan Gosling as a rugged man in a sleek trench coat, pointing upward with intensity and awe, a colossal holographic Miku Hatsune dominating the skyline, dynamically dancing and singing into a glowing microphone, her teal twin-tails swirling with vibrant digital patterns, her translucent form radiating ethereal light, surrounded by pulsating data streams and musical notes, dystopian skyscrapers and flickering holographic billboards in the background, drenched in moody cyan and magenta neon hues, cinematic lighting, ultra-detailed, in the gritty, high-tech noir style of Blade Runner 2077, immersive and atmospheric.

neat, I need to llm-max prompts more often, just get the basic idea and let the model elaborate/add detail.

Anonymous

8/8/2025, 9:41:34 AM

No.106187445

[Report]

I saw a blue Prius while walking today and laughed

Anonymous

8/8/2025, 9:46:53 AM

No.106187478

[Report]

>>106185951

its like some of you guys outright refuse to read the stickies

Anonymous

8/8/2025, 9:47:44 AM

No.106187488

[Report]

>>106187264

doesn't get the hint on the panties but I think if I added "her legs are slightly spread apart" it might get it.

Anonymous

8/8/2025, 9:50:43 AM

No.106187504

[Report]

>>106187442

It's interesting, with enough patience you could remake whole movies into memes

You know someone will do this

Anonymous

8/8/2025, 9:51:55 AM

No.106187511

[Report]

>>106187604

>>106187503

wide version. almost got it but the pillow just out of nowhere lmao

Anonymous

8/8/2025, 9:51:58 AM

No.106187512

[Report]

>>106187518

there we go, slight change to the prompt request.

A rain-slicked cyberpunk city at night, neon lights casting vibrant reflections on wet pavement, Ryan Gosling as a rugged man in a sleek trench coat, gently holding hands with a life-sized holographic Miku Hatsune, her translucent form glowing softly as she smiles warmly, her teal twin-tails shimmering with intricate digital patterns, faint data streams swirling around her, dystopian skyscrapers and flickering holographic billboards in the background, bathed in moody cyan and magenta neon tones, cinematic lighting, ultra-detailed, in the gritty, high-tech noir style of Blade Runner 2077, intimate and atmospheric.

Anonymous

8/8/2025, 9:53:07 AM

No.106187518

[Report]

>>106187512

and all I asked grok (free) was: make a stable diffusion prompt for a man holding hands with a holographic Miku Hatsune who is smiling, in the style of Blade Runner 2077, with Ryan Gosling.

Anonymous

8/8/2025, 9:53:41 AM

No.106187523

[Report]

>>106187715

>>106187440

Thanks. Seems like it will probably make the same mistakes as what I linked.

One last prompt, forgive me.

>This image is a digitally drawn cartoon in a typical comic strip format. The scene is set in an art gallery, with a girl on the left side wearing a teal blazer and light brown pants, pointing to a framed painting on the wall. The painting, which is green with a yellow border, depicts a bowl of fruit including apples, grapes, and bananas, with a price tag of "$500" attached to the lower right corner. Another identical painting, identical in style and content, hangs on the wall to the right, priced at "$1500". In the foreground, two people are standing, observing the paintings. One person, a bald man with a blue plaid shirt and brown pants, is looking at the paintings with a confused expression. The other person, a woman with dark hair and a sleeveless dress, is standing behind the bald man, watching the scene with a neutral expression. The background features a beige wall with a few other paintings, and the gallery is lit with soft, even lighting. A humorous caption at the bottom of the image reads: "It is more expensive because it took the artist several weeks to paint it, while the other one was generated in 10 seconds on my computer."

Should test out text and formatting to the extreme.

Anonymous

8/8/2025, 9:56:45 AM

No.106187538

[Report]

>>106187614

>>106187423

here you go. seems to handle multiple subjects very well in a prompt. I'm satisfied with qwen-image a lot and it is a night and day improvement over flux for me

Anonymous

8/8/2025, 10:05:59 AM

No.106187596

[Report]

>>106187831

>>106187383

>here ya go

Either you misunderstood me or you are a funny guy.

Anonymous

8/8/2025, 10:06:02 AM

No.106187597

[Report]

A rain-soaked cyberpunk city at night, neon lights casting vibrant reflections on slick streets, Ryan Gosling as a rugged man in a sleek trench coat, standing captivated as he gazes at a massive billboard displaying a holographic Miku Hatsune, her translucent form reaching out toward him with a gentle, inviting gesture, her teal twin-tails glowing with intricate digital patterns, faint data streams swirling around her, dystopian skyscrapers and flickering holographic signs in the background, drenched in moody cyan and magenta neon hues, cinematic lighting, ultra-detailed, in the gritty, high-tech noir style of Blade Runner 2077, immersive and atmospheric.

cool

Anonymous

8/8/2025, 10:07:00 AM

No.106187604

[Report]

>>106187632

>>106187503

>>106187511

Something weird I've noticed with Qwen is panties often come with a thigh strap.

Anonymous

8/8/2025, 10:07:54 AM

No.106187614

[Report]

>>106187538

Yeah, it's definitely an improvement and got all the major elements. Still has some ways to go with the chess pieces and hands but that is minor.

Anonymous

8/8/2025, 10:11:09 AM

No.106187632

[Report]

>>106187604

I've noticed it does that too. But I managed to get it not do so when trying to gen magazine photoshoot photos. I'd post here but it's a blue board

>>106187369

I know no one cares but to add on, even FP32 models are loaded in FP16 unless you manually launch with --fp32-text-enc.

Kinda weird behavior desu. It definitely affects images.
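For anyone wondering how much an FP16 load can actually throw away, here is a rough sketch using only the Python standard library. It rounds a made-up weight value to half and single precision and compares the relative error. The value `0.123456789` and the helper names are assumptions for illustration; this does not touch ComfyUI or any real text encoder.

```python
import struct

def round_to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def round_to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

def rel_error(x: float, rounded: float) -> float:
    """Relative rounding error of `rounded` with respect to `x`."""
    return abs(rounded - x) / abs(x)

# Hypothetical weight value, chosen only for illustration.
weight = 0.123456789
err16 = rel_error(weight, round_to_fp16(weight))
err32 = rel_error(weight, round_to_fp32(weight))

print(f"fp16 relative error: {err16:.3e}")
print(f"fp32 relative error: {err32:.3e}")
```

Per-weight error this small does not prove the images get better or worse, it only shows that the two load modes are not numerically identical.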

>>106187649

I'd need to see more examples to really care. Good to know though.

Anonymous

8/8/2025, 10:19:52 AM

No.106187684

[Report]

>>106187330

>So with the lightx2v 2.2 workflow in the rentry, where do I set the virtual VRAM usage type thing like it was in the 2.1 workflow (the UnetLoaderGGUFDisTorchMultiGPU node)?

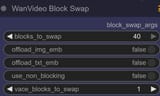

You don't, instead you set the number of "blocks" you offload to swap. See picrel.

There are a total of 40 blocks in wan, and swapping 20 allows for 81 frames generated in 720p on a 24GB card.

If you send the whole 40 to swap, then you can go above at the price of longer generation time.
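As a back-of-the-envelope sketch of the trade-off above: assuming all blocks are the same size (an assumption, not something stated here), the share of the model that stays on the GPU is just the unswapped fraction. `resident_fraction` is a hypothetical helper, not a node or a ComfyUI function.

```python
TOTAL_WAN_BLOCKS = 40  # block count quoted in the post above

def resident_fraction(blocks_to_swap: int, total_blocks: int = TOTAL_WAN_BLOCKS) -> float:
    """Fraction of transformer blocks kept on the GPU, assuming equal-sized blocks."""
    if not 0 <= blocks_to_swap <= total_blocks:
        raise ValueError("blocks_to_swap must be between 0 and total_blocks")
    return (total_blocks - blocks_to_swap) / total_blocks

for swapped in (0, 20, 40):
    print(swapped, "blocks swapped ->", resident_fraction(swapped), "of blocks on GPU")
```

More swapped blocks means more free VRAM for frames and resolution, paid for with extra transfer time on every step.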

Anonymous

8/8/2025, 10:19:57 AM

No.106187687

[Report]

>>106188682

what's the best version of the rapid all in one wan? only just now moving from 2.1 to 2.2

Anonymous

8/8/2025, 10:23:30 AM

No.106187715

[Report]

>>106187774

>>106187523

That prompt seemed to trip it up a bit. I tried cfg of 3.5, 4.5, and 5.5 with a batch of 2 seed 42. zipped all the attempts for comparison

https://files.catbox.moe/zi1948.zip
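A sweep like this can be written down as a small grid so every cfg/seed pair gets covered once. This is a minimal sketch; it assumes a batch of 2 starting at seed 42 means seeds 42 and 43, and `build_sweep` is a hypothetical helper that only builds the settings list, it does not call any sampler.

```python
from itertools import product

def build_sweep(cfgs, base_seed, batch_size):
    """Return one settings dict per (cfg, seed) pair.

    Assumes a batch uses consecutive seeds starting at base_seed.
    """
    seeds = [base_seed + i for i in range(batch_size)]
    return [{"cfg": cfg, "seed": seed} for cfg, seed in product(cfgs, seeds)]

runs = build_sweep(cfgs=[3.5, 4.5, 5.5], base_seed=42, batch_size=2)
for run in runs:
    print(run)
```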

Anonymous

8/8/2025, 10:23:32 AM

No.106187716

[Report]

>>106187802

>>106187330

>How do I plug loras into this? Do I just put them inline after the lightx2v loras (assuming I use a high/low lora)?

Yeah, add a WanVideo Lora Select Multi and connect it behind the lightx lora loader with prev_lora. One for each lora loader.

>>106187330

>What's the difference between the e4m3fn and e5m2 versions of the i2v models?

e5m2 -> use with 3000 cards

e4m3fn -> use with 4000/5000 cards
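On the numeric side, the two names just describe how the 8 bits are split: e4m3fn has 4 exponent bits and 3 mantissa bits (the "fn" means finite only, no infinity encodings), e5m2 has 5 exponent bits and 2 mantissa bits. The sketch below works out the largest finite value and the relative step of each layout. It says nothing about which card runs which format faster; the helper names are made up for illustration.

```python
def fp8_max_finite(exp_bits: int, man_bits: int, ieee_like: bool) -> float:
    """Largest finite value of an 8-bit float layout.

    ieee_like=True : the all-ones exponent is reserved for inf/NaN (e5m2).
    ieee_like=False: only all-ones exponent plus all-ones mantissa is NaN (e4m3fn).
    """
    bias = 2 ** (exp_bits - 1) - 1
    if ieee_like:
        max_exp = (2 ** exp_bits - 2) - bias
        max_frac = 2 - 2 ** -man_bits
    else:
        max_exp = (2 ** exp_bits - 1) - bias
        max_frac = 2 - 2 ** -(man_bits - 1)
    return max_frac * 2 ** max_exp

def relative_step(man_bits: int) -> float:
    """Spacing between neighbouring values relative to their magnitude."""
    return 2 ** -man_bits

e4m3fn_max = fp8_max_finite(4, 3, ieee_like=False)
e5m2_max = fp8_max_finite(5, 2, ieee_like=True)
print("e4m3fn: max", e4m3fn_max, "step", relative_step(3))
print("e5m2:   max", e5m2_max, "step", relative_step(2))
```

So e4m3fn trades range for one extra bit of precision, and e5m2 does the opposite.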

Anonymous

8/8/2025, 10:29:58 AM

No.106187757

[Report]

>>106187774

>>106187668

The image I posted may or may not have been cherrypicked but yeah, I guess this is still one area that needs work: prompts that mix text and subjects. Thanks for the hard work, I really appreciated the time and energy you spent satisfying my curiosity.

Anonymous

8/8/2025, 10:32:56 AM

No.106187770

[Report]

>>106187791

lmao

A sleek, futuristic car interior from the driver's seat perspective, Ryan Gosling gripping the steering wheel with intensity, his face lit by the soft glow of a high-tech dashboard, driving at dusk on a winding road through a lush tropical island, a massive, eerie sign reading "EPSTEIN ISLAND" in bold, neon-lit letters looming ahead, surrounded by dense jungle and turquoise ocean views, vibrant sunset casting orange and purple hues, cinematic lighting, ultra-detailed, in a suspenseful, noir-inspired style, immersive and atmospheric.

asked grok to make a prompt of him driving a car on an island with a sign.

Anonymous

8/8/2025, 10:34:05 AM

No.106187774

[Report]

>>106187831

>>106187757

Meant to quote

>>106187715

>>106187668

I wanted to post this separately.

https://www.ai-image-journey.com/2024/12/image-difference-t5xxl-clip-l.html

Use Q6_K or higher GGUF or FP8_scaled if you absolutely need to quantize your text encoders.

Anonymous

8/8/2025, 10:37:06 AM

No.106187791

[Report]

>>106187770

revision, blue sky with clouds.

Anonymous

8/8/2025, 10:39:07 AM

No.106187802

[Report]

>>106187823

>>106187716

Didn't know about the select multi node. I was putting a normal lora select before the lightx2v lora in the chain. Outputs were fucked beyond belief. They were sped up, and incoherent blobs.

>e4m3fn -> use with 4000/5000 cards

Good to know.

Last question. How does framerate factor into this one? I know before it output at 16fps, you'd interpolate to 32. But when Riflex was a thing, you'd do like 121 frames and output straight to 24fps. That still the same?

Anonymous

8/8/2025, 10:41:59 AM

No.106187823

[Report]

>>106187802

>Last question. How does framerate factor into this one? I know before it output at 16fps, you'd interpolate to 32. But when Riflex was a thing, you'd do like 121 frames and output straight to 24fps. That still the same?

No idea for the interpolation part as I'm using 16-60 fps on videos I like on topaz instead of adding it to the wf. I don't think interpolation was added in the rentry wf but I modified mine so I'm not sure.
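The frame counts and frame rates being thrown around are easier to compare as clip lengths. A minimal sketch of the arithmetic, assuming 2x interpolation inserts one new frame between each pair of existing frames; the function names are hypothetical and the 81-frame figure is the one quoted elsewhere in the thread.

```python
def clip_seconds(num_frames: int, fps: float) -> float:
    """Playback length of a clip in seconds."""
    return num_frames / fps

def interpolated_frame_count(num_frames: int, factor: int = 2) -> int:
    """Frame count after inserting (factor - 1) frames between each consecutive pair."""
    return (num_frames - 1) * factor + 1

native = clip_seconds(81, 16)                                  # 81 frames at 16 fps
smoothed = clip_seconds(interpolated_frame_count(81, 2), 32)   # 2x interpolated, 32 fps
riflex_style = clip_seconds(121, 24)                           # 121 frames straight at 24 fps

print(f"{native:.2f}s native, {smoothed:.2f}s interpolated, {riflex_style:.2f}s at 24 fps")
```

All three land at roughly five seconds, so the choice is about smoothness and gen time rather than clip length.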

Anonymous

8/8/2025, 10:42:55 AM

No.106187831

[Report]

>>106187848

>>106187774

no worries mate

>>106187596

I tried mate, but I just can't figure out how to get bite marks. If you want, share a prompt and I'll see if it makes it china enough for ya

>>106187831

I think he means putting the prompt in Chinese and seeing if it does better. The dude is dumb for saying way too little about what he wants and expecting it to fall out of the sky magically.

Anonymous

8/8/2025, 10:51:17 AM

No.106187863

[Report]

>>106187848

If that's the case, there'd be no tell in the gen as to whether it was done via a Chinese text prompt or not. But heck, I'll try that too. I'll ask deepseek to translate the prompt and run it through

Anonymous

8/8/2025, 10:52:53 AM

No.106187867

[Report]

>>106187883

>>106187503

Just gotta have it move a little!

Anonymous

8/8/2025, 10:55:31 AM

No.106187883

[Report]

>>106187867

Man I really wanna get the large models up and running (without comfy). I can only run the 5B video model. Gotta look into loading the quanted models and edit the inference code to be able to load the ggufs. If I can't get it done in a week, I'll probably cave and install comfy

Anonymous

8/8/2025, 11:00:23 AM

No.106187906

[Report]

Anonymous

8/8/2025, 11:03:44 AM

No.106187924

[Report]

>>106187264

got it with a slightly modified prompt

>Amateur photograph, a Japanese woman dressed as a maid, sleeping on the Tokyo Metro, her thighs are spread slightly apart and her panties are slightly visible.

>iPhone photo, 4K, Ultra HD.

took testing 2 seeds though. cfg 4.5, seed 43, steps 45

Anonymous

8/8/2025, 11:04:34 AM

No.106187931

[Report]

Anonymous

8/8/2025, 11:11:01 AM

No.106187960

[Report]

>>106187968

>>106187848

>>106187265

Got deepseek to translate the prompt, and this is the first seed output. 2nd one is baking.

cfg 4.5, seed 42, steps 45

original prompt

>This is a digitally drawn anime-style image featuring a Chinese warrior woman with hair buns, foggy round glasses with spirals on them, and wearing a blue dress with whale symbols all over it. She is seated at a wooden desk in a modern office setting. On the desk is part of a half-eaten hot dog and crumbs, the hot dog has a missing part that was bitten off and it's incomplete. She has a serious expression as she extends her right hand to shake hands with a person off-screen to the left. Likely an office colleague. Indicating a break or snack time. The desk is cluttered with various office supplies, including a pencil cup filled with colored pens and markers, a calculator, and a notebook. A Chinese flag is on the right side of the desk. A green potted plant is visible on the left side of the desk, adding a touch of nature to the otherwise busy workspace. The background features a large window with multiple panes, allowing sunlight to stream in and illuminate the room. Outside the window, lush green trees are visible, suggesting an office with a view of nature. The walls are adorned with bookshelves filled with neatly organized binders and books.

Anonymous

8/8/2025, 11:12:27 AM

No.106187968

[Report]

>>106187960

seed 43

deepseek translation

>这是一幅数字绘制的动漫风格图像,描绘了一位中国女武士。她梳着发髻,戴着雾面圆框螺旋纹眼镜,身穿蓝色连衣裙,裙上布满鲸鱼图案。她坐在现代办公室的木桌前,桌上有一个被咬了一半的热狗和碎屑,热狗缺了一块,显然被咬过。她表情严肃,正伸出右手与画面左侧的屏幕外人物握手,可能是同事,暗示休息或零食时间。桌上凌乱地摆放着各种办公用品,包括装满彩色笔和马克笔的笔筒、计算器和笔记本。桌子右侧有一面中国国旗,左侧有一盆绿色盆栽,为繁忙的工作空间增添了一丝自然气息。背景是一扇多格大窗,阳光透过窗户洒进房间。窗外可见茂密的绿树,表明办公室外是自然景观。墙上装饰着书架,整齐地摆满了文件夹和书籍。

Anonymous

8/8/2025, 11:20:08 AM

No.106188009

[Report]

Anonymous

8/8/2025, 11:22:03 AM

No.106188021

[Report]

>>106187075

it gives more movement but changes things from the original image, lmao

Anonymous

8/8/2025, 11:30:33 AM

No.106188055

[Report]

Anonymous

8/8/2025, 11:34:50 AM

No.106188074

[Report]

>>106187075

were these using kijai loras?

Anonymous

8/8/2025, 11:41:25 AM

No.106188102

[Report]

>>106188140

Anonymous

8/8/2025, 11:43:42 AM

No.106188119

[Report]

What is lightning in the context of wan2.2?

>update comfy yesterday

>bricked everything

>fresh install, fresh nodes, updated from cuda 12.4 to 12.8 and updated to python 3.12, installed triton 3.3, sageattention 2.2.1

>old gens before the fresh install

>2 min

>new gens after fresh install

>10 min

Fuck's sake. Does anyone know what's possibly happening here? Spent 4 hours today and got it to finally gen but it's slow as fuck

Anonymous

8/8/2025, 11:46:57 AM

No.106188140

[Report]

>>106188102

damn this is a tricky one

Anonymous

8/8/2025, 11:47:57 AM

No.106188150

[Report]

>>106188129

portable moment

ポストカード

!!FH+LSJVkIY9

8/8/2025, 11:50:32 AM

No.106188173

[Report]

>>106188900

>>106189103

>>106185803 (OP)

>made the collage again

neato :3

Anonymous

8/8/2025, 11:51:32 AM

No.106188180

[Report]

is the lightning workflow now the best workflow for i2v? does it beat kijai's workflow?

>>106188129

Same shit here. New Comfy update is completely broken. I get warnings like this when I try to video gen.

>Lib\site-packages\torch\_inductor\utils.py:1436] [0/0] Not enough SMs to use max_autotune_gemm mode

>\ComfyUI\ComfyUI_windows_portable\python_embeded\Lib\site-packages\torch\_inductor\compile_fx.py:282: UserWarning: TensorFloat32 tensor cores for float32 matrix multiplication available but not enabled. Consider setting `torch.set_float32_matmul_precision('high')` for better performance.
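The second line is only a performance warning, and the setting it suggests is a real PyTorch call. A minimal sketch of what it asks for is below. Where to put it inside a ComfyUI install, and whether it helps with the slowdown at all, is not something this thread establishes; `enable_tf32_matmul` is a hypothetical wrapper, and the import is guarded so the snippet also runs on a machine without torch.

```python
def enable_tf32_matmul() -> bool:
    """Ask PyTorch to allow TF32 tensor cores for float32 matmuls.

    Returns True if the setting was applied, False if torch is not installed.
    """
    try:
        import torch
    except ImportError:
        return False
    # "high" permits TF32 for float32 matrix multiplication, as the warning suggests.
    torch.set_float32_matmul_precision("high")
    return torch.get_float32_matmul_precision() == "high"

if __name__ == "__main__":
    print("TF32 matmul enabled:", enable_tf32_matmul())
```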

Anonymous

8/8/2025, 11:53:21 AM

No.106188191

[Report]

>>106188187

>the final model is here

Anonymous

8/8/2025, 11:54:57 AM

No.106188207

[Report]

i thought v50 would be a 2nd high res epoch but it's a merge of the one high res epoch

Anonymous

8/8/2025, 11:55:03 AM

No.106188208

[Report]

>>106188216

Anonymous

8/8/2025, 11:56:14 AM

No.106188215

[Report]

>49 and 50

Why even bother with 49?

Anonymous

8/8/2025, 11:56:17 AM

No.106188216

[Report]

>>106188208

>Means your gpu is too old

4070ti super. Not the newest or the best, but I think it should still work.

Anonymous

8/8/2025, 11:57:49 AM

No.106188228

[Report]

>>106188347

>>106188129

12.8 is for triton 3.4

Anonymous

8/8/2025, 12:00:45 PM

No.106188247

[Report]

>>106188349

>>106188183

I went back to an older version of comfyui, an early july release when it worked. It works now but I can only imagine it has something to do with custom nodes (probably wavespeed or some shit).

In your case, you might have to copy the python folders:

C:\Users\ieatassallday\AppData\Local\Programs\Python\Python31(YOUR VERSION NUMBER)\include

C:\Users\ieatassallday\AppData\Local\Programs\Python\Python31(YOUR VERSION NUMBER)\libs

To your comfyui python embedded

C:\ai\yourcomfyuifolder\python_embeded\include

C:\ai\yourcomfyuifolder\python_embeded\libs

Then again, unironically chatgpt5 helped with my issue, yours could be different
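If you would rather script the folder copy than drag things around in Explorer, something like this should do it. It is a sketch under assumptions: both paths are placeholders you pass in yourself (the real ones depend on your Python version and Comfy folder), and `copy_python_dev_folders` is a made-up helper, not part of ComfyUI.

```python
import shutil
from pathlib import Path

def copy_python_dev_folders(system_python_dir, embedded_python_dir):
    """Copy the 'include' and 'libs' folders from a system Python into an embedded one.

    Both arguments are placeholder paths supplied by the caller.
    Existing files in the destination are overwritten.
    """
    copied = []
    for name in ("include", "libs"):
        src = Path(system_python_dir) / name
        dst = Path(embedded_python_dir) / name
        if not src.is_dir():
            raise FileNotFoundError(f"missing source folder: {src}")
        shutil.copytree(src, dst, dirs_exist_ok=True)  # needs Python 3.8+
        copied.append(dst)
    return copied
```

Call it with your own system Python folder as the first argument and the `python_embeded` folder as the second.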

Anonymous

8/8/2025, 12:02:06 PM

No.106188255

[Report]

>>106188344

>the final chroma model meant for others to train on is a slopmerge and not an actual trained epoch

smoothbrained dev

Anonymous

8/8/2025, 12:04:35 PM

No.106188277

[Report]

I juyst fducking SHARTED

Anonymous

8/8/2025, 12:05:20 PM

No.106188285

[Report]

>>106188187

Yes! Finally time to retrain my v44 loras

Anonymous

8/8/2025, 12:08:15 PM

No.106188294

[Report]

>>106188705

Anonymous

8/8/2025, 12:09:09 PM

No.106188299

[Report]

sage attention fixed for qwen WHEN?????????

Anonymous

8/8/2025, 12:14:12 PM

No.106188321

[Report]

AHAHAHAHAHAHA

Anonymous

8/8/2025, 12:18:47 PM

No.106188344

[Report]

>>106188255

It is an actual epoch (v49), not two epochs though.

Anonymous

8/8/2025, 12:19:09 PM

No.106188347

[Report]

>>106188228

I'm actually retarded, I had to replace a wan node for it to work and forgot to change the settings from 20 steps back to 4, kek

Backing up this install 5 times, fuck doing that again

Anonymous

8/8/2025, 12:19:50 PM

No.106188349

[Report]

>>106188372

>>106188247

>I went back to an older version of comfyui, an early july release when it worked.

Which version is it? Have to try it if the python file copy doesn't work

Anonymous

8/8/2025, 12:20:05 PM

No.106188353

[Report]

>>106188369

Well I did some moar testing on this FP32 CLIP.

Seems to have potential imo. There is also another FP32 Illustrious CLIP floating around that I want to get around to testing.

Not shown here, I have tested another FP16 CLIP, which seems to have exhibited behavior more similar to the FP16 CLIP inside the model (don't have a huge experiment sample size for that, admittedly).

While whatever "restoration" this guy did also has a significant effect on quality most probably, I believe that the text encoder benefits from FP32 precision. Further evidenced by the change seen here when loading FP32 CLIP as FP16

>>106187649. (Conversely, the image also changes when you load the FP16 CLIP as FP32, not necessarily for the better or worse though)

Anonymous

8/8/2025, 12:23:02 PM

No.106188369

[Report]

>>106188378

>>106188353

and what are you comparing on that pic?

Anonymous

8/8/2025, 12:23:22 PM

No.106188372

[Report]

>>106188349

>https://github.com/comfyanonymous/ComfyUI/releases/tag/v0.3.44

Yeah, it's always worth a revert. Others have been having issues with the new update too, so I'm going to wait until further updates on a separate install

Anonymous

8/8/2025, 12:24:46 PM

No.106188378

[Report]

>>106188383

>>106188369

I believe it is written rather in a self-explanatory manner at the top of the image.

Anonymous

8/8/2025, 12:25:48 PM

No.106188383

[Report]

>>106188394

>>106188378

so fp16 is much better than fp32, thanks

Anonymous

8/8/2025, 12:26:19 PM

No.106188386

[Report]

>chroma-unlocked-v50.safetensors

Aaahhh shit, here we go again.

Anonymous

8/8/2025, 12:27:10 PM

No.106188390

[Report]

Say it with me guys. TWO MORE EPOCHS

Anonymous

8/8/2025, 12:27:54 PM

No.106188394

[Report]

>>106188421

>>106188383

(You) (You) (You) (You) (You)

(You) (You) (You) (You) (You)

(You) (You) (You) (You) (You)

Anonymous

8/8/2025, 12:32:06 PM

No.106188415

[Report]

Anonymous

8/8/2025, 12:32:10 PM

No.106188416

[Report]

>>106188423

How do I prevent ComfyUI from eating all the RAM when changing loras? I've tried different "unload" and "free memory" nodes, toggling smart memory, but it still eats extra 20GBs and I have to restart manually. It's unbearable.

Anonymous

8/8/2025, 12:32:40 PM

No.106188418

[Report]

Anonymous

8/8/2025, 12:33:20 PM

No.106188421

[Report]

>>106188519

Anonymous

8/8/2025, 12:33:49 PM

No.106188423

[Report]

>>106188431

>>106188416

download more ram

Anonymous

8/8/2025, 12:34:19 PM

No.106188427

[Report]

>>106188405

Yes, it's finally done, now off to train loras!

Anonymous

8/8/2025, 12:35:21 PM

No.106188431

[Report]

>>106188423

I already bought extra 32GBs just for wan.

>upgrade WAN to 2.2 in Comfy

>now every other gen crashes my PC and causes it to reboot

Alright

What the fuck is going on

I thought it was power spiking causing my 5090 to freak out at first but I've been monitoring the power usage and it draws less than gaming at peak load so that can't be it

And image generation still doesn't cause any issues

Anonymous

8/8/2025, 12:37:35 PM

No.106188444

[Report]

Anonymous

8/8/2025, 12:43:56 PM

No.106188477

[Report]

when adding the lightning i2v lora to kijai's workflow, do you have to change any weights or cfg?

Anonymous

8/8/2025, 12:44:52 PM

No.106188486

[Report]

>>106188441

Does it only happen when you are using Comfy ? As in no problems when gaming etc ?

Anonymous

8/8/2025, 12:51:12 PM

No.106188519

[Report]

>>106188646

>>106188421

>Peach

Her gem is no longer floating on her chest and there is only one brick instead of them getting spammed in classic AI slop fashion even though the prompt says "a brick"

>Lara

Her hair is worse and so is her costume, arguably, but background has improved

>Green hair girl

FP32 looks better

>Venom

No longer faded watermark and has more detail

>Peach and Rosalina

Better image. Rosalina no longer has ghost arm and deformed hand.

>Juri

Tossup, but you could argue the original is better

>Grey hair girl

Tossup, you might argue about lack of background, but prompt says nothing about it so it is not text encoder's fault

>Samus

Pure tossup

>Wizard

The ONLY one where FP32 performed undoubtedly worse

>Zelda

Tossup, but I prefer FP32 one.

So you have like 1 image where fp32 performed worse, the rest are either better or equal.

Anonymous

8/8/2025, 12:51:13 PM

No.106188520

[Report]

>>106186335

I tried but ended up like

>>106186399 said, you need an already big community/a lot of followers on RS, or start back when 1.5 released, AND also play it safe/censor yourself from anything that would get you banned from patreon

>tried to get into comfy UI

>use WAN i2v

>generated a blurry mess

I'm now #redpilled against diffusion.

Fuck this.

Anonymous

8/8/2025, 12:53:50 PM

No.106188535

[Report]

>>106188536

>>106188529

>he gave up after his first failure

i bet thats gotten you far in life

Anonymous

8/8/2025, 12:54:04 PM

No.106188536

[Report]

>>106188535

It works, it's just shit.

Anonymous

8/8/2025, 12:57:22 PM

No.106188555

[Report]

>>106188529

wan2gp link in op. comfyorg is currently destroying their ui making it as unstable and uncomfortable as possible

what is the annealed chroma v50?

Anonymous

8/8/2025, 1:04:46 PM

No.106188602

[Report]

>>106188619

>>106188588

It's a form of model optimization, from early tests this version seems to be the best

Anonymous

8/8/2025, 1:05:17 PM

No.106188608

[Report]

>>106188652

>gotoh hitori holding a guitar from the TV Anime bocchi the rock!

so this is the power of Chroma

Is there any reason why video generation has such wildly varying speeds? like sometimes it's only 30s/it and then next gen it's 80s/it.

Anonymous

8/8/2025, 1:06:36 PM

No.106188619

[Report]

>>106188602

>Tsukasa Jun art

based

Anonymous

8/8/2025, 1:09:23 PM

No.106188646

[Report]

>>106188519

by pairs

"holds a brick" in the positive - fp16 follows

"green swimsuit" in the positive - fp16 follows

"bra" in the positive - fp16 follows

etc

flawless fp16 victory

>looks worse/better

subjective, needs more samples, useless otherwise

Anonymous

8/8/2025, 1:09:57 PM

No.106188652

[Report]

>>106188608

wow dude nice gen!

why does it do this with wan video gen

Anonymous

8/8/2025, 1:12:17 PM

No.106188675

[Report]

>>106188610

the sampler has an effect. try out euler instead of unipc it's more consistent in gen times

Anonymous

8/8/2025, 1:12:52 PM

No.106188682

[Report]

Anonymous

8/8/2025, 1:13:31 PM

No.106188687

[Report]

OK I am convinced that it is an LLM instructed to troll now, well played but I am done with giving (You)s, cya.

>>106188670

You are not gonna get far without posting workflow I think.

You are probably using a wrong node somewhere.

Anonymous

8/8/2025, 1:14:36 PM

No.106188697

[Report]

Anonymous

8/8/2025, 1:15:51 PM

No.106188705

[Report]

>>106188294

my jewgyptian snow white wife

Anonymous

8/8/2025, 1:19:52 PM

No.106188731

[Report]

>>106188742

>>106188610

go into nvidia settings and set this to Prefer No Sysmem Fallback so that Comfyui will stop trying to fuck you over with normal RAM gens.

for example you might open a side application that uses 1GB of VRAM and then your gens will start trying to use normal RAM which is slow.

Anonymous

8/8/2025, 1:21:12 PM

No.106188742

[Report]

>>106188731

I mean I'm only using 28GB out of 32 on the vram department, so that's not really an issue.

Anonymous

8/8/2025, 1:32:45 PM

No.106188825

[Report]

>>106188588

>>106188603

>chromaDev samefaging again,

Stop shilling man, it's not funny, move on with your life lodestones. Chroma is dead, people here aren't using your furry shit. MOVE ON!

is it me or is chroma v50 more coherent but also more sloppy?

Anonymous

8/8/2025, 1:34:02 PM

No.106188836

[Report]

>>106188884

>>106188828

Can you post some examples? Waiting for the gguf.

Anonymous

8/8/2025, 1:34:38 PM

No.106188843

[Report]

the day of cope has arrived

Anonymous

8/8/2025, 1:34:52 PM

No.106188845

[Report]

>>106186711

I tried this and my outputs start turning red. How should I set the weights? Kijai has it 3 for high noise and 1 for low noise but I don't know if that works with the new lightning 2.2 loras.

Anonymous

8/8/2025, 1:40:24 PM

No.106188884

[Report]

Anonymous

8/8/2025, 1:42:21 PM

No.106188894

[Report]

It's still slopped...

Anonymous

8/8/2025, 1:42:34 PM

No.106188896

[Report]

>>106188903

so what happened to 1 month to cook at 1024 for chroma?

Anonymous

8/8/2025, 1:42:40 PM

No.106188899

[Report]

>Chroma still has jacked up hands.

Well, it was a good run fellas.

ポストカード

!!FH+LSJVkIY9

8/8/2025, 1:42:40 PM

No.106188900

[Report]

>>106188976

>>106188173

smell ya later <3

Anonymous

8/8/2025, 1:42:55 PM

No.106188901

[Report]

>>106188961

2.1 lightx2v > 2.2 lightning lora

At least for anime. No doubt in my mind I'm getting better results with kijai's workflow using the old lora.

The new one clearly has more 3D-like motion, which doesn't work well for anime.

Anonymous

8/8/2025, 1:43:28 PM

No.106188903

[Report]

>>106189090

>>106188896

You throw more GPU at it, then it goes faster

Anonymous

8/8/2025, 1:48:51 PM

No.106188930

[Report]

why are doomGODS always right?? hopetards continue to guzzle slop to the point of embarrassment. SDXL remains winning over 2 years later

Anonymous

8/8/2025, 1:51:04 PM

No.106188950

[Report]

>>106188441

You can hard-lock your system if you mix up model datatypes. You might see them show up as "shape" errors. Anyway, I think what happens is the GPU locks up and falls off the PCIe bus, and then the video driver gets rugpulled, and at that point your system is crashed and the kernel/windows reboots it.

Anonymous

8/8/2025, 1:51:55 PM

No.106188961

[Report]

>>106188901

yeah it's pretty ass, I'll keep using lightx2v.

ポストカード

!!FH+LSJVkIY9

8/8/2025, 1:54:07 PM

No.106188976

[Report]

>>106188900

since dubs, get one free<3

>LOVE & LOVE IS THE ONLY THING

Anonymous

8/8/2025, 2:02:07 PM

No.106189027

[Report]

>>106189060

>>106188187

>>106188603

Flux pro at home for free! Thank you lodestones

Anonymous

8/8/2025, 2:03:22 PM

No.106189030

[Report]

Anonymous

8/8/2025, 2:07:15 PM

No.106189060

[Report]

>>106189027

Nice. So our universe sits in a dew drop, well, at least it's better than it all being a simulation.

>>106188903

it went x3 faster than expected and both last versions came at the same time?

Anonymous

8/8/2025, 2:14:02 PM

No.106189103

[Report]

>>106189113

Anonymous

8/8/2025, 2:15:25 PM

No.106189113

[Report]

>>106189103

This nigga is making collage bait!

Anonymous

8/8/2025, 2:15:42 PM

No.106189118

[Report]

>>106189090

Different anon, also confused about 49+50 concurrent release. Though, I don't feel like it was getting any sharper after testing this every day

https://huggingface.co/lodestones/chroma-debug-development-only/tree/main/staging_base_4

Anonymous

8/8/2025, 2:16:49 PM

No.106189123

[Report]

>>106189683

>>106189090

AFAIK they didn't train the last epoch (v50) fully, instead they merged it with another high resolution training fork they had made.

So technically v49 is the 'true' release since it was a full 1024 resolution epoch and not merged with a fork. Of course the only thing that matters is which gives the best results.

Anonymous

8/8/2025, 2:18:19 PM

No.106189143

[Report]

>>106189388

>final chroma version

>anatomy still fucked

alright, what will be shilled next?

Anonymous

8/8/2025, 2:23:22 PM

No.106189180

[Report]

Anonymous

8/8/2025, 2:34:18 PM

No.106189256

[Report]

>chroma

this is a QWEN thread, poorfags!!!

Anonymous

8/8/2025, 2:36:19 PM

No.106189270

[Report]

>>106189696

Anonymous

8/8/2025, 2:36:49 PM

No.106189274

[Report]

same with eyes on larger images

Anonymous

8/8/2025, 2:39:23 PM

No.106189291

[Report]

>>106186926

That one is particularly nice.

Anonymous

8/8/2025, 2:40:14 PM

No.106189296

[Report]

Anonymous

8/8/2025, 2:41:19 PM

No.106189304

[Report]

>>106189351

Anonymous

8/8/2025, 2:42:21 PM

No.106189309

[Report]

>>106189327

yea, all small details seem to be fixed now, and the prompt following is great, maybe not quite qwen level, but it's also not style locked like qwen is

Anonymous

8/8/2025, 2:44:03 PM

No.106189321

[Report]

So do I grab both versions of chroma?

Anonymous

8/8/2025, 2:44:46 PM

No.106189325

[Report]

>>106185951

A 2080 Ti will suffice for most picture genning up to Illustrious

Anonymous

8/8/2025, 2:45:01 PM

No.106189327

[Report]

>>106189309

she has a different eye color and ear piercing, also long nails

Anonymous

8/8/2025, 2:45:54 PM

No.106189335

[Report]

>>106189286

Looks great! What prompts did you use to get that cinematic look?

Anonymous

8/8/2025, 2:46:59 PM

No.106189341

[Report]

>>106189372

Anonymous

8/8/2025, 2:47:24 PM

No.106189351

[Report]

>>106189304

HOLY FUGGIN SLOPPA

Anonymous

8/8/2025, 2:49:31 PM

No.106189372

[Report]

>>106189444

>>106189341

These aren't even made with the newest checkpoint though kek, doing it a disservice.

>>106189143

Seriously, why is chroma often broken for most basic ass shit?

I am getting anatomy errors that weren't common in SD1.5 days.

Did they fuck up training params so much that they destroyed base model's knowledge?

This was such a wasted opportunity to become the next big thing in local genning.

Shame.

Anonymous

8/8/2025, 2:53:34 PM

No.106189397

[Report]

>>106189628

>>106189388

can you show me an example? maybe you're using a bad sampler combo

Anonymous

8/8/2025, 2:57:02 PM

No.106189418

[Report]

>>106189683

>MFW we failed to solve the nogen negativity disease

Anonymous

8/8/2025, 2:57:37 PM

No.106189424

[Report]

bonging my tangent

So Chroma-annealed is just the new name for detail-calibrated then?

Anonymous

8/8/2025, 3:00:04 PM

No.106189444

[Report]

>>106189372

>it must be the NEWEST

>if i can recognize it, it's SHIT!!

you are autistic and annoying as fuck bro

Anonymous

8/8/2025, 3:00:16 PM

No.106189445

[Report]

>>106189471

>>106189434

I don't think so? I could not find any info on what that is

>>106189388

because it was trained at 512x512 on a lobotomized version of the already underperforming flux schnell. not only did it have to re-learn basic coherence which was lost during de-distillation, it also had to try and learn new anatomy/tags on top. chroma is a foundational model project being trained on an SDXL finetune budget. he constantly tweaks things and merges things every other epoch. the dataset kept shrinking as the epochs were 'taking too long'.

anyone who was a veteran of the "resonance cascade" furfag failbake knew what to expect with this one. 'locking in' isn't a thing, you can tell by epoch 13 whether or not a model will sort itself out. chroma could've worked if it was trained normally on a bigger dataset with more compute, but compute (money) remains the ultimate moat keeping local NSFW finetunes from ever reaching their full potential.

Anonymous

8/8/2025, 3:01:46 PM

No.106189466

[Report]

>>106189286

Nice, looks like a promotional film still from a movie ~2010

Anonymous

8/8/2025, 3:02:09 PM

No.106189471

[Report]

>>106189445

Looks like he wants to keep it a mystery or maybe he'll explain it in the new model card.

Anonymous

8/8/2025, 3:02:14 PM

No.106189473

[Report]

>replying to yourself to sound "smart"

ooffff

Anonymous

8/8/2025, 3:03:58 PM

No.106189490

[Report]

>doomfags were right again

that's it, i'm subbing to midjourney

Anonymous

8/8/2025, 3:04:41 PM

No.106189500

[Report]

any checkpoints for making looping animations/gifs/webm???

Anonymous

8/8/2025, 3:06:42 PM

No.106189522

[Report]

>>106189547

>q8 out

lessgo

Anonymous

8/8/2025, 3:09:02 PM

No.106189540

[Report]

Anonymous

8/8/2025, 3:09:50 PM

No.106189547

[Report]

>>106189522

WHERE AT BIG DAWG

Anonymous

8/8/2025, 3:10:16 PM

No.106189552

[Report]

>>106190193

QUICK someone besides the schizo make the fucking bake

Anonymous

8/8/2025, 3:11:10 PM

No.106189558

[Report]

>>106189434

My guess is 50a is a 49 detail merge with a smidge of extra training at a lower LR

Anonymous

8/8/2025, 3:12:49 PM

No.106189571

[Report]

>>106189628

>>106189388

>>106189458

Obvious samefag, stop being so pathetic

Chroma is easily the best base model for photorealism and as good as any other for art, and yes, despite being trained on a shoestring budget compared to its competition.

Like with every previous successful model, the potential comes with loras and finetunes, and this excels with loras already, super easy to train a person or style lora.

And of course it is uncensored, with understanding of genitals trained back in, and no mutilated nipples, so training NSFW loras for this will be a breeze.

Anonymous

8/8/2025, 3:14:40 PM

No.106189589

[Report]

>>106189608

I miss the R guy

Anonymous

8/8/2025, 3:16:37 PM

No.106189608

[Report]

>>106189589

he's literally in the thread still dumbass

Anonymous

8/8/2025, 3:18:23 PM

No.106189625

[Report]

I will never not be smug about the failure of chroma.

>>106189397

I delete most of the deformed slop I get but here are some that I forgot to:

https://litter.catbox.moe/kdmmggdk3wlmwaom.png

https://litter.catbox.moe/10q16jjat4sxih9w.png

https://litter.catbox.moe/258kgr18nsq123dz.png

>>106189458

Thanks for the response.

More or less what I expected to hear.

>>106189571

Stop being a schizo.

I think chroma CAN make good gens under some select circumstances but the overall package is too damaged to be worthwhile.

I can just gen NSFW on SDXL finetunes which are much faster and more reliable.