/ldg/ - Local Diffusion General

Chroma thread of disappointment

Anonymous

8/8/2025, 4:34:46 PM

No.106190466

>>106190488

Chroma thread of happiness

Anonymous

8/8/2025, 4:35:18 PM

No.106190472

Anonymous

8/8/2025, 4:35:19 PM

No.106190473

Blessed thread of frenship

ポストカード

!!FH+LSJVkIY9

8/8/2025, 4:35:29 PM

No.106190476

>>106190496

>>106190593

blessed thread of frenzone ;3

>>106189589

<3

Anonymous

8/8/2025, 4:35:46 PM

No.106190477

looks like he is gonna make a low step version then next is likely wan2.2

ポストカード

!!FH+LSJVkIY9

8/8/2025, 4:36:33 PM

No.106190483

>>106190496

>>106190689

>>106190450 (OP)

made the catalog again ;3

its like a fun game for me to see if the schizoid detects me or not muehehee

Anonymous

8/8/2025, 4:37:03 PM

No.106190486

>>106190507

>>106190464

>this epoch for sure!

>just waitmaxx bro!

>this epoch for sure!

>just waitmaxx bro!

>this epoch for sure!

>ok we are out of epochs but finetunes and loras are coming to save us!

>just waitmaxx bro!

>just wait...

>anytime now, it will be amazing!

Anonymous

8/8/2025, 4:38:03 PM

No.106190494

Chroma thread of racism

. : P 0 $ U T 0 K ā D O : .

8/8/2025, 4:38:10 PM

No.106190496

>>106193724

Anonymous

8/8/2025, 4:38:22 PM

No.106190498

>>106191173

>>106190488

no one knows what annealed is but definition wise its prob a version made for finetuning?

Anonymous

8/8/2025, 4:38:38 PM

No.106190502

>>106190517

>>106190488

>chroma-1hd

just a renamed v50

>gguf

version for poorfags who should off themselves

Anonymous

8/8/2025, 4:39:04 PM

No.106190507

>>106190486

Chroma has already been good for awhile

Tried a Chroma v44 trained lora against v49, v50, v50 annealed respectively.

Technically v49 should be best since it's 'closest' to v44, and I think it kind of is, v50 is very close and 'v50 annealed' had the worst likeness.

. : P 0 $ U T 0 K ā D O : .

8/8/2025, 4:39:24 PM

No.106190510

>>106190450 (OP)

>Rinko Kobayakawa

cute, CUUTE!

Anonymous

8/8/2025, 4:39:59 PM

No.106190517

>>106190502

okay, but no one knows what annealed is?

Anonymous

8/8/2025, 4:40:03 PM

No.106190519

>>106190509

makes sense, its gonna be the most offput from V44

does 2.2 have a fucking memory leak or something? why does it become basically non functional after 2-3 gens

Anonymous

8/8/2025, 4:40:34 PM

No.106190525

Anonymous

8/8/2025, 4:41:03 PM

No.106190530

>>106190521

64GB+ ram or it will have major issues when swapping

Anonymous

8/8/2025, 4:42:06 PM

No.106190542

>>106190560

>>106190509

okay so 50 annealed looks more realistic, got it

Anonymous

8/8/2025, 4:43:36 PM

No.106190560

>>106190542

? just more smaller details cause that was the entire point, and its HD / V50, annealed I think is one made for finetuning

Hey hey Anon, Anon here.

Quick comparison of Euler on Chroma-HD-Annealed and Chroma-HD.

Since I'm retarded, the annealed version is on the LEFT of the plots.

Got that?

Left: Chroma-HD-Annealed.safetensors

Right: Chroma-HD.safetensors

More stuff to come, surely.

Buh-bye.

Titty fairy:

https://files.catbox.moe/0uuist.png

Trash girl:

https://files.catbox.moe/f42ft0.png

Rat girl:

https://files.catbox.moe/tv35gr.png

Mixed media:

https://files.catbox.moe/jl7vfm.png

Oil painting:

https://files.catbox.moe/vfjiaj.png

Anonymous

8/8/2025, 4:45:39 PM

No.106190568

>>106190605

Anonymous

8/8/2025, 4:46:09 PM

No.106190571

Has any of you had any luck with this version of radial attention?

https://github.com/woct0rdho/ComfyUI-RadialAttn if so

- Does gen stay consistent over 10 seconds?

- Is it faster?

I have sage2.2 and its already pretty fast, just curious

Anonymous

8/8/2025, 4:48:11 PM

No.106190590

>noise? check

>nonsensical details? check

>blurry? check

>vintage analogcore y2k slop? check

it's chromapostin time!

>>106190476

nice to see you again

Anonymous

8/8/2025, 4:49:02 PM

No.106190600

>>106190648

>>106190593

said no one ever

Anonymous

8/8/2025, 4:49:04 PM

No.106190601

>>106190633

This is a popular thread I see.

Anonymous

8/8/2025, 4:49:21 PM

No.106190604

>>106190640

I thought Qwen-Image could do image editing like Kontext? Why isn't there any workflow for that?

Anonymous

8/8/2025, 4:49:21 PM

No.106190605

>>106190568

>VR

it sucks anon

unless your vision is SO shitty you cant see the pixels\lens-smear\bezel

How do I prevent ComfyUI from eating all the RAM when changing wan loras? I've tried different "unload" and "free memory" nodes, toggling smart memory, but it still eats extra 20GBs and I have to restart manually. It's unbearable.

Anonymous

8/8/2025, 4:50:27 PM

No.106190618

>>106190664

>>106190593

>tripcode-user

he never left dumbass

ポストカード

!!FH+LSJVkIY9

8/8/2025, 4:51:56 PM

No.106190633

>>106190653

>>106190601

the schizo baker just talks to himself to race to the bump limit ;3

>>106190593

<3

>>106190562

Mixed media errors out.

Anonymous

8/8/2025, 4:52:30 PM

No.106190640

>>106190655

>>106190604

read the repo

ポストカード

!!FH+LSJVkIY9

8/8/2025, 4:52:58 PM

No.106190648

>>106190600

its not that serious mate ;3

Anonymous

8/8/2025, 4:53:33 PM

No.106190653

>>106190689

>>106190633

Whatever helps you sleep at night :]

Anonymous

8/8/2025, 4:53:46 PM

No.106190654

>>106190697

>>106190615

>bro how do I modify the contents of a 20 GB file without using any memory?

Anonymous

8/8/2025, 4:53:58 PM

No.106190655

>>106190692

>>106190640

> When it comes to image editing, Qwen-Image goes far beyond simple adjustments. It enables advanced operations such as style transfer, object insertion or removal, detail enhancement, text editing within images, and even human pose manipulation—all with intuitive input and coherent output. This level of control brings professional-grade editing within reach of everyday users.

now what?

Anonymous

8/8/2025, 4:54:21 PM

No.106190662

Feels like i should pay some visits to n*pt again

Bad doggo!

Anonymous

8/8/2025, 4:54:40 PM

No.106190664

Anonymous

8/8/2025, 4:56:35 PM

No.106190676

>>106190509

Yeah the third looks the most natural out of the three.

Anonymous

8/8/2025, 4:57:34 PM

No.106190687

>>106190699

>>106190562

vfjiaj is broken too.

ポストカード

!!FH+LSJVkIY9

8/8/2025, 4:57:43 PM

No.106190689

>>106190755

>>106190653

my favorite is when he debates himself ;3

>>106190615

invest in nvme m.2

reboot entire pc in 6 seconds

>>106190483

it was the soup spoon ;3

Anonymous

8/8/2025, 4:57:57 PM

No.106190692

>>106190714

>>106190655

you skipped the relevant part

Anonymous

8/8/2025, 4:58:02 PM

No.106190694

>>106190615

>how do I fix a rat's nest of poor memory management, dependency hell and bloat?

Anonymous

8/8/2025, 4:58:22 PM

No.106190697

>>106190713

>>106190654

Are you retarded?

1. this is not how lora works

2. it modifies, not duplicates

3. it eats a few GBs after each lora change

Anonymous

8/8/2025, 4:58:35 PM

No.106190699

>>106190687

>>106190638

Maybe its my internet I dunno

Anonymous

8/8/2025, 4:58:42 PM

No.106190701

>>106191165

Anonymous

8/8/2025, 4:59:18 PM

No.106190709

>>106190716

ALERT

chroma-unlocked-v50-flash-heun.safetensors

flash version use heun 10 steps CFG=1

4 minutes ago

Anonymous

8/8/2025, 4:59:38 PM

No.106190713

>>106190789

>>106190697

What do you suppose a LoRA does you retard

Why would you think a LoRA might use memory whenever its changed? Maybe it's because it's doing math on a multi billion parameter matrix?

>>106190692

> We are thrilled to release Qwen-Image, an image generation foundation model in the Qwen series that achieves significant advances in complex text rendering and precise image editing. Experiments show strong general capabilities in both image generation and editing, with exceptional performance in text rendering, especially for Chinese.

Anything else?

Anonymous

8/8/2025, 5:00:18 PM

No.106190716

>>106190709

>flash

is this distilled

Anonymous

8/8/2025, 5:00:27 PM

No.106190721

>>106190738

mogao remains winning

Anonymous

8/8/2025, 5:01:01 PM

No.106190732

Anonymous

8/8/2025, 5:01:40 PM

No.106190738

>>106190743

>>106190721

If qwenimg wasn't slopped it would be seedream/mogao tier

>>106190738

>if local wasn't slop it would be good!

we knew this since midjourney

Anonymous

8/8/2025, 5:03:02 PM

No.106190749

>>106190743

Well I can't wait for your serious finetune you release for free.

Anonymous

8/8/2025, 5:03:19 PM

No.106190754

>>106190447

>>106190471

Thanks. Didn't expect qwen to stubbornly try to make sense of so many purely danbooru tags.

Anonymous

8/8/2025, 5:03:34 PM

No.106190755

>>106190689

What else do you like about the baker?

Anonymous

8/8/2025, 5:03:38 PM

No.106190756

>>106190743

are they still being sued

>testing simple porn i2v prompts

>no matter what, if a woman spreads her legs she begins touching her vagina

>even if i tell it specifically to not have her do that

>thats assuming it listens to the instructions regarding a bare vagina in the first place

am i fucking retarded? is it a lora issue?

Anonymous

8/8/2025, 5:04:47 PM

No.106190768

>>106190882

>>106190759

can she do something else with her hands? the devils playthings n all

Anonymous

8/8/2025, 5:05:08 PM

No.106190773

>>106190882

>>106190759

Do you know the concept of how prompts work? >Don't think about the elephant.

It does not "think". It only knows images that are associated with think and with elephants. Everything should be prompted positively, so you describe her hands doing something other than touch her vagina.

Anonymous

8/8/2025, 5:05:39 PM

No.106190777

you know the latent preview that shows in a node?

what if it were to the side of it, or above it?

do we have the knowledge to do this?

Anonymous

8/8/2025, 5:06:29 PM

No.106190789

>>106190808

>>106190713

Retard. It does not add new layers and weights.

Anonymous

8/8/2025, 5:06:52 PM

No.106190796

>>106191196

>>106190714

the editing model isn't out yet. learn to fucking read.

Anonymous

8/8/2025, 5:06:54 PM

No.106190797

>>106191196

>>106190789

Retard, when you do math on anything it must put them in memory. Are you really this programming illiterate?

x(model) * y(lora)

both must be in memory to do the calculation

>follow 2.2 guide in op

>i2v workflow works fine

>t2v doesnt

do i need extra shit the guide just doesn't mention?

Anonymous

8/8/2025, 5:08:26 PM

No.106190815

Anonymous

8/8/2025, 5:08:29 PM

No.106190816

>>106190615

if you pulled recently, it has a really comfy memory leak.

Anonymous

8/8/2025, 5:09:07 PM

No.106190824

>>106190851

>>106190808

> what is overwrite

Anonymous

8/8/2025, 5:10:07 PM

No.106190843

>>106190813

I have faith that you can figure out t2i

Just think about it, think hard

>>106190824

Think really hard about why this might be a problem if you keep changing LoRAs.

>>106190768

>>106190773

i must be retarded because my attempts at a nsfw prompt are just creating sfw content you could post on fucking tiktok.

it honestly has really solid motion it's just... missing the point?

>positive prompt

[spoiler]The woman spreads her legs, revealing her bare vagina. She has no underwear on. She uses her hands to support herself. Her toes wiggle.[/spoiler]

>negative prompt

[spoiler]色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走, camera shake, touching vagina, masturbating[/spoiler]

I want my chroma danbooru finetune, NOW

More seriously, we only managed to get an underbaked lumina 2 finetune recently, and an auraflow one later this year, what makes you think someone would finetune chroma with weeb stuff, which would cost much more than both auraflow and lumina put together?

At least for a weeb finetune I'm not holding my breath for a chroma illust, I don't know about realistic stuff, they might have more money

Anonymous

8/8/2025, 5:13:45 PM

No.106190888

>>106191073

>>106190813

>replace I2V models in model loaders with T2V ones

>replace I2V loras in lora loaders with T2V ones

>replace WanImageToVideo with EmptyLatentVideo

I can see what kind of people complain about comfyui being too much

>>106190887

Cause finetunes on those needed to make the model learn nsfw from scratch, chroma already knows that and tons of nsfw concepts so they just need like 1000 images of the style

Anonymous

8/8/2025, 5:15:50 PM

No.106190915

>>106190887

It knows everything, a simple LoRA of what's relevant to you will make it do what you want. There's an insane false expectation that any model can do anything for everything for everyone. Even your brain works this way. There is always going to be signal to noise and the only way to get hyper-competent is to FOCUS.

Anonymous

8/8/2025, 5:16:49 PM

No.106190929

>>106190937

>ram usage goes up 30gb around vae decode with qwen image

cant imagine having less than 128gb ram

>>106190929

More than 64gb is for pretentious fags

>>106190882

>positive [...] underwear

>negative [...] vagina

Anonymous

8/8/2025, 5:18:20 PM

No.106190947

>>106190988

>>106190851

So following your logic weights taking 10GBs in RAM will require another 10GBs to apply a lora in order to prevent the restoring original weights problem?

Anonymous

8/8/2025, 5:18:39 PM

No.106190956

>>106190908

>1000

actually a hundred should be enough I would think

>>106190937

64GB is bare minimum for using video models / text models

Anonymous

8/8/2025, 5:18:42 PM

No.106190957

>>106190937

routinely need more than 64gb with video gen

Anonymous

8/8/2025, 5:19:45 PM

No.106190969

>>106191015

>>106190887

auraflow and lumina guys clearly misunderstood how you are supposed to train a model

you just have to train a lora for every single artist style and sex position

Anonymous

8/8/2025, 5:20:21 PM

No.106190971

>>106190942

lets give it a whirl without that

>>106190937

>looks at wan2.2, At 8bit that is 15GB for low noise + 15GB for high noise + 12GB for T5 + 1GB for vae + a few GBs for loras

And im probably forgetting some stuff
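
Adding up the figures quoted above, as a rough sketch only. The 3 GB for loras is an assumption, since the post just says "a few GBs", and the labels are made up for readability:

```python
# Rough RAM budget for wan2.2 at 8bit, using the sizes quoted in the post above.
components_gb = {
    "low noise model": 15,
    "high noise model": 15,
    "T5 text encoder": 12,
    "vae": 1,
}
loras_gb = 3  # assumption: the post only says "a few GBs"

fixed_total = sum(components_gb.values())
with_loras = fixed_total + loras_gb

print(f"models only: {fixed_total} GB")
print(f"with loras:  {with_loras} GB")
```

That is before the OS, the browser, or anything else resident in RAM.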

>>106190947

Yes, pretty much. If your model takes 10GB in RAM and you want to safely apply a LoRA, you have two real options:

- Keep a copy of the original weights (another ~10GB) so you can restore them without reloading,

or

- Reload the base model every time before applying a new LoRA, which still means allocating that memory again.

If you don’t do either, and just keep stacking LoRAs, the math piles up and you end up with corrupted weights.

The only alternative would be some kind of tensor streaming method to apply LoRAs on-the-fly, but that’s complex, slower, and easier to break. Most systems don’t bother, it's simpler and safer to reload and no one frankly cares about someone who can't afford 64 GB of RAM.

And then you'll just bitch about how long it takes to change a LoRA.

Anonymous

8/8/2025, 5:22:16 PM

No.106190996

>>106191011

>>106190986

+ a few GB for web browser cause that shit eats ram + a few GB for OS

Anonymous

8/8/2025, 5:23:53 PM

No.106191011

>>106190986

>>106190996

oh and if you want to use the qwen prompt extender that is like 5GB more

>>106190969

Where's your dataset again with every sex position properly labeled with thousands of examples and every artist style someone could ever want to prompt also accurately labeled? Based on what you said, you must know it exists so could you link it?

Anonymous

8/8/2025, 5:24:57 PM

No.106191027

>>106191040

>>106191015

didn't chroma guy make it public? I heard he used gemini pro + whatever the dan tagger was

Anonymous

8/8/2025, 5:26:00 PM

No.106191036

>>106191047

>>106191015

im sure someone (that is not me) who is going to train all those things into chroma will be right on it

>>106191027

Holy shit you actually think that's accurate enough for some anon with a missionary position fetish where the cum is tastefully dripping from her larger than average clit and painted by J.C. Leyendecker from his 1930s grey period?

Anonymous

8/8/2025, 5:27:04 PM

No.106191042

>>106191068

you can finetune layers of chroma. it is entirely possible to do this on a commercial 24gb gpu

Anonymous

8/8/2025, 5:27:55 PM

No.106191047

>>106191036

I mean yes, I'll make a LoRA of things that are relevant to me and then I won't share it, please understand.

Anonymous

8/8/2025, 5:28:08 PM

No.106191050

>>106191040

what? it does some freaky art styles

Anonymous

8/8/2025, 5:28:33 PM

No.106191054

>>106191040

>missionary position where the cum is tastefully dripping from her larger than average clit and painted by J.C. Leyendecker from his 1930s grey period?

I can imagine the kino soul. Best gen ITT.

Anonymous

8/8/2025, 5:29:08 PM

No.106191059

>>106190882

holy fucking sloppa

Anonymous

8/8/2025, 5:29:11 PM

No.106191063

>>106191040

if you've ever used chroma you'd know this is entirely possible and quite easy. just don't be a promptlet and i don't mean that as bait, you just have to know how to prompt by using joycaption or gemini to get a rough idea of how.

Anonymous

8/8/2025, 5:29:45 PM

No.106191068

>>106191042

i will be sure to enjoy your finetune in 2 years

Anonymous

8/8/2025, 5:30:14 PM

No.106191073

>>106191352

>>106190888

this is what i get using the provided workflow in the 2.2 op. ive already tried changing the models anon.

I'm gonna sound like a dummy for saying this, but have you guys ever tried upscaling your videos? They don't just get bigger, they get sharper and better. It's amazing.

Anonymous

8/8/2025, 5:30:42 PM

No.106191081

>>106191166

>>106190988

So ok, I've applied the lora A and now there are W` (W x lora A) and W (original weights) taking 10GBs each in RAM (20GBs in total)

After that I've applied the lora B, so there are now W` (W x lora A), W , W`` (W x lora B) and W again in RAM, taking 10GBs each (40GBs in total)

Correct?

Statler/Waldorf

8/8/2025, 5:31:30 PM

No.106191089

>>106191148

>>106190882

>色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走

>spoiler

BEAHAGAHAGAH

Anonymous

8/8/2025, 5:32:08 PM

No.106191095

>he doesn't know

>>106191079

They always come out with that nasty filtered look for me, what do you use?

Anonymous

8/8/2025, 5:33:52 PM

No.106191115

>>106190942

removed that, the underwear is still present, and she's back to touching her still covered vagina for whatever reason. probably too nsfw to post even though everything is covered for context

Anonymous

8/8/2025, 5:33:53 PM

No.106191116

>>106191079

720x1280 wan is already good enough, no need for more, and if you need more, u use starlight mini with pirated topaz

Anonymous

8/8/2025, 5:35:42 PM

No.106191134

>>106191124

looks like the original video was too shit already, use another workflow

Anonymous

8/8/2025, 5:35:44 PM

No.106191135

>>106193212

Anonymous

8/8/2025, 5:36:34 PM

No.106191142

>>106191152

>>106191100

>VLC play video fullscreen

>record screen with OBS

done

Anonymous

8/8/2025, 5:37:02 PM

No.106191148

>>106191089

isnt the chinese sloppa pretty standard for negatives to not take up too much space

Anonymous

8/8/2025, 5:37:24 PM

No.106191152

>>106191329

>>106191142

put your trip back on

Anonymous

8/8/2025, 5:38:03 PM

No.106191158

>>106191169

>>106191124

Nice upscale, but how would it look on a human though ?

Anonymous

8/8/2025, 5:38:22 PM

No.106191165

>>106190701

Alright thanks.

Anonymous

8/8/2025, 5:38:25 PM

No.106191166

>>106191296

>>106191081

No, not quite. It's unlikely each LoRA keeps a full copy of the model in memory.

More realistically:

- The base model sits in RAM (10GB)

- Applying LoRA A modifies the weights in-place or overlays them, using some memory but not another full 10GB

- Applying LoRA B likely reuses the same memory used for LoRA A’s operations, unless it’s larger, in which case it may allocate a bit more

You're not stacking full model copies, but here's the catch: If you don’t reset or reload the base model before applying a new LoRA, you’re doing math on already-modified weights. That leads to cumulative errors or corrupted outputs.

So when switching LoRAs, you have to

- Unload the modified model from memory

- Reload the base model from disk

- Then apply the new LoRA(s) cleanly

And yes, that reload process is rarely 100 percent memory-efficient. You’ll probably get memory creep until the garbage collector kicks in and clears unused objects.

Something Comfy does, and you could call it a feature, is keep models dangling in memory when it can. That’s why something like Wan, assuming it fits in RAM, can be switched to instantly, and why you can go from Wan to Chroma nearly instantly as well if you've loaded the models once.
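
A toy sketch of the "keep a copy of the original weights" option described above, assuming tiny nested-list matrices in place of real tensors. `PatchedWeight` and the helper functions are hypothetical names for illustration, not ComfyUI's actual code:

```python
# Toy sketch: tiny nested-list matrices stand in for real model tensors.
# PatchedWeight is a hypothetical name, not ComfyUI's actual implementation.

def matmul(a, b):
    """Plain matrix product of two nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_delta(b, a, alpha=1):
    """Low-rank update alpha * (B @ A), same shape as the weight it patches."""
    return [[alpha * v for v in row] for row in matmul(b, a)]

def add(w, delta):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + d for x, d in zip(wr, dr)] for wr, dr in zip(w, delta)]

class PatchedWeight:
    def __init__(self, weight):
        self.weight = [row[:] for row in weight]
        self.backup = None  # full copy of the original, made on first patch

    def apply(self, delta):
        if self.backup is None:
            # This copy is the "another ~10GB" cost mentioned in the thread.
            self.backup = [row[:] for row in self.weight]
        # Always start from the clean original, never from patched weights.
        self.weight = add(self.backup, delta)

W = [[1, 0], [0, 1]]
delta_a = lora_delta([[1], [0]], [[0, 2]])  # rank-1 LoRA A
delta_b = lora_delta([[0], [1]], [[3, 0]])  # rank-1 LoRA B

layer = PatchedWeight(W)
layer.apply(delta_a)
layer.apply(delta_b)
clean_switch = layer.weight  # W + delta_b only

stacked = add(add(W, delta_a), delta_b)  # what you get without a restore
```

Here `clean_switch` contains only LoRA B's change, while `stacked` still carries LoRA A's change as well, which is the "math piles up" case. Note the backup is made once and reused, so in this sketch switching LoRAs repeatedly does not keep adding full copies.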

Anonymous

8/8/2025, 5:38:59 PM

No.106191169

Anonymous

8/8/2025, 5:39:25 PM

No.106191173

>>106190498

Makes sense to me. I've tested both a bit and I am going to stay with v50 for generation.

Anonymous

8/8/2025, 5:41:07 PM

No.106191196

>>106191246

Anonymous

8/8/2025, 5:43:42 PM

No.106191223

>>106191233

chroma bros...

Anonymous

8/8/2025, 5:45:05 PM

No.106191233

>>106191223

just train a lora

Anonymous

8/8/2025, 5:45:42 PM

No.106191238

>>106191289

>>106190908

I don't know, I think the community would rather use an sdxl model with already fucktons of characters in the model itself, and just train loras for specific character/styles, than build the missing 1M of characters and styles from scratch with loras, even if the latter is a better model

Dunno about multiple characters bleeding and style mixing with chroma, maybe a weeb finetune would beat NAI

Upgrading my vram has made me realize what insects vramlets are

Anonymous

8/8/2025, 5:47:29 PM

No.106191257

>>106191273

vramchads of today are tomorrows vramlets

Anonymous

8/8/2025, 5:47:46 PM

No.106191263

>>106191247

>t. went from 1060 3gb to 1060 6gb (laptop)

Anonymous

8/8/2025, 5:48:53 PM

No.106191273

>>106191257

Such is the circle of life

Anonymous

8/8/2025, 5:49:23 PM

No.106191282

>>106191247

Truer words have never been spoken. Vramlets are lower than the scum to be scraped off my boot.

Anonymous

8/8/2025, 5:49:52 PM

No.106191289

>>106191238

and? people still use 1.5 cause they are poor with only 4GB of vram

Anonymous

8/8/2025, 5:50:48 PM

No.106191296

>>106191327

>>106191166

> So when switching LoRAs, you have to

> - Unload the modified model from memory

> - Reload the base model from disk

> - Then apply the new LoRA(s) cleanly

But the original weights W are in memory already, right next to the modified W`?

>>106190808

>>106190851

>>106190988

So why first you claimed that these extra 20GBs eaten by ComfyUI is how it's supposed to be and now say it's a "feature"?

And how to turn off that "feature"?

Anonymous

8/8/2025, 5:53:43 PM

No.106191327

>>106191410

>>106191296

At this point I don't care. Write a patch since you are so smart. I really don't care what someone who can't afford RAM for $50 thinks.

Anonymous

8/8/2025, 5:53:47 PM

No.106191329

using grok to elaborate on simple prompts, seems to work

A sleek, futuristic car interior from the driver's seat perspective, Ryan Gosling gripping the steering wheel with quiet intensity, his face bathed in the vibrant glow of a high-tech dashboard, driving down a rain-slicked street at night, surrounded by towering neon signs in vivid pinks, blues, and greens, casting reflections on the wet pavement, a massive, bold sign with the text "LDG" in glowing white letters dominating the skyline, dystopian urban buildings in the background, cinematic lighting, ultra-detailed, in the gritty, high-tech noir style of Blade Runner 2077, immersive and atmospheric.

Anonymous

8/8/2025, 5:56:52 PM

No.106191352

>>106191468

>>106191073

I'm pretty sure you need fp8 scaled clip if you're using fp8 scaled checkpoints

So is nu chroma good or should I just kill myself

Anonymous

8/8/2025, 5:57:55 PM

No.106191361

>>106191348

Goose is a /ldg/ lurker, I knew it!

Anonymous

8/8/2025, 5:59:25 PM

No.106191371

>>106191348

there is a node called qwen extender that works good as well and runs local. Otherwise gemini is the best cloud model to use if you want to go that route, there is a node for that as well

Anonymous

8/8/2025, 5:59:28 PM

No.106191372

>>106191358

It's good, but you should still kys since you can't run it on your moms laptop

Anonymous

8/8/2025, 6:00:26 PM

No.106191380

>>106191358

yes, the details issue was fixed, it is now by far the best base model, now give it a few weeks for anime finetunes to drop though it already does it well imo

>>106191348

another one, "make a stable diffusion prompt for Ryan Gosling making a pizza with a wood stove in a bakery." became this:

A rustic yet modern bakery interior, Ryan Gosling rolling out pizza dough with focused intensity, standing beside a glowing wood-fired stove, its warm orange light casting flickering shadows, flour-dusted countertops cluttered with fresh ingredients like tomatoes, basil, and mozzarella, the air filled with the aroma of baking dough, wooden beams and exposed brick walls in the background, soft natural light streaming through large windows, ultra-detailed, in a cozy, artisanal style, warm and atmospheric.

kek

I have 12gb of VRAM and 32gb of RAM, should I bother with Wan?

Anonymous

8/8/2025, 6:03:29 PM

No.106191403

>>106191399

if you dont mind waiting 30+ mins a gen since you will be constantly loading and unloading your quanted models from the hard drive

Anonymous

8/8/2025, 6:03:40 PM

No.106191405

>>106191438

>>106191399

I did, but now I have 24gb VRAM and 80gb RAM

food for thought

Anonymous

8/8/2025, 6:04:41 PM

No.106191410

>>106191327

Yes, because you don't know how lora works.

> can't afford RAM for $50

And how will extra RAM save me from the need to restart? These 32GBs will be eaten after changing loras 6 times in the worst case. Not to mention the lack of free slots.

Anonymous

8/8/2025, 6:04:51 PM

No.106191415

>>106191442

>>106191246

thanks. No idea where it says that

Anonymous

8/8/2025, 6:05:12 PM

No.106191420

Anonymous

8/8/2025, 6:05:37 PM

No.106191425

>>106191387

Hollywood is all fake

Wan really tries to make the prompt make sense, what a trooper

Anonymous

8/8/2025, 6:06:39 PM

No.106191436

>>106191399

At q4 wan 2.1 or merged 2.2 will work almost fine.

Anonymous

8/8/2025, 6:06:48 PM

No.106191438

>>106191445

>>106191405

How do you end up with 80gb system ram ?

Anonymous

8/8/2025, 6:07:16 PM

No.106191442

>>106191481

>>106191415

Guess they have a different readme on huggingface

https://github.com/QwenLM/Qwen-Image

Anonymous

8/8/2025, 6:07:30 PM

No.106191445

>>106191474

>>106191438

32 + 32 + 8 + 8

Anonymous

8/8/2025, 6:09:53 PM

No.106191468

>>106191639

>>106191352

do you know where to find something like that? ive looked at the links in the op and im not seeing anything.

Anonymous

8/8/2025, 6:10:12 PM

No.106191474

>>106191483

>>106191445

Doesn't that fuck up timings ?

Anonymous

8/8/2025, 6:11:15 PM

No.106191481

Anonymous

8/8/2025, 6:11:41 PM

No.106191483

>>106191474

he will prob see a 10-20% decrease in speed on top of everything running at the speed of the slowest stick, but I guess that is better than not having it, ram is still far far faster than even an SSD

Anonymous

8/8/2025, 6:16:53 PM

No.106191534

>>106191542

>using woct0rdho radial attention

>have everything recommended installed (even information that should be on the github readme)

>errors

>"if X error appears just restart comfy"

>restart comfy

>radial attention working

>fuck yeah its at 75% done

>errors with no description

Well, at least his sageattention 2.2 is very good

Anonymous

8/8/2025, 6:17:33 PM

No.106191542

>>106191630

>>106191534

Are you feeling comfortable?

Anonymous

8/8/2025, 6:17:58 PM

No.106191544

>>106191548

wan is fun but kontext is fun for edits/fast meme images as well, or you can kontext edit an image to use with wan, they are all tools that do something a bit different.

green cartoon frog is sitting in a white beach chair on a sunny beach holding a beer. they are wearing a blue shirt, and red shorts. keep the frog's expression the same. the ocean is nearby, and the sky is cloudy.

source is a regular pepe, kontext q8 model

Anonymous

8/8/2025, 6:19:00 PM

No.106191548

>>106191655

kontext anon is going to commit seppuku once qwen edit drops

Anonymous

8/8/2025, 6:20:10 PM

No.106191558

>>106191553

god I hope so

Anonymous

8/8/2025, 6:20:16 PM

No.106191561

where are all the 1girls

I wonder why they didn't just release an "all in one" version of qwenimg instead of making it two separate releases. Technically you can generate images from the scratch with an editing model, right?

Anonymous

8/8/2025, 6:23:55 PM

No.106191587

>>106191625

>>106191579

because nobody would be talking about it right now

in the industry it's called a teaser, this is a business after all

Anonymous

8/8/2025, 6:24:18 PM

No.106191590

>>106191619

>>106191553

Won't he just switch to qwen ?

Anonymous

8/8/2025, 6:27:19 PM

No.106191615

>>106191579

Releasing model weights is just marketing for SaaS

See

>>106191246 DashScope, WaveSpeed and LibLib are all Chinese companies btw

Anonymous

8/8/2025, 6:27:49 PM

No.106191619

>>106191590

if only things were that simple

Anonymous

8/8/2025, 6:28:18 PM

No.106191625

>>106191637

>>106191587

???

It would generate a lot more buzz than it is currently

If they released a "complete" version right away, people would be spamming social media with "guys look at this amazing open source model that can generate pretty images AND can edit them on top of that!"

Now people will just handle the edit model as an incremental update

Anonymous

8/8/2025, 6:28:39 PM

No.106191630

>>106191542

yea all good bro

Anonymous

8/8/2025, 6:29:28 PM

No.106191637

>>106191625

you seem pretty eager

Anonymous

8/8/2025, 6:29:38 PM

No.106191639

>>106191468

Just google umt5 fp8 scaled? Don't confuse it with t5xxl though, that's for flux

Anonymous

8/8/2025, 6:30:57 PM

No.106191655

>>106193602

>>106191548

fishin

green cartoon frog is sitting on a fishing boat, holding a fishing rod. the frog is wearing a blue shirt, and red shorts. keep the frog's expression the same. the sky is cloudy.

Anonymous

8/8/2025, 6:32:04 PM

No.106191663

I hope they release a 1.1 version of Qwen-Image with less slopped outputs. It would be easy for them to do it, it's aesthetic tuning/like a lora. They likely won't though.

i'd love to see a "prompt travel" type of wan workflow, if it only does 5 seconds, have say 4 lines/prompts you enter, and it generates each one and stitches them sequentially.

yes, you can do it with ffmpeg or whatever, I mean something you can click "prompt" with and it does all of it.
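
For the ffmpeg route mentioned above, a minimal sketch of the stitching step using ffmpeg's concat demuxer. The function names and the `segment_N.mp4` filenames are made up for illustration, and it assumes every clip shares the same codec, resolution and fps so stream copy works:

```python
import shlex

def build_concat_list(clip_paths):
    """Text for ffmpeg's concat demuxer, one file entry per clip.

    Assumes the paths contain no single quotes."""
    return "".join(f"file '{p}'\n" for p in clip_paths)

def build_ffmpeg_command(list_path, output_path):
    """Stream-copy concat; assumes all clips share codec, resolution and fps."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", list_path, "-c", "copy", output_path]

clips = [f"segment_{i}.mp4" for i in range(4)]  # hypothetical per-prompt outputs
list_text = build_concat_list(clips)
command = build_ffmpeg_command("clips.txt", "stitched.mp4")

print(list_text)            # contents to save as clips.txt
print(shlex.join(command))  # command to run once clips.txt exists
```

This only joins finished clips end to end. It does nothing to carry the last frame of one segment into the next, so continuity between prompts would still need to be handled in the generation workflow.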

Anonymous

8/8/2025, 6:37:46 PM

No.106191721

>>106191579

all in one models are shittier

Anonymous

8/8/2025, 6:42:12 PM

No.106191767

>>106191688

ani has to add it. he's the only one to work on it

Anonymous

8/8/2025, 6:45:17 PM

No.106191791

>>106191688

This does exactly that, and it's one of the best extended video nodes/workflows I've seen so far:

https://github.com/bbaudio-2025/ComfyUI-SuperUltimateVaceTools/

Anonymous

8/8/2025, 6:49:54 PM

No.106191828

>>106191847

How do I filter results so I can actually download something on CivArchive? 99% are dead ends with no mirrors.

>>106191828

should be taken out of the sticky then

are there any alternatives?

>>106191847

I'll give you analternative

Anonymous

8/8/2025, 6:58:55 PM

No.106191909

>>106191946

green cartoon frog is holding a bag of potato chips that say "SIPS" on the bag in scribbled text with one hand, and his other hand is holding a potato chip. the frog is standing on a sunny beach. the frog is wearing a blue shirt, and red shorts. keep the frog's expression the same. the sky is cloudy.

Anonymous

8/8/2025, 6:59:02 PM

No.106191911

No more genjam? :(

Anonymous

8/8/2025, 6:59:40 PM

No.106191919

genjam will return

Anonymous

8/8/2025, 7:01:50 PM

No.106191941

as goonjam

Anonymous

8/8/2025, 7:02:16 PM

No.106191946

>>106191909

one more

green cartoon frog is sitting in a beach chair on a sunny beach, under a palm tree. the frog is holding a tropical drink in a margarita glass. the frog is wearing a blue shirt, and red shorts. keep the frog's expression the same. the sky is cloudy.

Anonymous

8/8/2025, 7:02:29 PM

No.106191948

>>106191954

Anonymous

8/8/2025, 7:03:18 PM

No.106191953

is there a reason t2v takes way longer than i2v?

Anonymous

8/8/2025, 7:03:22 PM

No.106191954

>>106191876

>>106191948

is this a ani/comfy erp?

Anonymous

8/8/2025, 7:04:59 PM

No.106191968

has anyone been running hunyuan world in comfyui yet?

Anonymous

8/8/2025, 7:05:55 PM

No.106191982

>>106191996

im using the wan 2.2 guide and using self forcing

https://rentry.org/wan22ldgguide. i thought 2.2 was supposed to be 24 frames per second, but the videos i generate seem to be 16 fps like wan 2.1

Anonymous

8/8/2025, 7:07:00 PM

No.106191996

>>106191982

that's not the only 2.2 model they put out

Anonymous

8/8/2025, 7:14:49 PM

No.106192079

inpaint bros.. whats our model of choice?

Anonymous

8/8/2025, 7:19:17 PM

No.106192117

invoke vs forge inpainting? anything better

ioinpaint?

krita is kinda limited in some ways but ok

Anonymous

8/8/2025, 7:19:48 PM

No.106192120

>>106192179

>Chroma still mangles feet and hands

Nice

Anonymous

8/8/2025, 7:20:52 PM

No.106192132

>>106192148

I know the best inpaint method but I won't share. Enjoy being steps behind me

Anonymous

8/8/2025, 7:21:19 PM

No.106192139

>>106192219

>my day is ruined.

Anonymous

8/8/2025, 7:22:01 PM

No.106192148

>>106192132

It's OK, this place welcomes browns too

Anonymous

8/8/2025, 7:24:11 PM

No.106192176

Anon wasn't lying

AniStudio just hard crashes when you try to gen a second picture

Oh well

Anonymous

8/8/2025, 7:24:17 PM

No.106192179

>>106192120

yeah, I noticed that too with the latest release

reducing the parameter count was a mistake

Anonymous

8/8/2025, 7:25:58 PM

No.106192199

>>106192396

how the hell are you supposed to know what to write to induce animation

>A video of a side-view running animation of a voluptuous elf-like blonde woman.

and she doesn't move.

Anonymous

8/8/2025, 7:27:48 PM

No.106192219

>>106192230

>>106192139

this time with a grok'd prompt:

A hyper-realistic scene of a frustrated man, face contorted in anger, forcefully throwing a pizza against a textured wall, sauce and cheese splattering chaotically, dimly lit rustic kitchen, moody atmosphere, dramatic lighting, cinematic composition, detailed textures, intense emotion, 4k resolution.

Anonymous

8/8/2025, 7:28:50 PM

No.106192230

>>106192254

Anonymous

8/8/2025, 7:31:52 PM

No.106192254

>>106192435

>>106192230

and a happier variation:

Anonymous

8/8/2025, 7:45:22 PM

No.106192381

cozy

Anonymous

8/8/2025, 7:46:21 PM

No.106192396

grok 2 is getting released next week, thoughts?

>>106192199

how about you post a workflow?

Anonymous

8/8/2025, 7:49:26 PM

No.106192435

>>106192254

kontext test for fun: A man holding a square cardboard box saying "LDG" in black marker. keep his expression the same.

Recently I saw a couple of gemmy webms where george floyd and that cop were put in famous scenes from movies, the whole thing looked like wonky motion transfer. I think it had a watermark of some cloud service. Any local alternatives?

I don't get it... Chroma still looks like shit!

>>106192440

wan2.2

works on 12gb vram 64gb ram

Anonymous

8/8/2025, 7:53:06 PM

No.106192476

>>106192442

pics with WF or it didn't happen

Anonymous

8/8/2025, 7:54:33 PM

No.106192494

>>106192523

>>106192442

chroma is dead, use WAN or Qwen

Anonymous

8/8/2025, 7:56:31 PM

No.106192519

how many steps for high and low sampler do you usually use for t2v?

Anonymous

8/8/2025, 7:56:41 PM

No.106192523

>>106192442

>>106192494

back for more are you?

Asking again. How do I make her punch more like a punch instead of a slow approach? I've tried words like high speed, aggressive, strength, strong, etc. in the prompt but it doesn't do much

Anonymous

8/8/2025, 8:00:23 PM

No.106192565

>>106192806

>>106192473

I know wan, but it's much more sophisticated than what those seemed to be. Almost puppet warp animation overlaid on original video but more realistic, it could spin around and stuff.

Anonymous

8/8/2025, 8:01:09 PM

No.106192575

>>106192543

what I've resorted to is asking chatgpt to write my prompts for me (you need to tell it that it's a video model for animation though, otherwise it'll give you still-image prompts).

Anonymous

8/8/2025, 8:01:23 PM

No.106192580

>>106192616

>>106192543

edit the video externally or make a lora

>>106192442

it looks like shit? just train a lora

it fucks up anatomy? just train a lora

its blurry? just train a lora

it doesnt know any anime artists? just train a lora

just train a lora

Anonymous

8/8/2025, 8:04:45 PM

No.106192616

>>106192589

>>106192580

I don't know how to train a LoRA

Anonymous

8/8/2025, 8:05:02 PM

No.106192622

>>106192635

>>106192543

speed it up and cut out frames in the middle where fist is still
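The speed-up half of that advice can be sketched with ffmpeg's `setpts` video filter. This is only an illustration: the helper name and file names are made up, and the frame-cutting half is left out.

```python
def speedup_cmd(src, dst, factor):
    """Build an ffmpeg argument list that plays src back `factor` times faster."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    # setpts rescales presentation timestamps: 0.5*PTS means twice as fast.
    pts_scale = 1.0 / factor
    # -an drops the audio track, which would otherwise fall out of sync.
    return ["ffmpeg", "-i", src,
            "-filter:v", f"setpts={pts_scale:.4f}*PTS",
            "-an", dst]


if __name__ == "__main__":
    # Hypothetical file names.
    print(" ".join(speedup_cmd("punch.mp4", "punch_fast.mp4", 2.0)))
```

ffmpeg drops frames as needed to keep the original output frame rate, so a 2x speed-up roughly halves the frame count and the duration.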

i look down at your shitty fetish

Anonymous

8/8/2025, 8:06:06 PM

No.106192635

>>106192673

>>106192622

>i look down at your shitty fetish

nigga im new to this and just trying to make reaction images

Anonymous

8/8/2025, 8:09:26 PM

No.106192673

>>106192690

Anonymous

8/8/2025, 8:10:33 PM

No.106192690

>>106192673

believe me when i say im using it for porn too but im practicing a bit on prompting and learning workflows with shit like this that i found on twitter

Anonymous

8/8/2025, 8:17:58 PM

No.106192771

>>106192790

>>106192589

none of those are issues thankfully

Anonymous

8/8/2025, 8:19:51 PM

No.106192790

>>106192771

exactly, for us chromagods they are features

>>106192440

>>106192565

ok this shit is called viggle.ai, can I do it on my computer?

Anonymous

8/8/2025, 8:23:27 PM

No.106192843

>>106192984

Anonymous

8/8/2025, 8:23:35 PM

No.106192846

>>106192933

Is there any info on what the actual minimum and maximum resolutions for Qwen are supposed to be, in terms of megapixels?

I'd hope the super weird ones on their model card (like who does 1328x1328 as square, why wouldn't it be 1344x1344 lol) aren't the only ones "officially" supported

This is all gonna matter a lot for e.g. Lora training and stuff

A 1024 base Lora wouldn't work that well at 1328

And so on
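To put those resolutions in megapixel terms, here is a quick arithmetic sketch. It says nothing about what Qwen officially supports; it only compares the square sizes mentioned above and checks which are multiples of 16 and 64 (a guess at why someone might expect 1344 over 1328).

```python
def megapixels(width, height):
    """Pixel count in millions."""
    return width * height / 1_000_000


if __name__ == "__main__":
    for side in (1024, 1328, 1344):
        print(
            f"{side}x{side}: {megapixels(side, side):.2f} MP, "
            f"multiple of 16: {side % 16 == 0}, "
            f"multiple of 64: {side % 64 == 0}"
        )
```

So a 1328 square is about 1.7x the pixel count of a 1024 square, and 1328 is a multiple of 16 but not of 64, while 1344 is a multiple of both.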

Anonymous

8/8/2025, 8:32:08 PM

No.106192928

>>106192543

(Fast movement. Quick motions:2)

Anonymous

8/8/2025, 8:32:28 PM

No.106192933

>>106193118

>>106192846

Flux works best at 1448x1448 so what's the difference

is t2i -> i2v generally better or is t2v better?

Anonymous

8/8/2025, 8:35:47 PM

No.106192984

>>106192993

Anonymous

8/8/2025, 8:36:27 PM

No.106192993

>>106193010

>>106192984

post an example of what u want to make and say the magic word

Anonymous

8/8/2025, 8:38:22 PM

No.106193010

>>106193187

Anonymous

8/8/2025, 8:40:37 PM

No.106193035

>>106193051

>>106192950

i2v is better. t2v has no use case

Anonymous

8/8/2025, 8:41:18 PM

No.106193051

>>106193058

>>106193035

so i should just generate an image with like flux then use i2v?

Anonymous

8/8/2025, 8:42:04 PM

No.106193058

>>106193067

>>106193051

do you only get one chance at this? why can't you experiment, this is supposed to be fun

Anonymous

8/8/2025, 8:42:22 PM

No.106193062

>>106192806

viggle my nuts lol

Anonymous

8/8/2025, 8:42:56 PM

No.106193067

>>106193478

>>106193058

i get plenty of chances but its obviously time consuming. just wanted to know what was best for making stupid videos or porn to post

anons lora still works just fine

Anonymous

8/8/2025, 8:44:08 PM

No.106193082

Anonymous

8/8/2025, 8:44:59 PM

No.106193094

>>106193124

the man is holding a birthday cake with candles. the top of the cake says "LDG" in red icing.

Anonymous

8/8/2025, 8:46:20 PM

No.106193118

>>106192933

No it doesn't lol, you need significant aid from ClownShark samplers to get non-deformed stuff from Flux above like 1344x1344 even

Anonymous

8/8/2025, 8:47:13 PM

No.106193124

>>106193094

/ldg/'s birthday was magical

Anonymous

8/8/2025, 8:47:23 PM

No.106193127

here's an idea for a jam, MJ refusals

Anonymous

8/8/2025, 8:48:54 PM

No.106193142

>>106193333

I saw Mayli IRL and laughed

Anonymous

8/8/2025, 8:49:37 PM

No.106193151

Anonymous

8/8/2025, 8:50:08 PM

No.106193160

>>106193179

using a source reviewbrah image without holding the pizza box:

Anonymous

8/8/2025, 8:52:15 PM

No.106193179

>>106193160

this workflow works pretty well

it's a json. not mine but I had it bookmarked.

https://gofile.io/d/faahF1

Anonymous

8/8/2025, 8:53:12 PM

No.106193187

>>106193704

>>106193010

Where is my deserved spoonfeeding?

Anonymous

8/8/2025, 8:53:42 PM

No.106193194

>>106193278

why does ani hate waldorf so much?

Anonymous

8/8/2025, 8:56:08 PM

No.106193212

>>106191135

nfs 2012 remake looking good

Anonymous

8/8/2025, 9:03:12 PM

No.106193264

Anonymous

8/8/2025, 9:04:06 PM

No.106193272

Anonymous

8/8/2025, 9:04:36 PM

No.106193274

anon posts the best gens

Anonymous

8/8/2025, 9:05:03 PM

No.106193278

>>106193756

>>106193194

when did he say that?

Anonymous

8/8/2025, 9:06:33 PM

No.106193290

>so they took away my favorite fast food item...

Anonymous

8/8/2025, 9:07:41 PM

No.106193303

>>106193318

>>106192473

>64gb ram

oh, once again i got filtered

Anonymous

8/8/2025, 9:08:16 PM

No.106193313

flying backwards

Anonymous

8/8/2025, 9:08:43 PM

No.106193318

>>106193303

post ur setup, u can probably make do with ggufs

Anonymous

8/8/2025, 9:10:54 PM

No.106193333

Anonymous

8/8/2025, 9:11:11 PM

No.106193337

>>106193520

Anonymous

8/8/2025, 9:12:57 PM

No.106193359

>>106193520

Anonymous

8/8/2025, 9:13:47 PM

No.106193371

>>106193573

i hate comfyui

>i hate comfyui

i hate comfyui

>i hate comfyui

i hate comfyui

>i hate comfyui

Anonymous

8/8/2025, 9:16:24 PM

No.106193406

Anonymous

8/8/2025, 9:20:03 PM

No.106193436

>>106193573

I LOVE comfyui

Thank you comfyanonymous I love your fennec

Anonymous

8/8/2025, 9:22:01 PM

No.106193465

>>106193573

I love to hate fennec girls

Anonymous

8/8/2025, 9:23:22 PM

No.106193473

>>106193513

I LOVE comfyui

I HATE avatarfagging

Anonymous

8/8/2025, 9:24:06 PM

No.106193478

>>106192950

>>106193067

i2v is easier to work with and less time consuming due to the source image already establishing the subject, scene, and style. Prompting is easier as well as you can just focus on describing the desired motion and camera movement.

>>106193474

who is your favorite /ldg/ avatarfag?

Anonymous

8/8/2025, 9:26:53 PM

No.106193499

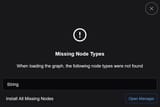

>>106193573

any idea as to why this node wont download / install? ive clicked the button to install, downloaded the pack its from, etc. and it still says its missing

Anonymous

8/8/2025, 9:28:50 PM

No.106193513

>>106193473

the new colossus

Anonymous

8/8/2025, 9:28:55 PM

No.106193516

>>106193562

go back

Anonymous

8/8/2025, 9:29:12 PM

No.106193520

>>106193337

>>106193359

Mirror's Edge SEXOOO!!!

>>106193507

some custom nodes don't support windows and only work on linux

>>106193526

so this workflow just isnt usable for me then? its one of the few t2i workflows that arent an overcrowded nightmare

Anonymous

8/8/2025, 9:32:04 PM

No.106193545

>>106193562

>>106193526

that is a lie

>>106193535

update your custom nodes, press update all, also if its a nightly only thing for some pack you might have to use that instead

Anonymous

8/8/2025, 9:32:15 PM

No.106193547

>>106193507

You need arch linux

Anonymous

8/8/2025, 9:32:49 PM

No.106193558

>>106193395

add the kijai 2.2 i2v lora with 6 steps (3/3) and it works fast too:
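A rough sketch of how a "6 steps (3/3)" split could be laid out, assuming it means 3 steps on the high-noise model followed by 3 on the low-noise model. The helper below is hypothetical and not part of any node pack; the resulting ranges are the kind of values you would enter as start/end steps on two chained advanced samplers.

```python
def split_steps(total_steps, high_steps):
    """Return (start, end) step ranges for the high-noise and low-noise passes."""
    if not 0 <= high_steps <= total_steps:
        raise ValueError("high_steps must be between 0 and total_steps")
    return {
        # The high-noise pass runs first and hands off a still-noisy latent.
        "high_noise": (0, high_steps),
        # The low-noise pass picks up at the handoff step and finishes the schedule.
        "low_noise": (high_steps, total_steps),
    }


if __name__ == "__main__":
    print(split_steps(6, 3))
```

Both passes share the same total step count, so the schedule is one continuous 6-step run handed off in the middle.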

Anonymous

8/8/2025, 9:33:08 PM

No.106193562

>>106193535

it is usable for you, just install debian

>>106193545

see

>>106193516

Anonymous

8/8/2025, 9:34:58 PM

No.106193573

>>106193584

Anonymous

8/8/2025, 9:36:02 PM

No.106193584

>>106193594

>>106193573

Sdg tranny, ignore

Anonymous

8/8/2025, 9:36:43 PM

No.106193594

>>106193584

>Sdg tranny, ignore

you just replied to him

Anonymous

8/8/2025, 9:37:02 PM

No.106193602

>>106191655

isn't that apu tho?

Anonymous

8/8/2025, 9:38:59 PM

No.106193621

>>106193650

can local into realtime already?

for example like:

https://mirage.decart.ai/

Anonymous

8/8/2025, 9:41:18 PM

No.106193650

>>106193662

>>106193621

that's a world model and they are pretty much walking simulators. also yes local has some

Anonymous

8/8/2025, 9:42:29 PM

No.106193662

>>106193686

>>106193650

no, this does video to video in realtime. no interactive or 3d elements

Anonymous

8/8/2025, 9:42:58 PM

No.106193669

>>106193678

Anonymous

8/8/2025, 9:43:47 PM

No.106193678

>>106193669

if youre trying to make chroma look good youre failing

Anonymous

8/8/2025, 9:44:07 PM

No.106193682

>>106193699

You ever look back on old gens and realize how good they were? I'm not talking about anything ancient even, just XL gens from June.

Anonymous

8/8/2025, 9:44:34 PM

No.106193686

>>106193662

oh. idk v2v is lame as fuck but there is probably something out there

Anonymous

8/8/2025, 9:45:14 PM

No.106193699

>>106193682

Newer are always better

Anonymous

8/8/2025, 9:45:50 PM

No.106193704

>>106193187

i said magical word, not to call me a subhuman

Anonymous

8/8/2025, 9:47:58 PM

No.106193724

Anonymous

8/8/2025, 9:49:37 PM

No.106193740

Anonymous

8/8/2025, 9:51:56 PM

No.106193756

>>106193778

>>106193278

anime general on /g:

Anonymous

8/8/2025, 9:53:54 PM

No.106193778

>>106193791

Anonymous

8/8/2025, 9:54:52 PM

No.106193791

Anonymous

8/8/2025, 9:55:56 PM

No.106193801

>>106193817

>now he wishes he was waldorf

truly sad

Anonymous

8/8/2025, 9:57:37 PM

No.106193817

Anonymous

8/8/2025, 9:58:23 PM

No.106193827

when this niggra not lurkin?

Anonymous

8/8/2025, 10:01:44 PM

No.106193847

him always do

17hrs a day

Anonymous

8/8/2025, 10:02:24 PM

No.106193854

>>106190882

How much VRAM do I need to run that kind of model? Does it run inside ComfyUI?

I can run SDXL no problem, but this...

ポストカード

!!FH+LSJVkIY9

8/8/2025, 10:04:01 PM

No.106193869

new theory:

if i do TOO well he mass-reports my posts

i will now add generation\workflow data to ALL posts & nothing else.

>report bombing

y i k e s

https://desuarchive.org/g/thread/106190450/

Anonymous

8/8/2025, 10:04:23 PM

No.106193874

>>106195075

Anonymous

8/8/2025, 10:11:25 PM

No.106193969

>>106194001

>>106191847

Nah, it's actually not 99%, most of the popular celeb LoRAs still work, downloaded many Belle Delphine ones this way.

Anonymous

8/8/2025, 10:14:54 PM

No.106194000

ポストカード

!!FH+LSJVkIY9

8/8/2025, 10:14:59 PM

No.106194001

>>106190450 (OP)

Tried v50 (HD) and while the result has better prompt adherence (I asked for 4 women, it gets that right), and though fuzzy the finger count seems about right, the result is very poor in terms of texture compared to regular Chroma. Doesn't happen every image, but I noticed that it's happening to other anons' gens as well. Seems undertrained/blurred in many gens. Maybe the Chroma dev foresaw this downgrade in photorealism gens due to only giving this 2 epochs, hence the 2 separate versions.

Anonymous

8/8/2025, 10:44:50 PM

No.106194345

>>106194334

is there V50 non HD? compare that too

Anonymous

8/8/2025, 10:46:31 PM

No.106194363

Anonymous

8/8/2025, 11:52:04 PM

No.106195055

>>106195075

>>106194334

Okay, so from what I understand the Chroma dev shrank the dataset size for this version. Since now if I remove the neg entirely, I get a very fuzzy looking image for that prompt even when asking for a photograph. So my guess is that I need a much stronger neg (but I tried a bunch of new words and it barely improved), and the v50/v50 annealed are strongly biased towards drawings. Here is one of my usual footfag prompts I test with no changes in neg. Yeah, that is exactly what is happening.

Anonymous

8/8/2025, 11:53:26 PM

No.106195075