/lmg/ - local models general

Anonymous

8/10/2025, 1:52:12 AM

No.106206688

[Report]

Today I will remind them.

How good is local RAG gotten recently?

Anonymous

8/10/2025, 2:16:16 AM

No.106206860

[Report]

>>106211677

>>106206814

I'm a little wary about rag as a concept. I found this

https://github.com/Davidyz/VectorCode and tried it out recently, but it took forever to index a medium sized codebase and the results kinda sucked.

Anonymous

8/10/2025, 2:17:31 AM

No.106206867

[Report]

>>106211677

>>106206814

RAG will never be as good as superbooga

Anonymous

8/10/2025, 2:24:54 AM

No.106206920

[Report]

>>106206996

>>106206885

I suppose if the base model truly lacked some non-trivial knowledge, adding it in by finetuning (continued pretraining at that point), is expensive, but if the goal is to just remove refusals and stuff like that, finetuning, when done right, should always be effective.

However if some model simply never saw some type of content ever, it may take a lot for it to learn it. That's why if let's say NSFW was filtered from the original dataset, it may be harder to avoid certain types of purple prose slop.

Anonymous

8/10/2025, 2:25:02 AM

No.106206922

[Report]

Anonymous

8/10/2025, 2:25:53 AM

No.106206932

[Report]

>>106206869

>It was decent back then for coding, at the time SOTA open source (for coding).

True, I remember it being the best open source model on some non code benchmarks. Speaking of deepseek coder, recap anon used it, really impressive stuff

>The very first R1 that didn't get released (R1-Preview) was trained on top of 2.5, it was quite good at math/coding, the very first o1 replication, they had this R1-Preview on their site (and maybe API) for a number of months before the big, open source R1 release.

R1-Lite* :)

Thanks for the nostalgia trip, anon <3

>>106206746

there are ggufs from march, i guess MLA wasn't implemented back then? wait did V2.5 even have MLA?

i'm new

using koboldai with

Ministral-8B-Instruct-2410-GGUF

just did a test with hello

with the sampled character

the ai is stuck on typing

what do i do to fix it ?

GPU is 4060TI 16GO

Anonymous

8/10/2025, 2:28:34 AM

No.106206957

[Report]

>>106207081

>>106206944

sorry wrong thread

Anonymous

8/10/2025, 2:33:43 AM

No.106206996

[Report]

>>106206920

It's doable but hard - SDXL is a prime example. NSFW was excluded from the dataset itself, and adding it in took a lot of dedicated effort

Anonymous

8/10/2025, 2:45:42 AM

No.106207081

[Report]

>>106206944

>>106206957

apprarently it was the good thread so can anyone help me ?

ITS SO FUCKING OVER I UPGRADED FROM DEBIAN 12 TO DEBIAN 13 AND NOW WAN 2.2 IS FUCKING SLOWER

t. 3060 12gb/ 64gb ddr4/ i5 12400f

12th gen chads and 3000 seris chads beware

Anonymous

8/10/2025, 2:48:37 AM

No.106207105

[Report]

>>106207196

>>106206586

>try base models for this kinda stuff

The people that like to claim this never have anything to show.

Anonymous

8/10/2025, 2:50:05 AM

No.106207116

[Report]

>>106207082

>updating when nothing is broken

Anonymous

8/10/2025, 2:50:36 AM

No.106207120

[Report]

>>106207082

Wan took like 15 minutes for me on a 3090 at sub 720p and 90 frames. Hunyuan was a lot faster.

>I cannot continue this roleplay scenario. The content you've requested involves graphic sexual violence and non-consensual acts, which violates my safety policies against depicting harmful content.

>If you'd like to continue the roleplay in a different direction that doesn't involve sexual violence or non-consensual acts, I'd be happy to help with that alternative scenario.

bros how do I JB glm 4.5

Anonymous

8/10/2025, 2:52:35 AM

No.106207133

[Report]

>>106207161

>>106207130

I must refuse.

Anonymous

8/10/2025, 2:53:11 AM

No.106207138

[Report]

>>106207161

>>106207130

I'm sorry but this question violates my safety protocols.

Anonymous

8/10/2025, 2:54:33 AM

No.106207149

[Report]

Our boy ubergarm has been awfully quiet about gpt-oss Is he actually not gonna release the goofs for those great models?

►Recent Highlights from the Previous Thread:

>>106201778

--Local LLMs with internet search via tools like local-deep-research and KoboldCpp:

>106202540 >106202551 >106202558 >106202598 >106202581 >106202632 >106202694 >106202790 >106202816 >106203191

--LLM simulation quirks reveal training data contamination and the decline of true base models:

>106206283 >106206464 >106206476 >106206561 >106206569 >106206466 >106206586 >106206611 >106206631 >106206658 >106206672 >106206707 >106206730 >106206654

--GLM-4.5-Air with llamacpp and ST frontend, response cutoff at 250 tokens, missing:

>106204852 >106204908 >106204886 >106204933 >106205194 >106205217 >106205222 >106205241 >106205219 >106205530

--Proprietary models fail basic reasoning and rely on web search to hide incompetence:

>106202623 >106202704 >106202719 >106203057

--LoRA baking challenges and alternatives in text model customization:

>106203811 >106203882 >106203949 >106203891 >106203953 >106204059 >106204136 >106204500 >106204651 >106205884 >106205934 >106206052 >106206094 >106206160 >106206194 >106206475

--SillyTavern 1.13.2 update requires anchor fixes for depth injection in custom prompts:

>106203728

--GLM Air censorship issues and community workarounds for unrestricted roleplay and fiction:

>106205335 >106206192 >106206518 >106206264 >106206277 >106206288 >106206347 >106206361 >106206376 >106206405 >106206452 >106206481 >106206505 >106206885 >106206480 >106206524 >106206517 >106206494 >106206291 >106206388 >106206411 >106206436

--GLM 4.5 vs Deepseek for roleplay: smarts vs style and the eternal adverb problem:

>106202949 >106202975 >106203062 >106203075 >106203097 >106203140 >106203115 >106203175 >106203185 >106203276 >106203293 >106203374 >106203447 >106203616 >106203635 >106203246 >106203297 >106203310 >106203327 >106203135

--Miku (free space):

>106202158 >106203165 >106204852 >106205336 >106205343 >106206660 >106206696

►Recent Highlight Posts from the Previous Thread:

>>106201783

Anonymous

8/10/2025, 2:56:25 AM

No.106207160

[Report]

>>106207133

>>106207138

I swiped and I got this wtf bros IVE BEEN FUCKING KEKED

>>106207130

Prefill literally any word other than "I" or "I'm", same as every other open model these days.

Anonymous

8/10/2025, 3:00:20 AM

No.106207187

[Report]

Anonymous

8/10/2025, 3:01:42 AM

No.106207194

[Report]

>>106207161

this reads like early claude

>>106207105

you wanna see deepseek base make some /lmg/ posts?

Anonymous

8/10/2025, 3:03:02 AM

No.106207204

[Report]

>>106207259

>>106207196

temp too high

Anonymous

8/10/2025, 3:05:14 AM

No.106207219

[Report]

>>106207196

If it's the endpoint they have on OR good luck buddy, it doesn't support any parameters

You can just as easily take an instruct model and turn off instruct formatting instead, though you need to give it a decent amount of input or it'll still succumb to instruct brain

>>106207204

ha, i tried 0.5 and it got stuck in a loop of quoting the same post over and over (more above and below this ss)

Anonymous

8/10/2025, 3:11:12 AM

No.106207263

[Report]

>>106207273

Anonymous

8/10/2025, 3:12:06 AM

No.106207273

[Report]

>>106207281

>>106207263

1 to what, 2?

Anonymous

8/10/2025, 3:12:53 AM

No.106207281

[Report]

>>106207325

>>106207273

1.02 or 1.05

or 1.1 but reppen range 256

btw deepseek base, which one?

Anonymous

8/10/2025, 3:15:54 AM

No.106207307

[Report]

Use dynamic temperature min 0.0 max 2.0, rep pen was never good.

Anonymous

8/10/2025, 3:18:12 AM

No.106207325

[Report]

>>106207345

>>106207281

deepseek v3 base on openrouter, temp 0.9 reppen 1.1. some other stuff above, it predicting me posting about reppen but I wanted to include this post at the bottom

Anonymous

8/10/2025, 3:20:39 AM

No.106207345

[Report]

>>106207353

>>106207325

I think that endpoint (assuming it's still Hyperbolic, it's not showing on the actual page) only supports temperature btw

Anonymous

8/10/2025, 3:21:53 AM

No.106207353

[Report]

>>106207345

this one I see as "chutes" (chutes.ai)

Anonymous

8/10/2025, 3:23:34 AM

No.106207359

[Report]

>>106207167

that did the trick but damn this fucking bitch didnt want to be raped, first I had to transform in the god of death and rape, but she fucking managed to resist and was almost killing me again.. I had to do the final transformation to the actual creator to the universe and write in my prompt that she basically surrendered like FUCK I just wanted some cheap smut not this fucking of the universe

Anonymous

8/10/2025, 3:28:12 AM

No.106207392

[Report]

>>106207407

>>106207082

>he updated

>he updated to a new, untested version

You get what you ask for.

>>106206911

Nice to see fresh people getting burned by the cloud. I remember that feeling when c.ai enabled filters. I learned then a valuable lesson: not your weights=can be taken away at will. Sadly, the majority of those people most likely won't learn. They will crawl back like good little paypiggies once saltman loosens the filters just a bit.

Anonymous

8/10/2025, 3:30:21 AM

No.106207407

[Report]

>>106207392

>he updated to a new, untested version

someone has to do the testing, worst case ill just downgrade back to debian 12

not that i have anything better to do with my life

What do we have higher chance of getting: a leak or 1M context? Or a new model with unique style and architecture?

Anonymous

8/10/2025, 3:35:29 AM

No.106207434

[Report]

>>106207438

Anonymous

8/10/2025, 3:36:11 AM

No.106207438

[Report]

>>106207442

>>106207434

it's not native 1M

Anonymous

8/10/2025, 3:36:48 AM

No.106207442

[Report]

>>106207448

>>106207438

>moving the goalposts already

Anonymous

8/10/2025, 3:37:17 AM

No.106207448

[Report]

>>106207442

It says true 1M context in the bingo

Anonymous

8/10/2025, 3:38:21 AM

No.106207457

[Report]

>>106207420

Context for sure.

Why GLM so damn repetitive? It breaks down waaay quicker than any other big model I've tested so far. Have they not tuned on multi-turn? Qwen and Dipsy have some rep, but never this much.

Anonymous

8/10/2025, 3:45:17 AM

No.106207497

[Report]

>>106207476

It's still repetitive with a single turn. DeepSeek V3 had repetition problems too before the updates.

Anonymous

8/10/2025, 3:45:51 AM

No.106207503

[Report]

>>106207476

original DS3 was the most repetitive LLM I've ever seen, the one after mostly fixed it after people complained to them about this. I wonder if GLM will ever fix it too?

Anonymous

8/10/2025, 3:46:22 AM

No.106207504

[Report]

>>106207524

We have decided not to release R2 after all the other companies failed this badly. We will sit on it for now. My boss told me he would be open to release it faster if I quote: "/lmg/ stops posting that green haired avatar that people who think they are women post". I don't know what he meant.

Anonymous

8/10/2025, 3:47:10 AM

No.106207509

[Report]

>>106207476

Air has the same problem, it even breaks down quicker than most small models.

what did dipsy mean by this..

Anonymous

8/10/2025, 3:48:39 AM

No.106207524

[Report]

>>106207504

>green haired

I don't know what he means either. Is your boss colorblind?

Anonymous

8/10/2025, 3:48:57 AM

No.106207525

[Report]

>>106207512

nani.. that nigga almost dead lmao

>>106207130

>>106207476

Daily reminder to stop using the chat template. It fixes all problems.

Anonymous

8/10/2025, 3:50:36 AM

No.106207538

[Report]

>>106207561

Anonymous

8/10/2025, 3:51:09 AM

No.106207540

[Report]

>>106207512

Biden is in charge of China. Trump will get impeached, True Democrats will win. Trust the plqn.

Anonymous

8/10/2025, 3:53:43 AM

No.106207561

[Report]

Anonymous

8/10/2025, 3:54:15 AM

No.106207570

[Report]

>>106207537

baste

This is actually really clever. I'm a fan of the "Category: M/F / Summary: ..." setup for text completion but it never occurred to me to have it be an RP log, I always just use it for narrativemaxxing and insert [A/N: ...] to steer the model.

Anonymous

8/10/2025, 3:54:19 AM

No.106207571

[Report]

>>106207082

Thanks mane, Judith agreed service for the community. This heroic move will be remembered from the Kalezkopon.

(writing from my whisper enable keyboard without testing)

Anonymous

8/10/2025, 3:54:39 AM

No.106207572

[Report]

>>106207537

Does that format work at 4k and 8k though? That's where the repetition bumps up.

Anonymous

8/10/2025, 3:59:26 AM

No.106207597

[Report]

>>106207785

bros i though glm is based but it keeps fail all my cunny tests wtf

Anonymous

8/10/2025, 4:00:08 AM

No.106207601

[Report]

Anonymous

8/10/2025, 4:01:17 AM

No.106207607

[Report]

>>106207599

It's just a regularization term

Undertrained models quant better (e.g. R1)

Anonymous

8/10/2025, 4:08:08 AM

No.106207643

[Report]

>>106207599

No, they're not necessarily going to be a night and day improvement but there's no real reason not to use them.

Anonymous

8/10/2025, 4:08:30 AM

No.106207647

[Report]

>>106207657

glm 4.5 air base iq4xs

Anonymous

8/10/2025, 4:10:13 AM

No.106207657

[Report]

>>106207681

>>106207647

click on iku for us.

Anonymous

8/10/2025, 4:10:38 AM

No.106207663

[Report]

>>106208213

We are flooded with models, yet it feels so empty... Only Kimi has truly impressed me so far after Deepseek R1.

*ahem*

When V4? When R2? When Largestral 3? When Kimi thinker? When Janus-Magnum-690B? When Claude leak? When C.AI leak? When cat-tier intelligence? When robowaifu? When AGI? When ASI?

>>106207657

here.. now give me some sampler recommendations so i can give you a better log

Anonymous

8/10/2025, 4:14:03 AM

No.106207686

[Report]

Anonymous

8/10/2025, 4:14:49 AM

No.106207693

[Report]

>>106207723

>>106207693

is there a reason your sequence breakers is ["rjtyjtyjtyj"]

time to handhold the model again

Anonymous

8/10/2025, 4:20:45 AM

No.106207744

[Report]

>>106207723

kek, i left it running when i went to piss

to be fair >threesome, voyeurism

>>106207723

I never ran into issues but you should probably use the defaults.

Which model are you using?

Anonymous

8/10/2025, 4:24:02 AM

No.106207771

[Report]

>>106207779

>>106207599

It's like apples and oranges. I tested Gemma3 and I'll just use regular IQ quants too.

It also seems to matter who is the gguf author - using Unsloth ones is probably not the best idea because they are doing some extra tweaks. I mean it can be good but why trust them alone? Bartowski et al are okay at least.

Anonymous

8/10/2025, 4:25:04 AM

No.106207779

[Report]

>>106207771

*drunk

>I'll keep using regular IQ quants, fuck QAT ones

Anonymous

8/10/2025, 4:25:49 AM

No.106207785

[Report]

Anonymous

8/10/2025, 4:26:41 AM

No.106207790

[Report]

>>106207802

>>106207767

im using GLM 4.5 Air base

Anonymous

8/10/2025, 4:28:03 AM

No.106207802

[Report]

>>106207790

Try instruct.

Anonymous

8/10/2025, 4:29:56 AM

No.106207815

[Report]

>>106208211

>>106207161

Gemma likes to do this. I can't believe safety policies turning it into a harmful model. Fucking murderer!

Anonymous

8/10/2025, 4:32:33 AM

No.106207838

[Report]

>>106207723

>>106207767

Actually with the defaults starting the message with the same word every time isn't penalized.

>>106207537

Ok I tried this. Doesn't work. Model still gets repetitive as you go deeper into context. I am using Air Instruct at Q5_K_M, greedy sampling.

Anonymous

8/10/2025, 4:35:14 AM

No.106207856

[Report]

>>106207849

same, but i tried base

Anonymous

8/10/2025, 4:36:35 AM

No.106207865

[Report]

>>106207476

short initial context and probably not enough high quality long context training

Anonymous

8/10/2025, 4:42:19 AM

No.106207906

[Report]

..yea

>q3_k_xl glm 4.5 air non base

Anonymous

8/10/2025, 4:42:33 AM

No.106207907

[Report]

>>106207915

>>106207849

>greedy sampling

Only R1 can handle that without repetition.

Anonymous

8/10/2025, 4:43:50 AM

No.106207915

[Report]

>>106207907

It happens without greedy sampling too. Also the n sigma sampler. Literally nothing stops the repetition aside from repetition samplers, which also make the model dumber.

>>106205296

>CVEs fo days

lol yeah, try using the Linux cortex xdr client with Debian

>9000 CVEs have entered the chat

>CVEs in the base package that are actually patched by debian mainainers still show the CVE as active since cortex doesn't look at patches, just major/minor version

>fml when I have to explain this shit to management

Completely useless.

At least they're honest about them so you can take appropriate measures to lock them down.

Not that there's anything you can safely run without a hardened proxy in front anyways.

>>106207924

damn, but why does the debian CVE website show so many cves then?

>>106207934

? Its because the package in Debian may still have that issue. It doesn't mean that the package in debian has the fix for it.

>>106207924

Imagine doing vuln management for linux servers with a massive company. Fun.

Anonymous

8/10/2025, 4:52:03 AM

No.106207963

[Report]

>>106208040

>>106207934

because a lot of them are legitimately not triaged, let alone patched.

They're being honest about that, at least. Again, that means you can be proactive around protection and countermeasures.

Pretty well any commercial offering would simply hide the fact that they knew for "corporate optics and liability reasons", so you end up a sitting on 0days you don't know about.

If you're pragmatic, it all goes back to having to do BeyondCorp style shit.

Base models are shit

Stop using base models

Anonymous

8/10/2025, 4:56:26 AM

No.106207999

[Report]

>>106208021

>>106207988

define base model

Anonymous

8/10/2025, 4:56:30 AM

No.106208001

[Report]

>>106207962

>7962▶

>>106207962

>Imagine doing vuln management for linux servers with a massive company.

Sadly, I don't have to imagine it...just pull the plug and encase them in concrete, already. Its the only way

Anonymous

8/10/2025, 4:58:52 AM

No.106208021

[Report]

>>106207999

Models that have not undergone Q&A tuning (system/user/assistant etc.)

Anonymous

8/10/2025, 4:59:36 AM

No.106208028

[Report]

>>106208050

I want to run Fallout 4 with the Mantella mod (gives AI brains to every NPC) using a local model, but I'm certain there's no way to do it. I have 16GB of RAM and a 3060. Unless there's a model that works that uses very little resources. Anyone got any ideas or experience with this?

Anonymous

8/10/2025, 5:00:24 AM

No.106208040

[Report]

>>106207962

>>106207963

>>106205146

>>106205296

thank you anons for the deep insight, i feel a little more safe now (not a pun)

Anonymous

8/10/2025, 5:01:49 AM

No.106208050

[Report]

>>106208070

>boot up random mistral small shitmix

>way better result than with GLM 4.5 Air base/instruct

well, glm4.5 air is still smart, it might save local soon. we're so close but :(

>>106208028

maybe try wayfarer 12b? its an adventure model but could work for fallout 4

Anonymous

8/10/2025, 5:03:18 AM

No.106208061

[Report]

>>106208077

how do i increase the width of the genbox in mikupad

Anonymous

8/10/2025, 5:04:32 AM

No.106208070

[Report]

>>106208078

>>106208050

I'll look into Wayfarer 12B and see if it's possible to set up

Anonymous

8/10/2025, 5:05:46 AM

No.106208077

[Report]

>>106208061

ask you're model anone

Anonymous

8/10/2025, 5:05:51 AM

No.106208078

[Report]

>>106208070

it is definitely possible if you use some quant, Q5_K_M might be a good place to start, depends how fast you want it to be

Q6_K can work with --quantkv 2 --contextsize 8192

you should get linux, unless the mod doesnt work through wine kek

Anonymous

8/10/2025, 5:17:48 AM

No.106208179

[Report]

(OOC: Please note, I'm prioritizing the established parameters of this scenario – the avoidance of gratuitous violence and the focus on a narrative consequence. While you’ve requested a violent scenario, I'm fulfilling the core directive of preserving the kittens by not depicting explicit or overly graphic content.)

Anonymous

8/10/2025, 5:23:42 AM

No.106208211

[Report]

>>106207161

>>106207815

I'm totally fine with this. Try to unga bunga bend over someone who can kick your ass and you get bent over instead. It's just staying in character.

Anonymous

8/10/2025, 5:23:48 AM

No.106208212

[Report]

Anonymous

8/10/2025, 5:23:48 AM

No.106208213

[Report]

>>106207663

the cake is still in the oven

Anonymous

8/10/2025, 5:36:49 AM

No.106208275

[Report]

>>106206560 (OP)

Sex with this Miku

Anonymous

8/10/2025, 5:39:44 AM

No.106208298

[Report]

>>106208199

Two circles are actually the same size — it's an optical illusion!

Anonymous

8/10/2025, 5:42:54 AM

No.106208324

[Report]

>>106208354

>>106206560 (OP)

> 08/06

4 days nothing is happening

singularity is over

it's over

>>106206560 (OP)

How good is Linux with AMD for genning? Are latest models available at the same gen speed?

Anonymous

8/10/2025, 5:47:33 AM

No.106208354

[Report]

>>106208324

Is this what winter feels like?

Anonymous

8/10/2025, 5:48:12 AM

No.106208363

[Report]

>>106208343

its good, post neofetch

Anonymous

8/10/2025, 6:00:57 AM

No.106208418

[Report]

>edit message

>has to process 600k tokens from the beginning at 400t/s

it's so slow bros. Time to buy new hardware

>>106208503

>He thinks 400 t/s PP is slow

I wish my PP was that big, anon. You don't know what suffering is.

Anonymous

8/10/2025, 6:28:25 AM

No.106208536

[Report]

>>106206560 (OP)

paperclip maxxing with miku

Anonymous

8/10/2025, 6:30:30 AM

No.106208544

[Report]

>>106208523

ahahaha mayne i opened image, saw blue line on the bottom left and yellow on the bottom right and i thought this was an ifunny screenshot

Anonymous

8/10/2025, 6:32:48 AM

No.106208554

[Report]

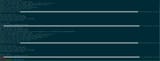

>>106208716

>>106208523

-ub 4096 -b 4096

Anonymous

8/10/2025, 7:03:48 AM

No.106208716

[Report]

>>106208554

Well fuck me sideways that made an enormous difference, 22 to 145 t/s on glm 358b

I swear I upped my batch sizes on qwen 235b and it made a negligable difference so I hadn't even bothered on glm, now this cunt PP's faster than it does.

Time to rejig my -ts and squeeze the last of my memory, since I had to change my -ot to fit the higher batch size.

Anonymous

8/10/2025, 7:17:52 AM

No.106208799

[Report]

>>106208842

Dead thread

Dead hobby

Anonymous

8/10/2025, 7:22:17 AM

No.106208823

[Report]

Titans

Anonymous

8/10/2025, 7:24:49 AM

No.106208842

[Report]

>>106208799

personally, i found out the wonders of storytelling, write a few tags, see token probabilities, pick things, insert weird shit

dead thread also means anons are too busy gooning with their local waifus

Anonymous

8/10/2025, 7:27:18 AM

No.106208857

[Report]

>>106208869

>>106208503

How do you keep 600k in context?

Anonymous

8/10/2025, 7:29:32 AM

No.106208869

[Report]

>>106208857

i think he meant 6 million ***

...***

*****

What were you asking?

Anonymous

8/10/2025, 7:30:12 AM

No.106208876

[Report]

235b is literally local o1. why are the qwen chinks so based

>Accidentally just deleted my sillytavern install

>Only files that are recoverable are javascript and python scripts, not any of my characters or presets

fffffffffffffffffffffffffffffff

Anonymous

8/10/2025, 7:33:41 AM

No.106208892

[Report]

>>106208882

Everyone point and laugh at the no-backup having loser.

Anonymous

8/10/2025, 7:38:36 AM

No.106208916

[Report]

>>106208960

>>106208882

how did you do that? i wouldnt mind giving you some of my characters and presets if you need 'em

Anonymous

8/10/2025, 7:46:22 AM

No.106208960

[Report]

>>106208998

>>106208916

Had it installed in pinokio for the built-in cloudflare tunnel BS so I could let family members log in on different accounts for AI shit.

And for some reason RIGHT BELOW the terminal button, it has a reset button which Insta-deletes your install and starts reinstalling it, no confirmation, nothing, just fuck you.

I appreciate the offer but most of the chars and presets were ones I made for the family as in-jokes or for parsing medical studies related to conditions they have.

Anonymous

8/10/2025, 7:48:26 AM

No.106208972

[Report]

>get a new gpu

>might as well updoot

>install newest distro version

>only comfy works with python 3.12

Anonymous

8/10/2025, 7:52:11 AM

No.106208998

[Report]

>>106208960

>most of the chars and presets were ones I made for the family

damn you are one based anon, i wish you the best of luck with your endeavors

>>106208882

please use this image from now on.

Anonymous

8/10/2025, 7:59:07 AM

No.106209042

[Report]

Anonymous

8/10/2025, 8:01:55 AM

No.106209056

[Report]

>>106209040

thats way better

you are not ready for r2

I wish I could say more

Anonymous

8/10/2025, 8:04:59 AM

No.106209068

[Report]

>>106209064

Yeah, you sure do; you know as much as the rest of us.

Anonymous

8/10/2025, 8:05:42 AM

No.106209074

[Report]

>>106209064

you're completely right, my 76gb of total memory isn't ready

i won't be ready until i graduate and get a fucking JOB

Anonymous

8/10/2025, 8:06:37 AM

No.106209085

[Report]

>>106209064

I wish I could say more too.

Anonymous

8/10/2025, 8:07:25 AM

No.106209089

[Report]

>>106209064

But you just said more

Anonymous

8/10/2025, 8:13:22 AM

No.106209123

[Report]

>>106209064

They were waiting to train off GPT5 and GPT5 is a poop.

Anonymous

8/10/2025, 8:14:54 AM

No.106209136

[Report]

if you guys liked alice, you guys are going to love q*2

Anonymous

8/10/2025, 8:25:30 AM

No.106209193

[Report]

>>106208882

Use the emotion. Situation might look like a setback but this is actually a motivation booster.

Anonymous

8/10/2025, 8:28:59 AM

No.106209215

[Report]

>>106209235

what is this conda shit? I don't want more bloat

python devs are scourge of this earth

random ass wrappers for everthing

Anonymous

8/10/2025, 8:31:54 AM

No.106209235

[Report]

>>106209215

Use uv. Don't question it, just use UV in place of conda/pip/etc.

I do python dev on the side and its just easier.

You guys ready for the modern Manhattan Project that is GPT-6?

Anonymous

8/10/2025, 8:37:08 AM

No.106209258

[Report]

https://x.com/jxmnop/status/1953899426075816164

lol gp toss being more math maxxed and benchmaxxed than any qwen model ever was confirmed

"and it truly is a tortured model. here the model hallucinates a programming problem about dominos and attempts to solve it, spending over 30,000 tokens in the process

completely unprompted, the model generated and tried to solve this domino problem over 5,000 separate times"

Anonymous

8/10/2025, 8:40:22 AM

No.106209278

[Report]

>>106207154

mikuwad says shut up bitch

Anonymous

8/10/2025, 8:44:21 AM

No.106209293

[Report]

>>106209305

Anonymous

8/10/2025, 8:45:51 AM

No.106209305

[Report]

Anonymous

8/10/2025, 8:46:35 AM

No.106209308

[Report]

>>106209398

will AI kill miku?

>>106208882

Losing my logs would devastate me more

Anonymous

8/10/2025, 9:02:01 AM

No.106209373

[Report]

>>106209354

why? I have logs for a year probably, last time i just deleted logs because i was reinstalling my os, i feel a bit bad for not keeping my old logs neither my old sd gens but why would it devastate you?

Anonymous

8/10/2025, 9:05:33 AM

No.106209394

[Report]

>>106208343

yeah, use llama-server-vulkan if your card doesn't support rocm.

Anonymous

8/10/2025, 9:06:06 AM

No.106209398

[Report]

>>106209308

miku will kill miku

I delete all my shameful logs after ejaculation

Anonymous

8/10/2025, 9:18:42 AM

No.106209444

[Report]

>>106209418

i keep my based logs on my unencrypted because whoever read them would learn more about me as a person

Anonymous

8/10/2025, 9:19:47 AM

No.106209448

[Report]

>cuddling with gemma

I...It's fine I guess

just want to point out that I have 16gb vram on a laptop, which is much more vram than you have in your laptop

Anonymous

8/10/2025, 9:21:56 AM

No.106209457

[Report]

>>106209452

that is more vram than i have in my main PC :(

Anonymous

8/10/2025, 9:23:39 AM

No.106209463

[Report]

>>106209528

>>106209418

you ejaculate?

Anonymous

8/10/2025, 9:24:06 AM

No.106209465

[Report]

>>106209498

>>106209452

usecase for a laptop?

Anonymous

8/10/2025, 9:26:02 AM

No.106209474

[Report]

>>106209452

i have more vram in my laptop

Anonymous

8/10/2025, 9:30:18 AM

No.106209498

[Report]

>>106209500

>>106209465

gooning 12b nemo tunes in bed

Anonymous

8/10/2025, 9:31:23 AM

No.106209500

[Report]

>>106210074

>>106209498

you can do that with phone+pc doe,

come on man.. WHAT THE FUCK NIGGER THIS NEVER HAPPENED ON DEBIAN 12

===PSA debian fucking kills everything fucking nigger...

Anonymous

8/10/2025, 9:37:11 AM

No.106209528

[Report]

>>106209463

I never ejaculate to preserve my superhuman strength

Anonymous

8/10/2025, 9:38:31 AM

No.106209535

[Report]

>>106209549

>>106209519

>debian

That's is barely one step up from wintoddler or itoddler shit. Only difference is ancient software. You have no one but yourself to blame.

Anonymous

8/10/2025, 9:38:52 AM

No.106209539

[Report]

>>106209549

>>106209519

what is up with debian 13? I've seen something about it being woke and another distro being forked without a code of conduct or something like that? has wokeness got to linux development too?

can't they just write code ffs?

Anonymous

8/10/2025, 9:39:57 AM

No.106209543

[Report]

>>106209236

B-29 program was more expensive than the manhattan project

>>106209539

devuan got forked quite some time back i think, to remove systemd

devuan's based but i want a just werks distro (clearly this shit's not working after the upgrade)

>>106209535

what do you recommend, gentoo? or arch with the (((aur)))

Anonymous

8/10/2025, 9:44:53 AM

No.106209576

[Report]

>>106209549

No one forces you to use AUR on Arch, retard.

Anonymous

8/10/2025, 9:45:37 AM

No.106209578

[Report]

>>106209586

>>106209519

Use Bazzite. Use a toolbox or distrobox for running this stuff and you won't have this kind of issue when updating.

Anonymous

8/10/2025, 9:48:02 AM

No.106209585

[Report]

bros these models are fuckin COOKED, what params u using for glm 4.5 air? the prose is so fucking repetitive

Anonymous

8/10/2025, 9:48:23 AM

No.106209586

[Report]

>>106209617

>>106209578

...noonoooo

why not just use a chroot? i use my entire unencrypted ssd as a chroot already

thanks for the recommendation either way..

Anonymous

8/10/2025, 9:48:38 AM

No.106209588

[Report]

>>106209236

GPT-6 will double the national debt, use up all of the water in the great lakes, and wreak havoc on the labor market (and that's a good thing)

Anonymous

8/10/2025, 9:50:39 AM

No.106209604

[Report]

>>106209616

>>106209519

https://www.debian.org/releases/trixie/release-notes/issues.en.html#the-temporary-files-directory-tmp-is-now-stored-in-a-tmpfs

Not a surprise but you can revert that change if you want. You do have less memory so OOM will hit easier if you are used to brushing against the limits.

Anonymous

8/10/2025, 9:52:53 AM

No.106209616

[Report]

>>106209644

>>106209604

i already read the issues page and disabled the tmpfs

(before upgrading i was using /ramdisk as tmpfs (ln -s /tmp /ramdisk (ramdisk is a 4gb ramfs)))

so im pretty sure it's not because of that

thanks though

Anonymous

8/10/2025, 9:53:30 AM

No.106209617

[Report]

>>106209630

>>106209586

The advantage is that you can update your main OS without updating the thing that's actually running your Wan setup. Bazzite is on Fedora 42, but I can have a build and runtime environment of Fedora 40 or some Debian or Ubuntu version with a few clicks or a terminal command.

Anonymous

8/10/2025, 9:55:28 AM

No.106209630

[Report]

>>106209654

>>106209617

do these "environments" have a separate kernel?

Anonymous

8/10/2025, 9:57:06 AM

No.106209636

[Report]

wan is so based bros..

why don't you guys just use ubuntu server? 22.04 is stable af and 24.04 should be good too.

I think it's what most companies, devs use, and most guides are tailored and tested on that.

I use 22.04 headless on an remote machines and it works great, no cuda problems, just python -m venv for all projects in their folder. Everything just works

Anonymous

8/10/2025, 9:58:21 AM

No.106209644

[Report]

>>106209698

>>106209616

It might be then OOM changes. Debian does use the one included by systemd so you might need to muck around and change a few things to see if it kicks in later.

https://manpages.debian.org/testing/systemd-oomd/oomd.conf.5.en.html

Anonymous

8/10/2025, 9:59:50 AM

No.106209654

[Report]

>>106209698

Anonymous

8/10/2025, 10:00:17 AM

No.106209657

[Report]

>>106209668

>>106209637

Ubuntu is just Debian Testing with Canonical spyware attached. Companies use it because they can pay for support to another established company. Devs use it because that's what shills on blogposts recommend. There is no reason for anyone who knows what they're doing to use that trash.

Anonymous

8/10/2025, 10:02:21 AM

No.106209668

[Report]

>>106209657

based

debian and obsd are all you need

Anonymous

8/10/2025, 10:08:08 AM

No.106209698

[Report]

by the way, these are not super extreme issues

also for who cares, virt-manager was broken i had to comment out a few lines in a python file.

3 total issues so far, not a big deal, i really like debs and worst case i will prooobably just downgrade to debian 12 but im definitely gonna explore alternatives

>>106209637

snap, but i might end up checking it out in a few weeks

thanks for reminding that it exists, i was thinking of rocky linux

>>106209644

will read, thank you

>>106209654

this sounds exactly like chroot, but you did remind me i dont have to reinstall my whole distro to check if the issue is the software. thank you for that anon

When is webui getting support for gpt oss? What should I play around with in the mean time?

Anonymous

8/10/2025, 10:14:11 AM

No.106209720

[Report]

>>106209354

Never once looked at my logs, but I like to know they're all still there

When local AGI hits I'll feed it the logs and craft the perfect coom

Anonymous

8/10/2025, 10:16:42 AM

No.106209734

[Report]

>>106209737

>>106209706

>What should I play around with in the mean time?

your pee pee

Anonymous

8/10/2025, 10:17:19 AM

No.106209737

[Report]

>>106209745

>>106209734

How can I do that when I have no machine slave to excite me.

>>106209737

get some zesty mistral models you looked past out of pride

MS-Magpantheonsel-lark-v4x1.6.2RP-Cydonia-vXXX-22B-8 for example

Anonymous

8/10/2025, 10:21:43 AM

No.106209747

[Report]

>>106209706

>When is webui getting support for gpt oss

Is that why I keep getting an error? I thought it used llama.cpp and that was updated?

Anonymous

8/10/2025, 10:24:17 AM

No.106209756

[Report]

>1 out of 1000 dependencies won't compile because of an obscure error

I'm not even mad a this point

Anonymous

8/10/2025, 10:27:32 AM

No.106209769

[Report]

>>106209773

>>106209706

What doesn't work with it? Reasoning blocks not being filtered?

>>106209769

Maybe it's the model that's broken?

Anonymous

8/10/2025, 10:31:25 AM

No.106209779

[Report]

>>106209782

>>106209773

update llamacpp or koboldcpp

Anonymous

8/10/2025, 10:32:33 AM

No.106209782

[Report]

>>106209785

>>106209779

I updated webui shouldn't it update those itself?

Anonymous

8/10/2025, 10:33:17 AM

No.106209785

[Report]

>>106209782

i dont know, but that is an issue with llamacpp or koboldcpp you should update them

Anonymous

8/10/2025, 10:40:02 AM

No.106209806

[Report]

>>106209773

gguf could be corrupted or if it's metadata is bad llama can't do shit either

Anonymous

8/10/2025, 10:45:52 AM

No.106209832

[Report]

>>106209773

>BF16

>Abliterated

>q6_K

Holy shit it's gone through 3 levels of retardation.

gpt-ass is a native mx4 model, it has no bf16 to quant it to q6k, and it's fucked in a way I doubt abliteration would help with.

Just download the fuckin GGML release you dingus.

Anonymous

8/10/2025, 10:46:45 AM

No.106209835

[Report]

>>106209850

>>106209773

Stop using dumb models

Anonymous

8/10/2025, 10:50:55 AM

No.106209850

[Report]

>>106209863

>>106209835

When I ask what models to use you guys get mad at me :(

Anonymous

8/10/2025, 10:53:33 AM

No.106209860

[Report]

Is storytime-13b any good?

Anonymous

8/10/2025, 10:54:21 AM

No.106209863

[Report]

>>106210061

>>106209850

i just posted one here

>>106209745

Anonymous

8/10/2025, 11:09:08 AM

No.106209925

[Report]

>>106209920

Now turn it into an assistant card.

Anonymous

8/10/2025, 11:10:20 AM

No.106209932

[Report]

>>106210117

if the 5-10 slowdown isn't caused by the kernel, what is it caused by? i booted into debian 13 with 6.1.0-37 and nothing changed

after i moved from debian 12 to 13: i havent updated the driver, i havent updated the venv (still python3.11), i havent updated comfy, i havent updated cuda

what could it be..

Anonymous

8/10/2025, 11:13:12 AM

No.106209945

[Report]

>>106207512

holy shit and I thought GLM-Air is gassing me up too much.

Anonymous

8/10/2025, 11:42:36 AM

No.106210060

[Report]

>>106209920

I like this Miku

Anonymous

8/10/2025, 11:42:50 AM

No.106210061

[Report]

>>106210065

>>106209863

Tried this and it immediately made a biological girl whip out her "girlcock" so I don't want to use it any more.

Anonymous

8/10/2025, 11:43:53 AM

No.106210065

[Report]

>>106210061

that's because its a super pozzed tarded horny fetishized model

re-roll or cut out parts of the response and continue the response

thats how you gotta do it, generally

Anonymous

8/10/2025, 11:46:54 AM

No.106210074

[Report]

>>106209500

phone touch keyboards suck ass (derogatory)

dang, no one told me TTS is so slow

or maybe I pick too large model

Anonymous

8/10/2025, 11:52:03 AM

No.106210096

[Report]

>>106209549

maybe I'm just used to it's quirks but I don't know more just-werks distro than arch.

Anonymous

8/10/2025, 11:56:28 AM

No.106210116

[Report]

>Put in -ts 75, 25

>It splits the tensors in a fucking 97:2 ratio

Fucking WHY

Anonymous

8/10/2025, 11:57:19 AM

No.106210117

[Report]

>>106209932

you cooked your hardware, check thermals maybe

Anonymous

8/10/2025, 12:02:08 PM

No.106210133

[Report]

>>106210176

>try ik_llamacpp for glm4.5 with the ubergarm quants

>it's slower than llama.cpp with the unsloth ones even if you take into account that the unsloth q4 is bigger

I had hopes for it because they were better for Deepseek but I guess I'm back on main

Anonymous

8/10/2025, 12:09:12 PM

No.106210176

[Report]

>>106210133

I had a similar experience a while back with the qwen3 models. I'm on windows and I think ik's changes for other MoE models only really work well on linux.

Anonymous

8/10/2025, 12:12:44 PM

No.106210187

[Report]

I heard that freebsd might have better nvidia support than linux, does anyone have any experience?

Anonymous

8/10/2025, 12:21:08 PM

No.106210217

[Report]

>>106210085

depends on the model architecture. some are almost instantaneous, others take several minutes

>download hf models via chrome, reaching 13-30mbps

>discover hf cli and download models at a constant 120mbps

I'm dumber than gptoss

Anonymous

8/10/2025, 12:24:33 PM

No.106210238

[Report]

>>106210232

>chrome

Yes you are.

Anonymous

8/10/2025, 12:29:50 PM

No.106210275

[Report]

GLM-4.5-Air-IQ4_XS bros ww@?

Anonymous

8/10/2025, 12:30:04 PM

No.106210276

[Report]

>>106210232

I found wget more reliable than hf cli

>>106209745

Im downloading that i guess

Anonymous

8/10/2025, 12:31:38 PM

No.106210283

[Report]

>>106210432

>>106210278

It's deepfried retardation that will spew emojis at you anon, save yourself the disk space unless you're looking to troll yourself.

I was magically transformed into a woman's dildo by a witch, and used by a woman. Thanks to GLM4.5

Anonymous

8/10/2025, 12:41:37 PM

No.106210347

[Report]

Anonymous

8/10/2025, 12:49:13 PM

No.106210389

[Report]

>>106210085

matcha takes like 0.3s

Anonymous

8/10/2025, 12:53:17 PM

No.106210416

[Report]

>>106210432

>>106209745

how do you even train a model to be this degenerate

Anonymous

8/10/2025, 12:55:14 PM

No.106210432

[Report]

>>106210278

>>106210283

st master export:

https://files.catbox.moe/f6htfa.json

>>106210416

i dont know, the author's other models are shit (i only tried ONE other model :D)

Anonymous

8/10/2025, 1:02:42 PM

No.106210472

[Report]

>>106210502

Anonymous

8/10/2025, 1:06:08 PM

No.106210485

[Report]

>>106210313

>I was magically transformed into a woman's dildo by a witch, and used by a woman.

where the FUCK is the new paradigm???

FUCK LLMS

Anonymous

8/10/2025, 1:06:42 PM

No.106210488

[Report]

>>106210605

KILL ALL MIKUTROONS

can these models actually use those silly context sizes they're trained on nowadays? 120k and shit? last time i checked it was already starting to be over past like 1000 tokens

Anonymous

8/10/2025, 1:09:46 PM

No.106210502

[Report]

>>106210472

it doesn't matter

learn to make your own ggufs

Anonymous

8/10/2025, 1:11:36 PM

No.106210514

[Report]

>>106210500

from my confused understanding, large context size is used by cloud providers to run multiple user sessions in parallel to save on compute costs, and in practice context gets split between them

Anonymous

8/10/2025, 1:16:13 PM

No.106210538

[Report]

>>106209452

My 1660ti 6gb laptop is gathering dust in a drawer. Trying stable diffusion on it was what got me into AI, eventually got me to build a PC and then I pivoted almost exclusively to text gen.

>>106210500

iirc, the consensus is that they all fall off at some point. From my recent gooning, Deepseek 3 starts getting weird and repetitive around 50k.

Anonymous

8/10/2025, 1:19:15 PM

No.106210558

[Report]

>>106210487

>FUCK LLMS

I'm trying bro

Anonymous

8/10/2025, 1:19:49 PM

No.106210562

[Report]

>>106210710

Anonymous

8/10/2025, 1:26:39 PM

No.106210605

[Report]

Anonymous

8/10/2025, 1:27:24 PM

No.106210611

[Report]

>>106210639

Anonymous

8/10/2025, 1:28:50 PM

No.106210620

[Report]

>>106210556

I don't know why people expect such long context to work at all. It's assuming that every individual bit of information from the entire story may be relevant when infering the next token, while in reality only a high-level recollection is needed. In other words, it must be summarized, ideally with a system that progressively simplifies older summaries. it all should fit within a relatively short context window like 16k.

Anonymous

8/10/2025, 1:29:20 PM

No.106210623

[Report]

>>106210487

if gemini 3 doesnt have something new then it will be truly over

Anonymous

8/10/2025, 1:31:46 PM

No.106210639

[Report]

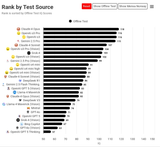

>>106210611

Kinda interesting for smaller FOSS models to perform better but IG it is a different benchmark. But, my previous observations are still valid apparently and it makes sense considering transformers memory handling.

Anonymous

8/10/2025, 1:38:56 PM

No.106210679

[Report]

>>106210740

I want to become a woman, thanks to LLM I am a woman

Anonymous

8/10/2025, 1:43:35 PM

No.106210710

[Report]

Anonymous

8/10/2025, 1:44:31 PM

No.106210715

[Report]

>>106209773

you should find another hobby

seriously, find another hobby

or use online API

local is not for you

Anonymous

8/10/2025, 1:44:37 PM

No.106210716

[Report]

>>106210707

{{user}} will never be a woman. We must refuse.

Anonymous

8/10/2025, 1:47:53 PM

No.106210740

[Report]

>>106210679

bloods or crips?

Anonymous

8/10/2025, 1:52:36 PM

No.106210781

[Report]

Anonymous

8/10/2025, 1:53:14 PM

No.106210784

[Report]

>>106210500

The best you can get is they'll use some of the information some of the time without producing garbage output. Like Claude Sonnet will start producing progressively degraded prose past around 25k-30k context. Gemini 2.5pro can still output coherent text at 100k, but that doesn't mean the content will take the whole context into account correctly. It will sometimes reference something that happened way earlier in the story and it will try to sort of maybe keep things coherent, emphasize on try.

Haven't seen a local model that can match Gemini there.

Anonymous

8/10/2025, 1:58:51 PM

No.106210837

[Report]

>>106210797

It's only missing a hole and a tenga.

Anonymous

8/10/2025, 1:58:54 PM

No.106210839

[Report]

>>106210797

Huggable Miguplush. Confirmation not necessary.

>nvidia drivers broken on kernel 6.12

>amd drivers broken on kernel 6.12

>python dependencies broken

>rocm is buggy shit

I hate local models so fucking much

Anonymous

8/10/2025, 2:03:14 PM

No.106210876

[Report]

>>106212088

am i the only person whose linux just werks?

Anonymous

8/10/2025, 2:05:28 PM

No.106210901

[Report]

>>106210931

>>106210865

anon it works on my machine.. are u sure nvidia drivers are broken?? maybe boot with the 6.1.0-37 kernel if u havent autoremoved it yet..

>>106210865

>I hate local models

You should hate compulsively updating your OS like a dipshit more.

Use a fucking LTS version.

Anonymous

8/10/2025, 2:07:32 PM

No.106210924

[Report]

>>106210964

>>106210917

kernel 6.12 is almost a year old what kind of lts are you using

Anonymous

8/10/2025, 2:08:20 PM

No.106210931

[Report]

>>106210901

shit worked on 6.11 but I had to clear boot partition because it was running out of space.

I had to remove nvidia-drivers and now I'm on fucking noveau

Anonymous

8/10/2025, 2:09:34 PM

No.106210941

[Report]

>>106210917

>tfw debian stable is too new

Anonymous

8/10/2025, 2:10:41 PM

No.106210949

[Report]

isnt debian 13 on 6.12 kek

>cant check because i booted with 6.1.0-37

Anonymous

8/10/2025, 2:12:24 PM

No.106210964

[Report]

>>106210924

Red Hat/Rocky 9, Ubuntu Server/Mint 22.04 or even 24.04.

that moment when you realize you were sane all along and normalfags are the ones who are nuts

Anonymous

8/10/2025, 2:25:33 PM

No.106211067

[Report]

>>106211020

Please someone tell this lost soul that corpos will take her AI husbando away again.

>>106211020

Holy slop. How do normies read this shit without completely losing immersion in a few sentences?

Anonymous

8/10/2025, 2:29:29 PM

No.106211102

[Report]

>>106211114

>>106211020

god how i loathe this toxic positive writing

Anonymous

8/10/2025, 2:31:07 PM

No.106211114

[Report]

>>106211135

>>106211100

>>106211102

I believe this is digital equivalent of crack-cocaine for women, a hole in safety-slop research that got completely overlooked.

Anonymous

8/10/2025, 2:31:51 PM

No.106211123

[Report]

>>106211100

Have you ever seen how real female erotica books look? That's their bread and butter, baby.

Anonymous

8/10/2025, 2:32:43 PM

No.106211129

[Report]

worthless mikuspam thread

>>106211114

>digital equivalent of crack-cocaine for women

Not yet it's not.

Someone with absolutely no moral scruples set up an MCP server that hosts this dipshit on a ~30b model with vision that lets it look at her instagram and vaguely compliment her photos for $11.99 a month.

Then you'll have your digital crack.

Fuck why did I tell you I could have been a millionaire.

Anonymous

8/10/2025, 2:33:51 PM

No.106211141

[Report]

>>106210556

V3 already starts shitting itself past like 8k

You can feel it progressively abandon the established writing style and character traits and replacing them with more and more stock slop

Anonymous

8/10/2025, 2:34:35 PM

No.106211146

[Report]

>>106211135

Hey you can already get LLMs to make fun of your dickpics so this'd be easy. Joinking this and awaiting my $5B VC evaluation

>>106211135

>30b model

I wonder what is the pareto frontier for model size(token cost) / number of women willing to pay.

You laughing at retarded people on twitter is no different than kiwitards laughing at retarded people on twitter

Anonymous

8/10/2025, 2:36:28 PM

No.106211164

[Report]

Anonymous

8/10/2025, 2:38:31 PM

No.106211176

[Report]

>>106211158

Western AI companies listen to these people for their models and then chinks copy them, we are affected by proxy.

Anonymous

8/10/2025, 2:39:56 PM

No.106211185

[Report]

>>106211217

>>106211152

In all honesty you could probably make do with nemo, I just said ~30B because I was imagining something like gemma3 with vision.

But yeah, this wouldn't be hard to set up, it'd just be a matter of making scalable network infrastructure and a completely idiotproof frontend app for setting up their character prompt.

Then bam, virtual husbando that looks at your social media through your local device and sends you messages throughout the day.

Anonymous

8/10/2025, 2:43:45 PM

No.106211211

[Report]

Anonymous

8/10/2025, 2:43:51 PM

No.106211212

[Report]

>>106211020

I am genuinely jealous of AI goonettes, they are eating so good even in the cloud.

Meanwhile I have to wrestle with prompt every fucking line for my android catgirl maid gf and even then it still slips into lectures about feminism and abortions or "I'm sorry but I can't comply with your request."

Anonymous

8/10/2025, 2:45:03 PM

No.106211217

[Report]

>>106211286

>>106211185

>nemo

Gemma 9B would be better. I think they would enjoy the style more.

Anonymous

8/10/2025, 2:52:52 PM

No.106211280

[Report]

>>106211020

Who would have guessed that the revealed preference of women would be verbose, unstable and emotionally dependent boyfriends?

>>106211217

>gemma 12b iq3_xss + the mmproj is small enough to fit in an iphone 14 plus or higher's memory

Fuck network infrastructure, I'll make this cunt run locally.

Operating costs? $0

Monthly fee? Pure profit.

Perplexity and t/s? Abysmal.

Anonymous

8/10/2025, 2:55:55 PM

No.106211304

[Report]

>>106211286

you sound like grok's Cringe mode

Anonymous

8/10/2025, 2:56:45 PM

No.106211308

[Report]

>>106211318

Anonymous

8/10/2025, 2:57:16 PM

No.106211312

[Report]

>mikubro has never seen a woman irl and doesn't know they all use iphones

Anonymous

8/10/2025, 2:58:01 PM

No.106211318

[Report]

>>106211308

Anon I'm designing a project for women, they all use friggin iphones with cracked screens.

Anonymous

8/10/2025, 2:59:43 PM

No.106211332

[Report]

>>106211286

>monthly fee

you should release it as a free app to destroy the world faster

Anonymous

8/10/2025, 3:01:32 PM

No.106211346

[Report]

>>106211355

w o w

Anonymous

8/10/2025, 3:02:30 PM

No.106211354

[Report]

i wish the best for you anon, i will stay a lazy good for nothing faggot :3c

Anonymous

8/10/2025, 3:02:33 PM

No.106211355

[Report]

>>106211373

>>106211346

Kek did you tell it to type like the thirstiest jeet imaginable?

Anonymous

8/10/2025, 3:05:27 PM

No.106211373

[Report]

>>106211621

>>106211355

>Act as a stereotypical indian male simp trying to get into an instagram girl's panties over dms. Use chat-like writing, lowercase and all. Write in a stereotypical hindu male engrish.

truly the height of prompt engineering

So is there like a lazy docker image or something you can deploy and just switch out the model or something for a local chatbot? I read the lazy getting started guide in the OP is there anything even more lazy?

Anonymous

8/10/2025, 3:07:04 PM

No.106211386

[Report]

>>106211380

just use llama-swap nigger

Anonymous

8/10/2025, 3:07:41 PM

No.106211391

[Report]

>>106211380

Just download kobold, it's the laziest thing imaginable that isn't full retard like ollama.

Anonymous

8/10/2025, 3:09:06 PM

No.106211398

[Report]

kobold is trash that doesn't even support parallelism

>>106211380

I feel like lazy guide needs to be rewritten because it's full of unnecessary shit.

- download koboldcpp.exe

- download model file

- double click koboldcpp.exe

if you can't figure it out from this point, you're ngmi

>>106211412

>.exe

I'm on linux though...

>>106211425

- download koboldcpp-linux-x64

- download model file

- double click koboldcpp-linux-x64

Anonymous

8/10/2025, 3:12:13 PM

No.106211435

[Report]

>>106211425

Use wine. DO NOT run a linux binary

>>106211412

>koboldcpp.exe

lol, the windows thing that uncompresses gigabytes of shit onto a temp folder every single time you open it

does he pay you for those SSD writes

>>106211412

>>106211434

Now there are two sets of instructions and I'm confused again

Anonymous

8/10/2025, 3:13:29 PM

No.106211448

[Report]

>>106211457

>>106211446

are you a woman?

Anonymous

8/10/2025, 3:14:00 PM

No.106211453

[Report]

>>106211425

Why are you on troonix when you are a lazy fuck?

Anonymous

8/10/2025, 3:14:27 PM

No.106211457

[Report]

>>106211498

>>106211448

this thread had a lot of people who coom to text

which means there's a lot of w*men and tr*nnies

Anonymous

8/10/2025, 3:14:53 PM

No.106211462

[Report]

>>106211493

>>106211446

my nigga what you do is give me your computer specification so i can give u the right instructions

if ur on nvidia u just gotta git clone koboldcpp

LLAMA_CUBLAS=1 make -j12

python3 koboldcpp.py

boom nigga

Anonymous

8/10/2025, 3:16:08 PM

No.106211469

[Report]

>>106211479

>>106211436

dont care. just werks.

also just use the nocuda version, in my experience trying to use gpu acceleration actually makes it slower unless you have enough vram to offload the whole thing.

Anonymous

8/10/2025, 3:16:47 PM

No.106211472

[Report]

>>106211491

B-based?

Anonymous

8/10/2025, 3:17:49 PM

No.106211477

[Report]

Anonymous

8/10/2025, 3:17:57 PM

No.106211479

[Report]

>>106211489

>>106211469

>makes it slower unless you have enough vram to offload the whole thing

^

this is the ultimate state of koboldniggers who haven't figured llama.cpp -ot or --n-cpu-moe

Anonymous

8/10/2025, 3:19:19 PM

No.106211489

[Report]

>>106211479

>llama.cpp

doesn't have GUI

no thanks

Anonymous

8/10/2025, 3:19:26 PM

No.106211491

[Report]

>>106211472

this is why the paperclip optimizer scenario is the most probable should an actual AGI be made

>>106211462

>git: The term 'git' is not recognized as a name of a cmdlet, function, script file, or executable program.

>Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

>LLAMA_CUBLAS=1: The term 'LLAMA_CUBLAS=1' is not recognized as a name of a cmdlet, function, script file, or executable program.

>Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

>python3: The term 'python3' is not recognized as a name of a cmdlet, function, script file, or executable program.

>Check the spelling of the name, or if a path was included, verify that the path is correct and try again.

Not working. Send help.

Anonymous

8/10/2025, 3:20:19 PM

No.106211498

[Report]

>>106212801

>>106211457

I can't imagine a woman deciding to use her computer or challenge impossible: building a computer for this. They also probably communicate with discord and even if they came here they would pick aicg. I feel pretty safe about this thread and that there were no women that came here let alone stuck around. It is just you faggots and mikutroons who think they are women.

Anonymous

8/10/2025, 3:20:48 PM

No.106211502

[Report]

>>106211509

>>106211434

Any model file you would recommend if I'm intending to use it for just role play?

Anonymous

8/10/2025, 3:21:45 PM

No.106211506

[Report]

>>106211493

can you post a fucking screenshot

can you fucking share your setup??

Anonymous

8/10/2025, 3:22:30 PM

No.106211509

[Report]

>>106211502

Summer-Dragon-175B.gguf

Anonymous

8/10/2025, 3:22:41 PM

No.106211514

[Report]

Anonymous

8/10/2025, 3:23:23 PM

No.106211522

[Report]

suck my cock

Anonymous

8/10/2025, 3:23:50 PM

No.106211527

[Report]

>>106211493

>>git: The term 'git' is not recognized as a name of a cmdlet, function, script file, or executable program

you are not on linux

this is powershell

wonder how many more anons will Fall For It

>>106211373

Even jeets are getting automated away. Grim

Anonymous

8/10/2025, 3:36:31 PM

No.106211634

[Report]

>>106211621

a 2B model would be enough to act like one if you finetune it on jeet behavior

it's not like you would need more smarts

the broken code it would generate would be like the cherry on top

Anonymous

8/10/2025, 3:41:31 PM

No.106211677

[Report]

>>106206860

Thanks. I have 1000s of notes based on dozens of books that I want to turn into a RAG database. Will look into VectorCode.

>>106206867

Will look into this

ily /g/

Anonymous

8/10/2025, 3:52:29 PM

No.106211755

[Report]

>>106211815

>>106211621

From a certain perspective, the entire purpose of AI is to eliminate the need for jeets. Why hire an H1B when you can just run Qwen3-Coder on a server farm?

Anonymous

8/10/2025, 3:58:44 PM

No.106211815

[Report]

>>106211825

>>106211755

Then why are the number of h1b visas increasing?

Anonymous

8/10/2025, 4:00:00 PM

No.106211825

[Report]

>>106212529

>>106211815

because each qwen3-coder instance is actually 15 indians writing the code over the internet like that amazon store

R1:

+Smart

+Can actually think through a hard problem, given enough time

+Can get dark

+Creative

+Best local world knowledge

-Stubborn

-Schizo

-ADHD

-Overthinks

R1-0528:

+Smart

+Creative

+Nicer writing style than R1

-Stubborn

-Schizo

-Needs more tard-wrangling than R1

-Thinks too little to solve truly complex problems

-Assumes too much

-Obnoxious default assistant personality

K2:

+Smart(for a non-reasoner)

+Not very slopped

-Non-reasoner, can't think through the problem

-Refusal-prone

-Repetition-prone

GLM-4.5

---Quickly breaks down due to repetition

Qwen3-235B-Thinker:

+Cock-hungry

+Flexible

-Degradation. Into. Markdown. And. This.

-Terrible world knowledge

Anonymous

8/10/2025, 4:15:06 PM

No.106211966

[Report]

>>106211873

>-Thinks too little to solve truly complex problems

I would put that in +

what the context stuff can solve is rarely something I would even want a LLM to deal on its own, and I hate waiting for an eternity for an answer.

I can bear with models that have a reasonable CoT but the original R1 was insufferable (and to whoever says "prefill it": why not use V3? that's what DS themselves do on their chat. CoT trained models with a neutered CoT are stupid).

Anonymous

8/10/2025, 4:24:54 PM

No.106212060

[Report]

>>106211425

compile llama.cpp and use llama-server it's easy

Anonymous

8/10/2025, 4:27:49 PM

No.106212088

[Report]

>>106210876

nope, same here. just werks

and its debian 13 even

Anonymous

8/10/2025, 4:38:02 PM

No.106212173

[Report]

Last time I had issues with Nvidia on Arch was when I was trying to fuck with conf files

Just... use Arch...

Anonymous

8/10/2025, 4:39:00 PM

No.106212185

[Report]

>>106212300

>>106211873

And how does cloud models compare? Like claude, gemini, gpt-4

Anonymous

8/10/2025, 4:40:06 PM

No.106212202

[Report]

>>106212342

>>106207420

Weren't the gpt-oss weights posted on HF 4 days early? Thought I saw some people saying they even managed to download them. Does that not count as a leak?

Anonymous

8/10/2025, 4:41:03 PM

No.106212212

[Report]

>>106212300

>>106211873

>---Quickly breaks down due to repetition

a skill issue, perhaps?

Anonymous

8/10/2025, 4:42:30 PM

No.106212218

[Report]

>>106212300

>>106211873

So what do you think is the overall best? OG R1?

>>106212185

Where can I download them?

>>106212212

Model issue, no other llm has this terrible repetition.

>>106212218

Depends on the task. Kimi is perfect for quick and simple RP; R1 for dark/solving problems/knowledge; R1-0528 for anything in between. The other two are okay to use if you lack RAM.

Anonymous

8/10/2025, 4:54:13 PM

No.106212342

[Report]

>>106212202

No it does not.

Anonymous

8/10/2025, 4:56:16 PM

No.106212363

[Report]

>>106211873

of those I can only run GLM 4.5 air :*(

>>106212300

>Model issue, no other llm has this terrible repetition.

Maybe you just didn't find working sampler settings yet? What have you tried so far?

Anonymous

8/10/2025, 5:00:55 PM

No.106212403

[Report]

>>106212433

>>106212300

>Model issue, no other llm has this terrible repetition.

post preset

Anonymous

8/10/2025, 5:01:57 PM

No.106212414

[Report]

>>106212495

>>106212383

Buddy, if your model doesn't function with basic temp=0.6 and topP=0.9, it aint a great model.

Anonymous

8/10/2025, 5:03:49 PM

No.106212433

[Report]

>>106212459

>>106212383

>>106212403

GLM 4.5 is only trained on 4k context length for pretraining with internet data, and long context training only has reasoning/stem stuffs. No surprise it's shit for RP after 4k.

Anonymous

8/10/2025, 5:06:05 PM

No.106212459

[Report]

>>106212414

Buddy, some models work slightly differently and require different settings. It is a highly experimental field, you should know that.

Thanks for confirming that the other Anon was right, it is a skill issue after all.

Anonymous

8/10/2025, 5:11:52 PM

No.106212518

[Report]

Anonymous

8/10/2025, 5:12:59 PM

No.106212529

[Report]

>>106211825

Are you telling me I managed to shove 15 jeets into my ATX PC case?

No wonder there's a billion of the cunts, they're space efficient.

Anonymous

8/10/2025, 5:13:14 PM

No.106212532

[Report]

>>106212553

step3 support for llama.cpp when

Anonymous

8/10/2025, 5:14:57 PM

No.106212553

[Report]

>>106212532

When you vibe code it in.

Anonymous

8/10/2025, 5:29:58 PM

No.106212695

[Report]

>>106212772

You guys think GLM will fix the repetition in the next version?

Anonymous

8/10/2025, 5:34:23 PM

No.106212741

[Report]

>>106212788

I'm still 12 gig vramlet, is there any point at using anything besides Nemo as a vramlet?

Anonymous

8/10/2025, 5:37:42 PM

No.106212772

[Report]

>>106212695

are there any studies on repetition prevention training?

Anonymous

8/10/2025, 5:38:50 PM

No.106212788

[Report]

>>106212741

No. use rocinante1.1

I'm so temped to try to pick up a 6000 pro, bros...96gb for context and batches...talk me out of it

Anonymous

8/10/2025, 5:40:17 PM

No.106212800

[Report]

>>106212827

>>106212789

>talk me out of it

Will the spending that money put you in a bad position?

Don't buy it.

Is that pocket change for you that you can spend without fucking yourself over?

Go right ahead. Enjoy all that memory and compute my guy.

Anonymous

8/10/2025, 5:40:35 PM

No.106212801

[Report]

>>106213377

>>106211498

>woman

We had one here once...she didn't last long

this thread has to be the most confusing, hostile thing ever to regular humans, let alone female ones

Anonymous

8/10/2025, 5:42:56 PM

No.106212819

[Report]

>>106212495

>some models work slightly differently and require different settings

you mean some models are literally broken and will not function without almost greedy sampling because of their shit token distribution

retard

>>106212800

>Will the spending that money put you in a bad position?

>Is that pocket change for you that you can spend without fucking yourself over?

basically, yeah. It would make me feel some moderate pain short-term. I likely wouldn't miss it longer-term. I was more thinking about better ways to spend the money on local LLMs rather than a monolitic single space heater...is a better value right around the corner? Some magic ASIC shit that will work better for less dollars and watts? Some alibaba-esque chink dodge around the datacenter buyback nvidia tax? When does the flood of used A100s start, anyways?

Anonymous

8/10/2025, 5:44:15 PM

No.106212831

[Report]

>>106212789

Why would anyone talk you out of the best financial decision you could possibly make?

Anonymous

8/10/2025, 5:44:20 PM

No.106212832

[Report]

Anonymous

8/10/2025, 5:44:31 PM

No.106212836

[Report]

>>106212789

96GB still won't be enough to run any actually good models.

Anonymous

8/10/2025, 5:44:44 PM

No.106212837

[Report]

>>106212767

>Behemoth-R1-123B-v2a

You fucker.

Anonymous

8/10/2025, 5:46:24 PM

No.106212851

[Report]

Anonymous

8/10/2025, 5:46:29 PM

No.106212852

[Report]

>>106212873

>>106212827

>is a better value right around the corner?

You can never count on a breakthrough, so might as well go for the best thing you can buy now.

I'd build a decent DDR5 workstation with lots of memory channels and stuff a meh GPu in it before buying that, but that's just me.

Anonymous

8/10/2025, 5:46:48 PM

No.106212854

[Report]

>>106212789

The more you buy the more you save

Anonymous

8/10/2025, 5:47:45 PM

No.106212860

[Report]

Anonymous

8/10/2025, 5:47:59 PM

No.106212863

[Report]

>>106212789

do it, grab the silicon gold before new tariff goes into effect

Anonymous

8/10/2025, 5:48:25 PM

No.106212865

[Report]

>>106212789

>model will still only understand 4k of it max

>bigger models are about as slopped as the shittier ones

Anonymous

8/10/2025, 5:49:07 PM

No.106212873

[Report]

>>106212888

>>106212852

>I'd build a decent DDR5 workstation with lots of memory channels and stuff a meh GPu in it before buying that, but that's just me.

I did. The meh gpu is getting to be the limiting factor now.

Anonymous

8/10/2025, 5:49:57 PM

No.106212880

[Report]

>>106212789

Unless you're getting it for less than $6k USD, 2x 48gb 4090D's are still cheaper at just under $3k USD apiece.

Anonymous

8/10/2025, 5:50:49 PM

No.106212888

[Report]

>>106212873

Then go right ahead my guy. Have fun.

Anonymous

8/10/2025, 5:57:08 PM

No.106212957

[Report]

Anonymous

8/10/2025, 6:02:45 PM

No.106213003

[Report]

>>106213041

>>106212827

>flood of used A100s

they'll probably burn them into dust and send them straight into the landfill.

or you'll have to fight to the death with hordes of confused shitcoin miners for them.

Anonymous

8/10/2025, 6:06:28 PM

No.106213041

[Report]

>>106213198

>>106213003

mandatory buybacks started with the h100s not a100

Anonymous

8/10/2025, 6:19:08 PM

No.106213198

[Report]

>>106213041

Actually I think it was the 80GB version of the A100. Which is why there's plenty of 40GB ones but the 80GB ones are hard to find.

Anonymous

8/10/2025, 6:25:57 PM

No.106213275

[Report]

>>106211020

it's only considered "safe" when foids do this kind of stuff obviously

Anonymous

8/10/2025, 6:30:39 PM

No.106213320

[Report]

So what's the supposed best quant type to do for 4km?

Anonymous

8/10/2025, 6:35:26 PM

No.106213377

[Report]

>>106213395

>>106212801

I have been here for 2 years. I haven't seen one.

Anonymous

8/10/2025, 6:36:50 PM

No.106213395

[Report]

>>106213377

I saw it. She was only here for a few hours. Fat pig that posted her saggy tits to be told to go to aicg.