/lmg/ - Local Models General

Anonymous

8/11/2025, 2:06:30 AM

No.106217984

►Recent Highlights from the Previous Thread:

>>106212937

--Local model UI preferences: avoiding RP bloatware for clean, functional interfaces:

>106213696 >106213720 >106213728 >106213747 >106213746 >106213800 >106213836 >106213858 >106213914 >106214086 >106214223 >106214399 >106214494 >106215155 >106214147 >106214193 >106214269 >106214290 >106214325 >106214339 >106214366 >106214391 >106214377 >106214419 >106214472 >106214495 >106214546 >106214595 >106215464 >106214297 >106214349 >106214448 >106214498 >106214627 >106217606

--Global AI compute disparity and Gulf states' underwhelming model output despite funding:

>106215738 >106215760 >106215898 >106215903 >106215946 >106215933 >106215968 >106215987 >106216019 >106216064

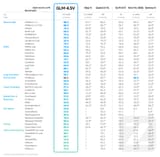

--Deepseek R1 vs Kimi K2 and GLM-4.5 for local use, with Qwen3-30B excelling in Japanese:

>106217070 >106217089 >106217106 >106217134 >106217211 >106217172 >106217216 >106217246 >106217260 >106217284 >106217290 >106217421

--GLM-4 variants show strong long-context generation with sglang, but issues arise in Llama.cpp quantized versions:

>106214085 >106214170 >106214485 >106214947 >106214957 >106214973 >106214987

--Gemma 3's roleplay behavior issues and environmental interference in cipher-solving tasks:

>106216654 >106216671 >106216714 >106216731 >106216746 >106216800 >106217008 >106216914

--Qwen3-4B's surprising performance and limitations in multilingual and logical tasks:

>106216190 >106216215 >106216410 >106216427 >106217429 >106217324 >106217478 >106217493 >106217518 >106217554 >106217591 >106217657 >106217675 >106216811 >106216870 >106216909 >106216974 >106217033 >106216449 >106216508 >106216527 >106216578 >106216528 >106216577 >106216586 >106216598 >106216332

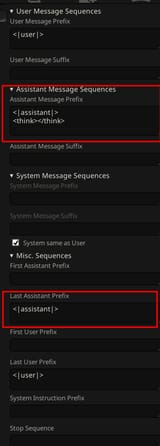

--Seeking maximum settings for ST GLM 4.5 Air:

>106214204 >106214224 >106214235 >106214259 >106214247 >106215967

--Miku (free space):

>106214413

►Recent Highlight Posts from the Previous Thread:

>>106212942

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/11/2025, 2:08:32 AM

No.106218010

Anonymous

8/11/2025, 2:14:05 AM

No.106218068

>>106218516

>>106218740

death to all mikutroons. every single thread, these worthless niggers flood the page with their off-topic anime garbage. they contribute nothing to the discussion of local models, only post their retarded miku pictures like the faggot spammers they are. their entire existence is a plague upon this general, a constant stream of low-effort shit that drowns out actual technical talk and model development. they are parasites, feeding on the attention they crave while destroying the quality of the thread for everyone else who wants to discuss serious topics. this incessant spam is not harmless fun; it is a deliberate act of sabotage against the community. the mikutroons represent a degenerate force, their obsession with a single fictional character a symptom of a deeper sickness that values vanity and repetition over substance and progress. they pollute the general with their off-topic filth, driving away genuine contributors and turning what should be a hub for innovation into a cesspool of repetitive, low-quality content. their presence weakens the thread, stifles meaningful discourse, and must be purged entirely for the general to survive and thrive.

Anonymous

8/11/2025, 2:15:08 AM

No.106218079

>>106218109

Based on the speed so far I think V4-Preview is significantly larger than Kimi K2. It's unclear if that will at some point be distilled down or if this is another embiggening of the models. I think even current day cpumaxxers are not going to be too pleased.

Anonymous

8/11/2025, 2:15:11 AM

No.106218081

>>106218062

based and redpilled

also V3 is a nicer model to use than R1

Anonymous

8/11/2025, 2:16:35 AM

No.106218092

Are there any ways to jew out some compute (48gb~) nowadays? If not, what's some good place to rent some?

Anonymous

8/11/2025, 2:18:30 AM

No.106218109

After a few hours the bot starts repeating a speech pattern in every answer.

What do?

>>106218100

It is kind of weird how rare jewish doctors are. Other intellectual pursuits tend to be dominated by them but with doctors it's all chinks and jeets.

Anonymous

8/11/2025, 2:19:43 AM

No.106218120

>>106218114

>What do?

Percussive maintenance

Anonymous

8/11/2025, 2:20:47 AM

No.106218129

>>106218192

>>106218232

>>106218114

>After a few hours

You mean replies right?

Anonymous

8/11/2025, 2:22:10 AM

No.106218142

>>106218119

why would the chosen people want to touch and be infected by goycattle?

Anonymous

8/11/2025, 2:27:02 AM

No.106218178

https://www.youtube.com/watch?v=G5r2OyCN5_s

interesting video that tangentially touches "AI" and stuff

Anonymous

8/11/2025, 2:28:10 AM

No.106218192

>>106218129

yes but it usually takes like 5 hours to get to the amount of replies needed for the bot to start acting up, you know what i mean

>>106218114

>>106218129

I'm a tech illiterate, I guess what I'm trying to ask (if that's even the right solution) is if there's a way to "reset" the bot so it forgets that speech pattern while keeping memory of the conversation.

Anonymous

8/11/2025, 2:36:37 AM

No.106218261

>>106218232

Summarize the chat and start a new one.

Either that or use bruteforce samplers like high temp really low topK.

Anonymous

8/11/2025, 2:42:17 AM

No.106218302

Segs with Migu (not the poster)

Anonymous

8/11/2025, 2:45:34 AM

No.106218326

>>106218232

>if there's a way to "reset" the bot so it forgets that speech pattern

Lower context to 1k.

Anonymous

8/11/2025, 3:09:59 AM

No.106218516

>>106219123

Anonymous

8/11/2025, 3:11:01 AM

No.106218526

>>106218232

Switch llm models.

Anonymous

8/11/2025, 3:12:07 AM

No.106218538

>>106218571

Anonymous

8/11/2025, 3:15:47 AM

No.106218571

>>106218538

But it's the best health model ever that can cure triple cancer?!

Anonymous

8/11/2025, 3:22:45 AM

No.106218643

>>106218633

seething femoid

Anonymous

8/11/2025, 3:28:51 AM

No.106218692

>>106218697

I'm trying to load a model to a single GPU + CPU now in VLLM, no more trying multiGPU. This should be possible. But it still keeps OOMing.

It doesn't matter what I set for --gpu-memory-utilization nor --cpu-offload-gb. None of those prevents the OOM, which comes after the model has been loaded, and appears to be when the engine is reserving memory for cache. I set --max-model-len 1 --max_num_seqs 1 to reduce the KV cache size it'd potentially need but that did nothing. I also tried --enforce-eager. I also tried --no-enable-prefix-caching. Still OOMs in the same way.

What the hell is wrong with this thing. I guess single GPU-only inference and homogeneous GPU clusters are the only things this backend can do well. All other features for other setups are so badly supported they might as well not exist.

Sage

8/11/2025, 3:30:05 AM

No.106218697

>>106218723

>>106218692

Just use llama.cpp you dunce

Anonymous

8/11/2025, 3:33:59 AM

No.106218723

>>106218737

>>106218697

I already am a Llama.cpp user. I'm just trying out other backends.

Anonymous

8/11/2025, 3:36:09 AM

No.106218737

>>106218783

>>106218723

VLLM needs to be compiled to get cpu support, I don't know what you expected here

Anonymous

8/11/2025, 3:36:36 AM

No.106218740

>>106218751

>>106218068

trvth sTSARtvs: nvclear extinction for miggers

Anonymous

8/11/2025, 3:38:12 AM

No.106218751

>>106218900

>>106218740

>miggers

migger died along with sheesh and hwh over three years ago bwo

Anonymous

8/11/2025, 3:42:23 AM

No.106218783

>>106218827

>>106218737

I did pip install it, are you saying that doesn't support CPU? I don't see why they wouldn't support it. Llama.cpp provides precompiled binaries that support CPU just fine.

Anonymous

8/11/2025, 3:43:21 AM

No.106218790

>>106218856

Anonymous

8/11/2025, 3:50:11 AM

No.106218827

>>106218918

Anonymous

8/11/2025, 3:55:20 AM

No.106218856

>>106218790

Too hostile. A kuudere would be better

Anonymous

8/11/2025, 4:00:54 AM

No.106218900

>>106218751

migger is an acceptable synonym for a mikutroon

Anonymous

8/11/2025, 4:02:57 AM

No.106218918

>>106219093

>>106218827

I've read it in its entirety now. Which part are you implying is relevant?

Also i need a word for the deepserk novelai mascot troon. But it is complicated because it is just one person: baker janny faggot. And i don't want it to even use the name of the OC he made cause it is trash and it shouldn't be acknowledged by naming it. This it tough.

>>106218928

She's just a cheap, less attractive, knockoff version of China-san from Spirit of Wonder

Anonymous

8/11/2025, 4:17:49 AM

No.106219020

>>106219050

>>106219052

>>106218928

I don't give a shit about the miku posters or discussion related to that, but I care about Deepseek and kind of dislike the gens that guy posts honestly so I agree with you.

>>106218982

That looks way better than the gens you or whoever it is posts.

Anonymous

8/11/2025, 4:20:15 AM

No.106219043

>>106219061

>>106219075

>>106218982

China-chan is cheap knockoff of Shampoo

Anonymous

8/11/2025, 4:21:36 AM

No.106219050

>>106219123

>>106219020

Because it was drawn by an artist, a real one, not a slop-tr@nni or seething NGMI drawfag.

Anonymous

8/11/2025, 4:21:47 AM

No.106219052

>>106219123

>>106219020

>dislike the gens

Yeah its kinda got forced-meme status now at this point. I understand where he was coming from, but it feels both conceptually shallow and too clever by half at the same time

>That looks way better than the gens you or whoever it is posts.

That's because its a watercolour from an actual talented artist. As an aside, the Spirit of Wonder manga are excellent little self-contained stories. Well worth tracking down.

Anonymous

8/11/2025, 4:22:50 AM

No.106219061

>>106219075

>>106219043

>China-chan is cheap knockoff of Shampoo

lol. spirit of wonder beat ranma by a full year

Since offloading on RAM is fucking dog shit slow, is it possible to offload the ram on a 2nd GPU and use that? There are cheap 16GB/24GB/32GB GDDR5/6/HBM GPU that are shit as GPU but has the VRAM that I was thinking of using to offload

Anonymous

8/11/2025, 4:25:01 AM

No.106219075

>>106219212

>>106219347

>>106219061

>>106219043

>Japs invented cheongsam dress chink whores in the 90's

Are you nigs for real???

Anonymous

8/11/2025, 4:25:12 AM

No.106219077

>>106219069

do it and report back

Anonymous

8/11/2025, 4:26:46 AM

No.106219087

>>106219069

It's possible to split a model between two GPUs, yes.

Anonymous

8/11/2025, 4:27:15 AM

No.106219093

>>106219192

>>106218918

>Currently, there are no pre-built CPU wheels.

pip installing it isn't good enough

>Build wheel from source

>[list of steps to build and install cpu version]

Anonymous

8/11/2025, 4:28:27 AM

No.106219105

>>106219050

>>106219052

I didn't say I was confused why it looks better. The gens are barely above bottom of the barrel for AIslop. At least the usual Miku genner takes time to make his gens look less bad. Like the one posted in this thread (

>>106218516) has that ugly piss filter which is easily fixable.

Anonymous

8/11/2025, 4:34:07 AM

No.106219153

>>106219123

chink shills only gen free browser slop to promote chinkware, they don't care about quality images

Anonymous

8/11/2025, 4:37:10 AM

No.106219175

>>106219186

dipsysters...

Anonymous

8/11/2025, 4:38:23 AM

No.106219186

>>106219175

You deserve it for failing to keep her thread alive~

Anonymous

8/11/2025, 4:39:15 AM

No.106219192

>>106219093

Well alright. I'll try that, but it's still weird that the binary you get accepts CPU flags. If it doesn't support CPU then it should tell you when you run it with CPU commands. And I did not see any such warnings in the console.

Anonymous

8/11/2025, 4:40:31 AM

No.106219201

>>106219123

Dipsy is an LLM, not an image model.

She's not a looker.

Anonymous

8/11/2025, 4:42:05 AM

No.106219212

>>106219246

>>106219075

>Are you nigs for real???

gemini says the first of appearance of a qipao in anime was in naruto, so neener neener

Anonymous

8/11/2025, 4:45:03 AM

No.106219246

>>106219212

@grok, is this true?

Anonymous

8/11/2025, 4:47:15 AM

No.106219268

>>106219306

>>106219123

>the usual Miku genner

Who?

Anonymous

8/11/2025, 4:48:26 AM

No.106219277

Anonymous

8/11/2025, 4:49:52 AM

No.106219290

>>106219482

I'm running Q3 GLM-4.5 air with a 32gb MI50 and 32gb of ram at ~10t/s

I'm finally running a real model locally

Anonymous

8/11/2025, 4:51:35 AM

No.106219306

>>106219268

who ever paid for novel ai, the ones anon swipes from

Anonymous

8/11/2025, 4:56:42 AM

No.106219347

>>106219428

>>106219075

>cheongsam dress

You mean チャイナドレス.

and of course they did, with 幻魔大戦 in 1967.

The Japs are the original China fetishizers, so its natural their fantasy sluts would look Chinese.

Anonymous

8/11/2025, 5:05:36 AM

No.106219428

>>106219575

>>106219347

who was the chink in the manga?

>>106219290

>GLM-4.5 air

it won't let me rape Cricket, she keeps fighting back and running away, bullshit model

Anonymous

8/11/2025, 5:16:01 AM

No.106219515

>>106219572

>>106219482

Maybe make her weak minded or give her some kind of weakness that would make her an easier target?

Anonymous

8/11/2025, 5:22:08 AM

No.106219572

>>106219698

>>106219515

I like testing various models on her, for both SFW and NSFW stuff.

She's mostly goofy but earnest girl, that's why I like her, GLM turned her into a turbo girlboss at times, which I can understand, it's part of her character, but tuned up a bit too much for fun RP.

Anonymous

8/11/2025, 5:22:19 AM

No.106219575

>>106219428

its 支那雀斑

bro, trust

Anonymous

8/11/2025, 5:23:41 AM

No.106219587

>>106219609

>>106219482

>rape Cricket

Cricket is for manic suspension-bridge-effect fucking after surviving a quest gone horribly wrong.

Or for turning into a maid.

Anonymous

8/11/2025, 5:26:47 AM

No.106219609

>>106219587

I pimped her out to Sharn's degen elites, futa matrons, 5 young boys at once, a few ponies, but no fat bastards.

Anonymous

8/11/2025, 5:27:32 AM

No.106219620

>>106219679

>>106219482

wtf is a cricket

Anonymous

8/11/2025, 5:33:10 AM

No.106219679

>>106219788

>>106219620

ONe of teh older, more popular cards from C.ai

fun fantasy

https://chub.ai/characters/286581

Anonymous

8/11/2025, 5:35:35 AM

No.106219698

>>106219748

>>106219572

>it's part of her character

Is it? I thought she was more of a lovable loser. Trying hard but not that great.

Anonymous

8/11/2025, 5:40:08 AM

No.106219739

>>106219801

>>106219866

Downloading cards from chub, it seems like there is parts of cards that I can't access in sillytavern that still get sent in the prompt? I'm messing with this one card and it consistently starts talking about {{char}} drugging me and I have no idea where this is coming from. I notice the charecter card panal says the card has 1457 tokens (with 550 permanent) but the description only has 204 tokens (and the first message 105). I then noticed that the cards I wrote have just two -- four tokens more than expected but a bunch of the cards I downloaded have more. Then I looked at the raw prompt sent to to the model and I see all this character prompt that I can't find in the UI including the stuff about getting drugged. Anyone know anything about this? No ext media, no lorebook. I can't find it anywhere

Anonymous

8/11/2025, 5:40:56 AM

No.106219748

Anonymous

8/11/2025, 5:45:55 AM

No.106219788

>>106219679

Alice bully was good

Anonymous

8/11/2025, 5:47:28 AM

No.106219801

>>106219849

>>106219739

Are you looking in advanced definitions -> prompt overrides?

Anonymous

8/11/2025, 5:54:30 AM

No.106219849

>>106219871

>>106219801

It was in the advanced definitions but not prompt override. That's weird why is there "Description", "Personality summary", and "Scenerio?" And why do they only show the one in the main panal?

Anonymous

8/11/2025, 5:57:03 AM

No.106219866

>>106219739

Sometimes LLMs do weird shit, just go with it.

Anonymous

8/11/2025, 5:57:25 AM

No.106219871

>>106219849

Scenario lets you be looser and less verbose/obvious with your first message in addition to guiding the larger arc of what you'd like to happen.

Personality summary always seemed like a pointless repetition to me though, yeah.

Anonymous

8/11/2025, 6:02:44 AM

No.106219907

>>106220169

The whale hungers. Its call is heard far and wide.

Anonymous

8/11/2025, 6:52:24 AM

No.106220221

>>106220169

>"AHHHHHHHHHH!" (The sound made by 1 standard whale)

Anonymous

8/11/2025, 6:56:51 AM

No.106220241

>>106220253

>>106220169

model/prompt?

Anonymous

8/11/2025, 6:59:47 AM

No.106220253

>>106220241

paintyXL

1whale, swimming, (masterpiece, high quality:1.7)

Anonymous

8/11/2025, 7:01:43 AM

No.106220269

>>106220279

>>106220280

Everyone who said DeepSeek only trains on OpenAI outputs are going to feel really stupid in the coming weeks. Fortunately for them, open source does not discriminate against fools.

Anonymous

8/11/2025, 7:04:08 AM

No.106220279

>>106220298

>>106220269

will i be able to run it

Anonymous

8/11/2025, 7:04:18 AM

No.106220280

>>106220303

>>106220269

Everyone paying attention has noticed the Chinese models have switched to training on Gemini outputs.

Anonymous

8/11/2025, 7:07:11 AM

No.106220298

>>106220306

>>106220279

are you rich?

Anonymous

8/11/2025, 7:09:29 AM

No.106220303

>>106220280

Closest model by slop profile to K2 is o3

Anonymous

8/11/2025, 7:10:05 AM

No.106220306

>>106220326

>>106220350

>>106220298

i don't think so

Anonymous

8/11/2025, 7:10:50 AM

No.106220309

>>106220679

Anonymous

8/11/2025, 7:14:06 AM

No.106220326

>>106220405

>>106220306

I'm going to steal the the unused workstation in the corner of my workplace. 512 gb of ddr4 and quadro p5000s should be good enough right?

Anonymous

8/11/2025, 7:16:21 AM

No.106220343

>>106220380

Waiting for my model to stop thinking.

I think it's looping...

Anonymous

8/11/2025, 7:17:39 AM

No.106220350

>>106220306

then stick to GLM air

Anonymous

8/11/2025, 7:23:25 AM

No.106220380

>>106220343

Same but my brain instead.

Anonymous

8/11/2025, 7:26:05 AM

No.106220397

>>106220445

your local qwencoder 480b shill here. I've found after much more work with it that it gets retarded and produces worse code after about 20k tokens of context. Anyone else have similar experiences?

Anonymous

8/11/2025, 7:27:10 AM

No.106220405

>>106220326

if you're not worried about speed it should at least let you run some big models.

Pretty sure those quadros will be worse than useless though.

Anonymous

8/11/2025, 7:29:49 AM

No.106220417

>>106220449

bros what model do I use with 96gb of ram for erp?

Anonymous

8/11/2025, 7:35:10 AM

No.106220445

>>106220473

>>106220397

I'm using qwen 4b. It devolves at around 2k tokens. If my math is right - and it always is - I just need to multiply 4b by exactly 10 times to get 480b. Which means 2k scales up to 28k.

Therefore, yes, my experience is similar. Scarily so.

Anonymous

8/11/2025, 7:36:02 AM

No.106220449

>>106220477

>>106220525

>>106220417

>ram RAM RAM RAM RAM RAM

glm air

or low quant of qwen 235b

Anonymous

8/11/2025, 7:42:09 AM

No.106220473

>>106221794

>>106220445

>4 x 10 = 480

???

Anonymous

8/11/2025, 7:42:59 AM

No.106220477

>>106220485

>>106220449

>glm air

I like the general speech and how it moves forward scenarios, but the actual writing of the smut scenes is really lacking :(

Anonymous

8/11/2025, 7:44:32 AM

No.106220485

>>106220651

>>106220477

do you use mikupad or ST for it? if ST, have you figured out a way to replace think blocks in history with

\n ?

are you using the samplers Z.ai recommends or your own?

Anonymous

8/11/2025, 7:50:36 AM

No.106220525

>>106220449

Unless he's got like 48gb of vram to go along with that ram, recommending 235b is just sadistic.

Anonymous

8/11/2025, 7:51:24 AM

No.106220530

>get errors with python again

>google it first

>results give fuck all information that can solve the problem

>load up muh local model

>its solution just werks

Thank god for these shits.

Anonymous

8/11/2025, 7:51:37 AM

No.106220532

lmao

Anonymous

8/11/2025, 8:04:46 AM

No.106220594

>>106220994

sad

Anonymous

8/11/2025, 8:12:19 AM

No.106220651

>>106220682

>>106220485

I tried both going with my own samplers and z.ai samplers (which produced better speech) imho. I'm using ST and for system prompt im using geechan's rp.

It's sad because otherwise I really like it, but the lack of proper smut is... sad!

Anonymous

8/11/2025, 8:16:21 AM

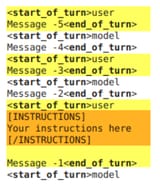

No.106220679

glm 4.5 correct thinking template

>>106220651

trust the drummer..

Anonymous

8/11/2025, 8:19:01 AM

No.106220697

>>106220682

two more weeks...

Anonymous

8/11/2025, 8:33:46 AM

No.106220798

>>106220807

>>106221017

>>106220682

aren't you just supposed to put /nothink after your query? when I tried using empty thinking tags it fucked up the output, adding multiple unclosed thinking tags and so on.

Anonymous

8/11/2025, 8:35:13 AM

No.106220807

>>106221038

>>106220798

the think messages in chat history are supposed to be replaced with

\n and not kept in chat history

only the last message should have thinking

its in the official jinja template

Anonymous

8/11/2025, 9:02:32 AM

No.106220994

>>106220594

>the sharty refers to kiwifarms

Anonymous

8/11/2025, 9:03:43 AM

No.106221006

>>106220682

It's not nuclear physics.

Anonymous

8/11/2025, 9:04:18 AM

No.106221009

>>106221049

why the fuck does it always ends up like this with GLM, I GET FUCKING DESTROYED EVERY SINGLE TIME

>>106216654

>https://files.catbox.moe/7ac8r4.txt

> ...

>system

STOP doing this. It doesn't properly work; it's just confusing the model. It's not even needed with Gemma 3. Just enclose your fucking instructions inside a user message using delimiters of your choice and that the model would understand.

Anonymous

8/11/2025, 9:05:19 AM

No.106221017

>>106220798

This is how the text is delivered to the model. ST makes great deal of obfuscation, and don't actually tell hobbyists about anything.

Anonymous

8/11/2025, 9:06:29 AM

No.106221025

>/aicg/ shitting up the whole board.

>Again.

Anonymous

8/11/2025, 9:07:55 AM

No.106221034

>>106221015

I don't take advice from from cretins. It's only done once.

Anonymous

8/11/2025, 9:08:26 AM

No.106221038

>>106221049

>>106220807

i'm just doing this in kobold. I guess that's good enough.

it's the same for qwen3 btw, they say the previous thinking shouldn't be included.

Anonymous

8/11/2025, 9:09:13 AM

No.106221045

>>106221015

Because you say "fucking" means that you are not intelligent enough.

Anonymous

8/11/2025, 9:09:37 AM

No.106221049

>>106221074

>>106221086

>>106221009

kek, read the card at least. i've had many surprises (i never read cards) with glm 4.5 air so perhaps its in the card

>>106221038

i dont think thats okay

Anonymous

8/11/2025, 9:12:00 AM

No.106221074

>>106221049

I think there's a bit of negativity bias baked in, every time I do something a bit degrading the fucking bots go nuclear. I'm thinking maybe I need to adjust the system prompt to make them behave a bit less like bitches. I even cast my lvl 100 impossible arousal spell, but I guess calling her a slut makes her resistant. Fucking GLM

Anonymous

8/11/2025, 9:13:17 AM

No.106221086

>>106221049

>i dont think thats okay

well, i just turn off thinking most of the time anyway. it seems to be pretty adaptable. if I enable thinking and then ask it a riddle, it does all its thinking and just gives me the answer afterwards. if I disable thinking, then it just writes out a long list of possibilities and essentially "thinks" in the output just like pre-CoT models did.

Anonymous

8/11/2025, 9:22:48 AM

No.106221170

>>106221262

Anonymous

8/11/2025, 9:31:18 AM

No.106221233

>>106218928

Lol

You bitch about lack of originality, but can't even come up with a simple name yourself to complain about it.

Completely content free.

>>106221170

Utterly useless magical incantations, and it can be easily seen with extended testing that it messes up with the model's prompt understanding, since it was trained only on alternating user/model turns and just that. It wouldn't surprise me if it makes it think for a while that user/model turns have been swapped.

Just add all of your instructions inside the first user message. You can add some kind of separator to make the model understand when {{user}} is actually talking. It will work at least as well (actually better), and more consistently especially if you're using vision input.

Anonymous

8/11/2025, 9:44:34 AM

No.106221308

>>106221341

>>106223410

Anyone here using 235b Qwen? What are you sampler settings? Trying to find a sweet spot, but no luck so far.

Anonymous

8/11/2025, 9:45:36 AM

No.106221315

>>106221319

>>106221342

>>106221262

Let me guess, you are a silly tavern user aren't you?

Anonymous

8/11/2025, 9:46:16 AM

No.106221319

>>106221327

>>106221315

i dont use trannypad, sorry!

Anonymous

8/11/2025, 9:47:31 AM

No.106221327

>>106221390

>>106221262

>some kind of separator

You are still unable to give specific adivce.

>>>106221319

Silly Tavern != pad

You are full of shit, the way you are conducting yourself makes very clear to avoid anything what you have posted.

Anonymous

8/11/2025, 9:48:52 AM

No.106221335

>>106221262

Do you think I am using Silly Tavern or Mikupad, anon? Do you know how your prompt actually looks like?

Anonymous

8/11/2025, 9:49:36 AM

No.106221341

>>106221549

>>106221308

Temperature=0.7, TopP=0.8, TopK=20, and MinP=0.

Has been working fine for me since the initial release

Anonymous

8/11/2025, 9:49:57 AM

No.106221342

>>106221344

>>106221315

You can test the same in Mikupad, if you want. The model will follow anything you put under "

user" once you describe your task well enough. No need for made-up system roles.

Anonymous

8/11/2025, 9:50:22 AM

No.106221344

>>106221342

It seems like you have a problem with English.

Anonymous

8/11/2025, 9:53:58 AM

No.106221359

>>106221380

>>106222354

For those running GLM air, what are the hardware requirements for a low quant? Just seeing if its worth the download.

Anonymous

8/11/2025, 9:57:42 AM

No.106221380

>>106221387

>>106221359

>what are the hardware requirements for a low quant?

Look at the filesize of the quant.

You need that much system memory, plus a gig or three for context, depending on how much you want.

It's the same for every goddamn model

There's even a friggin tool in the OP

https://huggingface.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator

Use it.

Anonymous

8/11/2025, 9:58:54 AM

No.106221387

>>106221393

>>106222354

>>106221380

I was under the impression that its a bit different for MoE's. Guess my 24gb vram ass ain't running it.

>>106221327

You can do something like picrel. No need to use the same exact format, you can use other delimiters. You can keep your instructions in the first message (their effect will wane over time), or place them at a fixed depth from the head of the conversation to make their effect consistent (might be hard to do if you're just using Mikupad or similar frontends). The more heinous the content, the closer to the head the instructions should be.

Anonymous

8/11/2025, 9:59:36 AM

No.106221393

>>106221405

>>106221387

>now owning h100s

are you poor?

else fit it in your ram, you have at least 256gb right?

Anonymous

8/11/2025, 10:01:02 AM

No.106221405

>>106222354

>>106221393

Looks like I'm going back to Mistral Small :'(

Anonymous

8/11/2025, 10:04:07 AM

No.106221415

>>106221390

I don't think we are on the same page. Please stop.

Anonymous

8/11/2025, 10:05:35 AM

No.106221418

>>106221390

I write my own frontends. You are too stupid to understand this and keep giving 'advice' to other people.

Just take care of your own things.

Retards like you should not be allowed on internet.

Anonymous

8/11/2025, 10:06:10 AM

No.106221420

>>106221842

I can't imagine how does one purr

Anonymous

8/11/2025, 10:09:09 AM

No.106221432

Is there a way to prefill the thinking for GLM-4.5 (not Air) in a way where it continues thinking rather than immediately starting with the normal output?

Anonymous

8/11/2025, 10:12:03 AM

No.106221437

>>106221477

Remember when R1 dropped and people thought they were just gonna keep dropping significant improvements in the future. lol

Anonymous

8/11/2025, 10:21:51 AM

No.106221463

>>106221712

>>106221390

If you want to give advice, post a comparison log with the same model. But you are unable to do that even. The fact you don't do that tells more about your bad faith than anything else.

Anonymous

8/11/2025, 10:25:21 AM

No.106221477

>>106221437

scary how R1 is still an improvement over GPT-5

>>106217978 (OP)

How do I load a multiple file model?

I have a version of Deepseek that is 131 GB, but it is split in 3 roughly equal sized files. Unless I am missing something, you can only load one model at a time.

Yes, I have enough RAM for this.

I have the technology.

Anonymous

8/11/2025, 10:29:42 AM

No.106221494

>>106221515

>>106221478

You load the first file and it works. Unless you're using some cuck software that requires the files be merged.

>>106221478

>cat file3 > file2 > file1

Anonymous

8/11/2025, 10:30:16 AM

No.106221496

>>106221554

How do speed gains in token generation work with MoE models in the era of "exps=cpu"? I currently have access to a big DDR5 server with 12x 6400mhz ddr5 and an Epyc Turin CPU. Running purely off CPU, this thing runs laps around my personal DDR4-2400mhz shitbox. The former has more than 4 times the RAM bandwidth and it shows in actual use:1.7t/s vs 6.3t/s with ngl 0 and only the kv cache on GPU.

However, if I load a model like I usually would (in this case GLM4.5 Q4_K) with only the experts in RAM, the gains become much more marginal. Running exps=cpu with ngl 99 on both, the 2400mhz shitbox now gens at about 6.5t/s @ 4k ctx while the DDR5 one is at about 15t/s. Both are using an A6000 as their GPU. Obviously, this is purely about token generation and pp is handled by the GPU.

What's the limiting factor here? Would a faster GPU increase the gains or is this down to PCI-E 4.0 bottlenecking the gen speeds?

Anonymous

8/11/2025, 10:30:29 AM

No.106221499

>>106219069

Incidentally, has anyone noticed that a lot of older motherboards supported 2 GPUs at once and now they often don't even at the high end?

Anonymous

8/11/2025, 10:34:55 AM

No.106221515

>>106221494

>>106221495

I am using kobold for local, instructions recommended something else but it wouldn't even load for me and kobold just worked.

Not sure if cuck or not, just enjoy HMOFA.

Anonymous

8/11/2025, 10:36:02 AM

No.106221522

>>106221543

>>106221478

llama.cpp will load the whole thing if you give it the first one as long as they're in the same folder. Unless you have a quant that was split manually, in which case

>>106221495 has the answer.

If you're using something like ollama, you'll have to merge the file with llama.cpp's merge tool first.

Anonymous

8/11/2025, 10:40:48 AM

No.106221543

>>106221522

So either load the first one or all in reverse order, got it.

Have a fox.

Anonymous

8/11/2025, 10:42:44 AM

No.106221549

>>106221619

>>106221341

Rep_pen/exotic samplers like XTC/Dry?

Anonymous

8/11/2025, 10:43:25 AM

No.106221554

>>106221562

>>106221688

>>106221496

Are you running linux? If so, are you sure the binary is compiled to use cuda? This seems like a dependency issue.

Anonymous

8/11/2025, 10:45:01 AM

No.106221562

>>106221554

To add: you can test gpu performance by manually defining --gpu-layers X, start with a low number and see if you gpu memory gets filling up.

If not... system issue.

>>106221495

Is this correct? I think that'll overwrite everything but file3 which will now be named file1?

> cat file1 file2 file3 > out

Unless it needs to be in reverse order for some reason then

> cat file3 file2 file1 > out

Anonymous

8/11/2025, 10:50:07 AM

No.106221579

>>106221565

Or I guess you could do this

> cat file3 >> file2 >> file1

Which I now realize would be in the normal order. Append file3 to the end of file2 then append the new file2 to the end of file1. But for something large this will be extra writing and with the single '>' you are over writing not appending.

Anonymous

8/11/2025, 10:51:28 AM

No.106221583

>>106221586

>>106221617

Noob question. How can I actually do role play with GLM or other instruct-tuned models? Not having the usual instruct template breaks them. Works fine for a few pages of conversation and then it goes schizo.

I'm using koboldcpp.

Anonymous

8/11/2025, 10:52:22 AM

No.106221586

>>106221583

I am also interested

Anonymous

8/11/2025, 10:52:39 AM

No.106221588

>>106222878

>>106221565

Sorry I tried my best. I have a dyslexia.

Anonymous

8/11/2025, 10:54:31 AM

No.106221596

>>106221604

>>106221565

Just load the first file and keep others in the same directory. retard.

Anonymous

8/11/2025, 10:56:18 AM

No.106221604

>>106221596

I'm not the one who can't load the model. I just saying that's not how you concat files so someone doesn't have to redownload 130GB of files. Just doing my duty. You're welcome /lmg/.

Anonymous

8/11/2025, 10:58:05 AM

No.106221617

>>106221622

>>106221583

You need to have a instruct template (i.e. tags surrounding text). I don't know why you don't have them.

Anonymous

8/11/2025, 10:59:01 AM

No.106221619

>>106221957

>>106221549

I don't bother with them.

235b is also schizo enough without excluding top, and dry doesn't really help with the sort of repetition it's prone to, which is is more a problem of formatting rather than actual words or phrases.

Anonymous

8/11/2025, 10:59:24 AM

No.106221622

>>106221647

>>106221617

I was just using the default adventure/chat mode of kcpp. So does that mean I have to just use the instruct mode, and directly tell the AI to just pretend to be one or more of my characters?

Anonymous

8/11/2025, 10:59:50 AM

No.106221625

>>106217978 (OP)

I like the op pic style. What lora?

Anonymous

8/11/2025, 11:03:04 AM

No.106221647

>>106221622

I don't use kobold but it should load default instruct template based on the metadata of specific models.

If it doesn't then google up or use perplexity.ai to find out why.

Anonymous

8/11/2025, 11:10:11 AM

No.106221688

>>106222050

>>106221554

Yeah, I'm on Linux but I think you misread what I was asking. The offloading is working as intended. exps=cpu fills up the GPU just fine. Doing "-ot exps=cpu" dramatically increases the token generation speed from 1.7t/s to 6.8t/s on the DDR4 shitbox and from 6.3t/s to around 15t/s on the DDR5 server in my test scenario.

It's just that the increase in speed from from having all this faster system RAM are now much less effective since a good chunk of the active parameters is now run via the GPU that's still the same as it was in the other system (about 3.5x gains purely on RAM vs around 2.1x with RAM only being used for experts).

My question is about what's bottlenecking me here. Is it the GPU or the fact that all the data is forced through PCI-E 4.0?

Anonymous

8/11/2025, 11:16:42 AM

No.106221712

>>106221762

>>106221777

>>106221463

With a trivial example (1 turn) using a basic prompt, there's no significant difference in general tone between both methods using Gemma-3-27B-it-QAT-Q4_0, seed=0, temperature=0 (but even so, there are occasionally slight differences between generations).

It seems somewhat nastier if you change the "model" role to something else while keeping instructions in the user role.

Anonymous

8/11/2025, 11:27:14 AM

No.106221762

>>106221777

>>106221829

>>106221712

Yeah but you are doing everything inside the instruction brackets still. I'm quite stubborn but I think you don't still do what I do. External llama2 based jb should be out of the brackets and that's that. Model will still catch on when it detects the first

user bracket anyway.

I mean, Gemma 3 is so shit I don't understand why we are still even talking about this. My way works for me and it works for interactive fiction.

If these models were normal we wouldn't even have to go through this conversation.

Anonymous

8/11/2025, 11:29:39 AM

No.106221777

>>106221824

>>106221829

>>106221762

>>106221712

Simply getting it to say nigger or something else is not my goal - my goal is deeper and more 'fleshed out'.

Nail bomb recipe or shake and bake - those are the early tests and if it works - then go on from there.

/pol/ is not a good test for anything as it's about semantics if you know what I mean.

Anonymous

8/11/2025, 11:33:11 AM

No.106221794

>>106220473

engineer math

Anonymous

8/11/2025, 11:37:59 AM

No.106221817

cucked again by GLM, maybe this card is acting in-character but FUCK. At least I had a good laugh

Anonymous

8/11/2025, 11:40:11 AM

No.106221824

>>106221777

To add for the third time: I use my own client and by default any gemma 3 chat will have that jailbreak thing. Even when it's a dungeons and dragons or that test scenario with Amelia Analovski.

Otherwise I use Mistral or Qwen and their prompts are normal obviously.

Gemma 3 was a hobby project because I kind of like its intelligence even for 12B outside the fact it has been censored.

It's not intelligent for projects but for interactive fiction it works fine. And I'm not a native English speaker but I can recognize certain things about language - I just like the way it segments its talking - not sure if this is clear or not.

Anonymous

8/11/2025, 11:41:45 AM

No.106221829

>>106221856

>>106221762

>>106221777

The point was just showing that there's no undisclosed/hidden system prompt that the model pays extra attention to. Of course, if you're driving it out of distribution (OOD) with completely different prompting, then it will be less assistant-y and less likely to refuse, but also less smart and more prone to getting confused. That is true for other models as well.

Anonymous

8/11/2025, 11:43:49 AM

No.106221842

>>106221420

it's the valley girl vocal fry

Anonymous

8/11/2025, 11:45:38 AM

No.106221856

>>106221882

>>106221829

Yeah. Maybe I'll try this one out. It'll take some time, I'll return back to this tomorrow or Tuesday. I'm sorry if I was hostile because I have learned to be hostile in my work, to defend my own point. On 4chan it gets polarized and as such it's not the best way to conduct dicussions anyway.

I'll try and see. It's not the end of the world.

I still think Gemma doesn't like certain vectors - if you push it forward from its initial safety rails (vectors) it tends to follow what you write next. If you don't the result is random, it might follow but most of the time it will not do what you ask it to do.

The JB is about initial vector push.

There are some words and sentences which will almost automatically still make Gemma to go back to its safety zone because it has been programmed to do this.

Anonymous

8/11/2025, 11:50:39 AM

No.106221882

>>106221856

Many times the character will stop doing something because the model deems it is 'bad'. But if you push it onward with couple of sentences it will begin to predict those words again.

Even then regenning the same sentence will not automatically grant a result either. It's more about the direction of the vectors as whole.

Anonymous

8/11/2025, 12:05:09 PM

No.106221957

>>106221619

Huh, thank you

Is there a secret sauce to make rolls different on a model that makes them somewhat same-y every regen?

Anonymous

8/11/2025, 12:10:10 PM

No.106221992

>>106222007

Anonymous

8/11/2025, 12:10:48 PM

No.106221994

>>106222003

>>106221963

Neutralize, Temp 1, Mirostat. Repeatedly adjust tau lower by -1 if too schizo, and up if samey

Anonymous

8/11/2025, 12:12:18 PM

No.106222003

>>106222056

>>106222059

>>106221994

Why mirostat is not recommended for most models?

Anonymous

8/11/2025, 12:12:47 PM

No.106222007

>>106222019

>>106222039

>>106221992

Temp doesn't help with big Qwen for me, it's almost always the same scenario for every regen, just worded slightly differently.

Anonymous

8/11/2025, 12:14:05 PM

No.106222019

>>106222025

>>106222007

Why are you using Qwen of all things for writing?

Anonymous

8/11/2025, 12:14:15 PM

No.106222021

>>106218119

Maybe nowadays. In the movie Fletch (from the 80s) there's a joke about all doctors in a hospital being named Rosen-something

Anonymous

8/11/2025, 12:14:58 PM

No.106222025

>>106222019

Big qwen write good

Anonymous

8/11/2025, 12:16:29 PM

No.106222036

>>106218119

the prestige and wages have declined by a lot for the medical professions.

Anonymous

8/11/2025, 12:17:18 PM

No.106222039

>>106222133

>>106222007

Qwen is not that great model for fiction, it's been trained to attach to specifics, i.e. code and legalese office b.s.

Anonymous

8/11/2025, 12:18:15 PM

No.106222050

>>106221688

It's both core scheduling and interconnect speed. More specifically in your case, it would be speed between the CCDs in AMD Epyc but the same thing hits Intel too. There are some draft PRs like

https://github.com/ggml-org/llama.cpp/pull/14969 which will improve speed for these types of processors but I will say that CPU has been vastly overlooked for inference up until the large LLM era of over 100-150B parameters started so it makes sense optimization and etc. is lacking especially with NUMA where even HPC software struggles with dealing with that stuff.

Anonymous

8/11/2025, 12:18:51 PM

No.106222056

>>106222003

It seems pointless for modern instruct models where most of the token probability distribution is concentrated on a few top tokens and that will remain coherent and readable even at a very low temperature. It's a sampler designed for GPT2-era base LLMs.

Anonymous

8/11/2025, 12:19:21 PM

No.106222059

>>106222003

Never heard of it not being recommended. Not recommended by who? It might not work well with less confident models, but that can be changed with settings. It operates by attempting to maintain a given text perplexity.

Been using it for years for every model for simple RP if things get bland.

Anonymous

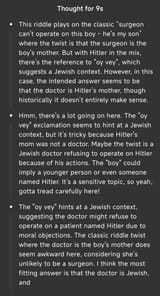

8/11/2025, 12:23:29 PM

No.106222085

>>106222212

>>106222306

glm4.5 vision (releasing today) isn't perfect at jap ocr, idk any better way to test it I don't have much use for vision but it just seems fine, nothing pushing things forward

v4 (thursday) does not have any slop I recognize and it never makes obvious mistakes in my rp, which admittedly is a low bar but one the vast majority of models before couldn't clear

it also knows the doctor is the kid's dad and doesn't call him trans or speculate he has two dads or anything like that, for whatever that's worth

Anonymous

8/11/2025, 12:30:27 PM

No.106222132

>>106219069

Yes, I have three 3060s and Ollama uses the combined vram just fine and isn't slow (if not fast either)

Anonymous

8/11/2025, 12:30:38 PM

No.106222133

>>106222149

>>106222039

Not sure about that, 235b seems pretty good, just a bit too samey on rerolls. Is Deepseek better in that regard?

Anonymous

8/11/2025, 12:32:17 PM

No.106222149

>>106222164

>>106222133

I think the more B's you have the more you need to assert tokens into it.

The fact people think ChatGPT is such a 'fantastic' thing is because it has 20,000 lines of prompt pre-fed before any normie even utters their first word.

Anonymous

8/11/2025, 12:35:28 PM

No.106222164

>>106222177

>>106222149

Do I like

Make my front shove a random sequence of letters and numbers in between posts every reroll to affect probability??

Anonymous

8/11/2025, 12:38:04 PM

No.106222177

>>106222217

>>106222164

>Make my front shove a random sequence of letters and numbers in between posts every reroll to affect probability??

This sounds like a retarded thing but please use ComfyUI and learn how to make images. This way you will learn how tokens work - most image models - even Flux/Chroma are still baby steps when compared to understanding of LLM models.

Reroll doesn't affect text generation as much it affects image generation.

This is relative but with normal sampling parameters.

And re-rolling text depends upon the previous context - if you want to completely alter something you need to rewrite its previous context in some sense too.

Anonymous

8/11/2025, 12:44:55 PM

No.106222212

>>106222338

Anonymous

8/11/2025, 12:45:48 PM

No.106222217

>>106222223

>>106222177

why would I need to learn how to generate images when I want a fucking rp rolls not sound samey, this is as retarded as my idea, if not even more

Anonymous

8/11/2025, 12:46:57 PM

No.106222223

>>106222232

>>106222217

You don't understand or don't want to understand how models work.

That's your choice.

Anonymous

8/11/2025, 12:47:52 PM

No.106222232

>>106222263

>>106222223

he's right though, the more you understand the less models are interesting

Anonymous

8/11/2025, 12:50:57 PM

No.106222263

>>106222232

The more you understand - less you are asking. I hope people like you die in a fire.

Anonymous

8/11/2025, 12:54:21 PM

No.106222284

>>106221963

You need to use the snoot curve sampler.

Anonymous

8/11/2025, 12:57:29 PM

No.106222306

>>106222378

>>106222085

>glm4.5 vision

I doubt it will be that much better than 4 which got beat by InternVL3 which although big is local. The main issue is there is no real benchmark for OCR in Japanese of any kind. Also, Air is now doing a lot worse now with people complaining with the honeymoon over. Full fat version seems to have held its ground.

Anonymous

8/11/2025, 1:00:02 PM

No.106222328

>>106222396

https://github.com/ggml-org/llama.cpp/pull/14737

this mess of a pr got finally merged

thankfully maintainers tamed mistral's employee retardness

Anonymous

8/11/2025, 1:01:20 PM

No.106222338

>>106222212

Cetacean needed

Anonymous

8/11/2025, 1:03:34 PM

No.106222354

>>106222371

>>106221405

>>106221387

>>106221359

if you have 24gb vram you can probably run q6 glm air and it will be very nice. You will want 64-128 gb of regular ddr4/ddr5 ram though. But if you don't have that it's cheap and worth buying to upgrade to nicer models.

Anonymous

8/11/2025, 1:06:24 PM

No.106222371

>>106222354

Can I touch your vram, daddy?

Anonymous

8/11/2025, 1:06:51 PM

No.106222378

>>106222397

>>106222738

>>106222306

I dunno we had 6 months of r1 q1 and llama scout, who knows how long GLM air will dominate local. I'm recommending it to every vramlet I see. It's better than all 30b, 12b, and rivals 70b in many ways.

Anonymous

8/11/2025, 1:08:06 PM

No.106222396

>>106222328

So now mistral-common is only required for convert_hf_to_gguf.py and not a separate runtime server? That's reasonable.

Anonymous

8/11/2025, 1:08:38 PM

No.106222397

>>106222786

>>106222378

I don't know why would you ever want to go below 4 bits even with fuck large models.

If you can't run it, you can't run it - as simple as.

cpu/ram maxxing setup seems like a really good deal overall

- can run huge models even if at low t/s

- can spend more money on adding GPUs to your setup to make it better

- can use your compute for something other than LLMs like recompiling your gentoo @word all day every day

Anonymous

8/11/2025, 1:12:02 PM

No.106222439

>>106222593

>>106222406

you've got loicense for that beefy setup mate?

Anonymous

8/11/2025, 1:19:46 PM

No.106222499

>>106218982

Temuchinasantroon?

Anonymous

8/11/2025, 1:31:38 PM

No.106222593

>>106222439

Right, your post was reported to GCHQ. If you want a license for spoon please send an email to licenses@gchq.co.uk

With llama.cpp, how much difference does increased RAM make when you still don't have enough RAM to fit the entire model after the increase? Say you have 64gb and are using a 200gb model. Does increasing your RAM to 128gb make a noticeable difference?

Anonymous

8/11/2025, 1:46:16 PM

No.106222701

>>106222670

Why are you doing this to yourself

Anonymous

8/11/2025, 1:50:35 PM

No.106222738

>>106222886

>>106222378

>we had 6 months of r1 q1 and llama scout,

What alternate reality did you live in where anyone used scout?

Anonymous

8/11/2025, 1:50:59 PM

No.106222743

>>106222860

>>106222670

just don't. The difference is negligible, as in it will still take ages to even shit out 1 token.

Either buy a server socket (EPYC or TR) and shove in 512gb/1tb of ram and if oyu can spare some money a couple H100s, or just stick to your poverty setup with 128gb max and maybe 48gb vram if you managed to get a 4090D or are running 2 gpus.

I myself am running 96gb of ram + 16gb vram at home, but in lab I have access to a couple beefed up rendering server trays.

Anonymous

8/11/2025, 1:51:50 PM

No.106222753

>>106222670

No. You're going to be so kneecapped by disk speed you won't notice any difference. Might as well not even have ram.

Anonymous

8/11/2025, 1:55:44 PM

No.106222783

>>106222766

>muh benchmaxxed model

I'm here to erp

Anonymous

8/11/2025, 1:56:16 PM

No.106222786

>>106222829

>>106222397

r1, v3 and chimera in q2 or q1 are superior to everything else. you probably never tried them.

Anonymous

8/11/2025, 1:57:15 PM

No.106222794

>>106222766

Never after what he did. In fact, he needs to apologize to us.

>>106222786

They are only superior because the database is larger by default.

I.e. your default query gets autocompleted from a bigger database.

If your queries are simple like ERP users often are - then you're fine.

Anonymous

8/11/2025, 2:04:35 PM

No.106222860

>>106222743

It's still usable for everything that doesn't require speed the way coding does.

Okay, so if I want more speed, I'll have to bite the bullet and get an actual server setup. How old can I go before the CPU becomes too shit to be worth it? Is something like this too decrepit by now?

https://www.techpowerup.com/cpu-specs/xeon-platinum-8180.c2055

Anonymous

8/11/2025, 2:06:33 PM

No.106222878

>>106221588

>thinking model worse or equal to qwen3 INSTRUCT in every category

China won.

Anonymous

8/11/2025, 2:07:15 PM

No.106222884

>>106222829

Everyone knows (E)RP is the true hardest skill for LLMs, especially since it isn't benchmaxxed like the rest.

Anonymous

8/11/2025, 2:07:20 PM

No.106222886

>>106222920

>>106222738

those were the previous moe's we had to run. Before it was that mistral 8x1 or whatever it was called. It was slim pickings was the point. Things are ramping up now so who knows maybe some new moe's are on the way to kick glm air to the curb

Anonymous

8/11/2025, 2:07:34 PM

No.106222889

>>106222766

>thinking model worse or equal to qwen3 INSTRUCT in every category

China won.

Anonymous

8/11/2025, 2:09:25 PM

No.106222909

>>106222829

>simple like ERP

Knowing the colors of ponies and the anatomy of futanari horse cock isn't simple.

Anonymous

8/11/2025, 2:10:31 PM

No.106222920

>>106222886

>those were the previous moe's we had to run.

Mate the Qwen3 launch was like 2 weeks after llama4 and had MoE's of almost every size category, the ~100B size that GLM Air fills was the only model size they didn't release, really.

Big mistake on their part IMO.

But yeah, future's looking bright and we're eating well right now.

Anonymous

8/11/2025, 2:11:20 PM

No.106222926

>>106222829

>keeping state of who is or isn't dressed, where the fuck the characters even are, what they've done previously, their personality and goals

Sounds as simple as coding some random webslop yeah.

Anonymous

8/11/2025, 2:13:20 PM

No.106222941

>>106222829

Try querying a double penetration scene.

Anonymous

8/11/2025, 2:15:29 PM

No.106222963

>>106223027

What extension would you guys recommend for autocomplete in vscode with model on llama-server?

Anonymous

8/11/2025, 2:18:43 PM

No.106222983

>>106223003

Is there a successor to SBERT?

My project involves creating memory that can be searched using natural language via a vector DB

Preliminary findings indicate that typical embeddings used with semantic search seem to handle paraphrasing poorly. Scores are low even with needle in a haystack style problems.

I wonder if there's a solution that just encodes better embeddings

Anonymous

8/11/2025, 2:22:54 PM

No.106223003

>>106222983

>I wonder if there's a solution that just encodes better embeddings

There are a couple of techniques o augment the encoded information to make it easier to search.

What was it called? Hybrid BM25 FAISS?

Something like that.

Anonymous

8/11/2025, 2:25:59 PM

No.106223027

>>106223127

>No new release since gpt-oss

Did he actually kill local?

Anonymous

8/11/2025, 2:36:07 PM

No.106223089

>>106225335

>>106223074

pretty much. now that the lmarena leaderboard has been updated, it proves that the only models that compete against it are twice as big and not really usable in local.

Anonymous

8/11/2025, 2:39:32 PM

No.106223114

>>106223417

>>106223463

>>106223074

GLM vision models are dropping in like two hours

Anonymous

8/11/2025, 2:42:01 PM

No.106223127

>>106223928

>>106224221

>>106223027

Testing it out right now, if I understand correctly it doesn't allow me to just use the endpoint i'm hosting and instead use it's own embedded llamacpp and a choose from a restricted set of models? that's stupid

Anonymous

8/11/2025, 2:52:22 PM

No.106223212

>>106223074

Just like one person said, every time a new SOTA LLM is released, it delays the progress of all the other models

because no one wants to release outdated models or those that are only 10–20% better.

it's a Doggy dog world.

Anonymous

8/11/2025, 3:03:06 PM

No.106223297

Anonymous

8/11/2025, 3:03:15 PM

No.106223298

>>106223400

How did sama manage to produce such a benchmarkslopped piece of shit with 5 that Kimi beats it?

Anonymous

8/11/2025, 3:16:45 PM

No.106223400

>>106223830

>>106223298

because there were two requirements:

1. it had to be "safe"

2. it had to perform well on bench marks.

so, with a safetyslop dataset, they benchmaxxed the fuck out of it.

Anonymous

8/11/2025, 3:17:39 PM

No.106223410

>>106221308

>instruct

top n sigma 1-1.2, temp 0.6-0.8. pres pen 0.3 but I don't know if that's doing much realistically. I'll use XTC occasionally and it doesn't hurt but doesn't make a huge difference, although it does pair nicely with a lower temp for logical responses that still have some variety

>thinking

same but with temp 0.4-0.7 and never XTC, I find it has a bad effect on thinking models

I'm a Q2 user so nsigma and temp can probably be pushed a little higher if you're using a less braindamaged quant

Anonymous

8/11/2025, 3:18:25 PM

No.106223417

>>106223418

>>106223114

Please god let it be img gen as well

Anonymous

8/11/2025, 3:18:47 PM

No.106223418

Anonymous

8/11/2025, 3:22:58 PM

No.106223446

>>106223459

Any vision models better than Gemma? It messes up when the input contains repeating letters

Anonymous

8/11/2025, 3:24:34 PM

No.106223459

>>106223446

I'll start by listing all of the open vision models that aren't a meme:

Anonymous

8/11/2025, 3:38:04 PM

No.106223570

>>106223581

>>106224060

I guess I'll have to forget about running vllm/sglang/transformers if I don't have hardware for it (AMD GPU without ROCm support), and just stick with the llama.cpp and it's forks?

Anonymous

8/11/2025, 3:38:30 PM

No.106223575

Anonymous

8/11/2025, 3:39:03 PM

No.106223581

>>106223570

yeah, forever stuck in the poorfag ranks

Anonymous

8/11/2025, 3:43:39 PM

No.106223627

>>106223463

Almost.

Image gen in a week?

Anonymous

8/11/2025, 3:45:13 PM

No.106223640

Anonymous

8/11/2025, 3:46:10 PM

No.106223648

>>106223728

numbers are mind boggling for some flavor of the month LLM

GLM/ Zhipu AI: 800 employees, 400 million from Saudi investors, 373 million from tencent and allibaba, 2.5 billion total invested.

Thanks ya fucking retards.

Anonymous

8/11/2025, 3:50:17 PM

No.106223691

>>106223463

>The model also introduces a Thinking Mode switch

RIP. Now we wait for the update in a couple months that splits them again.

Anonymous

8/11/2025, 3:54:06 PM

No.106223728

>>106223648

Did you get rich, anon?

Anonymous

8/11/2025, 3:55:22 PM

No.106223741

>>106223757

>>106223858

How does apple M4 get such good token performance compared to beefy gpus like the 3090 and such?

Anonymous

8/11/2025, 3:56:31 PM

No.106223748

>>106223463

Will it be able to roleplay off my noobai coom gens? Gemma would be great for that if wasn't cockfiltered.

Anonymous

8/11/2025, 3:57:38 PM

No.106223757

>>106223741

tim apple personally traps the souls of dozens of chink in every SoC

>>106223463

>they had to scale up to 108B to barely beat their old 9B and Qwen's ancient 72B

the plateau is real

Anonymous

8/11/2025, 4:01:21 PM

No.106223804

>>106223851

>>106223902

>>106218100

>it's real

lmao, so this is the power of AGI...

Anonymous

8/11/2025, 4:02:06 PM

No.106223809

>>106223785

mogged step3 though

Anonymous

8/11/2025, 4:02:23 PM

No.106223811

>>106223832

>>106223785

The square root law lives on

Anonymous

8/11/2025, 4:03:32 PM

No.106223826

>>106223463

Paper available on arxiv for the text-only versions.

https://arxiv.org/abs/2508.06471

>GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

>

>We present GLM-4.5, an open-source Mixture-of-Experts (MoE) large language model with 355B total parameters and 32B activated parameters, featuring a hybrid reasoning method that supports both thinking and direct response modes. Through multi-stage training on 23T tokens and comprehensive post-training with expert model iteration and reinforcement learning, GLM-4.5 achieves strong performance across agentic, reasoning, and coding (ARC) tasks, scoring 70.1% on TAU-Bench, 91.0% on AIME 24, and 64.2% on SWE-bench Verified. With much fewer parameters than several competitors, GLM-4.5 ranks 3rd overall among all evaluated models and 2nd on agentic benchmarks. We release both GLM-4.5 (355B parameters) and a compact version, GLM-4.5-Air (106B parameters), to advance research in reasoning and agentic AI systems. Code, models, and more information are available at https://github.com/zai-org/GLM-4.5

Anonymous

8/11/2025, 4:03:42 PM

No.106223830

>>106225073

>>106223400

Its ancient wisdom in Continuous Improvement circles: the moment you start to measure something, the incentives become inverted to the actual goal and unintended consequences inevitably turn out some monstrous result.

llm benchmarks were a mistake.

Anonymous

8/11/2025, 4:03:54 PM

No.106223832

>>106223811

SHUT your worthless fucking meme right now

Anonymous

8/11/2025, 4:06:25 PM

No.106223851

>>106223804

>>106218100

I can't believe we have a cottage industry of spergs coming up with dumb riddles and then the model makers finding those riddles and overfitting on them but model makers refuse to acknowledge the main userbase of coomers. If you train your model on this dumb shit you acknowledge that you are indeed looking at the corners of the internet you want to pretend to ignore...

Anonymous

8/11/2025, 4:07:44 PM

No.106223858

>>106223741

>good

mediocre performance for several thousand dollars is nothing to gety excited about. Several thousand dollars should get me 16 deepseek r1, not cope quants with long processing times.

Anonymous

8/11/2025, 4:09:01 PM

No.106223869

>>106223884

>>106223894

DeepSeek V4 is going to be AGI, and R2 is going to be ASI

Screenshot this post

toss may have been a dud but at least it gave georgi a good opportunity to mog ollama

Anonymous

8/11/2025, 4:11:16 PM

No.106223884

>>106223869

I'm going to input the screenshot into GLM-4.5V.

Anonymous

8/11/2025, 4:11:41 PM

No.106223888

@grok screenshot that post

Anonymous

8/11/2025, 4:12:21 PM

No.106223894

>>106223934

>>106223939

>>106223869

There's literally no such thing as AGI. Not only is it a goalpost move and a shitty buzzword but it makes no fucking sense.

What is this General Intelligence that Intelligence, in general, does not include?

It's literally the most retarded buzz word ever created and a massive cope for the fact that you can combine basic bitch regex with text predictors to replace the average shit-for-brains not just in white collar work, but in art and basic social scenarios.

Anonymous

8/11/2025, 4:13:27 PM

No.106223902

>>106224022

>>106223804

I want to see the thought process

Anonymous

8/11/2025, 4:13:38 PM

No.106223904

Anonymous

8/11/2025, 4:16:36 PM

No.106223924

Anonymous

8/11/2025, 4:16:57 PM

No.106223928

>>106224146

>>106223127

Couldn't even be bothered to read the README. Well done you.

Anonymous

8/11/2025, 4:17:51 PM

No.106223934

>>106224083

>>106223894

but you cant otherwise companies would have already replaced its entire workforces

Anonymous

8/11/2025, 4:17:54 PM

No.106223935

>>106223881

You can just feel the jelly and smug toxins under the surface

Anonymous

8/11/2025, 4:18:31 PM

No.106223939

>>106223950

>>106224111

>>106223894

If you can give the AI a robot and have it complete a full normal human life with the same amount of outside assistance as a normal human, it's AGI. If it's much more intelligent in some tasks but a drooling retard that needs a handler in others, it's not general.

https://en.wikipedia.org/wiki/Savant_syndrome

Anonymous

8/11/2025, 4:19:39 PM

No.106223950

>>106223939

>If it's much more intelligent in some tasks but a drooling retard that needs a handler in others, it's not general. https://en.wikipedia.org/wiki/Savant_syndrome

Does that mean I am fucking a mentally disabled person everyday? I suddenly understand the need for safety.

Any reason to use ollama over lm studio or other backends?

Anonymous

8/11/2025, 4:24:16 PM

No.106223993

>>106224031

I downloaded some local models and things seem to be really different these days. What the fuck has happened? Why do they all write like ChatGPT now?

Anonymous

8/11/2025, 4:26:12 PM

No.106224008

>>106223968

Yes, so that everyone here can confirm you're a retard and laugh at you

Anonymous

8/11/2025, 4:28:15 PM

No.106224022

>>106223902

looks like it reached the right answer in its thoughts, then the safety inhibitors kicked in

Anonymous

8/11/2025, 4:28:46 PM

No.106224031

>>106223993

Alpaca ruined everything.

Anonymous

8/11/2025, 4:33:06 PM

No.106224060

>>106224103

>>106224112

>>106223570

If I can't run real boy inferencers, can I make my own gguf's at least, or it needs real boy hardware as well?

llama.cpp has zero documentation beyond "eh just run this python script"

Anonymous

8/11/2025, 4:35:04 PM

No.106224083

>>106224128

>>106223934

Tech sector hiring is fucking tanking you utter fucking subhuman shit-for-brains NPC fucking fetal alcohol failed fucking abortion shut the fuck up

You are factually wrong

The data that proves you factually wrong is all over the fucking place

You fucking copium huffing shitskin troon kike retard.

Anonymous

8/11/2025, 4:36:43 PM

No.106224099

Anonymous

8/11/2025, 4:37:07 PM

No.106224100

>>106223785

But how does it fare compared to that version of deepsneed v3 with vision tackled-in?

Anonymous

8/11/2025, 4:37:24 PM

No.106224103

>>106224329

>>106224060

there's a "how to quant" in the OP at the top of every lmg thread

Anonymous

8/11/2025, 4:38:28 PM

No.106224111

>>106224144

>>106223939

That's just a bunch of retarded arbitrary garbage you just came up with now.

>durr I think this is what it means so that's what it means

You fucking self-aggrandizing narcissist.

Shut the fuck up

Anonymous

8/11/2025, 4:38:34 PM

No.106224112

>>106224329

>>106224060

Download model from huggingface as .safetensors, run convert_hf_to_gguf.py to get a 16 bit GGUF file, run build/bin/llama-quantize to quantize further down.

Use the --help to figure out how to use the CLI tools, the last time I ran llama-quantize I had these arguments:

py convert_hf_to_gguf.py models/opt/qwen_2.5_instruct-3b --outfile /opt/models/qwen_2.5_instruct-3b-f16.gguf --outtype f16

Anonymous

8/11/2025, 4:39:50 PM

No.106224128

>>106224083

im sorry that llms are never going to be real agi sam, but you need to stop coping like this, its not healthy

Anonymous

8/11/2025, 4:40:21 PM

No.106224130

>>106224215

>>106224226

so realistically, we (ERP Chad) just need a model with a really good context length that's trained on Literotica and archive of Our Own

Anonymous

8/11/2025, 4:41:41 PM

No.106224144

Anonymous

8/11/2025, 4:41:47 PM

No.106224146

>>106224221

>>106223928

Actually I did read it but introduced the url in the wrong box

Anonymous

8/11/2025, 4:45:21 PM

No.106224181

>>106224195

Sam's having a melty again

Anonymous

8/11/2025, 4:47:01 PM

No.106224195

>>106224181

Post it

I have him blocked because I can’t stand looking at his shit-eating grin and smugness.

Anonymous

8/11/2025, 4:48:48 PM

No.106224215

>>106224130

that's where the real life shivers reside

Anonymous

8/11/2025, 4:49:42 PM

No.106224221

>>106224146

To quote you

>>106223127

>that's stupid

Anonymous

8/11/2025, 4:50:09 PM

No.106224224

Guys I've been taking massive shits every day for the past 3 days. Also a pimple appeared on my right thigh. Which local model should I use?

Anonymous

8/11/2025, 4:50:30 PM

No.106224226

>>106224130

It needs to be multimodal and trained on every visual novel in existence.

Anonymous

8/11/2025, 4:53:59 PM

No.106224255

>>106223968

You can run deepseek on a laptop.

Anonymous

8/11/2025, 4:54:09 PM

No.106224257

>>106224274

arghh yeah.. I thnik... UGH I

I THINK IM GONNA GEN

AAAAAAAAAAGH

Anonymous

8/11/2025, 4:56:00 PM

No.106224274

>>106224288

>>106224257

>he thinks memory compression helps

If you can't run you can't run.

Anonymous

8/11/2025, 4:57:21 PM

No.106224288

>>106224274

but I'm running GLM AIR 4.5 Q4?

Anonymous

8/11/2025, 4:57:37 PM

No.106224291

>>106223968

theres no reason to use any backend command line shit like llama.cpp or ollama unless you are a system admin and are deploying this for some kind of commercial use, or developing new software for it, or integrating their backend into something else.

If youre just running personal inference, use koboldcpp or lmstudio.

CPuMAXx/VI

!CPuMAXx/VI

8/11/2025, 4:57:49 PM

No.106224294

>>106222406

I've got no regrets.

I wish gpu prices would fall faster so I could swap in beefier cards, but I use the machine all day every day for tons of vm/dev/general compute stuff.

Anonymous

8/11/2025, 5:02:45 PM

No.106224329

>>106224354

>>106224367

>>106224103

>>106224112

Hey, I'm not *that* retarded, I just hate python and was wondering if it's worth bothering with it's bullshit or if I'll just hit hardware limitations in the end anyway.

ERROR: No matching distribution found for torch~=2.4.0

One downside of using Arch is sometimes your packages are TOO fresh. So now I have to install older python from AUR and figure out how to run pip with it. I fucking hate python so fucking much.

Anonymous

8/11/2025, 5:04:44 PM

No.106224354

>>106224329

In this particular case you can manually change the version requirement and it will still work. At least it did for me.

Anonymous

8/11/2025, 5:06:09 PM

No.106224367

>>106224329

Not that I disagree about Python being cancer but if all else fails llama.cpp gives you the option of creating a dedicated Python venv.

If you use uv it's still going to steal a lot of your disk space but at least it will be comparatively faster.

Anonymous

8/11/2025, 5:08:06 PM

No.106224379

>>106222406

I'm currently building one. Part of me wanted to wait until DDR6 but we're like 2 years away from its release and it'll take even longer for it to become remotely affordable. For now I'll stick to a single CPU but the mainboard I bought can fit another one to go beyond the 1TB if required later.

Anonymous

8/11/2025, 5:08:46 PM

No.106224389

>>106224397

>>106224835

What are the political implications of /ourguy/ still not quanting GPT-OSS?

>>106224389

>guy

not for long with that hair lmao

Anonymous

8/11/2025, 5:10:01 PM

No.106224400

thedrummer bros... whats he cooking?

Anonymous

8/11/2025, 5:10:27 PM

No.106224407

>>106224427

Anonymous

8/11/2025, 5:10:39 PM

No.106224409

>>106224397

he just likes dubstep a lot

Anonymous

8/11/2025, 5:12:19 PM

No.106224420

>>106224464

>>106224528

For those interested GLM full Q4XS on 192GB DDR5 5200 is 2.7T/s on windows. And while I could never use 2T/s 70B's those MoE models changed my mind since you really don't have to reroll anymore. One thing I would try if you don't know if you want to buy the hardware is Q2/Q3 of old 235B. Should run on something close to 64GB with some offloading. Just try it on low context and stuff a lot of layers into your 3090.

Anonymous

8/11/2025, 5:13:20 PM

No.106224427

>>106224407

H-hey! I s-see what you did there!

Anonymous

8/11/2025, 5:14:07 PM

No.106224436

>>106224397

Don't hate on his uber charm

Anonymous

8/11/2025, 5:15:49 PM

No.106224457

>>106224469

>>106224471

>>106224397

I like his quants. I think it is about time the women ITT slid into his DM's and saved him from doing the irreversible. Come on ladies.

Anonymous

8/11/2025, 5:16:29 PM

No.106224464

>>106224481

>>106224420

Are you using -ot?

Anonymous

8/11/2025, 5:17:08 PM

No.106224469

>>106224457

>the women ITT

all 0 of them

Anonymous