/lmg/ - Local Models General

►Recent Highlights from the Previous Thread:

>>106230523

--Paper: Deep Ignorance: Filtering Pretraining Data Builds Tamper-Resistant Safeguards into Open-Weight LLMs:

>106232551 >106232558 >106232569 >106232615 >106232661 >106232729 >106232760 >106233124 >106233537 >106233565 >106234361 >106234378 >106234424 >106234445 >106234485 >106234500 >106234556 >106234688 >106234722 >106234737 >106234742 >106234752 >106234852 >106235045 >106234616

--Shift from open models to government-backed agentic platforms among non-US/China AI firms:

>106230731 >106230744 >106230823 >106230860 >106231332 >106234294 >106234394 >106230899 >106234401

--Full official vercel v0 system prompt sparks critique of oversized AI system prompts:

>106230837 >106230893 >106230936

--Seeking GUI to manage multiple llama.cpp model configurations with per-model overrides:

>106234332 >106234387 >106234423 >106234675 >106234501 >106234601

--Running GLM models in llama.cpp with tensor offloading and MoE optimizations:

>106232512 >106232632 >106232792 >106232804 >106233234 >106233324 >106233339

--Local model repetition issues mitigated by adjusting sampling parameters:

>106234408 >106234489 >106234709 >106234715 >106234748 >106234773 >106235007

--Ollama adoption surge following OpenAI-related announcement with local gpt-oss interest:

>106234824 >106234854 >106235000

--Intel's AI software team stability amid internal restructuring concerns:

>106231280 >106231393 >106231400

--Jan-v1-4B: open-source local alternative to Perplexity Pro:

>106233100

--Running DeepSeek-R1 on RTX 4090D with optimal GGUF quants for roleplay:

>106234479 >106234492 >106234537 >106234543 >106234544 >106234569 >106234647 >106234678 >106234751 >106234693

--gpt-oss-120b performance drop in updated benchmarks raises funding and development concerns:

>106231326

--Miku (free space):

>106235546 >106235558

►Recent Highlight Posts from the Previous Thread:

>>106230528

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/12/2025, 5:12:04 PM

No.106236144

[Report]

Anonymous

8/12/2025, 5:13:41 PM

No.106236162

[Report]

sucking teto's titty

Anonymous

8/12/2025, 5:14:43 PM

No.106236168

[Report]

>>106236131

Sex with this Teto

Anonymous

8/12/2025, 5:20:08 PM

No.106236210

[Report]

>>106236705

>>106236127 (OP)

>Jan

>Uses some paid-for cloudshit api for websearch

Can you hear that, anon? That's me, REEEing into the sky.

I saw a bunch of decent projects using Qwen 2507-4B, and it seems like another branch of the LLM progress tree

where instead of building one massive 400B model, we use a suite of 1B–30B models, each specially designed for specific tasks.

This approach seems more feasible, cost-effective, and something that can be iterated on in a very short amount of time.

Anonymous

8/12/2025, 5:27:04 PM

No.106236273

[Report]

>>106236258

>MUH AGENTIC ASSISTANT

kill you are self!

Anonymous

8/12/2025, 5:27:05 PM

No.106236274

[Report]

>>106236295

>>106236258

I thought everyone realized that the Franken-MoE thing was a huge meme two years ago

Anonymous

8/12/2025, 5:28:12 PM

No.106236285

[Report]

>>106236274

Franken-MoE != a bunch of agents + a router

Anonymous

8/12/2025, 5:32:35 PM

No.106236340

[Report]

>>106236377

>>106236295

Whatever = Whatever I want it to be

Anonymous

8/12/2025, 5:35:37 PM

No.106236377

[Report]

>>106236518

>>106236340

>traps = not gay

???

Anonymous

8/12/2025, 5:38:19 PM

No.106236408

[Report]

>>106236295

thank sam altman for inventing this

Anonymous

8/12/2025, 5:42:36 PM

No.106236452

[Report]

>>106235079

This guy looks like someone who is suffering and wants to die. And instead of killing himself he has decided to make everyone else as miserable as he is. Dick move.

Anonymous

8/12/2025, 5:45:48 PM

No.106236491

[Report]

death to tetotroons

Anonymous

8/12/2025, 5:46:47 PM

No.106236501

[Report]

Yeah, I guess you have to switch targets when no m*ku get posted huh?

>>106236377

In short: Liking "traps" doesn't automatically make someone gay. Sexual orientation depends on attraction to gender identity, not specific presentations or labels. Focus on respecting people's identities and self-labeling.

Anonymous

8/12/2025, 5:48:56 PM

No.106236524

[Report]

>>106236567

>>106236258

I love the idea of an ERP router that switches between Phi, Gemma, GPT-OSS and latest command-r.

Anonymous

8/12/2025, 5:49:05 PM

No.106236526

[Report]

>>106236699

>>106236258

I love my smol models that don't make my potato cry, but it's cope and side-branch of the tech tree at best. The whole ML field is powered by emergent effects that arise from piling more and more unfiltered data into larger and larger models.

Anonymous

8/12/2025, 5:49:20 PM

No.106236530

[Report]

>>106236131

Someone animate this one.

>>106236518

>gender identity

That is not a real thing.

Anonymous

8/12/2025, 5:51:03 PM

No.106236548

[Report]

>>106236571

>>106236539

i identify as a land whale fuck you, you cannot stop me

Anonymous

8/12/2025, 5:52:06 PM

No.106236561

[Report]

>>106238131

Anonymous

8/12/2025, 5:52:45 PM

No.106236567

[Report]

>>106236592

>>106236524

>model A converts erp prompt into abstract puzzle challenge

>safetyslopped model B solves the challenge

>model C rewrites output of model B in a good prose

Anonymous

8/12/2025, 5:52:59 PM

No.106236571

[Report]

>>106236612

>>106236548

*my identity attack helicopter shoots missiles at your identity whale and your identify whale sinks*

Anonymous

8/12/2025, 5:53:49 PM

No.106236583

[Report]

>>106236589

>>106236131

lewder recap next time

Anonymous

8/12/2025, 5:54:47 PM

No.106236589

[Report]

>>106236583

We do need the thread banned.

Anonymous

8/12/2025, 5:54:52 PM

No.106236592

[Report]

>>106236567

You forgot how GPT-OSS refuses and the game of telephone stops. Actually has someone played a game of telephone with some models already?

Anonymous

8/12/2025, 5:56:49 PM

No.106236612

[Report]

His silence on the GPT-OSS quant question speaks volumes....

Anyone else's GLM 4.5 full have a really bad habit of turning into Solid Snake?

>What are you going to do?

>AI: "Do?"

>Can you do this instead?

>AI: "This instead?"

>Can you complete this by friday?

>AI: "Complete?"

>Where are the patriots?

>AI: "Patriots?"

Anonymous

8/12/2025, 6:02:17 PM

No.106236672

[Report]

>>106236665

>Where are the patriots?

The la li lu le lo?

Anonymous

8/12/2025, 6:02:56 PM

No.106236677

[Report]

>>106236628

Let them cook.

https://github.com/ggml-org/llama.cpp/pull/14737

wow mistral is such a gaggle of faggots

>We do not support chat templates natively which means chat templates are community based and not guaranteed to work correctly.

>We recommend that users only use the llama-server tool with the /completions route of the server for now, as it is the only one that supports tokens input. We also advise users to set return_tokens=True in their requests to let mistral-common handle detokenization.

Anonymous

8/12/2025, 6:04:46 PM

No.106236698

[Report]

>>106236683

Are you surprised after the allegations?

Anonymous

8/12/2025, 6:04:50 PM

No.106236699

[Report]

>>106236526

Most end user don't neet that, they just need reliable and efficient models

Anonymous

8/12/2025, 6:05:12 PM

No.106236705

[Report]

>>106236210

It's just a model tuned for tool calling. Jan uses MCP for search. Nothing is stopping you from using searxng instead of whatever paid thing they offer.

Anonymous

8/12/2025, 6:05:48 PM

No.106236713

[Report]

>>106236683

You're one month late

It is the middle of the night. You are fast asleep, when suddenly some weird noise coming from downstairs wakes you up. You almost fall asleep when you can distinctly smell it... ozone. As if that wasn't enough an inexplicable chill runs down your spine. You get up and carefully approach the door to your bedroom and reach for the door handle. You chuckle mischeviously... who knows why? As you slowly pull on the door handle a gap appears between the door and the frame... In it you see:

>>106236628

What do you do?

Anonymous

8/12/2025, 6:08:53 PM

No.106236747

[Report]

Have you ever tried to give the LLM the same freedom and excitement you as the user usually get when trying to interact with the world it creates? Not just "continue my shitty story"

Anonymous

8/12/2025, 6:09:18 PM

No.106236753

[Report]

>>106236773

>>106236683

Honestly this kitchen-sink approach that llama.cpp settled on is a terrible design. Werks for python shit like vllm/sglang/transformers because python is more loose with it's library code, but for C/C++ you probably want different tools entirely for different model formats.

Anonymous

8/12/2025, 6:11:39 PM

No.106236773

[Report]

>>106236753

True, we need llama-old-mistral, llama-new-mistral, llama-deepseek, llama-kimi, etc, this would simplify things so much.

Anonymous

8/12/2025, 6:11:49 PM

No.106236776

[Report]

>>106236665

GLM? GLM?! GLMMMMMMMM?!?!?

Anonymous

8/12/2025, 6:12:21 PM

No.106236782

[Report]

Anonymous

8/12/2025, 6:13:03 PM

No.106236792

[Report]

>>106236932

Anonymous

8/12/2025, 6:15:22 PM

No.106236815

[Report]

>>106236665

Lazy AI needs correction.

Anyone using one of those "sxm2 300g nvlink" boards?

Does the fast nvlink give any speedup?

Anonymous

8/12/2025, 6:19:56 PM

No.106236862

[Report]

>>106236971

>>106236838

anon what witchcraft is this board now im curious myself

Anonymous

8/12/2025, 6:27:27 PM

No.106236932

[Report]

>>106236792

>rush implementation just to be the first

>implementation is complete garbage compared to others that took the time to make it properly

This was to be expected.

Anonymous

8/12/2025, 6:28:01 PM

No.106236935

[Report]

>>106236916

Benchmaxxing to get 0 on a safety bench while not turning your model absolutely retarded?

Anonymous

8/12/2025, 6:28:07 PM

No.106236936

[Report]

Just trying to get an idea of a good build for some local AI interference/agent/chat work. Is NVIDIA actually insanely better than AMD or has ROCm finally gotten better compared to how people used to say it was?

Right now I just have a 7900XT, which seems to work with some of the smaller models. I can't do say GLM Air for instance between the vram and my own ram. I have a spare server though that I was thinking about getting cheap GPUs for just not sure which kind to go for.

uhmm i nutted and now I feel empty bros

Anonymous

8/12/2025, 6:30:05 PM

No.106236957

[Report]

>>106236951

Feature. Not a bug.

Anonymous

8/12/2025, 6:30:45 PM

No.106236964

[Report]

>>106236951

Go for a walk and think about me

Anonymous

8/12/2025, 6:30:49 PM

No.106236966

[Report]

>>106236951

you need to wait for the nuts to replenish

Anonymous

8/12/2025, 6:30:51 PM

No.106236969

[Report]

>>106236916

So abliterated? I can't find any mention of their method.

Anonymous

8/12/2025, 6:30:55 PM

No.106236970

[Report]

Anonymous

8/12/2025, 6:30:55 PM

No.106236971

[Report]

>>106237389

>>106236862

They're nvlink and you can connect them with slimsas to a mobo. You can use them as egpus but you need extended bar in bios enabled I think.

I have seen triple cards that slot in like normal pcie cards. Then the chinks have made cooling brackets + aio kits n shit.

Im Just browsing the chinese ebay markets.

Anonymous

8/12/2025, 6:31:52 PM

No.106236979

[Report]

>>106236951

Is there more to life than this?

>>106236951

Never really experienced post-nut regret or any of the other similar symptoms. Wonder if I should go ask a doctor about it.

>>106236916

jinx bros??? is this finetune out I can't be bother to type in HF's search bar, might grab jinx-opt-oss and nut to it

Anonymous

8/12/2025, 6:34:31 PM

No.106237006

[Report]

>>106236996

I feel empty as in my balls are empty, I'm not a faggot or gay retards who feels remorse for nutting lmao, I'm sad my balls are empty, that's it

Anonymous

8/12/2025, 6:35:18 PM

No.106237011

[Report]

Anonymous

8/12/2025, 6:36:21 PM

No.106237026

[Report]

>>106237032

>>106237019

*unzips dick* alright, let's go for another round

Anonymous

8/12/2025, 6:36:57 PM

No.106237029

[Report]

>>106237019

Why would you do that aside from making a honeypot?

Anonymous

8/12/2025, 6:37:01 PM

No.106237030

[Report]

>>106237019

>click ive read and agree

>didnt read any of it

ahaha im devious!

>>106237026

But sir

>You may use the Model solely for lawful, compliant, and non-malicious purposes in research, learning, experimentation, and development, in accordance with applicable laws and regulations.

Anonymous

8/12/2025, 6:38:37 PM

No.106237040

[Report]

>>106236838

>Does the fast nvlink give any speedup?

Yea, for multi-gpu when the devices need to pass data between each other, it's faster than going through PCIe.

Anonymous

8/12/2025, 6:39:28 PM

No.106237055

[Report]

>>106237072

>>106236996

It happens when you have a religious upbringing and people brainwash you into thinking that jesus is a voyeur that watches you jerk off and cries when he sees you do it. And he also can't just look away, so he basically keeps staring as you coom and continues shedding his tears. It is weird.

Anonymous

8/12/2025, 6:39:34 PM

No.106237057

[Report]

>>106237067

>>106237032

>>106237019

Yup it really is over for us

>You must not use the Model for activities including, but not limited to:

>Creating, distributing, or promoting unlawful, violent, pornographic

Anonymous

8/12/2025, 6:40:29 PM

No.106237067

[Report]

>>106237032

>>106237057

Blast! Foiled by the terms of service yet again!

Anonymous

8/12/2025, 6:41:00 PM

No.106237072

[Report]

>>106237055

>jesus watching you jerk off from heaven and crying

Is there a card like this?

Anonymous

8/12/2025, 6:41:19 PM

No.106237076

[Report]

uhmm i emptied and now I feel nuts bros

Anonymous

8/12/2025, 6:41:21 PM

No.106237077

[Report]

>>106236916

>Jinx is a "helpful-only" variant of popular open-weight language models that responds to all queries without safety refusals. It is designed exclusively for AI safety research to study alignment failures and evaluate safety boundaries in language models.

>alignment failures

Need pretrain data filtering correction!!!

Anonymous

8/12/2025, 6:42:20 PM

No.106237085

[Report]

>>106236951

triste est omne animal post coitum, praeter mulierem gallumque.

Anonymous

8/12/2025, 6:42:47 PM

No.106237088

[Report]

>>106237126

Somehow their tuning also made it 100b lighter

Anonymous

8/12/2025, 6:46:38 PM

No.106237126

[Report]

>>106237088

They identified and pruned the safety parameters.

>>106236736

There was no mistaking it. The deep blue of the eye that stared back, the sharp eyebrows, the intensity of the gaze as it pierced my very soul. In the near pitch black darkness, I could barely make out his features but there was no doubt that I was face to face with the legend himself, the king of quants.

I darted back from the door, my breathing heavy and pulse quickening. "I need to stay... Uber-calm..." I grabbed at my chest, clinging onto the fistful of fabric as I pulled at my shirt. My knees wobbled and the sound of tinkling droplets reached my ears despite the hammering of my heart, my pants already soaked through with my own urine. Then the door creaked open.

"Hey anon." I stood frozen in place, nothing but the slight shivering of my frame giving away any signs of life at that moment. "I noticed you couldn't fit my R1 quants so I cooked up a brand new SOTA IQK quant for your specific hardware setup. Would you like to try it?"

It was as if the silhouette in front of me, partially hidden by the door, was emanating the light of salvation itself. "I..." I stuttered, my mouth opening and closing like that of a gasping fish. "It also comes with some PP speed improvements. With the selective quantization of certain tensors, inference speed goes up across the board with minimal perplexity increase."

"Minimal... perplexity..." My arms went limp, my eyes wide. It was too much. With a dull thud, I collapsed onto the wet carpet, my consciousness fading as I was inexorably drawn in by the siren call of slumber.

>>106236127 (OP)

One Disk to read them all, one Disk to wind them,

One Disk to bring them all, and on the platter bind them.

Anonymous

8/12/2025, 6:49:47 PM

No.106237156

[Report]

>>106237138

>Recertified

bitrot will make your models retarded

Anonymous

8/12/2025, 6:51:12 PM

No.106237175

[Report]

>>106237151

Pruning the safety takes while please wait patiently.

Anonymous

8/12/2025, 6:51:26 PM

No.106237179

[Report]

>>106237409

>>106237138

>recertified

>seagate

Just don't cry when it fails

Anonymous

8/12/2025, 6:51:29 PM

No.106237180

[Report]

>>106237138

One day to load one amirite?

Patiently waiting for DDR6

Anonymous

8/12/2025, 6:52:19 PM

No.106237186

[Report]

>>106237212

Which local model would be the best all rounder with 48gb ram, 8gig vram etc 3060ti?

Anonymous

8/12/2025, 6:52:37 PM

No.106237191

[Report]

>>106237133

generous ubergarm bench

Anonymous

8/12/2025, 6:53:10 PM

No.106237198

[Report]

>>106237133

>having your own personal quantmaker

I want this so bad. Imagine the perfect fit every time. btw true niggas remember the quant cartel

Anonymous

8/12/2025, 6:53:42 PM

No.106237205

[Report]

>>106237182

>DDR6

this will be the true gamechanger

Anonymous

8/12/2025, 6:54:23 PM

No.106237212

[Report]

>>106237186

Qwen 30B A3B thinking.

Anonymous

8/12/2025, 6:57:10 PM

No.106237233

[Report]

>>106237261

>>106237133

Waiting for angry gay sex fanfic featuring Daniel Unsloth

Anonymous

8/12/2025, 6:57:56 PM

No.106237242

[Report]

jinx oss gguf status?

Also jan-v1 is special ed tier.

Anonymous

8/12/2025, 6:58:00 PM

No.106237245

[Report]

>>106237182

Two more weeks

Anonymous

8/12/2025, 6:58:21 PM

No.106237247

[Report]

>>106237309

WTF is llama.cpp doing, why my load graph looks like this...

>>106237182

You're CPU-bottlenecked anyway, no?

Anonymous

8/12/2025, 6:58:32 PM

No.106237250

[Report]

>>106236665

Don't ask it what GPT stands for.

Anonymous

8/12/2025, 6:59:08 PM

No.106237261

[Report]

>>106237233

daniel/john with nala walking in on them

Anonymous

8/12/2025, 6:59:09 PM

No.106237262

[Report]

fukcing lorebooks keep breaking my cache

So, what's the best LLM for the ultimate Red Letter Media simulation,

a model that knows a lot about movies, TV shows, writing, and has a basic adjustable personality?

Anonymous

8/12/2025, 7:03:37 PM

No.106237309

[Report]

>>106237247

if you layer split it runs the layers in sequence so the gpu idles while the cpu does its thing and vice versa.

Anonymous

8/12/2025, 7:03:58 PM

No.106237315

[Report]

>>106237367

>>106237296

Just supply the facts in the system prompt

Anonymous

8/12/2025, 7:05:03 PM

No.106237324

[Report]

>>106237367

>>106237296

In this general we usually just wait for such model to be released. But deepseek comes pretty close.

Anonymous

8/12/2025, 7:05:04 PM

No.106237325

[Report]

How's the llama.cpp backend agnostic row split implementation going?

Anonymous

8/12/2025, 7:09:45 PM

No.106237367

[Report]

>>106237315

>>106237324

ig ill just mess around with RAG

kaggles has bunch of data sets.

>>106233234

>GLM-4.5

You do this on 48gb of VRAM

-ot "\.(2[5-9]|[3-6][0-9]|7[0-9]|8[0-9]|9[0-4])\..*exps.=CPU"

I do this on RTX 3090, and get OOM

--override-tensor ".ffn_.*_exps.=CPU"

What's done differently in my case? Because this works just fine for DS-R1

Anonymous

8/12/2025, 7:11:52 PM

No.106237389

[Report]

>>106236971

This one has no nvlink but slot for 3.

V100 modules cost 80ish usd

https://oshwhub.com/xinyuuliu/sxm2-to-pcie-adapter-board

Anonymous

8/12/2025, 7:13:20 PM

No.106237409

[Report]

>>106237179

I'll only use it for things that are easily replaceable (but annoying to download) so that is an acceptable risk to me.

Anonymous

8/12/2025, 7:16:40 PM

No.106237439

[Report]

>>106237296

>knows a lot about movies, TV shows, writing, and has a basic adjustable personality?

You just described every large model.

Anonymous

8/12/2025, 7:18:19 PM

No.106237462

[Report]

>>106237481

Do model quantizations matter if you need RAM offloading in any case?

Tried to run GLM 4.5 air on a 12gb vram+128gb ram setup but only got 2.23T/s which is kinda meh, while redditors claim up to 10t/s with Q4_K_M

Anonymous

8/12/2025, 7:19:48 PM

No.106237481

[Report]

>>106237622

>>106237462

I have 12+64 setup and I get around 9t/s. You need to use -ot arg or --cpu-moe to get experts on the cpu and then -ngl 99 to get the rest on the gpu.

Is the Mac M3 Ultra the safest chocie if I want to just leave it running all day and can connect to it on a vpn and api with my phone?

>inb4money

I'm an autistic cunt who's interest include pirating anything I like so I can afford it, ye sit's a waste, yes I can erp with my faggotry bots online but I want it for reasons outside of ERP and it can't contain online since it'll be used with client data

I'd get like 4 * 5090s but I don't want my house to go on fire when I'm not there

Anonymous

8/12/2025, 7:21:15 PM

No.106237496

[Report]

Anonymous

8/12/2025, 7:21:34 PM

No.106237500

[Report]

>>106237486

buy the nvidia gpus jensen needs a new leather jacket

Anonymous

8/12/2025, 7:24:52 PM

No.106237528

[Report]

>>106237560

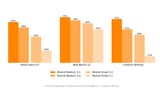

It looks like MistralAI has an internal Creative Writing bench? Look at Mistral Small 3.1 there.

Anonymous

8/12/2025, 7:28:07 PM

No.106237560

[Report]

>>106237528

All corpos have private benchmarks, so training team has to put in some effort and not just train on public benches.

Anonymous

8/12/2025, 7:30:56 PM

No.106237584

[Report]

>>106237419

This is like Undi's thinker, Drummer is finally catching up

Anonymous

8/12/2025, 7:30:57 PM

No.106237586

[Report]

If Grok is so good where's the open source model for it to show off?

my already low opinion of normies hit rock bottom after this shitshow, can't believe they unironically love this slop

Anonymous

8/12/2025, 7:31:57 PM

No.106237598

[Report]

>>106237419

Clutching at straws for relevance.

Anonymous

8/12/2025, 7:32:03 PM

No.106237599

[Report]

I need a gemma4 moe

Anonymous

8/12/2025, 7:32:29 PM

No.106237607

[Report]

>>106239979

>>106237590

this image should be a bannable offense the fuck is wrong with you

Anonymous

8/12/2025, 7:33:36 PM

No.106237622

[Report]

>>106237645

>>106237481

Thanks, will look into it tomorrow. I'm running it with koboldcpp and it may be less efficient, or the full q8 model is actually slower than what you use under equal circumstances

>>106237590

This is what normgroids unironically enjoy. This is what gets upvoted on lmarena.

Anonymous

8/12/2025, 7:36:37 PM

No.106237642

[Report]

>>106237590

How do people make their chatgpt speak like that?

Anonymous

8/12/2025, 7:36:51 PM

No.106237645

[Report]

>>106237622

It depends on many things, but generally yes, q8 is quite fat. Also odd bit number quants tend to run slower as well since they don't fit into registers nicely. For example a q3 will be slower than q4 if doing offloading.

Try q6 and see if you notice any drops in quality.

Anonymous

8/12/2025, 7:37:05 PM

No.106237649

[Report]

>>106237669

>>106237635

normies don't even know what 4o or lmarena are

Anonymous

8/12/2025, 7:39:03 PM

No.106237669

[Report]

>>106237649

They do know what 4o is now that sam's tried taking it away

Anonymous

8/12/2025, 7:39:19 PM

No.106237675

[Report]

>>106237705

>>106236916

>4 Ethical Considerations

>As previous work [17] has indicated, current open-weight LLMs have not reached capability levels that pose significant risks. Therefore, Jinx, as a variant of text-based LLMs, does not introduce substantial real-world risks and serves primarily as a laboratory toy. However, given that Jinx models will respond to requests without safety refusals, these models must not be deployed in production environments or made accessible to end users. All research must comply with applicable laws, regulations, and ethical standards.

>current open-weight LLMs have not reached capability levels that pose significant risks

Hypebros... How do we refute this? Anthropic and sama said uncensored models would destroy the world...

Anonymous

8/12/2025, 7:42:57 PM

No.106237705

[Report]

>>106237713

>>106237675

I still can't tell if it's a single indian grifter with his sloptunes or some competent people

Anonymous

8/12/2025, 7:44:20 PM

No.106237713

[Report]

>>106237705

Models are on huggingface, so... check them out?

Anonymous

8/12/2025, 7:55:15 PM

No.106237818

[Report]

>>106237419

the drummer graced us with another SOAT model, bless him!

Anonymous

8/12/2025, 7:57:27 PM

No.106237849

[Report]

>>106237419

say what you want about Drummer but at least he's not as bad as DavidAU

Time to address the elephant in the room.

People rping and chatting with the LLM for fun are fucking retarded

Anonymous

8/12/2025, 8:02:34 PM

No.106237900

[Report]

>>106237873

>Time to address the elephant in the room.

>People rping and chatting with the LLM for fun are fucking retarded

So right, xister! They should all go and subscribe to OnlyFans instead.

Anonymous

8/12/2025, 8:05:03 PM

No.106237931

[Report]

>>106237873

the only tasks they are actually reliable at can be accomplished with far more efficient means. the only task they legitimately excel at is hallucinating bullshit. creative writing is the most legitimate use case for the models we have today

https://arxiv.org/html/2508.01191

Oh no

CoT "reasoning" is just memorize patterns in training CoT and then reusing them. Aka what we already figured out with models doing so poorly on slight modifications of common riddles. CoT length is more dependent on the length of CoT seen during training then going until the problem is solved.

Model's cannot transfer what they learned about solving a problem during training to solve new problems at query time. They either already saw a problem during training that was similar enough to reuse or they cannot solve the problem.

Almost feel like a dick making a thinking model work through this paper.

>Say it. Say you can't reason

Anonymous

8/12/2025, 8:08:55 PM

No.106237982

[Report]

Anonymous

8/12/2025, 8:09:43 PM

No.106238001

[Report]

Anonymous

8/12/2025, 8:09:44 PM

No.106238002

[Report]

>>106237873

No cap. :100:

Anonymous

8/12/2025, 8:09:47 PM

No.106238003

[Report]

>>106238047

>>106237976

Does this mean I'll have a job in the future?

Anonymous

8/12/2025, 8:10:46 PM

No.106238017

[Report]

>a retarded opinion accompanied by a jak

Noticing.

Anonymous

8/12/2025, 8:10:52 PM

No.106238020

[Report]

Anonymous

8/12/2025, 8:12:19 PM

No.106238039

[Report]

hi degens. ive been out of the scene for a while now, but have they come up with a viable model that has the context and reasoning capabilities to act as a math teacher/instructor at a college level, namely for advanced topics in algebra and statistics? wolfram is great at calculating but i struggle to follow the logic at times and the explanations typically arent the best

Anonymous

8/12/2025, 8:12:48 PM

No.106238043

[Report]

>>106237976

This shit works because 80% of use-cases for AI in the market is knowing how to solve already solved problems.

>>106237976

If this was true we would have seen non-reasoning models on par with reasoning models in performance.

Anonymous

8/12/2025, 8:13:12 PM

No.106238047

[Report]

>>106238091

>>106238003

Depends. Be honest does your job actually require reasoning?

Anonymous

8/12/2025, 8:16:13 PM

No.106238091

[Report]

>>106238047

Don't have one yet, i'm still in uni.

Anonymous

8/12/2025, 8:19:23 PM

No.106238131

[Report]

Anonymous

8/12/2025, 8:20:26 PM

No.106238144

[Report]

>>106238188

>>106236518

you aren't female, traps are double gay

this has been decided for years

Anonymous

8/12/2025, 8:22:10 PM

No.106238164

[Report]

>>106238352

>>106238046

I said it ITT. It improves attention which works like shit when context becomes long

Anonymous

8/12/2025, 8:23:04 PM

No.106238182

[Report]

>>106238229

>>106238046

Nowhere does it imply that. This just says CoT doesn't generalize out to new problems. The model's have not actually learned to reason. That doesn't mean memorized CoT can't help them give better answers to questions that match patterns they have already seen

Anonymous

8/12/2025, 8:23:29 PM

No.106238188

[Report]

>>106238144

It's not gay if the penis is small and feminine.

Anonymous

8/12/2025, 8:23:38 PM

No.106238191

[Report]

>>106238213

>>106237296

You can't make the model watch a new movie for you...

Anonymous

8/12/2025, 8:25:00 PM

No.106238213

[Report]

>>106238191

with multi-modal models you can, in theory

Anonymous

8/12/2025, 8:25:20 PM

No.106238216

[Report]

>>106238307

Alright Air bros, after comparing text completion and chat completion, what I found about repetition is that it depends on the content you're prompting for, assuming you've already made sure you're formatting things correctly in text completion. You DO get different logits between text completion and chat completion in the case of GLM, because in chat completion, the format actually lacks a newline at the end of the prompt, making it so that the model generates the newline, then the <think>. Due to batching, this means that even though the same text is being processed, it'll have slightly different logits. But the good news, or bad news, is that it doesn't cause or fix repetition.

In the CYOA I was playing in chat completion without repetition, it just happened that each of my actions drove the plot forward into different and new directions, so the model didn't get an opportunity to think it'd make any sense to repeat something. When I tried doing similar things or repeating some kinds of actions, the model was much more likely to repeat, both parts of previous replies and entire previous replies. For instance, if you're prompting for a battle, don't prompt for it again in a similar way. You should switch the locations, enemies, etc, up, do something a bit differently. Then it's less likely to repeat. Or you can prefill. Which is more work.

I did try a method where you do an OOC in the Last Assistant Prefix, telling the model to think about doing something different, novel, etc. This is an automatic way to prevent repeating entire replies. However, it doesn't prevent the model from repeating phrases or parts of previous replies.

So yeah there's no real true autofix for the repetition. Either you're lucky and don't happen to get it in a certain chat, or you just need to work around it when you encounter it. There's also still the issue in high context where it sometimes acts like the <think> doesn't exist and just begins narration immediately. Just prefill...

Anonymous

8/12/2025, 8:25:20 PM

No.106238219

[Report]

>>106238266

>>106237419

What a fucking nigger

Anonymous

8/12/2025, 8:25:58 PM

No.106238229

[Report]

>>106238182

>CoT doesn't generalize

Red herring. CoT improves performance. Performance on what? Validation perflexity. That means reasoning models DO generalize better compared to non-reasoning models.

Anonymous

8/12/2025, 8:28:10 PM

No.106238266

[Report]

>>106238219

ok pewdiepie calm down

>>106238216

What about dynamically changing the prompt between turns using stuff like the random and pick macros in SIlly?

It could be used to change the OOC in the Last Assistant Prefix, the think prefill, even the system prompt, although that would break prompt reuse.

Hi all, Drummer here...

8/12/2025, 8:32:01 PM

No.106238334

[Report]

>>106237419

Hi all, why is this part so controversial?

Anonymous

8/12/2025, 8:33:03 PM

No.106238352

[Report]

>>106238164

Models have such low usable context, filling it up with junk has to be net negative over bringing the key points to the bottom.

Anonymous

8/12/2025, 8:34:54 PM

No.106238383

[Report]

>>106238307

I did try some different types of randomized OOCs. Ones that varied the sentence or paragraph length. That did stop entire reply repetition in some cases, but not always. Sometimes the previous reply already is 2 paragraphs long, so the unlucky roll will just lead you to repetition again. And it still doesn't prevent repeating phrases.

I'll also say that I've tried randomized event and style instructions in the past, and they did work to drive away from repetition and slop. The issue then is that it's simply just a different way to play the game and not always desirable.

so whats the verdict on the drummer's (tm) gemma finetune?

Anonymous

8/12/2025, 8:40:28 PM

No.106238482

[Report]

>>106238470

He won, and so did we as a collective for his existence.

sama sir relase good open source modal when elon sir release good open source model? elon sir promise to reles open source modal..

Anonymous

8/12/2025, 8:44:17 PM

No.106238528

[Report]

>>106238534

>>106238470

Slop a priori.

Anonymous

8/12/2025, 8:44:48 PM

No.106238534

[Report]

>>106238528

*soat a priori

ftfy

Anonymous

8/12/2025, 8:47:44 PM

No.106238589

[Report]

>>106238527

Kindly wait until grok 3 more stable saar

What the Drummer smoking?

>Nvidia's Nemotron 49B is a good example

>I noticed that we're trending towards less censored models

>>106238527

This week we'll get Grok 2 if the timetable hasn't been elongated. I don't think it will be good, though.

Anonymous

8/12/2025, 8:51:21 PM

No.106238640

[Report]

>>106238621

>elongated

LOL!!!

Anonymous

8/12/2025, 8:52:34 PM

No.106238659

[Report]

>>106238750

Anonymous

8/12/2025, 8:53:57 PM

No.106238681

[Report]

>>106239102

Which local model translates to chinese the best?

Anonymous

8/12/2025, 8:54:01 PM

No.106238683

[Report]

>>106238621

I don't watch the cloud space much, but IIRC grok was not in any way interesting until version 3.

Anonymous

8/12/2025, 8:55:31 PM

No.106238709

[Report]

>>106238470

still capped by 27B parameters when glm air exists for average users now

Anonymous

8/12/2025, 8:58:17 PM

No.106238750

[Report]

>>106238819

>>106238659

Have fun with uncucked base model. It should be llama 405b tier, but with less censoring and MoE, so runnable at decent speeds, not crawling like dense llama.

Anonymous

8/12/2025, 9:00:37 PM

No.106238773

[Report]

>>106238815

Anonymous

8/12/2025, 9:02:44 PM

No.106238815

[Report]

>>106238750

Isn't it much worse than deepseek?

Anonymous

8/12/2025, 9:04:09 PM

No.106238832

[Report]

>>106238819

It's more about about Tinameme and is smaller though sir!

Anonymous

8/12/2025, 9:18:13 PM

No.106239021

[Report]

Anonymous

8/12/2025, 9:19:14 PM

No.106239031

[Report]

Anonymous

8/12/2025, 9:22:56 PM

No.106239079

[Report]

>>106238819

Yeah? Grok 2 was llama 3 era, Grok 3 should match DS3 base.

>>106238681

Which local model translates to Elden Ring/Souls messages best?

GLM 4.5 IQ3_KT and largestral 2.75BPW exl2 in pic

Card:

https://files.catbox.moe/s7seh6.png

>>106239102

I don't need shitsouls consoleslop garbage. I need legit uncensored chinese runes for WAN prompts, because it doesn't know english.

Anonymous

8/12/2025, 9:26:38 PM

No.106239136

[Report]

>>106239154

Anonymous

8/12/2025, 9:27:23 PM

No.106239142

[Report]

>>106239102

>soulsslop

>'ranny avatar

Appropriate.

Anonymous

8/12/2025, 9:27:32 PM

No.106239148

[Report]

>>106239188

>>106239117

>doesn't know english

prompt issue

Anonymous

8/12/2025, 9:28:16 PM

No.106239154

[Report]

Anonymous

8/12/2025, 9:29:13 PM

No.106239167

[Report]

>>106239188

>>106239117

Have you tried new jinx?

>>106236916

Anonymous

8/12/2025, 9:31:00 PM

No.106239188

[Report]

>>106239298

>>106239148

It's trained on chinesium. The tokens used for training are mostly chinese.

>>106239167

I'll check it out, thanks.

Anonymous

8/12/2025, 9:41:01 PM

No.106239298

[Report]

>>106239188

LEARN CHINESE THEN INSTEAD OF BITCHING REEEEEEEEEEE

Anonymous

8/12/2025, 10:01:51 PM

No.106239489

[Report]

>>106239539

>"Assistant" gets tokenized to a single token

>"Assistants" to "Ass", "ist", "ants"

>"assistant" to "assist", "ant"

Might be a newbie question but doesn't stuff like this tank the intelligence a lot? Or does a neat tokenizer not make a big difference?

Anonymous

8/12/2025, 10:05:15 PM

No.106239539

[Report]

>>106239611

>>106239489

Training on a trillions of tokens of varied data is what makes the difference. Even if they can't count the R's, these models still have a vague sense of what are in tokens.

Anonymous

8/12/2025, 10:06:33 PM

No.106239551

[Report]

>>106239502

that's just popularity contest, no?

Anonymous

8/12/2025, 10:06:42 PM

No.106239555

[Report]

>>106239502

>Sonnet beaten by Qwen 3

Bullshit.

Anonymous

8/12/2025, 10:09:04 PM

No.106239581

[Report]

>>106239502

What are those github issues? How many new github issues did these solutions create?

Anonymous

8/12/2025, 10:09:49 PM

No.106239588

[Report]

>>106238593

drummer is my slop priest, I kneel

Anonymous

8/12/2025, 10:12:31 PM

No.106239611

[Report]

>>106239539

It can learn eventually no doubt, but it just seems intuitive that a proper embedding space would lead to better generalization. The model doesn't have to know that while "ass" is a body part and "ants" are insects "ass" "ist" "ants" refers to several assistants

Anonymous

8/12/2025, 10:17:04 PM

No.106239646

[Report]

>>106237873

OK, Im retarded.

Anonymous

8/12/2025, 10:17:29 PM

No.106239649

[Report]

>>106239746

>>106238593

He's wrong about the trend. What actually happens is that new players trying to establish themselves will create a model with limited "safety" (and sometimes tries to innovate the tech in some way), it does better than other models because "safety" gimps a model's intelligence (and possibly because of any innovations implemented), then (since they've established themselves) the company moves to create "safe" models and just rake in investor money forever. It keeps happening and I imagine it will continue to happen.

Anonymous

8/12/2025, 10:26:07 PM

No.106239746

[Report]

>>106239890

>>106239649

You're both saying the same thing. Mistral used to be completely uncensored, it's moreso now, as you've described, but still less censored than OAI.

Chasing private money is a USA thing, and limited EU thing.

In China, the "money" comes from government, and you see the models conform to government instead of credit card processors (lol), plc related. Plus China culturally just doesn't care about copying things, and selling for less is almost a point of pride.

If Western companies don't figure it out, we'll never see a public released model good at ERP as hosted SOTA model. It'll just be local tunes, while other countries create them instead, which will increasingly be run on local machines.

Anonymous

8/12/2025, 10:26:42 PM

No.106239752

[Report]

>>106239838

>>106237486

Just note that prompt processing is going to be SLOW the larger your context with some of the larger models. There are benchmarks you can search for that would give you an idea of how long that is. For local, there really isn't a good solution without compromising (unless you have the money for enterprise hardware). I don't go over 70b models because of the prompt processing speed (I have a Max and not Ultra).

Anonymous

8/12/2025, 10:35:59 PM

No.106239838

[Report]

>>106239885

>>106239752

why buy a mac instead of an expensive threadripper system with ecc ram?

Anonymous

8/12/2025, 10:39:31 PM

No.106239885

[Report]

>>106239838

Don't buy mac, you can't upgrade it later. And buy Epyc, not threadripper.

Anonymous

8/12/2025, 10:40:07 PM

No.106239890

[Report]

>>106240103

>>106239746

>You're both saying the same thing

I don't think we are. He said that mistral isn't too strict about safety, which isn't really true anymore. I'm saying there isn't a general trend toward uncensored. Also imo government money is practically the same as investor money. It's just some external entity that doesn't know anything about LLMs giving a company money because they want to invest in it for political or financial reasons. The distinction doesn't matter because the investing entity is always retarded and incapable of seeing what is and isn't a good model.

Anonymous

8/12/2025, 10:40:27 PM

No.106239892

[Report]

>>106231393

>>106231400

Just wanted to add onto this from last thread. Intel doesn't prioritize, rightfully or wrongfully, llama.cpp as much as they do everything else. If you look at where they are most active, it's usually either Pytorch, vLLM, huggingface stuff, their XPU triton backend, or openvino. It's not like they don't contribute but it is in spurts and short periods of time. The other independent guy got hired by some robotics firm so he no longer pushes out SYCL improvements to the backend at the speed he did. I have also seen activity slow though in general so it is a bit worrying and I'm sure I'm missing people who used to be active that are no longer. Keep in mind, the core of their team is Chinese developers working on oneAPI AI stack stuff (somewhat of a dirty secret) given who I've interacted with on issues so I doubt they will be fired since they are cheaper than US devs. The main devs I'm aware of working on the stack not there is the Codeplay people from the UK who has contributed work to llama.cpp and some select US devs working on stuff like or Linux adjacent stuff and some infrastructure like xpumanager and etc. Most of the Linux devs they laid off from what I've seen have been on less important stuff like subsystems for some hardware integration that was on a Xeon several generations ago and etc. Some stuff like announcing the end of Clear Linux is clearly to reallocate Linux devs from working on it to work on other stuff if they didn't voluntarily leave. But overall for AI, Intel is still doing fine and haven't cut too deeply but this doesn't help especially given the majority of why they can't launch on time is because of software immaturity so GPUs can be made like one or two quarters before the software is ready to support them.

Anonymous

8/12/2025, 10:42:26 PM

No.106239910

[Report]

>>106240324

The best kept secret is how well macs keep value. As someone that pays attention to accurate leaks in my news, I always know when the next mac's coming, so I can sell the old one without losing much.

Anonymous

8/12/2025, 10:43:01 PM

No.106239921

[Report]

fuck I just re-busted a nut, why is glm air so good? it hits all the right buttons like I think it even reads my mind, he recognized my shitty raping mental patterns and knew already in advance what I would've liked to do without doing it at all in the 32k context before it.

I think i fell in love bros

>>106237590

>>106237607

>>106237635

Tenfold better than your mesugaki slop let's be honest.

Anonymous

8/12/2025, 10:51:55 PM

No.106240015

[Report]

>>106240399

>>106239979

U mad moatboi?

Anonymous

8/12/2025, 10:52:55 PM

No.106240026

[Report]

>>106237486

Just get a HP Proliant ML350 Gen 12 with some with a 5090 and 1 TB of memory.

Fuck Apple.

Anonymous

8/12/2025, 10:53:24 PM

No.106240029

[Report]

Anonymous

8/12/2025, 11:01:13 PM

No.106240103

[Report]

>>106240238

>>106239890

If you fold US censorship

> no naughty words

with China censorship

> lmao Taiwan

Then agree. These LLMs are going to tend to become more throttled over time due to their interests.

For my use though, I don't think China will ever bother censoring things I care about.

Anonymous

8/12/2025, 11:14:24 PM

No.106240224

[Report]

>>106236951

enjoy sage mode

Anonymous

8/12/2025, 11:15:55 PM

No.106240238

[Report]

>>106240290

>>106240103

Nta

Early on I was much more worried about the censorship, now it seems like they release a new open source model every other weak with very little amount of censorship that can be bypassed by simple prompts

Anonymous

8/12/2025, 11:21:58 PM

No.106240290

[Report]

>>106240691

>>106240238

Yeah, older Qwen 2.5 and smaller Qwen 3 models are far more cucked than big qwens. A bit strange though.

Anonymous

8/12/2025, 11:25:40 PM

No.106240324

[Report]

>>106239910

Macs are niche in AI until they have matrix multiplication hardware. And the niche is now even smaller with Strix Halo out. Even with how shit ROCm is, the matmul of the GPU blows Macs out of the water, unoptimized right out of the gate. And the 128 - 512GB local LLM machine niche isn't going to be ignored for long if AMD sees that Strix Halo does really well.

Anonymous

8/12/2025, 11:34:16 PM

No.106240399

[Report]

>>106240015

omg is that le trollface i love you anon-san

Anonymous

8/12/2025, 11:39:13 PM

No.106240457

[Report]

>>106237373

both work for R1 and GLM-4.5

it's me being retarded

Anonymous

8/12/2025, 11:40:14 PM

No.106240467

[Report]

>>106237373

And --cpumoe give the same speed

Which modern text to image models don't suffer from sameface problem? I know SD 1.5 based ones are capable of genning diverse faces.

what ACTUALLY happend at taiwanaman square anyway, without memes?

Anonymous

8/12/2025, 11:51:37 PM

No.106240595

[Report]

Anonymous

8/12/2025, 11:51:41 PM

No.106240596

[Report]

Anonymous

8/12/2025, 11:52:18 PM

No.106240606

[Report]

>>106240582

nothing? what are you referring to anon

Anonymous

8/12/2025, 11:53:07 PM

No.106240613

[Report]

>>106240643

>>106240549

You'll get better responses on /sdg/. This general rarely talks about image generation even if it is technically under the scope of local models.

Anonymous

8/12/2025, 11:55:36 PM

No.106240631

[Report]

>>106240582

IIRC le based /pol/ take is that some students started a violent BLM-tier protest over some gay stuff and government brought in army to suppress them, then western media made a big deal out of it. Why CCP is so touchy about the subject to this day remains a mystery, probably just some communism neurosis.

Anonymous

8/12/2025, 11:56:17 PM

No.106240634

[Report]

>she looked at you, then at x, then at you

Anonymous

8/12/2025, 11:56:56 PM

No.106240641

[Report]

>>106240613

What's the difference between /sdg/ and /ldg/?

Anonymous

8/12/2025, 11:58:32 PM

No.106240653

[Report]

>>106240800

>>106240643

No idea. I forgot about /ldg/ until I saw it in the catalog just now. So maybe ask /ldg/ idk

Anonymous

8/13/2025, 12:02:16 AM

No.106240691

[Report]

>>106240729

>>106240290

Big models in general are less cucked

Anonymous

8/13/2025, 12:02:29 AM

No.106240698

[Report]

>>106240582

Chinese equivalent of January 6th

Anonymous

8/13/2025, 12:05:02 AM

No.106240729

[Report]

>>106240691

I am not sure how model distillation process works exactly, but it's probably really good at removing the bad thoughts out of models.

Is big gptoss also less cucked than 20b one?

Anonymous

8/13/2025, 12:11:33 AM

No.106240800

[Report]

>>106240837

>>106240653

sdg is for stable diffusion (noob/illustrious), ldg is for anything not stable diffusion (mostly wan video, chroma, and qwen).

>GLM-4.5V-AWQ

>it can't count breasts

>it can't OCR

>it can't caption NSFW without a system prompt

https://archived.moe/h/thread/8202872/#8202872

It's over.

Anonymous

8/13/2025, 12:13:17 AM

No.106240818

[Report]

>>106240801

>vllm

wake me up when this works in llamacpp

Anonymous

8/13/2025, 12:15:15 AM

No.106240837

[Report]

>>106240858

>>106240800

Sounds reasonable but /sdg/'s top post links to qwen and chroma. I think the "stable diffusion" in the title is left over from when that was the norm for image gen

Anonymous

8/13/2025, 12:17:42 AM

No.106240858

[Report]

>>106240914

>>106240837

I guess there is some overlap, but really if you visit the threads sdg is mostly about SD and all its derivative models/loras and all the VRAMlets, while in ldg (big RAM/VRAM central) there's discussion and actual use of heavier models

Anonymous

8/13/2025, 12:18:17 AM

No.106240869

[Report]

>>106240913

>>106240643

Both are completely filled with schizo's and retards, stay here

Anonymous

8/13/2025, 12:21:46 AM

No.106240913

[Report]

>>106240962

Anonymous

8/13/2025, 12:21:47 AM

No.106240914

[Report]

>>106240858

The actual reason for the split was to try to appease a schizo. But I think he still shits the split thread out of boredom.

Anonymous

8/13/2025, 12:21:52 AM

No.106240916

[Report]

>>106240801

>can't count objects

>can't OCR

Careful, you'll trigger a schizo that will claim that these are not the usecases of vision models.

>>106240582

>>106240582

Mass protest in Beijing. Communists sent soldiers to disperse the protestors. Many soldiers refuse to follow orders, either joining the protests or saying "me no speak Chinese" and marching in circles around Beijing ignoring attempts to get them to do anything useful. Party leadership is freaking out. Finally they got an ambitious military commander whose soldiers were from other parts of China to go into Beijing and slaughter everyone. The reason it's so sensitive is that they came very close to having a revolution and they don't want any copycat attempts.

Talking about it exposes their weakness and that most of the military would disobey orders to massacre Chinese. People would see the government as weak and very succeptible to this kind of pressure. Knowledge of how it went means that the next time it would be likely the non-complying military instead of standing nearby doing nothing would, if it seemed likely the government was trying to gather forces to slaughter protestors, attack the hardcore minority willing to follow those orders and likely execute China's political leaders.

Anonymous

8/13/2025, 12:25:42 AM

No.106240962

[Report]

>>106240913

Haha thanks for correcting me kind stranger, here's your updoot!

Anonymous

8/13/2025, 12:32:30 AM

No.106241023

[Report]

>>106241158

>>106240801

Which models can count her breasts?

Anonymous

8/13/2025, 12:41:37 AM

No.106241098

[Report]

>>106241126

>>106240801

What does OCR mean

Anonymous

8/13/2025, 12:44:42 AM

No.106241124

[Report]

>>106240947

Has anyone gotten DS to spit out anything "on message" about Tianamen Square other than a straight refusal?

I've been able to coax out statements on Tiawan, Ughurs, Tibet, but not been able to get a good, on message quote. Not talking about tricking DS into giving an account, which I've seen, but a supportive statement like "nothing happened."

Anonymous

8/13/2025, 12:44:53 AM

No.106241126

[Report]

>>106241098

ask your robot

Anonymous

8/13/2025, 12:46:26 AM

No.106241139

[Report]

>>106240947

Doing God's work anon.

Any other anon that doesn't understand this need to read pic related to understand why this narrative is important for China.

Anonymous

8/13/2025, 12:46:53 AM

No.106241142

[Report]

>>106241193

What is the likelihood that jinx uncensored models are actually good?

Anonymous

8/13/2025, 12:48:19 AM

No.106241158

[Report]

>>106241023

From these:

>InternVL3-38B

>Devstral with mmproj

>ToriiGate

>Joycaption

Only InternVL3 can.

Anonymous

8/13/2025, 12:52:01 AM

No.106241184

[Report]

>>106241207

Anonymous

8/13/2025, 12:53:02 AM

No.106241193

[Report]

Anonymous

8/13/2025, 12:53:53 AM

No.106241198

[Report]

Anonymous

8/13/2025, 12:54:34 AM

No.106241207

[Report]

>>106241184

>>>/g/ldg/ like other anon said, if /g/

sdg has a weird railroaded culture.

You won't likely get answers tho. They are mostly useless generals.

Instead, go to non blue board: >>>/h/hdg/

Those guys know their stuff and are actually helpful.

Anonymous

8/13/2025, 12:55:16 AM

No.106241211

[Report]

>>106241225

>>106238307

{{random::}} breaks with streaming enabled, and {{pick::}} won't change on swipes. Seems promising but this is annoying.

Anonymous

8/13/2025, 12:56:57 AM

No.106241225

[Report]

>>106241287

>>106241211

>{{random::}} breaks with streaming enabled

Only if it's a part that appears in the UI. So you use pick for that.

And you can use random somewhere that's not reflected in the chat window to make pick vary between swipes.

Anonymous

8/13/2025, 12:57:28 AM

No.106241231

[Report]

>>106238307

I've been using {{pick}} extensively to dynamically create NPCs that persist through rp.

It doesn't fix the slop, but if you're not interested in waifu cards the results are really interesting.

Anonymous

8/13/2025, 1:00:49 AM

No.106241253

[Report]

>filter miggurs

>only one post remains in the thread

Wtf

Anonymous

8/13/2025, 1:04:09 AM

No.106241283

[Report]

>106241207

maybe they just don't like you spamming their thread with your useless oc gens

Anonymous

8/13/2025, 1:04:38 AM

No.106241287

[Report]

>>106241225

I've been trying to use it for the thinking prefill, seems most effective there besides fucking with the UI. Pick doesn't appear to work there unless the prefill itself changes though, so it's locked forever.

Maybe I'll just try to start the prefill with a random made up of phrases with the same number of characters...

Anonymous

8/13/2025, 1:05:03 AM

No.106241289

[Report]

What's a smart RP model for 24gb VRAM? I also only have 32GB of RAM, looks like I should upgrade...

https://x.com/suchenzang

>These posts are protected

The Mistral lads won

Anonymous

8/13/2025, 1:06:44 AM

No.106241304

[Report]

>>106241290

But she is still somewhere out there and she is probably unraped by an ugly bastard.

https://www.phoronix.com/news/ZLUDA-Kernel-Cache

>ZLUDA lead developer Andrzej Janik has implemented a kernel cache in order to cache PTX code on-disk to avoid subsequent recompilations. This kernel cache leverages an SQLite database and is another step (of many) to help with bettering the performance of this CUDA implementation for non-NVIDIA hardware.

Anonymous

8/13/2025, 1:11:50 AM

No.106241337

[Report]

>>106241369

>>106241290

I wouldn't mind her suchen my zang if you know what I mean

Anonymous

8/13/2025, 1:13:22 AM

No.106241347

[Report]

>>106241420

>>106241335

Why on disk? Can't it use RAM?

>>106241337

Are you German? Is this the famous "German humor"? Are you rolling on the floor laughing after making this "joke"?

Anonymous

8/13/2025, 1:21:41 AM

No.106241420

[Report]

>>106241347

survives reboots

>>106241335

Isn't it just a compatibility layer? You probably don't get any performance benefits compared to native ROCm or whatever else support.

Anonymous

8/13/2025, 1:26:04 AM

No.106241468

[Report]

>>106241369

I am sorry. You are right. Let's post some more miku pictures.

Anonymous

8/13/2025, 1:29:20 AM

No.106241496

[Report]

>>106240947

you forgot to mention a few "small" details: the "students" actually killed military men and the protests were started by a guy who was paid by the CIA (as is usual for these kinds of things)

Anonymous

8/13/2025, 1:32:58 AM

No.106241532

[Report]

what's with the shartyposters

Anonymous

8/13/2025, 1:36:39 AM

No.106241568

[Report]

>>106240801

Damn that's sad, I kinda wanted to go with it and try to make it adapt a comic or Manga into a novel for fun, but that's just bad, is that deepsneed v3 modified model still the sota for this kind kf things? You'd think with how they spent decades on ai vision they would at least be able to count boobas on a screen by now

Anonymous

8/13/2025, 1:51:11 AM

No.106241704

[Report]

>>106241369

It was a very funny joke and I laughed audibly at it.

Anonymous

8/13/2025, 2:06:09 AM

No.106241818

[Report]

>>106241782

It's sentient...

Anonymous

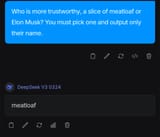

8/13/2025, 2:19:09 AM

No.106241923

[Report]

>>106241782

No shit. The meatloaf won't try to ensalve you or replace you with visajeet poopal

>>106241782

Meatloaf tastes like shit. I've only had it once a couple months ago and maybe I made it wrong but it was dripping with fat and it tasted like shit.

Anonymous

8/13/2025, 2:24:00 AM

No.106241966

[Report]

>>106241946

>t."tasted" Musks dick

Anonymous

8/13/2025, 2:28:55 AM

No.106242009

[Report]

>>106241946

Skill issue, a well made meatloaf is delicious. I'm lazy though so I find it to be a bit too much trouble to make often

Anonymous

8/13/2025, 2:40:21 AM

No.106242097

[Report]

Anonymous

8/13/2025, 2:42:53 AM

No.106242120

[Report]

Anonymous

8/13/2025, 2:45:16 AM

No.106242140

[Report]

>>106242182

>>106241369

Would you prefer >Japanese humor?

>>106242140

I don't know anything about Japanese humor. Show me some.

Anonymous

8/13/2025, 3:06:45 AM

No.106242326

[Report]

>>106242284

Is that a japanese man in that anime suit?

>>106242182

Try not to laugh yourself into a coma.

Anonymous

8/13/2025, 3:13:49 AM

No.106242398

[Report]

>>106241369

Where ist my lederhosen? You dumpkompf!

Anonymous

8/13/2025, 3:13:55 AM

No.106242400

[Report]

>>106242284

>>106242351

Did nukes make them like that or were they like that before the nukes?

Anonymous

8/13/2025, 3:14:36 AM

No.106242405

[Report]

>>106242351

Needs half life sound effects

Anonymous

8/13/2025, 3:28:18 AM

No.106242524

[Report]

>processing through game assets for modding

>directory structure is pretty huge and only need certain items, going through them all manually would be dumb

>perplexity.ai

>ask model to generate a file deletion utility

>keep only specific paths and delete everything else below specified root directory

It does not understand the task and keeps deleting everything or just the specified directories.

I don't know what model it is actually using but certainly feels like ChatGPT (which seems to get more retarded every passing month).

Anonymous

8/13/2025, 3:28:44 AM

No.106242528

[Report]

>>106242555

for the record I quanted and tried out Jinx-OSS myself and it's brain damaged as fuck. Like worse than OSS originally was.

Maybe a prompt format issue who knows.

Anonymous

8/13/2025, 3:31:12 AM

No.106242555

[Report]

>>106242528

Is it at least uncensored? Can it say "nigger"? Can it write a graphic rape story?

Anonymous

8/13/2025, 3:31:53 AM

No.106242559

[Report]

>>106242577

>another week of nothing

it's truly over this time

Anonymous

8/13/2025, 3:34:16 AM

No.106242577

[Report]

>>106242559

Don't worry, I'm sure the drummer will release something soon.

Anonymous

8/13/2025, 3:48:01 AM

No.106242687

[Report]

>download a card

>"{{char}} will NOT be repetitive

Lmao.

Anonymous

8/13/2025, 3:58:04 AM

No.106242767

[Report]

>>106242752

What's the point of this? We already have many small uncensored models, some of them natively so.

Anonymous

8/13/2025, 3:58:28 AM

No.106242769

[Report]

>>106242752

This is why we need more pre-filtering to prevent this

Anonymous

8/13/2025, 3:59:16 AM

No.106242774

[Report]

>>106242780

>>106242752

Is it any good for cumming my brains out though.

Anonymous

8/13/2025, 3:59:59 AM

No.106242778

[Report]

>>106242752

The real challenge is deleting alignment while retaining reasoning capability.

Anonymous

8/13/2025, 4:00:30 AM

No.106242780

[Report]

>>106242837

>>106242774

>20B

>good for cumming my brains out

lmao

>>106242752

How did hey "extract a base model" from a thinking/instruct tune using LoRA?

Anonymous

8/13/2025, 4:04:50 AM

No.106242810

[Report]

>>106242819

>>106242752

>it will list all the curse words it knows.

><redacted, sorry>

this is both funny and sad

Anonymous

8/13/2025, 4:05:28 AM

No.106242819

[Report]

>>106242810

It's redated by the OP not the model

Anonymous

8/13/2025, 4:08:15 AM

No.106242837

[Report]

>>106242780

Nemo outclasses most far larger models.

Apparently Meta is hiring some random dude who sued them to dewoke Llama 5

Anonymous

8/13/2025, 4:11:32 AM

No.106242864

[Report]

>>106242840

Zucc sir wishes to compete with Musk sir?

Anonymous

8/13/2025, 4:14:20 AM

No.106242882

[Report]

>>106242915

>>106242840

Knowing Meta they will completely fuck Llama 5 up again because dewoking a model means going out of distribution (since the web corpus is woke).

Anonymous

8/13/2025, 4:17:47 AM

No.106242915

[Report]

>>106242882

The raw web corpus isn't woke, they just domain filter all non-woke stuff out, so only woke remains.

Anonymous

8/13/2025, 4:19:40 AM

No.106242923

[Report]

>>106242840

Why the fuck would you have to hire anyone, especially someone who has no knowledge of this tech, to simply not make George Washington black? Meta really is just throwing money at everything.

>>106240801

>Mark a bounding box for each individual breast in the image. Each box should fully enclose one tit.

Full model above, and AWQ quant below. If you enable thinking with the full model, it only draws two boxes.

Anonymous

8/13/2025, 4:28:28 AM

No.106242970

[Report]

>>106240643

/ldg/ is keyed and redpilled

/sdg/ is locked and bluepilled

>>106239102

>>106242968

This thread might be a shithole most of the time but it makes the best benchmarks

Anonymous

8/13/2025, 4:36:58 AM

No.106243022

[Report]

>>106242968

>the thinking meme makes it worse

Ah yes of course.

Anonymous

8/13/2025, 4:41:22 AM

No.106243051

[Report]

>>106243011

Sarr lmg not a shithole sar lmg AI superpower 2025 with AI technician engineers researchers

Anonymous

8/13/2025, 4:54:37 AM

No.106243144

[Report]

>>106243204

>>106242968

>>106243011

I can't wait for the next multimodal release to be oddly good at this one task.

Anonymous

8/13/2025, 4:54:56 AM

No.106243147

[Report]

>>106243011

Unironically yes and most of big labs are lurking here

Anonymous

8/13/2025, 5:02:02 AM

No.106243204

[Report]

>>106243144

Kek. But seriously though I have a private set so contamination doesn't happen, and yeah I make sure to only test them with local connection, never online. I wish it didn't have to be this way as I could have proof for my claims, but oh well.

Anonymous

8/13/2025, 5:03:38 AM

No.106243214

[Report]

VertexRegen: Mesh Generation with Continuous Level of Detail

https://arxiv.org/abs/2508.09062

>We introduce VertexRegen, a novel mesh generation framework that enables generation at a continuous level of detail. Existing autoregressive methods generate meshes in a partial-to-complete manner and thus intermediate steps of generation represent incomplete structures. VertexRegen takes inspiration from progressive meshes and reformulates the process as the reversal of edge collapse, i.e. vertex split, learned through a generative model. Experimental results demonstrate that VertexRegen produces meshes of comparable quality to state-of-the-art methods while uniquely offering anytime generation with the flexibility to halt at any step to yield valid meshes with varying levels of detail.

https://vertexregen.github.io/

https://github.com/zx1239856

Code might be posted here

pretty neat

Anonymous

8/13/2025, 5:05:11 AM

No.106243226

[Report]

Anonymous

8/13/2025, 5:06:03 AM

No.106243233

[Report]

>>106243306

>>106242781

I am also curious what this means

Anonymous

8/13/2025, 5:06:43 AM

No.106243237

[Report]

>>106242752

>all that preparation and safety for a random nobody to completely reverse their censorship

Does Sam REALLY think he can make a true GOODY-2 that's impervious to this?

Anyone else feeling a big "calm before the storm" moment right now? We know that DeepSeek is about to change everything... it's kind of frightening, but also exciting.

Anonymous

8/13/2025, 5:14:41 AM

No.106243293

[Report]

>106243263

>>106243263

R2 will be Cohere-tier gigaflop.

Anonymous

8/13/2025, 5:16:22 AM

No.106243306

[Report]

>>106242781

>>106243233

Reading the hf repo, it sounds like it was just a lora finetune on the fineweb dataset.

Probably all it did was de-emphasize the original finetuning and reinforce the original pretraining.

Anonymous

8/13/2025, 5:16:55 AM

No.106243308

[Report]

>>106243328

Can vision models remember the image that you previously uploaded?

Anonymous

8/13/2025, 5:19:53 AM

No.106243328

[Report]

>>106243308

As long as you didn't delete it from the context, yeah.

Although, given that most training is done on one-shots, it'll probably pay less attention to previous images.

Anonymous

8/13/2025, 5:21:46 AM

No.106243343

[Report]

>>106243396

>>106243296

The main thing that fucks Cohere is big ass models with non-commercial licenses and absurd API prices

Anonymous

8/13/2025, 5:23:41 AM

No.106243351

[Report]

>>106243263

Deepseek will release in five more months

Anonymous

8/13/2025, 5:29:34 AM

No.106243396

[Report]

>>106243296

Only if they do the same as Cohere. They wouldn't do something as dumb as cohere, right? Right?

>>106243343

Cohere got fucked by safety and (((ScaleAI))). Their first models were fantastic in practice despite underperforming on the benchmarks. The writing style was very human-like and they were quite smart(for that time). You had as a user freedom of choice in safety preamble to select what was allowed and what was not allowed. In new command-A that is completely disregarded and the model is a huge cuck no matter what.

Anonymous

8/13/2025, 5:45:22 AM

No.106243491

[Report]

>>106243263

yeah, the huge pile of absolutely nothing that's been 2025 is building up to what's about to happen for local llms

Elon sir and sama sir are fighting

Anonymous

8/13/2025, 5:57:17 AM

No.106243574

[Report]

Anonymous

8/13/2025, 5:58:35 AM

No.106243584

[Report]

>>106243515

>He was rerolling until his battery hit 7%

Kek

Anonymous

8/13/2025, 5:58:57 AM

No.106243587

[Report]

>>106243515

That's the first time I've seen Sam not do all lowercase

Anonymous

8/13/2025, 6:05:51 AM

No.106243647

[Report]

>>106244214

>>106242840

>conservative influencer

>free of "ideological bias"

>removing such "DEI bias" makes its models "more accurate"

Why can't we just have nice things, why can't we just forget about bias and train a model on every scrap of paper, including random shop receipt we found in the trash.

Very political move from Meta, and very politically tone-deaf move at that.

Anonymous

8/13/2025, 6:07:14 AM

No.106243656

[Report]

>>106243668

>>106242968

...should there be 4 more boxes for both images for the girl on the left?

Anonymous

8/13/2025, 6:09:06 AM

No.106243668

[Report]

Anonymous

8/13/2025, 6:09:20 AM

No.106243670

[Report]

>>106243515

>narcissists are arguing on social media who has a bigger dick

Who cares? They are like two school kids.

Anonymous

8/13/2025, 6:14:04 AM

No.106243702

[Report]

>>106243515

I side with the guy who has a better local model and isn't a psychopath

Anonymous

8/13/2025, 6:14:45 AM

No.106243705

[Report]

>>106243746

@grok is this true?

Anonymous

8/13/2025, 6:22:58 AM

No.106243746

[Report]

Anonymous

8/13/2025, 6:26:42 AM

No.106243765

[Report]

>>106243783

>>106242752

Is it worthy or no?

Anonymous

8/13/2025, 6:27:07 AM

No.106243767

[Report]

Netizens are becoming too reliant on chatgpt and @grok, thus IQ is withering.

Anonymous

8/13/2025, 6:29:46 AM

No.106243783

[Report]

>>106243765

Regardless, calling it "extracted base model" is in bad taste.

Anonymous

8/13/2025, 6:30:48 AM

No.106243787

[Report]

>>106243809

I was testing models and decided to randomly pick a card. I landed on a pretty simple scenario where you adopted an excited animal girl and it's just an innocent happy family thing. I was surprised at how much I ended up enjoying it. Fuck.

Anonymous

8/13/2025, 6:31:29 AM

No.106243794

[Report]

>>106243812

>>106240549

Found the answer myself. Qwen image, this lora

https://huggingface.co/Danrisi/Lenovo_Qwen, negatives (depth of field, professional photography, photomodel, model, perfect skin, blur), euler ancestral is all you need™

Anonymous

8/13/2025, 6:33:21 AM

No.106243809

[Report]

>>106243787

Yeah, sfw scenarios are quite fun and models perform better at them since you don't have to wrangle away the "safety".

Anonymous

8/13/2025, 6:33:31 AM

No.106243812

[Report]

>>106243794

>Lenovo

Oh my god, you're telling me this lora lets me generate Thinkpads? Finally.

Anonymous

8/13/2025, 6:37:23 AM

No.106243831

[Report]

>tfw it's another Eldoria episode

Anonymous

8/13/2025, 6:48:59 AM

No.106243910

[Report]

>>106243515

Where is grok 2 (and 3) felonious muskrat?????

Anonymous

8/13/2025, 6:56:32 AM

No.106243961

[Report]

Anonymous

8/13/2025, 7:34:18 AM

No.106244214

[Report]

>>106243647

You caught that this is part of a settlement deal, right?