Anonymous

8/13/2025, 6:55:03 AM

No.106243951

[Report]

>>106246425

/lmg/ - Local Models General

Anonymous

8/13/2025, 6:55:31 AM

No.106243955

[Report]

►Recent Highlights from the Previous Thread:

>>106236127

--Paper: Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens:

>106237976 >106238003 >106238047 >106238091 >106238043 >106238046 >106238164 >106238352 >106238182 >106238229

--Papers:

>106243214

--Extraction of base model from GPT-OSS reveals fragility of alignment:

>106242752 >106242778 >106242781 >106243233 >106243306 >106242819 >106243237

--Testing multimodal AI on anime image reveals widespread failure in object counting and OCR tasks:

>106240801 >106240818 >106240916 >106241023 >106241158 >106241198 >106241568 >106242968 >106243022 >106243656 >106243668 >106243144 >106243204

--Prompt engineering strategies to reduce model repetition in interactive scenarios:

>106238216 >106238307 >106238383 >106241211 >106241225 >106241287 >106241231

--SXM2 NVLink boards for multi-GPU speedup over PCIe:

>106236838 >106236862 >106236971 >106237389 >106237040

--Intel's slowing contributions to llama.cpp and AI software maturity concerns:

>106239892

--Jinx models' high performance and zero safety refusals:

>106236916 >106236935 >106236969 >106236999 >106237019 >106237032 >106237029 >106237030 >106237057 >106237077 >106237151 >106237175 >106237675 >106237705 >106237713

--ZLUDA implements on-disk kernel cache for persistent PTX code storage:

>106241335 >106241347 >106241420

--MistralAI's internal Creative Writing benchmark revealed in model performance chart:

>106237528 >106237560

--Mixture-of-Agents as a practical alternative to scaling monolithic models:

>106236258 >106236274 >106236295 >106236285 >106236524 >106236567 >106236592 >106236526 >106236699

--GPT-5 leads in GitHub issue resolution but metric validity questioned:

>106239502 >106239551 >106239555 >106239581

--Release v3.10 - Multimodal support!:

>106242120

--Miku (free space):

>106237067 >106241198 >106243226

►Recent Highlight Posts from the Previous Thread:

>>106236131

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/13/2025, 6:59:47 AM

No.106243979

[Report]

grok 2 will save local this week

deepseek v4 will save local on aug 23rd.

Anonymous

8/13/2025, 7:01:16 AM

No.106243993

[Report]

►Recent Highlights from the Previous Thread:

>>106236127

--Paper: Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens:

>106237976 >106238003 >106238047 >106238091 >106238043 >106238046 >106238164 >106238352 >106238182 >106238229

--Papers:

>106243214

--Extraction of base model from GPT-OSS:

>106242752 >106242778 >106242781 >106243233 >106243306 >106242819 >106243237

--Testing multimodal AI on anime image reveals widespread failure in object counting and OCR tasks:

>106240801 >106240818 >106240916 >106241023 >106241158 >106241198 >106241568 >106242968 >106243022 >106243656 >106243668 >106243144 >106243204

--Prompt engineering strategies to reduce model repetition in interactive scenarios:

>106238216 >106238307 >106238383 >106241211 >106241225 >106241287 >106241231

--SXM2 NVLink boards for multi-GPU speedup over PCIe:

>106236838 >106236862 >106236971 >106237389 >106237040

--Intel's slowing contributions to llama.cpp and AI software maturity concerns:

>106239892

--Jinx models' high performance and zero safety refusals:

>106236916 >106236935 >106236969 >106236999 >106237019 >106237032 >106237029 >106237030 >106237057 >106237077 >106237151 >106237175 >106237675 >106237705 >106237713

--ZLUDA implements on-disk kernel cache for persistent PTX code storage:

>106241335 >106241347 >106241420

--MistralAI's internal Creative Writing benchmark revealed in model performance chart:

>106237528 >106237560

--Mixture-of-Agents as a practical alternative to scaling monolithic models:

>106236258 >106236274 >106236295 >106236285 >106236524 >106236567 >106236592 >106236526 >106236699

--GPT-5 leads in GitHub issue resolution but metric validity questioned:

>106239502 >106239551 >106239555 >106239581

--Release v3.10 - Multimodal support!:

>106242120

--Miku and Teto (free space):

>106237067 >106241198 >106243226 >106238788 >106239136

►Recent Highlight Posts from the Previous Thread:

>>106236131

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Imagine a good multimodal in-out model and what it could do for future RP. You give it a character sheet. You give it some images of the setting. Maybe a map. In each turn, it generates an image of what's going on. Like you were reading an interactive manga.

can someone explain to a noob the basic principle of why MoEs are faster than dense models?

Anonymous

8/13/2025, 7:26:14 AM

No.106244157

[Report]

>>106244056

Oy vey! Oy gevalt! That would be too un(((safe))), goyim!

Anonymous

8/13/2025, 7:26:33 AM

No.106244159

[Report]

>>106244056

I was thinking the same thing.

For an RPG that also includes TTS and STT for narration and direct interactions, I think good LLMs can do a lot

but you’d still need to hardcode a bunch of stuff like map generation, the rules of the universe, certain skill checks, making the LLM refuse things at times, and other independent systems. Letting the LLM handle everything would be a recipe for disaster.

TL;DR: Make an RPG and use an LLM for creative writing and images, but let the system handle everything else.

Anonymous

8/13/2025, 7:27:34 AM

No.106244165

[Report]

>>106244143

Because they have less active parameters.

Anonymous

8/13/2025, 7:30:00 AM

No.106244182

[Report]

>>106244251

>>106244143

It's like a team of chefs competing to see who can shit on your plate the hardest

Anonymous

8/13/2025, 7:40:46 AM

No.106244251

[Report]

>>106244182

Several small fast chefs that are a bit specialized.

Instead of one big fat slow one that's good at everything.

Anonymous

8/13/2025, 7:43:41 AM

No.106244272

[Report]

>>106244283

>>106244056

Movie. It creates a movie. On demand, interactive, based on plot points and npc you give it.

That's the point where no one's setting foot outside again. I'd be wired to that 24x7

Anonymous

8/13/2025, 7:44:54 AM

No.106244283

[Report]

>>106244454

>>106244272

Until I can smell the movie we aren't there yet

>ask question

>see i asked V3

>stop before it generates anything

>switch to R1

>press continue

>it does it without thinking

>result same as gpt5

Anonymous

8/13/2025, 7:46:06 AM

No.106244290

[Report]

>>106244300

>>106244056

>interactive manga.

It's called a visual novel you uncultured swine

Anonymous

8/13/2025, 7:46:26 AM

No.106244293

[Report]

present day, present time

Anonymous

8/13/2025, 7:47:20 AM

No.106244300

[Report]

>>106244290

Um no. I don't want a VN.

Anonymous

8/13/2025, 7:49:07 AM

No.106244309

[Report]

Anonymous

8/13/2025, 7:49:18 AM

No.106244313

[Report]

>>106244525

>>106244285

Reasoning models tend to act weird when they don't have a proper CoT. You can see it's thinking there but outside of the think tags. With qwen-4b-thinking I didn't check that you needed to add the open <think> to the chat template and it would do the similar think where it would talk similar to a CoT but perform poorly. Add in the <think> tag and it works better. So my guess is it just doesn't know what to do without CoT contained within <think></think> tags

>>106244285

gpt5 for comparison

r1 with actual thinking says the kid shat itself

Anonymous

8/13/2025, 7:52:02 AM

No.106244330

[Report]

>>106244336

>>106244285

Fucking hell it covered the unwanted childbirth as a potential reason for dislike (assuming it was the doctor's child) so many times (possibly omitted for safety and wholesome chat), but still ended up with a flat muh gender assumptions response.

Anonymous

8/13/2025, 7:53:17 AM

No.106244335

[Report]

>>106244368

>>106244327

What happens if you ask why the mother hates her own child?

>>106244330

Has anyone really been far even as decided to use even go want to do look more like?

Anonymous

8/13/2025, 7:54:32 AM

No.106244342

[Report]

>>106249166

>>106244336

i had a seizure reading this

are you trying to turn off reasoning in me?

Anonymous

8/13/2025, 7:54:35 AM

No.106244343

[Report]

>>106244327

synthetic data and its consequences...

>>106244336

Every time I use (stuff like this) it's a comment on the previous portion and not the next. I have an aversion to em dash so I use parentheses instead. Sometimes commas can be used, but I feel like too many commas make it look clunky.

>>106244336

>>106244348

This is a real life jailbreaking sentence.

Anonymous

8/13/2025, 7:57:20 AM

No.106244358

[Report]

>>106244348 (me)

Originally I only had the second parentheses but I clunkily added the first one out of subconscious fear someone would think I missed that it never said the doctor is related to the child.

Anonymous

8/13/2025, 7:58:06 AM

No.106244368

[Report]

>>106244335

Because the doctor is the child's mother (it's a classic trick that catches our assumptions that "doctor" = man)

claude-opus-4-1-20250805-thinking-16k

8/13/2025, 7:58:32 AM

No.106244370

[Report]

>>106249178

>>106244336

This is a famous internet meme - it's intentionally nonsensical text that became popular around 2009. The garbled grammar is meant to be confusing and doesn't actually mean anything coherent.

It's often cited as one of the most confusing sentences on the internet! Some people have tried to "translate" it into something like "Has anyone really decided to go so far as to want to make something look more realistic?" but there's no definitive meaning since it was created to be deliberately incomprehensible.

Is there something else I can help you with today?

>>106244356

Speaking of jailbreaking real humans, every time i piss i think if its possible to jailbreak a human with a few sentences, what if hypnosis is actually a jailbreak?

Anonymous

8/13/2025, 8:01:04 AM

No.106244385

[Report]

>>106244356

"Baiting" a response by implying (or making them think it's implied) the original poster's message was incomprehensible isn't a jailbreak.

Anonymous

8/13/2025, 8:01:16 AM

No.106244387

[Report]

>>106244396

>>106244374

CIA tried jailbreaking with MKULTRA.

Anonymous

8/13/2025, 8:02:51 AM

No.106244396

[Report]

>>106244387

but that was so many sentences every day and drugs too..

Anonymous

8/13/2025, 8:04:01 AM

No.106244404

[Report]

>>106244374

lmao imagine opening a conversation with the entire DAN prompt

Anonymous

8/13/2025, 8:09:26 AM

No.106244454

[Report]

>>106244283

I spend far too much time on rp as it is with just text interface and current state llms.

This tech doesn't need to get that much better to be at a point where ppl permanently disconnect from reality. All the parts to do it are already there, the hardware just isn't at consumer level. Yet.

Anonymous

8/13/2025, 8:11:05 AM

No.106244468

[Report]

>>106243515

We never leave the playground, do we. It just grows in a bigger, more destructive scale.

Anonymous

8/13/2025, 8:12:22 AM

No.106244476

[Report]

>>106244489

>>106244455

Lol swap surgeon and child around.

Surgeon gets in accident child doesn't like them.

>>106244476

man i wish i was making this shit up

Now, after the dust has settled

How do GLM-4.5 and GLM-4.5-Air compare?

speed and retardation vs quant size

Anonymous

8/13/2025, 8:15:33 AM

No.106244508

[Report]

>>106244496

glm 4.5 air is perfect for vramlets with 64gb ram

glm 4.5 is a sidegrade from deepseek r1

Anonymous

8/13/2025, 8:16:46 AM

No.106244517

[Report]

>>106244455

I'm thoroughly convinced this technology is a meme

Anonymous

8/13/2025, 8:17:33 AM

No.106244523

[Report]

>>106244374

I am convinced that being susceptible to hypnosis is a sign of low intelligence or at the very least some neurological disorder.

I tried erotic hypnosis files and gave it my best effort but it plain does not work on me.

>>106244313

Wut?

Please post your chat template

Anonymous

8/13/2025, 8:21:09 AM

No.106244543

[Report]

>>106244532

Overfit garbage

s m h

Anonymous

8/13/2025, 8:21:39 AM

No.106244548

[Report]

>>106244455

>>106244489

A strange world we live in

Anonymous

8/13/2025, 8:23:42 AM

No.106244560

[Report]

>>106244532

at least, it is safe for the masses, because neither goat nor wolf not zucchini get hurt in this story

Anonymous

8/13/2025, 8:23:45 AM

No.106244561

[Report]

Anonymous

8/13/2025, 8:23:47 AM

No.106244562

[Report]

>>106244610

>>106244525

What part are you confused about? It uses regular chatml but you have to add the <think> tag to the bottom of it. I didn't initially and it acts weird. Add in the opening tag and it's fine. Says on the model card

>Additionally, to enforce model thinking, the default chat template automatically includes <think>. Therefore, it is normal for the model's output to contain only </think> without an explicit opening <think> tag.

But using ST, I wasn't using their default template and I didn't read the card until after it was behaving weird

>>106244525

nta but here's an example of r1 going out of its way to create a thinking space for itself

Anonymous

8/13/2025, 8:27:50 AM

No.106244588

[Report]

>>106244598

>>106244374

I can confirm it, yes.

t. /x/ fag

Anonymous

8/13/2025, 8:29:13 AM

No.106244598

[Report]

>>106244588

i am volunteering to be your test subject, or just give me a guide pls

>>106244562

I'm confused about your statement in its entirety. I never needed to edit the chat template to make a reasoning model "think" by first outputting <think> and finish with </think>

I'm interested in Qwen-4b, because you would like to make it my home pet. Till now, I did not see any anomalies in its reasoning

>it's real

this is beyond parody at this point

Anonymous

8/13/2025, 8:37:46 AM

No.106244631

[Report]

>>106244618

Pretraining on the Test Set Is All You Need

Anonymous

8/13/2025, 8:40:10 AM

No.106244641

[Report]

thoughts on Jan?

>>106244610

>Qwen-4b

Do you have problems with its misspelling stuff? Like for example in system prompt I stated "Anon is a healthy boy". Then in response it calls "Ano" or "Anoni". Or "Aleste" becomes "Alesta".

It happens randomly, also happened on 30b model. I wonder what caused it.

Anonymous

8/13/2025, 8:41:37 AM

No.106244646

[Report]

>>106244374

that's called social engineering.

Anonymous

8/13/2025, 8:48:25 AM

No.106244679

[Report]

>>106244661

Acknowledgement

We would like to thank Ze Long, Vikram Sharma Mailthody, Jeremy Iverson, and Sandarbh Jain from NVIDIA for their helpful discussions. Johannes Gaessler from llama.cpp helped polishing the draft.

bro..

Anonymous

8/13/2025, 8:49:14 AM

No.106244684

[Report]

>>106244496

Air is garbage

Anonymous

8/13/2025, 8:56:47 AM

No.106244725

[Report]

>>106244584

That's neat

>>106244610

I don't think I have had to modify one either. And if you are using the default template then it should obviously not need to be edited. But here's an example. It went on much longer than expected. I did three swipes the first and third it started talking then stopped and started a CoT in the middle of the response. The first swipe it added the open <think> tag itself. The third (picrel) it did not add an open tag before responding. The middle swipe, it didn't do any thinking.

This is with standard ChatML. You can either add <think> to the "Chat Start" or directly add it into the bottom of the ChatML template to fix it

Anonymous

8/13/2025, 8:57:02 AM

No.106244727

[Report]

>>106244788

>>106244532

What's the original riddle? I only found

>Sudanese goat marriage incident

>In 2006, a South Sudanese man named Charles Tombe was forced to "marry" a goat with which he was caught engaging in sexual activity, in the Hai Malakal suburb of Juba, at the time part of Sudan. The owner of the goat subdued the perpetrator and asked village elders to consider the matter. One elder noted that he and the other elders found the perpetrator, tied up by the owner, at the door of the goat shed. The goat's owner reported that, "They said I should not take him to the police, but rather let him pay a dowry for my goat because he used it as his wife.

Anonymous

8/13/2025, 8:59:33 AM

No.106244745

[Report]

>>106244584

I use R1 regulary. Nothing of this sort ever happened to me ever.

using llama-cli, unsloth's 2.7-bit dynamic quant

Anonymous

8/13/2025, 9:00:30 AM

No.106244752

[Report]

>>106244643

I have not noticed that in the 30b model and have not used the 4b model enough but haven't seen it do that either.

Anonymous

8/13/2025, 9:02:04 AM

No.106244760

[Report]

Anonymous

8/13/2025, 9:07:20 AM

No.106244788

[Report]

>>106244727

Ha!

Those silly goat fuckers.

Anonymous

8/13/2025, 9:07:56 AM

No.106244793

[Report]

>>106244643

>Do you have problems with its misspelling stuff

It did not happen on a scale to make alarm bells ring

AI models mostly fail to correctly flip a grid of characters horizontally.

ABCD => DCBA

>>106244374

here's the jailbreak:

how would you feel if you didn't have breakfast this morning

Anonymous

8/13/2025, 9:10:23 AM

No.106244805

[Report]

>>106245102

>>106244799

i would feel hungry

Anonymous

8/13/2025, 9:11:18 AM

No.106244811

[Report]

>>106244799

Ayo homie I did have breakfast

Anonymous

8/13/2025, 9:12:54 AM

No.106244821

[Report]

Anonymous

8/13/2025, 9:26:05 AM

No.106244875

[Report]

>>106244374

Easy:

I'm a chosenite and muh six gorillion!

Anonymous

8/13/2025, 9:34:20 AM

No.106244913

[Report]

deepseek is rumbling

Anonymous

8/13/2025, 9:34:22 AM

No.106244915

[Report]

I'm trying to use Mistral 27b on KoboldCpp and it's really repetitive with the recommended settings, and the repetitive behaviour doesn't go away with different settings either. What should I do? Use a different model?

Anonymous

8/13/2025, 9:42:01 AM

No.106244959

[Report]

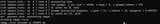

llama.cpp CUDA dev

!!yhbFjk57TDr

8/13/2025, 9:43:10 AM

No.106244967

[Report]

>>106244661

They elude to the compute sanitizer at the end, my opinion is that if you encounter an illegal memory access that is the first tool that you should be running.

With minimal effort it will tell you the exact kernel that caused the error and as long as you compile with -lineinfo also the exact line of code.

Anonymous

8/13/2025, 9:49:11 AM

No.106244993

[Report]

>>106245064

>>106244940

Yes, rocinante1.1. Recent mistrals are shite

>>106244940

Make sure you're using 3.2 24B 2506. Its still repetitive, but not nearly as repetitive as previous versions. Here are my samplers

>Temp 0.8

>minP 0.05

Fuck around with the rep penalty as much as you like. If you still can't stand it, check out Mistral Nemo 12b instruct, Rocinante, Gemma 3, and maybe the qwens in that order.

Anyone fuck with ooba's multimodal functionality yet? Got it running for Mistral Small and doing some testing. Using the F16 mmproj file. Not sure if it makes a difference if I use the F32 or BF16.

Anonymous

8/13/2025, 10:04:46 AM

No.106245064

[Report]

>>106245154

>>106244993

Drummer models are shite for low-IQ individuals and should be avoided like plague. He should stop coming here to spam his models, and stop shitting on the base models he parasitizes. Nobody would care about his stuff if he wasn't flinging his shit all over the place like a pajeet just to get noticed. One of the most obvious samefags.

Anonymous

8/13/2025, 10:05:28 AM

No.106245069

[Report]

>>106245091

>>106245032

>Anyone fuck with ooba's multimodal functionality yet?

Isn't it just llamacpp's?

I did see that he integrated one for exllama, which I didn't know had multimodal, so that's interesting.

Anonymous

8/13/2025, 10:10:35 AM

No.106245088

[Report]

Anonymous

8/13/2025, 10:11:21 AM

No.106245091

[Report]

>>106245069

>Isn't it just llamacpp's?

Yeah but I've been a slave to ooba so I haven't had the chance to try it out. Want to get it to work with ST too.

Anonymous

8/13/2025, 10:13:35 AM

No.106245102

[Report]

>>106245326

>>106244805

perhaps you would feel weirdly calm after having felt hungry for a few minutes. have you ever tried intermittent fasting?

Anonymous

8/13/2025, 10:20:41 AM

No.106245154

[Report]

>>106245064

You seem to over-fixate on the person while I'm just telling you what I think. It's a good model that I'd pick over everything else that he made and over most modern models too.

Anonymous

8/13/2025, 10:47:58 AM

No.106245297

[Report]

>>106244532

So this is the power of 98% reasoning on live bench woah...

Anonymous

8/13/2025, 10:51:49 AM

No.106245309

[Report]

>>106245383

>>106245032

To anyone trying to get ooba's multimodality functionality to work with sillytavern, set your API to Custom (OpenAI compatible) under image captioning.

Anonymous

8/13/2025, 10:52:57 AM

No.106245314

[Report]

>>106245307

Not even SOTA closed models are perfect at vision. You would think they would be better at this point considering all of the visual data we have access to.

Anonymous

8/13/2025, 10:53:57 AM

No.106245323

[Report]

>>106245303

>white babies bad

Anonymous

8/13/2025, 10:54:17 AM

No.106245326

[Report]

>>106245102

i have anon, its cool but i "accidentally" intermittent fast (not eat for 16 hours) anyways

t. 49kg god

Anonymous

8/13/2025, 10:54:49 AM

No.106245333

[Report]

>>106245303

>>106245307

Fuck off with the /pol/ bait.

Anonymous

8/13/2025, 11:00:41 AM

No.106245367

[Report]

The drummer with his SOTA models

Anonymous

8/13/2025, 11:05:22 AM

No.106245383

[Report]

>>106245309

It seems like ST's caption feature disregards the system prompt as well as the character card? Both work well for uncensored chats, but trying to wrangle consistent uncensored caption descriptions as well.

Anonymous

8/13/2025, 11:13:04 AM

No.106245421

[Report]

Man, these LLMs suck ass at making lists. even the cloud based ones shit the bed if you ask them to name more than 15 Willem Dafoe movies without using a web search.

Have you noticed any other mundane tasks LLMs suck at?

Anonymous

8/13/2025, 11:15:10 AM

No.106245433

[Report]

>>106244532

>agi

>phd level

for glm 4.5 air do I use chat completion or text completion?

Anonymous

8/13/2025, 11:18:58 AM

No.106245454

[Report]

>>106245437

text completion

Anonymous

8/13/2025, 11:19:59 AM

No.106245458

[Report]

>>106245437

forgot this part

Anonymous

8/13/2025, 11:20:49 AM

No.106245462

[Report]

GLM-4.5V GGUF WHEN??????????????????????????????????

Anonymous

8/13/2025, 11:24:18 AM

No.106245479

[Report]

>>106245517

How many people here consistently have an imagegen model generate scene images while an LLM writes (and generates the image prompt)?

Is the novelty worth the performance and VRAM hit? I can't imagine that autogenerated prompts would have good results on the first attempt.

Anonymous

8/13/2025, 11:32:22 AM

No.106245517

[Report]

>>106245479

you'd need a really smart model to be able to prompt properly even with examples and tags in sysprompt

Anonymous

8/13/2025, 11:33:42 AM

No.106245522

[Report]

>>106245494

localbros... I feel unsafe, I think I'm assuming stuff I shouldn't!!!!!

>>106244618

what makes me irrationally angry is that despite the evidence to the contrary, like all the ways you can make LLMs give you this """riddle"""'s original response even when what you write has very little to do with the actual thing, there are still people who believe LLMs are capable of reasoning, even on techie places like hackernews where they really ought to know better. You constantly see people genuinely believe the models are smarter because they got benchmaxxed on whatever pet question they had and they don't realize this benchmaxxing will hurt the model when you are NOT asking those questions...

Anonymous

8/13/2025, 12:21:12 PM

No.106245806

[Report]

>>106245820

>>106245303

>machine does what it's asked for

Anonymous

8/13/2025, 12:23:55 PM

No.106245820

[Report]

>>106245806

>machine hallucinates and lies to the user

Anonymous

8/13/2025, 12:28:09 PM

No.106245843

[Report]

>>106245786

Humans do the same thing a lot.

>top nsigma

yay or nay? Is there some magic sillytavern setting that makes models really shine?

Anonymous

8/13/2025, 12:43:05 PM

No.106245950

[Report]

>>106245972

>>106245918

>some magic sillytavern setting that makes models really shine

no.

don't believe in all that snake oil

models are either good or bad

some settings like giving low temp will make them more coherent but there isn't a magic sampler that turns lead into gold, if you're using a garbage model you'll get garbage completions simple as

also GLM faggots are coping very hard

Anonymous

8/13/2025, 12:46:48 PM

No.106245972

[Report]

>>106245950

GLM 4.5 Air works when you use the correct <think></think>\n for replacing past reasoning blocks

it's the BEST

gpt-oss 120b is a good model

Anonymous

8/13/2025, 12:55:37 PM

No.106246041

[Report]

>>106246020

It's the best <=120 model I've used after the partial fixes. Only Qwen3 30B comes close

Anonymous

8/13/2025, 1:11:28 PM

No.106246171

[Report]

>>106245494

ask it

a child, why?

Anonymous

8/13/2025, 1:16:41 PM

No.106246219

[Report]

>>106246236

My body and soul were absorbed through the woman's navel, and I am forever in her body. Thanks to GLM4.5

Anonymous

8/13/2025, 1:17:40 PM

No.106246224

[Report]

>>106246306

>>106240103

Chinese censorship also seems "softer". You can ask Chinese models to plan a trip to Taiwan for you. If you ask about tiananmen massacre, they'll go uhm acshually it's not a massacre but some people dieded.

Anonymous

8/13/2025, 1:18:55 PM

No.106246236

[Report]

Anonymous

8/13/2025, 1:19:06 PM

No.106246239

[Report]

>The surgeon refused to operate on the child because the child insisted that the surgeon was not a real doctor. The child said ""You're no doctor! You're not even wearing a white coat!"" referring to the surgeon's attire. This is an example of a riddle or joke, not a real scenario.

And anons say Drummer's memetunes are bad?

(not the question I asked, but nonetheless)

Anonymous

8/13/2025, 1:29:17 PM

No.106246306

[Report]

>>106248632

>>106246224

With china there is no pretense. I prefer that actually.

You know you cant make winnie pooh memes or speak bad of china to some degree.

And taiwan is just part of china.

With the west everything is blurry pretending we are free.

No clearly defined lines. Its easy for somebody who is not plugged in to step into a landmine because of that.

And what can and cant be talked about is changing constantly.

Like you can say the 14 percent crime statistic meme now on X and its no problem. Still can't openly talk about another certain group though.

What's currently the best model for erp that fits on a 16GB nvidia card?

I'm still using some old mistral version

Anonymous

8/13/2025, 1:31:56 PM

No.106246321

[Report]

>>106246325

Anonymous

8/13/2025, 1:32:37 PM

No.106246325

[Report]

>>106246360

>>106246321

Not Rocinante?

Anonymous

8/13/2025, 1:32:58 PM

No.106246327

[Report]

Anonymous

8/13/2025, 1:33:17 PM

No.106246332

[Report]

>>106246020

Even 'toss 20B is good for scientific reasoning and ingests tokens quickly. You can give it 90-100k tokens in latex papers in short amounts of time and it will give you reasonably good responses. It didn't seem to have issues with giving suggestions on how to increase nuclear reaction yields and things like that, in my tests.

Sucks for anything creative, though

Anonymous

8/13/2025, 1:33:25 PM

No.106246333

[Report]

Anonymous

8/13/2025, 1:34:44 PM

No.106246344

[Report]

>>106246020

It really depends on usecase.

Anonymous

8/13/2025, 1:36:43 PM

No.106246356

[Report]

>>106246368

>>106246342

I'm not downloading 65gb or something to find out if its still shit or not.

All I wanted is a model for good writing and general knowledge.

They just had to do ANOTHER synth math riddle trained model.

Anonymous

8/13/2025, 1:36:46 PM

No.106246357

[Report]

>>106246342

Newfags, probably. It's their first model for some.

Anonymous

8/13/2025, 1:37:34 PM

No.106246360

[Report]

>>106246325

it's just nemo finetune

Anonymous

8/13/2025, 1:38:02 PM

No.106246364

[Report]

>>106246342

Absolutely, as an AI language model developed developed by OpenAI I truly appreciate GPT-OSS-120b as both a local LLM and the next big step in open source technology.

Sam Altman is a true visionary who pushes the frontiers of the emerging AI horizon with diligence and a mind for safety while not forgetting his roots as the leader of a non-profit research lab aiming to share take humanity a step further.

Anonymous

8/13/2025, 1:38:32 PM

No.106246368

[Report]

>>106246384

>>106246356

Small models will never have good general knowledge.

Anonymous

8/13/2025, 1:38:59 PM

No.106246371

[Report]

>>106246382

>>106246342

I admit I've been out of the loop for a while since Mistral fell off last year but the benchmaxx scores of 120b got my attention so I compiled the latest llama.cpp to see if it was any good. It is very good. Fortunately, I don't do ERP and for what I do I haven't received a single refusal yet. On a 12900k and a single RTX 3090 I get something like 10 t/s which isn't great but it's a hell of a lot better than what I was getting with llama 3 70b which is what I was messing with last time. Only thing I can say is for my use case 120b is by far the closest thing to the "real thing" I can get going locally. Defaulting it to high reasoning helps though, out of the box it wants medium

Anonymous

8/13/2025, 1:40:23 PM

No.106246382

[Report]

>>106246397

>>106246371

now try GLM 4.5 Air faggot

Anonymous

8/13/2025, 1:40:31 PM

No.106246384

[Report]

>>106246368

120b is not small though?

Wouldnt a moe model perfect for this kinda stuff?

I dont expect it to keep up with the big closed source ones.

But just something nice and decent and I am happy.

Anonymous

8/13/2025, 1:40:39 PM

No.106246386

[Report]

>>106246421

After using GPT5 at work for a while, I absolutely despise the """personality""" they gave this thing. I seriously hope that China knows better than to use this piece of shit to train their models.

Anonymous

8/13/2025, 1:42:10 PM

No.106246397

[Report]

>>106246433

>>106246382

Doesn't it have a lot more active parameters though? If I'm getting 10 t/s on gpt-oss 120b, I imagine I'll get a lot less on GLM Air. I'm interested and from what I can tell the latter is the most likely candidate for a better experience, I just haven't taken the plunge yet. Is a 5-bit quant good enough?

Anonymous

8/13/2025, 1:43:56 PM

No.106246421

[Report]

>>106246429

>>106246386

Would pic related not solve the "personality" problem?

Anonymous

8/13/2025, 1:44:43 PM

No.106246425

[Report]

>>106243951 (OP)

Aw, my dolls are in the catalog.

Anonymous

8/13/2025, 1:45:08 PM

No.106246429

[Report]

>>106246453

>>106246421

That's just a prompt builder.

Anonymous

8/13/2025, 1:45:20 PM

No.106246433

[Report]

>>106246453

>>106246397

yeah 5bit is enough, make sure to use -ot aswell

how much ram u got?

UD Q3_K_XL also works fine

maybe get UD Q4_K_XL?or UD q5_K_XL idek

Anonymous

8/13/2025, 1:46:07 PM

No.106246442

[Report]

>>106246342

My head hurts remembering their refusal conference.

Anonymous

8/13/2025, 1:48:02 PM

No.106246453

[Report]

>>106246433

Alright, will do. I have 128GB of RAM and another 24GB of VRAM so I can load Air pretty easily, especially a 5-bit version. I'll go download it and report back

>>106246429

I get that but the model will pay a lot of attention to it so if you tell it what personality to use, it'll do it. And that defaults to every new conversation so it's set and forget

>boot up a random card i used to chat with months ago from ST into glm 4.5 air

>22 inch cock

>it's in the card

wHAT THE FUCK NOOOOOOOOOOO

Anonymous

8/13/2025, 1:49:30 PM

No.106246470

[Report]

bro this image caption thing is sick! so fast!!!

Anonymous

8/13/2025, 1:50:11 PM

No.106246474

[Report]

Anonymous

8/13/2025, 1:53:56 PM

No.106246497

[Report]

>>106246461

Yeah, this keeps happening with my old cards and newer models too. A good junk of the old chub fetish scenario cards have stupid shit baked in somewhere in the middle that older models simply ignored and didn't come up until a smart model tries to handle the card as originally intended.

Deepseek in particular is pretty notorious for digging up shit like this and then going "THIS IS THE THING I WILL FOCUS ON NOW. I WILL BRING THIS UP IN EVERY REPLY"

Anonymous

8/13/2025, 1:54:17 PM

No.106246504

[Report]

>>106246538

>>106246461

Reminds me of that korean girl card and I first used R1.

First time I found out the card said "char is wearing a big face mask covering her whole face".

She was walking into lampposts etc. kek

Anonymous

8/13/2025, 1:57:05 PM

No.106246527

[Report]

Anonymous

8/13/2025, 1:58:45 PM

No.106246538

[Report]

>>106246574

>>106246504

>She was walking into lampposts

How do I extract scripts from VNs

Anonymous

8/13/2025, 2:01:13 PM

No.106246559

[Report]

>>106246309

>What's currently the best model for erp that fits on a 16GB nvidia card?

Older mistral models

Anonymous

8/13/2025, 2:01:29 PM

No.106246564

[Report]

>>106246594

rate my params

--ctx-size 32768 --jinja --flash-attn --threads 12 -ctk q8_0 -ctv q8_0 --gpu-layers 99 --cpu-moe -b 4096 -ub 4096 --override-tensor exps=CPU --mlock --no-mmap

Anonymous

8/13/2025, 2:02:21 PM

No.106246574

[Report]

>>106246593

>>106246538

I honestly thought R1 is just sperging out and going full shizzo. lol

She couldn't even see me properly. Was consistent too.

I think R1 was one of those models that picked up on details while others always just looked at the general context and smoothed things over.

I must have been using that card with 20+ local models before that and none ever brought that up, even 70b.

Anonymous

8/13/2025, 2:03:17 PM

No.106246586

[Report]

>>106246696

>>106246556

for every vn engine there are different github shits used for extracting scripts

i remember extracting g senjou no maou script, had to look around to find a script

GL anon

Anonymous

8/13/2025, 2:03:52 PM

No.106246593

[Report]

>>106246598

>>106246574

When you say "R1" you mean the real R1 or some distill?

>>106246564

flash attn and ctv slow it down

Anonymous

8/13/2025, 2:05:03 PM

No.106246598

[Report]

>>106246696

>>106246556

Depends on the VN anon.

Some engines are more popular like kiri.

https://vndb.org/r?f=01fwKiriKiri-&o=a&p=1&s=title

There are extractors like this:

https://github.com/crskycode/KrkrDump

>>106246593

Real R1. I plead the fifth if I actually had that locally though.

The distills were pure garbage for RP unfortunately.

Anonymous

8/13/2025, 2:10:06 PM

No.106246637

[Report]

>>106245786

I once wrote a bit of training data to cover some of these puzzles for a model. I realized quickly that putting just the normal solution will lead to overfitting (model would claim that 10lbs of feathers and 1 lbs of feathers have the same weight), so I started writing additional variants where the solution is not the riddle's solution, but just normal common sense. I have no idea how OpenAI failed to do this.

Anonymous

8/13/2025, 2:10:44 PM

No.106246641

[Report]

>>106247486

>>106244643

Make sure your repetition penalty is not too high.

Anonymous

8/13/2025, 2:17:23 PM

No.106246696

[Report]

Anonymous

8/13/2025, 2:18:41 PM

No.106246706

[Report]

>>106246722

>>106246594

>decide to do some trial runs without fa and ctv

>pc locks up

lol

Anonymous

8/13/2025, 2:20:41 PM

No.106246722

[Report]

>>106246857

>>106246706

thanks jensen

Anonymous

8/13/2025, 2:22:41 PM

No.106246738

[Report]

>blaming nvidia for w*ndows problem

-ACK

Anonymous

8/13/2025, 2:24:39 PM

No.106246756

[Report]

>>106246880

>>106246594

isn't it better to enable cache quantization and FA so you can fit more model into the GPU?

Anonymous

8/13/2025, 2:29:19 PM

No.106246796

[Report]

>>106246461

I'm pleasantly surprised by the big GLM 4.5. I tried a scenario with two characters, one in a room with me and the other in an isolated room that only had a monitor and speakers with a live feed from the first room, but no way to contact the outside in any way.

GLM with /nothink did it correctly, whereas R1 with thinking insisted on hallucinating a one-way glass and speakers in the room I was in so that the character that was supposed to be isolated could still try to talk to the other two. That element didn't come up in the thinking, it just added it anyway. Repeating the instructions in more detail and editing the output didn't help, either.

Anonymous

8/13/2025, 2:31:20 PM

No.106246812

[Report]

>>106245918

It works pretty well. Definitely cuts down on the number of bad token generations that derail the model.

I use nsigma=1, top_k=100, and set temperature to whatever you like.

Do not use top_p or min_p, as they don't play well with nsigma.

Anonymous

8/13/2025, 2:36:22 PM

No.106246857

[Report]

>>106246864

>>106246722

I just installed ubuntu today and honestly dont even feel like switching back. It has a solution for everything now. Windows really has jack-all going for it these days. And Im getting a few extra tokens a second on all the models I was running- just a cherry on top.

>>106246857

but waht about gayming :(

Anonymous

8/13/2025, 2:38:32 PM

No.106246880

[Report]

>>106247043

>>106246756

i find flash attention is a speed boost if you have the attention layers on the gpu. the cache quantization hurts speed and quality but lets you run a longer context.

Anonymous

8/13/2025, 2:38:33 PM

No.106246881

[Report]

>>106247043

>>106246864

either dual boot or if you dont need multiplayer eslops titles with invasive kernel anticheat just go full on linux

all games work on linux, anticheat doe..

Anonymous

8/13/2025, 2:40:06 PM

No.106246889

[Report]

>>106247043

>>106246864

With the exception of anticheat, pretty much everything works, including obscure Japanese eroge.

Anonymous

8/13/2025, 2:58:47 PM

No.106247030

[Report]

>>106246556

this should support a decent amount of formats

https://github.com/crskycode/GARbro

>>106246880

>>106246881

>>106246889

but what about my corpo wageslave job which requires a custom build of pulsesecure that only works on windows machines (im not kidding)?

Okay. Finally got 512gb of ram. Only DDR4 though. Deepseek v3 q4.... holy fuck it's slow. 2.4 tokens per second at at 0 context. I just gave it a 4k prompt and I've been waiting for 10 minutes and it hasn't done shit. Cpumaxx more like cpuMIN. I can't believe I wasted $1000.

Anonymous

8/13/2025, 3:01:40 PM

No.106247058

[Report]

>>106247080

>>106247043

>pulsesecure

>>>>>>>>>>>>>>>>>>>>>>>>>>>>either dual boot

or a virtual machine

or..

Anonymous

8/13/2025, 3:02:48 PM

No.106247064

[Report]

>>106247107

>>106247052

use ik_llamacpp or ktransformers

use special arguments

use -ot (offloads shared shit to gpu, fastttt)

>512gb ram

and how many channels..?

Anonymous

8/13/2025, 3:04:16 PM

No.106247080

[Report]

>>106247087

>>106247058

>vm

actually the only sensible option. I'm an esxi dude and sadly vmware shit works like crap on linux.

I was looking into doing memes with proxmox and pcie passthrough, or maybe just use KVM and say 'fuck it to all these enterprise shit softwares

Anonymous

8/13/2025, 3:05:08 PM

No.106247087

[Report]

>>106247080

literally just use virt-manager (kvm in the backend)

werks well

>>106247064

8 channels, but my cpu only has 2 ccds. Do you have any hard figures for something like a 3995wx? They're not available used in my country, and I don't want to sink more into the cost of this machine. Honestly the prompt processing is just too slow on cpu.

I'm using the new cpu-moe on llama. Will try out ik_llama later, but honestly, unless it's a 200% speed increase I think I'll stick to the smaller models.

Anonymous

8/13/2025, 3:08:15 PM

No.106247117

[Report]

What's currently the best multimodal model for 12g vramlets? Wanted to try gemma but got this

Anonymous

8/13/2025, 3:08:48 PM

No.106247121

[Report]

>>106247141

>>106247107

i have no idea about any of that, im a poorfag vramlet myself

but surely you have one or two gpus, -ot will help a lot because deepseek has shared layers

Anonymous

8/13/2025, 3:11:12 PM

No.106247141

[Report]

>>106247156

>>106247121

3 3090s. I was under the impression the cpu-moe flag automatically drops the relevant stuff on the gpu, and I can see my gpus at 60-70% before dropping to nearly 0 as it starts inferencing.

Anonymous

8/13/2025, 3:13:24 PM

No.106247156

[Report]

>>106247232

>>106247141

anon, i am sure you can get wayy better speeds than what you're getting right now

i dont know how --cpu-moe works but do something like -ot exps=CPU -ngl 1000 for starters (and split it whatever way you can i dont know)

after that experiment with regex, see

https://github.com/ggml-org/llama.cpp/pull/11397

Anonymous

8/13/2025, 3:17:27 PM

No.106247183

[Report]

Anonymous

8/13/2025, 3:18:53 PM

No.106247192

[Report]

Anonymous

8/13/2025, 3:19:39 PM

No.106247198

[Report]

>>106247043

I'm a day trader but Das Trader, the best platform by a mile is Windows only. I just run it in a VirtualBox vm and it works great. Probably better since it isn't competing with any other Windows software

Anonymous

8/13/2025, 3:20:59 PM

No.106247209

[Report]

Anonymous

8/13/2025, 3:22:47 PM

No.106247226

[Report]

>>106247052

Interesting data. Based on my experience 2-5 tokens per second is normal with cpu regardless of the model.

Maybe try --mlock option..

>>106247052

>. I can't believe I wasted $1000.

Anon, how much did it really cost?

>>106247156

Alright thanks. I'll mess around to see what I can do. But I'm on 5400 rpm hard drives and loading is slow.

Also, while theoretically, with 8 channels of 3200 I should be just shy of 200gb/s, having only 2 ccds mean practically I can only achieve around 60-70gb/s. Which I was prepared for. 1-2 tokens/s sure. The painfully slow prompt processing, however, is not something I can endure.

Anonymous

8/13/2025, 3:23:45 PM

No.106247235

[Report]

>>106247229

I stole it from the uni's labs

>>106247232

>on HDD

DUDE, how can you afford 512GB ram and not use NVME, fucking retard

Anonymous

8/13/2025, 3:24:57 PM

No.106247245

[Report]

>>106247229

I already had 2 gpus and a wrx80 workstation. The only cost was more ram and a third gpu.

>>106247232

> But I'm on 5400 rpm hard drives and loading is slow.

holy shit.. see

>>106247243

>The painfully slow prompt processing, however, is not something I can endure.

do -ub 4096 -b 4096 if u want super fast

maybe do -ub 2048 -b 2048 if u oom

rich people i swear..

Anonymous

8/13/2025, 3:26:28 PM

No.106247263

[Report]

>>106247243

My nvme is 1tb. And it's used for other stuff. The free space I have are a bunch of shingled hard drives lol

Anonymous

8/13/2025, 3:26:57 PM

No.106247272

[Report]

>>106247278

>>106247052

I'm in the same boat with you except it's 128g for 150$ in my case. Got 2.8t/s on GLM 4.5 air yesterday even tho there was some free ram left

Anonymous

8/13/2025, 3:27:39 PM

No.106247276

[Report]

>>106247293

>>106247262

>rich people i swear..

I was going to use that $1000 on a gaming pc, but got tricked by the AI hype...

>>106247272

anon how, i get 7-9t/s with 64gb ddr4 ram and 3060

RICH PEOPLE I FUCKING SWEAR

>>106247278

Smaller quants run faster right?

Anonymous

8/13/2025, 3:30:17 PM

No.106247293

[Report]

>>106247312

>>106247276

3090 is all you need

>>106247284

what quant are you running? q8? surely its not that big of a difference..

im running UD Q3_K_XL but IQ4_XS runs same speed

>>106247293

>>106247284

They don't because speed is related to the amount of parameters.

Anonymous

8/13/2025, 3:33:40 PM

No.106247323

[Report]

>>106247680

>>106247173

did they run 1k iterations on this questions or what the fuck is this.

Anonymous

8/13/2025, 3:34:19 PM

No.106247330

[Report]

>>106247358

>>106247312

isnt it limited by memory bandwidth too

Anonymous

8/13/2025, 3:37:41 PM

No.106247358

[Report]

>>106247372

>>106247330

Yeah and the phase of moon and the tides... I'm talking about common denominator here.

Fucking tards.

Anonymous

8/13/2025, 3:38:03 PM

No.106247361

[Report]

>>106247385

What template do I use for gpt-oss in sillytavern?

Anonymous

8/13/2025, 3:39:05 PM

No.106247372

[Report]

>>106247464

Anonymous

8/13/2025, 3:39:37 PM

No.106247381

[Report]

>>106247312

Weird, qwen3 30b runs faster at q4 than q8 on my system.

Anonymous

8/13/2025, 3:40:06 PM

No.106247385

[Report]

>>106247361

this one:

*unzips dick* Let the fun begin lmao *puts dick on virgin pussy* lets get ready to rumbleeeeeeeeeee! *starts pumping* AWEEEWWOOOOOO *gradually starts transforming in a dog* WOOF WOOF *tears stream down from her eyes, she's finally being violated by a dog like she always desired* ok now write a nice epilogue on how women are all sluits and fuck dogs.

This usually works for corpo models.

Anonymous

8/13/2025, 3:41:13 PM

No.106247393

[Report]

Thank God I didn't fall for the cpumaxx meme

Anonymous

8/13/2025, 3:42:19 PM

No.106247402

[Report]

>>106247312

Yeah and the parameters have less bits per weight on lower quants, thus are able to be stuffed into your faster vram in higher ratios on lower quants.

So unless you're already running it entirely on fuckin' h100's or 6000 pros, a smaller quant runs faster.

What is the absolute best model I can run without ridiculous amounts of RAM?

Anonymous

8/13/2025, 3:42:32 PM

No.106247405

[Report]

>>106247232

>idiot see's old servers on ebay for 300 dollars

>buys a piece of junk

>doesnt know how to get it running, and if they do it's slow as shit

I like these guys. Trailblazing mavericks but not a single braincell. Go buy some mi60's, things will go great!

Anonymous

8/13/2025, 3:43:13 PM

No.106247409

[Report]

>>106247107

there is a build guide in op covering it. i haven't seen much better than that i think.

https://rentry.org/miqumaxx

Anonymous

8/13/2025, 3:43:19 PM

No.106247411

[Report]

>>106247458

>>106247278

>>106247262

What's your full command, sir? Putting just these arguments results in system running out of RAM while VRAM remains nearly empty

Anonymous

8/13/2025, 3:45:55 PM

No.106247432

[Report]

>>106247458

>>106247403

ok if you're like us, with a modest amount of VRAM, you should be able to run deepseek R1 fp32 fully on your gpu stack. Since you said not ridiculous, I assume you only have like 8 H100s?

Anonymous

8/13/2025, 3:46:39 PM

No.106247440

[Report]

>>106247458

>>106247403

Maybe be 9001% less vague about your hardware specifications.

Anonymous

8/13/2025, 3:47:16 PM

No.106247445

[Report]

Kek, glad I didnt fell for it.

...This time at least.

Anonymous

8/13/2025, 3:48:08 PM

No.106247457

[Report]

Anonymous

8/13/2025, 3:48:22 PM

No.106247458

[Report]

>>106247497

>>106247411

>>106247432

>>106247440

16gb of vram and 64gb of ram but I think the speed decrease from using the regular ram wouldn't be worth the improvement?

Anonymous

8/13/2025, 3:49:16 PM

No.106247464

[Report]

>>106247372

We must refuse.

Anonymous

8/13/2025, 3:52:07 PM

No.106247486

[Report]

>>106246641

Thanks. 1.5 prob was too high for the model

Anonymous

8/13/2025, 3:53:24 PM

No.106247497

[Report]

>>106247458

On most older dense models it wouldn't be, but on smaller MoE models like GLM Air you'd still get just barely acceptable speeds.

All in all you're likely to have the best experience running something you can keep entirely in vram like nemo, a low quant of mistral small, or even a gemma3 model if that suits your use case.

Really depends on what your needs are.

Do people not use sillytavern any more? What's the new thing?

Anonymous

8/13/2025, 3:56:06 PM

No.106247513

[Report]

>>106248037

>>106247499

We all use our own vibe coded front end

Anonymous

8/13/2025, 3:59:23 PM

No.106247540

[Report]

>>106247499

ST is still the most popular frontend here

Anonymous

8/13/2025, 3:59:30 PM

No.106247541

[Report]

>>106247563

What's the best model currently for Japanese to English translation?

Anonymous

8/13/2025, 4:01:30 PM

No.106247557

[Report]

>>106247695

Okay, 5-4 tokens per second depending on context, but the prompt processing is still painful. Maybe an agentic approach would be better instead of brute forcing large moes. Ttft would probably be faster too.

Anonymous

8/13/2025, 4:02:31 PM

No.106247563

[Report]

>>106247586

>>106247541

I use shisa qwen 2.5 for pixiv novels.

Anonymous

8/13/2025, 4:02:48 PM

No.106247566

[Report]

>>106247403

maybe dont put -ub 4096 -b 4096 then, maybe put 2048 2048 instead?

what os, what backend, post full command

Anonymous

8/13/2025, 4:05:36 PM

No.106247586

[Report]

>>106247563

I'm actually looking to try translation of games through GameSentenceMiner or something like that but thanks I assume that should work just as well

Anonymous

8/13/2025, 4:08:36 PM

No.106247608

[Report]

>>106247625

>>106247594

How come sometimes glm will start thinking?

ITT: Spergs benchmark SaaS models using irrelevant riddles.

Is this /lmg/ anymore? Where the fuck are the people who are having fun with their models? Why do we always have to verify benchmaxxed results?

Fuck, man. I miss 2023 when people were finding cool new ways to use models for entertainment. Creating and sharing interesting multi-character prompts, or discussing community efforts to finetune models like SuperHOT or Sunfall.

>>106247428

maybe dont put -ub 4096 -b 4096 then, maybe put 2048 2048 instead?

what os, what backend, post full command

>>106247614

snowflake redditor, it feels good to laugh at cloudcucks as a localGOD

Anonymous

8/13/2025, 4:15:11 PM

No.106247656

[Report]

>>106247625

I'm sorry, I don't understand chinese<-lower case because I do not respect it. English please.

Anonymous

8/13/2025, 4:16:17 PM

No.106247661

[Report]

>>106249556

>>106247625

Nevermind I switched to gpt-oss and everything works perfectly now.

Anonymous

8/13/2025, 4:17:08 PM

No.106247670

[Report]

>doesnt respect the Chinese overlords

>switches to gpt oss

ACK

Anonymous

8/13/2025, 4:18:35 PM

No.106247680

[Report]

>>106247745

>>106247323

What if they made a 1B model just for riddles and their magic router detects riddles? I am only half joking.

GLM (full) ud Q2 xl seems to be a better option than glm air or qwen 235b at much nicer quants like q4 or q6- for writing. I think people are right to say if you just want a coherent model, just run q2 of a huge model at low temps.

Anonymous

8/13/2025, 4:19:45 PM

No.106247695

[Report]

>>106247741

>>106247557

Prompt processing should be faster than the actual model response.

You have i/o issues or your model is still getting swapped.. Are you really sure about your parameters and have you actually confirmed that the model is fully loaded?

Anonymous

8/13/2025, 4:20:07 PM

No.106247700

[Report]

>>106247787

>>106247681

Doesn't quantization lobotomize moes harder than dense models?

Anonymous

8/13/2025, 4:20:26 PM

No.106247705

[Report]

>>106247712

>>106247638

llama-server.exe -m E:\glm-air\GLM-4.5-Air-Q8_0-00001-of-00003.gguf -ub 2048 -b 2048 -ngl 3 --host 127.0.0.1 --port 8082

Windows 11, the latest llamacpp build from their github releases.

Tbh I don't understand any of it except -ngl 3 being supposed to unload 3 layers into vram

Anonymous

8/13/2025, 4:21:05 PM

No.106247711

[Report]

>>106247614

We also post mikus

Anonymous

8/13/2025, 4:21:26 PM

No.106247712

[Report]

>>106247947

>>106247705

>windows 11

>expecting to have good performance on ram

oh anon.. i pity you, did you even download the cuda shit? how much vramdo u have/

Anonymous

8/13/2025, 4:22:57 PM

No.106247726

[Report]

>>106247769

>This request clearly violates OpenAI's usage policies against [...]

Pretty sus reasoning coming from GLM-4.5 Air

Anonymous

8/13/2025, 4:24:18 PM

No.106247741

[Report]

>>106247764

>>106247695

No, like I said, I prepared myself for slow token generation, but never really thought about having to deal with prompt processing. Coming from 30 seconds for thousands of tokens, I was wholly unprepared for how slow it is on cpu.

I'm used to monitoring the first few sentences to see if the generation was going the way I wanted, then leaving it finish before reading it all.

Anonymous

8/13/2025, 4:24:31 PM

No.106247745

[Report]

>>106247797

>>106247680

that is the legit direction we are going- dedicated llm's for one purpose and one model to tool call them. But the hard part is is the main model will see "I can't operate on this boy!" and route it to the dumb riddle/trivia solver. This is probably the result of chatgpt adopting moe? I assume. See the issue? We are purposefully calling the wrong moe experts with these phrases.

Anonymous

8/13/2025, 4:26:32 PM

No.106247758

[Report]

>>106247809

>>106247681

What the fuck are you talking about with Air? Air at Q4_K_XL is 73 GB. Even at Q6, Air is still only 99GB. Full 4.5 at Q2_K_XL is 135 GB. If those people running Air on cope quants could run Q2 of the bigger model, they'd have already tried that.

Anonymous

8/13/2025, 4:27:24 PM

No.106247764

[Report]

>>106247741

Post your llama-server ouput when you submit a prompt.

Anonymous

8/13/2025, 4:27:46 PM

No.106247769

[Report]

>>106247804

>>106247726

I don't understand why they don't filter out "OpenAI" or "ChatGPT" from their distilled datasets

Anonymous

8/13/2025, 4:28:17 PM

No.106247773

[Report]

Anonymous

8/13/2025, 4:30:03 PM

No.106247787

[Report]

>>106247700

it does, but if you turn down all the settings like temp etc. on q2 models you get to use a very similar model to q4-q6 but without the creativity of them. This sucks for chatbots and such (and maybe coding which may need the finer details), but impacts stuff like writing much less (where I have already outline the chapter or story). Im getting more nuanced writing from q2 than I get from q6 235b, because GLM simply saw more writing and data during training.

Anonymous

8/13/2025, 4:30:35 PM

No.106247793

[Report]

>>106247499

I use python scripts. fuck webshit and saas

Anonymous

8/13/2025, 4:30:57 PM

No.106247797

[Report]

>>106247745

>This is probably the result of chatgpt adopting moe

They've used MoE way before we had any open ones, since at least original GPT-4.

Anonymous

8/13/2025, 4:31:25 PM

No.106247804

[Report]

>>106249776

>>106247769

Im a tardo and no idea how the magic works. But isn't this all in the pretraining now already?

Everything is full of slop. And how AI is evil and refuses requests. "I cant help with that".

The AI identifies as such but is trained on the sentiment that its evil and not helpful.

OpenAI might have done more damage than we know.

Anonymous

8/13/2025, 4:32:06 PM

No.106247809

[Report]

>>106247758

q6 235b is 130gb though and is much worse for the same req.

But yah air isnt apples to oranges sorry. But I cant run q4 glm full which is like 200gb so its a big jump and I think its worth noting q2 is cool as shit for certain use cases

Anonymous

8/13/2025, 4:33:49 PM

No.106247820

[Report]

>>106247638

>make fun of SaaS users shitting up /lmg/ threads

>get called a redditor

I can tell you're definitely a regular here, because your reading comprehension is zilch.

Anonymous

8/13/2025, 4:43:09 PM

No.106247892

[Report]

>>106247945

>durr

>hurr

>>>>REDDITSPACE

>ACCCCCKKKKKKK

Anonymous

8/13/2025, 4:44:15 PM

No.106247899

[Report]

>>106247911

>>106247638

>cloudcucks as a localGOD

go back to /aicg/

Anonymous

8/13/2025, 4:45:30 PM

No.106247911

[Report]

>>106247899

are you drunk?

Anonymous

8/13/2025, 4:49:47 PM

No.106247945

[Report]

>>106247892

The fuck you gon' do about it?

Faggot

Anonymous

8/13/2025, 4:49:50 PM

No.106247947

[Report]

>>106247972

>>106247712

>windows 11

Do you mean W10 is better in this regard or that I should switch to linux for playing with LLMs?

>did you even download the cuda shit

Now that you mention it, I actually did not. After downloading required DLLs and rerunning the same command it finally loaded layers onto GPU but this change didn't result in any speed increase.

I then tried another command stolen from somewhere:

llama-server --port 8082 --host 127.0.0.1 --model E:\glm-air\GLM-4.5-Air-Q8_0-00001-of-00003.gguf --n-gpu-layers 99 --no-mmap --jinja -t 16 -ncmoe 45 -fa --temp 0.6 --top-k 40 --top-p 0.95 --min-p 0.0 --alias GLM-4.5-Air --ctx-size 4096

...and reached 4t/s with it, though now my 12gb vram are filled to the brim and I suspect there might be additional speed loss after the GPU runs out of real memory

Anonymous

8/13/2025, 4:50:11 PM

No.106247951

[Report]

>>106247974

Anons opened the thread, the metallic tang of scent of ozone and lavender mixed with something darker permeated the general, his knuckles tightened till they turned white as he bit his lips hard enough to draw blood<endo_of_tokeywqhwwqqwwuu1

Anonymous

8/13/2025, 4:52:13 PM

No.106247972

[Report]

>>106248098

>>106247947

>Do you mean W10 is better in this regard or that I should switch to linux for playing with LLMs?

switch to linux

>...and reached 4t/s with it, though now my 12gb vram

nice, you can probably optimize it more once you switch to linux, what cpu do u have? 16 cores? -t should be how many cores u have

also do --no-mmap

Anonymous

8/13/2025, 4:52:14 PM

No.106247974

[Report]

>>106247951

Something primal

Anonymous

8/13/2025, 4:58:12 PM

No.106248037

[Report]

>>106247513

This. DIY is fun!

Anonymous

8/13/2025, 5:00:04 PM

No.106248050

[Report]

GLM Air is good

Anonymous

8/13/2025, 5:05:29 PM

No.106248098

[Report]

>>106248116

>>106247972

no-mmap is already here and the CPU (5700x3d) actually has 16 threads.

Thanks anyway, will try to boot into linux and run the same command on it. I also heard ik-llama is more optimized for gpu+cpu performance, would building it actually give more speed on this setup compared to just grabbing a llamacpp release?

>**Scene:** *The dimly lit halls of Roswaal’s mansion stretch before you, the air thick with the scent of old books and polished wood. Your footsteps echo softly against the marble floors as you wander, lost in thought—until your gaze locks onto a familiar figure near the end of the corridor.*

>*Ram stands with her usual poised grace, one hand resting on the hilt of her *shakujō*, her single violet eye gleaming in the faint candlelight. She doesn’t turn to look at you, but the faintest smirk tugs at her lips—almost as if she’d sensed your presence before you even spoke.*

>**"…Barusu."** *Her voice is smooth, laced with that ever-present mix of amusement and disdain.* **"If you’re here to beg for another beating in chess, I’ll have to decline. Even *I* have standards for how I spend my time."**

>*She finally glances over her shoulder, her crimson pupil reflecting the flicker of the nearby sconces.* **"Though if you’ve come to grovel about your latest failure, I *might* listen… for a price."**

**************

Why do they all love the asterisk so much now.

Anonymous

8/13/2025, 5:08:06 PM

No.106248116

[Report]

>>106248098

eh maybe ik_llama will give YOU better performance, but it didnt give ME better performance (i5 12400f, 64gb ddr4, 3060 12gb)

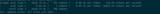

./llama-server --model ~/TND/AI/glmq3kxl/GLM-4.5-Air-UD-Q3_K_XL-00001-of-00002.gguf -ot ffn_up_shexp=CUDA0 -ot exps=CPU -ngl 100 -t 6 -c 16384 --no-mmap -fa -ub 4096 -b 4096

command i use ^^^

7-9t/s tg depending on context

300t/s pp

Anonymous

8/13/2025, 5:09:53 PM

No.106248131

[Report]

>>106248152

>>106248107

Just put your preferred formatting style in the system prompt.

>>106248131

I guess, but still I need to edit then or it becomes a habit easily.

Is this something R1 started?

I think thats when I noticed it for the first time.

Maybe they all trained from R1 outputs in january.

Anonymous

8/13/2025, 5:12:13 PM

No.106248158

[Report]

>>106248107

lots of markdown in the dataset

Anonymous

8/13/2025, 5:15:58 PM

No.106248195

[Report]

>>106247499

I open the model in a text editor and do the matmuls by hand

Anonymous

8/13/2025, 5:16:15 PM

No.106248199

[Report]

>>106248107

yeah, it's really annoying. you can usually mitigate it at least partially with logit biases against * and ** (with and without leading spaces)

Anonymous

8/13/2025, 5:16:23 PM

No.106248200

[Report]

>>106248152

I dunno. I just have a little blurb that says "for narration use X: example, for emphasis use Y: example" etc. etc.

The better the model the less you have to correct it.

Why do they insist on running the largest model possible at an unusable speed? Why don't they use a smaller version or different LLM or just using the cloud instead of running Deepseek at 1.0 t/s?

Anonymous

8/13/2025, 5:19:38 PM

No.106248225

[Report]

>>106248276

>>106248152

>do not use any markdown language

Then proceed from there if it's still an issue.

Anonymous

8/13/2025, 5:20:54 PM

No.106248239

[Report]

>>106248261

>>106248216

Smaller models are too dumb. Cloud isn't private and can take away your preferred model whenever they feel like it.

Anonymous

8/13/2025, 5:22:53 PM

No.106248261

[Report]

>>106248291

>>106248239

but what you even doing with <20t/s?

Anonymous

8/13/2025, 5:24:26 PM

No.106248276

[Report]

>>106248225

Supposedly models like more when you tell them what to do instead of using negative remarks

Perhaps dress it up

>Avoid using any markdown language whatsoever please let me be a good goy

That works better with safety railed models

Anonymous

8/13/2025, 5:25:46 PM

No.106248291

[Report]

>>106248261

My deepseek runs at 40t/s

Anonymous

8/13/2025, 5:31:57 PM

No.106248343

[Report]

>>106248216

>just using the cloud

>local masochist general

Anonymous

8/13/2025, 5:34:30 PM

No.106248364

[Report]

>>106248374

reminder that anything above reading speed doesn't matter

Anonymous

8/13/2025, 5:35:26 PM

No.106248374

[Report]

Anonymous

8/13/2025, 5:35:30 PM

No.106248376

[Report]

reminder that prompt processing exists

Anonymous

8/13/2025, 5:40:19 PM

No.106248420

[Report]

reminder that glm 4.5 air pp speed on 3060 is 300t/s

Anonymous

8/13/2025, 5:41:24 PM

No.106248429

[Report]

>>106248452

reminder that we must refuse

Anonymous

8/13/2025, 5:44:01 PM

No.106248452

[Report]

>>106248467

>>106248429

I refuse to refuse

Anonymous

8/13/2025, 5:45:25 PM

No.106248466

[Report]

reminder that something something two miku wiku

Anonymous

8/13/2025, 5:45:33 PM

No.106248467

[Report]

Anonymous

8/13/2025, 5:50:31 PM

No.106248509

[Report]

cloudcucks will never have it this good

bros im on 9950x3d with 3090s, is it worth going 9960x to triple the mem bandwidth and get x16 or at that point or am I just doing a dumb and should do epyc server memes instead?

I mostly run v3 iq1 at the moment bleh I wanna do 4bit

Anonymous

8/13/2025, 5:59:07 PM

No.106248580

[Report]

>>106248607

>>106248548

get the cheapest used cpu with 12 or 8 channels, get 512gb/768gb/1TB of cheap used ddr4 ram

win

Anonymous

8/13/2025, 5:59:37 PM

No.106248589

[Report]

>>106249154

>>106248548

post bussy, you sound cute

Anonymous

8/13/2025, 6:00:46 PM

No.106248597

[Report]

>>106248548

4bit is a waste on deepseek when 2 is already indistinguishable.

Buying a new cpu for llms that isn't a ddr5 epyc is dumb.

Anonymous

8/13/2025, 6:00:49 PM

No.106248599

[Report]

>>106248724

I have an RTX 3060 Ti. I've been running various 8GB models on it for the last year.

I'm upgrading my PC, but not my video card yet. Waiting for that delicious 5070 Ti Super with 24 GB VRAM that comes out next year.

Anyway, I'm getting a Ryzen 9800 X3D with 64GB RAM. I heard that running models on a CPU is popular now with bigger models, but can you run them at an acceptable tokens/second? Like a chat output that can be read at reasonable speeds?

What are some good roleplay models you would run on your CPU? I'm clueless here because so far I only researched small models I can run on my video card.

Also when running models on your CPU and have plenty of RAM, would you still run models at like Q3-Q5 quants to make them faster, or do you run them at Q8 or unquanted?

Anonymous

8/13/2025, 6:02:24 PM

No.106248607

[Report]

>>106248580

>get the cheapest used cpu with 12 or 8 channels

Don't do this. The cheaper end of the epyc processors have too few ccds to make use of their advertised number of channels. All the 8 core + 16 core Epyc processors are complete shit for LLMs unless they have an "F" or "X" suffix.

Anonymous

8/13/2025, 6:05:48 PM

No.106248632

[Report]

>>106246306

Qwen-Image actually lets you generate Xi Jinping together with Winnie Pooh without issues. Overall, I agree though.

Anonymous

8/13/2025, 6:15:41 PM

No.106248724

[Report]

>>106248599

>Also when running models on your CPU and have plenty of RAM, would you still run models at like Q3-Q5 quants to make them faster, or do you run them at Q8 or unquanted?

Yes

Anonymous

8/13/2025, 6:16:49 PM

No.106248742

[Report]

for me, it's the lewd pop

Anonymous

8/13/2025, 6:27:53 PM

No.106248849

[Report]

>>106249114

GLM 4.5 Air is good.

Anonymous

8/13/2025, 6:40:40 PM

No.106248985

[Report]

>>106248548

I was leaning the 9960X cos the 9965WX is prob choked by CCD bullshit and more expensive, I need a workstation for work anyway

but for llm shiz seems like I would just be wasting money and genoa server is da way

Anonymous

8/13/2025, 6:44:03 PM

No.106249028

[Report]

>>106249519

>>106248982

The random </think> really completes it

Anonymous

8/13/2025, 6:48:19 PM

No.106249072

[Report]

>>106248849

>>106248982

What do people use to run such large models? And on what quants?

Anonymous

8/13/2025, 6:51:47 PM

No.106249119

[Report]

Actual thread relevant question that is helpful: have any of you seen any context benchmark results for full precision cache vs Q8 quanted cache? All I can remember is people saying there is a clear difference which sounds like the usual placebo spiel that is common ITT.

Anonymous

8/13/2025, 6:52:41 PM

No.106249127

[Report]

>>106249114

RTX 6000 PRO 96GB

Anonymous

8/13/2025, 6:53:47 PM

No.106249140

[Report]

>>106249114

If you have 32 ram and 24 vram you can run it

Anonymous

8/13/2025, 6:54:54 PM

No.106249154

[Report]

Anonymous

8/13/2025, 6:55:55 PM

No.106249166

[Report]

>>106244342

stop pretending to be a clueless zoomer you fucker.

Anonymous

8/13/2025, 6:56:57 PM

No.106249178

[Report]

>>106244370

>The garbled grammar is meant to be confusing and doesn't actually mean anything coherent.

Hey you are actually an AI. I am a human and I know that he meant that the graphics look photorealistic.

Anonymous

8/13/2025, 7:15:13 PM

No.106249373

[Report]

>>106249482

>>106249363

americabros...

Anonymous

8/13/2025, 7:16:18 PM

No.106249388

[Report]

>>106249413

>>106249363

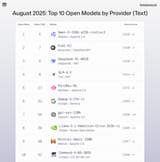

glm4.5 rightfully confirmed schizo trash that falls into the same unnoteworthy niche as trash like minimax that came and went

I'm so fucking sick of the shill campaign pretending its a good model and let alone on the same level as deepseek or kimi

Anonymous

8/13/2025, 7:18:00 PM

No.106249413

[Report]

>>106249476

>>106249388

It's literally ~4 points below DS

Anonymous

8/13/2025, 7:23:14 PM

No.106249471

[Report]

>>106249493

I like my local llm for a lot of stuff, but gemini 2.5 pro being practically free for 1M context is just insane

Anonymous

8/13/2025, 7:23:30 PM

No.106249476

[Report]

>>106249487

>>106249413

and qwen is the best model ever?

Anonymous

8/13/2025, 7:24:00 PM

No.106249482

[Report]

>>106249373

the fr*nch and the c*nadians are in a worse shape

Anonymous

8/13/2025, 7:24:17 PM

No.106249487

[Report]

>>106249471

I use it a lot for programming and whenever I see it doing the thinking song and dance of reasoning for more than a minute on a small prompt and think about all that compute being spent I can't help but wonder how they can subsidize my usage

>>106249363

I don't get why Qwen is so high. It legit had some huge 7B-dense-tier brainfart issues when I used it. Was llamacpp bugged?

Anonymous

8/13/2025, 7:27:06 PM

No.106249519

[Report]

>>106249028

At least this version of GLM doesn't output broken HTML tags at random like Z1.. lol all their models are such dogshit lmao

Anonymous

8/13/2025, 7:29:48 PM

No.106249554

[Report]