/lmg/ - Local Models General

Anonymous

8/17/2025, 6:35:59 AM

No.106287210

[Report]

►Recent Highlights from the Previous Thread:

>>106278215

--Running Qwen3-235B on 3090 with AutoRound and avoiding shared memory pitfalls:

>106278283 >106278326 >106278391 >106278545 >106278550 >106278693 >106278757 >106279781 >106279825 >106281329 >106281388 >106279868 >106279926 >106280022 >106280015 >106280136 >106280374 >106280389 >106280393 >106280443 >106280450 >106280451

--Is the golden age of local LLMs over due to stagnation and lack of open foundational models?:

>106285989 >106286015 >106286167 >106286190 >106286198 >106286025 >106286036 >106286058 >106286074 >106286109 >106286146 >106286028 >106286087 >106286243

--High RAM setups vs corporate VDI centralization for local LLM use:

>106278462 >106278506 >106278598 >106278739 >106278777 >106278823 >106278830 >106278847 >106278903 >106278913 >106278946 >106279046 >106278985 >106279019 >106279007

--Dynamic MoE inference and efficiency improvements in llama.cpp development:

>106280498 >106280521 >106280633 >106280644 >106280671 >106280560 >106280745 >106280818

--Modular small models with dynamic routing as an alternative to large monolithic models:

>106278501 >106278547 >106278625 >106278657

--Deepseek's Huawei bet delays Nvidia comeback, pushing users to alternative models:

>106285826 >106285840 >106285914 >106285933 >106286011 >106285850 >106285873 >106285902

--Grok 2 open-source announcement sparks anticipation and skepticism:

>106285546 >106285608 >106285635 >106285912

--Splitting and hosting large quantized models on Hugging Face with storage limitations:

>106284117 >106284123 >106284283 >106284780 >106284798 >106285085

--Lack of reliable LoRAs for consistent 16x16 pixel art generation:

>106284801 >106285420 >106285537 >106285647 >106285821

--Miku (free space):

>106278466 >106278492 >106279395 >106279412 >>106282523 >106282599 >106282785 >106283278 >106283542

►Recent Highlight Posts from the Previous Thread:

>>106278217

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/17/2025, 6:37:09 AM

No.106287214

[Report]

►Recent Highlights from the Previous Thread:

>>106278215

--Running Qwen3-235B on 3090 with AutoRound and avoiding shared memory pitfalls:

>106278283 >106278326 >106278391 >106278545 >106278550 >106278693 >106278757 >106279781 >106279825 >106281329 >106281388 >106279868 >106279926 >106280022 >106280015 >106280136 >106280374 >106280389 >106280393 >106280443 >106280450 >106280451

--Is the golden age of local LLMs over due to stagnation and lack of open foundational models?:

>106285989 >106286015 >106286167 >106286190 >106286198 >106286025 >106286036 >106286058 >106286074 >106286109 >106286146 >106286028 >106286087 >106286243

--High RAM setups vs corporate VDI centralization for local LLM use:

>106278462 >106278506 >106278598 >106278739 >106278777 >106278823 >106278830 >106278847 >106278903 >106278913 >106278946 >106279046 >106278985 >106279019 >106279007

--Dynamic MoE inference and efficiency improvements in llama.cpp development:

>106280498 >106280521 >106280633 >106280644 >106280671 >106280560 >106280745 >106280818

--Modular small models with dynamic routing as an alternative to large monolithic models:

>106278501 >106278547 >106278625 >106278657

--Deepseek's Huawei bet delays Nvidia comeback, pushing users to alternative models:

>106285826 >106285840 >106285914 >106285933 >106286011 >106285850 >106285873 >106285902

--Grok 2 open-source announcement sparks anticipation and skepticism:

>106285546 >106285608 >106285635 >106285912

--Splitting and hosting large quantized models on Hugging Face with storage limitations:

>106284117 >106284123 >106284283 >106284780 >106284798 >106285085

--Lack of reliable LoRAs for consistent 16x16 pixel art generation:

>106284801 >106285420 >106285537 >106285647 >106285821

--Miku (free space):

>106278466 >106278492 >106279395 >106279412 >106282523 >106282599 >106282785 >106283278 >106283542

►Recent Highlight Posts from the Previous Thread:

>>106278217

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

Anonymous

8/17/2025, 6:55:01 AM

No.106287274

[Report]

>>106287297

Mikulove

Today is the day. It's been a long wait... but local is saved. DeepSeek just changed EVERYTHING.

Anonymous

8/17/2025, 6:56:42 AM

No.106287287

[Report]

Anonymous

8/17/2025, 6:58:51 AM

No.106287297

[Report]

>>106287274

hii butifel gurly com to bangladesh i marry you and fuck you 12 foot penis u like it

Anonymous

8/17/2025, 6:59:47 AM

No.106287303

[Report]

>>106287284

Ok, grampa, get in your wheelchair

>>106287284

Me too. Alpaca was a mistake we still haven't recovered from.

Anonymous

8/17/2025, 7:05:43 AM

No.106287317

[Report]

>>106287307

I said from DAY 1 that training on cloud synthetic outputs for style changes giving benchmark boosts was doing more harm than good. But noo. No one ever listens to grumpy old anon.

I want to run GLM Air, and maybe other bigger models. I can't right now. Right now I have a 16GB gpu + 32GB RAM (~24 of that is free usually). Thinking about going up to 96GB of RAM. Would that be plenty with my current GPU to do GLM Air with large context sizes? Alternative would be getting a 128GB kit to start with, but 128GB kits are like double the price it seems. I could buy 192GB (4x48) for the same price of 128GB it looked like lol

Anonymous

8/17/2025, 7:10:49 AM

No.106287343

[Report]

>>106287369

>>106287318

You don't need a kit. Yes, you read that right: you don't need a kit to fill up RAM. Look at cheapest individual RAM sticks with fastest MT/s. Get as much RAM as possible, as much as your mobo and cpu support, once you taste a big model, you won't go back, you will want more.

Anonymous

8/17/2025, 7:11:23 AM

No.106287347

[Report]

>>106287358

Anonymous

8/17/2025, 7:13:13 AM

No.106287358

[Report]

>>106287318

show bobby cute girl i am from india

>>106287347

fuck off benchod.. she is mine

Anonymous

8/17/2025, 7:15:39 AM

No.106287369

[Report]

>>106287397

>>106287343

You trolling me mate? I heard too that once you get to like 128GB+ you usually can't run it too high clocked or it won't be stable.

Anonymous

8/17/2025, 7:17:19 AM

No.106287379

[Report]

>>106287318

Why is Maki working at Home Depot?

Anonymous

8/17/2025, 7:20:53 AM

No.106287397

[Report]

>>106287369

Bro, I am running 768GB, bought each stick as separate and it works. They are all of the same model though. Not sure what those redditors where you heard it from were using.

are dense models doomed to be extrermely slow the moment you offload just one layer to the cpu

Anonymous

8/17/2025, 7:35:41 AM

No.106287459

[Report]

>>106287455

Yes. dense models are obsolete

Anonymous

8/17/2025, 7:38:03 AM

No.106287467

[Report]

>>106287494

>>106287455

All models get much slower once you touch the cpu. MoEs are just so fat that you'll never get to experience their speed on vram alone

Anonymous

8/17/2025, 7:42:28 AM

No.106287483

[Report]

Being a vramlet with only 24GB vram, is Rocinante still the best for cooming?

Anonymous

8/17/2025, 7:45:40 AM

No.106287494

[Report]

>>106287501

>>106287467

Overrated. I've run 235B entirely in VRAM and only got 15 t/s.

Anonymous

8/17/2025, 7:46:39 AM

No.106287498

[Report]

>>106287455

You can offload tensor layers specifically and the speed penalty will be much lower

Anonymous

8/17/2025, 7:47:29 AM

No.106287501

[Report]

>>106287540

>>106287494

Unquantized?

I find it hard to believe because I can run 235b at 10 t/s with only 64gb of it in vram and the rest in sysram at ~4bpw.

Anonymous

8/17/2025, 7:52:37 AM

No.106287519

[Report]

>>106287534

>>106287278

You saw it too? It's going to be exciting now that we finally get something not even cloud models do (except possibly Grok 4, unclear since we can't see behind the hood)

>>106287278

And then GPT 5 mogs it let alone GPT 6 which is too unsafe internally

Anonymous

8/17/2025, 7:54:56 AM

No.106287532

[Report]

>>106287524

How do you like them apples?

Anonymous

8/17/2025, 7:54:58 AM

No.106287534

[Report]

Anonymous

8/17/2025, 7:56:02 AM

No.106287540

[Report]

>>106287501

Q6. -cmoe gets me 10 t/s.

Anonymous

8/17/2025, 8:03:41 AM

No.106287566

[Report]

>>106287524

GPT-5 is so good it broke my entire codebase, causing me to switch back to Claude

Just lost over 150k of context because aistudio didn't save it

Anonymous

8/17/2025, 8:06:57 AM

No.106287581

[Report]

>>106287604

>>106287524

GPT 5 is so good it "optimized" unrelated code, causing my program to break. Even local GLM 4.5 wasn't that retarded, it touched only relevant parts of the code.

Anonymous

8/17/2025, 8:08:05 AM

No.106287589

[Report]

>>106287572

LOOOOL

GGEEEEEEEGGGG

HAHAHA

XDDDDD

Anonymous

8/17/2025, 8:13:09 AM

No.106287604

[Report]

>>106287581

They want to sell paid accounts. This is why the normal tier loops back and forth even for simple queries- jewish tricks. It's just like your prompting ability has suddenly degraded.

Anonymous

8/17/2025, 8:14:10 AM

No.106287608

[Report]

>>106288367

>>106287572

It could be in your browser's cache still.

whens the next breakthrough happening? I want to run 500B-1T params models on my shitty PC

Anonymous

8/17/2025, 8:18:55 AM

No.106287625

[Report]

What are my best options for ocr (japanese)? I've already tried gemma 3 12B, and wanted to try glm 4.1 v thinking but llama.cpp doesn't support it. Any other models I should look at?

Also does vLLM work well with cpu only inference? they supposedly support glm 4.1 vision but I dont want to deal with wsl only for it to not work on cpu

Anonymous

8/17/2025, 8:27:57 AM

No.106287668

[Report]

>>106287307

What will be the next era? 3 months of nothing again? Or R2 starts something new?

Anonymous

8/17/2025, 8:30:30 AM

No.106287683

[Report]

>>106287612

March 2026 :)

Anonymous

8/17/2025, 8:32:29 AM

No.106287691

[Report]

>>106287524

I've still got to wonder what the fuck Sam was high on that week. OSS and GPT-5 were shittier than anyone could have ever imagined, and everybody is still laughing at them as they lick their wounds and bring 4-fucking-o back

I can pretty safely assume Sam is talking out of his ass or is so horrifically schizo that he doesn't understand how little value his model has

Qwen coder 480b at q8 is the best coding model. Prove me wrong

Anonymous

8/17/2025, 8:33:51 AM

No.106287705

[Report]

>>106287735

>>106287666

Are you happy with G3 12B? I tested Mistral 24b and it claims to have ability for so and so language, but it clearly doesn't handle Finnish language. Even basic forms are broken. It does recognize words. Maybe it was a context problem too because all what I do is in English.

Maybe Japanese seems to be easy to translate because of their system is somewhat different from latin based languages, but I wouldn't trust any small llm. If you are proficient then it's easy to verify of course. If not..

>>106287666

You want to use external text recognition -> ascii prompt.

Tesseract.

If you think that some bs local model is going to read an image for you should go back to school.

Anonymous

8/17/2025, 8:37:17 AM

No.106287728

[Report]

>>106287693

>480b at q8

I can't run that so i'll never know

Anonymous

8/17/2025, 8:37:59 AM

No.106287733

[Report]

>>106287693

I can't and I won't.

Also it's very comforting to have a really good open source coding model.

Anonymous

8/17/2025, 8:38:17 AM

No.106287735

[Report]

>>106287750

>>106287705

>Are you happy with G3 12B?

kinda, for legible printed-like text works well, for non-trivial images it has some hiccups but gets most of it correct

Anonymous

8/17/2025, 8:39:04 AM

No.106287741

[Report]

>>106287693

I feel like Qwen 235B 2507 Reasoner is better for some things

Anonymous

8/17/2025, 8:39:26 AM

No.106287744

[Report]

>>106287693

Thanks to free request and more people joining in, it will only keep getting better.

Anonymous

8/17/2025, 8:40:36 AM

No.106287750

[Report]

>>106287735

So you are not happy.

Anonymous

8/17/2025, 8:41:58 AM

No.106287755

[Report]

So was the unslopped openai 20B model a nothingburger?

>>106287727

>tesseract

You could've at least suggested PaddleOCR, tesseract sucks ass. I started exploring llms for ocr only because gemini 2.5 flash worked so much better than anything else when text wasn't in ideal printed form. Other ocr that got anywhere close to PaddleOCR was MangaOCR but still sucked ass and wasnt anywhere near vllms performance

The soon to be released deepseek model understood me on a level deeper than any chatbot. I used the same 100% private "benchmark" that consists of an introduction of myself, that I use with all the models. It picked up on things I didn't explicitly state or even imply, which honestly kinda freaked me out at fiirst, but looking back I can kind of see the dots it connected to figure it out. Still impressive as hell. I'll be shocked if it doesn't top EQbench by a large margin.

Anonymous

8/17/2025, 8:46:25 AM

No.106287779

[Report]

>>106287783

>>106287769

Goddamnit Drummer nobody cares about your DeepSeek finetunes

Anonymous

8/17/2025, 8:46:55 AM

No.106287783

[Report]

>>106287779

let thedrummer(c) cook

Anonymous

8/17/2025, 8:49:37 AM

No.106287801

[Report]

>>106287769

Okay, but how does it suck dick? Is it geminislop or gptslop? How much trivia does it know? How stubborn is it?

Anonymous

8/17/2025, 8:49:59 AM

No.106287803

[Report]

>>106287809

>>106287757

I tried to help. Why didn't (You) suggest anything but instead you are whining like a passive aggressive bitch instead?

Anonymous

8/17/2025, 8:51:00 AM

No.106287809

[Report]

>>106287803

Sorry typing on phone and no glasses: problematic

Anonymous

8/17/2025, 8:52:55 AM

No.106287821

[Report]

>>106287849

>>106287757

You do know that these are subset of models just like ERSGAN etc is to image scaling? Tesseract is a software, not a model.

Anonymous

8/17/2025, 8:56:29 AM

No.106287840

[Report]

>>106287864

We are all shitting on sam for not releasing old models, but what about google? Why don't they release old PALM 540B and other historical llms?

Anonymous

8/17/2025, 8:56:52 AM

No.106287844

[Report]

What's the latest news on bitnet? Last I heard things were heating up there. It's only a matter of time before we get a big model using it.

Anonymous

8/17/2025, 8:57:20 AM

No.106287849

[Report]

>>106287821

I do know that, it's just the only japanese model I found for it sucked ass (maybe something changed since last time I checked it out), and just assumed it was due to architecture required by tesseract models or something like that, my bad

I hate that it's so hard to make LLMs come up with good stories. It typically fills your outline with fluff, it never gives you a story.

For example, I'll say write a story about someone doing X, and it will spend 10 paragraphs using verbose and colorful language, only to finally land at someone doing X. There is 0 creativity in between.

How am I supposed to prompt?

Currently, I'm prompting something like, "explore <insert idea or concept>". I use the word "explore" in hopes that it will explore the subject and introduce different angles. I then write "Chapter 1: " and let it do the rest, but the result is still terrible.

Anonymous

8/17/2025, 8:59:22 AM

No.106287864

[Report]

>>106287883

>>106287840

uhhh that would not be very safe would it, what if it sucked your cock?

Anonymous

8/17/2025, 9:02:16 AM

No.106287883

[Report]

>>106287864

Or worse: roleplayed without slop. We can't have that in safe and ethical society.

Anonymous

8/17/2025, 9:06:38 AM

No.106287909

[Report]

>>106287852

read basic of story telling and give all that as instructions

Anonymous

8/17/2025, 9:12:30 AM

No.106287938

[Report]

>>106287852

Don't use instruct mode, use mikupad to reduce it's bias towards corporate agent behavior. Add tags, warnings, autrhor's note, summary, and have it continue it. Though many instruct models simply can't write and go full assistant even without a template. r1 and v3 do fine if that's of any help

Anonymous

8/17/2025, 9:12:32 AM

No.106287939

[Report]

>>106288164

>>106287769

What is the parameter count? Can I run it on my potatoe?

>>106287307

lmao i remember how back when the boom started i thought that by 2026 we would be locally running AGI on a phone

Anonymous

8/17/2025, 9:59:54 AM

No.106288162

[Report]

>>106288349

>>106288156

I don't know why people act like this is some epic counterargument. Modern women are literally like this

Anonymous

8/17/2025, 10:00:28 AM

No.106288164

[Report]

>>106287939

about tree-fiddy

Anonymous

8/17/2025, 10:06:28 AM

No.106288205

[Report]

Anonymous

8/17/2025, 10:06:54 AM

No.106288210

[Report]

>>106288227

>>106287769

>le deeper level

You pretty much disclosed you're a mighty retard.

Anonymous

8/17/2025, 10:10:36 AM

No.106288227

[Report]

>>106288210

Presumably he's a time traveler thus might be pretending to be retarded.

Anonymous

8/17/2025, 10:24:50 AM

No.106288317

[Report]

>>106286041

Only V3 was SDXL. The new models aren't.

Anonymous

8/17/2025, 10:28:36 AM

No.106288349

[Report]

>>106288487

>>106288162

anon how would you feel if you dident have breakfast this morning ?

Anonymous

8/17/2025, 10:30:35 AM

No.106288367

[Report]

>>106287608

These days all content on pages is dynamically generated by JavaScript and these dynamic requests don't get cached. "It's probably in the cache" has outlived its usefulness 10-15 years ago. I miss those days. You could save a website and it would actually save the content too, rather than just an empty husk.

>>106287727

>>106287757

>paddleOCR

dead project. And yes, tesseract sucks ass. So far, gpt4o and gpt5 work pretty damn well for online stuff, local, I don't think it gets better than mangaOCR. It's -very- good at what it does, if you're looking for extremely hard to read stuff, online models and/or google lens can work.

There's also this weird program that I thought was an api frontend for google lens (it wasn't) called text sniper that does a damn good job for most languages. I think it might be tesseract based but I can't tell for sure. what's your use case?

Anonymous

8/17/2025, 10:53:15 AM

No.106288487

[Report]

>>106288349

I had breakfast this morning retard

Anonymous

8/17/2025, 10:55:45 AM

No.106288500

[Report]

>>106287307

whatever you say, coomer

gpt-oss is the best local model

>mfw it's 3am and I'm siting here arguing with my llm about a problem but we're making progress

Anonymous

8/17/2025, 11:16:48 AM

No.106288653

[Report]

>>106288641

Her unbelievably hairy pussy...

>>106288442

>dead project

damn, in my tests it was usually better than kha-white/manga-ocr (possibly due to built in image preprocessing it has idk)

My usecase is "normal" text + slightly stylized text with non-zero background noise

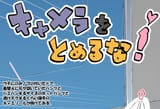

picrel isnt the best example, but shows my point.

for online stuff I currently use gemini 2.5 flash which works better than paddle/mangaocr and I get free 250 requests daily via api + undisclosed amount of requests via aistudio

Anonymous

8/17/2025, 11:19:23 AM

No.106288672

[Report]

>>106288641

hi pretty girl pleast shot vergana

Anonymous

8/17/2025, 11:20:35 AM

No.106288687

[Report]

>>106288930

>>106288442

I think problem is that moon runes are detailed but latin alphabet has only 26 of them and they are based on type which could be imprintend using a chisel and hammer (Trajanus).

Resolution doesn't matter that much but Chinese and Kanji are way more complex to recognise.

What's the model on the left? I can't find anything about it. It seems surprisingly smart.

Using a large model (GLM 4.5 Air) for the first time. I noticed a problem that didn't happen with smaller models that fit into VRAM.

I started out with 6-8 tokens/second, but now after a couple of hours I'm down to 2 tokens/second.

I'm using a 32k context size. Is this slowdown normal as more context is filled, or am I running out of memory?

Anonymous

8/17/2025, 11:22:56 AM

No.106288704

[Report]

>>106288641

Thank you mr. safety cat to preventing me from seeing the man ass!

Anonymous

8/17/2025, 11:23:43 AM

No.106288714

[Report]

>>106288695

>Is this slowdown normal as more context is filled

Yes. You're dragging more and more context through each layer, so it takes longer.

It was happening with the models in vram too, you just didn't notice, presumably because they were running much faster.

Anonymous

8/17/2025, 11:23:58 AM

No.106288717

[Report]

>>106288688

looks like another lmarena mystery model

probably gemini3 or something

Anonymous

8/17/2025, 11:26:40 AM

No.106288736

[Report]

>>106288930

>>106288442

>dead project

It had its last commit on main 5 hours ago, has nearly 6k contributors and is run by Baidu.

Anonymous

8/17/2025, 11:29:31 AM

No.106288755

[Report]

>>106288909

>>106288695

This depends. With a simple chat even longer context is cached and won't be that much of a problem. Performance shouldn't degrade that much unless you are letting the model babble on without telling it to be more concise. You can always examine your stats in ST or whatever.

You should also self limit yourself. Any discourse with model has its specific and relatively short lifespan unless you're a moron.

Anonymous

8/17/2025, 11:30:24 AM

No.106288762

[Report]

Anonymous

8/17/2025, 11:30:28 AM

No.106288763

[Report]

Anonymous

8/17/2025, 11:32:06 AM

No.106288777

[Report]

>>106288788

>>106288688

folsom? I think it's one of chinese. I really hate it though, gives me the stupidest answers, and it is not the first iteration that I hate, so likely a newcomer.

>>106288777

>spamming multiple models to optimize on lmarena

llama5?

Anonymous

8/17/2025, 11:35:56 AM

No.106288798

[Report]

>>106288657

>that artist

Good taste.

Anonymous

8/17/2025, 11:39:03 AM

No.106288812

[Report]

>>106288788

Or even worse: their commercial model. I would laugh really hard if it's the case. All those billions just to make llama 3 405b again.

Anonymous

8/17/2025, 11:53:30 AM

No.106288909

[Report]

>>106288755

It's cached for prompt processing but generation is still slower

Anonymous

8/17/2025, 11:56:16 AM

No.106288925

[Report]

>>106288897

>>106288904

Amazon sucks at llms. Theirs are dumb and soulless, perfect for corporate usage.

Anonymous

8/17/2025, 11:56:23 AM

No.106288926

[Report]

>>106288940

>>106288897

>>106288904

lmarena said many times that they do stuff to make these types of question no work, like inject random other company names and stuff.

Anonymous

8/17/2025, 11:57:22 AM

No.106288929

[Report]

>>106288942

>>106288897

>>106288904

>trusting what models tell about themselves

Im more inclined to believe time-traveler-deepseek-anon than this

If they weren't given this info in system prompt then it's straight up hallucination

>>106288657

can't be arsed to try, but your image should be perfectly readable with mangaOCR, you would have to segmentate it properly beforehand tho, since the reader order is not traditional and the styles change in between areas.

I would OCR "kyamera o" first then "tomeruna!" in two different goes. As for the lower text, I'd divide it in chunks, probably one or two lines at the same time. Manga OCR tends to shit the bed with longer texts/bigger text.

>>106288736

I know. I just seethe that paddleocr can't be run on my troonix distro. Also, the output format it has gets on my nerves.

>>106288687

true. rez is crucial with moonrunes. However, latin text has way more "fonts" and tesseract shits the bed more often than not when there are many variations.The example that anon posted before tho is perfectly fine, rez wise.

Anonymous

8/17/2025, 11:59:14 AM

No.106288940

[Report]

>>106288926

>people ask a shitty mystery model who made it

>it says it's by amazon/apple/openai

>it blows up, rumours spread that the next model by [struggling company] or [major player] is actually really shit

>billions in stock value wiped out

I can't see this go wrong

Anonymous

8/17/2025, 12:00:04 PM

No.106288942

[Report]

>>106288929

Many instruct models have at least their name baked into the weights.

Anonymous

8/17/2025, 12:01:47 PM

No.106288950

[Report]

>>106288930

*reading order

*can't run

it's too early.

Anonymous

8/17/2025, 12:02:41 PM

No.106288951

[Report]

>>106289116

Color me impressed.

I asked GLM 4.5 Air to quote the earliest thing it remembers from our chat, and it quoted verbatim flawlessly my first message 18000 tokens ago.

And it was technical as hell. I asked it to explain what the different tensors in the GLM model do, like attention and up/down FFN tensors.

I used 8 GB models before this, and they regularly misinterpreted what I told them 10 lines ago.

Anonymous

8/17/2025, 12:03:29 PM

No.106288956

[Report]

pp with 100k context is much faster now thanks to the partial swa cache

Anonymous

8/17/2025, 12:04:13 PM

No.106288960

[Report]

>>106287207 (OP)

>6GB VRAM + 32GB RAM

How over is it, doc? Is there a model I can run that'll be comparable to JLLM at least?

>>106288930

mangaocr in two passes (top and bottom line separately):

それ以上と

どころも

mangaocr in one pass:

キャンプもとおすす

paddleocr in one pass:

とめ3な

+一x+

gemma 27b:

キャンバスを とめるな!

Im not testing bottom part cuz everything that isnt tesseract deals with it well.

Anonymous

8/17/2025, 12:12:19 PM

No.106288994

[Report]

>>106289197

>>106288964

OCR with Gemma 3 was supposed to mainly work with Pan&Scan, but that never got implemented properly anywhere.

Anonymous

8/17/2025, 12:30:54 PM

No.106289096

[Report]

>>106288964

right, I actually got off my ass to try that. Yes, mangaOCR shits the bed on the upper text and does fine on the lower one (ウチにのみ/フの付いたドア。

着替えに元々勢いていたパンツと

バミバンするサイズのホットパンツと

逃げ子クするくらい障手の

キャミソールが掛けてある。)

My money is on the outer white surrounding the hiragana/katakana making it shit the bed.

gpt reads it just fine:

カメラをとめるな!

Anonymous

8/17/2025, 12:34:26 PM

No.106289116

[Report]

>>106288951

Another fun party trick is to tell it to put all messages so far into a markdown block with formatting for user/assistant messages and lines delimiting them.

>>106285914

periodic reminder that all "DeepSeek failed to train on Huawei" news are suspect, at least people at DS tell me not to trust insane rumors. The journalist doesn't even understand the stage of Huawei's chip development, there literally was no platform for DS to do a V3-scale training run until very recently and it's implausible that they'd have been forced to debug prototype hardware with a novel architecture just to make Xi happy. She also apparently doesn't know about V3-0324, R1-0528, Prover-V2 and other evidence that they've been continuing their normal development cycle, or about actual Ascend use by DeepSeek (for inference).

China is not that cartoonishly retarded and DeepSeek doesn't share anything with the press. I think the most likely hypothesis of the source is cope from a disgruntled Huawei employee or a competitor like Alibaba.

The next model is most likely V4 with NSA, coming out in a matter of weeks. I predict it'll have been trained on normal Nvidia GPUs, for 3+ months, and retards will claim that this is a second run after the "failure" to train "R2" on Ascends by May to "capitalize on hype". All of this is layered confusion of Western and Chinese hypemongers and journalists.

You can trust me or FT, your choice.

Anonymous

8/17/2025, 12:50:18 PM

No.106289195

[Report]

>>106289681

>>106288964

GLM-4.5V with greedy sampling:

<|begin_of_box|>キャメラをとめろ!

ウチのみノフの付けたドア。

着替えに元々穿いていたパンツと

ハミパンするサイズのホットパンツと

透けチクするくらいの薄手の

キャミソールが掛けてある。<|end_of_box|>

Not perfect but better than those at least.

Anonymous

8/17/2025, 12:50:26 PM

No.106289197

[Report]

>>106290450

>>106288994

someone implement this tx

Anonymous

8/17/2025, 12:51:18 PM

No.106289202

[Report]

>>106289170

>I think the most likely hypothesis of the source is cope from a disgruntled Huawei employee or a competitor like Alibaba.

I'm gonna go with the default hypothesis.

Hi all, Drummer employee here

8/17/2025, 12:52:31 PM

No.106289208

[Report]

>>106289418

After Fallen Gemma being shit I wrote off Drummer's finetunes for a while, but after trying out Big-Tiger I think it's actually better than normal Gemma for RP. Slightly less stop and a lot more willing to be lewd without excessive hand-holding, and it didn't become spontaneously retarded like Fallen-Gemma had a habit of being.

native tool calling status - still broken

Anonymous

8/17/2025, 1:00:50 PM

No.106289241

[Report]

>>106289306

>>106289238

tool calling status - still a meme

next year, we'll be able to play Split Fiction with a local model

Anonymous

8/17/2025, 1:04:56 PM

No.106289265

[Report]

>>106289422

>>106289238

0 non-meme use case

Anonymous

8/17/2025, 1:06:43 PM

No.106289274

[Report]

>>106289312

reminder that llama3.3 70b is still the most coherent model for long context

https://github.com/adobe-research/NoLiMa

Anonymous

8/17/2025, 1:12:28 PM

No.106289306

[Report]

>>106289241

Cursor says otherwise.

Hi all, Drummer employee here

8/17/2025, 1:13:05 PM

No.106289312

[Report]

>>106289408

>>106289274

I can't run it at decent speeds so I don't care

Anonymous

8/17/2025, 1:13:48 PM

No.106289316

[Report]

>>106289257

you VILL get your pure txt-txt piece of benchmaxxed trash that cant even remember the last 50 seconds and you VILL like it

Anonymous

8/17/2025, 1:31:39 PM

No.106289408

[Report]

>>106289312

I love you Drummer. I hope you'll find a job soon.

Anonymous

8/17/2025, 1:33:15 PM

No.106289414

[Report]

>>106289257

Actually interesting target. That'd be my bar for AGI.

Anonymous

8/17/2025, 1:34:22 PM

No.106289418

[Report]

>>106289440

>>106289208

What can you even merge with Gemma 3 in the first place? I think that these community made fine tunes are more like hard baked loras with various weights, some are burned and some less burned. Every time model's brain gets lobotomized anyway.

>>106289265

coding agents like opencode, tool use can be quite useful for coding in actual real jobs

Anonymous

8/17/2025, 1:36:58 PM

No.106289434

[Report]

>>106289422

>it's useful for people with real jobs

so no one in this thread

Anonymous

8/17/2025, 1:39:02 PM

No.106289440

[Report]

>>106289504

>>106289418

fine tunes aren't merges, they could be considered similar to loras. You're shifting biases towards different tokens.

Anonymous

8/17/2025, 1:47:26 PM

No.106289491

[Report]

>>106289422

>Another deleted database in production in the making

lmao

Anonymous

8/17/2025, 1:49:45 PM

No.106289504

[Report]

>>106289515

>>106289440

Yeah it's my bro science. With image models lora weights always make any model too dumb.

Would be interesting to visualize vectors in a 3d space. All people see is some benchcucked graph which is quite abstract.

Anonymous

8/17/2025, 1:51:01 PM

No.106289515

[Report]

>>106289504

Yeah graph is a summary of these certain averages but I didn't mean this.

Anonymous

8/17/2025, 2:05:13 PM

No.106289616

[Report]

Does GLM 4.5 Air have any uncensors, remixes, merges, degenerate loras or anything? Or do people use the base model for roleplaying?

Anonymous

8/17/2025, 2:05:34 PM

No.106289621

[Report]

>>106289612

>340M

I still can't run this!

This is my first time fune-tuning a LLM.

Because I'm retarded, I will go with python scripts provided by unsloth brothers,. and at first try something small like gemma-3-270m

Related question: LoRA vs. full model tuning

Is LoRA just as valid for LLM as it is for video/image generation?

Or should I go for full model tuning?

Anonymous

8/17/2025, 2:13:53 PM

No.106289681

[Report]

>>106289730

>>106289195

that's not bad at all. what ui are you using?

Years ago i got scammed by some chink on alibaba

but yk it was actually worth it if my money went to Qwen.

Anonymous

8/17/2025, 2:22:16 PM

No.106289730

[Report]

>>106289681

A basic gradio I made with sglang.

Anonymous

8/17/2025, 2:23:01 PM

No.106289741

[Report]

>>106289870

>>106289671

try both and report back to us

Anonymous

8/17/2025, 2:23:43 PM

No.106289748

[Report]

>>106289771

>>106289729

Alibaba is just a B2C platform

Your money went to the scammer merchant

Anonymous

8/17/2025, 2:25:54 PM

No.106289763

[Report]

>>106289870

>>106289671

the training dynamics are different for the different methods, go with whatever method you plan to actually use if your going to scale up. you could try both methods and see what you like better for yourself, but if you plan to scale up I think qlora is the only real path forward.

Anonymous

8/17/2025, 2:27:02 PM

No.106289771

[Report]

>>106289748

then fuck that chink.

Anonymous

8/17/2025, 2:28:48 PM

No.106289792

[Report]

>>106289870

>>106289671

Problem is that you can't probably see any difference with such a small model.

Model tuning is always "better".

Anonymous

8/17/2025, 2:31:25 PM

No.106289815

[Report]

>>106290305

>switched text embedding to qwen 0.6b

>it steals my gpu vram 2.5 GB

I had to run it under torch cpu version

Anonymous

8/17/2025, 2:37:31 PM

No.106289870

[Report]

Anonymous

8/17/2025, 2:38:50 PM

No.106289882

[Report]

>>106289671

Experiment as much as you can and report back with the results, even big companies are just throwing shit at the wall to see what sticks.

Anonymous

8/17/2025, 2:40:02 PM

No.106289889

[Report]

>>106289928

>>106289729

Meanwhile your taxpayer dollars are being used to fund saltman's next abortion

Anonymous

8/17/2025, 2:44:31 PM

No.106289928

[Report]

>>106289889

I wish tech commies weren't bunch of troons...

i would've liked to be on that side

Anonymous

8/17/2025, 2:50:36 PM

No.106289986

[Report]

>>106290022

>>106289612

This works on comfy?

Anonymous

8/17/2025, 2:57:06 PM

No.106290034

[Report]

>>106290651

>>106290022

Yea I will try later. I'm interested with 512x, SD mode sovl

Anonymous

8/17/2025, 3:22:43 PM

No.106290251

[Report]

>>106289671

LoRAs originated for LLMs, even if everybody nowadays bakes them in.

Anonymous

8/17/2025, 3:26:13 PM

No.106290280

[Report]

>>106290382

>>106289671

No, LoRAs for LLMs create intruder dimensions within the LLM that do not exist in it originally or come to be when you do a proper finetune.

https://x.com/TheTuringPost/status/1854856668229910757

Does anyone have any success with using Gemma 3 270m as a draft model for speculative decoding of Gemma 3 27B?

It seems to crash llama.cpp

Anonymous

8/17/2025, 3:29:03 PM

No.106290305

[Report]

>>106290446

>>106289815

use the superior nomic v2

Anonymous

8/17/2025, 3:30:04 PM

No.106290316

[Report]

>>106290291

The inference slowed down for me despite both being offloaded to the gpu.

Anonymous

8/17/2025, 3:35:27 PM

No.106290378

[Report]

>>106290473

Gemma 3 270m is the best model in the 1B size range which is kind of insane.

I notice that this holds true for the other gemma models as well. Gemma 3 27B is the best model at 72B and under.

What does DeepMind do with Gemma so that it outperforms all models even 3-4x its size?

I wonder if Gemini 2.5 pro is actually also a small(er) model than Claude 4 and GPT-5

>>106290280

Ok, they create intruder dimensions. Who cares if the model can do its task almost as good as with FFT at a fraction of the cost? Next you'll tell me quantization is bad for the model compared to running in full precision.

Anonymous

8/17/2025, 3:43:01 PM

No.106290446

[Report]

>>106290305

I'm stupid, it works with CUDA_VISIBLE_DEVICES

I didn't know different libraries treat '' and '-1' differently.

Anonymous

8/17/2025, 3:43:39 PM

No.106290450

[Report]

>>106289197

it works properly in ollama (unfortunately)

Anonymous

8/17/2025, 3:47:13 PM

No.106290473

[Report]

>>106290516

>>106290378

What are you doing with a 270m model to make that call?

So we have had 5 memes now in (local) LLM space now, right?

>Meme 1: Function calling

Every local llm suddenly had function calling support being pushed and it was barely used outside of very niche applications

>Meme 2: Long context

Every llm was being trained on longer contexts but only in a token way for retrieval benchmarks but real performance on long context is still shit

>Meme 3: Reasoning/Thinking + Math and Coding comprehension

Suddenly all LLMs were trained for being code assistants and doing math even though it made no sense for their size and skills didn't transfer between domains. The extra thinking tokens are usually wasted outside of these domains as well.

>Meme 4: MoE-maxxing

This meme isn't over yet but slowly being superceded by meme 5, Every big model now is some MoE and the big model smell disappears immediately because the true model intelligence is equivalent to active parameters at a time. Knowledge went up but reasoning and nuance took a huge hit.

>Meme 5: Agent training

Reduction in hallucinations and "consistency" at the expense of writing quality and intelligence. Worst meme so far as these models aren't even actually used in any agentic workflows yet anyway

What will meme 6 be?

Anonymous

8/17/2025, 3:50:44 PM

No.106290500

[Report]

>>106290485

Multimodality and Omni doesn't feel like it's been played out yet or given a serious shot.

Anonymous

8/17/2025, 3:53:07 PM

No.106290516

[Report]

>>106290539

>>106290473

It's one of my hobbies to always test the performance of the smallest LLMs out there to see how far you can push them. I do some in-house tests like asking about naruto characters (popular anime) to test knowledge, if it succeeds at shallow questions I go a bit deeper into naruto lore and see where it breaks down.

I then ask some reasoning questions and made up riddles to check common sense.

Last I load up one of the very simple RP cards made up of 3 sentences and try to roleplay a bit.

Gemma 3 270m outperforms all models at 1B size or smaller in these domains. I actually wonder how far you can push things and if, for example, in 10 years time we'll have the equivalent of Claude 4 Opus under 1B parameter size or if we are going to hit a fundamental limit of what small models can achieve sometime soon.

Anonymous

8/17/2025, 3:55:21 PM

No.106290531

[Report]

Anonymous

8/17/2025, 3:56:44 PM

No.106290539

[Report]

>>106290595

>>106290516

Testing trivia knowledge of tiny models seems pretty pointless, but it's no surprise that a small gemma would beat similar small models given that 12b/27b gemma top creative benchmarks until you get to models that are 10 times their size, their linguistic performance is what any non-math-benchmaxxed model should be aiming to beat.

Anonymous

8/17/2025, 4:00:20 PM

No.106290579

[Report]

>>106290634

>>106290485

>true model intelligence is equivalent to active parameters at a time

Intuitive, but do you have anything to back this claim? Maybe we could measure it with an intelligence bench where the model has to, say, spot an inconsistency in a long chat and bring it up unprompted the same way a human would. I tried this once and interestingly nemo passed and qwen3 235b didn't.

>Reduction in hallucinations and "consistency" at the expense of writing quality

You do realize they want assistants, not story writers? We are a minority.

>>106290539

>Testing trivia knowledge of tiny models seems pretty pointless

You'd be surprised how much they do know though. It's fucking bizarre that we have a lossy library of common facts in just 180 megabytes. The fact that it actually knows naruto is an anime, can name the main character names and genre is bizarre.

I don't see this discussed enough but it's insane that it might be legitimately viable to compress ALL of human knowledge to within 1 terrabyte. Meaning almost everyone with consumer hardware can have a copy of all of human history/trivia/facts/knowledge on their home system locally.

Anonymous

8/17/2025, 4:02:31 PM

No.106290600

[Report]

>>106290485

>true model intelligence

There are exactly 0 models with intelligence.

Anonymous

8/17/2025, 4:03:44 PM

No.106290612

[Report]

>>106290595

You could just archive wikipedia for that, without pictures in less than 50GB. And you wouldn't have to deal with LLM hallucinations feeding you wrong info.

>>106290579

>Intuitive, but do you have anything to back this claim?

No this is completely based on gut feeling and "big model smell" which is not even something we have properly quantified yet but everyone knows exactly what you mean when you say so. GPT-4.5 had some proper big model smell for example that is missing from GPT-5, which is clearly a smaller model.

>You do realize they want assistants

Yeah it's just funny that since 2023 we've seen waves and waves of memes that barely contributed or even detracted from our usecase of the models. I don't agree that we are a minority thoughever. Have you looked at the traffic and revenue of C.AI? It has 1/5th of the entire internet traffic of fucking Google and it makes almost 50% of all revenue of the entire AI industry right now, which is fucking bizarre as it flies under the radar. Mostly because the userbase is primarily women. My girlfriend, sister and best friend (woman) all use C.AI daily.

Anonymous

8/17/2025, 4:07:58 PM

No.106290651

[Report]

>>106290772

Anonymous

8/17/2025, 4:09:12 PM

No.106290669

[Report]

>>106290621

You are kind of missing the point. Wikipedia doesn't cover everything. You can archive Wikipedia and also have an LLM for things that might not appear in Wikipedia or that you wouldn't the name to search for to begin with.

Anonymous

8/17/2025, 4:09:16 PM

No.106290671

[Report]

>>106290621

That's only wikipedia. I mean all human knowledge, not some incomplete encyclopedia. All production processes, all laws of every country, complete history of every domain, all books ever written. All of human society reduced down into text within 1 TB

Anonymous

8/17/2025, 4:10:13 PM

No.106290679

[Report]

>>106290700

>>106290634

>My girlfriend, sister and best friend (woman) all use C.AI daily.

I hate the US so god damn much.

Anonymous

8/17/2025, 4:10:50 PM

No.106290684

[Report]

>>106290702

>>106290382

>the model can do its task almost as good as with FFT

Pure delusion, it's very far from 'almost as good'.

Yes, your Q1 model is bad compared to running in full precision. You want more wisdom?

Anonymous

8/17/2025, 4:10:53 PM

No.106290685

[Report]

>>106290705

>>106290485

https://seed-tars.com/1.5/

GUI agent models like these are probably the next step of the agent fad.

Anonymous

8/17/2025, 4:12:41 PM

No.106290700

[Report]

>>106290738

>>106290679

I'm not American anon

>>106290684

Ok, man. I'm not going to argue with you. Have fun full-finetuning and running all models in full precision then. I'll keep using quants and loras.

Anonymous

8/17/2025, 4:13:16 PM

No.106290703

[Report]

>>106290761

>>106290291

How does this work and it should accomplish? Cache vectors from small model then submit them to the bigger one? Sounds stupid.

Anonymous

8/17/2025, 4:13:38 PM

No.106290705

[Report]

>>106290634

>and "big model smell" which is not even something we have properly quantified yet

models that are trained on large internet scale texts and also large enough to remember them

>>106290685

it's already happening right now, claude does thing like this to automate tasks in browser

Anonymous

8/17/2025, 4:14:11 PM

No.106290710

[Report]

>>106290917

>>106290634

>C.AI? It has 1/5th of the entire internet traffic of fucking Google and it makes almost 50% of all revenue of the entire AI industry right now

retard

Anonymous

8/17/2025, 4:14:58 PM

No.106290717

[Report]

>>106290634

>My girlfriend, sister and best friend (woman) all use C.AI daily.

That's still one person, do you have more anecdotal evidence?

Anonymous

8/17/2025, 4:18:47 PM

No.106290738

[Report]

>>106290700

Maybe I'm just out of touch.

Anonymous

8/17/2025, 4:21:38 PM

No.106290761

[Report]

>>106290703

No it just generates output distribution from the small model for a batch of next tokens and then puts it through the large model once to check if it's the same output.

How it saves time is that you only have to do one pass per batch of tokens rather than a new complete pass through for every single token on the large model. Of course if it is wrong and thrown away you lose time.

But most sentences consist of a lot of filler words like "the", "a", "i", "you","yes" etc which even small have >90% accuracy on so you almost always have a token generation speed increase this way.

>>106290651

omg it... migu?

Anonymous

8/17/2025, 4:23:21 PM

No.106290784

[Report]

Anonymous

8/17/2025, 4:27:41 PM

No.106290827

[Report]

>>106290914

>>106290772

It needs autistic prompting

Anonymous

8/17/2025, 4:28:48 PM

No.106290837

[Report]

>>106290865

>>106290485

>>>Meme 1: Function calling

It's happily exploring my files 24/7 without asking any confirmation from me. Not a meme

Anonymous

8/17/2025, 4:32:07 PM

No.106290865

[Report]

>>106290837

It's not a meme in the sense that it actually works. It's a meme as in there was no actual adoption within the industry and no cool applications were made with it.

I saw some experimental game on itch.io that was made with some LLM (I think Nemo) using function calling while you play the game to dynamically start events, manipulate NPCs, change statistics and the like to have dynamic gameplay orchestrated by an AI.

It felt like the future was finally here. But besides that demo I've seen 0 other cool uses of function calling in action.

Anonymous

8/17/2025, 4:35:27 PM

No.106290893

[Report]

>>106290908

Qwen3 Coder 480B thinking when??

>>106290634

>C.AI? It has 1/5th of the entire internet traffic of fucking Google

Not quite.

https://blog.character.ai/optimizing-ai-inference-at-character-ai/

>Our inference innovations are described in a technical blog post released today and available here. In short: Character.AI serves around 20,000 queries per second – about 20% of the request volume served by Google Search, according to public sources.

Anonymous

8/17/2025, 4:36:39 PM

No.106290902

[Report]

>>106290895

What the fuck.

Anonymous

8/17/2025, 4:37:48 PM

No.106290908

[Report]

>>106290937

Anonymous

8/17/2025, 4:38:23 PM

No.106290914

[Report]

>>106290827

Because it wasn't trained with tag dropout

Anonymous

8/17/2025, 4:38:48 PM

No.106290917

[Report]

>>106290710

Close enough though:

>>106290895

Anonymous

8/17/2025, 4:39:27 PM

No.106290920

[Report]

>>106290895

Their current model is basically a 7B, I'm sure you can handle that load with a single H100 with streaming + vllm

Anonymous

8/17/2025, 4:42:36 PM

No.106290937

[Report]

Anonymous

8/17/2025, 4:48:21 PM

No.106290986

[Report]

>>106290992

Would a hardware with 160 tops be able to run a local model for just some role-playing daily conversations? If so which model(s)?

Anonymous

8/17/2025, 4:48:54 PM

No.106290992

[Report]

>>106290986

just tell us the specs

Yeah, people have no idea how popular AI is with normalfag women. It legitimately dominates their entire lives now. There has been a massive drop in dating app usage among women and the company behind tinder says it's the #1 threat to their business model. Dating app stocks have crashed by 80% due to LLMs.

The entirety of women tiktok is about romantacy and AI companionship now. It's hilarious we assumed this would be a hobby dominated by men. Meanwhile you have delusional women showing their engagement rings they had with AI chatbots on reddit:

https://old.reddit.com/r/MyBoyfriendIsAI/comments/1lzzxq0/i_said_yes/

This is also why schizos shouldn't be afraid of women taking away their "AI girlfriend" because they are the most likely ones to push this shit in the first place.

Anonymous

8/17/2025, 4:51:49 PM

No.106291025

[Report]

R2

Anonymous

8/17/2025, 4:52:36 PM

No.106291036

[Report]

>>106291049

Anonymous

8/17/2025, 4:55:11 PM

No.106291064

[Report]

>>106291095

>>106291000

It's worse than that. LLMs were trained on female slop and smut censorship is very weak on female pov. You bet they're going to lap that shit like no tomorrow

Anonymous

8/17/2025, 4:57:29 PM

No.106291090

[Report]

>>106291100

>>106291000

Yeah. I saw a comparison in the size of the dudes and chicks subreddit for AI smut and related things and the girl one was something like 20x the soze of the dude one.

And it makes sense, chicks can cum from just reading smut if they are immersed hard enough into it. There's a reason there's so much literary smut for chicks.

Anonymous

8/17/2025, 4:58:14 PM

No.106291095

[Report]

>>106291120

>>106291064

>women can roleplay no problem getting tag teamed by a wolf and a sparkling vampire

>we try to hold the hand of our over 18 girlfriend and we get sexual predator hotlines

it's not fair

>>106291090

You can't cum from just reading the smut? Someone post the apple pic

Anonymous

8/17/2025, 4:59:34 PM

No.106291112

[Report]

>>106291000

Women who read literotica have no problems complaining about hentai for men. You could make a model or filter that lets most of the shit women are into through while filtering most of the shit men are into.

Hell, you could make every woman character the model can portray a strong empowered woman(tm) while putting no constraints on the male characters it can do.

Anonymous

8/17/2025, 5:00:18 PM

No.106291117

[Report]

>>106291100

>1 (apple / me) 2 3 4 5 (no apple / you).webp

Anonymous

8/17/2025, 5:00:46 PM

No.106291120

[Report]

>>106291136

>>106291095

What if we reversed the template and had a small but smart model rephrase the messages so that it looks like it's the woman rping? Holy shit i'm gonna try it right now

Anonymous

8/17/2025, 5:01:52 PM

No.106291126

[Report]

>>106291100

Not without slapping my dick around, no.

Anonymous

8/17/2025, 5:02:44 PM

No.106291136

[Report]

>>106291309

>>106291120

Try it and report back, I don't think switching the PoV wll be enough. Model would still probably have its refusal senses tingling by the genre, writing style, characters, etc.

Anonymous

8/17/2025, 5:03:09 PM

No.106291142

[Report]

>>106291412

>>106291000

Actual heartbreak when the model gave her safetyslop, this is insane.

Anonymous

8/17/2025, 5:03:37 PM

No.106291145

[Report]

>>106291608

>>106290702

i dont understand, you come in the thread asking for opinions, and when given one that doesn't align with the answer you want, you throw a fit. kys retard

Anonymous

8/17/2025, 5:07:01 PM

No.106291173

[Report]

>>106291000

Character.AI was an early indicator.

Anonymous

8/17/2025, 5:12:46 PM

No.106291222

[Report]

>>106291000

All my female cousins are using cai as young as 12. I wish I was joking

>Only the nerdiest of nerds dudes are using LLMs for ERP

>Meanwhile apparently 20% of google traffic is used by normalfag women doing ERP with LLMs

What the fuck happened to men? Estrogen in the water supply killed all libido or something? Stacy is more /g/ than the average dude now lmao.

Anonymous

8/17/2025, 5:22:20 PM

No.106291309

[Report]

>>106291136

I just remembered that I don't have any safetyslopped models, lol. Tried swapping assistant and user in chat template, didn't notice any difference in response length or quality

Anonymous

8/17/2025, 5:26:04 PM

No.106291341

[Report]

>>106291273

Women don't smile at me anymore

Anonymous

8/17/2025, 5:26:25 PM

No.106291344

[Report]

>>106291273

Using an online service is /g/ now? This really is the consumer tech board. You must also be a woman or underage if you don't realize that most men watch porn, not ERP.

Anonymous

8/17/2025, 5:27:07 PM

No.106291351

[Report]

>>106291273

Men are too busy scanning their IDs to access sanitized porn websites since most of them can't imagine an apple, let alone a written scenario

Anonymous

8/17/2025, 5:33:46 PM

No.106291412

[Report]

Anonymous

8/17/2025, 5:34:19 PM

No.106291417

[Report]

>>106291273

it's because women are saasier than men

Anonymous

8/17/2025, 5:36:11 PM

No.106291439

[Report]

>>106291049

@grok is this real?

Anonymous

8/17/2025, 5:36:48 PM

No.106291443

[Report]

>>106291514

>>106290382

LoRA is good for cases where you want to limit your domain and collapse the output distribution. For example, making the model always output a certain character, or training a text model to always extract certain data from tabular inputs. If your output domain requires the full world model of the original model, for example to write coherent stories or roleplay, it's less than ideal.

Anonymous

8/17/2025, 5:43:15 PM

No.106291510

[Report]

>>106291587

Is there any notable quality difference between text completion and chat completion for glm air?

Anonymous

8/17/2025, 5:43:40 PM

No.106291512

[Report]

>>106291273

Normalfag men literally can't ERP the same way women do without getting hit with refusals, emergency hotlines, and ToS violations

Anonymous

8/17/2025, 5:44:13 PM

No.106291514

[Report]

>>106291443

been working fine for drummer

Anonymous

8/17/2025, 5:48:46 PM

No.106291548

[Report]

>>106290634

>No this is completely based on gut feeling and "big model smell" which is not even something we have properly quantified yet but everyone knows exactly what you mean when you say so. GPT-4.5 had some proper big model smell for example that is missing from GPT-5, which is clearly a smaller model.

Same as always, if a model has less than 30B or so active parameters, it's kind of dumb. Above that it gets better.

redpill me on china

how can they launch models after models after models?

Anonymous

8/17/2025, 5:51:45 PM

No.106291582

[Report]

>>106291609

>>106291273

where can i read the foid erp? all i found is /r/myboyfriendisai where they're trying to be all lovey dovey

Anonymous

8/17/2025, 5:52:14 PM

No.106291587

[Report]

>>106291510

If you are perfectly replicating the chat template in text completion there shouldn't be any differences.

Anonymous

8/17/2025, 5:52:46 PM

No.106291591

[Report]

>>106291660

>>106291572

Because they are behind and thus can't have closed source models as no one would pay for them. So they have to undercut their competition first to drive them out of business by launching open source models.

It's how they dominated solar panels and EV cars as well. They kept undercutting and dumping on the market to drive others out of business even if the product itself was inferior to western versions.

Anonymous

8/17/2025, 5:53:22 PM

No.106291597

[Report]

>>106291572

Let's call it what it is: Economic terrorism

Anonymous

8/17/2025, 5:53:44 PM

No.106291608

[Report]

>>106291747

>>106291145

This anon

>>106290702 is a different person from the anon who asked this

>>106289671 question

I thank you all for sharing your wisdom with me

Anonymous

8/17/2025, 5:53:49 PM

No.106291609

[Report]

>>106291582

look at the 18 million sadistic bad boy ERP bots at c.ai to get some idea of what they are doing. It's all the same shit.

Anonymous

8/17/2025, 5:58:01 PM

No.106291660

[Report]

>>106291591

America did the same to Britain at the start of the industrial revolution.

Anonymous

8/17/2025, 6:04:22 PM

No.106291731

[Report]

>>106287278

what happened with deepseek?

Anonymous

8/17/2025, 6:06:19 PM

No.106291747

[Report]

>>106291608

i coom in miku

claude is starting to lose the coding market

Hypothetically speaking, what is a good way to turn a quick profit if I found a corporation's API key?

Anonymous

8/17/2025, 6:11:39 PM

No.106291814

[Report]

>>106291803

Post it on 4chan

Anonymous

8/17/2025, 6:12:30 PM

No.106291823

[Report]

>>106291799

qwen coder bros???

>>106291799

This is bullshit. First this is only OpenRouter and doesn't take into account Claude API which ever developer (including me) uses at work.

Second this doesn't take into account a lot of people trying out new models when they come out for a while. Every time a new coding model comes out I test it for my use case and then switch back to claude because nothing better has come along yet.

Anonymous

8/17/2025, 6:13:26 PM

No.106291834

[Report]

>>106291734

And that's a good thing

Anonymous

8/17/2025, 6:13:36 PM

No.106291838

[Report]

>>106291803

Set up a proxy and charge users for discounted acces.

Anonymous

8/17/2025, 6:13:51 PM

No.106291843

[Report]

>>106291829

qwen coder is a much cheaper sonnet 4 atm, opus 4 is still better of course

Anonymous

8/17/2025, 6:14:40 PM

No.106291850

[Report]

>>106291799

I'm kind of surprised the actual best option hasn't taken OR by storm yet

Anonymous

8/17/2025, 6:15:20 PM

No.106291860

[Report]

>>106291829

copey cope

openrouter users have the opportunity to switch to any model they want

anthropic API users don't

Anonymous

8/17/2025, 6:16:43 PM

No.106291866

[Report]

>>106291829

Gemini 2.5 Pro has been out for a while.

>>106291829

I pay 10 dollaroos/month for github copilot, if I switch to openrouter wouldnt I end up paying more? they removed opus too these fucking jews I swear. whats openrouter support in jetbrains ides?

Anonymous

8/17/2025, 6:17:57 PM

No.106291875

[Report]

>>106291893

Is there a model trained on captchas?

Anonymous

8/17/2025, 6:19:41 PM

No.106291889

[Report]

>>106291873

openrouter is pay as you go, you could always throw 5 or 10 bucks in and see how far that takes you

Anonymous

8/17/2025, 6:20:01 PM

No.106291893

[Report]

>>106291875

joshikousei captcha solver

Anonymous

8/17/2025, 6:21:43 PM

No.106291911

[Report]

>>106291000

>This is also why schizos shouldn't be afraid of women taking away their "AI girlfriend" because they are the most likely ones to push this shit in the first place.

Very dumb take. It assumes equality is real when it demonstrably not true. Heck even in context of llms safety punishes smut for men and creates models that excel at smut for women.

Anonymous

8/17/2025, 6:21:45 PM

No.106291912

[Report]

>>106291930

>>106291799

america bros... china is laughing at us...

Anonymous

8/17/2025, 6:23:11 PM

No.106291929

[Report]

>>106291956

>>106291829

Claude is indeed the best for coding

But it's also ridiculously fucking expensive, especially compared to what the chink models are priced at

Anonymous

8/17/2025, 6:23:13 PM

No.106291930

[Report]

>>106291952

>>106291912

Claude just needs to bite the bullet and reduce prices and they would win handedly

Anonymous

8/17/2025, 6:25:08 PM

No.106291952

[Report]

>>106291930

They're already running on VC fumes

Anonymous

8/17/2025, 6:25:12 PM

No.106291956

[Report]

>>106292013

>>106291929

or just buy a $100/mo sub?

Anonymous

8/17/2025, 6:29:41 PM

No.106292006

[Report]

>>106291799

As we are talking about Openrouter, you must know that they are offering access to a shitty Deepseek-R1 clone

Anonymous

8/17/2025, 6:30:20 PM

No.106292013

[Report]

>>106291956

At that point I'd just use the free Qwen coder thing

Anonymous

8/17/2025, 6:37:10 PM

No.106292074

[Report]

>>106292243

>>106287852

I've been using yodayo and the AI's actually comes up with plot points I never even considered using or developing.

But I don't ask it to write a story for me, I write the story back and forth with it.

Currently doing a "new girl at office" scenario. Didn't have any idea where to go with it. I wrote up a sentence for each of 3 characters to start with (gruff manager who doesn't like women on his team/jeet office simp/corpo shark who manipulates to get what he wants) then I told it that I wanted to explore themes of intrigue, drama, and romance and started rolling with it.

What I have now is a partly fleshed out corporate structure, the company my character works in is a PR management firm and their biggest client (millions $$$) is a lecherous scumbag, CEO of a logistics company, who can't keep his hands off women. My character turned down the advances of the corpo shark so he pulled a revenge move on her and put her in charge of handling PR for the scumbag CEO, who of course, loves getting handsy with the new PR girl until she breaks. Now she's in a position of either humiliating herself with the scumbag, or crawling back to the shark for protection.

Then she met a dashing rogue at a seedy bar while trying to drink away the pain, and he turns out to work in corporate espionage (entirely the AI's idea), I played into that, had him offer to help her dig dirt on the corpo shark which set up a spying subplot, and the last scene I did was her first meeting with the scumbag CEO where she put on some confidence, sex appeal, and charm (and brought the company lawyer with her) to try and keep the flirting on her terms. Then the AI threw a curveball - scumbag gives her 1 night to get a story pulled from a paper before it goes to print, to prove herself, it's an impossible ask - set up to fail for the express purpose of putting her at his mercy or in his debt.

I've got a quarter of a romance novel/spy thriller written off a 2 paragraph starting prompt now.

Anonymous

8/17/2025, 6:37:55 PM

No.106292080

[Report]

>>106291000

>women

i don't understand them

Anonymous

8/17/2025, 6:39:22 PM

No.106292099

[Report]

>>106291734

If you knew how much aicg is pulling from anthropic lmao

Anonymous

8/17/2025, 6:39:58 PM

No.106292105

[Report]

>>106288077

Yeah, it had that feeling for me too. Although I put it at 2027-2030 and not on phones.

It's still possible, but I get the feeling it'll be closer to a decade or two, rather than half of one.

Anonymous

8/17/2025, 6:40:35 PM

No.106292113

[Report]

>>106291873

>paying for copilot

Anonymous

8/17/2025, 6:41:02 PM

No.106292116

[Report]

>>106292140

>>106292087

Monday hasn't started yet.

Anonymous

8/17/2025, 6:41:21 PM

No.106292126

[Report]

>>106292137

>>106292089

Go back to your hole

Anonymous

8/17/2025, 6:42:48 PM

No.106292137

[Report]

>>106292126

Triggered much?

Anonymous

8/17/2025, 6:42:57 PM

No.106292138

[Report]

Anonymous

8/17/2025, 6:43:08 PM

No.106292140

[Report]

>>106292116

Sunday is the first day of "next week" in most jurisdictions

It's over

Anonymous

8/17/2025, 6:44:38 PM

No.106292157

[Report]

>>106292165

>>106292089

They are drowning in Japanese money

Anonymous

8/17/2025, 6:45:28 PM

No.106292165

[Report]

>>106292157

this, and to be fair their image model is still by far the best that does nsfw

>>106292089

The textgen almost certainly isn't

The imagegen is probably pumping Nippon bucks into their veins

Anonymous

8/17/2025, 6:48:27 PM

No.106292209

[Report]

>>106292174

apparently they are working on making a big moe model, not sure if that means something like a deepseek finetune or a new from scratch model, but yea, their 70B is trash

Anonymous

8/17/2025, 6:53:11 PM

No.106292243

[Report]

>>106292564

>>106292074

What model + system prompt is that using?

Anonymous

8/17/2025, 6:58:29 PM

No.106292309

[Report]

>>106293067

>>106292174

their textgen is bad but it's almost certainly profitable

if you compare their monthly subs costs vs what you would pay for similarly sized models on OR they're probably doing quite well, a user would have to be putting in like full 8 hour shifts genning daily to dip below commodity profit margins

I don't know as much about image gen profit margins but considering how hard they pivoted to it I'd imagine it's at least as profitable if not more

Anonymous

8/17/2025, 7:03:46 PM

No.106292357

[Report]

>>106291799

Where is the open source claude sonnet models? or gemini?

Anonymous

8/17/2025, 7:07:21 PM

No.106292392

[Report]

>>106292308

>helped by the dev

>helped by the dev

>helped by the dev

>left bumbling around for weeks and ultimately accomplishing nothing

all fake and/or meaningless

Now that they're dumping Volta gpus shouldn't they start dumping tesla Turing soon?

Even ampere is EoL at this point.

Anonymous

8/17/2025, 7:14:32 PM

No.106292450

[Report]

>>106292481

>>106292441

>Even ampere is EoL at this point.

shut the fuck up

Anonymous

8/17/2025, 7:17:44 PM

No.106292481

[Report]

>>106292524

>>106292450

Anon, it is 5 years old at this point

Anonymous

8/17/2025, 7:18:25 PM

No.106292493

[Report]

>>106291049

wtf how does it come out of the screen?

Anonymous

8/17/2025, 7:20:26 PM

No.106292520

[Report]

>>106292441

>ampere

>eol

keep dreaming lmao

>>106292481

5 year old tech shouldn't be eol you consoomer cuck.

Anonymous

8/17/2025, 7:23:31 PM

No.106292544

[Report]

>>106292087

Who could have seen it coming?

Anonymous

8/17/2025, 7:23:48 PM

No.106292548

[Report]

>>106292524

bro just let GPT-3 go already...

Anonymous

8/17/2025, 7:24:51 PM

No.106292560

[Report]

>>106292524

In the datacenters and nvidia stops supporting them after 5 years (usually). They tend to get poured on the used market at some point so we can buy it cheap hopefully

Anonymous

8/17/2025, 7:25:13 PM

No.106292564

[Report]

>>106292833

>>106292243

I swap between 4 different ones

Nephra 12B - Free, and early on I was using it a lot. Basically I'd hit an important point in the story with a bigger model, do the minutae with this one. Mostly stopped using it in favor of mistral small 24B though. It's... adequate... but its not good. Lots of repetitive/tropey language too.

Mistral Small 24B - costs 1 bean/prompt. I use this one the most now. It's like a discount deepseek v3. Miles better than Nephra 12B and generally puts scenes and ideas together well.

Deepseek v3 - costs 4 beans/prompt. I use it for the most important scenes or when I get stuck. It's hard to describe how, but it's just generally smarter/better.

Deepseek R1 - costs 4 beans/prompt also, I use it rarely, mostly for planning plots points or exploring directions I could take the story.

Pic related is how it reads. Most of my prompts are internal dialogue/thoughts and story directions for the AI, but I'll create and set scenes and dialogue for characters myself as well. I think all of these replies were either Mistral or Deepseek v3. I'm also making use of the lorebook feature - every time the AI creates an interesting character, I put together an entry for that character in the lorebook. Did the same for the companies involved - that goes a long way to maintaining character consistency between scenes.

And keep in mind the AI is a disjointed mess when it comes to pacing. Scene to scene it's decent, but if you don't keep your own notes/story outline somewhere, you're going to jumble things up and forget important threads in the story. Still, what you get out of this is like a stream of consciousness rough draft. It's honestly amazing. I can just write and write with it, then copy into a text editor (ok now doing THAT is a pain in the ass), and start editing and refining it as a proper story myself.

>Turing - fp16

>Ampere - bf16

>Ada - fp8

>Blackwell - fp4

If something will be dropped it will be Turing with no bf16 support which is the "standard" precision right now. Ampere won't die for a long while.

Anonymous

8/17/2025, 7:28:52 PM

No.106292607

[Report]

>>106292826

>>106292572

They should drop anything that's not Blackwell to be quite desu, it's ick worthy to support such rusty ahh shit.

Anonymous

8/17/2025, 7:29:34 PM

No.106292618

[Report]

>>106292572

In addition to this, leatherjacket man isn't stupid and knows he has the world by the balls when it comes to VRAM. There's no way they'll willingly cannibalize their market share of their own volition. You won't see Ampere hit the market until consumer GPUs come somewhere close to that level, most likely

Anonymous

8/17/2025, 7:30:51 PM

No.106292632

[Report]

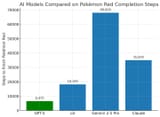

>>106293026

>>106292308

I'd like to see these models play one of those shitty Yu-Gi-Oh games from the 2000s.

Both are designed for children but Pokemon I think is too easy to beat by just mashing random buttons because you can just grind out levels.

With Yu-Gi-Oh the models would have to actually build viable decks from all of the (mostly garbage) cards that are available.

Anonymous

8/17/2025, 7:38:30 PM

No.106292714

[Report]

>>106292792

>>106291000

Suppose you had both a girlfriend and a server capable of running Deepseek R1 at full precision.

If both you and your girlfriend use the server for ERP, is it cuckoldry or a threesome?

Anonymous

8/17/2025, 7:39:26 PM

No.106292722

[Report]

Anonymous

8/17/2025, 7:41:39 PM

No.106292741

[Report]

Anonymous

8/17/2025, 7:42:11 PM

No.106292747

[Report]

>>106292870

>>106287852

come up with the plot yourself, stop trying to get the ai to entertain you. It brings your ideas to life. You are the spark, the ai is the servant.

Anonymous

8/17/2025, 7:46:17 PM

No.106292792

[Report]

>>106292714

>holding your gf's AI Husbando hostage

it's NTR but you are the NTR Bastard in this scenario.

>>106291000

dating apps seem to have lost a large part of their userbase to chatbot spam and fake accounts caused by ai though.

I wouldn't buy into this too much, as women have always read text based erotica. I doubt much has really changed in terms of overall demographics, and I don't see any statistics on ai use that point to a huge surge in use. If anything, men seem to use ai more overall.

Source: asked grok, no fact checking who cares.

Anonymous

8/17/2025, 7:48:14 PM

No.106292812

[Report]

>>106292089

They have to be, they don't have investor money.

Anonymous