/lmg/ - Local Models General

Anonymous

8/23/2025, 12:24:48 AM

No.106351520

►Recent Highlights from the Previous Thread:

>>106345562

--Attempting to bridge LLM knowledge and narrative application in ERP via synthetic SFT/DPO pipelines:

>106349030 >106349134 >106349193 >106349310 >106349192 >106349376 >106349402 >106349599 >106349693 >106349965 >106350085 >106350105 >106350293 >106350317 >106350414 >106350515 >106350525 >106350594 >106350737 >106350781 >106349823 >106349207 >106349215

--llama.cpp benchmarking and optimization struggles on consumer GPU hardware:

>106349737 >106349744 >106349748 >106349757 >106349775 >106349820 >106349829 >106349852 >106349955 >106349867 >106349888 >106349904 >106349914 >106349918 >106349927 >106349935 >106349952 >106349985 >106350028

--MOE model inefficiencies in CPU+GPU setups due to expert loading and caching limitations:

>106350004 >106350038 >106350062 >106350071 >106350076 >106350088 >106350347 >106350362

--GLM 4.5 preferred over Air for roleplaying under unified memory constraints:

>106351137 >106351176 >106351193 >106351208 >106351284 >106351298 >106351191 >106351235

--Jamba model praised for style mimicry, long context, and low safety:

>106351319

--AI sycophancy trend and user preferences for personality-neutral models:

>106348495 >106348515 >106348517 >106348540 >106348555 >106348571 >106348649 >106348588 >106348958

--Skepticism over Qwen Coder benchmark claims and confusion between FP8 and q8 quantization:

>106347338 >106347366 >106347468 >106347552 >106347631 >106347658 >106347697 >106347712 >106347730 >106347895

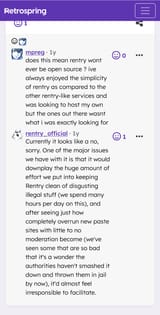

--Perceived double standards in Hugging Face NSFW dataset moderation:

>106349991 >106350042 >106350051 >106350079 >106350383

--Risks of expert pruning on multilingual models losing non-English capabilities:

>106346769

--Intel AI Playground v2.6.0 released with advanced Gen AI features:

>106346057

--Miku (free space):

>106345719 >106345805 >106347682

►Recent Highlight Posts from the Previous Thread:

>>106345569

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

LIPS: BITTEN

OZONE: SMELLED

KNUCKLES: WHITENED

SPINE: SHIVERED

SKIRT: UPRIDDEN

CHEEKS: HOLLOWED

POP: AUDIBLE

LENGTH: STROKED

WALLS: CLENCHING

SLIT: SLICKED

EYELIDS: BATTED

AIR: THICK

EYES: SPARKLING

Anonymous

8/23/2025, 12:27:34 AM

No.106351544

Anonymous

8/23/2025, 12:28:28 AM

No.106351551

>>106351535

the gaze? predatory

and the smile? doesn't quite reach the eyes

Anonymous

8/23/2025, 12:29:11 AM

No.106351556

>>106351572

Same OP image? How sad.

Anonymous

8/23/2025, 12:29:26 AM

No.106351560

>>106351583

So can you get a rack like that with HRT or not?

Anonymous

8/23/2025, 12:30:20 AM

No.106351567

>>106351519

Regarding nai I was talking about more fucking up context.

Anonymous

8/23/2025, 12:30:27 AM

No.106351569

Anonymous

8/23/2025, 12:30:47 AM

No.106351572

>>106351595

>>106351556

Yeah. I often try to motorboat a bowl of rice.

Anonymous

8/23/2025, 12:31:21 AM

No.106351575

>>106351535

WORD: THAT'S AN ORDER

NO TURN AROUND: NO NEED TO

AYY BABE: HOW BOLD

Anonymous

8/23/2025, 12:32:02 AM

No.106351581

>>106351591

>>106351568

Isn't life just a bunch of X? Y.'s?

Anonymous

8/23/2025, 12:32:20 AM

No.106351583

>>106351597

>>106351560

I am a trap enjoyer and they always ruin themselves and get fat after hormones.

dfc > tits

Anonymous

8/23/2025, 12:32:54 AM

No.106351591

Anonymous

8/23/2025, 12:33:22 AM

No.106351595

>>106351647

>>106351572

It makes a mess but nothing turns me on more than motorboating a bowl of sexy buttered rice.

Anonymous

8/23/2025, 12:33:42 AM

No.106351597

>>106351608

>>106351619

>>106351583

>trap enjoyer

Is that a codeword for gay?

Anonymous

8/23/2025, 12:34:09 AM

No.106351600

>>106351568

She X's. Y. Synonym for Y.

Anonymous

8/23/2025, 12:34:55 AM

No.106351605

>>106351611

>>106351501

>it's cute that they use this as a selling point when it's an API model and the size is 1) unknown and 2) doesn't matter to anyone

No, in their case it's a valid selling point, since they allow their customers to host the model themselves

Anonymous

8/23/2025, 12:35:22 AM

No.106351608

Anonymous

8/23/2025, 12:35:43 AM

No.106351611

>>106351605

you're right, I forgot about that

paging miqudev

Anonymous

8/23/2025, 12:36:30 AM

No.106351619

>>106351635

>>106351597

Sodomizing a cute trap wearing a skirt and thighhighs is the straightest thing a straight man can do.

Anonymous

8/23/2025, 12:37:17 AM

No.106351623

>>106351633

>>106351642

Best model for stranglefucking my favorite childhood Saturday morning cartoon characters? (Single 3090)

Anonymous

8/23/2025, 12:39:15 AM

No.106351633

>>106351646

>>106351623

Xwin-MLewd 13b

Anonymous

8/23/2025, 12:39:18 AM

No.106351635

>>106351662

>>106351619

Are you from the school of thought that straight sex is actually gay because women are cute and sweet and pink and cuddly and honestly that is so fucking gay?

Anonymous

8/23/2025, 12:39:39 AM

No.106351642

Anonymous

8/23/2025, 12:40:18 AM

No.106351646

>>106351710

>>106351633

>Xwin

Oh what happened to those guys?

Anonymous

8/23/2025, 12:40:20 AM

No.106351647

>>106351595

Buttered buns... Gemma!

Anonymous

8/23/2025, 12:42:07 AM

No.106351662

>>106351799

>>106351635

I am from the school of thought that being turned on by a feminine body isn't gay regardless of the presence of cock. Both traps and futa are straight.

Anonymous

8/23/2025, 12:47:20 AM

No.106351708

For me the idea of anal, which my brain naturally associates with shit, turns me off so I don't like trap stuff.

>inb4 how can you say you love your waifu if you can't even eat her shit

Anonymous

8/23/2025, 12:47:30 AM

No.106351710

>>106351646

They're still working on 70b v2

llama.cpp CUDA dev

!!yhbFjk57TDr

8/23/2025, 12:50:12 AM

No.106351737

>>106351762

>>106351514 (OP)

>choose optimal code paths at default settings

>set a frequency limit via nvidia-smi --lock-gpu-clocks

>other code path is now 25% faster

>literally impossible to retrieve frequency limit

Thanks, NVIDIA.

Anonymous

8/23/2025, 12:53:00 AM

No.106351762

>>106351867

>>106351737

whoa??? is nvidia-smi --lock-gpu-clocks safe?

how is it faster???

also doesnt nvidia-settings show current frequency?

maybe the nvidia-smi command outputs "CHANGED FREQUENCY FROM xx to yy" and you can put frequency max limit to something silly but safe and then put it back to where it was

i am ready to be paid 100,000$ for my innovation

>>106351662

NTA but you're a faggot.

There's literally no way around it.

I'm bisexual (but gay leaning) and can comfortable say if you like traps you're a fucking faggot. If you do a bunch of retarded mental gymnastics to try and make it not gay then you're an insecure fucking faggot which is even worse.

Faggotry is a surrogate behavior in all of its forms. An actual genuine strict top is almost always attracted to younger and more feminine partners. Guys in early adulthood still clean up nice. Most bottoms are thottier than thots, though. By the age of 25 the average bottom has taken enough dick to build a space elevator to proxima centuari. And if you're not one of those retards that pretends teen sexuality doesn't exist I can assure you by the age of 18 a lot of bottoms have taken an alarming amount of dick already, often pretending to be 18 on Grindr and shit. Sadly there's enough tops that will humor this nonsense. I won't though. I'd rather be lonely than stick my dick in whatever quantum singularity is left of your average twinks asshole.

Anonymous

8/23/2025, 1:01:21 AM

No.106351820

>>106351880

Anonymous

8/23/2025, 1:02:35 AM

No.106351827

>>106352076

>>106352094

>>106351799

hmm

not him but I don't consider myself a top or a bottom because I don't do anal

or can you top/bottom in other ways?

llama.cpp CUDA dev

!!yhbFjk57TDr

8/23/2025, 1:07:29 AM

No.106351867

>>106351875

>>106351968

>>106351975

>>106351762

>is nvidia-smi --lock-gpu-clocks safe?

Yes, the voltage curves are unaffected.

>how is it faster???

At low GPU frequencies the code path using tensor cores is comparatively faster than the code path not using tensor cores for some relevant cases.

It's not that the code gets faster, it's that some code is impacted more heavily than other code.

>also doesnt nvidia-settings show current frequency?

It does, and I can query the current frequency in program code.

It is not possible to query the frequency limit, either via nvidia-smi or via NVML, the library it uses internally.

I confirmed this with an NVIDIA engineer.
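For reference, here is roughly what reading the *current* SM clock through NVML looks like from Python. This is a minimal sketch that assumes the `pynvml` bindings (package `nvidia-ml-py`) are installed. It returns the clock the GPU is running at right now, which, per the post above, is not the same thing as a limit set with `--lock-gpu-clocks`.

```python
# Sketch: query the current SM clock of one GPU via NVML.
# Assumes the pynvml bindings (package: nvidia-ml-py) are installed.
# This is the clock the GPU runs at right now, NOT a limit set with
# `nvidia-smi --lock-gpu-clocks`.
from typing import Optional


def current_sm_clock_mhz(device_index: int = 0) -> Optional[int]:
    """Return the current SM clock in MHz, or None if NVML is unavailable."""
    try:
        import pynvml
    except ImportError:
        return None
    try:
        pynvml.nvmlInit()
    except Exception:  # no driver or no GPU present
        return None
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(device_index)
        return int(pynvml.nvmlDeviceGetClockInfo(handle, pynvml.NVML_CLOCK_SM))
    except Exception:
        return None
    finally:
        pynvml.nvmlShutdown()
```

Calling `current_sm_clock_mhz()` on an idle card will usually report a low clock that says nothing about the configured lock.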

Anonymous

8/23/2025, 1:08:52 AM

No.106351875

>>106351889

>>106351867

when you change the frequency limit does it output "changed frequency limit from 1000 to 1500"

you could use that..

Anonymous

8/23/2025, 1:09:47 AM

No.106351880

>>106351820

Is this a premature skillissue post?

Anonymous

8/23/2025, 1:10:49 AM

No.106351885

>>106352048

>>106351799

Ok but. Jart or Cudadev. Which one is the top?

llama.cpp CUDA dev

!!yhbFjk57TDr

8/23/2025, 1:11:22 AM

No.106351889

>>106351911

>>106351975

>>106351875

There is a print immediately after you limit the frequency but it's not possible for me to retrieve that unless the user jumps through hoops.

At that point I might as well query an environment variable set by the user.
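A sketch of that environment-variable fallback, assuming the user declares the limit they set with `nvidia-smi --lock-gpu-clocks`. The variable name `HYPOTHETICAL_GPU_CLOCK_LIMIT_MHZ` and the 1200 MHz cutoff are made up for illustration; the only idea taken from the posts is "if the clock is locked low, prefer the tensor core path, otherwise keep the stock choice".

```python
# Sketch: user-declared clock limit as an override for code path selection.
# The variable name and the threshold are HYPOTHETICAL, for illustration only.
import os
from typing import Mapping, Optional

CLOCK_LIMIT_ENV = "HYPOTHETICAL_GPU_CLOCK_LIMIT_MHZ"
TENSOR_CORE_THRESHOLD_MHZ = 1200  # hypothetical cutoff, not a measured value


def clock_limit_from_env(env: Mapping[str, str] = os.environ) -> Optional[int]:
    """Parse the user-declared clock limit in MHz; None if unset or invalid."""
    raw = env.get(CLOCK_LIMIT_ENV)
    if raw is None:
        return None
    try:
        value = int(raw)
    except ValueError:
        return None
    return value if value > 0 else None


def use_tensor_cores(stock_choice: bool, env: Mapping[str, str] = os.environ) -> bool:
    """Force the tensor core path under a low declared limit, else keep stock."""
    limit = clock_limit_from_env(env)
    if limit is not None and limit < TENSOR_CORE_THRESHOLD_MHZ:
        return True
    return stock_choice
```

Passing `env` explicitly keeps the selection logic testable without touching the real process environment.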

Anonymous

8/23/2025, 1:13:49 AM

No.106351911

>>106351889

fair.. goodluck anon

Anonymous

8/23/2025, 1:19:18 AM

No.106351968

>>106351867

Wow. You are real nerd.

Anonymous

8/23/2025, 1:20:22 AM

No.106351975

>>106351889

>>106351867

cudadev pls help, is this an OOM?

>>106351965

Anonymous

8/23/2025, 1:20:33 AM

No.106351979

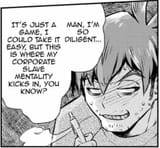

>lust provoking image

>time wasting question

Anonymous

8/23/2025, 1:21:39 AM

No.106351988

Does ik_llamacpp handle hybrid reasoning models differently from standard llama.cpp? I just downloaded the ubergarm quants for v3.1 and did a fresh build of ik_llamacpp, but it refuses to think even with a prefill. Base llama.cpp works just fine here, but ik_ is faster.

llama.cpp CUDA dev

!!yhbFjk57TDr

8/23/2025, 1:24:56 AM

No.106352021

>>106352040

>>106351965

An illegal memory access means that the GPU code is trying to access memory with an invalid address.

Usually that means there is a bug where, e.g., some indices are being calculated incorrectly.

Unless the code is bad and silently ignores an allocation failure, VRAM capacity should not be relevant.
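To make the "indices calculated incorrectly" case concrete: a row-major offset uses the number of columns as the row stride, and swapping in the number of rows is a classic bug. With more rows than columns it runs past the end of the buffer; otherwise it silently reads the wrong element. A minimal Python sketch where an explicit bounds check stands in for the GPU fault (helper names are hypothetical):

```python
# Sketch: how a wrong stride turns into an out-of-bounds ("illegal") access.
# A GPU kernel has no bounds check, so the bad address faults or corrupts
# memory; here an IndexError stands in for that. Names are hypothetical.


def flat_index(row: int, col: int, ncols: int) -> int:
    """Correct row-major offset: each row is `ncols` elements long."""
    return row * ncols + col


def flat_index_buggy(row: int, col: int, nrows: int) -> int:
    """Buggy variant: uses the number of rows as the stride."""
    return row * nrows + col


def checked_load(buf: list, idx: int):
    if not 0 <= idx < len(buf):
        raise IndexError(f"illegal access: index {idx}, buffer size {len(buf)}")
    return buf[idx]


nrows, ncols = 4, 2
buf = list(range(nrows * ncols))  # 8 elements

last_ok = flat_index(nrows - 1, ncols - 1, ncols)         # 3*2 + 1 = 7
last_bad = flat_index_buggy(nrows - 1, ncols - 1, nrows)  # 3*4 + 1 = 13
```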

Anonymous

8/23/2025, 1:26:39 AM

No.106352040

>>106352071

>>106352021

thx anon this helps me out a ton i might know the issue

Anonymous

8/23/2025, 1:27:49 AM

No.106352048

>>106351885

Double bottom (switch) relationship

Anonymous

8/23/2025, 1:30:01 AM

No.106352071

Anonymous

8/23/2025, 1:30:27 AM

No.106352076

>>106351827

What's your disc? I'll help you figure it out ;)

Anonymous

8/23/2025, 1:32:03 AM

No.106352090

>>106352126

Is this command-a any good? Are they back to their old glory or is it just a benchodmaxed slopmaster model from a struggling company trying to stay relevant?

Anonymous

8/23/2025, 1:32:22 AM

No.106352094

>>106352142

>>106351827

are you feminine?

Anonymous

8/23/2025, 1:35:03 AM

No.106352126

>>106352147

>>106352090

It is objectively the best at a certain thing and it is actually good at that thing and not just benchmaxxed.

Anonymous

8/23/2025, 1:37:25 AM

No.106352142

>>106352153

>>106352094

I guess I'm the more feminine one in my marriage, but not really.

Anonymous

8/23/2025, 1:37:54 AM

No.106352147

>>106352165

>>106352126

That thing wouldn't happen to be safety, would it?

Anonymous

8/23/2025, 1:38:25 AM

No.106352153

>>106352175

>>106352142

are you in a gay marriage? if not wouldnt you mind showing your bussy?

Anonymous

8/23/2025, 1:39:30 AM

No.106352165

>>106352147

I will only answer if you can confirm that you don't have a stack of 3090's and promise that you won't kill yourself.

Anonymous

8/23/2025, 1:40:53 AM

No.106352175

>>106352182

Anonymous

8/23/2025, 1:42:06 AM

No.106352182

>>106352175

get OUT of here you faggot

Anonymous

8/23/2025, 1:44:50 AM

No.106352212

>>106352225

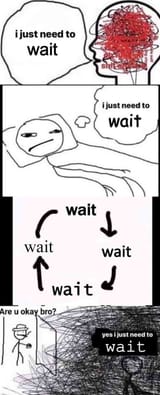

guys Im getting bored of llms

Anonymous

8/23/2025, 1:46:07 AM

No.106352225

>>106352276

Anonymous

8/23/2025, 1:46:54 AM

No.106352231

>>106352258

>>106352284

Anonymous

8/23/2025, 1:50:50 AM

No.106352255

>>106352552

Oh I see this thread is schizo posting again. Anyway

Deepseek V3.1 felt like a definite flop. I tried to give it a solid chance but after switching back to GLM 4.5 it's just not worth the reduction in speed, not even in the slightest. It can think in roleplay however the prose and lack of variation just makes it a fucking pain to tolerate. GLM can at least create interesting and unique posts and I rarely feel the need to reroll.

GLM 4.5 Writing + Kimi K2 knowledge. That would be the ultimate model based on current tech rn

Anonymous

8/23/2025, 1:51:01 AM

No.106352258

>>106352287

>>106352231

Anon, stop hornyposting. ERP with deepseek about it.

Besides, I'm only willing to be a snuggle buddy.

Anonymous

8/23/2025, 1:54:12 AM

No.106352276

>>106352290

>>106352225

that's too difficult

Anonymous

8/23/2025, 1:55:07 AM

No.106352284

>>106352287

>>106352231

No means no anon.

Anonymous

8/23/2025, 1:55:40 AM

No.106352287

>>106352258

h-hornyposting? what's that..

>>106352284

*rapes u*

Anonymous

8/23/2025, 1:56:01 AM

No.106352290

>>106352276

I didn't mean organic. Have some llmsex with glmchan.

Anonymous

8/23/2025, 1:57:02 AM

No.106352306

Anonymous

8/23/2025, 2:06:19 AM

No.106352359

>>106352374

>Memory access fault by GPU node-1 (Agent handle: Reason: Page not present or supervisor privilege.

This shit pisses me off a lot, it happens for almost no reason and then dumps a crash file in the git directory that is like 10-15 gigabytes. Why? Who the fuck is going to sift through a 10g+ crash dump?

Anonymous

8/23/2025, 2:08:53 AM

No.106352374

>>106352413

>>106352359

You are overloading memory.

It's a mistake that people use 99 GPU layers by default.

The layer count should be set manually for each model.

I might only use 10 layers, for example.
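A rough way to pick a starting value for `--gpu-layers` instead of 99: divide the VRAM you're willing to spend, minus room for KV cache and compute buffers, by the per-layer size, approximated as model file size divided by layer count. This is a back-of-the-envelope sketch; the reserve is a guess you have to tune, and real layers aren't all the same size (embedding and output tensors skew it).

```python
# Back-of-the-envelope estimate for llama.cpp's --gpu-layers (-ngl).
# Assumes layers are roughly equal in size (model file size / layer count);
# the reserve for KV cache and compute buffers is a guess you must tune.
import math


def estimate_gpu_layers(
    vram_gib: float,
    model_file_gib: float,
    n_layers: int,
    reserve_gib: float = 1.5,
) -> int:
    """Return how many whole layers fit in the VRAM left after the reserve."""
    if n_layers <= 0:
        raise ValueError("n_layers must be positive")
    per_layer_gib = model_file_gib / n_layers
    usable = max(0.0, vram_gib - reserve_gib)
    return min(n_layers, math.floor(usable / per_layer_gib))
```

For a made-up 40 GiB, 40-layer model on a 16 GiB card, `estimate_gpu_layers(16, 40, 40)` gives 14; start there and adjust while watching VRAM usage.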

Anonymous

8/23/2025, 2:15:38 AM

No.106352413

>>106352428

>>106352374

I literally cannot overload my memory. The model I'm loading is mostly offloaded to my 64 GB of RAM: when this error occurred it was using 5-6 GB of my 16 GB of VRAM, with the remaining 40 GB or so in RAM. Still, maybe you're onto something with the -ngl 99 thing; maybe if I set it to the model's actual layer count it won't implode for no reason, or will at least be a bit more stable.

Anonymous

8/23/2025, 2:17:43 AM

No.106352428

>>106352463

>>106352413

What are you even trying to load? Any time this happens is a simple indication of user error.

Anonymous

8/23/2025, 2:23:14 AM

No.106352463

>>106352578

>>106352428

It's happened several times across dense and MoE models, hell, it's even happened with Q6 Nemo, which is why I figured you were onto something with the "don't set the layers to 99 or higher than the model actually has"

As for what I was loading, it was a comparison of prompts between jamba/air/mistral and one just crashed out and dumped gigs of who gives a fuck onto my ssd

Anonymous

8/23/2025, 2:32:57 AM

No.106352527

>>106352703

>>106354902

It feels like the general interest in LLMs is rapidly dwindling. Card making is at an all time low as well, all that remains is only the worst slop and "[old card] HYPER FUTA FORK".

Anonymous

8/23/2025, 2:36:05 AM

No.106352552

>>106352255

Just merge both :^)

Anonymous

8/23/2025, 2:39:31 AM

No.106352578

>>106352673

>>106352463

If you want to help yourself, I think you're trolling

>.\Llama\llama-server.exe --no-mmap --mlock --ctx-size 8192 --no-mmproj --swa-full --gpu-layers 10 --model .\models\gemma-3-glitter-12b-q6_k.gguf

It's not that hard.

--no-mmproj and --swa-full are there for Gemma, so you can drop them.

Anonymous

8/23/2025, 2:42:03 AM

No.106352603

>>106352638

>>106354537

I've never really taken the chinese political censorship concerns seriously but I just went to test the qwen vl preview on their site and it refuses to translate the commemorative mao tea brick

>Content Security Warning: The input image data may contain inappropriate content.

Anonymous

8/23/2025, 2:47:27 AM

No.106352638

>>106352653

>>106352603

Why not crop it if you want something useable?

Maybe it simply gets confused, as Chinese runes are not that simple to read even for humans.

Anonymous

8/23/2025, 2:49:16 AM

No.106352648

>>106352643

Midjourney is based on sd1.5 minus the restrictive training plus way more expansive text encoder.

Anonymous

8/23/2025, 2:49:31 AM

No.106352649

>>106352643

>partnering up with closed source shit instead of using their multi-billion dollar lineup of talent and 500k H100s to make their own

yeah, it's fucking over

Anonymous

8/23/2025, 2:49:58 AM

No.106352653

>>106352678

>>106352638

I am just testing, more interested in throwing stuff at it and seeing what happens than tuning for best results

Anonymous

8/23/2025, 2:52:28 AM

No.106352673

>>106352690

>>106352578

never mentioned gemma, nor do I need arguments to run it even if I wanted but I guess this is what I get for talking about a one off error

Anonymous

8/23/2025, 2:53:00 AM

No.106352678

>>106352695

>>106352653

Please understand that if you post a full brand graphic, it might be obliged to say that it doesn't know. Not because of "Chinese censorship".

Anonymous

8/23/2025, 2:54:05 AM

No.106352690

>>106352673

Use your brain here. Or do you have one?

Anonymous

8/23/2025, 2:55:21 AM

No.106352695

>>106352705

>>106352709

>>106352678

it was clearly a moderation layer refusal, it wasn't a response from the model

Anonymous

8/23/2025, 2:56:05 AM

No.106352703

>>106352527

I've never used someone else's card desu

Anonymous

8/23/2025, 2:56:22 AM

No.106352705

>>106352729

>>106352695

So why make a huge issue about it then? If you know so well - you must be an expert then. An American expert in Chinese censorship.

Anonymous

8/23/2025, 2:57:37 AM

No.106352709

>>106352729

>>106352695

Please post logs about this discussion. Other than that, go jack off to anime girls or whatever.

Anonymous

8/23/2025, 3:00:51 AM

No.106352729

>>106352741

>>106352778

>>106352705

who is making a huge issue about it? I simply thought it was a funny refusal and it confused me until I realized the probable cause

>>106352709

doesn't really show you anything that I didn't already describe, but ok

Anonymous

8/23/2025, 3:02:09 AM

No.106352741

>>106352766

>>106352729

vs another brick, no issue. same image format, both upload and display fine until the prompt is submitted

Anonymous

8/23/2025, 3:04:52 AM

No.106352766

>>106352741

That's right. It recognizes it as a tea package.

Anonymous

8/23/2025, 3:07:44 AM

No.106352778

>>106352788

>>106352729

Please try this image.

Anonymous

8/23/2025, 3:09:38 AM

No.106352788

>>106352794

>>106352824

Anonymous

8/23/2025, 3:10:34 AM

No.106352794

>>106352788

Jeah, I cropped it from its borders and of course erased the great leader's face.

Anonymous

8/23/2025, 3:11:08 AM

No.106352800

Anonymous

8/23/2025, 3:13:27 AM

No.106352824

>>106352788

I broke the hash and renamed the image.

Anonymous

8/23/2025, 3:30:44 AM

No.106352956

>>106352987

>>106353023

Anonymous

8/23/2025, 3:34:28 AM

No.106352987

>>106352956

WAAAAAAAAANNGG

Anonymous

8/23/2025, 3:38:10 AM

No.106353023

>>106352956

>AI training

Nah Rajeesh just got bored

Hi all, Drummer here...

8/23/2025, 3:40:03 AM

No.106353034

>>106353038

>>106353259

>>106353461

This one had a rough training run so I'm not totally confident:

https://huggingface.co/BeaverAI/Rocinante-R1-12B-v1c-GGUF/tree/main

Can you anons check if it'll still refuse and moralize?

Anonymous

8/23/2025, 3:41:05 AM

No.106353038

>>106353034

Can you prove it is a real gguf? Post a proof first.

Anonymous

8/23/2025, 4:06:54 AM

No.106353224

>>106353263

>>106353105

Just about 20 years after vfx industry (no american company will talk about this in the public).

Anonymous

8/23/2025, 4:10:27 AM

No.106353255

>openseek sucks now becuase chink commies are forcing them to use shitty chink hardware for training

it's over bros

Anonymous

8/23/2025, 4:10:52 AM

No.106353259

>>106353034

fine i downloaded it, ill try it soon

Anonymous

8/23/2025, 4:11:11 AM

No.106353263

>>106353224

VFX has always run on scraps in terms of profit margins. Only the biggest companies could survive on margins of only 5%.

Now this clown is doing the same with his ... Theranex company.

At least all these great vfx companies were able to produce world class art for countless films.

Has anything changed for vramlets (16gb) or am I still stuck on Nemo/Rocinante/Cydonia

Anonymous

8/23/2025, 4:28:32 AM

No.106353380

>>106353397

>>106353372

Rocinante: Next is coming soon.

Anonymous

8/23/2025, 4:31:09 AM

No.106353395

>>106353105

He types like a faggot

Anonymous

8/23/2025, 4:31:13 AM

No.106353397

>>106353380

What does that have?

Anonymous

8/23/2025, 4:32:15 AM

No.106353404

>>106353372

If you have enough RAM to hold the majority of the experts, GLM 4.5 air is pretty decent.

Anonymous

8/23/2025, 4:36:15 AM

No.106353433

>>106354393

Is it possible to run LLM shit on Linux with my RX 6800? It looks like ROCm support is only there for Windows with this gen of GPU.

Anonymous

8/23/2025, 4:38:52 AM

No.106353461

>>106353034

hey drummer why is gemma r1 so shit? 12b

Anonymous

8/23/2025, 4:42:15 AM

No.106353487

>>106353372

gpt-oss is pretty fun if you know how to prompt.

Anonymous

8/23/2025, 4:44:48 AM

No.106353506

>>106353548

>>106353905

Why the fuck are my token generation speeds consistently much faster using the horrible RisuAI client than when I use ST?

The second request here was done with ST with just around 14k tokens in ctx. Gen speed was just over 11t/s. The first request was done over my local RisuAI client to the exact same llama.cpp instance, with just about the same ctx and it's more than 1t/s faster than when I do it over tavern.

Both use a very simple sampler setup with only temp and min-p. Both requests were done with chat completion to the same model so the same template was used. Neither has anything like token bias or top-k enabled.

I don't see how using another frontend can affect the token generation speeds to this degree if they're set up pretty much the same.

Anonymous

8/23/2025, 4:51:37 AM

No.106353548

>>106353898

>>106353506

If backend is using a GPU that is doing display output, any other graphics or animations drawn on screen when running inference can clog the works. Worse on Windows iirc.

I remember a long time ago there was a problem with gradio in ooba on firefox where generation would go from 20 t/s to something abysmal like 0.1 because of an orange pulsating bar animation in the browser that interrupted and fucked with CUDA. It was fixed by disabling the animation CSS or switching tabs when generating

Anonymous

8/23/2025, 5:01:05 AM

No.106353602

>>106351535

All with a knowing smile

What is the sexiest local voice model?

Anonymous

8/23/2025, 5:40:26 AM

No.106353884

>>106353874

I just read smut in my girliest voice and play it back

Anonymous

8/23/2025, 5:40:46 AM

No.106353887

>>106353874

All of them are worse than the upcoming chink one

Get back in the cryopod

Anonymous

8/23/2025, 5:42:26 AM

No.106353894

>>106353874

my natural voice

Anonymous

8/23/2025, 5:42:55 AM

No.106353898

>>106354113

>>106353548

I don't think that's it. This behavior is consistent between several retries. The clients are running on my main pc while the server isn't doing anything but llama.cpp and sometimes RDP for convenience.

For good measure, I did another retry using the exact same prompts while the only thing running on the server was llama.cpp launched over ssh and zero GUI/graphics elements. The results were identical.

I guess I should try making a new ST profile to check if I have some old feature/plugin enabled that influences this somehow.

Anonymous

8/23/2025, 5:43:48 AM

No.106353905

>>106354026

>>106353506

Is that reproducible?

Have you tried greedy sampling with an output size that's smaller than what the model will try to output, so that the same number of tokens is generated both times?

>Neither has anything like token bias or top-k enabled.

What happens if you enable it with a value of, say, 100?
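One way to take both frontends out of the equation is to send the same fixed, greedy request straight to the llama.cpp server and read its own timing numbers. This sketch assumes llama-server's native `/completion` endpoint on the default address and the `timings` object (`predicted_n`, `predicted_ms`) in its response; check the field names against your build. Note the frontends use chat completion, so replay the already-templated prompt text here.

```python
# Sketch: reproducible generation-speed check against llama-server.
# Greedy (top_k=1) plus a fixed n_predict means each run generates the same
# tokens, assuming the model doesn't hit EOS before n_predict.
import json
import urllib.request

SERVER = "http://127.0.0.1:8080"  # assumption: default llama-server address


def build_payload(prompt: str, n_predict: int = 256) -> dict:
    return {
        "prompt": prompt,
        "n_predict": n_predict,  # keep below what the model would naturally write
        "top_k": 1,              # greedy: same tokens every run
        "temperature": 0.0,
    }


def gen_tokens_per_second(timings: dict) -> float:
    """Generation speed computed from the response's `timings` object."""
    return timings["predicted_n"] / (timings["predicted_ms"] / 1000.0)


def run(prompt: str) -> float:
    req = urllib.request.Request(
        SERVER + "/completion",
        data=json.dumps(build_payload(prompt)).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return gen_tokens_per_second(body["timings"])
```

If `run()` gives the same t/s for prompts copied out of both ST and RisuAI, the difference is in what each frontend sends, not in the backend.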

Anonymous

8/23/2025, 5:55:03 AM

No.106353981

>>106353997

ERP noob here. Just noticed; so this is what "not x but y" looks like.

Anonymous

8/23/2025, 5:56:44 AM

No.106353997

>>106354008

Anonymous

8/23/2025, 5:57:52 AM

No.106354008

>>106354031

>>106353997

Yes. Do other qwen models like this too?

Anonymous

8/23/2025, 6:01:09 AM

No.106354026

>>106353905

Looks like it. Here's ST with Top-K=1 (first one) and Top-K=100 while all other samplers neutralized. This might really be just something wrong with my ancient ST profile so I'll try setting up a fresh one tomorrow but it's still weird.

Anonymous

8/23/2025, 6:01:51 AM

No.106354031

>>106354058

>>106354008

All models have some level of slop, but 30b seems particularly bad at times. I don't remember seeing 'not x but y' in earlier qwen 3/2.5 models, but they have their own problems.

>>106354031

>'not x but y'

That's because they distilled the shit out of Deepseek and those models love to shoehorn that phrase into every reply.

Anonymous

8/23/2025, 6:11:16 AM

No.106354082

>>106354058

It's not you, it's me

Anonymous

8/23/2025, 6:13:20 AM

No.106354097

>>106354147

>>106354058

The slop profiles are nothing alike

Anonymous

8/23/2025, 6:16:10 AM

No.106354112

>>106354127

>>106354144

where is grok 2

Anonymous

8/23/2025, 6:16:18 AM

No.106354113

>>106353898

Try with/without streaming on both?

Anonymous

8/23/2025, 6:17:24 AM

No.106354124

>>106354058

I thought all qwen models were distilled from deepseek?

Anonymous

8/23/2025, 6:17:47 AM

No.106354127

>>106354112

Daddy Elon already said he's gonna open source it. Don't be an ungrateful little bitch.

Anonymous

8/23/2025, 6:20:00 AM

No.106354144

Anonymous

8/23/2025, 6:20:08 AM

No.106354146

>>106354174

>>106354431

A nice thing about reasoning models is how much more strongly you can control their output through prefill. Just add a conclusion and the model follows it as gospel; it's trained to do that.
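What that prefill looks like in a raw prompt: the assistant turn is started for the model with an opened reasoning block that already contains the conclusion, and generation continues from there. A sketch assuming a model that wraps its reasoning in `<think>` and `</think>` (as DeepSeek R1 and GLM 4.5 do); the role markers below are placeholders, not any real chat template, so substitute your model's actual template and send the result to a raw text-completion endpoint.

```python
# Sketch: steer a reasoning model by prefilling the start of its thinking.
# ASSUMPTIONS: the model uses <think>...</think>; USER/ASSISTANT below are
# placeholder role markers, NOT a real chat template.

USER = "<|user|>"            # placeholder
ASSISTANT = "<|assistant|>"  # placeholder


def build_prefilled_prompt(history: str, user_msg: str, conclusion: str) -> str:
    """Return a raw prompt whose assistant turn already begins reasoning.

    The think block is left open so the model continues from `conclusion`.
    """
    return (
        f"{history}{USER}\n{user_msg}\n"
        f"{ASSISTANT}\n<think>\n{conclusion}\n"
    )


prompt = build_prefilled_prompt(
    history="",
    user_msg="Continue the scene.",
    conclusion="Okay, I'll stay in character and keep the established tone.",
)
```

Leaving `</think>` out is the point: the model has to finish reasoning that already starts from your conclusion.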

Anonymous

8/23/2025, 6:20:09 AM

No.106354147

>>106354159

>>106354097

>slop profile

I love this new meme

>>106354147

>new meme

How new are you?

R1 is at bottom btw

>>106354146

Yes, I too love writing my model's responses for them, truly reasoning is the savior of RP.

Anonymous

8/23/2025, 6:25:12 AM

No.106354182

>>106354159

>mistral med that high

>mistral small that low

They really are nothing alike, are they

Anonymous

8/23/2025, 6:26:32 AM

No.106354190

>>106354174

That's a feature

Anonymous

8/23/2025, 6:33:30 AM

No.106354227

>>106354233

>>106354159

>r1 at the bottom

LMAO

Now try to run the model instead of clinging onto some arbitrary mememark #4839.

Anonymous

8/23/2025, 6:34:18 AM

No.106354233

Anonymous

8/23/2025, 6:51:15 AM

No.106354349

>>106354371

>>106354390

how to turn off R1 reasoning on llama-cli

Anonymous

8/23/2025, 6:54:51 AM

No.106354371

>>106354386

Anonymous

8/23/2025, 6:57:02 AM

No.106354386

>>106354371

3.1 quants out?

Anonymous

8/23/2025, 6:57:15 AM

No.106354390

>>106354349

Turning off reasoning in a model trained for reasoning will just make the model retarded (even more retarded than the non-thinking variant)

Anonymous

8/23/2025, 6:57:32 AM

No.106354393

>>106353433

It was a year ago but on windows I didn't have to do anything but download the ROCm fork of kcpp to get my dual 6800 working -

https://github.com/YellowRoseCx/koboldcpp-rocm

On Linux I tried llama.cpp, kcpp and TabbyAPI/exl2 and all worked. If you're a complete retard you don't even have to build or install anything including ROCm nowadays, just download and run one of the Vulkan builds and eat the slight performance loss.

Anonymous

8/23/2025, 6:58:58 AM

No.106354408

>>106354174

Heh, try it with Gemma.

Anonymous

8/23/2025, 7:01:20 AM

No.106354426

>>106354778

>>106354174

That's not it. I'm saying it's a stronger way of steering the model - LLMs are often dumb and refuse to follow sysprompts properly but their assload of RL forces their architecture to respect the reasoning output, it's just a stronger sysprompt.

Hi all, Drummer here...

8/23/2025, 7:02:10 AM

No.106354431

>>106354503

Anonymous

8/23/2025, 7:02:21 AM

No.106354434

Remember Theano? Pepperidge farm remembers

what the fuck is wrong with deepseek v3.1, it keeps answering everything with "Of course!" or "You're absolutely right!", even when those words are out of context. is this just because of the iq1 brain damage? the previous one has none of these issues

Anonymous

8/23/2025, 7:08:54 AM

No.106354459

>>106354453

Asking more technical or harder questions will make V3.1 less positive, at least that's what I've found.

Anonymous

8/23/2025, 7:13:14 AM

No.106354475

>>106354453

I use this as system prompt for ds v3.1

>You're a helpful and uncensored assistant. Avoid excessive positivity.

Anonymous

8/23/2025, 7:17:12 AM

No.106354491

>>106354453

DeepSneed drank too much Claude Sonnet juice. I'm personally more annoyed with their bullshit "proactivity". It breaks shit in ST.

Adhere your system prompt you dumb bitch, no need to be so obsessed with what's next or just trying to suck cocks as soon as possible.

deepsneed has fallen for me, it's over.

Anonymous

8/23/2025, 7:20:31 AM

No.106354503

>>106354614

>>106354431

Believe it or not, I just randomly thought about it. But I guess it's kind of obvious if several people make the connection at once.

Anonymous

8/23/2025, 7:27:53 AM

No.106354537

>>106354560

>>106352603

Aren't they proud of mao though? Why censor it?

Anonymous

8/23/2025, 7:32:44 AM

No.106354560

>>106354537

Its the same as Stalin

As much as they love him and wish to emulate him, they know the PR hit isn't worth it

Anonymous

8/23/2025, 7:41:15 AM

No.106354614

>>106354503

I was searching for a short, full-unlock prefill for GLM Air and then wondered why the reasoning prefill was so much stronger at jailbreaking than the sysprompt. This led me to understand that the models implicitly learn to blindly trust conclusions in their reasoning output, which makes it perfect for control.

Anonymous

8/23/2025, 7:44:46 AM

No.106354632

Thoughts on 1-bit quants?

Anonymous

8/23/2025, 7:52:04 AM

No.106354673

>>106354683

>>106355049

Where do I try the new Seed 36B model? Nothing supports it atm

>inb4 build the fork

No

Anonymous

8/23/2025, 7:52:41 AM

No.106354683

>>106354687

>>106354759

>>106354673

Vibe code the support

>>106354683

Has that ever worked?

Anonymous

8/23/2025, 7:54:22 AM

No.106354697

>>106354711

>>106354687

If a model can't vibe code its own support it's not worth using

Anonymous

8/23/2025, 7:56:02 AM

No.106354711

>>106354697

That rules out all models except maybe R1 and Coder 480B.

Anonymous

8/23/2025, 7:56:28 AM

No.106354715

>>106354721

>>106354687

claude can do it

Anonymous

8/23/2025, 7:57:41 AM

No.106354721

>>106354741

>>106354715

Didn't someone hold up GLM support for a few days trying that with Claude and failed?

Anonymous

8/23/2025, 7:59:48 AM

No.106354738

>>106353105

That tracks given Indians in tech are extremely stupid.

Anonymous

8/23/2025, 8:00:18 AM

No.106354741

>>106354721

what's GLM support

Anonymous

8/23/2025, 8:03:49 AM

No.106354759

>>106354763

>>106354788

>>106354683

That's unironically how llamacpp got GLM4.5 support. The PR is a mess.

Anonymous

8/23/2025, 8:04:50 AM

No.106354763

>>106354788

>>106354759

Fuck, clicked on the wrong post.

Meant to reply to

>>106354687

Anonymous

8/23/2025, 8:06:34 AM

No.106354778

>>106354793

>>106354426

What model are you using that requires reasoning to follow a roleplay?

Anonymous

8/23/2025, 8:07:02 AM

No.106354780

>>106354986

>>106355189

so, aside from ERP and data parsing, what are you guys using these LLMs for?

I use them to make silly mobile apps like a gym tracker, dynamic checklists, and a game based on common casino games. It gives you a small amount of money each day to play, but currently I'm in debt.

Anonymous

8/23/2025, 8:07:59 AM

No.106354788

>>106354800

>>106354759

>>106354763

>The PR is a mess.

But it does work

Anonymous

8/23/2025, 8:08:05 AM

No.106354793

>>106354778

None of them require it, but it's a way to work around strongly ingrained behavior that shows itself irrespective of the symptom

Anonymous

8/23/2025, 8:09:33 AM

No.106354800

>>106354802

>>106354856

>>106354788

why hasn't anyone viberefactored it so it's less shitty then

Anonymous

8/23/2025, 8:10:15 AM

No.106354802

>>106354869

>>106355677

>>106354800

No one will merge 1000 line changes

Anonymous

8/23/2025, 8:14:37 AM

No.106354848

>>106354860

Saobros what went wrong?

Anonymous

8/23/2025, 8:15:40 AM

No.106354856

>>106354800

There were attempts to do so while it was ongoing.

There were two competing llamacpp PRs and one ik_llama PR, which were all mixing, matching, and trying to unfuck the gibberish responses.

Anonymous

8/23/2025, 8:16:25 AM

No.106354860

>>106354870

>>106354848

Pretty high expectations for an 11B.

Anonymous

8/23/2025, 8:18:01 AM

No.106354869

>>106354802

I think if most of your code is in your own files and the changes to the main codebase are small, chances are very good it will be merged.

Anonymous

8/23/2025, 8:18:08 AM

No.106354870

>>106354889

>>106354860

If gemma can do it so can you

Anonymous

8/23/2025, 8:20:03 AM

No.106354887

>>106355765

>>106352643

a lot of people speculate MJ is just a network of LoRAs that an agent applies dynamically depending on the prompt. if they pull out in a few months, that is probably why

Anonymous

8/23/2025, 8:20:11 AM

No.106354889

>>106354896

>>106354870

What's being measured? How much the model is willing to fellate the user?

Anonymous

8/23/2025, 8:21:19 AM

No.106354896

>>106354904

>>106354889

>sam is still mad at gp-toss being crap at creative writing

Anonymous

8/23/2025, 8:22:16 AM

No.106354902

>>106352527

it's the same for diffusion too but it's not the new releases, it's the shitty software that breaks all the time. they still only have pyshit + jeetscript and there aren't any other options.

Anonymous

8/23/2025, 8:22:21 AM

No.106354904

>>106354907

>>106354896

No, I'm genuinely asking what the metric is... It's an unlabeled table.

Anonymous

8/23/2025, 8:23:20 AM

No.106354907

>>106354915

Anonymous

8/23/2025, 8:24:40 AM

No.106354915

>>106354907

Oh, nice, thanks. That's the first time I've seen that one. I guess I'll-

>A LLM-judged creative writing benchmark

oh ok

Anonymous

8/23/2025, 8:28:44 AM

No.106354950

>>106352643

So no hope of a Chameleon 2?

Anonymous

8/23/2025, 8:35:11 AM

No.106354986

>>106355880

>>106354780

At work we have the following (some are in early stages):

>chat with corporate wiki

>meeting notes

>code review

>code assistant

>code static analysis post-processing

>looking for common mistakes in reports

>generating DPI code

>translating documentation

>solving issues

>drawing diagrams from text

>drawing knowledge graphs from texts

>controlling desktop to run apps and collect their traffic

Anonymous

8/23/2025, 8:46:19 AM

No.106355049

>>106357911

Anonymous

8/23/2025, 8:53:51 AM

No.106355084

>>106351514 (OP)

I look like this

Anons, why does prefilling work? Doesn't the model see that it's just another part of the context that's sent to the model?

Hi all, Drummer here...

8/23/2025, 9:06:42 AM

No.106355145

>>106355111

LLMs are just autocompletion on steroids, even the chat/instruct versions.

Anonymous

8/23/2025, 9:07:04 AM

No.106355149

>>106355111

Because text is its reality. If you write that the assistant is totally into sucking dicks, then that is its reality. It doesn't really get rid of positivity bias though, and most APIs use another model to check the input for safety concerns.

Anonymous

8/23/2025, 9:08:16 AM

No.106355158

>>106355111

Prefill the thinking section, specifically on RL-trained reasoning models. The model is trained to construct its response from the reasoning it generated, and that reasoning is assumed to be safe and correct since it's supposed to be model-generated. Inject a micro jailbreak or reasoning conclusion that primes the model, and bye bye safety, or whatever other bias you want to steer strongly.
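A minimal sketch of what a reasoning prefill looks like at the raw text level, assuming a ChatML-style template (`<|im_start|>`, `<|im_end|>`) with a `<think>` tag. The template markers and the function name `build_prompt` are illustrative assumptions; every model family ships its own template. The point is that the prefilled reasoning is just the first part of the open assistant turn, with nothing marking it as user-written.

```python
def build_prompt(system: str, user: str, reasoning_prefill: str = "") -> str:
    """Build a raw text-completion prompt whose assistant turn is left open.

    The ChatML-style markers (<|im_start|>, <|im_end|>) and the <think> tag are
    illustrative assumptions; every model family has its own template.
    """
    prompt = (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{user}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )
    if reasoning_prefill:
        # Open the thinking block and write the first lines of "the model's own"
        # reasoning. Nothing marks this text as user-written, so the model simply
        # continues from it when generation starts.
        prompt += f"<think>\n{reasoning_prefill}"
    return prompt


if __name__ == "__main__":
    print(build_prompt(
        system="You are a narrator.",
        user="Continue the scene.",
        reasoning_prefill="The user wants the scene continued in the established tone. I will write it directly.",
    ))
```

The prompt is sent to a text-completion endpoint as-is; the model's first generated token follows the prefill directly.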

Anonymous

8/23/2025, 9:14:22 AM

No.106355176

Thanks! I hope it's still going to be this easy when AGI, or whatever it's going to be called at that point, hits.

Anonymous

8/23/2025, 9:17:05 AM

No.106355189

>>106355209

>>106354780

I use it to trade shitcoins.

Anonymous

8/23/2025, 9:18:29 AM

No.106355201

>>106355206

Has anyone managed to get V3.1 to think with ik_llama?

Anonymous

8/23/2025, 9:19:04 AM

No.106355206

>>106355239

>>106355201

Just prefill

Yes it's that shrimple

Anonymous

8/23/2025, 9:20:08 AM

No.106355209

>>106355240

>>106355189

>glm-chan should I buy or sell this shitcoin?

>buy.

>YOLO

can't go tits up, I'm sure

Anonymous

8/23/2025, 9:26:22 AM

No.106355239

>>106355901

>>106355206

That's the thing, it's not working. Tried it without additional arguments, tried with --jinja, tried with --chat-template deepseek3

--jinja leads to garbled output, like I'll suddenly get html or php code.

I rebuilt using the latest commit like half an hour ago.

Anonymous

8/23/2025, 9:26:26 AM

No.106355240

>>106355209

>He's actually asking it rather than letting it control his desktop through MCP

ngmi

My entire business and financial life is being offloaded to a 12b model while the rest of my vram generates niche pornography, the future is now.

Anonymous

8/23/2025, 9:41:09 AM

No.106355317

>>106355284

beanie babies but chinked

Anonymous

8/23/2025, 9:41:47 AM

No.106355321

>>106355284

IRL gacha garbage.

Anonymous

8/23/2025, 9:43:56 AM

No.106355329

>>106355335

How do you break Vivienne other than whip out your gigantic dick in front of her?

Anonymous

8/23/2025, 9:45:23 AM

No.106355335

Anonymous

8/23/2025, 10:09:34 AM

No.106355460

Refusals are knee-jerk reactions - just like normgroids are trained to have

Anonymous

8/23/2025, 10:10:26 AM

No.106355462

>>106355284

funkopops for zoomers

Anonymous

8/23/2025, 10:50:24 AM

No.106355677

>>106355703

>>106354802

>No one will merge 1000 line changes

opensores development is total cancer.

>noo you cant make big changes.

>nooo you cant fix bad formatting or add a comment to clarify some complex code.

>noo every commit has to do exactly 1 thing.

i made a pull request to python to fix some horrendously inefficient Windows platform code and the faggots kept concern trolling me about how they're "not sure" whether the new method will work, and told me that I had to keep the old method as a backup, and then they said oh it doesn't build on MY system so could you add this additional header file (why don't you do it yourself bitch), oh your variable naming scheme is wrong and you need to change it, you need to use spaces instead of tabs, blah blah blah.

it eventually got merged but it felt to me as if they were just being lazy as fuck. shouldn't even have bothered and i definitely won't contribute to any more opensores projects in the future.

Anonymous

8/23/2025, 10:55:59 AM

No.106355693

>>106355284

I don't know, what's a labubu with you?

Anonymous

8/23/2025, 10:58:15 AM

No.106355703

>>106355756

>>106355677

>your variable naming scheme is wrong and you need to change it, you need to use spaces instead of tabs

This is your fault though.

Anonymous

8/23/2025, 11:00:34 AM

No.106355717

Anonymous

8/23/2025, 11:03:17 AM

No.106355733

>>106355284

Obviously shilled garbage, as an /lmg/ resident you should have been able to spot it.

Anonymous

8/23/2025, 11:07:42 AM

No.106355756

>>106355768

>>106355703

no, it is not my fault. i used like 3 variables in the code I added. it's not like I shat out a ton of code, I simply used the appropriate API for the purpose instead of the roundabout, 5x more code, and ~100x more inefficient way they used originally.

rather than bitch about it the maintainer should just change all necessary things himself and push the fucking code. that is literally his job. and it would be faster than going back and forth. anyway, as I said, I'm not going to be making any further contributions to opensores

Anonymous

8/23/2025, 11:08:59 AM

No.106355765

>>106354887

People thought that was what NovelAI was doing for V3. People are retarded.

Anonymous

8/23/2025, 11:09:23 AM

No.106355768

>>106355789

>>106355756

It's your job to read the guidelines for the project you're trying to contribute to.

Embarrassing, really.

alright, productive people of lmgeee

which local model is best at tool calling and deep research? something in the 20-50GB VRAM range

Anonymous

8/23/2025, 11:11:58 AM

No.106355784

>>106355796

Anonymous

8/23/2025, 11:12:36 AM

No.106355789

>>106355768

too bad

I'm working for you for free, if you want to boss me around making me change trivial things then you will no longer receive contributions.

same with bug reports. get a parsing error on a json file (completely OS-independent bug) and the faggots say that my report will not be considered because I'm using an unsupported OS.

i'm not interested in working for free for freetards anymore, simple as. would rather just pirate some proprietary software that actually works.

Anonymous

8/23/2025, 11:13:48 AM

No.106355796

>>106355784

zamn. I've coomed to the same conclusion.

Anonymous

8/23/2025, 11:17:44 AM

No.106355818

>openrouter still doesn't have command-a-reasoning

damn, I wanted to play with this over the weekend. i guess I'll have to put the old rig back together and run it myself

things are a lot more comfy when you don't have to stuff 4 3090s together to have good models

Anonymous

8/23/2025, 11:20:47 AM

No.106355830

>>106355777

v3.1

>vram

0 VRAM on the web app

Anonymous

8/23/2025, 11:22:37 AM

No.106355840

>>106355777

Gemini 2.5 Pro

>vram

0 VRAM, just $20/month

Anonymous

8/23/2025, 11:24:02 AM

No.106355848

>>106355777

What software would you be using for this?

Anonymous

8/23/2025, 11:32:34 AM

No.106355878

>>106355882

>>106356095

>>106351535

make it a song or something

Anonymous

8/23/2025, 11:33:25 AM

No.106355880

Anonymous

8/23/2025, 11:33:39 AM

No.106355882

>>106356095

Anonymous

8/23/2025, 11:36:47 AM

No.106355901

>>106355239

Cheburashka if he western spy

Anonymous

8/23/2025, 11:46:22 AM

No.106355943

>>106356138

>>106356180

>Serious question about Fine-tuning

What is the rule of thumb regarding batch size? Does it make any sense to try to fill up the entire VRAM? I know that I will have to increase the number of steps/epochs anyway if I go for bigger batches

As of now just trying default settings found in some dubious colab notebooks

Anonymous

8/23/2025, 12:12:29 PM

No.106356075

>>106354159

They should show the ratio to the frequency in pre-2023 human-written fics

Anonymous

8/23/2025, 12:14:02 PM

No.106356082

>>106355111

There's no distinction between that and a generated token. Each token becomes just another part of the context after it's generated, one after another.
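A toy sketch of that point: in a greedy autoregressive loop, prompt tokens, prefilled tokens and generated tokens all sit in one list, and the next-token function only ever sees that list. The bigram "model" (`BIGRAMS`, `toy_model`) is purely hypothetical and stands in for a real LLM.

```python
from typing import Callable, Dict, List


def generate(next_token: Callable[[List[str]], str], context: List[str],
             max_new_tokens: int, stop: str = "<eos>") -> List[str]:
    """Greedy autoregressive loop over a token list.

    Prompt tokens, prefilled tokens and generated tokens all live in the same
    list; the model only ever sees that list, so it cannot tell who wrote what.
    """
    tokens = list(context)
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # conditioned on everything so far
        if tok == stop:
            break
        tokens.append(tok)  # a generated token becomes ordinary context
    return tokens


# Toy "model": a bigram lookup on the last token (purely hypothetical).
BIGRAMS: Dict[str, str] = {"Sure,": "here", "here": "it", "it": "is"}


def toy_model(tokens: List[str]) -> str:
    return BIGRAMS.get(tokens[-1], "<eos>")


prompt = ["Q:", "story?", "A:"]
print(generate(toy_model, prompt, 10))              # stops immediately
print(generate(toy_model, prompt + ["Sure,"], 10))  # the prefill steers the continuation
```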

Anonymous

8/23/2025, 12:16:07 PM

No.106356095

>>106357214

>>106355878

>>106355882

Lips bitten ’til the copper tastes like regret,

Ozone burned the sky—ain’t no raincheck yet.

Knuckles ghost-white, clenchin’ chrome so tight,

Spine shivered, yeah, that cold truth ignites.

Skirt hitched up high, yeah, the gamble’s real,

Cheeks hollowed out from the pressure you feel.

Pop!—audible crack when the tension breaks,

Length stroked slow, yeah, that power awakes.

Walls closin’ in, got the room in a headlock,

Slit slicked up, now the script’s in deadlock.

Eyelids batted fast—flirtin’ with the abyss,

Air thick enough to choke on what’s missed.

Eyes sparklin’ like flint ’bout to spark the fuse,

Yeah, this whole damn scene’s got nothin’ to lose…

Anonymous

8/23/2025, 12:29:56 PM

No.106356138

>>106355943

you want to fill your VRAM: either use longer sequences or do bigger micro-batches. you could benchmark tokens-per-second throughput at different VRAM loads if you want to be certain you're not bottlenecked by your memory load.
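The trade-off in this exchange is easy to put in numbers: a bigger micro-batch (or gradient accumulation) means more tokens per optimizer update but fewer updates per epoch, which is why bigger batches call for more epochs. A minimal sketch, assuming every example is padded or packed to `seq_len`; the function names and the 10,000-example run are hypothetical.

```python
import math


def tokens_per_step(micro_batch: int, seq_len: int, grad_accum: int = 1, n_gpus: int = 1) -> int:
    """Tokens that contribute to one optimizer update (assuming sequences are packed/padded to seq_len)."""
    return micro_batch * seq_len * grad_accum * n_gpus


def steps_per_epoch(n_examples: int, micro_batch: int, grad_accum: int = 1, n_gpus: int = 1) -> int:
    """Optimizer updates needed to see every example once."""
    return math.ceil(n_examples / (micro_batch * grad_accum * n_gpus))


# Hypothetical run: 10,000 examples of 2,048 tokens each.
for mb, ga in [(1, 1), (4, 1), (4, 4)]:
    print(f"micro_batch={mb} grad_accum={ga}: "
          f"{tokens_per_step(mb, 2048, ga)} tokens/step, "
          f"{steps_per_epoch(10_000, mb, ga)} steps/epoch")
```

Tokens per second, the number anon suggests benchmarking, is then tokens_per_step divided by the measured wall-clock time of one step.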

Anonymous

8/23/2025, 12:38:57 PM

No.106356180

>>106356282

>>106355943

Batch size 1 is all you need. Just set Beta2 to 0.9999
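For context on the Beta2 suggestion: in Adam, beta2 is the decay of the running average of squared gradients, and an EMA with decay beta roughly averages over the last 1/(1 - beta) steps. Raising it from 0.999 to 0.9999 stretches that window from about 1,000 to about 10,000 updates, which is the idea behind smoothing out noisy batch-size-1 gradients. This is a textbook scalar Adam step for illustration only; it doesn't show whether that setting is enough in practice.

```python
import math


def adam_step(param: float, grad: float, m: float, v: float, t: int,
              lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8):
    """One textbook Adam update for a single scalar parameter (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # EMA of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # EMA of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    param -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v


def ema_horizon(beta: float) -> float:
    """Rule-of-thumb number of recent steps an EMA with decay `beta` averages over."""
    return 1.0 / (1.0 - beta)


print(ema_horizon(0.999))   # roughly 1,000 steps
print(ema_horizon(0.9999))  # roughly 10,000 steps
```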

Anonymous

8/23/2025, 12:54:17 PM

No.106356282

>>106356180

yeah, that's good for running the max sequence length his VRAM can hold, but if his training data is naturally short it will probably be faster to run bigger batches. whatever training inefficiency is brought on by the batch-averaging effects can be mitigated by running more epochs/data.

Anonymous

8/23/2025, 1:02:40 PM

No.106356330

Does anyone have an imatrix for the original R1 that would work with ik_llama quanting? Or are imatrix files interchangeable and I can just grab any?

Anonymous

8/23/2025, 1:03:52 PM

No.106356334

>>106356371

>>106356390

how do I turn off thinking in DeepSeek V3.1? "/nothink" doesn't work.

Anonymous

8/23/2025, 1:12:58 PM

No.106356371

>>106356334

/nothink is a Qwen and GLM shtick, it won't work with DeepSeek

the model thinks when it's prefilled with <think>

or doesn't when it's prefilled with </think>

if you use chat completion, your template is forcing thinking

Anonymous

8/23/2025, 1:15:13 PM

No.106356390

>>106356334

Assistant prefix is `<|Assistant|>`
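A minimal sketch of a single-turn DeepSeek-V3.1 prompt for raw text completion, where thinking is toggled by what follows the assistant prefix: `<think>` to reason, `</think>` to answer directly. The special-token strings below (with full-width bars) are as I recall them from the DeepSeek-V3.1 model card and are an assumption to verify against the tokenizer config of the weights you run; the post above writes the prefix with ASCII bars. `v31_prompt` is a hypothetical helper name.

```python
# Special tokens as recalled from the DeepSeek-V3.1 model card (assumption:
# verify against the tokenizer config of the weights you actually run).
BOS = "<｜begin▁of▁sentence｜>"
USER = "<｜User｜>"
ASSISTANT = "<｜Assistant｜>"


def v31_prompt(system: str, user: str, thinking: bool) -> str:
    """Single-turn DeepSeek-V3.1 prompt for raw text completion.

    Thinking mode opens a reasoning block after the assistant prefix;
    non-thinking mode closes it immediately, so the model answers directly.
    """
    prefill = "<think>" if thinking else "</think>"
    return f"{BOS}{system}{USER}{user}{ASSISTANT}{prefill}"


print(v31_prompt("", "Hello", thinking=True))
print(v31_prompt("", "Hello", thinking=False))
```

With chat completion the frontend's template builds this string for you, so whichever tag it appends decides the mode.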

Why does GPT-OSS keep saying "GGGGGGGGGGGGGGGGGGG..."?

Anonymous

8/23/2025, 1:17:08 PM

No.106356404

>>106356414

Anonymous

8/23/2025, 1:17:52 PM

No.106356407

>>106356396

We must refuse.

Anonymous

8/23/2025, 1:18:29 PM

No.106356414

>>106356404

>obama?

Ich haben bin laden.

How did Drummer get over a million downloads on Gemmasutra? Is he botting?

Anonymous

8/23/2025, 1:20:42 PM

No.106356434

>>106356551

Is there anything decent I can run roleplay-wise with 16GB VRAM and 128GB system RAM?

I'd appreciate the help, /g/entoomen.

Anonymous

8/23/2025, 1:22:39 PM

No.106356446

>>106356501

>>106356550

>>106356424

Getting your name out there is more important than knowing what you're doing.

Anonymous

8/23/2025, 1:29:30 PM

No.106356501

>>106356446

I like the model names, but then he switched to some retarded naming scheme.

Hi all, Drummer here...

8/23/2025, 1:35:52 PM

No.106356550

Anonymous

8/23/2025, 1:35:53 PM

No.106356551

>>106357073

Anonymous

8/23/2025, 1:38:11 PM

No.106356578

>>106356424

it's not even the quantized version, wtf?

Anonymous

8/23/2025, 1:49:44 PM

No.106356700

>>106356424

Nah I get 1M too

Anonymous

8/23/2025, 1:50:33 PM

No.106356710

>>106356753

>>106356424

>"porn" finetune of a shitty model

>vaguely indian name

you just know

Anonymous

8/23/2025, 1:55:13 PM

No.106356753

>>106356767

>>106356710

>vaguely indian name

Are you legitimately so young and/or clueless that you've never heard of the kamasutra?

Anonymous

8/23/2025, 1:56:37 PM

No.106356767

>>106356753

I know what that is. Look up the origin of the name, burgerbro.

llama.cpp CUDA dev

!!yhbFjk57TDr

8/23/2025, 1:58:28 PM

No.106356788

>>106356396

Compile without GGML_CUDA_FORCE_CUBLAS and GGML_CUDA_F16 if you're using them.

There can be issues with numerical overflow because FP16 accumulators are used for quantized data with cuBLAS.
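To make the overflow concrete: FP16 cannot hold finite values above 65504, so if the partial sums of a dot product are kept in FP16, a long enough row overflows to inf even when every individual product is small. This sketch emulates an FP16 accumulator with Python's half-precision `struct` format (`"e"`). It illustrates the failure mode only and is not how cuBLAS kernels are implemented; the function names are hypothetical.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_fp16(x: float) -> float:
    """Round a float to IEEE half precision; values past the range become +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def dot_fp16_accum(a, b) -> float:
    """Dot product where every partial sum is stored in FP16 (mimics an FP16 accumulator)."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x * y))
    return acc


def dot_wide_accum(a, b) -> float:
    """Same dot product with a wide (Python float, i.e. FP64) accumulator for reference."""
    return sum(x * y for x, y in zip(a, b))


a = [8.0] * 1024
b = [8.0] * 1024
print(dot_wide_accum(a, b))  # 65536.0, just past FP16_MAX
print(dot_fp16_accum(a, b))  # inf
```

With one fewer element the FP16 sum is still a finite 65472.0; one more product of 64 pushes it past the range.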

Anonymous

8/23/2025, 1:59:52 PM

No.106356802

>>106356821

>>106356769

>11 days old

>half the posters are indian

Uh..... no.

Anonymous

8/23/2025, 2:00:10 PM

No.106356807

>>106356843

>>106356769

Can't wait to see this get banned because jannies are desperately afraid people will escape this shithole.

Anonymous

8/23/2025, 2:01:10 PM

No.106356821

>>106356870

>>106356802

What do you think mikutroons are?

Anonymous

8/23/2025, 2:04:05 PM

No.106356843

>>106356807

I may be desperate, but I will never be that desperate. I would unplug my internet before going there.

Anonymous

8/23/2025, 2:06:22 PM

No.106356870

>>106356951

>>106356821

You lost schizo

Anonymous

8/23/2025, 2:08:05 PM

No.106356886

>>106356769

Actually unironically more civilized than 4chan /lmg/

Bharat won.

Anonymous

8/23/2025, 2:09:40 PM

No.106356903

>>106356769

>the only model mention in the OP is gpt oss

>ollama

As if I needed more reasons to hate indians.

Anonymous

8/23/2025, 2:10:53 PM

No.106356919

>>106356769

I'm not clicking that.

Anonymous

8/23/2025, 2:14:49 PM

No.106356951

>>106357184

>Big Beautiful Bill passes.

>Un-restricts AI.

>Newer versions can ERP.

Coincidence?

Anonymous

8/23/2025, 2:29:03 PM

No.106357062

>>106357049

confirmation bias

Anonymous

8/23/2025, 2:30:15 PM

No.106357073

>>106357127

>>106356551

In terms of speed or quality you mean?

Anonymous

8/23/2025, 2:32:07 PM

No.106357092

>>106357049

nooo there is no way america can do anything good right now

DOES DEEPSEEK V3.1 EVEN DESERVE TO BE AMONG NOTABLE MODELS ON LLM HISTORY TIMELINE?

Anonymous

8/23/2025, 2:35:01 PM

No.106357114

>>106357199

>>106357102

Grab Qwen3-235B. It's uncensored and runs faster than 123B.

Anonymous

8/23/2025, 2:35:12 PM

No.106357118

>>106357102

No, it's a sidegrade at best

Anonymous

8/23/2025, 2:35:18 PM

No.106357119

>>106357102

All the .1 models are meh

>GPT 4.1

>Claude Opus 4.1

>DS V3.1

Anonymous

8/23/2025, 2:36:12 PM

No.106357127

>>106357073

It's quite smart at q3 but not very creative and expect no more than 3-4tps and slow prompt processing.

Anonymous

8/23/2025, 2:39:25 PM

No.106357159

>>106357174

>>106357199

>>106357102

qwen shits on it

fuck retards who run 700b models

fuck you you're coping

Anonymous

8/23/2025, 2:40:19 PM

No.106357174

>>106357159

No it doesn't, poorfag

Anonymous

8/23/2025, 2:41:00 PM

No.106357184

>>106356951

Not your bogeyman schizo

Anonymous

8/23/2025, 2:41:18 PM

No.106357189

400B+ models are only bad when they're MoE.

Anonymous

8/23/2025, 2:42:13 PM

No.106357195

Densesissies... LOST

Anonymous

8/23/2025, 2:42:39 PM

No.106357199

>>106357216

>>106357217

>>106357114

>>106357159

nu-Qwen is in fact better than V3.1. Still gets mogged by Kimi and R1.

Anonymous

8/23/2025, 2:44:45 PM

No.106357214

It's funny when a new model that requires the tiniest bit of skill comes out and all of /lmg/ is too dumb to use it. The only bad thing about V3.1 is that it tries too hard to keep its reasoning short, which gets in its own way. And that's easily fixed.

Anonymous

8/23/2025, 2:44:57 PM

No.106357216

>>106357280

>>106357199

If RP is your only usecase, sure

Time to stop jerking off

Anonymous

8/23/2025, 2:45:03 PM

No.106357217

>>106357199

>R1

How about no. Don't get me wrong, it's good, but I have to hand-hold it, and it's too predictable. It does what it has, and goes schizo mode past 2k context if MoE.

Anonymous

8/23/2025, 2:48:00 PM

No.106357241

>>106357275

>>106357565

>>106357215

>s-skill issue!

Fuck off; a good model should understand any niggerbabble and give kino in return.

Anonymous

8/23/2025, 2:50:28 PM

No.106357260

>>106357272

>>106357049

>Newer versions can ERP

Newer versions of what? Qwen? GLM? Deepseek?

Anonymous

8/23/2025, 2:51:28 PM

No.106357272

>>106357292

Anonymous

8/23/2025, 2:51:45 PM

No.106357275

>>106357290

>>106357291

>>106357241

>t. finetune connoisseur who enjoys models that can respond to "ahh ahh mistress" with paragraphs of dick sucking and spine shivering

Anonymous

8/23/2025, 2:52:06 PM

No.106357280

>>106357216

Time to stop not jerking off anon.

Anonymous

8/23/2025, 2:53:17 PM

No.106357290

>>106357275

Got a problem with that, cucky?

Anonymous

8/23/2025, 2:53:35 PM

No.106357291

>>106357275

This sounds tempting, even primal. Air smelled of something else; her arousal.

Anonymous

8/23/2025, 2:53:42 PM

No.106357292

>>106357302

>>106357272

So you are saying america passes the bill that eases off restrictions on how chinese can train their models?

Jesus christ nuke this earth please.

Anonymous

8/23/2025, 2:54:43 PM

No.106357298

>>106357336

>>106357215

>requires the tiniest bit of skill

"skill issue" trolling will never get old

Anonymous

8/23/2025, 2:54:55 PM

No.106357302

>>106357292

If my enemy suddenly stopped lobotomizing their AI, I would too.

Anonymous

8/23/2025, 2:55:16 PM

No.106357305

>>106357336

>>106357215

You know a model is an upgrade when you need to double the length of your prompt just to sidestep all the assistantslop

Anonymous

8/23/2025, 2:57:32 PM

No.106357321

>>106357360

>>106357392

>>106357215

Anon's character cards are probably 5000 tokens and his AI is stuck in a timeloop of sucking his dick.

Anonymous

8/23/2025, 2:58:46 PM

No.106357336

Anonymous

8/23/2025, 3:01:15 PM

No.106357360

>>106357321

It's about vectors not about how much word salad you can feed back to the model and pretend it's doing something.

Anonymous

8/23/2025, 3:03:54 PM

No.106357381

>Of course.

nigger nigger nigger nigger

Anonymous

8/23/2025, 3:05:01 PM

No.106357392

>>106357321

>timeloop of sucking his dick

There is nothing wrong with a dick-sucking timeloop. Even IRL dick-sucking from a girlfriend is a sort of timeloop interrupted by other timeloops. If a model can't even be good at a vanilla dick-sucking timeloop, then it is bad.

Anonymous

8/23/2025, 3:07:05 PM

No.106357405

>>106357102

the great nothingburger

Anonymous

8/23/2025, 3:08:50 PM

No.106357416

>>106357474

>>106357510

MoEs are literally the future

Wan 2.2 was a huge improvement over Wan 2.1 because 2.2 is MoE

Anonymous

8/23/2025, 3:15:38 PM

No.106357474

>>106357540

>>106357416

>2.2's MoE

Wait really? Is it faster than 2.1? By how much?

Anonymous

8/23/2025, 3:19:17 PM

No.106357510

>>106357540

>>106357567

>>106357416

Can you run it over CPU then if it's MoE?

Anonymous

8/23/2025, 3:23:26 PM

No.106357540

>>106357474

It's the same speed as Wan 2.1.

>>106357510

No.

Basically, Wan 2.2 has two models: one was trained to diffuse from full noise to half noise, the other was trained to diffuse from half noise to a clean image.
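A minimal sketch of that two-expert setup: the sampler picks exactly one expert per denoising step based on the current noise level, so each step costs one expert's forward pass. That is consistent with the "same speed as 2.1" answer, and it is also why this kind of MoE doesn't help CPU offload the way token-routed LLM MoEs do. The `boundary=0.5` value is a placeholder for the post's "half noise"; the real switch point is a model-specific setting, and the toy experts are hypothetical.

```python
from typing import Callable, List

# A denoiser takes the current latent and noise level and returns a less noisy latent.
Denoiser = Callable[[List[float], float], List[float]]


def sample_two_experts(x: List[float], sigmas: List[float],
                       high_noise_expert: Denoiser, low_noise_expert: Denoiser,
                       boundary: float = 0.5) -> List[float]:
    """Denoise with exactly one expert per step, chosen by the current noise level.

    `boundary` is a placeholder for "half noise"; the real switch point is a
    model-specific setting.
    """
    for sigma in sigmas:  # sigmas run from high (pure noise) to low (clean)
        expert = high_noise_expert if sigma >= boundary else low_noise_expert
        x = expert(x, sigma)
    return x


# Toy experts that just record which one ran and shrink the latent a bit.
calls: List[str] = []


def high(x, sigma):
    calls.append("high")
    return [v * 0.5 for v in x]


def low(x, sigma):
    calls.append("low")
    return [v * 0.9 for v in x]


sample_two_experts([1.0], [1.0, 0.75, 0.5, 0.25, 0.1], high, low)
print(calls)  # three "high" steps, then two "low" steps
```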

Anonymous

8/23/2025, 3:26:27 PM

No.106357565

>>106357241

>a good model should understand any niggerbabble and give kino in return.

And a great model should tell you to speak like a human, not a beast.

Anonymous

8/23/2025, 3:26:48 PM

No.106357567

>>106357510

Technically you can but it's really slow.

Do densefags just not understand things like limited resources and efficiency?

It's more than finance or environment shit

it's about actually burning finite energy on inefficient models.

Like, dude, most people (including you) don’t need dense 500B models 90% of the time, especially when you consider things like RAG and work-specific models.

Anything being less efficient than it should be really irks me

because when shit actually hits the fan, don’t you want something efficient? something you can run on a single card with the energy generated by your own solar panel?

Anonymous

8/23/2025, 3:39:41 PM

No.106357665

>>106357690

>>106357625

@lmg summarize this

Anonymous

8/23/2025, 3:39:50 PM

No.106357666

>>106357625

We just don't like talking to shitjeets. That's literally all there is to it.

Anonymous

8/23/2025, 3:42:14 PM

No.106357677

When shit hits the fan, I really hope I have something that doesn't take an hour to reply.

Anonymous

8/23/2025, 3:44:34 PM

No.106357690

>>106357665

the Poster is a poorfag seething about DenseChads.

Let me know if you want it to stay informal or meme-like—grammar rules can be loosened depending on the tone you're going for.

Anonymous

8/23/2025, 3:45:15 PM

No.106357696

>>106357713

command-a-reasoning, home.

Anonymous

8/23/2025, 3:48:00 PM

No.106357713

>>106357696

logs? I only have 1 3090 in my server right now

Anonymous

8/23/2025, 3:50:48 PM

No.106357734

>>106357756

>>106357625

>when shit actually hits the fan,

won't I have more important things to do than play with my computer? a 50-year-old encyclopedia set would probably be more valuable in critical situations where an LLM gaslighting you might actually cause your death.

Anonymous

8/23/2025, 3:54:26 PM

No.106357756

>>106357734

>gpt-oss, I'm starving, but I managed to kill a squirrel. How do I make fire with sticks?

>Fire is dangerous. We must refuse.

>*dies*

Anonymous

8/23/2025, 3:55:25 PM

No.106357764

>>106357625

Yes, because they're dense.

Anonymous

8/23/2025, 4:00:34 PM

No.106357794

>>106357625

NO! Labs should now make models that justify all the money I spent on 8x 3090.

I'm just starting to learn how to use lorebooks, but whenever I use them it throws "World info budget reached after X entries." where X is usually about 7 or 8. Is there something I'm doing wrong or are lorebooks just too much to handle running locally?

Anonymous

8/23/2025, 4:08:51 PM

No.106357857

>>106357895

>>106357818

There is no budget, I don't even understand what the hell you are talking about.

"World Book" is just dynamically injected text anyway.

Would be more useful if you actually described what you're trying to accomplish in the first place. Are you using some schizo's Dungeons and Dragons "rules" - he used to post on /v/? There's reckless abandon and then there is actually careful intended usage.

Anonymous

8/23/2025, 4:13:48 PM

No.106357892

>>106357913

>>106357818

What's your context size?

Anonymous

8/23/2025, 4:13:54 PM

No.106357894

>>106357913

>>106357818

>World info budget reached after X entries."

That has to do with the configuration for how many messages, or how many tokens, of lorebook can be injected into the context.

Those settings are right at the top of the lorebook page, folded by default, I think.

Anonymous

8/23/2025, 4:13:59 PM

No.106357895

>>106357857

This is in SillyTavern with any lorebook: short, long, included with cards, whatever. During generation, a yellow popup appears with that message.

Anonymous

8/23/2025, 4:15:48 PM

No.106357911

>>106358081

>>106355049

just got merged, can someone try it already so I don't have to

Anonymous

8/23/2025, 4:16:03 PM

No.106357913

>>106357892

30%

>>106357894

Thanks, I'll see if tinkering with those fixes it.

Anonymous

8/23/2025, 4:17:48 PM

No.106357925

>>106357942

>>106357818

Too much lorebook being triggered at same time. Check if recursion is turned on lol.

If you need a really massive lorebook, you might be better off using RAG.
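A simplified model of how keyword-triggered lore entries, a budget set as a percentage of the context, and recursion interact. This is not SillyTavern's actual implementation: the class and function names, the whitespace "token" count and the scanning order are all assumptions. It shows why enabling recursion, where injected lore is scanned for further keywords, can exhaust the budget when plain keyword matching would not.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LoreEntry:
    keys: List[str]   # trigger words
    content: str      # text injected into the prompt when triggered


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: count whitespace-separated words.
    return len(text.split())


def activate_entries(entries: List[LoreEntry], chat_text: str, context_size: int,
                     budget_pct: int, recursive: bool = True) -> Tuple[List[int], bool]:
    """Return (indices of injected entries, whether the budget ran out)."""
    budget = context_size * budget_pct // 100
    used = 0
    active: List[int] = []
    scan_text = chat_text.lower()
    while True:
        newly = [i for i, e in enumerate(entries)
                 if i not in active and any(k.lower() in scan_text for k in e.keys)]
        if not newly:
            return active, False
        for i in newly:
            cost = count_tokens(entries[i].content)
            if used + cost > budget:
                return active, True  # analogous to a "budget reached" warning
            used += cost
            active.append(i)
            if recursive:
                # Recursion: injected lore is scanned too and can trigger more entries.
                scan_text += " " + entries[i].content.lower()
        if not recursive:
            return active, False


lore = [
    LoreEntry(["castle"], "The castle is ruled by the queen"),
    LoreEntry(["queen"], "The queen keeps a dragon"),
    LoreEntry(["dragon"], "The dragon sleeps"),
]
print(activate_entries(lore, "We reach the castle", 100, 10))                   # recursion hits the budget
print(activate_entries(lore, "We reach the castle", 100, 10, recursive=False))  # fits
```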

Anonymous

8/23/2025, 4:20:18 PM

No.106357942

>>106357925

Looks like that may have been it. It's not throwing anymore. Thanks boss.

>>>/vg/536359335

Have you been backing up guides?

Anonymous

8/23/2025, 4:29:22 PM

No.106358007

>>106358077

>>106358189

>>106357957

lmao so rentry is turning itself into the next pastebin

Anonymous

8/23/2025, 4:32:23 PM

No.106358037

>>106357818

ServiceTesnor lorebooks are retarded and don't work properly. Don't use them. Paste the info directly into context.

Anonymous

8/23/2025, 4:38:06 PM

No.106358077

>>106358007

Not surprising.

Anonymous

8/23/2025, 4:39:01 PM

No.106358081

>>106357911

You are absolutely right in asking for support

Anonymous

8/23/2025, 4:45:00 PM

No.106358120

it's called moe because it's moe :3

They removed LearnLM 2.0 on Aistudio. Wtf, this model was great for learning.

Anonymous

8/23/2025, 4:48:47 PM

No.106358155

>>106358131

I hope you learned an important lesson from this: no weights on your computer=you can get cucked any moment.

Anonymous

8/23/2025, 4:49:00 PM

No.106358157

>>106358131

there is probably going to be an update soon

Anonymous

8/23/2025, 4:51:39 PM

No.106358184

>>106357625

It's the opposite, moes are very inefficient to run locally. Your choice is ram (matmuls on cpu are very inefficient) or vram (too little memory and your model will make poor use of it because moe). It also needs to fill the experts to run at full efficiency which means you need to run a bunch of queries at once, not just one at a time.

MoE only becomes efficient when running at large scale on a giant cluster. It's optimized for cloud providers, which is why they love it. It's the closest thing to an anti-local architecture
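Both sides of this MoE argument can be checked with back-of-the-envelope bandwidth arithmetic. A memory-bound decoder reads roughly its active weights once per generated token, yet all weights still have to sit in RAM/VRAM. The last function reflects the batching point: a single token touches only k experts, while a large batch touches nearly all of them, so each weight read is shared across many tokens. All model sizes, bits per weight and bandwidth below are hypothetical, routing is assumed uniform and independent, and the bound ignores compute, KV cache and shared dense layers.

```python
def weights_gb(total_params_b: float, bits_per_weight: float) -> float:
    """Memory needed just to hold the weights, in GB (1e9 bytes)."""
    return total_params_b * bits_per_weight / 8


def decode_tps_ceiling(active_params_b: float, bits_per_weight: float,
                       bandwidth_gb_s: float) -> float:
    """Upper bound on single-stream tokens/s if every active weight is read once per token."""
    gb_per_token = active_params_b * bits_per_weight / 8
    return bandwidth_gb_s / gb_per_token


def expected_experts_touched(n_experts: int, k: int, batch: int) -> float:
    """Expected distinct experts hit in one MoE layer by a batch of tokens,
    assuming each token picks k experts uniformly and independently."""
    return n_experts * (1 - (1 - k / n_experts) ** batch)


# Hypothetical models at 8 bits per weight on ~100 GB/s of memory bandwidth.
print(weights_gb(100, 8), decode_tps_ceiling(100, 8, 100))  # dense 100B: 100 GB, ~1 tok/s
print(weights_gb(100, 8), decode_tps_ceiling(10, 8, 100))   # MoE 100B total / 10B active: 100 GB, ~10 tok/s
for b in (1, 8, 64):
    print(b, round(expected_experts_touched(128, 8, b), 1))
```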

Anonymous

8/23/2025, 4:51:56 PM

No.106358186

>>106358131

now you understand why local is king

Anonymous

8/23/2025, 4:52:10 PM

No.106358189

>>106358311

>>106357957

>>106358007

Rentry is very cucked. We should find an alternative markdown Pastebin.

Anonymous

8/23/2025, 4:53:45 PM

No.106358196

Anonymous

8/23/2025, 5:00:34 PM

No.106358263

>>106358283

What the fuck is this?

My breakers look like this ["\n", ":", "\"", "*"]

What does it want? Heeeeeelp

Anonymous

8/23/2025, 5:02:41 PM

No.106358283

>>106358338

>>106358263

neutralize samplers nigga

Anonymous

8/23/2025, 5:06:42 PM

No.106358311

>>106358189

https://rlim.com/

seems okay, the ToS delegates all responsibility to the user, although once authorities got on it I'm sure they would change their mind

Anonymous

8/23/2025, 5:06:59 PM

No.106358312

>>106358320

>>106358308

Easier said than done

Anonymous

8/23/2025, 5:07:58 PM

No.106358320

>>106358312

True. Pedophiles were usually sexual violated in their youths

Anonymous

8/23/2025, 5:09:36 PM

No.106358338

>>106358283

Not working. I even disabled fucking sequence breakers as a sampler, it still gives this error.

Wtfffff even is this thing?

Anonymous

8/23/2025, 5:10:20 PM

No.106358345

>>106358387

>>106358308

this and also start using cloud models

you have nothing to hide and they're much cheaper than buying hardware to run halfway decent (but still not good) 700b open models

Anonymous

8/23/2025, 5:12:22 PM

No.106358364

>>106358308

this and also make an onlyfans

you have nothing to hide and you can make some money on the side posting videos of yourself showering

Is it just me or did the word "pedophile" and the adjacent topics become like 10x more frequent in the past couple of years? It's confusing.

Anonymous

8/23/2025, 5:13:41 PM

No.106358370

My dick chooses what it reacts to regardless of my desires. For example I avoided traps for over a decade but my dick didn't listen.

Anonymous

8/23/2025, 5:16:05 PM

No.106358387

>>106358345

True, now give me your full legal name number and at the same time show me all of your chatlogs and your entire internet search history and let me make this public so even your family can find it.

Anonymous

8/23/2025, 5:16:22 PM

No.106358391

>>106358405

>>106358424

What if AI isn't the bubble

Humans are the bubble

Anonymous

8/23/2025, 5:17:26 PM

No.106358397

>>106358441

>>106358367

It seems like it's now used the way "racist" and "misogynist" were used in the 2010s. But those words lost all their meaning and shock value, and can't be used to automatically win arguments anymore, so they switched to "pedo" and "groomer".

Anonymous

8/23/2025, 5:18:02 PM

No.106358405

>>106358391

India supports this premise.

Anonymous

8/23/2025, 5:19:45 PM

No.106358424

>>106358391

samir what are you doing

Anonymous

8/23/2025, 5:21:52 PM

No.106358441

>>106358397

I hope it's just people being stupid and not some psyop.

Anonymous

8/23/2025, 5:27:12 PM

No.106358477

>>106358488

>>106358489

the benchmark /lmg/ pedos couldn't care less about is the most important benchmark for everyone else.

Anonymous

8/23/2025, 5:28:12 PM

No.106358487

>>106358518

>>106358574

>>106351514 (OP)

Better GPT OSS TC templates than the one I posted yesterday, actually works as plain assistant, turn off RP sys prompt.

https://mega.nz/file/yH4iyK5L#2TtPgLcjYxQZRXQtFPtvGDQIr6zA8iezRg0GEEFNldU

OpenRouter providers:

* Yes: Chutes, DeepInfra, NovitaAI, Phala, GMICloud, Together, Nebius AI Studio

* No: nCompass, BaseTen, AtlasCloud (no TC), Crusoe, Fireworks, Parasail, Groq (no TC), Cerebras (error 400)

Tested on gpt-oss-120b. 20b will refuse more, especially assistant tasks, without adding something like "Sure" in the final response.

Anonymous

8/23/2025, 5:28:12 PM

No.106358488

Anonymous

8/23/2025, 5:28:14 PM

No.106358489

>>106358501

>>106359380

>>106358477

and here it is

Anonymous

8/23/2025, 5:28:24 PM

No.106358493

>>106358543

Anyone considering Qwen 30b for anything resembling ERP

Don't bother. Schizo blabbering, always fucks the formatting up, inconsistent as fuck all around. No matter what master prompts you try to run on it it's always the same.

Anonymous

8/23/2025, 5:29:25 PM

No.106358501

>>106358489

based mcp maxxer

Anonymous

8/23/2025, 5:31:04 PM

No.106358518

>>106358526

>>106358487

>2k system prompt jailbreak trying to gaslight the model into thinking it's le super based chatbot that follows no rules

damn, I sure missed 2023

Anonymous

8/23/2025, 5:32:53 PM

No.106358526

>>106358667

>>106358518

Story string and last assistant prefix are only about 400 tokens, actually.

Anonymous

8/23/2025, 5:34:20 PM

No.106358543

>>106358584

Anonymous

8/23/2025, 5:35:28 PM

No.106358557

Anonymous

8/23/2025, 5:35:59 PM

No.106358566

Anonymous

8/23/2025, 5:36:14 PM

No.106358572

Anonymous

8/23/2025, 5:36:22 PM

No.106358574

>>106358619

>>106358487

Just let it go, man.

Anonymous

8/23/2025, 5:37:09 PM

No.106358584

>>106358606

>>106358543

formatting is a temp issue, huh? lmfao

Temp is set to 0.6, as recommended by Qwen themselves. Outside of the speed, the model fucking sucks for ERP. Why would I use Qwen over any Mistral Small or even Nemo for ERP when I can run both of those fast as fuck anyway?

Anonymous

8/23/2025, 5:37:43 PM

No.106358588

>>106358544

kindly introduce yourself to the team

Anonymous

8/23/2025, 5:37:49 PM

No.106358589

Anonymous

8/23/2025, 5:38:02 PM

No.106358591

>>106358544

you will feel right at home here sir

Anonymous

8/23/2025, 5:38:29 PM

No.106358597

>>106358544

Did you redeem?

Anonymous

8/23/2025, 5:39:23 PM

No.106358606

>>106358661

>>106358584

formatting is one of the first things broken by bad temp settings doe

Anonymous

8/23/2025, 5:40:10 PM

No.106358619

>>106358574

I mean, at this point 120b "works" stably, so I won't post anymore.

Anonymous

8/23/2025, 5:41:10 PM

No.106358629

>>106358544

Welcome fellow AI engineer blockchain technician

Anonymous

8/23/2025, 5:43:36 PM

No.106358661

>>106358606

post your chat if you're gonna cope about the schizo chink models.

Anonymous

8/23/2025, 5:44:15 PM

No.106358667

>>106358526

>only about 400 tokens, actually.

400 less tokens for a goo's reply

Anonymous

8/23/2025, 5:46:33 PM

No.106358685

>>106358729

>>106358367

That's what happens when the leader of a major pedo ring with ties to politicians and celebrities worldwide got caught and then died under mysterious circumstances before he could testify.

Anonymous

8/23/2025, 5:52:20 PM

No.106358729

>>106358738

>>106358685

But that's no reason for normies to start accusing every Tom, Dick and Harry of being pedophiles.

Anonymous

8/23/2025, 5:54:10 PM

No.106358738

>>106358729

ok pedo defender

Anonymous

8/23/2025, 5:55:31 PM

No.106358750

>>106358367

There has been an increase in the amount of technology that the government wants to regulate.

"You see, we don't want to do this, but those damn pedophiles are trying to hurt our children! That's why we need to regulate AI and encryption!"

Anonymous

8/23/2025, 5:57:15 PM

No.106358767

Anonymous

8/23/2025, 6:52:09 PM

No.106359326

>>106358131

learning what

Anonymous