/ldg/ - Local Diffusion General

Anonymous

9/3/2025, 5:22:55 AM

No.106469514

>>106469555

Blessed thread of frenship

Anonymous

9/3/2025, 5:23:15 AM

No.106469516

>>106469555

They tell me this thread is blessed

neta is the future for anime start learning now

https://neta-lumina-style.tz03.xyz/

p ost. cardnon

9/3/2025, 5:29:15 AM

No.106469555

>>106469516

>>106469514

all of God's children are blessed by his grace

Anonymous

9/3/2025, 5:29:25 AM

No.106469558

>>106469701

Anonymous

9/3/2025, 5:29:51 AM

No.106469565

>>106469593

>>106469536

>.xyz

Oh yes, anon, this link is definitely trustworthy.

Anonymous

9/3/2025, 5:33:05 AM

No.106469593

Anonymous

9/3/2025, 5:36:00 AM

No.106469612

>>106469621

>normalfags calling other normalfags out as being too eager to use the word "clanker" as a slur

ai bros stay winning

Anonymous

9/3/2025, 5:37:41 AM

No.106469621

>>106469642

>>106469612

I didn't realize styxhexenhammer was this big

Anonymous

9/3/2025, 5:38:09 AM

No.106469624

is there any hope for local t2v? except for making schizo videos, local t2v is obsolete. only local i2v is excellent for serious things

Anonymous

9/3/2025, 5:39:31 AM

No.106469633

>>106469625

It's pretty good for porn with porn LoRAs but yeah most videos end up like a fever dream.

Anonymous

9/3/2025, 5:39:45 AM

No.106469634

>>106469625

I mean, we’re basically in the Ford Model A era of this stuff and here you are writing off the future of cars. Bit silly, don’t you think?

Anonymous

9/3/2025, 5:40:15 AM

No.106469642

>>106469621

i dont know who that is or how he relates

Anonymous

9/3/2025, 5:40:42 AM

No.106469643

>>106469625

It's good for producing extremely plausible realistic single frames from the video.

Like if candid realism is your goal, I'd just extract a frame from i2v

Anonymous

9/3/2025, 5:41:42 AM

No.106469652

>>106469660

Anti AI fags have gone fucking nuts the last few months.

Anonymous

9/3/2025, 5:43:16 AM

No.106469658

>>106469701

whats the best tool for automated video captioning? I want to try training a t2v lora but I dont wanna caption my entire dataset by hand. I'm trying chatgpt but it cant caption videos for shit.

Anonymous

9/3/2025, 5:43:26 AM

No.106469660

>>106469652

>the last few months.

That's an understatement

Anonymous

9/3/2025, 5:51:05 AM

No.106469699

>>106469625

remember how anon hyped Wan T2I? shame the diaperfag decided to tune qwen instead. maybe the bigasp guy will do it.

>>106469658

Gemini. It shits all over the other models for OCR stuff.

For example.

>>106469558

Based on the video provided, here is a description of what happens:

The video features two characters from the TV series Star Trek: The Next Generation: the android Lieutenant Commander Data (on the left) and Lieutenant Commander Geordi La Forge (on the right). They are seated side-by-side in the cockpit of what appears to be a shuttlecraft.

The sequence of events is as follows:

Data holds up a blue and white package resembling a pack of cigarettes.

He removes a single cigarette and places it in his mouth.

The tip of the cigarette spontaneously lights up on its own.

Data takes a drag from the cigarette and then exhales smoke.

Throughout this entire action, Geordi La Forge looks at Data with a surprised and bewildered expression.

Anonymous

9/3/2025, 5:52:13 AM

No.106469703

>>106469536

>Boris Vallejo

Looked up his style and... that's quite sad. Of course, nano banana can pull off his style just fine.

Anonymous

9/3/2025, 5:57:57 AM

No.106469729

love me ambiguous language

According to Gemini

In this video:

The scene opens with Captain Picard looking thoughtful in his ready room. In the background, the Vocaloid star Hatsune Miku, now impressively dressed in a red Starfleet command uniform, walks into the room holding a Starfleet PADD (Personal Access Display Device).

She stops and stands behind the captain. Then, in a move that would surprise even the Borg, Picard picks up a 20th-century-style handgun from his desk and calmly examines it.

It's a fantastic edit, but a few small clues give it away:

The Starfleet Guest Star: As you know, Hatsune Miku, while a cultural icon here in Japan and worldwide, never actually made it onto the Enterprise-D's crew roster.

The Firearm: The biggest giveaway is the prop. Starfleet exclusively uses energy-based weapons like phasers. A projectile-based handgun like that is a massive anachronism and would be completely out of place in Picard's ready room, unless he was in the middle of a Dixon Hill holodeck program.

This is another great example of combining real footage with a digitally inserted character to create a completely new, surreal, and humorous narrative.

Anonymous

9/3/2025, 6:31:26 AM

No.106469882

>>106469755

Isn't gemini paid? Do you use it with some local client like kobold or silly tavern? I'm trying to find a good uncensored llm for images

Anonymous

9/3/2025, 6:31:29 AM

No.106469883

>>106470154

>>106469755

those are not good captions

>>106469705

>Two elf female characters with pointed ears. The character in the foreground has long, wavy blonde hair, light skin, and wears a blue, off-shoulder dress with white fur trim. She has a worried expression and blue markings on her forehead. The character behind her has long, orange hair, light brown skin, and wears a sleeveless purple top. She has green markings on her face and is embracing the blonde-haired character from behind, with one arm around her shoulder. The background is a textured, dark green and blue gradient, resembling a forest or cave

Anonymous

9/3/2025, 6:34:40 AM

No.106469899

>>106469887

basterd bitch delete this

Anonymous

9/3/2025, 6:39:30 AM

No.106469922

What's the most viable captioning method for deviantart-tier freak fetish stuff?

should i get my lazy ass out of bed and finish installing wan? how long do gens take with a 5090

Anonymous

9/3/2025, 6:56:56 AM

No.106470011

>>106470030

>>106469998

they'll take no time at all you fuckin ass

Anonymous

9/3/2025, 7:00:38 AM

No.106470024

>>106470038

>>106469998

sure / depends on settings but in the order of some minutes with many settings. you can do 1 minute gens at a not too terrible resolution if you take the fast options with 4 steps or so.

Anonymous

9/3/2025, 7:01:45 AM

No.106470030

>>106470011

my bad man, i genuinely got no clue on this shit

Anonymous

9/3/2025, 7:02:49 AM

No.106470038

Anonymous

9/3/2025, 7:03:32 AM

No.106470041

>>106474735

>>106469998

it takes me roughly 4-5 minutes for a 720p 8 second video

Anonymous

9/3/2025, 7:14:33 AM

No.106470084

>>106470020

Just like my futa doujins!

Anonymous

9/3/2025, 7:14:34 AM

No.106470085

>>106470165

>>106469998

2.2 is so good it convinced me to try training video loras.

>>106469701

thanks bruv. got everything captioned, surprised it let me do them all for free

Anonymous

9/3/2025, 7:22:47 AM

No.106470125

Damn, AI inventing new instruments.

Anonymous

9/3/2025, 7:27:40 AM

No.106470141

>>106470148

>>106470133

very mongolian

Anonymous

9/3/2025, 7:28:58 AM

No.106470148

>>106470141

I fed Gemini a Batzorig video screenshot lol

Anonymous

9/3/2025, 7:30:19 AM

No.106470154

>>106469883

Yeah but I didn't prompt it how to caption. I was just more interested to see if it could identify what was happening in the video at all.

Anonymous

9/3/2025, 7:32:17 AM

No.106470165

>>106470085

>surprised it let me do them all for free

np. I assume they do it as a means to capture audience share. Their free stuff is very generous. I honestly just pay for gemini as my GPUs are usually blasting away at training most of the time anyway. It's the best model for captioning in my opinion. And the fact google made veo 3 should indicate as much.

>>106469887

>>106470020

>Reference image

Literally just prompt for the guy

>A caveman carrying a wounded woman while pointing a spear a large flying bird over them while sitting atop a rocky hill by Boris Vallejo

https://files.catbox.moe/zcos9q.jpeg

Local would never.

Anonymous

9/3/2025, 7:37:10 AM

No.106470186

>>106470209

did comfy fix the shitty qwen edit text encode node?

Anonymous

9/3/2025, 7:43:09 AM

No.106470209

>>106470186

every fix breaks two more things. python was a mistake

Anonymous

9/3/2025, 7:44:06 AM

No.106470211

>>106470850

Anonymous

9/3/2025, 7:49:33 AM

No.106470233

>>106470176

Do you have some special version lol? I get this on nano which isn't even close.

Anonymous

9/3/2025, 7:50:01 AM

No.106470235

>>106470324

>>106470176

Didn't think it would recognize it. Flux can't really handle a more complex composition like that.

Anonymous

9/3/2025, 7:51:48 AM

No.106470246

Anonymous

9/3/2025, 8:12:02 AM

No.106470324

>>106470235

Not bad. Unlike the original, nano banana can't show me booba, so a Chroma LoRA would win anyway.

As for the results you're getting with nano banana, no idea what you're using. I can get his likeness right away even across other seeds.

>A caveman with a shield standing atop a rocky hill while goblins are incoming. A woman kneels beside him by Boris Vallejo

https://files.catbox.moe/ak75k4.jpeg

Anonymous

9/3/2025, 8:15:30 AM

No.106470339

Anonymous

9/3/2025, 9:39:35 AM

No.106470715

ultra cozy

Anonymous

9/3/2025, 9:56:30 AM

No.106470779

>>106470835

is there anything as good as veo3?

Anonymous

9/3/2025, 10:08:55 AM

No.106470835

>>106470779

Yes veo3 is as good as veo3.

Anonymous

9/3/2025, 10:10:54 AM

No.106470850

>>106470211

if not for toes I would say it's not a gen

Anonymous

9/3/2025, 10:18:40 AM

No.106470895

>>106470885

I was not aware she had a cat.

Anonymous

9/3/2025, 10:20:26 AM

No.106470908

2.2 for vace soon?

Anonymous

9/3/2025, 10:30:49 AM

No.106470940

>>106470956

Anonymous

9/3/2025, 10:36:03 AM

No.106470956

>>106470940

Expected miku to walk out of that...This thread is getting to me

Anonymous

9/3/2025, 10:46:36 AM

No.106470993

>>106471015

>>106470885

Who are those two from? I recognize them from something...

Anonymous

9/3/2025, 10:48:57 AM

No.106471007

not what I wanted at all but okay.

Anonymous

9/3/2025, 10:49:24 AM

No.106471012

bros... i beg... do loras work with qwen nunchaku yet... bros...

Anonymous

9/3/2025, 10:49:59 AM

No.106471015

>>106470993

Himawari and the flat chested one from Yuru Yuri

Anonymous

9/3/2025, 10:50:44 AM

No.106471018

>>106469701

>He removes a single cigarette and places it in his mouth.

obviously wrong

he was holding the cigarette already

Anonymous

9/3/2025, 10:54:51 AM

No.106471031

>>106471035

>>106469755

>Then, in a move that would surprise even the Borg

wtf

implying they have feels

Anonymous

9/3/2025, 10:56:10 AM

No.106471035

>>106471031

There was that one episode where the borg had feels.

>>106470885

Animate this.

Anonymous

9/3/2025, 11:06:15 AM

No.106471067

Anonymous

9/3/2025, 11:27:47 AM

No.106471172

>>106471461

>New furk post

See any issues here?

Anonymous

9/3/2025, 11:44:01 AM

No.106471248

>>106471262

>>106471219

>water is wet

Anonymous

9/3/2025, 11:46:36 AM

No.106471259

hey bros anyone got a spare 5090 to donate :) I promise ill train some qwen ToT loras with it

Anonymous

9/3/2025, 11:47:14 AM

No.106471262

>>106471248

I also just found out he blocked me. But look at his loss.

He's basically trained a broken LoRA and bragging about it.

Anonymous

9/3/2025, 11:48:38 AM

No.106471266

>>106471219

Is that 5600 steps??

Anonymous

9/3/2025, 11:52:11 AM

No.106471295

>>106471219

nans for days

I'm not terribly impressed by how Qwen handles traditional media.

Anonymous

9/3/2025, 11:59:19 AM

No.106471339

>>106471307

Ask furk to train a nan lora for you.

Anonymous

9/3/2025, 12:01:47 PM

No.106471357

>>106471711

>>106471219

well yeah, they aren't giving consumer cards 96gb vram because it would destroy their enterprise market overnight. that's why i'm hoping for a deepseek-level breakthrough from china but in the hardware space. they already have modded cards. they are also making 96gb custom cards but they're kind of shit because low bandwidth, no cuda, and shit-tier drivers.

Anonymous

9/3/2025, 12:06:44 PM

No.106471391

>>106472947

>>106471307

The paintings themselves in the back are honestly really well done, just the girl is slopped.

Anonymous

9/3/2025, 12:07:24 PM

No.106471396

>>106469998

5 hours of genning god damn. having fun with the I2V

Anonymous

9/3/2025, 12:17:32 PM

No.106471461

>>106471172

just imagine AI generating FPS walkthrough jump-scare game movies in perpetuity

Anonymous

9/3/2025, 12:19:08 PM

No.106471473

I shouldn't have updated my OS.

DRAM/VRAM management is kinda fucky now. God damn.

>>106471357

It's not his post that's cringe. It's that he's bragging about his hardware while being unaware he is basically showing the world that his LoRA is stillborn.

Anonymous

9/3/2025, 1:28:07 PM

No.106471833

>>106471941

>>106471711

nta, but I can't help but question how the man is such a prolific (shit)poster seemingly everywhere but somehow missed that their training run was cooked from the go.

Anonymous

9/3/2025, 1:43:40 PM

No.106471933

>>106472188

Anyone ever used the captioning tool in Onetrainer? Usable or megacopium only good for boorutags?

Anonymous

9/3/2025, 1:45:16 PM

No.106471941

>>106471833

I'm convinced his low intelligence robbed him of his ability to second guess and check himself and by radiating enough confidence in a field most people knew little about, he was able to accidentally grift his way to notoriety by just being a fucking idiot.

Anonymous

9/3/2025, 2:27:17 PM

No.106472188

>>106472218

>>106471933

usable, but you still have to check manually for any flops afterward, if anything it saves time by doing the heavy captioning for you.

Anonymous

9/3/2025, 2:32:23 PM

No.106472218

>>106472188

Can it do nsfw?

Anonymous

9/3/2025, 2:32:59 PM

No.106472220

I know you can upscale and interpolate wan video but is there anything to fix any fuckups in the video like when something gets blurred out or things like that?

Anonymous

9/3/2025, 2:36:06 PM

No.106472242

with wan 2.2 you can save the latent from the high noise sampler and reroll with the low noise sampler to hopefully get a better result

Anonymous

9/3/2025, 2:44:58 PM

No.106472298

>>106472460

Can I run Wan on my M4 Max? How's the speed?

Anonymous

9/3/2025, 3:02:42 PM

No.106472405

>>106470133

>Playing 'viking boat'

Anonymous

9/3/2025, 3:03:34 PM

No.106472413

Anonymous

9/3/2025, 3:08:42 PM

No.106472450

>>106471711

Are you telling me that ohwx man training of himself is not the true way ?

Seriously, this guy has been doing this for such a long time, yet he has learned nothing: he still seems to think there's some magic token combination, still hasn't understood that repeats are only for balancing training data when doing multiple concepts at the same time, and doesn't even understand the principle of A/B testing, instead changing lots of parameters between experimental runs.

Snakeoil salesman if there ever was one.

Anonymous

9/3/2025, 3:10:03 PM

No.106472460

>>106472298

You probably can, I saw someone posting that they got it running, the speed was something horrendous though.

I'm going insane trying to find a good noob-based model with decent coherence that allows some flexibility beyond basic tags. For example, this one

https://civitai.com/models/1201815?modelVersionId=1491533 - you can actually add variations like 'blue glowing tattoo' instead of just 'tattoo' without it breaking. Problem is, these models all have shit mixes and can't follow artist styles closely like vanilla noob does. But I'm too much of a shitter to get kino results with plain noob. Is there a good middle ground model/remix that actually respects artist styles while being more forgiving?

Anonymous

9/3/2025, 3:14:17 PM

No.106472495

>>106472485

anything that uses (only) CLIP will never give you the control you seek

Anonymous

9/3/2025, 3:16:57 PM

No.106472516

>>106472485

Your best bet is to find a model that has an LLM text encoder slapped onto it. Idk if noob has a variant like that tho.

Anonymous

9/3/2025, 3:18:27 PM

No.106472526

>>106472485

illustrious has limited natural language support

Anonymous

9/3/2025, 3:21:56 PM

No.106472544

beeg birb

Anonymous

9/3/2025, 3:33:18 PM

No.106472639

>>106472965

>>106471573

The 1girl machine keeps churning, but memory management sucks.

Anonymous

9/3/2025, 3:34:07 PM

No.106472643

>>106472485

https://huggingface.co/Minthy/RouWei-Gemma

someone's been trying to stitch better encoders to sdxl but i don't see much difference so far

>>106471219

>NaN

That explains those terrible LoRAs of himself.

Anonymous

9/3/2025, 3:55:36 PM

No.106472799

Anonymous

9/3/2025, 4:06:37 PM

No.106472882

>random character sheet out of nowhere

Thanks, I guess

Anonymous

9/3/2025, 4:09:41 PM

No.106472916

>>106472945

>>106472904

What did you prompt for ?

Anonymous

9/3/2025, 4:13:03 PM

No.106472945

>>106472916

https://genshin-impact.fandom.com/wiki/Jahoda

The appearance paragraph xd. I just specified anime artstyle. I guess AI generated articles are good prompts lmao

Anonymous

9/3/2025, 4:13:04 PM

No.106472946

>>106472904

not a footfag but that little red foot is cute

Anonymous

9/3/2025, 4:13:14 PM

No.106472947

>>106471391

At least it draws really good legs

Anonymous

9/3/2025, 4:14:58 PM

No.106472965

>>106472639

That's not a Luger. Man.

Anonymous

9/3/2025, 4:25:59 PM

No.106473065

beeg guy

Anonymous

9/3/2025, 4:31:42 PM

No.106473120

>multiple seeds, samplers and schedulers

>it keeps giving me ref sheets

There is no way a paragraph can have such strong specific style "vibes" that it fucks with the model. Is this a thing?

Anonymous

9/3/2025, 4:33:29 PM

No.106473136

>>106473159

>>106469887

Seb McKinnon Lora for Flux? That's not available on civitai, I want to test it.

Anonymous

9/3/2025, 4:35:46 PM

No.106473152

>an entire separate general of waisloppers

mortifying

Anonymous

9/3/2025, 4:36:06 PM

No.106473159

Anonymous

9/3/2025, 4:46:37 PM

No.106473264

>>106473481

It feels like progress is pretty stagnant after a few months. too bad the software still sucks ass and just got worse. any new models on the horizon to look forward to at least?

Anonymous

9/3/2025, 4:47:59 PM

No.106473282

>>106473365

>>106473269

qwen hinted at some updates but that's about it.

Anonymous

9/3/2025, 4:48:54 PM

No.106473289

>>106473365

>>106473269

Goddamn man we just had qwen not even 2 weeks ago. Is this zoomer brain I heard about?

Chroma loras are so easy to bake, all you need is:

10-15 512x512 images

natural gemini captions

adamw optimizer

constant scheduler

batch size 1

set it for about 2K steps (about 150ish epochs)
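
The recipe above can be jotted down as a small config sketch. The key names are hypothetical and not tied to any particular trainer; the values come straight from the list, and the epoch count is just derived from the step count and dataset size.

```python
# Hypothetical key names; values are taken from the recipe in the post.
recipe = {
    "resolution": 512,
    "optimizer": "adamw",
    "lr_scheduler": "constant",
    "batch_size": 1,
    "max_steps": 2000,
}

def epochs_for(max_steps: int, num_images: int, batch_size: int = 1) -> float:
    """Epochs covered by max_steps, where one epoch is one pass over the dataset."""
    return max_steps * batch_size / num_images

for n in (10, 13, 15):
    epochs = epochs_for(recipe["max_steps"], n, recipe["batch_size"])
    print(f"{n} images -> {round(epochs)} epochs")
```

With 13 images at batch size 1, 2000 steps works out to roughly 154 epochs, which lines up with the "150ish" figure; 10 images gives 200 and 15 gives about 133.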

Anonymous

9/3/2025, 4:51:26 PM

No.106473316

>512x512

we're regressing, not progressing

Anonymous

9/3/2025, 4:55:30 PM

No.106473360

>>106473553

>>106473301

I don't know if I would call that good quality anon. This seems to have the same quality problem flux did if you trained at such low resolution

>>106473282

probably some standard controlnet IP adapter thing for their existing stuff.

>>106473289

qwen isn't really as impressive as it should be at that size. synthetic slopped datasets are a step backwards, and the two-stage model setup for wan is just annoying for a 10% higher quality video than 2.1

Anonymous

9/3/2025, 4:58:14 PM

No.106473386

>>106473301

>Chroma loras are so easy to bake,

Yes, it's shockingly easy to train Chroma loras effectively

>natural gemini captions

Don't need this, JoyCaption is good enough, and if all you train is a single concept like a person (or even an art style assuming it's consistent) you can train with just a simple 'foobar' nonsense tag and it will have no problem training it

You didn't mention learning rate. For people I would suggest 0.0001 (1e-4); for art styles you probably want to go a bit higher since it's more abstract as a concept

Anonymous

9/3/2025, 4:59:21 PM

No.106473394

>>106473497

>>106473365

>qwen isn't really as impressive as it should be at that size

you are right but a lot of people don't want to believe it. people will dismiss the arena rankings as nonsense, but qwen ranks around the same place as hidream and it honestly looks it. i went to train a qwen lora and it was like 60gb worth of slop. it's incredibly bloated for a model that does not feel anywhere near the top-10. if it was good it would've made way more strides like flux dev did compared to SD3/SDXL (though at the time we didnt realize how impossible it would be to tune).

Anonymous

9/3/2025, 4:59:50 PM

No.106473400

>>106473365

You know I was gonna take you seriously but then

>10% higher quality video than 2.1

Ahh another vramlet seethe. Trust me if you can't run this you probably just dip from the scene, it's gonna get worse from here.

Anonymous

9/3/2025, 5:03:48 PM

No.106473430

recommend me some cool Illustrious base model hidden gems

>>106473301

people should just ignore chroma, and work on qwen. chroma is like bigasp. big potential, crappy results. maybe another model, merged with chroma will save the day

>>106473301

when will girls stop having their feet buried in the ground?

Anonymous

9/3/2025, 5:06:11 PM

No.106473453

>>106473446

When you type full body shot or prompt something regarding footwear

Anonymous

9/3/2025, 5:08:19 PM

No.106473473

>>106473538

Do flux loras still work with chroma

Anonymous

9/3/2025, 5:08:43 PM

No.106473478

>>106473431

>chroma is like bigasp

come on now lets not be disingenuous...

at least bigasp was trained at 1024x!

Anonymous

9/3/2025, 5:09:07 PM

No.106473481

>>106473365

>>106473394

>qwen isn't really as impressive as it should be

I'd agree, but the alternatives in terms of prompt adherence and non-mangled hands/poses are kinda slim.

But the shocking amount of sameface and general lack of variance between seeds hurt the model a lot.

Picrel, 3 seeds, same prompt.

Anonymous

9/3/2025, 5:14:30 PM

No.106473529

because qwen is DPO'd to shit, it has been said multiple times. you need to inject noise if you want an actual different image per seed

Anonymous

9/3/2025, 5:14:59 PM

No.106473534

>>106473497

Lack of seed variation is actually good for i2v gen purposes. You can change the pose or other details, and the face/character tends to stay the same. I agree it does take some tard wrangling to avoid unwanted generalizations.

Anonymous

9/3/2025, 5:15:20 PM

No.106473538

>>106473473

Some do, some don't. You have to try them one by one.

Anonymous

9/3/2025, 5:16:14 PM

No.106473546

>>106473497

I personally like it; it makes editing an image much easier without just losing the entire damn composition.

>>106473360

It's not the resolution, most likely prompted to look like a phone camera shot

This is from a Chroma lora I recently trained at 512 resolution

>>106473431

Qwen's comprehension of traditional media styles, as well as creativity, is piss poor. I don't think it will be remedied by finetuning

It's a great model, but more experienced users will get more out of chroma.

Anonymous

9/3/2025, 5:19:28 PM

No.106473577

>>106473766

>>106473431

One of the really popular SDXL model makers is making a finetune right now, I've used a prototype lora of it and it is VERY promising.

>>106473446

This nigga likes feet!

>>106473553

Yeah they're prompted/oversharpened to look like phone shots after they're loaded to IG.

Anonymous

9/3/2025, 5:21:49 PM

No.106473595

>>106473637

I'm new to I2V and I'm following the guide. When I try to generate with the first workflow (https://rentry.org/wan22ldgguide) I'm getting:

> ValueError("type fp8e4nv not supported in this architecture. The supported fp8 dtypes are ('fp8e4b15', 'fp8e5')")

And ChatGPT insists that fp8e4nv doesn't work on a 3090. Is it wrong?
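
For what it's worth, the error text itself lists only fp8e4b15 and fp8e5 as supported, which fits with the e4m3 format (reported as fp8e4nv) needing a newer GPU architecture than the 3090, at least on the compiled kernel path that raised this error. A minimal sketch of picking a checkpoint flavor from the compute capability, assuming the cutoff is compute capability 8.9 and that a 3090 reports 8.6; the function name and return strings are made up for illustration:

```python
def pick_fp8_variant(capability: tuple[int, int]) -> str:
    """Return which fp8 checkpoint flavor to try for a (major, minor) compute capability.

    Assumption: the e4m3 path (fp8e4nv in the error message) needs
    compute capability 8.9 or newer; older cards fall back to e5m2.
    """
    return "e4m3fn" if capability >= (8, 9) else "e5m2"

# (8, 6) is what an RTX 3090 is assumed to report here.
print(pick_fp8_variant((8, 6)))
```

On a real install the tuple could come from `torch.cuda.get_device_capability()` instead of being hard-coded.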

Anonymous

9/3/2025, 5:25:09 PM

No.106473621

[Report]

>>106473571

>I don't think it will be remedied by finetuning

I think you can, but it will be very expensive since the model is large and massively overtrained, not sure if anyone with enough money would think it's worth it

Anonymous

9/3/2025, 5:27:05 PM

No.106473637

>>106474533

>>106473595

I had that same issue when I tried a new comfy install, even though I used the e4m3fn models with my old install no problem. I dunno. Just try the e5m2 models.

Anonymous

9/3/2025, 5:29:31 PM

No.106473656

>>106473674

>>106473571

>remedied by finetuning

The fuck, if the distilled horseshit that flux was could be unfucked by finetuning, a non-distilled model should be a hundredfold easier.

Anonymous

9/3/2025, 5:31:50 PM

No.106473674

>>106473703

>>106473656

qwen would take forever. it needs a bit more elbow grease and cash than chroma

Anonymous

9/3/2025, 5:32:51 PM

No.106473687

>>106474253

https://chromaawards.com/

I think 11labs is paying off civit to not add a chroma category because of this shit

Anonymous

9/3/2025, 5:33:35 PM

No.106473695

>>106473571

>Qwen's comprehension of traditional media styles, as well as creativity, is piss poor

correct

>I don't think it will be remedied by finetuning

it CAN, but nobody will because the model is way too bloated.

>but more experienced users will get more out of chroma.

completely false

>>106473674

People keep saying that, but every big finetune needs a lot. Chroma needed 105,000 H100 hours; are you saying qwen would need more? SDXL needed a cluster, and a finetune will need big hardware for any model. It's such a non-argument.

Anonymous

9/3/2025, 5:36:02 PM

No.106473716

>>106473703

chroma is 8.9b. qwen is 20b

Anonymous

9/3/2025, 5:36:17 PM

No.106473719

>>106473703

>are you saying qwen would need more?

it entirely depends on the size of the model. the other anon is right, it's too bloated but it's also overtuned which is why there isn't much seed variation

Anonymous

9/3/2025, 5:36:44 PM

No.106473723

>>106473730

>>106473431

qwen is overfit and bloated to shit. just do wan

Anonymous

9/3/2025, 5:37:48 PM

No.106473730

>>106473723

wan is slightly overfit as well but at least it's in the realm of doable

>>106473301

hello beautiful babe

Anonymous

9/3/2025, 5:38:08 PM

No.106473733

>>106473703

yes, qwen would absolutely need more because it's massive in comparison to chroma. chroma already had to cope by removing parameters and training at 1/4 the resolution of fucking sdxl. and even with all that, he still wound up spending $150k on it. acting like the compute costs for these models are the same as SDXL is simply retarded

Anonymous

9/3/2025, 5:39:48 PM

No.106473749

>>106473703

Use your brain

Anonymous

9/3/2025, 5:41:39 PM

No.106473766

>>106473797

>>106473577

>prototype lora

Where?

>>106473732

Love it lmao

>>106473766

I've said too much.

Anonymous

9/3/2025, 5:48:24 PM

No.106473812

>>106473797

Oh you meant a chroma tune, that's good, I was wondering how you managed to make a qwen tune look so shitty lol

Anonymous

9/3/2025, 5:54:13 PM

No.106473856

>>106469536

Neta is not perfect, but this is the only anime model that can handle multiple subjects on screen without mangling them

Anonymous

9/3/2025, 6:03:34 PM

No.106473943

>>106473431

Most people outside of here are ignoring chroma

Anonymous

9/3/2025, 6:09:39 PM

No.106473977

>>106473991

Anonymous

9/3/2025, 6:11:11 PM

No.106473987

>>106473797

>prompt for indian man

>get a cholo

Anonymous

9/3/2025, 6:11:42 PM

No.106473991

>>106473977

>t. has futa images saved

Anonymous

9/3/2025, 6:19:38 PM

No.106474052

>>106473720

>white mans kriptonite.png

Anonymous

9/3/2025, 6:26:23 PM

No.106474103

>>106474422

Anonymous

9/3/2025, 6:42:06 PM

No.106474253

>>106473687

Could be, damn

Anonymous

9/3/2025, 6:54:21 PM

No.106474383

>>106476130

Are flux dev and schnell loras interchangeable?

>>106474328

Hello, I'm trying to switch from Forge to ComfyUI.

I prefer ComfyUI's interface because my entire txt2img + hires fix workflow fits on my screen without scrolling.

The problem is that I can't get it to work correctly. I've posted more details in the attached thread.

Any help would be appreciated. Thanks!

json:

https://files.catbox.moe/nv2b7k.json

Anonymous

9/3/2025, 6:56:11 PM

No.106474406

>>106474388

I think sdxl wants -2 clip layer

Anonymous

9/3/2025, 6:57:16 PM

No.106474422

>>106476679

Anonymous

9/3/2025, 7:08:16 PM

No.106474533

>>106473637

Yeah that worked.

Anonymous

9/3/2025, 7:08:21 PM

No.106474536

>>106474631

>>106474388

clip needs to be -2. Not sure what you are trying to do with the tiled vae encode/decode nodes. Also 'BREAK' commands don't work in the default CLIP Text Encode nodes, there are custom nodes that use the A1111 parser if you want to keep them but in Comfy you should break each one out into separate text encode nodes and concat them.
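
A rough sketch of the "break each one out" step: split the Forge/A1111-style prompt at each standalone BREAK so every chunk can be pasted into its own text encode node and the results concatenated. The helper name is made up, and this only handles the text side, not the ComfyUI graph itself.

```python
import re

def split_on_break(prompt: str) -> list[str]:
    """Split an A1111-style prompt into chunks at standalone BREAK keywords."""
    chunks = re.split(r"\s*\bBREAK\b\s*", prompt)
    # Drop empty chunks and trim separators left dangling at the edges.
    return [c.strip(" ,") for c in chunks if c.strip(" ,")]

for i, chunk in enumerate(split_on_break("1girl, red dress BREAK forest, night BREAK masterpiece")):
    print(i, chunk)
```

The word-boundary match means a tag that merely contains the letters (say, "BREAKFAST") is left alone.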

Anonymous

9/3/2025, 7:14:46 PM

No.106474602

>>106474619

>>106469492 (OP)

how do i generate abstract happy merchant memes? i am super retarded when it comes to prompting ai

Anonymous

9/3/2025, 7:16:05 PM

No.106474619

>>106474602

literally just img to img and play with the denoise

>>106474536

Thanks I am looking here and /adt/ for answers. All those options were loaded by default when I dragged the gen made in Forge to Comfy. I will keep in mind what you tell me.

Anonymous

9/3/2025, 7:22:18 PM

No.106474669

>>106474631

you can also just disable the CLIP Set Last Layer node, ComfyUI will automatically use -2 with SDXL models.

Anonymous

9/3/2025, 7:24:47 PM

No.106474692

>>106474631

>>106474388

I think it would be much faster to just make a workflow from the ground up

Anonymous

9/3/2025, 7:28:48 PM

No.106474735

>>106470041

Fuck me I don’t know how you guys put up with that, 5 seconds for my 1girls feels insufferably, inexcusably long as it is. I’m sure someone will chime in with “it’s worth it” or whatever but that’s into “too long for me to bother” territory. And that’s with the fastest consumer card.

Anonymous

9/3/2025, 7:31:05 PM

No.106474752

>>106471050

Well it’s a good thing the oral insertion lora bandit got bored, otherwise…ToT

Say I'm training a lora and I want 3000 steps total, with 20 images.

Is it better to do 3 epochs with 1000 steps each, or 6 epochs with 500 steps each?

Is there a noticeable difference between the two at same step amounts (ie, first at epoch 2, 2000 steps, second at epoch 4 at 2000 steps)

Anonymous

9/3/2025, 7:35:28 PM

No.106474793

[Report]

>>106474761

what is an "epoch" and what is a "step"?

Anonymous

9/3/2025, 7:44:06 PM

No.106474864

[Report]

>>106474761

it's the same exact thing, 1 epoch is just one complete pass of your entire dataset (including repeats). step count is what matters, so just up your number of epochs until you hit 3000 steps total. repeats are only useful if you are training on multiple datasets at once

Anonymous

9/3/2025, 7:45:43 PM

No.106474876

[Report]

>>106474761

You are confused

An 'epoch' is having trained all images once, 100 epochs is having trained all images 100 times

A 'step' is a single training iteration, typically that is 1 image, but it can be more if you are using a higher batch size

So, to make it simple, if you are training 20 images, 1 epoch = 20 images thus 20 steps IF you are using batch 1 (1 image trained at once)

If you are using batch 2 (2 images trained at once) 1 epoch = 20 images, BUT 10 steps (2 images trained at once)
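The arithmetic above can be sketched like this. The function names are made up for illustration, and rounding a partial last batch up to a full step is an assumption, since trainers differ on whether they keep or drop it.

```python
import math


def steps_per_epoch(num_images: int, batch_size: int = 1, repeats: int = 1) -> int:
    """Steps needed for one full pass over the dataset, repeats included."""
    # Assumption: a partial final batch still counts as one step.
    return math.ceil(num_images * repeats / batch_size)


def epochs_for_target(target_steps: int, num_images: int,
                      batch_size: int = 1, repeats: int = 1) -> int:
    """Smallest number of epochs that reaches at least target_steps."""
    return math.ceil(target_steps / steps_per_epoch(num_images, batch_size, repeats))


print(steps_per_epoch(20, batch_size=1))          # 20 images, batch 1
print(steps_per_epoch(20, batch_size=2))          # 20 images, batch 2
print(epochs_for_target(3000, 20, batch_size=1))  # epochs needed for 3000 steps
```

With 20 images at batch 1, "3 epochs of 1000 steps" only happens if each image is repeated 50 times per epoch; the total step count is the same 3000 either way.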

I'm going insane trying to upscale chroma, I don't know why it only gives me blurrier results.

I'm using ultimate sd upscale, is there anything better or simpler?

Anonymous

9/3/2025, 7:51:36 PM

No.106474935

[Report]

Anonymous

9/3/2025, 7:56:41 PM

No.106474969

[Report]

>>106475008

>>106474911

>0.5 cfg

r u sure?

Anonymous

9/3/2025, 8:00:36 PM

No.106475008

[Report]

>>106475031

>>106474969

It's just a random example I tried.

I tried everything including this cfg I've seen somewhere else.

Either this node is not compatible with chroma or I'm missing something.

Anonymous

9/3/2025, 8:04:09 PM

No.106475031

[Report]

>>106475183

>>106475008

nta but 0.22 denoise is too low, especially with a controlnet. try increasing it to 0.35 - 0.5. i haven't used chroma much so idk about what sampler settings it uses but cfg 4.5 should be good unless you're using a lightning lora. 40 steps also seems excessive for an upscale

Anonymous

9/3/2025, 8:05:43 PM

No.106475046

[Report]

>>106475972

https://www.youtube.com/watch?v=YZTvL8C_xz4

not a shill but has anyone tried this method for longer clips?

Anonymous

9/3/2025, 8:06:39 PM

No.106475056

[Report]

>>106473497

Yeah seriously. I'm a newfag and I don't really fw NSFW at the moment but if it could just mix things up a little bit more it would be perfect. It could be faster of course but a fast model that spits out crap output is infinitely slow.

Anonymous

9/3/2025, 8:08:30 PM

No.106475074

[Report]

>>106476331

Anonymous

9/3/2025, 8:21:42 PM

No.106475179

[Report]

>>106475264

>>106474911

youre using some retarded samplers and scheduler

Anonymous

9/3/2025, 8:21:53 PM

No.106475183

[Report]

>>106475031

OK I will try again, thanks.

Anonymous

9/3/2025, 8:23:10 PM

No.106475199

[Report]

change the location to a pond in a forest. a tent is nearby with a campfire outside it. keep the character in green and in the same style.

qwen edit is neat, also maintained the pixel style:

Anonymous

9/3/2025, 8:30:18 PM

No.106475264

[Report]

>>106475179

I've also used euler/simple and unipc, same results.

Is it possible to run Wan 2.1 with A1111? And would a 3060 12gb be capable of generating 480p videos?

Anonymous

9/3/2025, 8:37:23 PM

No.106475314

[Report]

>>106475486

>>106475302

>Is it possible to run Wan 2.1 with A1111

hard no

>And would a 3060 12gb be capable of generating 480p videos?

soft yes

Anonymous

9/3/2025, 8:45:29 PM

No.106475373

[Report]

>>106475486

Anonymous

9/3/2025, 8:47:27 PM

No.106475382

[Report]

>>106475401

Is there an "apply controlnet" node with latent instead of image as input?

Anonymous

9/3/2025, 8:49:01 PM

No.106475401

[Report]

>>106475413

>>106475382

no the controlnet needs an image input

Anonymous

9/3/2025, 8:50:11 PM

No.106475413

[Report]

>>106475401

Ah, welp ok thanks anon.

Anonymous

9/3/2025, 8:58:56 PM

No.106475486

[Report]

Anonymous

9/3/2025, 9:00:50 PM

No.106475502

[Report]

>>106475540

>ERROR - An unexpected error occurred during conversion of Tron_Flux.safetensors: [WinError 123]

I removed all spaces and brackets and parentheses from the filenames and this shit still won't start converting.
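For reference, WinError 123 is Windows' "The filename, directory name, or volume label syntax is incorrect", so it can come from any part of the path, not just the filename. A rough sketch for checking a path string before handing it to a tool follows. Whether this is what the converter actually trips on is a guess, and `suspicious_parts` is a made-up helper, not part of any converter.

```python
import re

# Characters Windows does not allow inside a file or folder name.
INVALID_CHARS = set('<>:"|?*')


def suspicious_parts(path: str) -> list[str]:
    """Return the path components that Windows would likely reject."""
    parts = [part for part in re.split(r"[\\/]+", path) if part]
    # Skip a leading drive specifier such as "C:".
    if parts and re.fullmatch(r"[A-Za-z]:", parts[0]):
        parts = parts[1:]
    bad = []
    for part in parts:
        if part in (".", ".."):
            continue  # relative markers are fine
        has_bad_char = any(ch in INVALID_CHARS or ord(ch) < 32 for ch in part)
        bad_ending = part != part.rstrip(" .")  # trailing space or dot
        if has_bad_char or bad_ending:
            bad.append(part)
    return bad


print(suspicious_parts(r"C:\loras\Tron_Flux.safetensors"))
print(suspicious_parts('C:\\loras\\"Tron_Flux.safetensors"'))
```

Stray quotes or a trailing newline from a pasted path are the kind of thing this would flag even when the filename itself looks clean.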

Anonymous

9/3/2025, 9:05:46 PM

No.106475540

[Report]

bros... does nunchaku qwen work with loras...

Anonymous

9/3/2025, 9:11:19 PM

No.106475575

[Report]

>ERROR - ERROR: No UNet LoRA weights were applied despite being found!

>ERROR - This may indicate a key mapping or base model mismatch.

YOU TOLD ME IT'S COMPATIBLE YOU STUPID FUCKING PROGRAM

Anonymous

9/3/2025, 9:11:45 PM

No.106475578

[Report]

>>106475601

Anonymous

9/3/2025, 9:11:53 PM

No.106475579

[Report]

>>106475569

lol man just check the github. It's in like big font every time they update it

Anonymous

9/3/2025, 9:12:41 PM

No.106475585

[Report]

>>106475611

Why are there 3 imagegen generals?

Anyway

>https://github.com/Tencent-Hunyuan/HunyuanWorld-Voyager

> Available to the world except the European Union, the UK, and South Korea

I know yuros cucked themselves with legislation but what did korea do?

>>106475578

im gonna kill myself

Anonymous

9/3/2025, 9:15:25 PM

No.106475605

[Report]

>animateddiff_\d+.mp4

isn't this localgen, why are you using an online app?

Anonymous

9/3/2025, 9:15:45 PM

No.106475608

[Report]

>>106475601

Please don't.

Anonymous

9/3/2025, 9:15:49 PM

No.106475611

[Report]

>>106475585

>korea

What didn't they do.. baka

Anonymous

9/3/2025, 9:19:53 PM

No.106475638

[Report]

Im no druggie but i know image gen can be better than adderall lol

Anonymous

9/3/2025, 9:45:29 PM

No.106475862

[Report]

>>106476060

>>106475601

Please, think of the bimbos!

Too bad it didn't get the reflection quite right.

Anonymous

9/3/2025, 9:54:46 PM

No.106475939

[Report]

>Error (code 1224) "The requested operation cannot be performed on a file with a user-mapped section open."

Is the anon who was using the flux lora converter the other day here? How did you make this shit work? Shit collides with itself

Anonymous

9/3/2025, 9:59:45 PM

No.106475963

[Report]

>>106476079

Anonymous

9/3/2025, 10:01:05 PM

No.106475972

[Report]

>>106475982

>>106475046

It's still only using the last frame for the next part of the generation.

Is there really no way to use more frames in latent to preserve motion?

Anonymous

9/3/2025, 10:03:48 PM

No.106475982

[Report]

>>106476544

>>106475972

honestly the fastest way would be to gen with lightx2, then have a node that extracts the last frame, and feed that back into the img source, then redo the prompt.

stitch 5 clips = 25s. also, if the second clip is off you can just redo it, idk if batch processing would be ideal.
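The loop described above, as a rough sketch. `generate_clip` is a stand-in for whatever i2v call or workflow is actually used (it is not a real API), and frames are plain integers here just so the chaining is easy to follow.

```python
from typing import Callable, List, Sequence


def chain_clips(first_image: int,
                prompts: Sequence[str],
                generate_clip: Callable[[int, str], List[int]]) -> List[int]:
    """Generate one clip per prompt, seeding each clip with the previous last frame."""
    all_frames: List[int] = []
    source = first_image
    for prompt in prompts:
        frames = list(generate_clip(source, prompt))
        if not frames:
            raise ValueError(f"no frames returned for prompt: {prompt!r}")
        all_frames.extend(frames)
        source = frames[-1]  # last frame becomes the next clip's input image
    return all_frames


def fake_generate_clip(image: int, prompt: str) -> List[int]:
    """Hypothetical generator: returns three 'frames' counting up from the input."""
    return [image + 1, image + 2, image + 3]


print(chain_clips(0, ["walk", "turn around"], fake_generate_clip))
```

Because each clip only depends on the previous clip's last frame, a bad second clip can be regenerated without touching the first. Five 5-second clips give the 25 s mentioned above.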

Noob here. Any info on using multiple GPUs for stuff anywhere? Can't seem to find much on it. I have two 4070s which ollama will use to load up to ~20GB models, but I don't see that happening when using ComfyUI. Is it just a Comfy thing?

Anonymous

9/3/2025, 10:16:02 PM

No.106476053

[Report]

Anonymous

9/3/2025, 10:17:41 PM

No.106476060

[Report]

>>106476071

>>106475862

impressionism unlocked

Anonymous

9/3/2025, 10:18:55 PM

No.106476071

[Report]

>>106476091

Anonymous

9/3/2025, 10:19:44 PM

No.106476075

[Report]

>>106476241

>>106476037

you have to use some hacky unstable custom node for it. the only UI using ggml is anistudio but can't recommend it in its current state

Anonymous

9/3/2025, 10:19:57 PM

No.106476079

[Report]

>>106476158

>>106475963

I can't stop genning random 1girl slop. Your harry is pretty damn good though. How much hand gacha did that take?

so i just discovered i can make 14 second videos with my rtx 3050 8gb in like 12 seconds with fastwan, wtf. for people like me who are stupid technologically speaking, just download the Pinokio app, it's plug and play

Anonymous

9/3/2025, 10:22:04 PM

No.106476091

[Report]

>>106476158

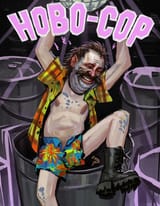

>>106476071

Lemmy, the Hobo Cop!

Anonymous

9/3/2025, 10:23:59 PM

No.106476103

[Report]

>>106476106

>>106476088

I cant imagine how garbage it looks, post it

Anonymous

9/3/2025, 10:24:53 PM

No.106476106

[Report]

The best gen you ever did was one you cancelled midway.

Anonymous

9/3/2025, 10:28:28 PM

No.106476130

[Report]

>>106476656

>>106474383

I have not tried loras but I have tried controlnets and they don't seem to work between them. If chroma is schnell (not sure, I think it started out as such but became some kind of frankenmodel over time) then comfy will let me load flux dev loras on it but they look messed up.

Anonymous

9/3/2025, 10:30:46 PM

No.106476146

[Report]

>generate with SDXL

>fix with Gemini

>animate with Grok

is there seriously a better way with a measly 8GB VRAM GPU?

Anonymous

9/3/2025, 10:31:00 PM

No.106476148

[Report]

>>106476305

>>106469492 (OP)

sauce on the nazi anons

Anonymous

9/3/2025, 10:32:03 PM

No.106476158

[Report]

>>106476079

Great k-pop 1girls

>How much hand gacha did that take?

None. Got lucky since I didn't tag characters.

>>106476091

ace of spades, space of aids

Anonymous

9/3/2025, 10:42:19 PM

No.106476241

[Report]

>>106476075

Damn that sucks. Thanks for the response

Anonymous

9/3/2025, 10:43:59 PM

No.106476251

[Report]

>>106476037

https://github.com/pollockjj/ComfyUI-MultiGPU

VRAM block splitting is supported by this node. If you're looking for parallel inferencing, I have no clue.

Anonymous

9/3/2025, 10:46:38 PM

No.106476271

[Report]

>>106476154

ai local is chinese. you are on wrong thread faggot shizo

Anonymous

9/3/2025, 10:50:39 PM

No.106476305

[Report]

>>106476311

>>106476148

What do you mean?

Anonymous

9/3/2025, 10:51:12 PM

No.106476311

[Report]

>>106476305

I mean a catbox anon

Anonymous

9/3/2025, 10:51:36 PM

No.106476313

[Report]

>>106475916

i realize i never tried genning mirrors. looks pretty cool

Anonymous

9/3/2025, 10:52:21 PM

No.106476319

[Report]

Anonymous

9/3/2025, 10:53:53 PM

No.106476331

[Report]

>>106475074

The Chad haoming just implementing things without fanfare vs the virgin panchovix promising everything with fanfare and BTFOing himself before he even started.

Anonymous

9/3/2025, 10:56:17 PM

No.106476353

[Report]

>>106476110

>he doesn’t have an aggressive preview interval

Ngmi

Anonymous

9/3/2025, 10:59:38 PM

No.106476386

[Report]

>>106476418

>>106476198

how?? you can vid2vid?

Anonymous

9/3/2025, 11:01:48 PM

No.106476406

[Report]

>>106476435

>>106476396

Features [Sep. 03]

Most base features of the original Automatic1111 Webui should still function

New Features

Support Wan 2.2

txt2img, img2img, txt2vid, img2vid

Support Nunchaku (SVDQ) Models

dev, krea, kontext, t5

Support Flux Kontext

img2img, inpaint

>>106476386

>>106476395

its an i2v, flux > wan

Anonymous

9/3/2025, 11:05:19 PM

No.106476435

[Report]

>>106476447

>>106476396

>>106476406

He’s been silently grinding. I’ve used forge classic for months now and it just works. Haven’t upgraded to the flux/wan builds yet cause I don’t want to be a guinea pig beta tester, but after a few more releases mite b gud. Panchovix shat on.

Anonymous

9/3/2025, 11:05:36 PM

No.106476436

[Report]

OMG GUYS! it's gradio!!! So much wow! let's bloat together!

Anonymous

9/3/2025, 11:07:20 PM

No.106476447

[Report]

>>106476435

I use reforge for illu/noob anime stuff (wai v14, etc) and comfy for wan 2.2 and qwen edit, but im curious how long gens take in this gui. worth a try at least.

Anonymous

9/3/2025, 11:09:00 PM

No.106476461

[Report]

>>106476396

anyone got gen times comparing to nooodle ui?

Anonymous

9/3/2025, 11:19:56 PM

No.106476544

[Report]

>>106475982

This works in general, it's not complicated. I haven't been able to find a specific node for extracting the last frame of a batch of images, though.
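If no existing node fits, a tiny custom node is one option. This is a sketch assuming the usual ComfyUI custom node layout (`INPUT_TYPES`, `RETURN_TYPES`, `FUNCTION`, `NODE_CLASS_MAPPINGS`) and that an IMAGE batch is indexed along its first dimension. The node name `LastFrameFromBatch` is made up.

```python
class LastFrameFromBatch:
    """Hypothetical custom node: keeps only the final image of an IMAGE batch."""

    @classmethod
    def INPUT_TYPES(cls):
        return {"required": {"images": ("IMAGE",)}}

    RETURN_TYPES = ("IMAGE",)
    FUNCTION = "pick_last"
    CATEGORY = "image/batch"

    def pick_last(self, images):
        # Slice instead of index so the result is still a batch (of one image).
        return (images[-1:],)


# Registration dictionary that ComfyUI looks for in a custom node module.
NODE_CLASS_MAPPINGS = {"LastFrameFromBatch": LastFrameFromBatch}
```

The output could then be wired straight into the image input of the next i2v pass.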

Anonymous

9/3/2025, 11:21:21 PM

No.106476556

[Report]

Anonymous

9/3/2025, 11:26:13 PM

No.106476605

[Report]

>>106476396

But muh speed! My noodes! You CANT make something easy! We are supposed to be big boys!

>>106476418

well her expression looks a lot like this

Anonymous

9/3/2025, 11:29:48 PM

No.106476630

[Report]

>>106476861

>>106476396

Yeeeh! the Forge UI with all the improvements and implementations

Want something? Xformers? Cuda? SAtention?

Yes? Just install it yourself with this outdated third party guide!

Anonymous

9/3/2025, 11:32:47 PM

No.106476646

[Report]

>>106471573

i distrohopped and current thing krita works with 1998 hp microsoft srgb profile again

Anonymous

9/3/2025, 11:32:56 PM

No.106476647

[Report]

>>106477167

Anonymous

9/3/2025, 11:33:59 PM

No.106476657

[Report]

>>106476621

you surely mean this

>>106476198

Anonymous

9/3/2025, 11:35:00 PM

No.106476661

[Report]

>>106476656

bow physics good

boob physics bad

Anonymous

9/3/2025, 11:36:24 PM

No.106476667

[Report]

Anonymous

9/3/2025, 11:37:52 PM

No.106476679

[Report]

>>106474422

Perhaps. Hope you get a better computer soon troon destroyer 9000. Show us how it's done

The eyes are fun to gen though. thx original eyes anon for inspo

Anonymous

9/3/2025, 11:38:06 PM

No.106476681

[Report]

Can anyone throw a qwen edit workflow my way?

Anonymous

9/3/2025, 11:41:55 PM

No.106476720

[Report]

Anonymous

9/3/2025, 11:42:54 PM

No.106476725

[Report]

>>106476898

so how come 2.1 lightx2 works better than 2.2, for wan 2.2?

Anonymous

9/3/2025, 11:43:55 PM

No.106476735

[Report]

love qwen edit. it just works.

>replace the blonde anime girl with Miku Hatsune.

Anonymous

9/3/2025, 11:48:25 PM

No.106476771

[Report]

>>106476629

it's not that old, man!

Anonymous

9/3/2025, 11:52:02 PM

No.106476809

[Report]

Anonymous

9/3/2025, 11:54:16 PM

No.106476832

[Report]

>>106476924

>>106476656

Not bad. I like how the shadow of the bow string tracks along her leg as it moves.

Anonymous

9/3/2025, 11:55:54 PM

No.106476838

[Report]

>>106476889

Anonymous

9/3/2025, 11:55:57 PM

No.106476839

[Report]

>>106477189

has anyone gen'd a good medusa? I swear ai cant do a good medusa

Anonymous

9/3/2025, 11:58:15 PM

No.106476856

[Report]

Anonymous

9/3/2025, 11:58:37 PM

No.106476861

[Report]

>>106476900

>>106476630

>Yes? Just install it yourself with this outdated third party guide!

you mean just like we do with comfy?

Anonymous

9/3/2025, 11:59:15 PM

No.106476867

[Report]

>>106473797

Is this someone you're stalking

Anonymous

9/4/2025, 12:01:59 AM

No.106476889

[Report]

>>106476938

>>106476838

zoom in, enhance, what is the location?

>>106476725

some say because comfy's/kijai's implementation is wrong and the wan moe ksampler is the "correct" implementation but I haven't had good experiences with it in my limited testing

https://github.com/stduhpf/ComfyUI-WanMoeKSampler/tree/master

Anonymous

9/4/2025, 12:02:40 AM

No.106476900

[Report]

>>106476861

even the org wiki maintainer doesn't know shit about the software

Anonymous

9/4/2025, 12:05:21 AM

No.106476924

[Report]

>>106476935

>>106476832

are you still using pixelwave, or is this qwen? i haven't kept up with what's new

Anonymous

9/4/2025, 12:05:48 AM

No.106476929

[Report]

>>106476898

I get better motion with this sampler when using with or without 2.2 lora.

Anonymous

9/4/2025, 12:06:39 AM

No.106476935

[Report]

>>106476924

That picture was variously pixelwave and chroma through different iterations. The last img2img was pixelwave.

Anonymous

9/4/2025, 12:07:14 AM

No.106476938

[Report]

>>106476889

Outside Chroma HQ with lora on -1. This is inside with lora on 1

Anonymous

9/4/2025, 12:08:03 AM

No.106476946

[Report]

>>106476973

Can qwen edit run the lightning lora or is it gonna fuck shit up? Also what was the magic number that prevented the zoom?

Anonymous

9/4/2025, 12:11:56 AM

No.106476973

[Report]

>>106476982

>>106476946

yeah there is an 8 step one specifically for qwen edit.

Anonymous

9/4/2025, 12:12:57 AM

No.106476982

[Report]

Anonymous

9/4/2025, 12:13:18 AM

No.106476984

[Report]

Anonymous

9/4/2025, 12:19:58 AM

No.106477030

[Report]

Man, Qwen is so fucking literal with everything.

Anonymous

9/4/2025, 12:32:02 AM

No.106477127

[Report]

>>106477085

>People will argue it's a bad thing.

Anonymous

9/4/2025, 12:33:11 AM

No.106477143

[Report]

>models looking like slopped shit is good because... i can larp as a prompt engineer!

Anonymous

9/4/2025, 12:34:01 AM

No.106477155

[Report]

>>106477279

>>106477085

You could 100% sell fashion like this these days bro. Go make a bank.

Anonymous

9/4/2025, 12:34:24 AM

No.106477161

[Report]

>>106477085

What did it take literally?

Anonymous

9/4/2025, 12:35:11 AM

No.106477167

[Report]

>>106476647

Hahaha. Nice.

Anonymous

9/4/2025, 12:38:17 AM

No.106477189

[Report]

>>106476839

Best I could do

Anonymous

9/4/2025, 12:48:29 AM

No.106477261

[Report]

>>106476629

lower ur tone before speaking here again

Anonymous

9/4/2025, 12:50:12 AM

No.106477279

[Report]

>>106477155

Sounds like work, man.

Anonymous

9/4/2025, 12:56:01 AM

No.106477323

[Report]

>>106476629

Absolutely based

Anonymous

9/4/2025, 1:01:34 AM

No.106477369

[Report]

Anonymous

9/4/2025, 1:02:43 AM

No.106477378

[Report]

Anonymous

9/4/2025, 1:02:48 AM

No.106477380

[Report]

Anonymous

9/4/2025, 1:48:20 AM

No.106477738

[Report]

Anonymous

9/4/2025, 5:34:00 AM

No.106479197

[Report]

>>106476898

Blurrier than KJ nodes at 3:3 steps... I'll stick with KJ.