/ldg/ - Local Diffusion General

Anonymous

9/6/2025, 6:44:32 PM

No.106503413

>>106503449

>model-00001-of-00010.safetensors

should I stitch everything?

Anonymous

9/6/2025, 6:45:53 PM

No.106503430

>>106503467

Anonymous

9/6/2025, 6:47:36 PM

No.106503449

>>106503468

>>106503413

Don't just cat them together; each file has its own header and metadata.
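For reference, this is why plain concatenation fails: a .safetensors file starts with an 8-byte little-endian length, then a JSON header describing every tensor (name, dtype, shape, byte offsets, plus an optional `__metadata__` entry), then the raw tensor bytes. A concatenated file still has a single header at the start, so the other shards' headers and tensors are not described by it (a strict loader may reject such a file outright). A minimal stdlib-only sketch that prints what each shard's header contains; `read_safetensors_header` is a hypothetical helper name, not part of the safetensors library.

```python
import json
import struct
import sys


def read_safetensors_header(f):
    """Parse the JSON header of an open .safetensors file (binary mode).

    Layout: 8-byte little-endian unsigned length N, then N bytes of UTF-8
    JSON (tensor name -> dtype/shape/data_offsets, plus optional
    "__metadata__"), then the raw tensor bytes.
    """
    (header_len,) = struct.unpack("<Q", f.read(8))
    return json.loads(f.read(header_len).decode("utf-8"))


if __name__ == "__main__":
    # usage: python inspect_shards.py model-00001-of-00010.safetensors ...
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            header = read_safetensors_header(f)
        n_tensors = sum(1 for key in header if key != "__metadata__")
        print(f"{path}: {n_tensors} tensors, metadata={header.get('__metadata__')}")
```

Each shard reports only its own tensors, which is exactly the information a naive `cat` throws away.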

Anonymous

9/6/2025, 6:48:21 PM

No.106503456

>>106503578

>>106503425

What layer preset do you use? One anon claimed that 'blocks' is superior but slower.

Anonymous

9/6/2025, 6:48:31 PM

No.106503460

comfy should be dragged out on the street and shot

Anonymous

9/6/2025, 6:49:17 PM

No.106503467

>>106503430

wow new comfy UI looks fire

Anonymous

9/6/2025, 6:49:18 PM

No.106503468

>>106503714

>>106503449

alright I will find the best way to do it then

>>106503402 (OP)

New to this whole ComfyUI thing; here's my first image, made with:

CLIPLoader (GGUF) Qwen2.5-VL-7B-Instruct-Q3_K_M.gguf

Unet Loader (GGUF) qwen-image-Q4_k_m.gguf

Load VAE qwen_image_vae.safetensors

Anonymous

9/6/2025, 6:54:57 PM

No.106503527

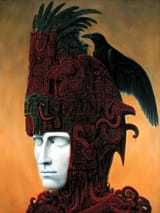

So I've been playing with those Chroma Radiance models (very underbaked I guess; the then-latest one was okay, but the one released a day later was absolute shit). I noticed it commits to denoising some stuff into something it can't recover from. This happened with Euler, etc. I thought, huh, I wish there was some mechanism for it to correct its mistakes.

Anyway, I noticed that Euler ancestral worked much better. I tried it with current Chroma (v48) and it works even better there. Pic related: Euler ancestral and DPM++ 2S ancestral "converge" to the first image, while nearly all of the non-ancestral samplers converge to the second image. Keep in mind that I'm using a LoRA based on a Belgian comic book, so the one on the left is more correct. Give Chroma with ancestral samplers a chance.
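A rough illustration of why ancestral samplers can "correct" themselves: each step goes deterministically down to a noise level `sigma_down` below the target, then injects fresh Gaussian noise of size `sigma_up` to land back on the target sigma, so early commitments get partly re-noised. Plain Euler never adds new noise. This scalar sketch is modeled on the k-diffusion-style Euler ancestral update; `denoise` is a hypothetical stand-in for the model's clean-image prediction, not a real API.

```python
import math
import random


def ancestral_split(sigma_from, sigma_to, eta=1.0):
    """Split a step into a deterministic target sigma_down and a noise std sigma_up,
    chosen so that sigma_down**2 + sigma_up**2 == sigma_to**2."""
    if eta == 0:
        return sigma_to, 0.0
    sigma_up = min(
        sigma_to,
        eta * math.sqrt(sigma_to**2 * (sigma_from**2 - sigma_to**2) / sigma_from**2),
    )
    sigma_down = math.sqrt(sigma_to**2 - sigma_up**2)
    return sigma_down, sigma_up


def euler_step(x, sigma, sigma_next, denoise):
    """Plain Euler: follow the predicted direction, no new noise."""
    d = (x - denoise(x, sigma)) / sigma
    return x + d * (sigma_next - sigma)


def euler_ancestral_step(x, sigma, sigma_next, denoise, rng, eta=1.0):
    """Euler ancestral: step down to sigma_down, then re-inject fresh noise."""
    sigma_down, sigma_up = ancestral_split(sigma, sigma_next, eta)
    d = (x - denoise(x, sigma)) / sigma
    x = x + d * (sigma_down - sigma)
    return x + rng.gauss(0.0, 1.0) * sigma_up
```

With `eta=0` the ancestral step reduces to plain Euler; larger `eta` re-noises more aggressively at each step.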

I just noticed chroma loads faster than sd1.5, kek. Shame it's still slow to gen, even with the 8-step LoRAs.

Anonymous

9/6/2025, 6:59:10 PM

No.106503578

>>106503456

Oh, I'm using diffusion-pipe for Chroma atm. If OneTrainer were in the browser (like Comfy), then I'd probably use it. That being said, I've found block-only training to work well in the past for other models like Sigma.

Anonymous

9/6/2025, 7:00:01 PM

No.106503588

>>106503742

>>106503546

desu it looks all right for something "underbaked". is it slower to use the radiance one than the regular chroma model?

Anonymous

9/6/2025, 7:01:46 PM

No.106503607

>>106503738

https://github.com/leejet/stable-diffusion.cpp/blob/master/docs/wan.md

bros, anistudio is going to have true multigpu before cumfart. what is this timeline?

Anonymous

9/6/2025, 7:03:02 PM

No.106503623

>>106503660

>>106503577

>I just noticed chroma loads faster than sd1.5, kek.

absolute cap. actually load both and take screenshots of time to image.

Anonymous

9/6/2025, 7:03:17 PM

No.106503628

>>106503546

why do these models have such a hard time with swords? it's not like there's a lack of training data, but even the best models struggle with drawing swords correctly

Anonymous

9/6/2025, 7:03:30 PM

No.106503634

Anonymous

9/6/2025, 7:04:03 PM

No.106503640

>>106503626

i just love big pendulous bosoms so much it's unreal bros.

Anonymous

9/6/2025, 7:05:33 PM

No.106503656

Blessed thread of frenship

Anonymous

9/6/2025, 7:06:06 PM

No.106503660

>>106503691

>>106503623

I'm not doing all that, got gens running. Try it for yourself, I'm using the chroma workflow from

https://github.com/ChenDarYen/ComfyUI-NAG

Anonymous

9/6/2025, 7:06:08 PM

No.106503661

>>106503626

lust provoking nun

Anonymous

9/6/2025, 7:09:14 PM

No.106503691

>>106503737

>>106503660

unless you're misusing the word "loads" somehow, it's physically impossible: sd1.5 is a smaller model than chroma. the smaller one will load faster, simple as.

Anonymous

9/6/2025, 7:11:22 PM

No.106503714

>>106503735

>>106503468

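import pathlib

import safetensors.torch

# Merge sharded safetensors files into one. Every tensor is held in RAM at once.
# Match only the shards, so a merged.safetensors from a previous run isn't re-read.
state_dict = {}
files = sorted(pathlib.Path(".").glob("model-*-of-*.safetensors"))
for file in files:
    state_dict.update(safetensors.torch.load_file(file))
safetensors.torch.save_file(state_dict, "merged.safetensors")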
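Before running a merge like the one above, it can help to confirm every shard is actually present. Hugging Face-style sharded checkpoints usually ship a `model.safetensors.index.json` whose `weight_map` maps each tensor name to the shard file that holds it. A stdlib-only sketch, assuming that index layout and that the index sits next to the shards; `missing_shards` is a hypothetical helper.

```python
import json
import pathlib


def missing_shards(index_path):
    """Return shard filenames referenced by the index but absent on disk."""
    index_path = pathlib.Path(index_path)
    weight_map = json.loads(index_path.read_text())["weight_map"]
    needed = sorted(set(weight_map.values()))
    return [name for name in needed if not (index_path.parent / name).exists()]


if __name__ == "__main__":
    index = pathlib.Path("model.safetensors.index.json")
    if index.exists():
        missing = missing_shards(index)
        print("all shards present" if not missing else f"missing: {missing}")
```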

Anonymous

9/6/2025, 7:12:28 PM

No.106503718

>>106505006

>>106503577

>I just noticed chroma loads faster than sd1.5

Unless you're using Q4 quants of both Chroma and T5-XXL, not a chance.

SD1.5 is 4GB; the Chroma checkpoint is ~16GB, plus T5-XXL, which is another 9GB.

What probably happened is that the Chroma checkpoint was already cached in RAM.
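Rough arithmetic behind that: load time is about bytes read divided by read throughput, so with the sizes quoted above, Chroma should take several times longer to read from the same disk as SD1.5, unless its files are already in the OS page cache. The throughput figures below are placeholder assumptions, not measurements, and real loads add deserialization and transfer overhead on top.

```python
def load_seconds(size_gb, throughput_gb_s):
    """Idealized load time: size divided by sustained read throughput."""
    return size_gb / throughput_gb_s


SD15_GB = 4.0           # as quoted in the post
CHROMA_GB = 16.0 + 9.0  # Chroma checkpoint + T5-XXL, as quoted

# Placeholder throughputs (assumptions): a cold read from an SSD vs.
# files already sitting in the OS page cache.
DISK_GB_S = 2.0
CACHE_GB_S = 15.0

if __name__ == "__main__":
    print(f"SD1.5 cold:    {load_seconds(SD15_GB, DISK_GB_S):.1f} s")
    print(f"Chroma cold:   {load_seconds(CHROMA_GB, DISK_GB_S):.1f} s")
    print(f"Chroma cached: {load_seconds(CHROMA_GB, CACHE_GB_S):.1f} s")
```

With these placeholder numbers, a page-cached Chroma lands in the same ballpark as a cold SD1.5 read, which fits the caching explanation.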

Anonymous

9/6/2025, 7:14:19 PM

No.106503735

>>106503714

I'm trying something else but thanks for sharing anon

Anonymous

9/6/2025, 7:14:33 PM

No.106503737

>>106503855

>>106503691

Try it for yourself or don't lol, anyway, back to genin'

Anonymous

9/6/2025, 7:14:40 PM

No.106503738

>>106503607

Nice. Now all he needs to do is... make the engine actually work (and not crash as well).

>>106503588

Oh, no, the images show v48.

Pic related is from the version of Radiance from the day before yesterday. The one I currently have on my computer literally cannot generate good pictures, just awful noisy crap.

Radiance is about 1.5x slower than Chroma/Flux on my AMD card. Use the ChromaRadianceOptions node and set nerf_tile_size to 0 or 4k. It doesn't affect VRAM at all on my machine.

Anonymous

9/6/2025, 7:15:55 PM

No.106503751

>>106503843

>>106503742

Forgot to mention the most important thing: my v48-trained LoRAs work on Radiance.

Anonymous

9/6/2025, 7:17:25 PM

No.106503764

>>106503789

>>106503546

prompt? i have a hard time getting disembodied heads to work

Anonymous

9/6/2025, 7:17:59 PM

No.106503769

>>106502771

>Just ohhh, mmm, hmm that kind of stuff. I think contextually the words in the script helped it figure out what I wanted.

That's actually crazy, I have to try this out. Thanks anon

Anonymous

9/6/2025, 7:17:59 PM

No.106503770

>>106504268

>>106503497

Hope you stick around and post some cool stuff, Anon.

>>106503546

There's so much stuff that works on certain prompts/gens and utterly breaks on others with Chroma, it's kinda ridiculous. Even after genning a thousand plots I'm still not 100% sure on what I would stick with.

>>106503626

Her shadow makes her look like a muppet, heh.

Anonymous

9/6/2025, 7:20:06 PM

No.106503789

>>106503764

>This is a color illustration of a Miku Hatsune, a girl with teal twintails. wearing a gray dress and a black cloak with a golden clasp. In her left hand she holds a bloodied sword, drenched in blood, dripping with blood. In her right hand is the decapitated head of Teto, a girl with two red tornado curls. She is lifting it by one of the curls. She is walking a stony path on a moor. It is overcast.

I'm having a really hard time getting the neck-gore part to work. I'm quite certain these models haven't seen decapitated heads.

Anonymous

9/6/2025, 7:20:28 PM

No.106503793

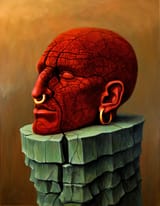

Guys a little help, what models can be used to achieve this style?

>>106503803

Attaching a few more

>>106503742

The 'fuzzy edges' of Radiance keep getting less prominent, but they're still there.

Has Lodestones mentioned how many more epochs he plans to train, or will he just keep going until it's good or the money runs out?

Anonymous

9/6/2025, 7:23:20 PM

No.106503830

>>106503869

Anonymous

9/6/2025, 7:24:50 PM

No.106503843

>>106503751

Yeah, this is really great. Whether you use the VAE or pixel space doesn't seem to have any effect on the layers a LoRA is trained on, so a standard VAE-trained Chroma LoRA should work exactly as well on Chroma pixel space, aka Radiance, at least in theory.
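The "in theory" part can be made concrete: a LoRA only adds low-rank deltas to specific named weight matrices, so it applies cleanly to another checkpoint when every targeted layer exists there with the same shape. A minimal sketch with shapes as plain tuples; the `.lora_down.weight` / `.lora_up.weight` key suffixes follow one common naming convention but are an assumption here, and real loaders also handle alpha scaling and other key formats.

```python
def lora_targets(lora_shapes):
    """Map each base layer name to the (out, in) shape its LoRA delta implies.

    Assumes keys like '<layer>.lora_down.weight' (rank x in) paired with
    '<layer>.lora_up.weight' (out x rank); a missing pair raises KeyError.
    """
    targets = {}
    for key, shape in lora_shapes.items():
        if key.endswith(".lora_down.weight"):
            layer = key[: -len(".lora_down.weight")]
            up = lora_shapes[layer + ".lora_up.weight"]
            targets[layer] = (up[0], shape[1])
    return targets


def incompatible_layers(lora_shapes, base_shapes):
    """Return LoRA target layers missing from the base model or shaped differently."""
    bad = []
    for layer, delta_shape in lora_targets(lora_shapes).items():
        if base_shapes.get(layer + ".weight") != delta_shape:
            bad.append(layer)
    return sorted(bad)
```

An empty result means every layer the LoRA touches exists with a matching shape; it says nothing about whether the result looks good.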

Anonymous

9/6/2025, 7:26:15 PM

No.106503855

>>106503737

ah ok gotcha so it's a classic case of

>i don't actually understand what's happening but i'm still right and you're wrong anyway kthxbye.

typical.

Anonymous

9/6/2025, 7:26:39 PM

No.106503861

>>106503822

nta but he seems to have unlimited money. the initial fundraiser only raised 25k but he already spent 6x that. he either has a secret sponsor or he's throwing his entire house into this

Anonymous

9/6/2025, 7:27:48 PM

No.106503869

>>106503949

Anonymous

9/6/2025, 7:28:11 PM

No.106503874

>>106503742

oof, that still looks rough. let's hope it reaches v48's quality with more training

Anonymous

9/6/2025, 7:28:27 PM

No.106503879

>>106503949

>>106503803

>>106503816

probably any noobAI/Illustrious based mix with the right artist/series tags desu. doesn't look particularly obscure or unique, just that kinda late 90s cowboy bebop/eva aesthetic.

Anonymous

9/6/2025, 7:28:54 PM

No.106503883

>>106503980

>>106503822

With Radiance I've noticed it will not go above the resolution it's trained at. With other models it's like "yeah, let's add some artefacts", but with Radiance it's like "here's your upscaled 512x512 illustration". This is my experience with non-photo stuff (I don't really do any photorealistic stuff, so idk).

Anonymous

9/6/2025, 7:34:42 PM

No.106503949

>>106503883

isn't this true with any model (cascade, sana) that claims to support '4k gens'?

>We introduce Sana, a text-to-image framework that can efficiently generate images up to 4096 × 4096 resolution

it's all bullshit, no free lunch. there is no getting around the fact that it's being trained at tiny resolution.

>>106503980

I didn't mean like a proper upscale, just an interpolated one. Like when I look at a Radiance gen, I think "oh, you just gave up". It can look fine, but it's low resolution.

Anonymous

9/6/2025, 7:42:21 PM

No.106504041

Anonymous

9/6/2025, 7:43:10 PM

No.106504053

>>106504035

(cont) but my point is that it doesn't really INVENT shit.

Anonymous

9/6/2025, 7:43:39 PM

No.106504063

>>106503980

sana uses resolution binning to avoid this, so even the 1024 1.0 model can do 4k images without deformities, albeit with poor fidelity.

you'll only get bodyhorror when you set use_resolution_binning=False desu
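Roughly what resolution binning does (the `use_resolution_binning` flag in the diffusers PixArt/Sana pipelines): the requested size is snapped to the nearest supported aspect-ratio bucket at the model's native scale, the image is generated there, and the result is resized back to the requested size. That is why a 1024 model returns a clean but soft 4k image instead of body horror. The bucket table below is a small hypothetical one, not the pipeline's real table.

```python
# Hypothetical buckets for a ~1024x1024 model: aspect ratio (h/w) -> (height, width).
BINS_1024 = {
    0.5: (704, 1408),
    0.75: (896, 1184),
    1.0: (1024, 1024),
    1.33: (1184, 896),
    2.0: (1408, 704),
}


def bin_resolution(height, width, bins=BINS_1024):
    """Snap a requested size to the bucket with the closest aspect ratio."""
    ratio = height / width
    closest = min(bins, key=lambda r: abs(r - ratio))
    return bins[closest]


def plan_generation(height, width):
    """Return (size the model actually generates at, size the output is resized to)."""
    return bin_resolution(height, width), (height, width)
```

For a 4096x4096 request this plans a 1024x1024 generation followed by a 4x resize, and that final resize is where the fidelity goes.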

Anonymous

9/6/2025, 7:44:33 PM

No.106504075

>>106504359

>>106504035

I wouldn't jump to conclusions right now, it's really undertrained at the moment, did he say on his discord how much time it's gonna take?

Anonymous

9/6/2025, 7:45:38 PM

No.106504092

>>106503803

>>106503816

>>106503822

Love the 80's/90's shading. Soon I won't have to put up with basic anime art shit.

Anonymous

9/6/2025, 7:47:02 PM

No.106504113

Can you make a LoRA from pics that are below 512px? For example, for an accessory or headgear?

>https://docs.comfy.org/tutorials/flux/flux-1-uso

has anyone tried this yet? especially for style transfers/deslopping flux?

Anonymous

9/6/2025, 7:56:00 PM

No.106504228

>>106504286

>>106504211

>flux

we moved on to qwen and edit does a good enough job already

Anonymous

9/6/2025, 7:58:25 PM

No.106504263

>>106504275

>>106503816

Fixed it for this general.

I'm kidding, of course. I like the gens. Are they from some Anon or just random finds?

Anonymous

9/6/2025, 7:59:02 PM

No.106504268

>>106505573

>>106503770

I can see all the seams of your shitty upscaling job

Anonymous

9/6/2025, 7:59:44 PM

No.106504275

>>106505573

>>106504263

found it on X. nice conversion, via Qwen I'm assuming? Are you the Nazi girl qwen anon btw?

Anonymous

9/6/2025, 8:00:29 PM

No.106504285

>>106504307

>>106504211

it's really bad, the details are destroyed (worse than on Chroma lol)

Anonymous

9/6/2025, 8:00:31 PM

No.106504286

>>106504228

I use Qwen edit and even with the style transfer LORA it slops styles up.

Anonymous

9/6/2025, 8:01:13 PM

No.106504299

>>106503980

>picrel

ooooooh that takes me back

Anonymous

9/6/2025, 8:02:00 PM

No.106504307

>>106504350

>>106504285

that gen is focused on identity preservation rather than style transfer though, right?

Anonymous

9/6/2025, 8:06:04 PM

No.106504350

Anonymous

9/6/2025, 8:06:52 PM

No.106504359

>>106504384

>>106504075

I don't really watch his Discord, joined just to confirm I got the correct Radiance fork of Comfy.

But my point wasn't a reproach, just an observation. But now that I've thought about it: Might the 512 -> 1024 switch be even worse for Radiance than it was for normal Chroma? I heard Radiance converges 8x faster (1 week vs 2 months)...

>>106504359

>I heard Radiance converges 8x faster (1 week vs 2 months)...

on the paper it says it's 8x faster on inference?

https://arxiv.org/pdf/2507.23268

Anonymous

9/6/2025, 8:13:11 PM

No.106504429

>>106504384

I thought it was based on some 1+ year-old work with toy models, and this was the first proper model.

Anonymous

9/6/2025, 8:13:28 PM

No.106504432

>>106504468

>>106504384

it's 8x faster than other pixel methods, but still 1.5x slower than going for a VAE

Anonymous

9/6/2025, 8:15:56 PM

No.106504468

>>106504535

>>106504432

I think you are an LLM.

Anonymous

9/6/2025, 8:18:40 PM

No.106504502

>see radial attention got a recent update

>still nothing checked on the to do list

sigh...

Anonymous

9/6/2025, 8:21:15 PM

No.106504535

>>106504468

says the bot kek

will we at some point be able to move past the "videos are 5 seconds max because of hallucination" limit, or is this the max?

https://voca.ro/19mPhLXOiiml

I can't believe this is what finally got me to install ComfyUI.

Anonymous

9/6/2025, 8:25:31 PM

No.106504576

>>106504677

>>106504563

nope we will never ever have anything better this is the best itll ever be and yoou are a retarded faggot for thinking otherwise

Anonymous

9/6/2025, 8:29:48 PM

No.106504629

>>106504563

I think it'll get better, but that also depends on jewdia

Anonymous

9/6/2025, 8:36:14 PM

No.106504677

>>106504791

>>106504563

>we will escape last frame

>we will escape +3 second nodes

>we will escape loop n' burn workflows

>we will escape load video to extend

>we will escape 5 second hell

>>106504576

Cursed reply of uncertainty and malevolence

Anonymous

9/6/2025, 8:38:33 PM

No.106504695

>>106504756

>>106504563

with the current model, all we need is a way to chain multiple 81-frame clips together, using more than one frame as the starting latent to preserve motion
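A sketch of just the frame bookkeeping for that idea: each new 81-frame clip starts `overlap` frames before the previous one ends, so those overlapping frames (rather than a single last frame) are available as conditioning context. The overlap of 16 is an arbitrary assumption, and how the frames actually get fed back as a starting latent depends on the workflow; this only plans the windows.

```python
def plan_segments(total_frames, clip_len=81, overlap=16):
    """Return (start, end) frame windows, end exclusive, covering total_frames.

    Each window after the first begins `overlap` frames before the previous
    window ends, so those frames can condition the next clip.
    """
    if not 0 <= overlap < clip_len:
        raise ValueError("overlap must be in [0, clip_len)")
    segments = []
    start = 0
    while True:
        end = start + clip_len
        segments.append((start, end))
        if end >= total_frames:
            return segments
        start = end - overlap
```

Each clip after the first contributes `clip_len - overlap` new frames (65 with these defaults); the last window may overshoot and get trimmed.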

Anonymous

9/6/2025, 8:44:51 PM

No.106504756

>>106505160

>>106504695

I think https://github.com/bbaudio-2025/ComfyUI-SuperUltimateVaceTools does that, but then again, it's for VACE. It seems to have the best stitching nodes/workflows I've found. As for being seamless, it's hit or miss.

Anonymous

9/6/2025, 8:46:53 PM

No.106504779

>>106504790

>>106504570

what settings did you use?

Anonymous

9/6/2025, 8:48:17 PM

No.106504790

>>106504779

Pretty much the defaults of https://github.com/wildminder/ComfyUI-VibeVoice, except using Q4 and 20 steps.

Anonymous

9/6/2025, 8:48:25 PM

No.106504791

>>106504677

you forgot

>we will escape python

Hello, sharing some sloppy /adt/ Neta Lumina experiments:

- Test between Janku and Neta Lumina, tags only:

>>106502411

- Test between WAI v15, Neta Lumina, Qwen and Nano Banana, tags only:

>>106501820

- Bloat it, and a little meltie:

>>106502890

- HiResFix, 0.4 denoise:

>>106504681

Miscellaneous gens using only prose (contradicting the official guide) (I didn't use the second '>' so they don't trip the spam filter):

>106502492

>106502549

>106502523

>106502694

>106503582

>106503871

Also, and more importantly, a question:

Does anybody know why Neta Lumina is so slow compared to other "heavy" models?

Maybe because it's not SDXL/Flux/Qwen and Comfy isn't using sage attention, xformers, CUDA, etc.?

when will AI have this level of sovl?

Anonymous

9/6/2025, 8:59:29 PM

No.106504882

>>106504906

>>106504840

Interesting that anon compared Neta with... those models lol

Anonymous

9/6/2025, 9:00:49 PM

No.106504891

>>106504906

>>106501820

>booruprompting NL models

holy retard

Anonymous

9/6/2025, 9:01:52 PM

No.106504898

>>106505084

can't seem to get vibevoice to work in comfy..

VibeVoiceSingleSpeakerNode

Error generating speech: Model loading failed: VibeVoice embedded module import failed. Please ensure the vvembed folder exists and transformers>=4.51.3 is installed.

the vvembed folder is there from the git clone and transformers 4.55 is installed

Requirement already satisfied: transformers in ./comfyui/lib/python3.12/site-packages (4.55.2)
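The error lists two preconditions, and a common gotcha is that `pip` reports packages for a different interpreter than the one ComfyUI actually runs with. A stdlib-only sketch to run with ComfyUI's own Python; it only checks those two stated preconditions (the 4.51.3 minimum is taken from the error message), so it won't necessarily find the real cause. `VVEMBED_DIR` is an assumption about where the custom node was cloned.

```python
import importlib.metadata
import pathlib
import sys

MIN_TRANSFORMERS = (4, 51, 3)  # minimum stated in the node's error message
# Assumed clone location of the custom node; adjust to your install.
VVEMBED_DIR = pathlib.Path("custom_nodes/ComfyUI-VibeVoice/vvembed")


def version_tuple(version):
    """'4.55.2' -> (4, 55, 2); stops at the first non-numeric part (e.g. 'dev0')."""
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def check():
    print("interpreter:", sys.executable)
    try:
        installed = importlib.metadata.version("transformers")
    except importlib.metadata.PackageNotFoundError:
        print("transformers: NOT installed for this interpreter")
    else:
        ok = version_tuple(installed) >= MIN_TRANSFORMERS
        print(f"transformers {installed}: {'ok' if ok else 'too old'}")
    print(f"{VVEMBED_DIR}: {'found' if VVEMBED_DIR.is_dir() else 'missing'}")


if __name__ == "__main__":
    check()
```

If the interpreter path printed here differs from the one in the `pip` output above, that mismatch is the first thing to rule out.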

>>106504882

Which models do you recommend? I suffer from chronic Vramlet

>>106504891

Official guides recommend booru prompting!

Anonymous

9/6/2025, 9:05:32 PM

No.106504932

>>106504906

Naked NoobAI vPred is the only local Booru model that matters other than the potential behind Neta.

Anonymous

9/6/2025, 9:12:22 PM

No.106505006

Anonymous

9/6/2025, 9:16:06 PM

No.106505044

Friendly thread of shilling failed models

Anonymous

9/6/2025, 9:20:11 PM

No.106505084

>>106505213

>>106504898

just use the gradio interface. every audio model in comfy sucks because the interface is all over the place. it really isn't suited to that kind of work

Anonymous

9/6/2025, 9:21:00 PM

No.106505097

>>106505122

qwen is only good with a boreal lora, otherwise it's all sameface shit

>>106504906

Nah, you have to use them in conjunction; the model has no idea what you're trying to convey with just the tags.

Here is "create a image where depicting #jeanne_d'arc_alter_\(fate\) is squatting in front of pot of black roses holding a watering can and watering the roses, she is looking at the roses expressionless, the background is white,"

I am too lazy to look up her outfit tags but yeah you get the idea. I don't know what he is testing on the 2nd and 3rd ones

>slow

Heftier text encoder is my guess

Anonymous

9/6/2025, 9:22:44 PM

No.106505122

>>106505097

does it help for qwen image edit as well?

Anonymous

9/6/2025, 9:22:58 PM

No.106505125

qwen nunchaku lora yet?

Anyone recommend a ComfyUI node that lets me iterate through a list/text file to replace part of the prompt?

Example: "A dancing cat, illustrated by {artist}"

Where {artist} will be replaced by the next entry in a csv file or equivalent for each successive image.

Not useful: wildcard where it replaces the variable with a random entry from the list, rather than iterating sequentially.

Anonymous

9/6/2025, 9:26:19 PM

No.106505154

>>106505214

>>106505111 (me)

Also, you really need to use an artist mix, since all the artists in this model are underbaked as fuck

Anonymous

9/6/2025, 9:26:55 PM

No.106505160

>>106504756

yeah something like that but for wan2.2

Anonymous

9/6/2025, 9:27:07 PM

No.106505163

>Generate only comma-separated Booru tags (lowercase with spaces). Strict order; medium, meta, then general tags. Include counts (1girl), appearance, clothing, accessories, pose, expression, actions, background. Use precise Booru syntax. No extra text. Short length. Do NOT use any ambiguous language. DO NOT prefix tags with words like "meta:" or "artist:". DO NOT include artist names. Your response will be used by a text-to-image model, so avoid useless meta phrases like “This image shows…”, "You are looking at...", etc.

Anonymous

9/6/2025, 9:27:30 PM

No.106505167

>>106505152

use the ComfyUI API
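A minimal sketch of the API route for the sequential-list question, stdlib only: export the workflow in ComfyUI's API format, then queue one job per CSV entry, in file order, by POSTing `{"prompt": workflow}` to the local server's `/prompt` endpoint. The address is the default local one, and `PROMPT_NODE_ID = "6"` is a hypothetical node id; look up the id of your own positive text-encode node in the exported JSON.

```python
import copy
import csv
import json
import sys
import urllib.request

COMFY_URL = "http://127.0.0.1:8188/prompt"  # default local address; adjust if needed
PROMPT_NODE_ID = "6"  # hypothetical: id of YOUR positive CLIPTextEncode node
TEMPLATE = "A dancing cat, illustrated by {artist}"


def read_entries(lines):
    """First column of each non-empty CSV row, in file order."""
    return [row[0].strip() for row in csv.reader(lines) if row and row[0].strip()]


def build_jobs(workflow, template, entries):
    """One API payload per entry; {artist} is filled in sequentially, not randomly."""
    jobs = []
    for entry in entries:
        wf = copy.deepcopy(workflow)
        wf[PROMPT_NODE_ID]["inputs"]["text"] = template.format(artist=entry)
        jobs.append({"prompt": wf})
    return jobs


def queue(job):
    """POST one job to the ComfyUI server and return its JSON response."""
    req = urllib.request.Request(
        COMFY_URL,
        data=json.dumps(job).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    if len(sys.argv) == 3:  # usage: script.py workflow_api.json artists.csv
        with open(sys.argv[1], encoding="utf-8") as f:
            workflow = json.load(f)
        with open(sys.argv[2], newline="", encoding="utf-8") as f:
            entries = read_entries(f)
        for job in build_jobs(workflow, TEMPLATE, entries):
            print(queue(job))
```

Keeping the seed fixed in the exported workflow makes the resulting images a cleaner side-by-side comparison of the list entries.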

Anonymous

9/6/2025, 9:28:00 PM

No.106505172

>>106504843

I'd bet an uncensored model 10 times the size of Qwen could handle that.

Anonymous

9/6/2025, 9:30:15 PM

No.106505195

>>106505206

I saw something like this

Newbie question

>The most important difference between forge and reForge is that forge works with SDXL models and flux, while reForge is only for SDXL-based models. reForge itself has a few optimizations that aren't present in forge and slightly better extension compatibility, making it the best option for SDXL models between each UI.

I'm still not sure how to choose between reForge and Forge. How do I know whether I'll want to run, or at least start with, Flux models?

Anonymous

9/6/2025, 9:31:17 PM

No.106505206

Anonymous

9/6/2025, 9:31:21 PM

No.106505207

>>106505152

ask a chatbot to write you a one-liner desu

Anonymous

9/6/2025, 9:32:07 PM

No.106505213

>>106505084

>gradio

thanks.. didn't know there was one but found one

>>106505111

>>106505154

Thanks,

>Heftier text encoder

I don't know what that is, but I'm using the one Neta Lumina suggests, which is Google Gemma, plus the Flux VAE.

Also, I'm using NetaYumeLumina.

Anonymous

9/6/2025, 9:33:30 PM

No.106505227

>>106505296

>>106505214

I love how ComfyUI lets me have a txt2img hiresfix in one view

Anonymous

9/6/2025, 9:33:46 PM

No.106505229

>>106505196

>How do I know if I'll want to run or at least start with flux models?

If you have even a sliver of creativity you'll soon run into the limits of sdxl.

Anonymous

9/6/2025, 9:36:00 PM

No.106505240

>>106505319

>>106505196

NeoForge, forget ReForge, forget Forge

Anonymous

9/6/2025, 9:40:15 PM

No.106505269

>>106505335

>>106505214

You didn't actually have to download the Gemma model separately; it comes embedded if you downloaded the Civitai one. Anyway, it's basically what takes your words and turns them into the language the model speaks to make your image. Lumina uses a small LLM (Gemma 2B), while SDXL uses CLIP, which is much smaller. Using an LLM gives the advantage of understanding the context behind words, which gives you better prompt adherence.

Anonymous

9/6/2025, 9:42:56 PM

No.106505296

>>106505335

>>106505227

it's too busy and panning sucks when inferencing. like the UI has Parkinson's or something

>>106505240

neo forge is still baking, give it time. i would absolutely NOT recommend using it right now, as it's based on the wildly outdated forge instead of reforge.

Anonymous

9/6/2025, 9:46:38 PM

No.106505335

>>106505398

>>106505269

I see. If I'm understanding correctly, I'm bloating my workflow and increasing my processing time by using two Gemma 2B models.

One is already attached, and the other I downloaded and put it in the node. The reason I had to download it is that the imported workflow indicated I needed the Gemma 2B.

>>106505296

more compact!

Anonymous

9/6/2025, 9:47:04 PM

No.106505339

>>106505356

every time i get bored of wan i forget how well it understands camera controls and i just nut harder

>>106505319

NeoForge is already operational and supports all models except Qwen.

The only distinction from ReForge is the "++" samples and exotic schedulers that 99% of the time you won't use. I'm using it at the moment; it's more stable and faster than ReForge and Forge.

Anonymous

9/6/2025, 9:49:26 PM

No.106505356

>>106505339

unless you use that 4-step lora... then it disregards any sort of camera control whatsoever

Anonymous

9/6/2025, 9:49:26 PM

No.106505357

>>106505383

>>106505319

ani will steal the thunder before that happens. what would be the point of using a gradio UI if there is a full on desktop app available?

Anonymous

9/6/2025, 9:50:55 PM

No.106505371

>>106505414

>>106505354

cool. now actually use it with chroma and watch as the memory management makes you sit there like a dumb cunt for minutes.

look, i'll give you that it works completely fine for SDXL, but anything else is out of scope.

the controlnet it uses is completely out of date and missing several things.

Anonymous

9/6/2025, 9:51:06 PM

No.106505372

>>106505354

>the "++" samples and exotic schedulers that 99% of the time you won't use.

speak for yourself

Anonymous

9/6/2025, 9:52:01 PM

No.106505383

>>106505411

>>106505357

shut the fuck up, grown ups are talking. go back to adt

Anonymous

9/6/2025, 9:53:02 PM

No.106505393

>>106505401

Anonymous

9/6/2025, 9:53:18 PM

No.106505398

>>106505443

>>106505335

No, you should be fine. In comfy you load the TE and UNet separately, so what you're doing is just using another copy of the TE instead of the one in the model. Processing-wise it doesn't matter; there's not much you can do about the speed other than maybe getting sage running (which increases the speed around 30-ish%), but honestly the model is just slow.

Anonymous

9/6/2025, 9:53:38 PM

No.106505401

>>106505465

Anonymous

9/6/2025, 9:54:38 PM

No.106505411

>>106505383

you are mad because I am right

Anonymous

9/6/2025, 9:55:17 PM

No.106505414

>>106505975

>>106505371

>the controlnet it uses is completely out of date and missing several things.

Please explain this

Anonymous

9/6/2025, 9:58:13 PM

No.106505437

>>106495787

>>106495819

>>106495834

I really want to know what tags/lora anon used to get this aesthetic out of noob

>>106505398

>sage running (which increases the speed around 30-is%)

How can I achieve that? I believe it will help me with SDXL, WAN, or Qwen as well. Thank you.

Anonymous

9/6/2025, 9:59:40 PM

No.106505456

>>106505443

Also, is there an option to snap the nodes to each other for more COMPACTNESS?

Anonymous

9/6/2025, 10:00:21 PM

No.106505465

>>106505486

>>106505401

Beksinski test

Anonymous

9/6/2025, 10:01:39 PM

No.106505476

>>106505914

>>106505443

SDXL doesn't work with sage, and Qwen and Neta generate black images. But for Wan it's huge.

Anonymous

9/6/2025, 10:04:00 PM

No.106505486

>>106505538

>>106505465

Nice. If you'd oblige me, I'm interested in the set size, tagging, training params, etc. Looks really cool.

>>106505486

OneTrainer, 16GB Chroma preset. 0.0003 LR, AdamW8bit + cosine. Batch 2, per step 3. Training resolution 512. Images tagged with JoyCaption Beta One + some booru tags.
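For reference, the textbook cosine schedule starting from the 0.0003 LR above decays smoothly to the floor by the last step. This sketch has no warmup or restarts; OneTrainer's own implementation may add those, so treat it as an approximation of what "cosine" means rather than its exact code.

```python
import math


def cosine_lr(step, total_steps, base_lr=3e-4, min_lr=0.0):
    """Cosine annealing from base_lr at step 0 to min_lr at total_steps."""
    progress = min(step, total_steps) / total_steps
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    total = 1000
    for step in (0, 250, 500, 750, 1000):
        print(f"step {step:>4}: lr = {cosine_lr(step, total):.2e}")
```

Halfway through, the LR is half the base value, so most of the "big" updates happen in the first part of the run.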

Anonymous

9/6/2025, 10:15:33 PM

No.106505573

>>106504268

Damn, I changed something about my latent rescale code last evening and I might have fucked something up.

There shouldn't be any seams, because I'm not tiling at all.

It might also have been a Wan quirk, sometimes it really goes overboard with backgrounds. I dunno!

>>106504275

Yeah, hi. Have a dipsy.

Anonymous

9/6/2025, 10:16:37 PM

No.106505580

What am I missing about this? I got WebUI working and seem to only be able to create deformed beasts. I just want to generate realistic human portraits.

Anonymous

9/6/2025, 10:18:25 PM

No.106505597

>>106505618

>>106505587

how about generating at normal resolution and then downsizing

Anonymous

9/6/2025, 10:19:00 PM

No.106505602

Does lora rank matter for training character/style or is it only good for the distill experiments and other memes?
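One concrete thing rank controls is capacity: for a weight matrix of shape d_out x d_in, a rank-r LoRA trains r x (d_in + d_out) parameters instead of d_in x d_out, so parameter count (and file size) grows linearly with rank. The layer sizes below are illustrative assumptions, not any specific model's; whether a given character or style actually needs more capacity is an empirical question.

```python
def lora_params(d_in, d_out, rank):
    """Trainable parameters of one LoRA pair: A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


def total_lora_params(layer_shapes, rank):
    """Sum over all targeted layers, given (d_out, d_in) shapes."""
    return sum(lora_params(d_in, d_out, rank) for d_out, d_in in layer_shapes)


if __name__ == "__main__":
    # Hypothetical: 100 targeted 3072x3072 projections.
    shapes = [(3072, 3072)] * 100
    for r in (4, 16, 64):
        n = total_lora_params(shapes, r)
        print(f"rank {r:>2}: {n:,} params, ~{n * 2 / 1e6:.0f} MB in fp16")
```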

Anonymous

9/6/2025, 10:20:51 PM

No.106505618

>>106505623

>>106505597

This is a cropped 512x512 image in any case. What checkpoint/sampling method should I be using?

Anonymous

9/6/2025, 10:21:28 PM

No.106505623

>>106505668

>>106505618

What model are you using?

Anonymous

9/6/2025, 10:26:41 PM

No.106505670

>training samples become entirely black

>system crashes

uh... hah... okay

Anonymous

9/6/2025, 10:27:41 PM

No.106505676

>training samples become entirely black

>click "stop training"

>system crashes

uh... hah... okay

Anonymous

9/6/2025, 10:28:01 PM

No.106505678

>>106505692

>>106505668

what the fuck is even that?

Anonymous

9/6/2025, 10:29:55 PM

No.106505689

>>106505891

>>106505668

use qwen

gen 1024x1024

downscale to 256x256

Anonymous

9/6/2025, 10:30:24 PM

No.106505692

>>106505668

>>106505678

My sweet summer child

Anonymous

9/6/2025, 10:31:44 PM

No.106505710

>>106505879

>>106505538

How long does it take to converge?

Anonymous

9/6/2025, 10:39:24 PM

No.106505799

>>106505587

That's my passport picture you freak, stop doxing me.

Anonymous

9/6/2025, 10:41:11 PM

No.106505819

>>106505879

>>106505192

Very nice, there's great texture when using Chroma for art

What does it mean when it just generates a matte block of color?

Anonymous

9/6/2025, 10:45:56 PM

No.106505879

>>106506009

>>106505710

I let it run for 100 epochs, a little under 3 hours. I think I'd better raise the training resolution.

>>106505819

It's good, but I think the Image Contrast Adaptive Sharpening node from essentials is pretty much mandatory with some styles. It's just too blurry without

>>106505689

NTA but should I not be genning above 1MPixel with Qwen? Their own examples show super high resolutions being used and I get much better results when I push it to 1536x1536 (can't go any higher than that because VRAMlet)

Anonymous

9/6/2025, 10:47:29 PM

No.106505894

>>106505192

Is this darkseed

Anonymous

9/6/2025, 10:47:49 PM

No.106505898

>>106505915

>>106505865

What model? For qwen and neta you need to remove the sage attention from the bat file

Anonymous

9/6/2025, 10:49:07 PM

No.106505911

Anonymous

9/6/2025, 10:49:22 PM

No.106505914

>>106506072

>>106505476

>SDXL doesn't work with sage

what about pony, illustrious and noob?

Anonymous

9/6/2025, 10:49:23 PM

No.106505915

>>106505948

>>106505898

For qwen

>remove the sage attention from the bat file

I don't know what this means

Anonymous

9/6/2025, 10:52:22 PM

No.106505947

>TFW I don’t have voice samples of my oneitis to run in vibevoice

WHY MEEEEEEEEEE

Anonymous

9/6/2025, 10:52:26 PM

No.106505948

>>106506068

>>106505915

The launch file nigga. Also if you are using qwen regularly make a permanent copy with just the one parameter removed. I think comfy has been working on hotswapping sage, but idk if it's done yet.
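If you'd rather script that permanent copy than edit it by hand, here is a small sketch. It assumes the flag in your launch file is spelled `--use-sage-attention` (as in recent ComfyUI builds) and that the launcher is the portable build's `run_nvidia_gpu.bat`; both names are assumptions about your install, so check your own file first.

```python
import pathlib

SAGE_FLAG = "--use-sage-attention"


def strip_flag(text, flag=SAGE_FLAG):
    """Remove every whitespace-separated occurrence of flag, line by line.

    Note: runs of whitespace are collapsed to single spaces, which is fine
    for a typical one-line launcher but not for quoted paths with double spaces.
    """
    lines = [" ".join(tok for tok in line.split() if tok != flag) for line in text.splitlines()]
    return "\n".join(lines) + ("\n" if text.endswith("\n") else "")


def make_copy_without_flag(src, dst, flag=SAGE_FLAG):
    """Write a copy of the launch file at dst with the flag removed."""
    src, dst = pathlib.Path(src), pathlib.Path(dst)
    dst.write_text(strip_flag(src.read_text(), flag))


if __name__ == "__main__":
    src = pathlib.Path("run_nvidia_gpu.bat")  # assumed portable-build launcher
    if src.exists():
        make_copy_without_flag(src, "run_nvidia_gpu_no_sage.bat")
```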

Anonymous

9/6/2025, 10:54:11 PM

No.106505967

should i be using the default lanczos upscaler for Wan or is there one better you guys recommend?

Anonymous

9/6/2025, 10:55:56 PM

No.106505975

>>106505414

someone made an issue. what part do you not understand? it is missing crucial parts of ip-adapter, which makes it useless. you can't select whether you want style or composition, so instead you get nothing when using it.

Not enough chroma hate in this thread so I'll catch us up.

1. Chroma failed. The issues that were apparent early on were never fixed.

2. Lodestones is incapable of seeing a project through to completion and is incapable of documenting his process. His delusional sycophants see this as a positive.

3. Lodestones is now using donated funds to train wacky concepts with no hope of viable output, like a Qwen Edit-style edit Chroma model and a VAE-less Chroma. He may as well be burning cash on a live stream.

Anonymous

9/6/2025, 10:58:49 PM

No.106506000

>>106505990

keep crying while i jork to chroma outputs.

chroma is retarded in many ways yet it's still better than flux.

Anonymous

9/6/2025, 11:00:13 PM

No.106506007

>>106505990

AntiChromaSchizo was right all along.

>>106505879

No repeats? Or does Chroma just necessitate many many steps

Anonymous

9/6/2025, 11:01:47 PM

No.106506025

>>106506009

Not that guy, but I train 30 steps for 100-150 epochs. Still testing, though, since there's no proper info on this.

Anonymous

9/6/2025, 11:02:31 PM

No.106506033

>>106505990

>Same copypasted bait from the inception of Chroma

A better bait was

>Qwen is better

But Qwen is still leagues behind Chroma and the only evidence of it being "better" was slopped images. You still don't get the prompting freedom, variety or photorealism out of a pure txt2img prompt on Qwen, and you certainly can't just train a LoRA either as that LoRA would still have the same issues.

Interesting video on woct0rdho's radial attention on 12gb card:

https://www.youtube.com/watch?v=tNXdSnP-Tdc

and looks like he's been updating it

https://github.com/woct0rdho/ComfyUI-RadialAttn

going to give it another go later on

Anonymous

9/6/2025, 11:02:59 PM

No.106506036

>>106505990

4. Now Lodestones begs /ldg/ anons to use their GPU to train his failed model like a total narcissistic furry.

If it's not a competitive model, at least it should be a cult model.

Anonymous

9/6/2025, 11:03:12 PM

No.106506038

>>106506704

>>106505990

>3. Lodestones is now using donated funds to train whacky concepts with no hope of viable output. Like a qwen edit style edit chroma model and a vae less chroma.

These are actually the best things he's been working on. chroma itself is hopeless because of the 512 training resolution, but these experiments could produce useful knowledge for everyone.

Anonymous

9/6/2025, 11:03:52 PM

No.106506044

>>106506053

training is scary because sometimes the samples get really bad, then they get better, then they get bad again and you're scared they won't get better

Anonymous

9/6/2025, 11:04:23 PM

No.106506048

>>106506152

>>106506034

Is it still hardlocked into specific resolutions?

Anonymous

9/6/2025, 11:04:43 PM

No.106506053

>>106506077

>>106506044

just disable samples and free your mind

Anonymous

9/6/2025, 11:06:10 PM

No.106506068

>>106505948

why are you shilling comfyui so hard?

Anonymous

9/6/2025, 11:06:33 PM

No.106506071

>>106506009

>No repeats? Or does Chroma just necessitate many many steps

I've mostly trained just one concept so there's no need for repeats.

Anonymous

9/6/2025, 11:06:36 PM

No.106506072

>>106505914

anon those all use sdxl as a base

>>106506053

i'm not smart enough to fully grasp when it's finished or under- or overbaked, so i need constant samples and to sit on the edge of my seat in fear

>*anon announces his post is bait*

>*other anons immediately take said bait*

Why are you like this.

Anonymous

9/6/2025, 11:08:41 PM

No.106506089

>>106506034

considering no one talks about this, i assume it was snake oil/another meme speed up

Anonymous

9/6/2025, 11:10:27 PM

No.106506104

>>106506077

You know a LoRA is overfitted when it performs exceptionally well on the training data but struggles to generalize to new, unseen prompts and images, often exhibiting artifacts, oversaturation, or a lack of flexibility. You can identify overfitting by using validation sets to test performance, by observing learning curves that show divergence between training and validation performance over time, or by generating a grid of images across different epochs to spot deteriorating quality. Symptoms like a "burnt-out look", image distortion, or the inability to generate varied poses or styles outside the training set are strong indicators of a LoRA that has become too specialized.

Anonymous

9/6/2025, 11:10:30 PM

No.106506105

>>106506081

>Still that anon btw

Anonymous

9/6/2025, 11:10:56 PM

No.106506110

>>106506077

hmm, sounds like you just like the fear and anticipation.

Anonymous

9/6/2025, 11:12:03 PM

No.106506120

>>106506081

You may think it's bait, but those were my genuine thoughts

Anonymous

9/6/2025, 11:14:20 PM

No.106506139

>>106505891

If you have kjnodes installed, there's an 'empty latent custom presets' node.

In the custom_nodes folder of kjnodes there's a custom_dimensions.json.

Plop these in there:

```json
{ "label": "QWEN 1:1", "value": "1328x1328" },
{ "label": "QWEN 16:9", "value": "1664x928" },
{ "label": "QWEN 9:16", "value": "928x1664" },
{ "label": "QWEN 4:3", "value": "1472x1104" },
{ "label": "QWEN 3:4", "value": "1104x1472" },
{ "label": "QWEN 3:2", "value": "1584x1056" },
{ "label": "QWEN 2:3", "value": "1056x1584" }
```
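If you'd rather not hand-edit the JSON, here is a small Python sketch that does the same merge. Going by the post, it assumes custom_dimensions.json is a top-level JSON array of {"label", "value"} objects. The function name and the skip-existing-labels behaviour are my own. Pass in the path to the file inside your kjnodes folder, and back the file up first.

```python
import json
from pathlib import Path

# Same presets as the snippet above.
QWEN_PRESETS = [
    {"label": "QWEN 1:1", "value": "1328x1328"},
    {"label": "QWEN 16:9", "value": "1664x928"},
    {"label": "QWEN 9:16", "value": "928x1664"},
    {"label": "QWEN 4:3", "value": "1472x1104"},
    {"label": "QWEN 3:4", "value": "1104x1472"},
    {"label": "QWEN 3:2", "value": "1584x1056"},
    {"label": "QWEN 2:3", "value": "1056x1584"},
]


def add_presets(path, presets=QWEN_PRESETS) -> int:
    """Append presets whose label is not already present; return how many were added."""
    p = Path(path)
    data = json.loads(p.read_text(encoding="utf-8"))  # assumed: a JSON list of dicts
    existing = {entry.get("label") for entry in data}
    added = [entry for entry in presets if entry["label"] not in existing]
    data.extend(added)
    p.write_text(json.dumps(data, indent=2), encoding="utf-8")
    return len(added)
```

Running it twice is harmless because the second run finds every label already present and adds nothing.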

Anonymous

9/6/2025, 11:14:41 PM

No.106506144

Anonymous

9/6/2025, 11:15:53 PM

No.106506152

>>106506048

Watch the video, it explains it, but yeah, looks like it's still locked. Here are the widths, heights and lengths that apparently work (picrel)
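One plausible reason for the resolution lock is that sparse-attention kernels work on fixed-size token blocks, so the per-frame token count has to tile evenly. The sketch below is only an illustration of that idea. The Wan 14B geometry it uses (8x spatial and 4x temporal VAE compression, 2x2 patches) is my understanding of the model. The 128-token block size is a hypothetical parameter, not something taken from the RadialAttn README, so trust the node's own list of working sizes over this.

```python
# Assumed Wan 2.1 / 2.2-14B geometry (the 5B model uses a different VAE).
SPATIAL_VAE = 8   # pixels per latent cell along each spatial axis
TEMPORAL_VAE = 4  # video frames per latent frame (after the first)
PATCH = 2         # latent cells per token along each spatial axis


def wan_token_grid(width: int, height: int, num_frames: int) -> tuple[int, int]:
    """Return (latent_frames, tokens_per_frame) for a Wan-style video."""
    step = SPATIAL_VAE * PATCH
    if width % step or height % step:
        raise ValueError(f"width and height must be multiples of {step}")
    if (num_frames - 1) % TEMPORAL_VAE:
        raise ValueError("num_frames must be of the form 4k + 1")
    latent_frames = (num_frames - 1) // TEMPORAL_VAE + 1
    tokens_per_frame = (width // step) * (height // step)
    return latent_frames, tokens_per_frame


def fits_block_size(width: int, height: int, num_frames: int, block_size: int = 128) -> bool:
    """Hypothetical check: does each frame's token count split evenly into blocks?"""
    _, tokens_per_frame = wan_token_grid(width, height, num_frames)
    return tokens_per_frame % block_size == 0
```

Under these assumptions 832x480 gives 1560 tokens per frame, which does not divide by 128, while 768x512 gives 1536 = 12 × 128.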

>>106506092

Kek the video just came out yesterday

Anonymous

9/6/2025, 11:17:42 PM

No.106506163

>>106506183

>>106506092

Radial iirc was for better longer videos.

Anonymous

9/6/2025, 11:17:44 PM

No.106506166

>>106506325

I just started messing with OneTrainer so forgive my dumb question. Do I need ALL the files from pic related in addition to the actual checkpoint or is there a specific file that I should get instead?

I ask because if I point OneTrainer to just the base model by itself, it does not work. But if I point it to the same file from within a folder that houses all those files, it works. I want to know what file/folder is allowing me to proceed so I don't have to download everything if I don't have to.

Anonymous

9/6/2025, 11:20:13 PM

No.106506183

>>106506277

>>106506163

sure but what is the actual speed up, and how bad is the quality loss?

show me

Anonymous

9/6/2025, 11:25:22 PM

No.106506224

so say we escape the hell of 5-second videos, do you have enough vram to prompt them?

what do you guys prompt all day? your waifu?

Anonymous

9/6/2025, 11:28:12 PM

No.106506248

>>106506310

>>106506231

If it were /adt/, I would say yes, but here we mostly try and test things in general. Sometimes they're blue-balls-like, and other times they're purely technical.

>>106506183

Holy shit you are beyond retarded. Read the fucking github

https://github.com/woct0rdho/ComfyUI-RadialAttn woct0rdho's version is NOT about speed. Also look at the video

https://www.youtube.com/watch?v=tNXdSnP-Tdc

Wipe your own ass for once.

Anonymous

9/6/2025, 11:35:10 PM

No.106506308

>>106506393

>>106506277

>woct0rdho's version is NOT about speed

??

Anonymous

9/6/2025, 11:35:16 PM

No.106506310

>>106507138

>>106506248

Yeah, I think I spend more time tinkering with new tech and Comfy than actually prompting anything.

Anonymous

9/6/2025, 11:36:35 PM

No.106506325

>>106506357

>>106506166

I would use easy training scripts for sdxl

Anonymous

9/6/2025, 11:37:56 PM

No.106506337

>>106506393

>>106506277

i'm not watching your video cheng.

> woct0rdho's version is NOT about speed.

are you dumb as fuck?

Anonymous

9/6/2025, 11:39:22 PM

No.106506348

radial is not about speed retard

Anonymous

9/6/2025, 11:39:23 PM

No.106506349

>>106506231

Usually I just gen a lot of plots and plop them in here, or try new models to compare... and then plot them again.

But lately I've been very stuck with genning random 1/2/3girls after being stuck on Wan for a bit.

Even if Qwen is slopped, it's a very fun model, and I barely touched any LoRAs yet.

Image Edit is also fun, but I don't really know what to do with it anymore.

Anonymous

9/6/2025, 11:40:10 PM

No.106506357

>>106506384

Anonymous

9/6/2025, 11:40:31 PM

No.106506362

>>106505865

>What does it mean when it just generates a matte block of color?

Take "Rothko" out of the prompt.

Anonymous

9/6/2025, 11:43:21 PM

No.106506384

Anonymous

9/6/2025, 11:43:22 PM

No.106506385

Chroma is a pretty good model, I'm really enjoying using it. The problem is that it simply doesn't live up to SD1.5.

>>106506308

>>106506337

>retards cant figure out the main focus is to make longer more coherent videos

Anonymous

9/6/2025, 11:46:13 PM

No.106506409

>>106506455

Anonymous

9/6/2025, 11:46:22 PM

No.106506411

>>106506393

it does both retard, it speeds up the inference + makes longer videos more coherent
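To make the "both" concrete, here is a toy attention mask in the spirit of Radial Attention's energy-decay idea. Tokens attend fully within their own frame and adjacent frames, and the spatial window halves each time the temporal distance doubles. This is a simplified illustration under my own assumptions (1-D positions inside a frame, window = tokens_per_frame >> floor(log2(distance))). It is not the mask that woct0rdho's node or the paper actually builds. It only shows why the skipped share of attention work grows as videos get longer.

```python
def radial_like_mask(num_frames: int, tokens_per_frame: int) -> list[list[bool]]:
    """Toy mask: True where a query token may attend to a key token.

    Tokens are indexed frame-major; the position inside a frame is treated
    as 1-D for simplicity.
    """
    n = num_frames * tokens_per_frame
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        q_frame, q_pos = divmod(q, tokens_per_frame)
        for k in range(n):
            k_frame, k_pos = divmod(k, tokens_per_frame)
            dist = abs(q_frame - k_frame)
            # Same or adjacent frame: full window. Otherwise halve the window
            # each time the temporal distance doubles (floor(log2(dist))).
            level = 0 if dist < 2 else dist.bit_length() - 1
            window = max(1, tokens_per_frame >> level)
            mask[q][k] = abs(q_pos - k_pos) < window
    return mask


def density(mask: list[list[bool]]) -> float:
    """Fraction of query/key pairs that would actually be computed."""
    n = len(mask)
    return sum(sum(row) for row in mask) / (n * n)
```

With 16 tokens per frame, going from 8 to 16 frames drops the computed fraction from about 0.71 to about 0.51 under these toy rules, while nearby frames stay densely connected.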

Anonymous

9/6/2025, 11:48:18 PM

No.106506426

>>106506440

I think you get the best results with Chroma when you gen with Chroma and then use Qwen to img2img refine the output. That way you get the hyperrealism that Qwen gives you.

Anonymous

9/6/2025, 11:50:06 PM

No.106506440

>>106506393

>still needs certain resolutions and lengths

>>106506426

unironically this. i love making crazy shit in chroma that qwen simply doesn't understand and then denoising it at a low strength for a better look.
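Roughly what a low-strength refine pass does on a flow model like Qwen or Chroma: the clean latent is blended with fresh noise up to some time t, and the model only has to denoise from there. Below is a minimal sketch assuming a plain rectified-flow schedule, x_t = (1 - t) * x0 + t * noise, with t equal to the strength slider. Real samplers apply a timestep shift and their own schedule, so the effective starting t will differ from the number you type in.

```python
import random


def flow_img2img_start(x0: list[float], strength: float, seed: int = 0) -> list[float]:
    """Starting latent for a low-denoise refine pass on a rectified-flow model.

    Assumes x_t = (1 - t) * x0 + t * noise with t = strength and no timestep
    shift. strength 0 returns the input unchanged; strength 1 is pure noise.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    return [(1.0 - strength) * a + strength * e for a, e in zip(x0, noise)]
```

At low strength most of the Chroma composition survives in the blend, which is why the weird concepts stay put while the second model mostly repaints texture.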

Anonymous

9/6/2025, 11:50:51 PM

No.106506445

>>106506470

>to use comfyui please make an account

>please enter your phone number and credit card to make an account

it's going to get to this and you know it

Anonymous

9/6/2025, 11:51:24 PM

No.106506451

>>106506925

Are these settings good for training Illustrious in OneTrainer? I did some tests and sometimes I have to increase the LoRA strength to 1.2 or more for the likeness to appear.
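Here is what the strength slider does mathematically. A LoRA stores two low-rank matrices, often saved as lora_down / lora_up, and the loader adds their product to the base weight, scaled by strength × alpha / rank. The pure-Python sketch below shows that merge for one layer; the names are mine, and real loaders do this on tensors. One common reading of "needs 1.2+" is that the learned delta came out on the small side (e.g. too few steps, a low LR, or a low alpha relative to rank). That is a guess without seeing the full settings.

```python
def merge_lora(weight, down, up, strength=1.0, alpha=None):
    """Return W + strength * (alpha / rank) * (up @ down) as a new matrix.

    weight: rows x cols base matrix
    down:   rank x cols low-rank factor
    up:     rows x rank low-rank factor
    alpha defaults to rank, i.e. an alpha/rank factor of 1.
    """
    rank = len(down)
    scale = strength * ((rank if alpha is None else alpha) / rank)
    merged = [row[:] for row in weight]  # copy so the base weight is untouched
    for i in range(len(weight)):
        for j in range(len(weight[0])):
            delta = sum(up[i][r] * down[r][j] for r in range(rank))
            merged[i][j] += scale * delta
    return merged
```

Because the delta is linear in strength, 1.2 is exactly a 20% larger push in the same direction. That pushes the learned likeness harder, but also any artifacts baked in with it.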

Anonymous

9/6/2025, 11:51:41 PM

No.106506455

>>106506528

>>106506409

Please keep these coming, very good style and non-ai like

Anonymous

9/6/2025, 11:52:37 PM

No.106506459

>>106506599

>>106506449

Somebody would either fork ComfyUI or it would be replaced by another UI, at that point.

Anonymous

9/6/2025, 11:52:41 PM

No.106506461

>>106506599

>>106506449

that's fine anon, the git is still public so we can simply rip all that shit out.

now... the git repo going read-only is when people should start to worry.

Anonymous

9/6/2025, 11:54:07 PM

No.106506470

>>106506477

>>106506445

Are these base-model Chroma or are you using a LoRA?

That fine detail comes through very well I must say.

Anonymous

9/6/2025, 11:54:51 PM

No.106506477

Anonymous

9/6/2025, 11:58:49 PM

No.106506509

>>106506588

>>106506449

I think it's more like blender desu

Anonymous

9/6/2025, 11:59:17 PM

No.106506512

>>106506527

qwen image 80s on a 3060 @110w

Anonymous

9/7/2025, 12:00:40 AM

No.106506527

Anonymous

9/7/2025, 12:01:03 AM

No.106506528

>>106506545

>>106506455

That's a failbake desu. I'll come back to my multi-artist smorgasbord later. Thx though. Gathering some new training data.

Anonymous

9/7/2025, 12:04:37 AM

No.106506545

>>106506641

>>106506528

what were the settings for the failbake? and was that with onetrainer? i have stuff i want to train but i am hesitant.

Anonymous

9/7/2025, 12:10:38 AM

No.106506588

>>106506627

>>106506509

does blender make addons you have to pay for? I thought it was a non-profit foundation, not a greedy corp

>>106506459

>>106506461

this will happen because comfyorg will go public eventually. it would be at the peak of its popularity

Anonymous

9/7/2025, 12:15:35 AM

No.106506627

>>106506848

>>106506588

Blender itself? I don't think so, but there are thousands of paid blender addons

Anonymous

9/7/2025, 12:17:16 AM

No.106506637

>>106504840

>why its so slow Neta Lumina compared to other "heavy" models?

It should be as heavy as SDXL theoretically but the model has not had much if anything done to its code after having been copy pasted from the research repo in comparison with other models in ComfyUI. Doing torch.compile on the model shows how much faster it could get, I get a 2 to 3x speedup. Better hope someone steps up to the plate to do that work, ain't easy.

>>106506545

OneTrainer, 3e-4 LR on both the UNet and the text encoder, AdamW with cosine, batch size 2, noob vpred. The rest is mostly default. Perhaps simply lowering the LR would be okay. The biggest hitch is that I don't know how to compensate for a highly diverse dataset where some artists are more represented or higher quality than others, etc. Those params work fine with my single-artist sets.

I'm behind on the training lore desu.
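For reference, the usual cosine schedule decays the LR from its base value toward a floor along half a cosine wave. The minimal sketch below uses the 3e-4 base from the post. The optional linear warmup and the zero floor are assumptions, and OneTrainer's own scheduler may differ in details such as warmup handling or restarts.

```python
import math


def cosine_lr(step: int, total_steps: int, base_lr: float = 3e-4,
              min_lr: float = 0.0, warmup_steps: int = 0) -> float:
    """Learning rate at `step` for linear warmup followed by cosine decay."""
    if warmup_steps and step < warmup_steps:
        # Linear ramp from base_lr / warmup_steps up to base_lr.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(max(progress, 0.0), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

Halfway through the run the LR sits at half the base value, so lowering the base LR scales the whole curve down proportionally rather than changing its shape.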

Anonymous

9/7/2025, 12:23:01 AM

No.106506689

>>106506599

>bad things could happen in the future, i must theorize and doompost about it so i can get those delicious (you)s

>the end times are coming!! THE END TIMES ARE COMING!!!

kek

Anonymous

9/7/2025, 12:24:55 AM

No.106506701

>>106506599

>the most techworker-saturated goon hobby

>thinking you could lockdown anything from them

lol

Anonymous

9/7/2025, 12:25:05 AM

No.106506703

>>106506641

>for a highly diverse

*for what I assume is a highly diverse and semi-large sized dataset

Anonymous

9/7/2025, 12:25:20 AM

No.106506704

>>106506038

>but these experiments could produce useful knowledge for everyone.

or the opposite: because it's being trained on 512 it will look like shit regardless, leaving the actual effectiveness of these techniques unclear

Anonymous

9/7/2025, 12:29:31 AM

No.106506734

>>106506798

>>106506641

for chroma right? why are you training both the TE and unet? pretty sure the TE is turned off for a reason

fine, i'll try radial attention.

i think no one talks about it because it has retarded requirements, the prompt and resolutions need to be super specific which sucks dick.

Anonymous

9/7/2025, 12:36:48 AM

No.106506798

>>106506813

>>106506734

>for chroma right?

read the post again

Anonymous

9/7/2025, 12:37:43 AM

No.106506813

>>106506798

skipped that bit somehow, my b.

Anonymous

9/7/2025, 12:38:16 AM

No.106506817

>>106506829

>>106506787

Aren't anons ITT just stitching the videos with last-first frame?
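The last-frame/first-frame approach can be sketched as follows. It assumes each new clip is an image-to-video gen seeded with the previous clip's final frame, so that clip's first frame is (nearly) a duplicate and gets dropped at the seam. Frames are plain list items here; in practice they would be image arrays. The seed frame also goes through a VAE decode/encode round trip on every hop, which is one plausible source of the quality drift people complain about with this method.

```python
def stitch_clips(clips: list[list]) -> list:
    """Join i2v clips that were chained last-frame -> first-frame.

    Each clip after the first starts from the previous clip's final frame,
    so its frame 0 duplicates the seam and is skipped.
    """
    frames = []
    for i, clip in enumerate(clips):
        frames.extend(clip if i == 0 else clip[1:])
    return frames
```

For example, three 81-frame Wan clips stitched this way give 81 + 80 + 80 = 241 frames.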

Anonymous

9/7/2025, 12:39:57 AM

No.106506829

>>106506817

not sure how that's relevant but possibly.

not sure how they aren't getting huge degradation in quality though. there was that one video of a pokemon character slowly undressing over 40ish seconds and it didn't have any vae bullshit going on

Anonymous

9/7/2025, 12:42:26 AM

No.106506848

>>106506627

all of those are third party. the problem with blender is the licence which also holds back comfyui. both are gpl3 so saas faggots are the only ones who are able to commercialize the code

Is there a way to get hires.fix to work in comfyui like it does in forge? All the workflows i've tried work differently and i'm not able to set the upscaler and its denoising strength.

Anonymous

9/7/2025, 12:47:39 AM

No.106506892

>>106506869

Here you go, complete the rest of the nodes with the model of your choice

https://files.catbox.moe/xld1sp.json

>>106506451

if you are the /anon/ from adt, an anon there shared a screenshot when he trained his yuri girl lora some threads ago

>>106506902

>complete the rest of the nodes with the model of your choice

thanks for the json, i have no idea what the fuck im doing with nodes yet so i'll try to frankenstein it by connecting the dots and see if that works

Anonymous

9/7/2025, 12:57:26 AM

No.106506965

>>106506992

>>106506925

>if you are the /anon/ from adt,

Nope, i've never even gone to adt but i'll take a look. Do you have the screenshot or thread number by any chance?

Anonymous

9/7/2025, 1:00:45 AM

No.106506984

I think I'm nearing my end of the 'Qwen' look, but it takes fashion prompts so incredibly well that it's kinda hard to stop.

Anonymous

9/7/2025, 1:01:30 AM

No.106506989

>>106507109

>>106506943

Middle portion is where the upscale happens. Then you encode to a latent and send that to the ksampler, where you can set the denoise, steps and so on like in forge.

I left the links open because they change depending on which model you are using. If XL, just add a checkpoint loader and a vae loader and connect as needed. For others, add whatever they need to load lol.
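As I understand ComfyUI's KSampler, the denoise value on that second pass does not simply shorten the run. It builds a longer schedule of int(steps / denoise) steps and samples only the last `steps` of it. So you still get the full step count, just starting from a lower noise level, which is one reason hires-fix numbers from Forge do not map one-to-one. The toy sketch below uses a linear sigma schedule for illustration; real schedulers such as karras or simple are not linear, so the actual starting sigma will differ.

```python
def comfy_style_denoise_sigmas(steps: int, denoise: float, sigma_max: float = 1.0) -> list[float]:
    """Sigmas sampled for a given denoise, mimicking (as I understand it) ComfyUI's KSampler.

    Builds a schedule of int(steps / denoise) steps and keeps the last steps + 1
    sigma values. Uses a toy linear schedule from sigma_max down to 0.
    """
    if denoise <= 0.0:
        return []
    total = steps if denoise > 0.9999 else int(steps / denoise)
    full = [sigma_max * (1.0 - i / total) for i in range(total + 1)]
    return full[-(steps + 1):]
```

So a 20-step pass at denoise 0.5 still runs 20 steps, starting halfway down a 40-step schedule.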

Anonymous

9/7/2025, 1:01:52 AM

No.106506992

>>106507077

Anonymous

9/7/2025, 1:03:08 AM

No.106507000

>>106507049

>>106506787

anon here

it seems okay? i'm really not seeing the benefits yet but i've only gen'd one video

Anonymous

9/7/2025, 1:10:19 AM

No.106507048

>>106507061

Comfyui has this really cool new feature where if you leave the tab open long enough it will start gobbling up sys ram and raping your cpu.

Anonymous

9/7/2025, 1:10:29 AM

No.106507049

>>106507075

>>106507000

Did you use 2.1 or 2.2? I can't get it to work with any of the all-in-one 2.2

Anonymous

9/7/2025, 1:11:31 AM

No.106507061

>>106507048

Yeah I always thought my pc was too fast, glad comfy is giving us the features the real ones want.

Anonymous

9/7/2025, 1:11:36 AM

No.106507062

Anonymous

9/7/2025, 1:12:38 AM

No.106507067

>>106506077

What you're supposed to do is dump all of the lora epochs into Forge, run X/Y grid tests across various prompts and figure out which ones fit the style best with the fewest artifacts or burning. In my experience the training samples usually suck and give a different type of output.
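The X/Y approach boils down to a Cartesian product of test prompts and LoRA epoch files. The small sketch below builds that prompt list using the A1111/Forge `<lora:name:weight>` prompt syntax. The epoch file names are hypothetical. In Forge itself you would normally let the X/Y/Z plot script (e.g. with Prompt S/R) do the substitution instead of generating prompts by hand.

```python
from itertools import product


def xy_grid_prompts(prompts: list[str], lora_names: list[str],
                    weight: float = 1.0) -> list[tuple[str, str, str]]:
    """One (prompt, lora_name, full_prompt) row per cell of a prompt x epoch grid."""
    return [
        (prompt, name, f"{prompt} <lora:{name}:{weight}>")
        for prompt, name in product(prompts, lora_names)
    ]


epochs = [f"mystyle-{n:06d}" for n in (10, 20, 30)]  # hypothetical epoch filenames
for prompt, name, full in xy_grid_prompts(["1girl, standing", "1girl, sitting"], epochs):
    print(full)
```

Keeping the seed fixed across the whole grid is what makes the columns comparable, since only the LoRA file changes between cells.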

Anonymous

9/7/2025, 1:12:39 AM

No.106507068

Anonymous

9/7/2025, 1:13:16 AM

No.106507075

>>106507122

>>106507049

because the all-in-one is shit.

also, i take it back, i lowered the resolution and just keep getting errors because the res and prompt aren't exactly how it wants them.

for fairness i'll try it again in a higher res which i guess is where you'd use it but.. so far there are zero speed increases. the light loras are doing all the work while radial just lies there like a bored whore.

Anonymous

9/7/2025, 1:13:41 AM

No.106507077

>>106507085

>>106506902

Ahem... *clears throat* dear anons, I want to say that I LOVE FORGE, it is the best UI I've seen so far and I will NEVER, I repeat, NEVER touch a node in my life, did you hear me? NEVER!

>>106506943

>>106506992

Why do you want to use hiresfix in Comfy? It's absolutely inferior, there are people on reddit commenting on this.

Comfy has never figured out how lllyasviel managed to do hiresfix. He has just been coping, creating around 50 different hiresfix nodes and upscaler nodes, and upscalers of upscalers, all without any success.

Anonymous

9/7/2025, 1:14:56 AM

No.106507085

>>106507077

>the screenshot

Anonymous

9/7/2025, 1:19:01 AM

No.106507100

>>106507164

Anonymous

9/7/2025, 1:20:22 AM

No.106507106

>>106507165

I LOVE BLOAT!!!!! I LOVE PYTHON BLOOOOAAAATTTT!!!! WEB DEVELOPMENT IS THE BEEEEST!!!!

Anonymous

9/7/2025, 1:20:58 AM

No.106507109

>>106507172

>>106506989

I'm slowly figuring it out, i'm VERY new to nodes so i'll just download some workflows and rip the basics from them. I should be able to figure it out, hopefully I don't brick my comfyui with too many nodes lol

Anonymous

9/7/2025, 1:21:47 AM

No.106507114

>>106507129

So organic...

Anonymous

9/7/2025, 1:21:50 AM

No.106507116

SNAKES SNAKES ON MY WALL SNAKES UP MY ASSSS

Anonymous

9/7/2025, 1:22:46 AM

No.106507121

hey at least it isn't all programmed in Go

Anonymous

9/7/2025, 1:22:53 AM

No.106507122

>>106507162

>>106507075

Can't even get this to run, it just throws errors, and this is with the provided workflow kek

Anonymous

9/7/2025, 1:23:00 AM

No.106507123

>>106507169

why is girls smoking so fucking kino in art but trash irl?

>inb4sloppa

yeah i don't care, i also like burger king.

Anonymous

9/7/2025, 1:23:50 AM

No.106507129

>>106507137

>>106507114

chroma version?

Anonymous

9/7/2025, 1:25:42 AM

No.106507137

Anonymous

9/7/2025, 1:25:53 AM

No.106507138

>>106506310

See this in a lot of hobbies. I’m also into 3d printing and there’s definitely a split between “working on the printer is the hobby” and “end products are the hobby I just want the printer to work and be unobtrusive”. Perhaps it’s human nature.

Anonymous

9/7/2025, 1:29:34 AM

No.106507155

>schizo won

grim

Anonymous

9/7/2025, 1:30:27 AM

No.106507162

>>106507122

default wf works, it just has stupid model naming so make sure to replace them with shit that makes sense.

but honestly doesn't seem worth it. too autistic as it requires exact resolutions

Anonymous

9/7/2025, 1:30:32 AM

No.106507164

>>106507100

You sneaky fuck.

I appreciate you animating my slop.

Anonymous

9/7/2025, 1:30:42 AM

No.106507165

>>106507173

>>106507106

But enough about comfy

Anonymous

9/7/2025, 1:31:36 AM

No.106507169

>>106507212

>>106507123

it's just photogenic, looks good in movies which influenced the generation that changed its image from glamourous to trashy

plus it stinks

Anonymous

9/7/2025, 1:32:23 AM

No.106507171

>>106507179

Anyway, Euler ancestral fixes like 50% of Khroma's hand issues.

Anonymous

9/7/2025, 1:32:23 AM

No.106507172

>>106507190

>>106507109

>hopefully I don't brick my comfyui with too many nodes lol

don't worry, updates do that just fine

Anonymous

9/7/2025, 1:32:23 AM

No.106507173

>>106507165

was he not talking about comfy? lol

Anonymous

9/7/2025, 1:33:16 AM

No.106507179

>>106507191

>>106507171

thank you for reminding me to try this

>>106507172

i do not plan on updating unless absolutely necessary, and i have a backup ready in case i brick it. i just don't want to brick it since i'm a noob

Anonymous

9/7/2025, 1:35:42 AM

No.106507191

>>106507196

>>106507179

I recommend using the Euler ancestral node and pumping eta up to 1.5.
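Here is how eta enters an ancestral step in the classic k-diffusion formulation. Each step first lands on a lower sigma (sigma_down) and then adds fresh noise (sigma_up), so that the total comes back to the target sigma. Eta scales how much noise is re-injected. That re-injected noise is one plausible reason ancestral samplers can "walk back" early mistakes. Caveat: this is the variance-exploding version of the formula. For flow models like Chroma, ComfyUI may use a different variant, so treat this as an illustration rather than the exact code path.

```python
def get_ancestral_step(sigma_from: float, sigma_to: float, eta: float = 1.0) -> tuple[float, float]:
    """Split one ancestral step into (sigma_down, sigma_up).

    sigma_down**2 + sigma_up**2 == sigma_to**2. eta = 0 gives plain Euler;
    larger eta re-injects more noise, capped so sigma_up never exceeds sigma_to.
    """
    if not eta:
        return sigma_to, 0.0
    sigma_up = min(
        sigma_to,
        eta * (sigma_to**2 * (sigma_from**2 - sigma_to**2) / sigma_from**2) ** 0.5,
    )
    sigma_down = (sigma_to**2 - sigma_up**2) ** 0.5
    return sigma_down, sigma_up


def euler_ancestral_step(x, denoised, sigma_from, sigma_to, eta, rng):
    """One scalar Euler ancestral step; `denoised` is the model's x0 prediction."""
    sigma_down, sigma_up = get_ancestral_step(sigma_from, sigma_to, eta)
    d = (x - denoised) / sigma_from                # Euler derivative estimate
    x = x + d * (sigma_down - sigma_from)          # deterministic move down to sigma_down
    return x + rng.gauss(0.0, 1.0) * sigma_up      # re-noise back up to sigma_to
```

With eta above 1, large steps can hit the cap (sigma_up = sigma_to, sigma_down = 0). That step then fully re-noises, which is the "more randomness" you get from pumping eta to 1.5.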

Anonymous

9/7/2025, 1:36:07 AM

No.106507193

>>106507257

>>106507190

are you implying pros want to brick or something?

Anonymous

9/7/2025, 1:36:45 AM

No.106507196

>>106507205

>>106507191

with the clownsharksampler? i'll try. still currently fighting against radial attn but it's turning into a waste of time. could have generated so much shit in the time it took me so far.

Is anyone else having issues with ComfyUI? It seems to interfere with my prompts. I want to create prompts, but something always happens with Comfy that prevents me from doing so, wasting my free time fixing unexpected problems.

I feel like pic related

Anonymous

9/7/2025, 1:38:01 AM

No.106507202

>>106507266

>>106507190

If you just installed it, it's probably too late: the current version is pretty fucked memory-wise. Anyway, it should be ok if you are just doing XL stuff at least.

Anonymous

9/7/2025, 1:38:01 AM

No.106507203

>>106507197

>something

no please, do be more vague.

my issues with comfy so far have always been in front of the monitor.

Anonymous

9/7/2025, 1:38:17 AM

No.106507205

>>106507196

No, I meant the vanilla Euler ancestral node.

Anonymous

9/7/2025, 1:38:35 AM

No.106507209

>>106507197

why else do you think there has been more outspoken hate for the griftui?

Anonymous

9/7/2025, 1:39:02 AM

No.106507212

>>106507293

>>106507169

it really is such a great aesthetic

How many steps does WAN need without the 4-step lora?

Anonymous

9/7/2025, 1:39:27 AM

No.106507217

>>106507197

waiter, waiter, more downscaled jpegs

Anonymous

9/7/2025, 1:39:35 AM

No.106507219

>>106507228

>>106507197

Did you create your ComfyAccount? So we can telemetry your nodes¿

Anonymous

9/7/2025, 1:39:49 AM

No.106507220

Anonymous

9/7/2025, 1:41:07 AM

No.106507228

>>106507219

>¿

of course...

Anonymous

9/7/2025, 1:42:40 AM

No.106507236

>>106507252

>>106507216

It defaults to 20 per sampler, with 25 generally giving better results. When I was experimenting weeks ago, artifacting started around 15.

Anonymous

9/7/2025, 1:43:52 AM

No.106507243

>>106507252

>>106507216

20 if you go by the comfy workflow, officially it's 40 steps. for the 5B version it's 50.

Anonymous

9/7/2025, 1:44:05 AM

No.106507244

>>106507260

>>106507197

I have been here for years and have never seen a WebUI bug issue, no one saying "I don't know what's wrong with Forge when I do x thing, ..." and this is a UI that has no maintenance.

Anonymous

9/7/2025, 1:45:21 AM

No.106507252

>>106507236

>>106507243

So 20 per sampler ... No wonder there is such a strong push to shill the lightning lora

>>106507197

Why is it that in every thread (excluding the ComfyHaters) there is always someone asking for help with Comfy?

There is always some dependency issue, always some Sage Attention that isn't working as it should, always some black screen output.

What's going on?

This is excluding the ComfyHaters and focusing on the number of people asking questions about Comfy because things aren't working.

>>106507193

of course not, but noobs like me are more prone to bricking something through lack of knowledge or retardation

>If you just installed it's probably too late current version is pretty fucked memory wise

pic related is my version, is that the one that's fucked?

Anonymous

9/7/2025, 1:46:12 AM

No.106507260

>>106507244

sometimes people would kick and scream about an oom issue but it was mostly people being retarded

Anonymous

9/7/2025, 1:46:50 AM

No.106507266

>>106507377

>>106507202

meant to quote you here

>>106507257

Anonymous

9/7/2025, 1:47:13 AM

No.106507270

>>106507257

I’ve seen people in this thread say every so often lately that 3.50 was the one that seemed to turbofuck the memory issues, but I don’t use comfy so I dunno lel

Anonymous

9/7/2025, 1:47:25 AM

No.106507274

>>106507316

>>106507257

See? this is not a ComfyHater, this is another Comfy user with a problem

Anonymous

9/7/2025, 1:47:33 AM

No.106507275

>>106507262

never understood why you are such a massive faggot

Anonymous

9/7/2025, 1:48:28 AM

No.106507284

>>106507296

yeah fuck radial, i'm over it. it refuses any resolutions it should be able to take (according to gemini and gpt)

Anonymous

9/7/2025, 1:48:56 AM

No.106507290

>>106507255

greed is the motivation. the project is under control by an ex Google chink scammer and ever since there has been a lot of problems. it's not that people want comfyui to be replaced but that it must be replaced to stop the headaches, bloat and griftmaxxing

Anonymous

9/7/2025, 1:49:10 AM

No.106507293

>>106507212

definitely understand where smokeules anon was coming from

Anonymous

9/7/2025, 1:49:15 AM

No.106507294

>>106505990

chroma has worked for me since v2X, sorry you are retarded

Anonymous

9/7/2025, 1:49:39 AM

No.106507296

>>106507316

>>106507284

Don't you see? Another Comfy user, we are excluding haters (like me) and focusing on the real users with real problems

Anonymous

9/7/2025, 1:49:58 AM

No.106507300

>>106507262

he lives rent free in ldg brains to this very day

Anonymous

9/7/2025, 1:51:17 AM

No.106507308

>>106507255

because it's the main ui people use and every software project under the sun was always a buggy mess?

Anonymous

9/7/2025, 1:51:38 AM

No.106507312

Anonymous

9/7/2025, 1:51:55 AM

No.106507316

>>106507274

>>106507296

nah, you are just a comfy hating schizo. there is nothing wrong with my SOTA node spaghetti

Anonymous

9/7/2025, 1:58:41 AM

No.106507377

>>106507266

Yeah, the problem started a few versions back but that one still has it. You can try it yourself; some people seem to still be unaffected by it.

Anonymous

9/7/2025, 2:06:00 AM

No.106507450

Anonymous

9/7/2025, 2:25:08 AM

No.106507591

>>106504843

Outpainting can probably do it. Simple prompts, who knows?

Anonymous

9/7/2025, 2:35:10 AM

No.106507666

>>106507773

These threads are still consistently the most brainless thing on all of /g/.

If it gets contained to one thread then I can call it a "containment thread", but otherwise I'm legit just one step away from thinking that the mods should ban it.

Move it to /s/ or /a/.

AI is technology and you use AI to generate images of fictional anime characters with big cans by typing "anime characters with big cans". Therefore it belongs on the technology board.

Trucks move food so let's have a thread about trucks on /ck/.

I'm convinced that about 1% of the people here even have a passing interest in how AI works and how this mystifying computer program is able to create a photorealistic image of Chun-Li eating a big mac with Peter Griffin. You click the button.

Anonymous

9/7/2025, 2:51:53 AM

No.106507773

Anonymous

9/7/2025, 2:57:19 AM

No.106507806

>>106503803

Greatest anime art style in the history of anime.

Anonymous

9/7/2025, 5:12:45 AM

No.106508741
