/ldg/ - Local Diffusion General

Anonymous

6/12/2025, 2:56:52 AM

No.105566552

let's have sexi sex

>still no way to train chroma except with diffusion pipe on linux/wsl

GAY

>>105566558

this but unironic.

the easier lora training is the more the masses will come.

Anonymous

6/12/2025, 2:59:15 AM

No.105566573

On another board anon informed me that this thread is blessed. Can someone tell me if that is true?

Anonymous

6/12/2025, 2:59:41 AM

No.105566577

>>105566640

Cursed thread of tech stagnation

Anonymous

6/12/2025, 3:00:06 AM

No.105566582

total mikunog death

Anonymous

6/12/2025, 3:01:27 AM

No.105566590

>>105566598

>>105566569

>>105566558

no one will train a lora for a model that gets a new checkpoint every 4 days and which just started training with a bigger resolution that will need multiple epochs to have results

Anonymous

6/12/2025, 3:02:32 AM

No.105566598

>>105566629

>>105566590

>no one

I will, fuck nuts

It only takes an hour or so to train flux LoRA's on my GPU

Anonymous

6/12/2025, 3:03:48 AM

No.105566611

>>105566569

>gatekeeping is actually bad

hm... i dont fink so anonie

Anonymous

6/12/2025, 3:03:49 AM

No.105566612

>>105566647

Blessed thread of friendship.

Anonymous

6/12/2025, 3:04:19 AM

No.105566613

>friendship

Found the tourist.

>>105565292

yes.

>>105565315

hi comfy. I am NOT a dramaposter, I don't have a grudge against you. I just want to ask, what do you want us to provide so we can merge this in?

https://github.com/comfyanonymous/ComfyUI/pull/7965

IE, do you want an XY chart for more evidence the workflow is better? Update it so both workflows are recommended? great free software maintainers don't just do whatever people ask, but they do set expectations so we know what you require before merging. thanks.

Anonymous

6/12/2025, 3:05:55 AM

No.105566629

>>105566598

sure, you can train a lora for yourself in the meantime but nothing that wont be obsolete when chroma finishes training

Blessed thread of frenship

Anonymous

6/12/2025, 3:07:01 AM

No.105566640

Anonymous

6/12/2025, 3:07:39 AM

No.105566647

Anonymous

6/12/2025, 3:08:20 AM

No.105566650

>>105566619

+1 for chroma, sooner or later this will need to be merged anyway

Anonymous

6/12/2025, 3:08:48 AM

No.105566653

>>105566530 (OP)

Thank you for baking this thread, anon.

>>105566634

Thank you for blessing this thread, anon.

Anonymous

6/12/2025, 3:09:42 AM

No.105566658

>>105566706

>>105566619

kekd heartily

Anonymous

6/12/2025, 3:14:50 AM

No.105566694

>>105566728

Anonymous

6/12/2025, 3:16:19 AM

No.105566706

>>105566885

>>105566658

>shitty turd colour

>shitty aesthetics

>apple logo

into the trash it goes, and into the trash you also go. into the trash it all goes.

Anonymous

6/12/2025, 3:18:52 AM

No.105566728

>>105566744

Anonymous

6/12/2025, 3:19:23 AM

No.105566731

what upscaler is good

Anonymous

6/12/2025, 3:20:44 AM

No.105566744

>>105566764

Anonymous

6/12/2025, 3:20:47 AM

No.105566745

>>105566619

suddenly tsunami waves begin to fill the background, the farmer frank's head explodes into chucks of brain matter and blood.

Oh no anon.

>still no flux dev kontext

Anonymous

6/12/2025, 3:23:06 AM

No.105566758

>>105566751

But you have lots of other toys to play with.

Anonymous

6/12/2025, 3:24:14 AM

No.105566764

>>105567006

>>105566744

i'm getting wood

>>105566751

bro even the biggest pro version was mid, let alone the 5x worse one they said they will open source who knows when, what the fuck are you expecting

Anonymous

6/12/2025, 3:28:57 AM

No.105566796

>>105566777

NTA, Your number checks out. I believe you.

There we go, old VACE wan 14b without skip layer guidance looping workflow, frames are blurry (the generated frames are in the middle)

>>105566807

vs now with skip layer guidance, same seed

>>105566530 (OP)

Is

https://github.com/FizzleDorf/AniStudio/ the best UI for someone that wants something that runs bare metal instead of Python or a web UI?

Anonymous

6/12/2025, 3:36:51 AM

No.105566845

>>105566829

Sad attempt at astroturfing bro, just let it go.

>>105566706

i was just seeing if chroma could draw an imac g3, jeez

Anonymous

6/12/2025, 3:44:42 AM

No.105566893

>>105566885

i think i'm going to throw up.

Anonymous

6/12/2025, 3:45:44 AM

No.105566897

>>105566885

hans get the sledge hammer.

summary of what does this shit do for wan?

Anonymous

6/12/2025, 3:50:38 AM

No.105566929

Anonymous

6/12/2025, 3:51:24 AM

No.105566935

>>105566928

Affects motion in a way that Alibaba never really explained except for "leave that shit at the default"

Anonymous

6/12/2025, 3:52:26 AM

No.105566941

>>105566928

Fuzz amount for movement. Higher = fuzzier but moves more

Anonymous

6/12/2025, 3:53:03 AM

No.105566943

>>105566928

controls how fast the gremlins inside your gpu move

Anonymous

6/12/2025, 3:53:52 AM

No.105566948

>>105566777

>trip 7s on a doompost

it's over

Anonymous

6/12/2025, 3:54:34 AM

No.105566953

>>105566960

why skip layer guidance no work with comfy core?

Anonymous

6/12/2025, 3:54:57 AM

No.105566955

>>105566812

holy shit niiiiiiice

Anonymous

6/12/2025, 3:55:45 AM

No.105566960

>>105566953

works with kijais nodes

Anonymous

6/12/2025, 4:02:30 AM

No.105566998

>>105566812

trying to fuck around with the online version of wan. it never seems to stop generating.

Anonymous

6/12/2025, 4:02:47 AM

No.105566999

>>105566928

Stop picking at it or it might become infected.

Anonymous

6/12/2025, 4:03:39 AM

No.105567006

Anonymous

6/12/2025, 4:03:58 AM

No.105567008

>>105566829

Wait another couple years for it to be feature complete

Anonymous

6/12/2025, 4:14:48 AM

No.105567063

>snubbed again

/ldg/ has fallen

Anonymous

6/12/2025, 4:17:23 AM

No.105567075

wheres your anger anon

Is inpainting an empty space and redrawing/editing existing pixels the same? Or does each need its own type of work?

Anonymous

6/12/2025, 4:19:14 AM

No.105567088

>>105567136

Anonymous

6/12/2025, 4:22:33 AM

No.105567105

>>105567144

>>105567092

my pic wasn't added in the collage

>>105567078

real inpainting fills the masked area with latent noise and attempts to paint it in a way that fits the surrounding area. Problem is most models aren't very good at inpainting and so anons blame comfyui. A real inpaint model just works except that it will need further processing, also real inpaint models require 1.00 denoise strength to actually work.

just set a higher batch, walk away, come back and pick the best result, and then use controlnet and/or just normal low denoise with a non-inpaint model to fix the image.
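A rough sketch of the "fill the masked area with latent noise" idea described above, in plain Python on a single-channel grid. The function name `noise_masked_latent` and the linear mix used for `denoise` are illustrative assumptions, not how any particular sampler or ComfyUI node implements it; a real sampler follows a noise schedule instead.

```python
import random

def noise_masked_latent(latent, mask, denoise=1.0, seed=0):
    """Return a copy of `latent` with the masked cells replaced by noise.

    latent:  2D list of floats (one latent channel, for simplicity)
    mask:    2D list of the same shape, 1.0 = repaint, 0.0 = keep
    denoise: 1.0 replaces masked cells with pure Gaussian noise;
             lower values keep part of the original value
             (simple linear mix, an assumption for illustration).
    """
    rng = random.Random(seed)
    out = []
    for row, mask_row in zip(latent, mask):
        new_row = []
        for value, m in zip(row, mask_row):
            noise = rng.gauss(0.0, 1.0)
            mixed = (1.0 - denoise) * value + denoise * noise
            # Outside the mask (m == 0) the original value passes through.
            new_row.append(m * mixed + (1.0 - m) * value)
        out.append(new_row)
    return out
```

With `denoise=1.0` nothing of the original survives under the mask, which is the setting the post says real inpaint models expect; with a low `denoise` the masked area stays close to what was already there.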

Anonymous

6/12/2025, 4:25:24 AM

No.105567125

>>105567078

there is no real good inpaint though, better off just using a latent noise mask with a decent model imo.

Anonymous

6/12/2025, 4:26:41 AM

No.105567136

>>105567088

Cool, share lora?

Anonymous

6/12/2025, 4:26:50 AM

No.105567138

>>105567209

a stone statue of Miku Hatsune on a pedestal, in a museum in Tokyo.

chroma 36 detailed, pretty neat, hidream cant get stone right

Anonymous

6/12/2025, 4:26:52 AM

No.105567139

>>105567149

flux fill is annoying because it tries to fill the whole mask with what you prompt instead of removing crap you were trying to get rid of around the thing

Anonymous

6/12/2025, 4:27:17 AM

No.105567141

>>105567166

>>105567092

I think I posted a good one last thread iirc. Collage nigger snubbed me (unacceptable)

Anonymous

6/12/2025, 4:27:34 AM

No.105567144

>>105567185

>>105567105

aww, don't cwy, widdle smeckles!

dere's awways next twime!

>>105567110

I'll prolly just open krita and redraw the finger...

Anonymous

6/12/2025, 4:28:17 AM

No.105567149

>>105567139

sounds like cfg way too high

Anonymous

6/12/2025, 4:30:08 AM

No.105567166

>>105567141

Which post? I'll tell you if it's good

Anonymous

6/12/2025, 4:30:46 AM

No.105567167

>>105567078

it's the same when you use inpainting model

it's trained so you prompt whatever is in the mask not the whole image

Anonymous

6/12/2025, 4:30:49 AM

No.105567169

>>105567440

>>105567078

>>105567110

Look up these nodes for inpainting: Inpaint Crop and Inpaint Stitch. They have some useful settings like a context mask -- this knob expands the context in a separate output so it blends in well with the final output. It's also very fast because it crops a box around the part you want to mask
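The crop-then-stitch idea can be sketched in plain Python on 2D lists. The helper names (`mask_bbox`, `crop`, `stitch`) and the `context` parameter are hypothetical and only illustrate the general technique; they are not the actual node implementation or its settings.

```python
def mask_bbox(mask, context=0):
    """Bounding box (top, left, bottom, right) around the nonzero mask cells,
    grown by `context` cells on each side and clamped to the image.
    bottom/right are exclusive. Returns None for an empty mask."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, v in enumerate(row) if v]
    if not rows:
        return None
    height, width = len(mask), len(mask[0])
    top = max(min(rows) - context, 0)
    left = max(min(cols) - context, 0)
    bottom = min(max(rows) + 1 + context, height)
    right = min(max(cols) + 1 + context, width)
    return top, left, bottom, right

def crop(image, box):
    """Cut the box out of the image so only that small region is processed."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]

def stitch(image, patch, mask, box):
    """Paste the processed patch back, touching only the masked cells."""
    top, left, bottom, right = box
    out = [row[:] for row in image]
    for y in range(top, bottom):
        for x in range(left, right):
            if mask[y][x]:
                out[y][x] = patch[y - top][x - left]
    return out
```

Only the cropped box goes through the model, which is where the speedup comes from; the extra context gives the model surrounding pixels to match against without those pixels being overwritten on the way back.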

Anonymous

6/12/2025, 4:32:18 AM

No.105567185

>>105567144

no today was suppose to be my day

Anonymous

6/12/2025, 4:33:33 AM

No.105567198

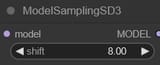

For VACE Wan, does sageattention and fp16 accumulation conflict with the 3 nodes below?

Anonymous

6/12/2025, 4:34:58 AM

No.105567209

Anonymous

6/12/2025, 4:35:57 AM

No.105567222

>>105567387

>>105567147

There are ways and means, some require more involvement than others. You could crop in gimp, paste as new image but increase its size and use inside of comfy, then once done processing, re-scale and paste back into the exact place, and finally do 2 passes to fix.

but its time and effort.

Anonymous

6/12/2025, 4:39:52 AM

No.105567253

it's far better to get the prompt and model right the first time because you're gonna wanna second pass it anyway.

Anonymous

6/12/2025, 4:47:22 AM

No.105567302

>>105567491

marble instead of stone

hidream also doesn't do this material as well.

Anonymous

6/12/2025, 4:53:18 AM

No.105567342

Anonymous

6/12/2025, 4:59:41 AM

No.105567387

>>105567440

>>105567222

This? I have that, but when I tell it make it into a finger it's either 50% noise vaguely finger shaped or alien 4d matter.

Anonymous

6/12/2025, 5:01:24 AM

No.105567399

can HunyuanVideo-Avatar be used in comfy yet?

Anonymous

6/12/2025, 5:06:08 AM

No.105567440

>>105567387

i never use that set of nodes, if you mean

>>105567169

i've tried that and i really did not like it. Actually inpaint models do better on their own using native comfy nodes but the result will look like it was implanted from another world. Different lighting, skin tone etc. So you have to do more passes and when you do you will lose overall composition.

if i really want to fix something i will import into gimp, then manually, using mostly the clone and heal tool, paint the damn thing as close as i can. then i will denoise that area, same seed maybe. A lot would actually go into fixing a finger because you really are fighting the dataset.

Anonymous

6/12/2025, 5:08:24 AM

No.105567456

don't fight a model's dataset, pick the right model, then use another model to convert.

Anonymous

6/12/2025, 5:13:17 AM

No.105567491

>>105567582

>>105567302

Wooden statue? Clay sculpture? Ice sculpture?

>>105567491

ice sculpture:

yeah, chroma is pretty awesome, hidream really struggled with diff materials (same type of prompt)

Anonymous

6/12/2025, 5:28:23 AM

No.105567596

>>105567582

prompt was: an ice sculpture of Miku Hatsune with a twintails hairstyle standing on a pedestal, in a museum in Tokyo.

seed: 1061947909606121

Anonymous

6/12/2025, 5:29:50 AM

No.105567607

>>105567620

Anonymous

6/12/2025, 5:31:10 AM

No.105567612

what's the best comfy workflow for image to image (denoise) ? with chroma

Anonymous

6/12/2025, 5:31:13 AM

No.105567613

>>105567619

Anonymous

6/12/2025, 5:31:53 AM

No.105567618

>>105570317

Anonymous

6/12/2025, 5:32:16 AM

No.105567619

>>105567613

and last, wooden sculpture:

Anonymous

6/12/2025, 5:32:22 AM

No.105567620

>>105567607

Nice work, Fran-anon~!

Anonymous

6/12/2025, 5:42:30 AM

No.105567684

>>105567653

This is just a woman, nigga

Anonymous

6/12/2025, 5:45:55 AM

No.105567709

>>105567694

looks like an old hag

Anonymous

6/12/2025, 5:49:15 AM

No.105567725

>>105567777

Anonymous

6/12/2025, 5:49:50 AM

No.105567731

>>105567750

Guys, be generous to a humble guy like me and make mini girls measuring 15-30 cm, please.

Anonymous

6/12/2025, 5:53:29 AM

No.105567750

>>105567872

Anonymous

6/12/2025, 5:58:12 AM

No.105567777

>>105567725

btw, is it just me or does wan not have a fisheye view?

Anonymous

6/12/2025, 6:14:12 AM

No.105567872

Anonymous

6/12/2025, 6:17:02 AM

No.105567896

>>105568034

>>105567880

can you do pocket pussy miku?

Anonymous

6/12/2025, 6:30:37 AM

No.105567990

>>105567880

nice refraction

Anonymous

6/12/2025, 6:30:59 AM

No.105567993

Anonymous

6/12/2025, 6:37:44 AM

No.105568034

>>105567896

I'm seconding this notion.

>>105567880

chroma is on another fucking level

and it's not even finished

Anonymous

6/12/2025, 6:59:29 AM

No.105568158

>>105568246

were there other big lewd models that used natural language captions before?

Anonymous

6/12/2025, 7:01:46 AM

No.105568173

>>105568177

>>105568149

What do you mean? It's version 34 already.

Anonymous

6/12/2025, 7:02:35 AM

No.105568177

Anonymous

6/12/2025, 7:03:19 AM

No.105568184

>>105568189

is each version an epoch? how many until it is "done"?

>>105568184

He initially said 50, but that was before Ponyfag gave him free access to the clusters he uses, so who knows when it ends

Anonymous

6/12/2025, 7:06:21 AM

No.105568201

>>105568213

>>105568189

Do you think those two furries engage in filthy sex together? I mean he has to pay somehow. Two sodomites are the only hope for community trained models. Grim.

Anonymous

6/12/2025, 7:08:45 AM

No.105568213

>>105568201

honestly furries have spurred some of the most amazing progress in ai so far

their fantasies can never be fulfilled irl so it makes some sense

Anonymous

6/12/2025, 7:09:24 AM

No.105568218

>>105568250

>>105568189

He probably gave him access because the writing is on the wall for pony v7 (it sucks ass) and he needs a better base model to train on. As soon as Chroma fully converges, Pony v8 will start training on it.

best of the last 10 threads imo

>>105511079

>>105511695

>>105512575

>>105513313

>>105514073

>>105514524

>>105514648

>>105514721

cont'd next post, they auto-detect too many links as spam now which makes this almost impossible to do

Anonymous

6/12/2025, 7:15:44 AM

No.105568246

>>105568158

Omnigen was trained on an unfiltered dataset, including danbooru.

It just never picked up steam cause it's trained using the SDXL VAE instead of the Flux one.

>>105568244

Fuck off with the spam, nobody gives a fuck what you think is 'best', retard.

Anonymous

6/12/2025, 7:16:08 AM

No.105568250

>>105570233

>>105568218

Do you think ponyfag will learn anything? His disclaimer about v7 was already alarming enough a few months ago.

Anonymous

6/12/2025, 7:17:03 AM

No.105568259

Anonymous

6/12/2025, 7:18:48 AM

No.105568267

Anonymous

6/12/2025, 7:19:23 AM

No.105568274

>>105568244

Thank you, I appreciate your efforts.

Anonymous

6/12/2025, 7:19:49 AM

No.105568279

Anonymous

6/12/2025, 7:20:20 AM

No.105568281

>>105568288

>>105568149

im actually impressed, the ice sculpture one turned out way better than hidream which cant do the materials well. it's a fun model.

Anonymous

6/12/2025, 7:21:07 AM

No.105568286

Anonymous

6/12/2025, 7:21:25 AM

No.105568288

>>105568281

Tensor based logarithms are still pretty much off.

Anonymous

6/12/2025, 7:22:41 AM

No.105568292

So is the chroma also some model on civitai that you slap into comfy or do you need an entire new set of tools?

Anonymous

6/12/2025, 7:23:02 AM

No.105568295

>>105558215

>>105564324

>>105564509

>>105565097

>>105565164

>>105565504

>>105565694

>>105565725

>>105566004 idk what you prompted but it just looks like turning the cfg up to 11

>>105566224

And I can't include two more links but the first Hatsune Miku sculpture and Debo's bloodstained driveway from this thread.

That's the end. Thank you everyone for posting gens

Anonymous

6/12/2025, 7:23:46 AM

No.105568300

>>105568305

>>105568300

i fucking love these, based hudson river anon

Anonymous

6/12/2025, 7:27:20 AM

No.105568316

>>105568332

>>105568305

haha good eye

I've been trying Pieter Claesz too (this is the same seed), he only painted still life and not landscapes but the model seems to do a clever job of transferring his dark classical style even though he never painted this kind of thing

Anonymous

6/12/2025, 7:27:50 AM

No.105568319

>>105568332

Anonymous

6/12/2025, 7:29:43 AM

No.105568327

kek

Anonymous

6/12/2025, 7:31:10 AM

No.105568332

>>105568355

>>105568316

pieter claesz is p. good

can chroma do darker stuff like beksinski? if it can i'm buying a 3090/4090 and it's not even a discussion

>>105568319

dunno

they're just comfymaxx

Anonymous

6/12/2025, 7:32:47 AM

No.105568339

>>105568394

I've hit peak, I can never improve on this, the perfect woman

Anonymous

6/12/2025, 7:35:49 AM

No.105568355

>>105568396

>>105568332

Yeah I think the first image avoids AI-isms like those fractal like repeating little leaves and such which are often so common with painterly gens. It's pretty coherent.

Second image pretty much loses that something.

Anonymous

6/12/2025, 7:42:43 AM

No.105568394

>>105571434

>>105568339

I seen dudes in prison more attractive than this

>>105568355

I think I also used a different (less detailed) noise scheduler for the one you dislike so maybe that's what you're noticing, like it's a bit muddier on the leaves because it's less 'complete'

Anonymous

6/12/2025, 7:43:50 AM

No.105568398

Anonymous

6/12/2025, 7:44:32 AM

No.105568402

>>105568462

>>105567147

Get Krita Diffusion and you never have to leave Krita

Anonymous

6/12/2025, 7:45:13 AM

No.105568407

>>105568550

How's the AI in Krita?

Anonymous

6/12/2025, 7:53:57 AM

No.105568462

>>105568493

>>105568402

I guess I have to setup something from within comfy too?

Anonymous

6/12/2025, 7:55:47 AM

No.105568474

>>105568396

second image has some strange leaves around, but man does it do a good job of translating what the artist "would've" done if he were to paint landscapes

i personally prefer russian realists (shishkin in particular which i believe you've mentioned) but this stuff is good as long as the lighting is moody (which is usually the weakest part about HRS paintings, at least in my opinion; the subjects are great)

Anonymous

6/12/2025, 7:56:19 AM

No.105568478

>>105568496

>>105568396

It's just an observation. Can't often do anything but reroll.

I did some paintings with flux (just basic flux style) then upscaling them with sdxl to generate a painterly style but it's a hit and miss.

Too much denoise and it'll fuck up the details, too little and it's not nice enough.

Anonymous

6/12/2025, 7:58:10 AM

No.105568488

adetailer can really be a piece of shit at times.

Anonymous

6/12/2025, 7:58:54 AM

No.105568493

>>105568963

>>105568462

There are custom nodes involved but it should be able to connect without them I think

https://docs.interstice.cloud/comfyui-setup/

>>105568478

try john atkinson grimshaw

dude made some insanely moody works and was obsessed with withered trees

Anonymous

6/12/2025, 8:06:53 AM

No.105568541

>>105568496

Thanks, saving the name. I'll get back to my setup in a day or two. Can't gen every day or it'll become meaningless and I'm going to burn out.

Anonymous

6/12/2025, 8:08:16 AM

No.105568550

>>105568963

Anonymous

6/12/2025, 8:10:38 AM

No.105568561

>>105568583

>>105568496

nice, I'll have to try that name later

>A dark, dramatic Frank Frazetta painting. The painting depicts a clearing in a dense, shady forest, surrounded by trees. In the clearing is a rock with a sword hilt embedded in it, the blade of the sword buried deep into the rock. A narrow blue stream trickles past the rock. A bolt of lightning is striking the top of the sword hilt.

>neg: cgi, 3d, text, muddy, messy, abstract

Anonymous

6/12/2025, 8:13:13 AM

No.105568578

>>105568586

Was glancing over Krita Diffusion docs and regarding Cumfy, does it use some predefined node setup whenever user submits something from Krita? I mean what if I want to manually optimize or change its parameters inside Comfy? I'm not going to run some geezer's redditor workflows blindly out of the box. God knows how slow they are.

Anonymous

6/12/2025, 8:14:05 AM

No.105568583

>>105568597

>>105568561

>Frank Frazetta

you have kino taste anon, let me repeat

take a favorite irl of mine, by Rafail Levitsky

fits this mood you're going for

20Loras

6/12/2025, 8:14:15 AM

No.105568584

>>105568718

First time in a long while I've had to post in here.

Anonymous

6/12/2025, 8:14:44 AM

No.105568586

>>105568631

>>105568578

it's extremely clunky because you have to use the predefined as you said but have to constantly change shit in the krita config menus which glitch out randomly

Anonymous

6/12/2025, 8:16:34 AM

No.105568597

>>105568583

yeah that looks nice, ty

Anonymous

6/12/2025, 8:18:15 AM

No.105568607

bigger size

Anonymous

6/12/2025, 8:21:02 AM

No.105568616

Anonymous

6/12/2025, 8:23:42 AM

No.105568631

>>105568586

Maybe I'll try it out myself. I'm somewhat intrigued. I found this

>https://docs.interstice.cloud/custom-graph/

So I guess it's doable.

On the other hand, I have already done scribbles and funny images, then used an img2img setup in Comfy. Saving an image from Photoshop and then doing a quick img2img or controlnet thing is pretty quick and simple because I have a bunch of my own setups in Comfy.

In this sense Krita might be overkill but let's see.

Anonymous

6/12/2025, 8:25:17 AM

No.105568638

>"back in the old days, we didn't have none of that controlnet and inpainting crap -- and still we could produce better stuff than any of you gooners ever will!"

Anonymous

6/12/2025, 8:38:07 AM

No.105568718

>>105569021

Anonymous

6/12/2025, 8:48:30 AM

No.105568762

any tutorials on how to do multi-i2v with wan vace? all ive seen is V2V, regular i2v, and regular t2v. nothing actually interesting

what cfg do you like with wan i2v bwos

Anonymous

6/12/2025, 8:56:47 AM

No.105568795

Anonymous

6/12/2025, 8:57:07 AM

No.105568797

Anonymous

6/12/2025, 8:57:38 AM

No.105568802

Anonymous

6/12/2025, 8:58:14 AM

No.105568807

Anonymous

6/12/2025, 8:58:32 AM

No.105568808

Anonymous

6/12/2025, 8:59:27 AM

No.105568814

Fuck Im addicted to genning with chroma and wan, Ill leave some gens for the night and instead of going to sleep Ill just wait for the results awake

Ive not been this addicted since the sd1.5 days

Anonymous

6/12/2025, 9:10:40 AM

No.105568876

>>105568888

Anonymous

6/12/2025, 9:11:35 AM

No.105568883

Anonymous

6/12/2025, 9:12:25 AM

No.105568888

>>105568876

to just generate a normal? why? that function is built into all the other software they use including blender

Anonymous

6/12/2025, 9:22:36 AM

No.105568949

>>105568972

>>105568760

She was stunning, jav goat

>>105568550

>>105568493

Gay shit doesn't work even though I have it installed

Anonymous

6/12/2025, 9:26:45 AM

No.105568972

>>105568995

>>105568949

Who? I just prompted for a generic "Japanese woman" with a 1980's aesthetic.

Anonymous

6/12/2025, 9:30:13 AM

No.105568995

how many clips do you need for wan lora

Anonymous

6/12/2025, 9:34:07 AM

No.105569011

>>105569001

For a person, none. You only need images, the more varied, the better. I use 100-200, but 50 or so might work. For a concept, 10-30 low res videos, 50-150 higher res images. Concepts always benefit from mixed datasets, with lower res videos and higher res images.

Anonymous

6/12/2025, 9:36:01 AM

No.105569017

is this a meme?

>For LTX-Video and English language Wan2.1 users, you need prompt extension to unlock the full model performance. Please follow the instruction of Wan2.1 and set --use_prompt_extend while running inference.

20Loras

6/12/2025, 9:37:13 AM

No.105569021

>>105569819

Anonymous

6/12/2025, 9:38:55 AM

No.105569032

Anonymous

6/12/2025, 9:39:44 AM

No.105569034

>>105569001

me? for the vid loras I've done wanx already had a basic understanding of the concept, it just needed a guiding hand to make it consistent. 15 low res vids up to 80 frames works, with some pics thrown in for detail

Anonymous

6/12/2025, 9:40:02 AM

No.105569037

>>105569159

>Learn about CausVid/Phantom for Wan

>Wow, 3 times the speed boost sounds aweso-

>cfg 1

>produces flux slop-tier skin

T-thanks

Anonymous

6/12/2025, 9:42:24 AM

No.105569048

>>105569088

this one looks good? sampler/scheduler in the filename

>>105568963

AND NOW THE KRITA LOCAL SERVER INSTALLS ALL THE PYTHON SHIT FOR ITSELF AGAIN I DONT HAVE ROOM FOR THIS SHIT IAM KMS

Anonymous

6/12/2025, 9:46:18 AM

No.105569065

>>105569114

"syndrome" in negative helps chroma produce better looking people, reduces weird/ugly face gens

I discovered that trick with sdxl base and some other base-ish models, funny to find it useful again. less filtered base datasets probably include a bunch of medical textbook imagery so it makes sense I guess

Anonymous

6/12/2025, 9:49:15 AM

No.105569088

>>105569114

>>105569048

Looks good to me. How many steps / cfg?

Anonymous

6/12/2025, 9:50:19 AM

No.105569097

>>105569116

>>105568963

>even though I have it installed

are you sure you installed all the custom nodes properly? put your reading glasses on and give the docs a nice good read

>https://docs.interstice.cloud/comfyui-setup/

>>105569052

you can use your existing comfy instance by giving it your server url. it's what i do

Anonymous

6/12/2025, 9:52:35 AM

No.105569114

>>105569065

interesting. gonna try that out. just need to find a way to suppress the huegmouf (tm)

>>105569088

3.5/25. I went down with the cfg

Anonymous

6/12/2025, 9:52:52 AM

No.105569116

>>105569240

>>105569097

It's pulling fucking identical shit that I already have and that the log says are missing .

Anonymous

6/12/2025, 9:55:22 AM

No.105569135

bottom feeding schizo has permeated the thread

Anonymous

6/12/2025, 9:57:46 AM

No.105569146

Anonymous

6/12/2025, 9:59:48 AM

No.105569159

>>105569037

May I see the comparison?

Anonymous

6/12/2025, 10:05:41 AM

No.105569200

>>105569236

>>105569200

why is it so sdxl

Anonymous

6/12/2025, 10:11:20 AM

No.105569240

>>105569267

>>105569116

did you install those nodes through the manager or manually using git pull? i get issues sometimes when manually installing nodes because of the comfy security stuff

Anonymous

6/12/2025, 10:11:57 AM

No.105569243

>>105569236

because I used sdxl

Anonymous

6/12/2025, 10:15:46 AM

No.105569267

>>105569278

Anonymous

6/12/2025, 10:16:42 AM

No.105569271

>>105569290

kek

in the first version of this still life the fat parrot was a watermelon

but then I added an art style lora that seems to have had a lot of birds in the TD images, because it always wants to turn stuff into birds

Anonymous

6/12/2025, 10:17:27 AM

No.105569278

>>105569267

i dunno then bro

Anonymous

6/12/2025, 10:18:18 AM

No.105569282

>>105569287

does chroma feature the famous buttchin by default

Anonymous

6/12/2025, 10:19:11 AM

No.105569287

>>105569282

replaced by futa cocks

Anonymous

6/12/2025, 10:19:59 AM

No.105569290

>>105569313

>>105569271

what lora? i like birds

Anonymous

6/12/2025, 10:22:48 AM

No.105569313

Anonymous

6/12/2025, 10:26:23 AM

No.105569347

>>105569354

what happened to chroma

why is it bad now

Anonymous

6/12/2025, 10:27:03 AM

No.105569353

>>105569595

>>105569236

Because sdxl gets the job done and has been perfected enough. Gone through multiple mixes over the course of many months.

Anonymous

6/12/2025, 10:27:08 AM

No.105569354

>>105569347

it wasn't trained by the chinese

Anonymous

6/12/2025, 10:31:38 AM

No.105569386

>>105569052

It's a pain to install first time but totally worth the effort. I haven't touched spaghetti in months.

Anonymous

6/12/2025, 10:32:09 AM

No.105569392

>>105569236

all you have to do is look at the background to see the difference.

sdxl produces such nonsense backgrounds

>Amateur point of view photo

>ask for cosplay of anime character

>get the most SD 1.5 anime slopped image ever

lodestone is failbaking.

Anonymous

6/12/2025, 10:41:25 AM

No.105569485

>>105569494

>>105569444

It's going to suck ass at anime until it's done training and someone finetunes it the way Illustrious did for SDXL. A 5M mixed dataset will never beat a 13M+ pure anime dataset that contains the entire contents of multiple boorus.

Anonymous

6/12/2025, 10:42:19 AM

No.105569494

>>105569962

>>105569485

>It's going to suck ass at anime

that's the thing, i'm telling it to gen realistically, but prompting for an anime char realistically instead churns out an anime style, too

Anonymous

6/12/2025, 10:48:24 AM

No.105569543

>>105569971

Anonymous

6/12/2025, 10:53:48 AM

No.105569595

>>105569779

>>105569353

can you share the workflow of that one anon

Anonymous

6/12/2025, 10:54:52 AM

No.105569609

>>105569700

>>105569444

it's not there yet. too much body horror, too many outtakes. I mean, "girl sitting on a couch", how hard can it be.

Dang this is way better to use than trying to do anything with images post-gen in comfy.

Anonymous

6/12/2025, 11:01:08 AM

No.105569658

>>105569709

>>105569636

you can't post that and not catbox the image anon

Anonymous

6/12/2025, 11:02:02 AM

No.105569665

>>105569636

IT REALLY WHIPS THE LLAMAS ASS

Anonymous

6/12/2025, 11:04:55 AM

No.105569681

Anonymous

6/12/2025, 11:08:06 AM

No.105569700

>>105569826

>>105569609

>it's not there yet

>14 epochs left

surely everything is fixed...

Anonymous

6/12/2025, 11:10:10 AM

No.105569709

>>105569724

>>105569658

There's still that inpaint discoloration visible tho eh. Can't post whole since it's /aco/ /d/ stuff.

Anonymous

6/12/2025, 11:11:39 AM

No.105569724

Anonymous

6/12/2025, 11:15:31 AM

No.105569758

Anonymous

6/12/2025, 11:17:37 AM

No.105569779

>>105569976

Anonymous

6/12/2025, 11:23:57 AM

No.105569819

>>105569839

>>105569021

>(mossacannibalis:0)

> 0

the fuck are you doing

Anonymous

6/12/2025, 11:25:29 AM

No.105569826

>>105569992

20Loras

6/12/2025, 11:28:40 AM

No.105569839

>>105569854

>>105569819

I have a preset, default prompts, that I build upon for each new subject. Rather than delete the prompt, I just shut it off.

Anonymous

6/12/2025, 11:30:42 AM

No.105569854

[Report]

>>105569978

>>105569839

from my testing, (something:0) still influences the prompt. rabbit hole go
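If a zero-weight term really does still leak into the conditioning, one workaround is to strip those terms from the prompt text before it reaches the encoder. A minimal sketch, assuming the usual `(text:weight)` emphasis syntax; `drop_zero_weight` is a made-up helper name, not part of any UI:

```python
import re

# Matches "(text:weight)" groups such as "(mossacannibalis:0)".
WEIGHTED = re.compile(r"\(([^():]+):\s*([0-9]*\.?[0-9]+)\)")


def drop_zero_weight(prompt: str) -> str:
    """Remove (text:0) groups, then tidy up the leftover commas."""

    def keep_or_drop(match: re.Match) -> str:
        weight = float(match.group(2))
        # A zero weight means "switched off", so drop the whole group.
        return "" if weight == 0 else match.group(0)

    stripped = WEIGHTED.sub(keep_or_drop, prompt)
    parts = [part.strip() for part in stripped.split(",")]
    return ", ".join(part for part in parts if part)


if __name__ == "__main__":
    print(drop_zero_weight("1girl, (mossacannibalis:0), (smile:1.2), forest"))
```

This keeps the preset intact on disk and only filters what gets sent, so the "shut it off instead of deleting it" habit still works.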

Anonymous

6/12/2025, 11:43:55 AM

No.105569946

[Report]

Anonymous

6/12/2025, 11:46:23 AM

No.105569962

[Report]

>>105569968

>>105569494

That should be an issue with Flux, not with Chroma. Try the prompt on regular V36 version, I suspect V36 detailed is more slopped.

Anonymous

6/12/2025, 11:47:50 AM

No.105569968

[Report]

>>105569962

Also helps to add anime etc... into neg. Never had issue genning photoreal mikus etc..

Anonymous

6/12/2025, 11:48:15 AM

No.105569971

[Report]

>>105570057

>>105569543

nice. prompts/Loras for this?

Anonymous

6/12/2025, 11:49:29 AM

No.105569976

[Report]

20Loras

6/12/2025, 11:50:05 AM

No.105569978

[Report]

>>105569854

Gooners in the shell.

Anonymous

6/12/2025, 11:52:15 AM

No.105569992

[Report]

>>105570057

>>105569826

How many steps is that? It's likely a step count issue, or maybe the prompt itself. Never had such issues.

Anonymous

6/12/2025, 11:56:30 AM

No.105570018

[Report]

Anonymous

6/12/2025, 11:57:09 AM

No.105570021

[Report]

>>105567147

>krita

It's that anon again, i was a bit high and drunk last night and did not see that. it looks interesting, i think i will have to check that out.

>>105569636

it looks like something we all need.

Anonymous

6/12/2025, 12:02:45 PM

No.105570057

[Report]

>>105570089

uncanny valley mergedtodeath sameface fake smile plastic fuckdoll, take 1065

>>105569971

no lora, just the base model.

"an organic portal to the dark world, surrealism, by Zdzislaw Beksinski"

neg "cgi, 3d, text, sepia, fire"

I posted a screencap of the upscaling workflow a while ago.

>>105569992

25, it may also be resolution related? I mean I got a few serviceable ones. the prompt was really basic but with a photographer wildcard just to see which ones work, might interfere as well.

Anonymous

6/12/2025, 12:09:59 PM

No.105570089

[Report]

Anonymous

6/12/2025, 12:30:26 PM

No.105570220

[Report]

Anonymous

6/12/2025, 12:32:31 PM

No.105570233

[Report]

>>105568250

>His disclaimer about v7 was already alarming enough few months ago

what disclaimer?

Anonymous

6/12/2025, 12:37:34 PM

No.105570265

[Report]

>>105570146

that's just his day job. once he clocks out, it's sex, bugs, and rock & roll.

Anonymous

6/12/2025, 12:38:08 PM

No.105570269

[Report]

>>105570286

>>105570146

Still prefer him over a jeet

Anonymous

6/12/2025, 12:40:40 PM

No.105570286

[Report]

>>105570269

That's probably a good call.

Anonymous

6/12/2025, 12:44:37 PM

No.105570317

[Report]

>>105567618

Marina Abramovic as a kid

>>105566530 (OP)

Real talk : Does RTX 4060 or 5060 16GB (NOT 8GB) is enough for Video Generation ?

Anonymous

6/12/2025, 1:12:50 PM

No.105570467

[Report]

>>105570471

Anonymous

6/12/2025, 1:13:39 PM

No.105570471

[Report]

>>105570484

>>105570467

Its night, sirs

Anyway, is it enough ?

Anonymous

6/12/2025, 1:14:56 PM

No.105570484

[Report]

>>105570491

>>105570471

Please do the needful, Thank You

Anonymous

6/12/2025, 1:16:20 PM

No.105570491

[Report]

Anonymous

6/12/2025, 1:16:50 PM

No.105570497

[Report]

>>105570461

5060 16gb is much better because of memory bandwidth. 16 is ok for video but it's not great, especially long term

Anonymous

6/12/2025, 1:17:30 PM

No.105570498

[Report]

>>105570629

>>105570461

Yes.

Wan Q8 with a bit of offloading.

Wan Q6 with no offloading, I think. Possibly 1-2 for i2v.

>https://huggingface.co/city96/Wan2.1-I2V-14B-480P-gguf/tree/main

Posted this before, but general rule of thumb for loading any AI model is that the filesize is how much VRAM is required to just load the model, though you can offset it a little with virtual VRAM/offloading to RAM/CPU.

That doesn't count loading things like the text encoder or whatever else a model requires on top of that, or inference/generating. The more frames and higher the res, the more VRAM it needs too.
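The rule of thumb above can be sketched as a quick check. Everything here is an assumption for illustration: the function names are made up, the GB figures in the example are placeholders rather than real Wan file sizes, and inference overhead (frames, resolution) is not modeled at all.

```python
import os


def file_size_gb(path: str) -> float:
    """Size of a model file on disk in GiB."""
    return os.path.getsize(path) / (1024 ** 3)


def fits_in_vram(model_file_gb: float, vram_gb: float,
                 extras_gb: float = 0.0, offload_gb: float = 0.0):
    """Rule-of-thumb check: weights need roughly their file size in VRAM.

    extras_gb  : text encoder, VAE and anything else loaded alongside
    offload_gb : how much you push out to system RAM / CPU
    Returns (fits, estimated_gb_needed).
    """
    needed = model_file_gb + extras_gb - offload_gb
    return needed <= vram_gb, needed


if __name__ == "__main__":
    # Placeholder numbers only, not measured values.
    print(fits_in_vram(model_file_gb=18.0, vram_gb=16.0, offload_gb=4.0))
```

Treat a "fits" result as the bare minimum for loading; actual generation needs headroom on top of it.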

Anonymous

6/12/2025, 1:19:30 PM

No.105570508

[Report]

>>105570461

> does is

> plenk

hello sar

Anonymous

6/12/2025, 1:39:31 PM

No.105570601

[Report]

>>105570657

>>105567694

yeah? did juwanna mann?

Anonymous

6/12/2025, 1:43:56 PM

No.105570629

[Report]

>>105570498

>Wan Q6 with no offloading, I think

With a 16GB 5070ti and 32gb of RAM and using the fp16 t5xxl I still have to offload a little at Q6 (I get AllocationOnDevice crashes after every 4 videos)

You really need 64gb of ram nowadays to have fun with your computer

>reforger

>want to learn comfy instead

>look up tutorials on youtube

>"hey guys, faggotface mcshill here, here's a simplified piece of shit guide that only serves to shill my fucking patreon, subscribe to my patreon for exclusive access to the REAL tutorial

>close video, click another

>"sup guys, amerilard mcskidmark here, here's my poorly explained and meandering six hour long explanation on how to add a single node to your workflow, so you're gonna wanna go ahead and-"

>click another video

>"hello sar, poojat mcstreetshitter here-"

Anonymous

6/12/2025, 1:49:15 PM

No.105570657

[Report]

>>105570601

>>105567653

I need to buy new GPU.

I'm sick of being cucked with Online Video generator

Anonymous

6/12/2025, 1:50:47 PM

No.105570670

[Report]

>>105570709

>>105570645

> meandering six hour long explanation

I hate video tutorials for exactly that reason. I wish guides and tutorials were always in writing. Even when a video helps illustrate something, I would have the videos be short, silent, display only and the actual knowledge imparted through words. I can read way faster than it takes to watch a video.

Anonymous

6/12/2025, 1:54:16 PM

No.105570698

[Report]

>>105570645

>https://rentry.org/localmodelsmeta#comfyui

There's a good tutorial linked in there with no patreon bullshittery.

Anonymous

6/12/2025, 1:55:32 PM

No.105570709

[Report]

>>105570645

>>105570670

Just use an AI YouTube summarizer

Anonymous

6/12/2025, 1:56:31 PM

No.105570720

[Report]

>>105570696

Training data for that prompt would be cooking tutorials/shows, stuff like that. Same deal with how it loves to make anime girls talk, because anime girls rarely stand around doing nothing in anime. They're either talking or fighting

Anonymous

6/12/2025, 1:56:57 PM

No.105570725

[Report]

>>105570696

>why do they always talk

What's the prompt? I always have my 1girls just standing there and smiling unless I prompt for "talking to the camera"

Anonymous

6/12/2025, 1:57:16 PM

No.105570729

[Report]

>>105570696

because you didn't give them something else to do with their mouth

Anonymous

6/12/2025, 1:59:28 PM

No.105570746

[Report]

Don't shill today Julien

Anonymous

6/12/2025, 2:03:27 PM

No.105570780

[Report]

>>105570696

women tend to do that

Anonymous

6/12/2025, 2:04:46 PM

No.105570784

[Report]

>>105570778

>adds api node

>surprised pikachu face

>it costs money?!

Anonymous

6/12/2025, 2:05:11 PM

No.105570787

[Report]

>>105570794

>>105570778

Comfy must have decided he was through giving free labor to a pack of ingrates

Anonymous

6/12/2025, 2:05:58 PM

No.105570794

[Report]

>>105570787

now if kijai could only follow suit

Anonymous

6/12/2025, 2:09:29 PM

No.105570821

[Report]

>>105570836

>>105570645

yeah I wouldn't want to learn how to use comfy now. it's got a steep learning curve and that shitty yt algorithm doesn't help.

check out:

https://www.youtube.com/@latentvision/videos (ComfyUI: Advanced Understanding) (this guy is behind ipadapter for comfyui)

https://www.youtube.com/@drltdata/videos (maker of the impact pack, lots of shit here, he doesn't talk tho. but good place to learn about how to set up facedetailer)

>>105570778

ahahahahaha

Anonymous

6/12/2025, 2:10:19 PM

No.105570827

[Report]

>daily Julien seethe sesh

Just stop schizo

Anonymous

6/12/2025, 2:11:27 PM

No.105570834

[Report]

>>105571764

https://huggingface.co/gdhe17/Self-Forcing#training

What's stopping someone from renting a cluster of GPUs and training self forcing for the 14B model? Other than waiting two more weeks for someone else to do it and saving your money

Anonymous

6/12/2025, 2:11:45 PM

No.105570836

[Report]

>>105570930

>>105570645

>>105570821

I might make a rentry

For FaceDetailer in Comfy, is there a way to set it to do both face and hand? bbox_detector only accepts one input. Do I really have to have the output image flow into another facedetailer with the hand model instead?

Anonymous

6/12/2025, 2:27:36 PM

No.105570930

[Report]

>>105570963

>>105570838

afraid so.

>>105570836

good idea. I was gonna post more links but seem to draw a blank. control alt ai did a few very detailed in-depth videos for comfyui but they just stopped making content. who else is there anyways (who isn't just about hype) ? sarikas, kamph? lol

Anonymous

6/12/2025, 2:29:09 PM

No.105570943

[Report]

>>105570963

>>105570838

There's probably custom extensions you could combine to do it but why bother. You want them separate anyway so you can control the denoise individually.

Anonymous

6/12/2025, 2:32:24 PM

No.105570962

[Report]

anyone have experience making a chroma lora? how long on average would you say does it take to train?

Anonymous

6/12/2025, 2:32:38 PM

No.105570963

[Report]

Anonymous

6/12/2025, 2:43:29 PM

No.105571011

[Report]

Anonymous

6/12/2025, 2:44:23 PM

No.105571015

[Report]

>>105571691

AI must love knees

Anonymous

6/12/2025, 2:54:57 PM

No.105571081

[Report]

>>105571149

Guns fucking suck without loras.

Anonymous

6/12/2025, 2:56:41 PM

No.105571092

[Report]

Anonymous

6/12/2025, 3:05:23 PM

No.105571149

[Report]

>>105571171

>>105571081

cool gen lol. is this lora-less? I mean it's a rifle.

Anonymous

6/12/2025, 3:09:07 PM

No.105571171

[Report]

>>105571234

>>105571149

Yeah but guns are fucking basic shapes slapped together. Even though you often get passable stuff, you also often get a SPAS warped with a Remington, double mag ARs and bendy AKs. And loras exist only for the most known or meme guns, so there's no comprehensive library.

Anonymous

6/12/2025, 3:12:14 PM

No.105571196

[Report]

>>105570838

You can concatenate SEGS, then connect both to a "Detailer (SEGS)".

https://pastebin.com/raw/te36DLSR

Anonymous

6/12/2025, 3:16:17 PM

No.105571223

[Report]

>>105571209

Based, thanks for sharing. I haven't been using Comfy for too long, I didn't know you could do this

Anonymous

6/12/2025, 3:17:41 PM

No.105571232

[Report]

>>105570838

The easiest way would be to use swarmui

Anonymous

6/12/2025, 3:17:57 PM

No.105571234

[Report]

>>105572693

>>105571171

I understand. I did some testing with flux last year and it knows some guns, certainly, but that won't help you here. what is this, illustrious, noob?

>>105571209

damn that is.. smart. but what about the prompt? just promptless or dual use

Anonymous

6/12/2025, 3:21:28 PM

No.105571250

[Report]

I want some carrot cake god damnit

Anonymous

6/12/2025, 3:22:51 PM

No.105571258

[Report]

>>105571209

this is the reason I browse these threads. you are the soul of /ldg/

Anonymous

6/12/2025, 3:23:59 PM

No.105571266

[Report]

>>105571322

>>105570645

yes, ai is grifter paradise, and comfy is the most widely used ui.

What's a good number of steps for near perfect quality with Wan 14B? I do not care about processing time. I want the highest quality I can get before hitting diminishing returns

Anonymous

6/12/2025, 3:25:48 PM

No.105571276

[Report]

>>105571288

Anonymous

6/12/2025, 3:27:27 PM

No.105571288

[Report]

>>105571276

would higher steps start introducing artifacts like multiple limbs, etc.? I've had good results with 65

>>105571266

>wants to learn about a piece of software

>immediately seeks out "tech" influencers on youtube instead of reading the (free) manual

I'll never understand this. do zoomers just not know how to parse information larger than a tweet?

Anonymous

6/12/2025, 3:33:23 PM

No.105571336

[Report]

>>105571723

>>105571275

it's not just about the steps. go through all the nodes in ldg's 720p wan workflow and see what can be improved: fp16 weights for everything, 0.13 teacache, output at crf 0, then reencode it with ffmpeg to whatever you need later
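The "save at crf 0, reencode later" step could look roughly like this. A sketch, assuming ffmpeg is on your PATH and you want H.264 out; the file names and the crf 18 / slow preset defaults are placeholders, not recommendations from the workflow.

```python
import subprocess


def reencode_cmd(src: str, dst: str, crf: int = 18, preset: str = "slow"):
    """Build an ffmpeg command that shrinks a crf 0 master to a shareable file."""
    return [
        "ffmpeg", "-y",          # overwrite dst if it already exists
        "-i", src,               # the lossless (crf 0) output from the workflow
        "-c:v", "libx264",       # H.264 encoder
        "-crf", str(crf),        # higher value = smaller file, lower quality
        "-preset", preset,       # slower preset = better compression
        "-pix_fmt", "yuv420p",   # widest player compatibility
        dst,
    ]


def reencode(src: str, dst: str, **kwargs) -> None:
    """Run the re-encode; raises CalledProcessError if ffmpeg fails."""
    subprocess.run(reencode_cmd(src, dst, **kwargs), check=True)


if __name__ == "__main__":
    # Hypothetical file names.
    print(" ".join(reencode_cmd("wan_master.mp4", "wan_share.mp4")))
```

Keeping the crf 0 master around means you can re-encode as many times as you like without stacking compression losses.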

Anonymous

6/12/2025, 3:33:41 PM

No.105571339

[Report]

>>105571377

>>105571322

your boomer is showing. this is how people get their info now. short form click bait videos

Anonymous

6/12/2025, 3:38:45 PM

No.105571377

[Report]

>>105571322

>>105571339

it's how zoomshits get info, it's not how normal smart people get info

>c-chat are we c-cooked?

yes, yes you are :)

Anonymous

6/12/2025, 3:46:19 PM

No.105571434

[Report]

>>105568394

Yeah, but you're into dudes, so that doesn't really count

Anonymous

6/12/2025, 3:47:04 PM

No.105571440

[Report]

Shit's fucking called SEGS lmao

Anonymous

6/12/2025, 3:48:53 PM

No.105571452

[Report]

Someone cook before miguman does it pls.

Anonymous

6/12/2025, 3:52:19 PM

No.105571480

[Report]

>>105571322

Reading a manual is like reading a wiki page on something.

Extremely non-intuitive.

Anonymous

6/12/2025, 4:08:11 PM

No.105571625

[Report]

>>105571634

https://files.catbox.moe/juk6sj.json

My modified version of the video-to-looped-video ComfyUI workflow "Loop Anything with Wan2.1 VACE" (https://openart.ai/workflows/nomadoor/loop-anything-with-wan21-vace/qz02Zb3yrF11GKYi6vdu), with Skip Layer Guidance and set up for the maximum output quality I could get.

160s/it on a 3090

You can easily speed it up by around 35% with higher TeaCache. In my experience that changes the colors/quality noticeably, but it might be fine for your use case.
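"Around 35% faster" can be read two ways, so here is the arithmetic for both against the 160 s/it figure. A quick sketch; the function names are made up and neither reading is confirmed by the post.

```python
def after_time_cut(seconds_per_it: float, fraction: float) -> float:
    """Reading 1: each iteration takes `fraction` less time."""
    return seconds_per_it * (1.0 - fraction)


def after_throughput_gain(seconds_per_it: float, fraction: float) -> float:
    """Reading 2: you get `fraction` more iterations per unit of time."""
    return seconds_per_it / (1.0 + fraction)


if __name__ == "__main__":
    base = 160.0  # s/it on a 3090, from the post
    print(f"time cut by 35%:   {after_time_cut(base, 0.35):.1f} s/it")
    print(f"throughput up 35%: {after_throughput_gain(base, 0.35):.1f} s/it")
```

So expect somewhere between roughly 104 and 118.5 s/it, depending on which reading was meant.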

Anonymous

6/12/2025, 4:09:11 PM

No.105571634

[Report]

>>105571625

Middle frames of

>>105566812

were generated by this workflow along with the same prompt used to create the original input video with /ldg/'s i2v Wan workflow.

Anonymous

6/12/2025, 4:10:33 PM

No.105571644

[Report]

>>105571652

>>105566812

>>105566807

Please for the love of god post the workflow or catbox the video for us.

Anonymous

6/12/2025, 4:11:21 PM

No.105571652

[Report]

>>105571680

>>105571644

I just did right above you

Anonymous

6/12/2025, 4:15:30 PM

No.105571680

[Report]

>>105571652

Bless you, sorry, i was catching up and getting ready to go out, bless you again Anon!

Anonymous

6/12/2025, 4:17:10 PM

No.105571691

[Report]

>>105572022

>>105571015

What are you using? Maybe you need to swap for a better model.

>>105571336

>0.13 teacache

is this a typo or secret knowledge? the text in the workflow says that 0.14 is the correct option you should go with for highest quality/slowest

Anonymous

6/12/2025, 4:23:16 PM

No.105571733

[Report]

>>105572255

>>105571322

Manuals are sequential. You generally want to hop from one thing to another out of a long list of possibilities, i.e. you want a walkthrough. Back when it was possible to search for things on the internet you would find a blog breaking down the steps. Now all you can do is search a video title and hope it's not a TTS slideshow or the most Indian man you've ever heard.

Anonymous

6/12/2025, 4:24:11 PM

No.105571739

[Report]

>>105571878

Anonymous

6/12/2025, 4:27:22 PM

No.105571764

[Report]

>>105570834

a few thousand dollars

Anonymous

6/12/2025, 4:38:47 PM

No.105571870

[Report]

what the fuck is this?

https://civitai.com/models/1032887/acceleraterillustriouspaseer?modelVersionId=1895163

it claims to just increase speed by 50% with no explanation or anything?

Anonymous

6/12/2025, 4:39:57 PM

No.105571878

[Report]

>>105571723

>>105571739

nvm i saw the screenshot. for i2v the "highest" setting is 0.13, while for t2v it's 0.14

Anonymous

6/12/2025, 4:42:20 PM

No.105571893

[Report]

otaru sama please choose me.

Anonymous

6/12/2025, 4:47:11 PM

No.105571924

[Report]

>>105572208

>there is no XL character lora for Filia from Skullgirls

how in the fuck

Anonymous

6/12/2025, 4:48:46 PM

No.105571939

[Report]

>>105572255

How do you get default "masterpiece, etc" shit out of the way on ComfyUI?

On forge I used styles for this.

Anonymous

6/12/2025, 4:54:27 PM

No.105571976

[Report]

how do i get the fisheye view in wan? i tried every trigger i knew. it was supposed to be easy

Anonymous

6/12/2025, 4:58:39 PM

No.105572015

[Report]

Anonymous

6/12/2025, 4:59:22 PM

No.105572022

[Report]

>>105572234

>>105571691

Still SDXL since most of the coughdiapercough loras are for it. I only make nongoon gens on the side.

Anonymous

6/12/2025, 5:08:17 PM

No.105572118

[Report]

otaru when are you going to play with me?

Anonymous

6/12/2025, 5:08:43 PM

No.105572120

[Report]

>>105572426

Anonymous

6/12/2025, 5:08:48 PM

No.105572121

[Report]

>>105571322

To be fair, the documentation on some of these repos can be atrocious.

Having a visual aid when devs assume you can read their mind can be useful when they haven't bothered to update the manual.

Anonymous

6/12/2025, 5:20:36 PM

No.105572208

[Report]

>>105571924

skullgirls is fairly popular and has a decent amount of lora support. check civitai, most of them are trained from pony but seem to work fine for me. Imo it's an abomination that there's no proper pine (bombergirl) illustrious lora yet.

https://civitai.com/collections/10048518

Anonymous

6/12/2025, 5:22:49 PM

No.105572219

[Report]

Anonymous

6/12/2025, 5:25:04 PM

No.105572234

[Report]

>>105572246

>>105572022

You could try illustrious, noobai or animagine. They're sdxl-adjacent and illustrious has probably caught up on loras the most out of those. Also you might want to mess around with your inference settings. Looks like your cfg is too high, or the loras you use need lower weights to stop deepfrying the images.

Anonymous

6/12/2025, 5:26:37 PM

No.105572246

[Report]

>>105572261

>>105572234

I mean I have Illustrious, which is SDXL.

Anonymous

6/12/2025, 5:27:33 PM

No.105572255

[Report]

>>105572334

>>105571733

sure, tutorials are great. comfy just felt self-explanatory because of how easy it is to share workflows, add and manage nodes and models, etc compared to some of the other node-based suites. plus there's a ton of info in discussion and issues on github.

it just seems like people might be unnecessarily frustrating themselves in tar pits like youtube to avoid just following the directions, leaving them forever stuck on "how do I get started".

with that in mind, I guess it'd be helpful to explain that part of the point of highly flexible frameworks is to not get in the way of high-functioning users, and that dumbing it down any more would damage its utility. which is ironic, since one of comfy's problems is that it's too basic.

>>105571939

good question. try a prompt styler node. some include presets (in json), others are just CSV loaders.

I personally just create a notes node that I keep a few examples in, but you can also just create a fleet of prompt nodes.
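For anyone wondering what a CSV-based styler does under the hood, here is a rough sketch. The column names (`name`, `prompt`, `negative_prompt`) and the `{prompt}` placeholder are assumptions modeled on Forge-style style files, and `load_styles` / `apply_style` are made-up helpers, not the API of any actual node.

```python
import csv
import io


def load_styles(csv_text: str) -> dict:
    """Parse style rows keyed by name from CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["name"]: row for row in reader}


def _merge(template: str, text: str) -> str:
    """Insert text at {prompt} if present, otherwise append the template."""
    if "{prompt}" in template:
        return template.replace("{prompt}", text)
    parts = [p for p in (text.strip(), template.strip()) if p]
    return ", ".join(parts)


def apply_style(style: dict, prompt: str, negative: str = ""):
    """Return (positive, negative) with the style's boilerplate merged in."""
    positive = _merge(style.get("prompt") or "", prompt)
    neg = _merge(style.get("negative_prompt") or "", negative)
    return positive, neg


if __name__ == "__main__":
    # Example style file contents; the tags are just an illustration.
    STYLES_CSV = (
        "name,prompt,negative_prompt\n"
        'quality,"masterpiece, best quality, {prompt}","lowres, blurry"\n'
    )
    styles = load_styles(STYLES_CSV)
    print(apply_style(styles["quality"], "1girl, forest", "text"))
```

The point is that the boilerplate lives in one file and your actual prompt box only holds the subject.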

Anonymous

6/12/2025, 5:28:19 PM

No.105572261

[Report]

>>105572246

Sorry for the misunderstanding. Well, I hope the cfg or lora weight tips help out a bit.

Anonymous

6/12/2025, 5:30:50 PM

No.105572287

[Report]

>>105572255

I imagine workflows deprecate quickly so nobody can really debug them properly. the comfy environment has been too volatile since the org began, which is why there have been more doomers. even ani gave up on comfy's future. it's nothing more than a plugin to more useful apps, but even then the experience using comfy in blender and krita is just jank and shitty

Anonymous

6/12/2025, 5:43:14 PM

No.105572386

[Report]

>>105572334

I do hope ani provides a good solution to this but it looks like a ridiculously hard task especially alone. he got this far already so he really is our only bet on better software in the future

Anonymous

6/12/2025, 5:45:47 PM

No.105572398

[Report]

for the vramlets lurking: I'm going to host a self forcing gradio so (You) can have fun with it and share the link in the next thread. just finishing up some things

Anonymous

6/12/2025, 5:46:24 PM

No.105572404

[Report]

>julien shills his shitty wrapper again in hopes of free devs

Anonymous

6/12/2025, 5:47:42 PM

No.105572415

[Report]

>wahhhhh shill comfy more!!!!! don't try to replace it!!!!

Anonymous

6/12/2025, 5:48:54 PM

No.105572426

[Report]

>>105572120

>shitty closed source model

>it's not even as good as Veo 3

At this point I do hope Google destroys the competition if they decide to be closed source.

Anonymous

6/12/2025, 5:50:32 PM

No.105572436

[Report]

>>105572334

>even ani gave up on comfy's future.

Pathetic kek

Anonymous

6/12/2025, 5:50:33 PM

No.105572437

[Report]

Anonymous

6/12/2025, 5:50:33 PM

No.105572438

[Report]

>>105567110

>real inpaint models require 1.00 denoise strength to actually work

Not true.

Anonymous

6/12/2025, 5:52:30 PM

No.105572449

[Report]

>>105567653

Me on the right.

Anonymous

6/12/2025, 5:56:04 PM

No.105572470

[Report]

what are some good checkpoints or loras that can create indoor/outdoor scenes?

I tried SD/Pony/IL but it cannot make a regular room without many artifacts.

I also tried img2img which for some reason just sucks in comfyui. And I also tried controlnet with canny/depth and some sketches i made but again, it failed.

The models on civitai barely look passable...

Any ideas?

Anonymous

6/12/2025, 5:57:40 PM

No.105572482

[Report]

What's the trick to get hunyuan i2v to take the image as a first frame reference instead of just doing a sort of img2img and generating something different that just resembles the image?

Anonymous

6/12/2025, 6:23:22 PM

No.105572693

[Report]

>>105571234

>damn that is.. smart. but what about the prompt? just promptless or dual use

Well...I guess promptless could work.

I don't usually use detailer for hands, so I don't know...just came up with this solution since the anon asked for it.