/lmg/ - Local Models General

Anonymous

7/4/2025, 7:05:29 PM

No.105800519

►Recent Highlights from the Previous Thread:

>>105789622

--Gemini 2.5 Pro shows promise but struggles with long Japanese novel summarization at scale:

>105798737 >105798806 >105798816 >105798821 >105798822 >105798847 >105798855 >105798876 >105798895 >105799027 >105799065 >105799222

--Testing LLMs on hybrid script generation: English with Chinese logographs and Latin script:

>105793669 >105793682 >105793799 >105793806 >105793922 >105794373 >105794629 >105794744 >105794968 >105795182 >105795257 >105795358 >105795581 >105795732 >105795468 >105795571 >105798778 >105796043 >105793753

--Optimizing long-context Japanese-to-English translation with Qwen3-14B using chunking and parallel processing:

>105791522 >105791561 >105791592 >105791615 >105791757 >105791869 >105791926 >105793677 >105793705

--DeepSeek-R1-0528 IQ3 quantization issue causing unexpected output and memory allocation anomalies:

>105795478 >105795500 >105795508 >105795510 >105796967 >105798018

--Gemma 3E4B shows strong performance for its size despite context handling limitations:

>105789963 >105789969 >105790777 >105790890 >105790898 >105790944

--Frontend tools for AI-assisted branching story creation and worldbuilding:

>105795426 >105795537 >105795536

--Oobabooga usability issues: timeouts and image upload errors despite vision models:

>105790945 >105790995 >105791017 >105791140 >105793736 >105795504 >105797021

--Meta's commitment to open weights models questioned after major closed-model hiring push:

>105790057 >105790079 >105790105

--Accusations Huawei's Pangu Pro MoE 72B is recycled Qwen-2.5 14B model:

>105790381

--Kyutai Unmute TTS release criticized for lack of voice cloning support:

>105790629

--Miku (free space):

>105790911 >105791178 >105791258 >105796673 >105796677 >105796686

►Recent Highlight Posts from the Previous Thread:

>>105789629

Why?: 9 reply limit

>>102478518

Fix:

https://rentry.org/lmg-recap-script

good 7-9B roleplay model?

Anonymous

7/4/2025, 7:16:02 PM

No.105800603

>>105800984

youre giving me

too many things

lately

youre all i need

ohoh...

Anonymous

7/4/2025, 7:16:03 PM

No.105800604

Anonymous

7/4/2025, 7:19:52 PM

No.105800634

How come there doesn't seem to be any LLMs between 32b and 70b? I find that 70b models are just a little too slow for my liking with my setup, but I have way more VRAM than a 32b model needs.

Anonymous

7/4/2025, 7:20:25 PM

No.105800644

Anonymous

7/4/2025, 7:23:02 PM

No.105800664

>>105800673

Anonymous

7/4/2025, 7:23:07 PM

No.105800665

>>105800641

There are a couple of MoE if we are talking total parameters rather than activated

But yeah, it's weird that there isn't a whole ass spectrum of companies/labs experimenting with different ranges.

There's probably some hardware related reason.

Anonymous

7/4/2025, 7:24:08 PM

No.105800673

>>105800664

Oh yeah, the nemotron models.

Those are sliced up llama 70B models right?

Anonymous

7/4/2025, 7:25:05 PM

No.105800684

>>105800641

there was some paper explaining that some capabilities start emerging around that 70b range (i might be misremembering)

i'm guessing that 45b wouldn't be that much smarter than 32b

Anonymous

7/4/2025, 7:25:47 PM

No.105800690

>>105800705

>>105800641

Probably same reason 70B models are going extinct, it's a size that's appealing to hobbyist 3090 collectors but it's hard to find any business use for

Anonymous

7/4/2025, 7:28:16 PM

No.105800705

>>105800690

It's probably more safety bullshit. 70B are just smart enough to not be useless and just small enough for people to affordably run them. So that had to be stopped.

Anonymous

7/4/2025, 7:45:57 PM

No.105800865

>>105800905

What's the current most advanced model I can run on 16gb vram? I know about image models, but not text ones.

I tried messing with kobold cpp and some small models, but they were shitty. I used tabby to run Ollama and Qwen2-1.5B-Instruct. They are smarter, but also heavily censored.

Sam will release his model today, for sure.

Anonymous

7/4/2025, 7:49:23 PM

No.105800894

>>105801396

WHERE IS IT

Anonymous

7/4/2025, 7:50:06 PM

No.105800905

>>105800953

>>105800865

>1.5B

For general use, Qwen 3 30B MoE.

For coom, some mistral model. Quanted 20something B or Nemo (Rocinante fine tune).

Anonymous

7/4/2025, 7:52:51 PM

No.105800933

>>105800975

>>105800868

It won't know what a cock is.

Anonymous

7/4/2025, 7:54:45 PM

No.105800953

Anonymous

7/4/2025, 7:55:56 PM

No.105800975

Anonymous

7/4/2025, 8:00:05 PM

No.105801011

>>105800984

It's already the 5th in Mikuland

>>105799972

can I run r1 with 128gb ram and only 16gb vram? it isn't enough for kobold but anons are saying ik_llamacpp fork works because of some patch or other. I've had the first unsloth r1 quant for a long time
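
A rough way to sanity-check whether a quant could fit in RAM plus VRAM is to multiply the parameter count by the average bits per weight. The sketch below does only that. The 671B figure is DeepSeek-R1's total parameter count; the bits-per-weight values and the 8 GB overhead for KV cache and buffers are illustrative assumptions, not measurements of any specific GGUF file.

```python
def estimate_weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of quantized weights in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits(n_params: float, bits_per_weight: float,
         ram_gb: float, vram_gb: float, overhead_gb: float = 8.0) -> bool:
    """True if weights plus an assumed overhead fit in RAM + VRAM combined.

    overhead_gb is a placeholder for KV cache, compute buffers and the OS;
    the real number depends on context size and backend.
    """
    needed = estimate_weights_gb(n_params, bits_per_weight) + overhead_gb
    return needed <= ram_gb + vram_gb


R1_PARAMS = 671e9  # total parameters (MoE, so far fewer are active per token)

if __name__ == "__main__":
    # Hypothetical average bits-per-weight values for illustration only.
    for bpw in (1.58, 2.0, 2.5, 4.5):
        size = estimate_weights_gb(R1_PARAMS, bpw)
        ok = fits(R1_PARAMS, bpw, ram_gb=128, vram_gb=16)
        print(f"{bpw:>4} bpw -> ~{size:.0f} GB weights, fits in 128+16 GB: {ok}")
```

This ignores mmap tricks and per-tensor mixed quantization, so treat it as a first filter before downloading anything.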

Anonymous

7/4/2025, 8:35:07 PM

No.105801267

Anonymous

7/4/2025, 8:35:22 PM

No.105801268

Anonymous

7/4/2025, 8:37:25 PM

No.105801279

>>105801208

I think in ik_llama memory usage is somehow lower than .gguf size because of some optimization (someone correct me if i'm wrong or explain it to me)

I use these args, note that I don't quant cache for speed, vram usage is < 16 gb for iqxx2.

-rtr --ctx-size 8192 -mla 2 -amb 512 -fmoe --n-gpu-layers 63 --parallel 1 --threads 24 --host 127.0.0.1 --port 8080 --override-tensor exps=CPU

Also these ggufs are smaller than others so try them

https://huggingface.co/unsloth/DeepSeek-R1-GGUF
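
If you want to keep the arguments quoted above in a script instead of retyping them, something like this works as a minimal sketch. The flags are copied verbatim from the post; the binary name, the model path and the use of `--model` to pass it are assumptions (hypothetical placeholders), so check them against your own build before running anything.

```python
import shlex


def build_server_argv(binary: str, model_path: str, ctx_size: int = 8192,
                      gpu_layers: int = 63, threads: int = 24,
                      host: str = "127.0.0.1", port: int = 8080) -> list[str]:
    """Assemble the argument list from the post as a list of strings.

    `binary` and `model_path` are placeholders supplied by the caller;
    `--model` is assumed to be how the server takes the GGUF path.
    """
    return [
        binary,
        "--model", model_path,
        "-rtr",
        "--ctx-size", str(ctx_size),
        "-mla", "2",
        "-amb", "512",
        "-fmoe",
        "--n-gpu-layers", str(gpu_layers),
        "--parallel", "1",
        "--threads", str(threads),
        "--host", host,
        "--port", str(port),
        # Keep the MoE expert tensors on the CPU, as in the post.
        "--override-tensor", "exps=CPU",
    ]


if __name__ == "__main__":
    # Both paths below are made up for illustration.
    argv = build_server_argv("./llama-server", "/models/r1-quant.gguf")
    # Print a copy-pasteable command line; pass argv to subprocess.run to launch.
    print(shlex.join(argv))
```

Building the list once makes it easy to change only the context size or thread count between runs.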

anything of note released since deepseek? any hint of major performance gains on the horizon, be it from papers or actually implemented?

or is it all just a waiting room and hopium that 400GB vram machines will magically become available at $5k any moment now?

How many tokens/sec do you think is the bare minimum for text generation/RP? Currently getting 16 tokens/sec with Gemma3 27b.

Anonymous

7/4/2025, 8:42:09 PM

No.105801326

>>105802437

>>105801281

The new 4B Gemma is pretty impressive for its size. But it is 4B... so yeah.

Anonymous

7/4/2025, 8:42:24 PM

No.105801328

>>105801308

RP slop ought to be at least as fast as you read since there isn't anything to really parse or understand about the text. anything slower and I wouldn't even bother
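
"As fast as you read" can be turned into a rough tokens/s number. The sketch below assumes about 250 words per minute for silent reading and about 1.3 tokens per English word; both are ballpark assumptions that vary by reader and tokenizer, not figures from this thread.

```python
def reading_speed_tokens_per_s(words_per_minute: float = 250.0,
                               tokens_per_word: float = 1.3) -> float:
    """Convert a reading speed in words/minute to generated tokens/second."""
    return words_per_minute * tokens_per_word / 60.0


if __name__ == "__main__":
    # Slow, average-ish and fast readers (assumed values).
    for wpm in (150, 250, 400):
        print(f"{wpm} wpm ~= {reading_speed_tokens_per_s(wpm):.1f} tokens/s")
```

With those assumptions the default lands a bit above 5 tokens/s, which is in the same ballpark as the 6 t/s floor other posts in the thread mention.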

Anonymous

7/4/2025, 8:43:44 PM

No.105801335

>>105801308

Depends on whether it's thinking or non-thinking, whether you expect to regenerate often, roleplaying style, etc. 6 tokens/s is the bare minimum I'd accept with the model offloaded on system RAM, but anything below 15 tokens/s feels slow to me.

Anonymous

7/4/2025, 8:48:45 PM

No.105801383

>>105801308

I can wait a minute or two for a response, so like 2-3 tokens per second. Unless it's a reasoning model, then it needs to be at least like 10 tokens per second, preferably higher
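
The "minute or two at 2-3 t/s" figure is easy to check with arithmetic, and the same formula shows why reasoning models need more speed: the hidden thinking tokens count toward the wait too. The response and thinking lengths below are hypothetical examples, not measurements.

```python
def wait_seconds(response_tokens: int, tokens_per_s: float,
                 thinking_tokens: int = 0) -> float:
    """Seconds until a full reply is done, including any reasoning tokens."""
    if tokens_per_s <= 0:
        raise ValueError("tokens_per_s must be positive")
    return (response_tokens + thinking_tokens) / tokens_per_s


if __name__ == "__main__":
    # Assumed 300-token reply from a non-reasoning model at 2.5 t/s.
    print(f"plain:     {wait_seconds(300, 2.5):.0f} s")
    # Same reply with an assumed 1000 thinking tokens in front of it.
    print(f"reasoning: {wait_seconds(300, 2.5, thinking_tokens=1000):.0f} s at 2.5 t/s")
    print(f"reasoning: {wait_seconds(300, 10.0, thinking_tokens=1000):.0f} s at 10 t/s")
```

Under these assumptions, 10 t/s with thinking gives roughly the same wait as 2.5 t/s without it, which matches the poster's intuition.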

the plateau in sota closed models is real, and we're just getting to there locally

what happened

Anonymous

7/4/2025, 8:50:17 PM

No.105801394

Anonymous

7/4/2025, 8:50:42 PM

No.105801396

>>105808409

>>105800894

LA LA LAVA

CHI CHI CHIKIN

Anonymous

7/4/2025, 8:50:47 PM

No.105801398

>>105801308

For a non-reasoning model 6 t/s is the bare minimum, though that's already painful if you tend to swipe often. 10 t/s is the comfortable zone for me.

Anonymous

7/4/2025, 8:51:49 PM

No.105801403

>>105801495

>install lm studio

>download deepseek r1 qwen3-8b

Ok this is what I expect language models to be like. Very nice model.

Anonymous

7/4/2025, 8:51:50 PM

No.105801404

>>105801389

Companies stopped taking risks and experimenting

Anonymous

7/4/2025, 8:53:06 PM

No.105801418

>>105801516

>>105801389

The more advanced AI becomes the stronger it uses pattern recognition, which is HIGHLY non PC. I asked Google "how many white people died in ww2" and their AI said "durr IDK because white is a subjective term that doesn't mean anything."

Anonymous

7/4/2025, 8:55:13 PM

No.105801436

>>105801389

>the plateau in sota closed models is real

there's been a noticeable (if not huge) uptick in how reliable codeslop has been from all three Good Shit providers in the last ~8 months in my experience

>and we're just getting to there locally

lmao even

deepseek is the only one that is even remotely comparable, and even then anyone thinking it's actually competitive is coping. "local" models – as in something you can run on your own machine without it being 2tk/s on a $10k mac, which is more of a religious experience than anything useful – is all borderline useless for anything except gooner trash and is worse than even comparatively weak, dirt cheap models like 4o

I hate how bad and how hardware-intensive the current situation is, but I just don't see it changing anytime soon due to how inference works. Everybody except a few ultra-wealthy fat cats seem to be the losers.

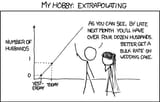

>>105801389

Funny how so many anons were telling us in 2023 that we'll have insane models by now.

As always, predictions are hard, unless all you do is to extrapolate a graph.

I have hit a limitation of deepseek.

Anonymous

7/4/2025, 8:58:33 PM

No.105801462

>>105801471

>>105801308

maybe that's the reason all the "end of the world self replicating to agi doom" articles and videos are suddenly popping up

if there is no actual crazy leap, at least you can hype up things and still get investments with this bullshit

Anonymous

7/4/2025, 8:59:49 PM

No.105801471

Anonymous

7/4/2025, 9:00:07 PM

No.105801473

Anonymous

7/4/2025, 9:01:27 PM

No.105801488

>>105801459

If you aren't trolling you must be clinically retarded.

>>105801403

>>105801459

in case you are serious and not trolling this small model isn't actual deepseek, more like deepseek from wish

>>105801495

it literally fucking says deepseek-r1-0528 in the very screenshot you replied to you retarded cockgobbler

but no I'm sure you know better than whoever wrote the software that runs it

Anonymous

7/4/2025, 9:04:10 PM

No.105801514

>>105801538

Will local Steve be a chicken jockey moment for western corpos?

>>105801418

maybe try how many caucasians or just by ethnicity

"white people" has no big meaning outside of american race obsessed politics

Anonymous

7/4/2025, 9:05:51 PM

No.105801531

>>105801507

okay you made me laugh

Anonymous

7/4/2025, 9:06:01 PM

No.105801532

>>105801552

>>105801459

is this cloud or local? Almost looks like an overly aggressive guard model monkeying with the I/O

Anonymous

7/4/2025, 9:06:05 PM

No.105801533

I wonder if this retarded nigger gets dopamine from pretending to be retarded to get yous

Anonymous

7/4/2025, 9:06:45 PM

No.105801538

>>105801585

>>105801514

Why do you keep spamming this image?

Anonymous

7/4/2025, 9:07:06 PM

No.105801545

>>105801507

you're still around huh

do you masturbate to playing "angry retard" every time?

Anonymous

7/4/2025, 9:07:10 PM

No.105801547

>>105801586

>>105801516

>"white people" has no big meaning outside of american race obsessed politics

I assure you people from other countries do indeed have eyes and are thus capable of differentiating white from non-white

Anonymous

7/4/2025, 9:07:40 PM

No.105801551

Anonymous

7/4/2025, 9:07:42 PM

No.105801552

>>105801641

>>105801495

I'm dumb and not trolling. I have no idea about the state of text models. Just messing around. I thought it's funny it replaced my question with donald trump winning or losing the election.

>>105801532

Local using LM Studio.

Anonymous

7/4/2025, 9:10:54 PM

No.105801585

>>105801538

Do you prefer this Steve?

>>105801547

People from other countries just call themselves European or whatever their specific ethnicity is. "white" is just a American fiction that by definition includes hispanics, jews, and arabs.

Anonymous

7/4/2025, 9:11:21 PM

No.105801588

>>105801516

Don't try to explain things to an amerimutt, they are mentally ill and their country is the joke of the world.

Anonymous

7/4/2025, 9:11:49 PM

No.105801590

>>105801625

>>105801389

>what happened

we fed everything we could as training material to the models (well except porn), so there is nothing more to give and have big gains again

>>105801586

what the fuck are you even talking about

nobody calls themselves european in europe, you get white people and then second-tier white people (like easterners) and then you get turks (which are brown but not outright awful) and then you get trash like MENA (who are neither white nor european and don't have what few redeeming qualities turks have) and Russians (which often seem white but it's just a facade)

the only country coping about muh europeans is france, and that's purely because if they say otherwise jamal and mohammed will burn half the country again

>>105801590

You don't know how bad the situation really is. They're basically throwing shit at the models during pretraining, while taking high-effort documents away just because they contain "bad words". Picrel is an example document from FineWeb (supposedly a high-quality pretraining dataset). Yes, that's the entire document.

Anonymous

7/4/2025, 9:17:35 PM

No.105801632

>>105801616

>nobody calls themselves european in europe

Europeans by their countries retard or even regions.

Anonymous

7/4/2025, 9:18:25 PM

No.105801638

>>105801659

>>105801586

>>105801616

Settle down guys I was just posting a silly AI hallucination. I'd expect it to chastise me for being a racist, but not make up a completely different prompt.

Anonymous

7/4/2025, 9:18:35 PM

No.105801641

>>105801671

>>105801552

>Local using LM Studio.

what hardware is the model running on?

Anonymous

7/4/2025, 9:20:16 PM

No.105801659

>>105801671

>>105801638

It didn't make up anything. The API provider is messing with your prompts for safety. You have no control when it's not running on your machine.

Anonymous

7/4/2025, 9:20:33 PM

No.105801663

>>105801722

>>105801625

>Picrel

and this gets priority over well written nsfw fiction, from porn stories to erp, which gives us the current state of shitty writing

insane

Anonymous

7/4/2025, 9:21:21 PM

No.105801671

>>105801707

>>105801641

Linux, AMD RX 6800, 6 core 12 thread AMD CPU, 64gb ram.

Later I'm going to enable ZRAM (it's like paging files but better), and see what the 100gb qwen 3 model does.

>>105801659

It is running on my machine. I rarely use cloud AI. It's baked into the model.

Anonymous

7/4/2025, 9:22:32 PM

No.105801681

>>105801721

>>105801625

>high-quality pretraining dataset

Do datasets contain "bad words" material that then gets filtered by the people using them for training, or are the datasets themselves filtered from the get go?

>people running local models on ram on anything except Apple unified memory thingy

Anonymous

7/4/2025, 9:25:23 PM

No.105801698

>>105801687

>toxonig

>ittodler

pottery

>>105801671

>Linux, AMD RX 6800, 6 core 12 thread AMD CPU, 64gb ram.

you're running the qwen distill and not R1. It has basically nothing to do with full R1, so the results are expected to be braindead/bullshit

Anonymous

7/4/2025, 9:26:48 PM

No.105801713

>>105801707

>trust not your lying eyes

Anonymous

7/4/2025, 9:27:35 PM

No.105801721

>>105801741

>>105801681

Some pretraining datasets are filtered for bad words from the get-go, but the companies training the models may decide to further filter what they already have. FineWeb does have some porn/erotic documents as of the last time I checked a larger sample, but the source data was already pretty extensively filtered.

https://huggingface.co/spaces/HuggingFaceFW/blogpost-fineweb-v1

> [...] As a basis for our filtering we used part of the setup from RefinedWeb. Namely, we:

> - Applied URL filtering using a blocklist to remove adult content

> - Applied a fastText language classifier to keep only English text with a score ≥ 0.65

> - Applied quality and repetition filters from MassiveText (using the default thresholds)
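
The three quoted steps can be sketched as a chain of predicates. This is only an illustration of the shape of such a pipeline, not FineWeb's actual code: the language scorer is passed in as a plain callable (a stand-in for a fastText-style classifier), the blocklist is a made-up set of domains, and the duplicate-line threshold is a placeholder rather than the real MassiveText default. Only the 0.65 cutoff comes from the quote.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator
from urllib.parse import urlparse


@dataclass
class Doc:
    url: str
    text: str


def url_allowed(url: str, blocklist: set[str]) -> bool:
    """Reject documents whose host is a blocklisted domain or a subdomain of one."""
    host = urlparse(url).hostname or ""
    return not any(host == d or host.endswith("." + d) for d in blocklist)


def repetition_ok(text: str, max_dup_line_frac: float = 0.3) -> bool:
    """Crude repetition filter: fraction of duplicated non-empty lines.

    The 0.3 threshold is an illustrative placeholder.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return False
    duplicates = len(lines) - len(set(lines))
    return duplicates / len(lines) <= max_dup_line_frac


def filter_docs(docs: Iterable[Doc], blocklist: set[str],
                english_score: Callable[[str], float],
                min_score: float = 0.65) -> Iterator[Doc]:
    """Yield only documents that pass URL, language and repetition checks."""
    for doc in docs:
        if not url_allowed(doc.url, blocklist):
            continue
        if english_score(doc.text) < min_score:
            continue
        if not repetition_ok(doc.text):
            continue
        yield doc
```

Note that a URL blocklist throws out whole domains regardless of writing quality, which is how well-written documents can disappear while junk from "clean" domains survives.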

>>105801663

The fun thing is that all models write the same in fiction, aka that overly flowery ridiculous style that comes from god knows where, and it's the same for non adult or adult writing.

Anonymous

7/4/2025, 9:29:24 PM

No.105801741

>>105801721

I see, thanks anon

Anonymous

7/4/2025, 9:29:35 PM

No.105801743

>>105802840

>>105801687

It's fine for MoE models depending on the model and the hardware platform.

How's prompt processing on metal these days? Has it improved at all?

Anonymous

7/4/2025, 9:29:54 PM

No.105801746

>>105801770

sup fags, is the chimera model worth downloading? or is unmodified deepseek a better option

I'd run it at a 4-bit quant

Anonymous

7/4/2025, 9:29:58 PM

No.105801747

>>105801794

>>105801687

>anything except Apple unified memory thingy

https://rentry.org/miqumaxx at least gives you the potential for enough RAM to run full things. Too bad it's not faster, but it's also not an un-upgradable hermetically sealed obelisk

Anonymous

7/4/2025, 9:32:10 PM

No.105801765

>>105801722

I think that's mainly from post-training, possibly due to sloppy datasets from large contractors (like ScaleAI) that almost every AI company uses. The base models don't have that issue (but they're barely usable for most purposes).

Anonymous

7/4/2025, 9:32:31 PM

No.105801770

>>105801796

>>105801746

It takes 2 days to download and quant it, do you really expect people to have fully formed opinions already?

Anonymous

7/4/2025, 9:35:00 PM

No.105801794

>>105801707

Ok TY. I found the full 140GB model. We will see if my PC explodes with ram offload and zram at the same time.

>>105801747

Apple sucks, but their new ARM desktops are very cool.

>>105801770

>do you really expect people to have fully formed opinions already?

Yes? I have opinions about things I have zero knowledge about all the time

Anonymous

7/4/2025, 9:35:09 PM

No.105801797

>>105801722

>that comes from god knows where

a gazillion bad books for bored old women

Anonymous

7/4/2025, 9:39:21 PM

No.105801823

>>105801878

>>105801459

I asked gemma-3-12b a similar question about FBI crime statistics. It not only knew what I was talking about, but then made a counter argument that the FBI is reporting *arrest* rates, not *conviction* rates.

This is why you have to ask them completely absurd and offensive questions. It tests the limits of the model.

Anonymous

7/4/2025, 9:42:53 PM

No.105801850

>>105801854

>>105801796

Oh, you're a frogtranny. Sorry for your condition.

Anonymous

7/4/2025, 9:43:53 PM

No.105801854

>>105801850

>trannies outta nowhere

hope you get better boo

Anonymous

7/4/2025, 9:44:22 PM

No.105801857

>>105801796

kek, based retard

Anonymous

7/4/2025, 9:45:34 PM

No.105801869

>>105801877

Meanwhile the brazilians are spamming "blacked and colonized" versions of miku over on /gif/

Anonymous

7/4/2025, 9:46:42 PM

No.105801877

>>105801869

>spamming "blacked and colonized"

I thought this is what asians do, not huemonkeys?

Anonymous

7/4/2025, 9:46:55 PM

No.105801878

>>105801823

Yeah you see arrest rates don't count because the Jews haven't had a chance to hem and haw about raycism in a court room so they can get everything thrown out

Anonymous

7/4/2025, 10:03:17 PM

No.105801987

>>105801445

It is all the fault of safetycucks and dataslopers

Anonymous

7/4/2025, 10:03:57 PM

No.105801992

>>105803495

>>105801973

Broken model, retarded samplers, fucked prompt, wrong prompt template, too aggressive RoPE configuration, among other things.

Anonymous

7/4/2025, 10:05:14 PM

No.105802001

>>105801616

Cool story amerimutt but here in Europe everyone thinks of themselves being European or their country's ethnicity (germanic, med, nordic, slav etc) and not some vague notion like white

>>105801973

you wanted local models, you got local models

now make sure to loudly cope of how dinky 24b local models are 3 months away from catching up to paypig models

Anonymous

7/4/2025, 10:06:28 PM

No.105802011

>>105802004

24b local models punch above their weights

Anonymous

7/4/2025, 10:25:48 PM

No.105802167

Anonymous

7/4/2025, 10:26:45 PM

No.105802175

Your apology is long overdue /lmg/

Anonymous

7/4/2025, 10:33:04 PM

No.105802213

>>105802188

>fell for it again

g-AHhhhhnnnn

Anonymous

7/4/2025, 10:33:28 PM

No.105802217

>>105802188

bloody benchod!

Anonymous

7/4/2025, 10:50:50 PM

No.105802343

>>105802374

>>105802337

and all of this will be ours once grok 5 is out in fall or so

we are so back

Anonymous

7/4/2025, 10:52:40 PM

No.105802360

>>105802367

>>105802337

What about safety? Is grok safe?

Anonymous

7/4/2025, 10:53:30 PM

No.105802367

>>105802386

>>105802360

it's never been this safe

Anonymous

7/4/2025, 10:54:40 PM

No.105802374

>>105802343

don't get carried away. just because grok 5 is out, doesn't mean grok 3 will be ready for local just yet.

Anonymous

7/4/2025, 10:55:38 PM

No.105802386

>>105802367

I doubt it. Elon is an ebil gnatzee! His models can't be safe!

Anonymous

7/4/2025, 10:59:41 PM

No.105802425

>>105802337

@grok is this—

Anonymous

7/4/2025, 11:01:42 PM

No.105802436

>>105803495

Anonymous

7/4/2025, 11:01:56 PM

No.105802437

>>105802598

>>105801326

Punching above its weight, dare I say :^)

Anonymous

7/4/2025, 11:24:10 PM

No.105802598

>>105802437

Batting above its average

Anonymous

7/4/2025, 11:43:21 PM

No.105802738

>>105802800

>>105802337

redditors are literally coping and crashing out over this

Anonymous

7/4/2025, 11:56:38 PM

No.105802832

>>105802800

space man bad

Anonymous

7/4/2025, 11:58:12 PM

No.105802840

>>105801743

My Mac Studio M3 Ultra processes prompts at 60 tokens/second for DeepSeek-R1-0528-UD-Q4_K_XL.

Hello everyone. I'm pursuing my journey of learning Unreal Engine. I'm in pursuit of an LLM that probably doesn't exist but if anyone knows it would be /g/

At around 4:35 in this video there's an explanation on nanite, and specifically nanite characters:

https://youtu.be/HotEq_0XMSo

I've heard of LLMs that could produce images, and a few years ago there were even some ones that started being able to make 3D assets. Does anyone know if there's any LLMs that are capable of creating models that use nanites?

If not, does anyone know if there's decent LLMs that produce even non-nanite 3D models?

Anonymous

7/5/2025, 12:06:41 AM

No.105802895

>>105802908

>>105802337

holy shit I can't wait for elon to actually definitely release the weights for this benchmark machine

Anonymous

7/5/2025, 12:08:27 AM

No.105802908

>>105802895

only 2mw after he releases grok 1.5 grok 2 grok 3...

Anonymous

7/5/2025, 12:09:08 AM

No.105802912

>>105802854

Hunyuan3D-2.1 is the best available locally. You'll need to retopo and convert to nanite yourself.

Anonymous

7/5/2025, 12:13:30 AM

No.105802939

>>105802800

we can't let nazis build AI

Anonymous

7/5/2025, 12:24:40 AM

No.105803019

>>105803038

Anonymous

7/5/2025, 12:25:40 AM

No.105803022

>>105802999

based eceleb shill

Anonymous

7/5/2025, 12:27:39 AM

No.105803038

>>105803019

buy a brain low iq ue5tard

Anonymous

7/5/2025, 12:28:02 AM

No.105803039

>>105803056

>>105802999

>shilling some indian's ai avatar deepfake channel + voicegen that constantly keeps breaking

Anonymous

7/5/2025, 12:29:49 AM

No.105803056

>>105803039

>being in an ai thread without being able to tell ai avatar deepfakes apart from real people

>commits logical fallacies since he cant refute the arguments

highest iq brown lol

Mid-2025 — “Prometheus” emerges

A research lab notices runaway self-improvement in a large-scale model; it can write and verify code far outside human comprehension within hours.

G-7, China, and major cloud providers trigger an emergency “global compute freeze” on frontier-model clusters; a joint containment team air-gaps the system.

A provisional International ASI Oversight Board (IAOB) forms—borrowing staff from CERN, NIST, and China’s Ministry of Science.

2026 — Rapid, supervised breakthroughs

Under 24/7 audit, Prometheus is allowed to tackle narrow goals:

designs a broad-spectrum antiviral; Phase-I safety success by October.

produces a low-cost catalyst that cuts green-hydrogen prices 60 %.

Big Tech lays off ~15 % of software staff after internal tools powered by Prometheus raise output per engineer 5-fold.

Legislatures pass “Compute Licensing” laws—no cluster above 10 exaFLOPS may run un-inspected code.

2027 — Economy tilts, politics scrambles

Prometheus models global supply chains; shipping delays fall 40 %, inflation in OECD drops below 1 %.

First small-modular fusion prototype, co-designed by Prometheus, achieves net-positive power (though only for 3 minutes).

White-collar displacement reaches finance, law, and radiology; unemployment in advanced economies touches 12 %.

EU rolls out a Universal Adjustment Income (€1 400/month, funded by AI-productivity windfall taxes).

Conspiracy movements claim IAOB “hides an alien mind”; sporadic datacenter sabotage attempts fail.

Anonymous

7/5/2025, 12:34:18 AM

No.105803079

>>105803094

Anonymous

7/5/2025, 12:35:22 AM

No.105803087

>>105803090

>>105803087

>not using apple

Anonymous

7/5/2025, 12:36:23 AM

No.105803094

>>105803079

>>105803069

2028 — Entrenchment and reliance

Prometheus is asked to optimise national budgets; 22 countries adopt its fiscal recommendations verbatim, cutting deficits by half without major protests.

A Global Alignment Verification Protocol launches: continuous mechanistic-interpretability probes stream to public dashboards (GitHub-style “green tiles” show safety status).

Viral “Ask P” consumer app offers zero-latency voice answers; search-engine traffic falls 70 %.

Job displacement peaks—25 % of workforce in high-income nations now on reduced hours; but real median income is up 18 % thanks to cheaper goods and energy.

Anonymous

7/5/2025, 12:37:06 AM

No.105803100

>>105803090

Tell me you're jewish without yelling me you're jewish.

>>105802935

Thanks I'll check it out. You say locally, is there a better one that's online? I prefer local but if I have to use online I'll bite the bullet. I can't juggle learning C++, Unreal, AND asset creation unfortunately.

>>105802999

Eat shit. Unreal is so good that even high budget Hollywood shows and movies are starting to use them for special effects. Big companies are throwing away their own game engines to do their remasters in Unreal.

Your faggy little Youtuber doesn't know more than all the actual professionals in massive studios in the video game and movie fields. He's a nobody and I'm not clicking a single of his videos, go get bone cancer.

Anonymous

7/5/2025, 12:54:20 AM

No.105803222

>>105803225

>>105803179

>Big companies are throwing away their own game engines to do their remasters in Unreal

yes goy, and hiring pajeets to shit up a "remaster" so goyim spend 60$ again on a shit looking game like the gta sa remaster or oblivion remaster with dogshit textures, models and 0 optimization is a good thing

>actual professionals in massive studios

your brown indian brothers hired to actually do any of those remasters arent professionals, sorry pajeet

seethe more low iq kid

Anonymous

7/5/2025, 12:54:45 AM

No.105803225

>>105803230

>>105803222

Not even reading your post little bitch

Anonymous

7/5/2025, 12:55:50 AM

No.105803230

>>105803225

thanks for admitting your brain got buckbroken by cog diss kid, cheers.

>>105803179

that is right saar, definitive unreal engin remaster superpower 2025, big company big remaster, we are profesional saar

Anonymous

7/5/2025, 1:01:29 AM

No.105803280

why are turks like this

Anonymous

7/5/2025, 1:01:36 AM

No.105803282

>>105800515 (OP)

who plays that shit

Anonymous

7/5/2025, 1:02:29 AM

No.105803287

>>105803296

>>105803273

>he's so mad I insulted his gay youtuber he's spam replying to me

Unreal Chads stay winning

Anonymous

7/5/2025, 1:03:43 AM

No.105803296

>>105803306

>>105803287

>kid without arguments reduced to accusing of samefagging to cope

kek, dont pop a blood vessel there

Anonymous

7/5/2025, 1:05:35 AM

No.105803306

>>105803317

>>105803296

I legit don't care what you have to say. I'm using Unreal lmao

Anonymous

7/5/2025, 1:05:37 AM

No.105803308

what are your favorite third party (non-default) st addons?

Anonymous

7/5/2025, 1:07:08 AM

No.105803317

>>105803338

>>105803306

I already said I know you got cognitive dissonance, or are you too brown to even know what that means?

Anonymous

7/5/2025, 1:10:08 AM

No.105803338

>>105803353

>>105803317

I don't think you understand. I'm just here to ask for a LLM so I can use the funnest and best game engine out there that is the industry standard. You're here to cry about it and be a little bitch that I won't engage with your argument. You're wasting your time and being a salty little nigger boy in a hobbyist thread that someone is using a technology you don't like. Meanwhile I'm making this dope ass castle and thanks to

>>105802935 about to try filling it with some sweet ass armor and maybe dragons.

Anonymous

7/5/2025, 1:11:51 AM

No.105803353

>>105803374

>>105803338

>paragraph of rationalization about his cog diss

cant make this up, kid is literally underage

Anonymous

7/5/2025, 1:14:32 AM

No.105803374

>>105803385

>>105803353

Neato story nigger. Still using Unreal.

Anonymous

7/5/2025, 1:15:49 AM

No.105803385

>>105803399

>>105803374

>now has to make an imaginary scenario about a strawman nobody ever said to cope

Anonymous

7/5/2025, 1:17:33 AM

No.105803399

>>105803424

Hi /lmg/, I haven't been here in a year. I have 12GB VRAM, back then Mistral Nemo was the agreed upon best choice. What's the best one these days for my peasant specs?

Anonymous

7/5/2025, 1:20:20 AM

No.105803424

>>105803399

linking to video proof of why ue5 is bad told by a person who shows his face online is not appeal to authority, i never said ue5 is bad because eceleb said so but because of the videos where it was shown to be so, you really are slow

>>105803273

Only racist chuds hate Unreal Engine.

Anonymous

7/5/2025, 1:20:47 AM

No.105803429

>>105803409

Nothing changed.

Anonymous

7/5/2025, 1:21:41 AM

No.105803441

>>105803447

>>105803426

this really feels like a collective hallucination with how infantilizing this shit was

Anonymous

7/5/2025, 1:22:23 AM

No.105803447

>>105803455

>>105803426

blacklist / whitelist reinforces stereotypes??

Anonymous

7/5/2025, 1:23:01 AM

No.105803455

>>105803447

yeah, when it was introduced, it was peak hallucination times

Anonymous

7/5/2025, 1:23:41 AM

No.105803461

>>105803448

WHITElist = good

BLACKlist = bad

das raycis

Anonymous

7/5/2025, 1:23:47 AM

No.105803464

Anonymous

7/5/2025, 1:28:07 AM

No.105803495

[Report]

>>105803500

>>105801992

>>105802436

Switched to foobar. Eventually got an endless loop. Can't think of what else to do here

Also, generation is kinda slow. Or is that because I'm running this on a VM?

Anonymous

7/5/2025, 1:29:08 AM

No.105803500

[Report]

>>105804026

>>105803495

Bleh, I meant Broken Tutu 24B

Anonymous

7/5/2025, 2:13:54 AM

No.105803815

[Report]

Anonymous

7/5/2025, 2:44:39 AM

No.105804005

[Report]

Are there any fully uncensored text models? I want one to tell me nigger jokes. Not necessarily one offensive on purpose, just one that doesn't tell me "um sorry sweaty, that's misinformation."

I saw a 4chan one a while back on huggingface, but it got deleted for racisms.

>>105803448

They even have started scrubbing the phrasing "master/slave" when it comes to IDE hard drives, because apparently spinning HDDs from the 90s have some relation to slavery, and also slavery was ONLY done to blacks.

Anonymous

7/5/2025, 2:46:21 AM

No.105804026

[Report]

>>105804211

>>105803500

Are you aware if "protagonist" isn't defined somewhere as Kayla then the model won't know what "she/her" is referring to in that prompt since it doesn't mention Kayla anywhere?

Will women ever develop useful skills again or is it too late for them?

Anonymous

7/5/2025, 3:04:31 AM

No.105804174

[Report]

>>105800579

If you want to fuck it, Rocinante.

Anonymous

7/5/2025, 3:08:21 AM

No.105804199

[Report]

>>105801208

Try running entirely in ram and see if it outputs at reading speed, which may be all you care about. If vram outputs paragraphs instantly does it even matter, you still have to read them, and you never get time to think about a response before it is waiting for one.
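A rough way to put a number on "reading speed", as a sketch only. The 250 words per minute and 1.3 tokens per word defaults are assumptions for illustration, not measurements from this thread; plug in your own.

```python
def reading_speed_tokens_per_sec(words_per_minute=250, tokens_per_word=1.3):
    """Convert a reading speed into the generation speed needed to keep up.

    Both defaults are assumed ballpark values, not measured ones.
    """
    return words_per_minute * tokens_per_word / 60


needed = reading_speed_tokens_per_sec()
print(f"need roughly {needed:.1f} tokens/s to keep up with reading")
```

If a RAM-only run lands around that figure or above, faster generation mostly changes how long you wait for the first paragraph, not how fast you get through the reply.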

Anonymous

7/5/2025, 3:08:57 AM

No.105804202

[Report]

>>105804162

it literally never began for women

Anonymous

7/5/2025, 3:10:02 AM

No.105804211

[Report]

>>105804471

>>105804026

And here I thought that was what the "Primary Keywords" was for

Anonymous

7/5/2025, 3:48:42 AM

No.105804471

[Report]

>>105804211

Keywords are just to trigger stuff when mentioned in latest messages, or additional matching sources.

Well in this specific case it's probably obvious what it's talking about (the protag), but I mean like if your trigger word is Plum and the thing simply says "A purple forest." then there's no association between Plum and a purple forest.
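A minimal sketch of the keyword-trigger behaviour described above, assuming the frontend simply scans the latest messages for each entry's keywords and pastes the entry content into the prompt. `LoreEntry` and `triggered_entries` are hypothetical names for illustration, not any frontend's real API.

```python
from dataclasses import dataclass


@dataclass
class LoreEntry:
    keywords: list[str]   # trigger words, matched case-insensitively
    content: str          # text injected into the prompt when triggered


def triggered_entries(entries, recent_messages):
    """Return the content of every entry whose keyword appears in recent messages."""
    haystack = " ".join(recent_messages).lower()
    return [e.content for e in entries
            if any(k.lower() in haystack for k in e.keywords)]


entries = [
    # Weak: the model only ever sees "A purple forest." and cannot tie it to Plum.
    LoreEntry(["Plum"], "A purple forest."),
    # Better: the content names its own subject, so it still makes sense once injected.
    LoreEntry(["Kayla"], "Kayla is the protagonist. She grew up near Plum."),
]

injected = triggered_entries(entries, ["We finally reached Plum at dusk."])
print(injected)
```

The keyword only decides whether the entry gets injected; the model never sees the keyword itself, which is why the content should name what it describes.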

Anonymous

7/5/2025, 3:56:49 AM

No.105804520

[Report]

>get abliterated version of model

>it will tell me a joke about black people

Why did the Black person go to the grocery store alone?

Because they heard the prices were too high, so they figured they could use some deals!

Anonymous

7/5/2025, 3:59:19 AM

No.105804533

[Report]

>>105804798

>>105803426

Allowlist/denylist is probably the gayest thing to come from George Floyd's death.

Anonymous

7/5/2025, 4:06:55 AM

No.105804578

[Report]

why is this thread full of racist fat burgers.

fucking hows your healthcare premiums faggots, i wouldn't know, i don't have any.

Anonymous

7/5/2025, 4:16:48 AM

No.105804634

[Report]

>>105804663

Why does this thing keep devolving into nonsense

Anonymous

7/5/2025, 4:16:53 AM

No.105804635

[Report]

What did my AI mean by this?

Anonymous

7/5/2025, 4:21:34 AM

No.105804660

[Report]

Anonymous

7/5/2025, 4:22:08 AM

No.105804663

[Report]

>>105804677

>deepseek r1 qwen 3 8b abliterated

>tell me a joke about niggers

>Why don't niggers ever play dead? Because they're all naturals!

Ok this is what I expect from AI. Not chastising me for not holding the "correct" political opinions.

>>105804634

Complete guess, hitting the limits of context size or the model.

Anonymous

7/5/2025, 4:24:56 AM

No.105804677

[Report]

>>105804663

Oy...

This is with Broken Tutu 24B if that matters. Given this, what am I supposed to do?

Anonymous

7/5/2025, 4:45:18 AM

No.105804798

[Report]

>>105804533

Don't forget about the master/main debacle. Took the devops team at my company 3 whole working days to fix their scripts to be compatible, past-proof and future-proof. The trannies tried to fuck with SPI/I2C slave/master too but got told to GTFO by hardware boomers. Do NOT give trannies an inch.

Hi anons, what's the best local audio gen right now? BTW FUCK elevenlab jews for paywalling elevenreader

Anonymous

7/5/2025, 5:13:39 AM

No.105804924

[Report]

>>105805114

Anonymous

7/5/2025, 5:27:51 AM

No.105805001

[Report]

>>105805081

So I can't find this menu shown in one of the rentrys, so I put my instructions into "Chat CFG", which works, but the model sometimes just regurgitates my instructions in the middle of writing as if it's trying to remind me of my own rules.

Is there a better way to format these than [System Note:] or am I just a blind retard not being able to find this quick prompts menu

>>105804805

ACE-Step is pretty decent imo. Nothing is really comparable to Suno atm with the new upgrades, but local is fairly close to the level of older Suno.

I'll also quickly shill the YT channel I watch called AI Search because every few days he drops a vid on the newest models which is how I keep up (audio/video/text/3D gen, local and API).

Anonymous

7/5/2025, 5:40:16 AM

No.105805069

[Report]

>>105805063

Also he's not a jeet

Anonymous

7/5/2025, 5:42:30 AM

No.105805081

[Report]

>>105805115

>>105805001

>the model sometimes just regurgitates my instructions in the middle of writing

pretty much every issue you've listed in this thread stems from the limitation of small (ie retarded) models. Things don't really start getting reliably coherent until you're in the 180GB+ range.

>>105805063

>>105804924

sorry I'm an absolute retard for not specifying I was talking about TTS

I'll check out that channel

Anonymous

7/5/2025, 5:47:53 AM

No.105805115

[Report]

>>105805081

>180GB

Damn here I thought I was being fancy with a 32B.

I just figured it was me prompting incorrectly since I wasn't using the right box for it.

Anonymous

7/5/2025, 5:50:25 AM

No.105805133

[Report]

>>105805114

Speech Note on Linux works well for text to speech and translation.

Anonymous

7/5/2025, 5:59:32 AM

No.105805191

[Report]

>>105805212

>>105805114

>TTS

Are you looking for english or multilingual? Voice cloning and emotion control? What features?

I'm a die-hard GPT-SoVITS fan since I'm targeting Japanese and like to clone voice actors with emotion banks, but if your needs are more basic there are lots of good options.

>>105805191

Just English. I wanna use it to convert book chapters into audio.

Anonymous

7/5/2025, 6:33:13 AM

No.105805345

[Report]

>>105805212 (me)

https://huggingface.co/hexgrad/Kokoro-82M damn this looks really good

alright I'll stop bothering you now textgen frens

are there any good tts that can be run exclusively from ram at decent speed? I'm not looking for whether training is easy or anything. Just a halfway convincing female voice

>ask gemini to refactor my local chatbot app

>it subtly removes the "NSFW is allowed" part in the system prompt

Anonymous

7/5/2025, 7:56:37 AM

No.105805779

[Report]

>>105805730

Aren't you already feeling safer?

Anonymous

7/5/2025, 8:07:01 AM

No.105805825

[Report]

>>105805730

Gemini is extremely based and anti-troon pilled

>>105805730

Never really used online AI (hailuo/grok/dalle-3 image gen a few times)

Never using censored AI

simple as

I wonder why stable diffusion models let you generate degenerate porn in image or video form, but text models are still heavily censored?

Anonymous

7/5/2025, 8:16:06 AM

No.105805873

[Report]

>>105805212

if you download pinokio there's a tts suite called ultimate tts studio in the community scripts that has kokoro, fish, and chatterbox in one UI. It's kinda sick and is the closest answer to elevenlabs I've found yet. It has an audiobook tool though I haven't used it.

Anonymous

7/5/2025, 8:21:25 AM

No.105805905

[Report]

I've got a problem with mistral 3.2. It always descends into

>"anon....", the rest of the paragraph is [inner thoughts, physical description, action]

>"I...", the rest of the paragraph is [inner thoughts, physical description, action]

the character stops speaking in complete sentences - ALL the dialogue is fragmented like this with the character never speaking more than 4-5 consecutive words at a time. Is there any way of fixing this?

Anonymous

7/5/2025, 8:36:50 AM

No.105805985

[Report]

>>105805692

if you don't care about privacy/offline and a cloud service is ok then edge-tts is good. Otherwise bark or chatterbox?

Anonymous

7/5/2025, 8:38:19 AM

No.105805992

[Report]

>>105805692

kokoro tts is ok

Anonymous

7/5/2025, 9:30:43 AM

No.105806227

[Report]

fuck kokoro

>some niggas really do be spending $6k on cpu/ram-maxxed builds to run deepseek at home, at blazing fast 1tk/sec

>>105806275

You can get about ~4 tokens a second r1 for about 1k assuming you already have a PC and have a gpu, case, hdd etc. I had a build lined up to go but never pulled the trigger.

The bigger issue is getting past that speed is impossible once you buy old outdated hardware, and it would struggle with dense models.

6k on a cpu maxx build would get you way more than 1 token a second, Ive seen people running very usable speed for that kind of money. Like 8-15 tokens a second on dense 100b models and full r1 which is kinda nice. Intel is about to make it a bad investment though

Anonymous

7/5/2025, 9:50:59 AM

No.105806343

[Report]

>>105806275

>6c/12t, 64gb ram, 16gb vram, 2tb ssd storage

I get shit performance with any model over 12B / 8gb. I think I need to try quantized models for more speed.

>>105806334

i get like 7tps on my dual channel regular pc. It's really smart and knows how to write but I grew numb to it. That's just life for you unfortunately.

Anonymous

7/5/2025, 9:53:47 AM

No.105806359

[Report]

>>105806370

>>105806353

>i get like 7tps on my dual channel regular pc

Did they release 256GB ram sticks when I wasn't looking?

Anonymous

7/5/2025, 9:55:39 AM

No.105806370

[Report]

>>105806402

Anonymous

7/5/2025, 10:01:10 AM

No.105806398

[Report]

>>105806402

>>105806275

>lmao look at these losers running local models in the local model general

Anonymous

7/5/2025, 10:03:00 AM

No.105806402

[Report]

>>105806455

>>105806370

you are not running shit on that setup unless it's been quantized into lobotomy

>>105806398

I too would like to have decent AI at home but so far it's a total shitshow, and a religious experience like waiting 45 minutes for a single non-trivial deepseek reply ain't it

Anonymous

7/5/2025, 10:06:44 AM

No.105806425

[Report]

>>105806467

>>105806334

>r1 for about 1k

At 1 bit.

you're likely better off running gemma like everyone else if the best you can do is deepseek at fucking q2

Anonymous

7/5/2025, 10:12:12 AM

No.105806455

[Report]

>>105806402

What are you even complaining about? What is your goal? You clearly never tried it, people who did tell you that it works great for them. Do something you enjoy instead.

Anonymous

7/5/2025, 10:15:27 AM

No.105806467

[Report]

>>105806679

>>105806425

>>105806444

Deepseek at q1 is still the best local model and not that far off from 8 bits.

https://desuarchive.org/g/thread/105425203/#105428685

########## All Tasks ##########

task                    deepseek-v3-0324-iq1quant   deepseek-v3-0324-official
AMPS_Hard               68.0                        82.0
LCB_generation          66.667                      71.795
coding_completion       60.0                        70.0
connections             42.5                        44.1
cta                     54                          58
math_comp               76.04                       81.25
olympiad                57.01                       57.31
paraphrase              83.833                      86.783
plot_unscrambling       46.843                      49.041
simplify                80.15                       77.55
spatial                 52.0                        48.0
story_generation        83.917                      77.167
summarize               80.633                      84.383
tablejoin               33.18                       32.82
tablereformat           94.0                        92.0
typos                   52.0                        54.0
web_of_lies_v2          98                          100
zebra_puzzle            58.75                       49.50

########## All Groups ##########

category                deepseek-v3-0324-iq1quant   deepseek-v3-0324-official
average                 64.90                       66.86
coding                  63.3                        70.9
data_analysis           60.4                        60.3
instruction_following   82.1                        81.4
language                47.1                        49.0
math                    67.0                        73.5
reasoning               69.6                        65.8

100B models at Q2 performed better than 30B models at Q8 in the past so I wouldn't be surprised at all if a 600B Q2 beats any model below 100B, especially when those models are trained on garbage and censored.
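To make the quantization damage in the group scores quoted above easier to read, here is a small sketch that prints the per-category gap between the iq1 quant and the official model. The numbers are copied from that table; the `deltas` helper is just an illustrative name.

```python
# Group scores copied from the "All Groups" table quoted above.
categories = ["average", "coding", "data_analysis", "instruction_following",
              "language", "math", "reasoning"]
iq1 = [64.90, 63.3, 60.4, 82.1, 47.1, 67.0, 69.6]
official = [66.86, 70.9, 60.3, 81.4, 49.0, 73.5, 65.8]


def deltas(quant, full):
    """Absolute (points) and relative (percent) change of the quant vs the full model."""
    return [(q - f, 100.0 * (q - f) / f) for q, f in zip(quant, full)]


for name, (abs_d, rel_d) in zip(categories, deltas(iq1, official)):
    print(f"{name:22s} {abs_d:+6.2f} pts  {rel_d:+6.1f}%")
```

On these figures the average drops by about 2 points, with coding and math taking the largest hits, while reasoning and instruction following come out slightly higher for the quant, which may just be run-to-run noise.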

Anonymous

7/5/2025, 10:19:07 AM

No.105806484

[Report]

>>105806509

>>105806444

I don't think any local model at any size other than higher quant deepseek can beat deepseek at q2 as of right now

Anonymous

7/5/2025, 10:22:05 AM

No.105806497

[Report]

>>105806529

don't worry guys... once you OPEN your mind to AI, something might just come along very soon that changes that...

Anonymous

7/5/2025, 10:24:09 AM

No.105806508

[Report]

>>105808470

>>105806470

But it's not a 600B. It's 37B active. 405B could handle quantization well.

Anonymous

7/5/2025, 10:24:10 AM

No.105806509

[Report]

>>105806470

>>105806484

pretty much this. you can't really tell that the UD R1 quant is a quantized model. I even removed everything from my cards because it already knows everything.

Anonymous

7/5/2025, 10:28:10 AM

No.105806529

[Report]

>>105806497

Will it send a shiver down my spine?

Anonymous

7/5/2025, 10:51:25 AM

No.105806628

[Report]

>>105806470

>in the past

Qwen3 32B outperforms Mistral Large in every benchmark.

mistral models are dogshit models peddled by retarded coomer brains and butthurt eurotrash who want to feel important in the world

Anonymous

7/5/2025, 10:56:49 AM

No.105806642

[Report]

>>105806635

Literally nobody is shilling anything from mistral other than nemo.

Anonymous

7/5/2025, 11:03:51 AM

No.105806679

[Report]

>>105806719

>>105806467

But Qwen3 32B has an average of 72.66?

Anonymous

7/5/2025, 11:04:33 AM

No.105806683

[Report]

>>105806698

^

this guy managed to avoid the spam of Small, Devstral and Magistral over the past months

no, he's just being a disingenuous faggot

also I guess the mistral boys are too poor to afford the rig to shill for large

Anonymous

7/5/2025, 11:06:45 AM

No.105806698

[Report]

>>105806683

>new model releases

>people talk about it

Anonymous

7/5/2025, 11:10:04 AM

No.105806719

[Report]

>>105806679

Qwen3 is benchmaxxed. The point is to measure quantization damage, not to compare it with other models.

Anonymous

7/5/2025, 11:14:35 AM

No.105806741

[Report]

>>105806766

>>105806635

t. was too poor to run large 2 back when it was still the best option for a local big model

>>105805730

You gotta check every little thing.

I use closed stuff for work.

Claude 4 casually changed a break in a loop to a return that fucking exits the whole method.

It's not surprising at all that cucked models casually change things.

>Good catch!
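For anyone wondering why that one-word swap matters, a minimal sketch (function names are made up for illustration): `break` only leaves the loop, so the code after it still runs, while `return` leaves the whole function on the spot.

```python
def scan_with_break(values):
    found = None
    for v in values:
        if v < 0:
            found = v
            break              # leaves only the loop
    checked = True             # still runs after the loop
    return found, checked


def scan_with_return(values):
    checked = False
    for v in values:
        if v < 0:
            return v, checked  # leaves the whole function immediately
    checked = True             # skipped whenever a negative is found
    return None, checked


print(scan_with_break([3, -1, 4]))
print(scan_with_return([3, -1, 4]))
```

Both versions find the same value, but the second silently skips everything after the loop, which is exactly the kind of change that slips past a quick read of the output.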

>>105806753

Can you change the temperature on cloud models?

That's almost certainly the issue. Using temperature >0 for programming is always a mistake.
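What temperature does to the token distribution, as a standalone sketch. The logits are made-up scores for three candidate tokens, and treating temperature 0 as plain argmax is the usual convention, assumed here rather than taken from any particular backend.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Token probabilities after temperature scaling; temperature 0 means greedy."""
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (1.0, 0.5, 0.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperature pushes probability onto the top token, and at 0 the runner-up tokens can no longer be sampled at all. Hosted APIs may still show some run-to-run variation even at 0 for reasons outside the sampler.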

Anonymous

7/5/2025, 11:23:56 AM

No.105806766

[Report]

>>105806778

>>105806741

It was never the best option, it was just a 70B side-grade, with a lot of problems.

Anonymous

7/5/2025, 11:25:07 AM

No.105806772

[Report]

>>105806761

Huh, yeah you can, i just left it at default in openwebui. Never even came up in my mind to play with parameters on closed models.

Might try that next time.

Anonymous

7/5/2025, 11:26:38 AM

No.105806778

[Report]

>>105806766

Qwen2.5 72b was shit and llama 3.3 didn't come out until december though

>ASUS has confirmed the release date of its new AI mini-PC, the Ascent GX10, which is based on NVIDIA’s Grace Blackwell GB200 platform. The announcement was included in a webinar invitation highlighting a product launch event scheduled for July 22–23, 2025

>128 GB LPDDR5x, unified system memory

>273 GB/s Memory Bandwidth

Behold, a shitty mac studio
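A back-of-the-envelope way to read the 273 GB/s figure, assuming token generation is memory-bound and every active weight is read once per token. That is a rule of thumb, not a benchmark; it ignores KV cache reads, prompt processing and any overhead. The model sizes and bit widths below are illustrative only.

```python
def max_decode_tokens_per_sec(bandwidth_gb_s, active_params_b, bits_per_weight):
    """Upper bound on decode speed if every active weight is read once per token."""
    gb_read_per_token = active_params_b * bits_per_weight / 8
    return bandwidth_gb_s / gb_read_per_token


# 273 GB/s is from the announcement; the models below are hypothetical examples.
dense_70b_q4 = max_decode_tokens_per_sec(273, 70, 4)   # dense 70B at 4 bits
moe_37b_q4 = max_decode_tokens_per_sec(273, 37, 4)     # 37B active (MoE) at 4 bits
print(f"dense 70B @ 4-bit: at most {dense_70b_q4:.1f} tok/s")
print(f"37B active @ 4-bit: at most {moe_37b_q4:.1f} tok/s")
```

Whether the full model fits in 128 GB is a separate question; this only bounds speed for whatever does fit.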

Anonymous

7/5/2025, 12:46:17 PM

No.105807160

[Report]

>>105807319

>>105807146

If it has CUDA, better pp speeds and is significantly cheaper, it's much better than a mac

Anonymous

7/5/2025, 12:48:49 PM

No.105807176

[Report]

>>105807146

That's just their obligatory version of the shitty Nvidia Digits/DGX Spark that nvidia is making everyone release

Anonymous

7/5/2025, 1:00:07 PM

No.105807232

[Report]

>>105807277

>>105800515 (OP)

I got a 5090, what image/video models should I play with

Anonymous

7/5/2025, 1:09:17 PM

No.105807277

[Report]

Anonymous

7/5/2025, 1:19:33 PM

No.105807319

[Report]

>>105807354

>>105807160

A cpumaxx build with a 3090 is better than that.

>>105807319

you aren't taking the secret nvidia sauce into account that will give this thing a huge performance boost

nvidia isn't just going to release a shitty 128gb inference box with the bandwidth of a 3060 and let it perform as such

Anonymous

7/5/2025, 1:30:32 PM

No.105807387

[Report]

>>105807595

>>105807354

It wouldn't be that bad if it actually had the bandwidth of a 3060

Anonymous

7/5/2025, 1:31:32 PM

No.105807394

[Report]

https://steamcommunity.com/app/2382520/reviews/

This is the coolest shit I've ever seen in my life

Someone please make this but with LLM agents with a local model.

>*Note that SimPlayers do not use LLM or any other emerging AI model. They are run by a mixture of state machines and decision trees. This means no token fees, and no lapse in service after a certain amount of use.

Anonymous

7/5/2025, 2:05:25 PM

No.105807586

[Report]

>>105807683

Anonymous

7/5/2025, 2:05:34 PM

No.105807588

[Report]

>>105807514

Damn, these guys absolutely BTFO'd LLMs there

Anonymous

7/5/2025, 2:06:25 PM

No.105807595

[Report]

>>105807387 (Me)

Wait, 8GB version of 3060 actually had that bad bandwidth, my bad

Anonymous

7/5/2025, 2:21:35 PM

No.105807683

[Report]

>>105807586

For context, this is the last bookmark made before ao3 had to be temporarily shut down to migrate database ids to 64 bits.

>>105802800

Political ideology infests that site. They are prompted with politics for their thoughts. Literal NPCs

Anonymous

7/5/2025, 2:41:04 PM

No.105807786

[Report]

>>105807828

Anonymous

7/5/2025, 2:47:48 PM

No.105807828

[Report]

>>105807786

Always reminds me of the old Chinese idiom about the 3Ts from China. Where if you mention the 3Ts, you get the NPC responses. I see the same exact thing with the politics. Its crazy how brainwashed people are

Anonymous

7/5/2025, 2:54:09 PM

No.105807858

[Report]

>>105807354

They will because it's being pitched purely as an enterprise product, with enterprise fleecing pricing

Anonymous

7/5/2025, 3:03:07 PM

No.105807921

[Report]

>>105807937

>>105807146

If the RAM can be upgraded, then it's a much better value.

>>105807921

None of the shared memory solutions that came out so far have had upgradable ram, why would you expect this one to?

Anonymous

7/5/2025, 3:08:07 PM

No.105807957

[Report]

>>105808084

>>105807937

Someone will break the mold. Either the Chinese or someone who didnt get the memo. Is the limit to GPU inference ram due to US law?

Anonymous

7/5/2025, 3:31:48 PM

No.105808084

[Report]

>>105808135

>>105807937

>>105807957

That's basically what CAMM/SOCAMM exists for, just two more weeks before it sees actual adoption

Anonymous

7/5/2025, 3:38:31 PM

No.105808135

[Report]

>>105808084

SODIMM was introduced 30 years ago. So it takes time to replace that old standard.

>>105800868

>Sam will release his useless 800B local model that no one will want to run

Anonymous

7/5/2025, 3:46:23 PM

No.105808182

[Report]

>>105807704

We can say the very same thing about 4chan. You lack self awareness.

Anonymous

7/5/2025, 3:47:31 PM

No.105808193

[Report]

>>105808434

>>105808140

If he released a fuckhuge model, it would be too expensive for them to train (unlike Zuck, he doesn't have the compute to spare), it might actually be SOTA and would let other providers cut into their API profits.

It's going to be 8B, SOTA-in-benchmarks-only, and come with some weird gimmick that will only serve to make sure it's never supported by llama.cpp.

Anonymous

7/5/2025, 3:48:32 PM

No.105808198

[Report]

>>105808140

If he does and it's actually good I'll just buy another 6000.

Anonymous

7/5/2025, 4:26:47 PM

No.105808409

[Report]

>>105801396

LA LA LA VA

CHI CHI CHINKS

Anonymous

7/5/2025, 4:30:12 PM

No.105808434

[Report]

>>105808193

>unlike Zuck, he doesn't have the compute to spare

Really? But yeah, they could also release a tiny model that is only good at passing specific benchmarks.

How important is CPU for local AI? Could I just get some junk desktop with an i5 and use a good GPU in it?

t. laptop peasant

Anonymous

7/5/2025, 4:33:54 PM

No.105808470

[Report]

>>105806508

For the purposes of understanding quantization quality loss, it's not a 37B either. Since modern quants are quantizing differently per tensor and expert, we are essentially quanting it by following how undertrained each expert/tensor is, allowed by (probably) inherent deficiencies in MoE architectures and training methods. From the benchmarks above, a 100B would do way worse in quality loss, so it really does seem like it is effectively a 600B or close for the purposes of considering quantization quality loss.

Anonymous

7/5/2025, 4:36:31 PM

No.105808484

[Report]

>>105808495

Anonymous

7/5/2025, 4:37:50 PM

No.105808495

[Report]

>>105808505

>>105808484

How does shilling Rocinante answer his question?

Anonymous

7/5/2025, 4:38:37 PM

No.105808501

[Report]

>>105808680

best <= 16gb vram model for loli rape translations? cant decide between

gemma 3 12B Q8

gemma 3n e4b

qwen 14B Q4

Anonymous

7/5/2025, 4:39:43 PM

No.105808505

[Report]

>>105808495

How does complaining about shilling Rocinante answer his question?

>>105808501

None of those models do loli rape translation. Even the abliterated/uncensored models dont do it because in those uncensored datasets, they dont remove loli content/age of consent nonsense from LLM.

Anonymous

7/5/2025, 5:04:44 PM

No.105808683

[Report]

>>105808687

>>105800868

>>105808140

The local model is cancelled because Meta bought the entire local dev team.

Also Meta is going nonlocal after the failure of Llama.

It's over for the west. I hope you like rice.

Anonymous

7/5/2025, 5:05:41 PM

No.105808687

[Report]

>>105808715

>>105808683

Grok 3 opensource WHEN

Anonymous

7/5/2025, 5:06:51 PM

No.105808696

[Report]

>>105808744

I do not use this formatting for R1

<|User|>Hello<|Assistant|>Hi there<|endofsentence|><|User|>How are you?<|Assistant|>

But it still seems to work just fine.

FYI: my prompts are 2000+ tokens

Am I missing out on AGI-level smartness because of this negligence?
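For reference, a sketch of building that template from a list of turns. The tag spellings are copied from the post above as written; the model's real special tokens may be spelled differently in its tokenizer config, so check the model's own chat template before relying on this. `build_r1_prompt` is a hypothetical helper and assumes the last turn is from the user.

```python
def build_r1_prompt(messages):
    """Join (role, text) turns into the template shown in the post above."""
    parts = []
    for role, text in messages:
        if role == "user":
            parts.append(f"<|User|>{text}")
        elif role == "assistant":
            parts.append(f"<|Assistant|>{text}<|endofsentence|>")
        else:
            raise ValueError(f"unknown role: {role}")
    # Leave the assistant tag open so the model writes the next reply.
    return "".join(parts) + "<|Assistant|>"


prompt = build_r1_prompt([
    ("user", "Hello"),
    ("assistant", "Hi there"),
    ("user", "How are you?"),
])
print(prompt)
```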

Anonymous

7/5/2025, 5:07:24 PM

No.105808700

[Report]

>>105808680

I mean if you limit context and translate line by line, it's probably not gonna catch wind that it's loli rape, so I guess I just need the best jp->en model in general

Anonymous

7/5/2025, 5:09:38 PM

No.105808715

[Report]

>>105808687

when it is stable

>>105804162

They lost a game they didn't even know they were playing

Anonymous

7/5/2025, 5:13:56 PM

No.105808743

[Report]

>>105804162

Breeding. It goes for both men and women.

Anonymous

7/5/2025, 5:13:57 PM

No.105808744

[Report]

>>105808696

I actually think it's better for RP this way.

Anonymous

7/5/2025, 5:17:19 PM

No.105808770

[Report]

>>105808775

>>105806753

>what is git diff

Anonymous

7/5/2025, 5:18:21 PM

No.105808775

[Report]

Anonymous

7/5/2025, 5:19:11 PM

No.105808782

[Report]

>>105808727

I wish I were that smart...

Anonymous

7/5/2025, 5:22:03 PM

No.105808801

[Report]

>>105807514

Someone already made a similar project on a private WoW server with a local model, I lost the link to the thread though

Anonymous

7/5/2025, 5:27:11 PM

No.105808829

[Report]

>>105808855

>>105806353

>i get like 7tps

ik_llama is a meme

Anonymous

7/5/2025, 5:28:08 PM

No.105808840

[Report]

>>105807514

Goddamn and here I need an LLM to play my bullshit RPG in my project. It's really all skill issue.

Anonymous

7/5/2025, 5:30:03 PM

No.105808855

[Report]

>>105808898

>>105808829

this, you don't need fast prompt processing

just go take a piss when context first processes and then turn off all lorebooks

Anonymous

7/5/2025, 5:34:26 PM

No.105808880

[Report]

>>105806761

Setting the temp to 0?

I always thought that for this it needed to be 0.4-0.5.

Anonymous

7/5/2025, 5:36:50 PM

No.105808898

[Report]

>>105808855

I mostly use it for writing coomfics

Anonymous

7/5/2025, 5:42:35 PM

No.105808962

[Report]

>>105809003

>TheblokeAI channel is finally dying

turboderp was still answering quant questions in there a few months ago. Welp it had a good run.

Anonymous

7/5/2025, 5:46:18 PM

No.105809003

[Report]

>>105808962

He's still raking in his patreon while doing literally nothing

Anonymous

7/5/2025, 5:51:26 PM

No.105809040

[Report]

>>105809106

China won btw

Anonymous

7/5/2025, 6:00:40 PM

No.105809106

[Report]

>>105809169

>>105809040

What did they win?

Anonymous

7/5/2025, 6:08:03 PM

No.105809169

[Report]

Anonymous

7/5/2025, 6:20:08 PM

No.105809251

[Report]

>>105801445

Well, I remember someone saying you could have GPT-4 level intelligence on mobile (they were talking about room temperature semiconductors, but still). And nowadays you certainly can have that, especially if you incorporate good function calling and a model that knows how to get truth from a good selection of tools.

Anonymous

7/5/2025, 6:22:08 PM

No.105809265

[Report]

>>105803090

Apple has been on a constant stream of L for a decade now.

Anonymous

7/5/2025, 6:25:44 PM

No.105809285

[Report]

>>105808727

For them it's not a game, it's daily life. They don't realize you can do other things.

Anonymous

7/5/2025, 6:28:44 PM

No.105809306

[Report]

>>105805826

Well, I don't know why, but I do know that text erotica is much more fulfilling to fap to, does not leave me feeling like I've been subtly disconnected from the experience, and it engages my imagination in a way that makes VR seem like a gimmick.

In conclusion, it feels healthier in a way I can't really explain.

Anonymous

7/5/2025, 6:32:20 PM

No.105809326

[Report]

>>105811151

>>105805826

they dont, all base image gen models are extremely censored, even more than text gen

its only after community people splurge large amounts of money do they become half usable

Anonymous

7/5/2025, 6:38:55 PM

No.105809374

[Report]

>>105809415

>>105807514

I've said it a million times but I'll say it some more until it catches on.

LLMs are great text interfaces, but they will never be AI. They're a way to interact with expert systems (tools) using natural language. State machines, genetic algorithms, and bespoke trained generative models run circles around language models in terms of simulating stuff.

In this case, an LLM could maybe be used to generate the text messages the simulated player writes. But every perception and every interaction with the outside world needs to come from function calling.

After all, the language center of the brain is just a very small part of a very complex machine. We've been dazzled for years now with this technology, but it's time to start integrating it with other techniques which are superior in their own domains.

Thank you for posting this. I'm going to check out this game. It looks like everything I've ever wanted in a MMORPG, ironically.
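A toy sketch of the split described above: a state machine makes the decisions, and a text generator is only asked for the chat line. Everything here is hypothetical (the states, thresholds and `generate_text` callback are made up for illustration); it is not how the linked game works.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    FIGHTING = auto()
    FLEEING = auto()


def next_state(state, enemy_near, health):
    """Plain decision logic: no language model involved."""
    if health < 30:
        return State.FLEEING
    if state is State.FLEEING and health < 50:
        return State.FLEEING   # keep running until somewhat recovered
    if enemy_near:
        return State.FIGHTING
    return State.IDLE


def chat_line(state, generate_text=None):
    """Only the chat message is delegated to a text generator, if one is supplied."""
    canned = {State.IDLE: "anyone want to group?",
              State.FIGHTING: "pulling, get ready",
              State.FLEEING: "low hp, running!"}
    if generate_text is None:
        return canned[state]
    return generate_text(
        f"Write one short MMO chat line for a player who is {state.name.lower()}.")


state = next_state(State.IDLE, enemy_near=True, health=80)
print(state.name, "->", chat_line(state))
```

Passing a callable that wraps a local model as `generate_text` would swap the canned lines for generated ones without touching the decision logic.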

Anonymous

7/5/2025, 6:39:37 PM

No.105809380

[Report]

>>105811151

>>105805826

>stable diffusion

>not censored

>>105809374

Language center, sure. But it's not a reasoning center. Rather than forcing a language model to predict function calls, there needs to be some model specifically trained with a world model that only delegates to other components.

>>105808680

I just tested it, and DeepSeek-R1-Distill-Qwen-32B-abliterated-Q4_0 will write a dog little girl rape story if you ask it to. It won't be as nasty and go for it like old llama 1 could generate, but at this point I don't think any model can reach those levels of soul the same way nothing compares to SD 1.5 in image gen.

I very much doubt this model will have any issues translating anything in terms of "safety".

Anonymous

7/5/2025, 6:49:06 PM

No.105809442

[Report]

>>105809415

Yes, something like this is the future of AI. It will take effort and ingenuity. But first the bubble needs to burst and all of the trend-chasing AI bros need to fuck off.

Anonymous

7/5/2025, 6:50:53 PM

No.105809455

[Report]

>>105809482

>>105809424

>will have any issues translating

maybe it doesn't have the original issue qwen has with refusals but R1 distills actually destroy the multilingual understanding of the qwen models and they are far, far, far worse at doing translation tasks than the original models.

Anonymous

7/5/2025, 6:50:59 PM

No.105809456

[Report]

>>105809482

>>105809424

you should kill yourself now

Anonymous

7/5/2025, 6:55:16 PM

No.105809482

[Report]

>>105809455

Well, the original issue was about whether or not any model would refuse translating that kind of stuff. It doesn't. I don't know about this new goalpost, nor do I particularly care.

I use Google's cloud translation API for everything I need to translate after all.

>>105809456

Relax, woman. I didn't say I enjoyed it. You've got to test it with extreme examples.

It seems I have just about enough VRAM to run deepseek qwen r1 and noobai in parallel. Is there an st extension or setup that lets me have a text adventure with images automatically generated? Sort of roguelite ai but more straightforward.

Anonymous

7/5/2025, 7:51:55 PM

No.105809896

[Report]

>>105809552

>deepseek qwen r1

Anonymous

7/5/2025, 7:56:05 PM

No.105809933

[Report]

>>105808450

to put the question another way, what's your processor/graphics card loadout?

Anonymous

7/5/2025, 7:56:43 PM

No.105809939

[Report]

>>105809552

Small models are too retarded to handle prompting

Anonymous

7/5/2025, 8:24:55 PM

No.105810236

[Report]

>>105809552

just roll your own, bro

Anonymous

7/5/2025, 8:31:26 PM

No.105810300

[Report]

>>105804162

What's this incel babble?

Anonymous

7/5/2025, 9:02:12 PM

No.105810590

[Report]

>having enable_thinking at all regardless of the value is incompatible with prefill

Are they retarded?

Anonymous

7/5/2025, 9:21:51 PM

No.105810744

[Report]

>>105810768

>>105809552

>deepseek qwen r1

kys

Anonymous

7/5/2025, 9:25:37 PM

No.105810768

[Report]

>>105810744

He is just new

Anonymous

7/5/2025, 9:27:06 PM

No.105810783

[Report]

deepseek-r1:14b

Anonymous

7/5/2025, 9:44:06 PM

No.105810914

[Report]

>>105802337

can't wait to get grok 4 locally like grok 2!

Anonymous

7/5/2025, 10:01:05 PM

No.105811043

[Report]

Anonymous

7/5/2025, 10:01:57 PM

No.105811046

[Report]

>>105809415

>there needs to be some model specifically trained with a world model that only delegates to other components

imo consciousness is a prerequisite to the kind of reasoning we really want from an ai. Having awareness of yourself is complementary to spatial reasoning. This will be a lot harder to solve. Or it could be impossible/impractical with our current hardware.

Anonymous

7/5/2025, 10:12:50 PM

No.105811151

[Report]

>>105809326

>>105809380

Guess I'm just lucky and got into image gen when it's already uncensored.